Lip-Syncing Robot Emo Learns to Talk Like Humans by Watching YouTube Videos

6 Sources

[1]

This Lip-Syncing Robot Face Could Help Future Bots Talk Like Us

Julian is a contributor and former staff writer at CNET. He's covered a range of topics, such as tech, crypto, travel, sports and commerce. His past work has appeared in print and online publications, including New Mexico Magazine, TV Guide, Mental Floss and NextAdvisor with TIME. On his days off, you can find him at Isotopes Park in Albuquerque watching the ballgame.

The slight unease that creeps up your spine when you see something that acts human but isn't remains a big issue in robotics, especially for robots built to look and speak like us. That peculiar feeling is called the uncanny valley. One way roboticists work to bridge that valley is by matching a robot's lip movements with its voice.

On Wednesday, Columbia University announced research that delves into how a new wave of robot faces can speak more realistically. Hod Lipson, a Columbia engineering professor who worked on the research, told CNET that a main reason robots seem "uncanny" is that they don't move their lips like us when they talk. "We are aiming to solve this problem, which has been neglected in robotics," Lipson said.

Humanoid robots that come with bodies, faces and synthetic skin that mimics our own were central among the robots on display at CES. The CES cohort included human-looking robots from Realbotix that could work information booths or provide comfort to humans, as well as a robot from Lovense designed for relationships that's outfitted with AI to "remember" intimate conversations. But a split-second mismatch between lip movement and speech can mean the difference between a machine that you can form an emotional attachment to and one that's little more than an unsettling animatronic. So if people are going to accept humanoid robots "living" among us in everyday life, it's probably better if they don't make us mildly uncomfortable whenever they talk. To make robots with human faces that speak like us, the robot's lips must be carefully synced to the audio of its speech.
The Columbia research team developed a technique that helps robot mouths move like ours do by focusing on how language sounds. First, the team built a humanoid robot face with a mouth that can talk and sing in a way that reduces the uncanny valley effect. The robot face, made with silicone skin, has magnet connectors for complex lip movements. This enables the face to form lip shapes that cover 24 consonants and 16 vowels.

To match the lip movements with speech, they designed a "learning pipeline" to collect visual data from lip movements. An AI model uses this data for training, then generates reference points for motor commands. Next, a "facial action transformer" turns the motor commands into mouth motions that synchronize with audio. Using this framework, the robot face, called Emo, was able to "speak" in multiple languages, including languages that weren't part of the training, such as French, Chinese and Arabic. The trick is that the framework analyzes the sounds of language, not the meaning behind them. "We avoided the language-specific problem by training a model that goes directly from audio to lip motion," Lipson said. "There is no notion of language."

Humans have been working alongside robots for a long time, but those robots have always looked like machines, not people: the disembodied, very mechanical-looking arms on assembly lines or the chunky disc of a robot vacuum scooting around our kitchen floors. However, as the AI language models behind chatbots have become more prevalent, tech companies are working hard to teach robots how to communicate with us using language in real time. There's a whole field of study called human-robot interaction that examines how robots should coexist with humans, physically and socially. In 2024, a study out of Berlin involving 157 participants found that a robot's ability to express empathy and emotion through verbal communication is critical for interacting effectively with humans.
And another 2024 study from Italy found that active speech was important for collaboration between humans and robots when working on complex tasks like assembly. If we're going to rely on robots at home and at work, we need to be able to converse with them like we do with each other. In the future, Lipson says, research with lip-syncing robots would be useful for any kind of humanoid robot that needs to interact with people. It's also easy to imagine a future where humanoid robots are identical to us. Lipson says careful design could ensure that people understand they're talking to a robot, not a person. One example would be requiring humanoid robots to have blue skin, Lipson says, "so that they cannot be mistaken for a human."

[2]

Robot learns how to lip sync using AI and YouTube

Robots can already dance, play football, and even help out with jobs around the house. But now, engineers in the US have invented one that can lip sync. The robot is called EMO, and it can learn and recreate the way that humans move their lips when they talk. Human lip movements happen due to a complicated combination of muscles, bones and skin working together, which scientists say is very hard to reproduce. Instead of being given step-by-step instructions to follow, EMO used artificial intelligence (AI) and a process called 'observational learning', which means learning by watching and copying another person's behaviour. The robot even learned to sing a song out of its own AI-generated debut album "hello world".

[3]

Lip-syncing robot trains itself to talk like one of us

When it comes to ultra-humanlike Westworld-style robots, one of their most defining features is lips that move in perfect sync with their spoken words. A new robot not only sports that feature, but it can actually train itself to speak like a person. Developed by robotics PhD student Yuhang Hu, Prof. Hod Lipson and colleagues at Columbia University, the EMO "robot" is in fact a robotic head with 26 tiny motors located beneath its flexible silicone facial skin. As those motors are activated in different combinations, the face takes on different expressions, and the lips form different shapes.

The scientists started by placing EMO in front of a mirror, where it was able to observe itself as it made thousands of random facial expressions. Doing so allowed it to learn which combinations of motor activations produce which visual facial movements. This type of learning is what's known as a "vision-to-action" (VLA) language model. The robot next watched many hours of YouTube videos of people talking and singing, in order to understand which mouth movements accompany which vocal sounds. Its AI system was subsequently able to merge that knowledge with what it learned via the VLA model, allowing it to form lip movements that corresponded to words it was speaking via a synthetic voice module.

The technology still isn't perfect, as EMO struggles with sounds such as "B" and "W." That should change as it gains more practice at speaking, however, as should its ability to engage in natural-looking conversations with humans. "When the lip sync ability is combined with conversational AI such as ChatGPT or Gemini, the effect adds a whole new depth to the connection the robot forms with the human," says Hu. "The more the robot watches humans conversing, the better it will get at imitating the nuanced facial gestures we can emotionally connect with. The longer the context window of the conversation, the more context-sensitive these gestures will become."
A paper on the research was recently published in the journal Science Robotics.
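The mirror stage is a form of self-modeling. The robot issues random motor commands, watches the result, and fits a model that maps a desired appearance back to the commands that produce it. The Python/NumPy sketch below illustrates that idea under loudly stated assumptions. Only the 26-motor count comes from the articles. Everything else is hypothetical:

- The face is simulated by a linear map.
- The 40-number "landmark" vector is invented.
- The `observe_face` and `motors_for` helpers are invented.
- Ordinary least squares stands in for whatever model the Columbia team actually trained.

```python
import numpy as np

N_MOTORS = 26      # motor count reported in the articles
N_LANDMARKS = 40   # hypothetical: 20 (x, y) lip landmarks seen in the mirror

rng = np.random.default_rng(0)

# Hypothetical stand-in for the physical face: an unknown map from motor
# commands to what the camera sees. Real silicone skin is nonlinear; a linear
# map keeps the sketch small.
_face = rng.normal(size=(N_MOTORS, N_LANDMARKS))


def observe_face(commands: np.ndarray) -> np.ndarray:
    """Hypothetical camera + landmark detector watching the mirror."""
    noise = rng.normal(scale=0.01, size=(len(commands), N_LANDMARKS))
    return commands @ _face + noise


# 1. "Motor babbling": thousands of random expressions, each one observed.
babble = rng.uniform(-1.0, 1.0, size=(5000, N_MOTORS))
seen = observe_face(babble)

# 2. Fit an inverse model (landmarks -> motor commands) by least squares.
inverse_model, *_ = np.linalg.lstsq(seen, babble, rcond=None)


def motors_for(target_landmarks: np.ndarray) -> np.ndarray:
    """Predict the motor commands that should reproduce a given lip shape."""
    return np.clip(target_landmarks @ inverse_model, -1.0, 1.0)


# Sanity check: request a shape the face can actually make, compare commands.
wanted = rng.uniform(-1.0, 1.0, size=(1, N_MOTORS))
error = float(np.abs(motors_for(observe_face(wanted)) - wanted).max())
print(f"largest command error: {error:.3f}")
```

The linear face is what makes a single least-squares fit enough here. A real flexible face would call for a nonlinear learned model, but the loop is the same: act randomly, observe, then learn the mapping from appearance back to action.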

[4]

Robots are finally learning how to move their lips like humans

Robots struggle with something humans barely think about: lip movement. Nearly half of our attention in a face-to-face conversation lands on the way someone's mouth moves. That's a lot of brainpower spent on a small patch of skin. It explains why a video call with bad audio feels off, or why a person who can't move their face well can seem hard to read, even when the words are right. Robots run straight into that problem. A robot can walk, pick things up, and even answer questions - but if its mouth doesn't match its voice, people tense up. We cut robots some slack for clunky steps or stiff arms. We don't cut them slack for a face that looks almost human but not quite. That standard is known as the "uncanny valley." A realistic mouth isn't just a jaw flapping open and shut. Human speech is built from tiny sound units, and each one has a matching pattern of lip shapes and timing. The lips stretch, press together, curl, and relax in fast sequences. They also sync with cheeks, jaw, and the area around the mouth. Our brains notice when the timing is off by a fraction of a second. That's part of why many humanoid robots look lifeless or even creepy. Even the expensive ones often do what the researchers call "muppet mouth gestures." If a robot has a face at all, it's usually rigid. The motion is often pre-planned, like a puppet routine, instead of reacting smoothly to the sounds it's making. A Columbia Engineering team has built a robot that can learn facial lip motions for tasks such as speech and singing. The team showed the robot speaking in multiple languages and singing from its AI-generated debut album "hello world." The key is how it learned. Instead of feeding it a big set of rules about where each motor should go for each sound, the robot learned by watching. First, it learned its own face. The researchers put a robotic face with 26 motors in front of a mirror and let it experiment. 
The robot made thousands of random expressions and lip gestures, then connected what it saw to what it did. This is called a "vision-to-action" language model (VLA). After that, the robot studied people. It watched hours of YouTube videos of humans talking and singing. That gave the AI driving the face a chance to pick up how real mouths move when specific sounds happen. With those two pieces together, the system could translate audio into motor actions that move the lips in sync. In the tests, the robot didn't need to understand the meaning of the audio clips. It just had to match motion to sound, across different voices, languages, and even songs. That's important because it separates lip timing from language understanding. A robot can, in theory, learn the physical rhythm of speech before it learns the message. The researchers noted that the results aren't perfect yet. Professor Hod Lipson is the director of Columbia's Creative Machines Lab, where the work was conducted. "We had particular difficulties with hard sounds like 'B' and with sounds involving lip puckering, such as 'W'. But these abilities will likely improve with time and practice," said Lipson. That fits with what we know about learning systems in general. When an AI model trains on more examples, it often gets better at the rare, tricky cases. In speech, those tricky cases include fast changes and sounds that depend on precise lip contact, like when the lips must fully close and pop open. Getting the mouth right isn't only about looking less awkward. It's about communication. People lean heavily on facial cues to decide whether someone is friendly, bored, joking, or serious. A face also tells you when it's your turn to talk. Those cues matter in classrooms, hospitals, customer service desks, and anywhere a robot might need to work around people without making them uneasy. "Much of humanoid robotics today is focused on leg and hand motion, for activities like walking and grasping," said Lipson. 
"But facial affection is equally important for any robotic application involving human interaction." Yuhang Hu, who led the study for his PhD, ties the face to modern chat systems that already handle conversation well. "When the lip sync ability is combined with conversational AI such as ChatGPT or Gemini, the effect adds a whole new depth to the connection the robot forms with the human," explained Hu. "The more the robot watches humans conversing, the better it will get at imitating the nuanced facial gestures we can emotionally connect with." According to Hu, the longer the context window of the conversation, the more context-sensitive these gestures will become. If humanoid robots become common, that pressure to feel natural will only grow. The team noted that some economists predict over a billion humanoids will be manufactured in the next decade. That's not a niche future. That's a world where faces on machines show up in everyday places, and people will judge them in seconds. "There is no future where all these humanoid robots don't have a face. And when they finally have a face, they will need to move their eyes and lips properly, or they will forever remain uncanny," Lipson estimates. "We humans are just wired that way, and we can't help it," said Hu. "We are close to crossing the uncanny valley."

[5]

This robot learned to lip sync like humans by watching YouTube

Researchers trained the robot using self-supervised audio-visual learning.

Researchers at Columbia Engineering have trained a human-like robot named Emo to lip-sync speech and songs by studying online videos, showing how machines can now learn complex human behaviour simply by observing it. Emo is not a full humanoid body but a highly realistic robotic face built to explore how humans communicate. The face is covered with silicone skin and driven by 26 independently controlled facial motors that move the lips, jaw, and cheeks. These motors allow Emo to form detailed mouth shapes that cover 24 consonants and 16 vowels, which is critical for natural speech and singing. The goal was to reduce the uncanny valley effect, where robots look almost human but still feel unsettling because their facial movements do not match their voice.

How Emo learned to lip sync like a human

The learning process happened in stages. First, Emo explored its own face by moving its motors while watching itself in a mirror. This helped the system understand how motor commands change facial shapes. Researchers then introduced a learning pipeline that connects sound to movement. Emo watched hours of YouTube videos of people speaking and singing, while an AI model analysed the relationship between audio and visible lip motion. Instead of focusing on language or meaning, the system studied the raw sounds of speech. A facial action transformer converted those learned patterns into real-time motor commands.

This approach allowed Emo to lip sync not only in English but also in languages it was never trained on, including French, Arabic, and Chinese. The same method worked for singing, which is harder because of stretched vowels and rhythm changes. Researchers say this matters because future robots will need to communicate naturally if they are going to work alongside people. This advancement has arrived at a time when interest in robots for homes and workplaces is climbing fast.
At CES 2026, that momentum was on full display, with demos ranging from Boston Dynamics' Atlas humanoid, which is ready to enter the workplace, to SwitchBot's household-focused robot that can cook meals and do your laundry, and LG's upcoming home assistant robot designed to make everyday life easier. Add advances like artificial skin that gives robots human-like sensitivity, pair them with realistic lip syncing, and it is easy to see how robots are starting to feel less like machines and more like social companions. Emo is still a research project, but it shows how robots may one day learn human skills the same way we do: by watching and listening.
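The articles describe two learned pieces. One is a self-model from the mirror; the other is an audio-to-lip model from watching people. They are composed so that audio drives the motors directly, with no words or phonemes involved. The Python/NumPy sketch below shows one plausible way to wire that composition together; it is not the team's published architecture. Everything specific in it is an assumption:

- the 16 kHz sample rate;
- the 50 Hz motor update rate;
- the two toy acoustic features;
- the random matrices standing in for trained models.

The articles say the real system uses a facial action transformer, which this sketch does not attempt to reproduce.

```python
import numpy as np

SAMPLE_RATE = 16_000               # assumed audio sample rate (Hz)
CONTROL_RATE = 50                  # assumed motor update rate (Hz), one frame per 20 ms
HOP = SAMPLE_RATE // CONTROL_RATE  # audio samples per motor update (320)
N_LANDMARKS = 40                   # hypothetical lip-shape vector size
N_MOTORS = 26                      # motor count reported in the articles


def audio_frames(waveform: np.ndarray) -> np.ndarray:
    """Cut raw audio into one window per motor update; no text or phonemes needed."""
    n = len(waveform) // HOP
    return waveform[: n * HOP].reshape(n, HOP)


def acoustic_features(frames: np.ndarray) -> np.ndarray:
    """Two toy, language-agnostic features per frame: loudness and zero-crossing rate."""
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    zcr = np.mean(np.diff(np.sign(frames), axis=1) != 0, axis=1)
    return np.stack([rms, zcr], axis=1)


def lip_sync(waveform, audio_to_lips, lips_to_motors):
    """Stage 1: audio -> predicted lip shape (what watching people would teach).
    Stage 2: lip shape -> motor commands (what the mirror would teach)."""
    lips = acoustic_features(audio_frames(waveform)) @ audio_to_lips
    return np.clip(lips @ lips_to_motors, -1.0, 1.0)


rng = np.random.default_rng(1)
# Random placeholders for trained models; real ones would be learned, nonlinear networks.
audio_to_lips = rng.normal(size=(2, N_LANDMARKS))
lips_to_motors = rng.normal(scale=0.1, size=(N_LANDMARKS, N_MOTORS))

one_second = rng.normal(scale=0.1, size=SAMPLE_RATE)  # placeholder for recorded speech
commands = lip_sync(one_second, audio_to_lips, lips_to_motors)
print(commands.shape)  # (50, 26): 26 motor commands for each 20 ms frame
```

Because `lip_sync` only ever sees waveform samples, nothing in it depends on which language is being spoken. That is the property the researchers credit for Emo's ability to handle French, Chinese and Arabic. Timing falls out of the framing: under these assumed rates, row k of `commands` covers the audio from k × 20 ms to (k + 1) × 20 ms.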

[6]

A Robot Learns to Lip Sync | Newswise

Newswise -- New York, NY -- Jan. 14, 2026 -- Almost half of our attention during face-to-face conversation focuses on lip motion. Yet, robots still struggle to move their lips correctly. Even the most advanced humanoids make little more than muppet mouth gestures - if they have a face at all. We humans attribute outsized importance to facial gestures in general, and to lip motion in particular. While we may forgive a funny walking gait or an awkward hand motion, we remain unforgiving of even the slightest facial malgesture. This high bar is known as the "Uncanny Valley." Robots oftentimes look lifeless, even creepy, because their lips don't move. But that is about to change. A Columbia Engineering team announced today that they have created a robot that, for the first time, is able to learn facial lip motions for tasks such as speech and singing. In a new study published in Science Robotics, the researchers demonstrate how their robot used its abilities to articulate words in a variety of languages, and even sing a song out of its AI-generated debut album "hello world_." The robot acquired this ability through observational learning rather than via rules. It first learned how to use its 26 facial motors by watching its own reflection in the mirror before learning to imitate human lip motion by watching hours of YouTube videos. "The more it interacts with humans, the better it will get," promised Hod Lipson, James and Sally Scapa Professor of Innovation in the Department of Mechanical Engineering and director of Columbia's Creative Machines Lab, where the work was done. Achieving realistic robot lip motion is challenging for two reasons: First, it requires specialized hardware containing a flexible facial skin actuated by numerous tiny motors that can work quickly and silently in concert. Second, the specific pattern of lip dynamics is a complex function dictated by sequences of vocal sounds and phonemes. 
Human faces are animated by dozens of muscles that lie just beneath a soft skin and sync naturally to vocal cords and lip motions. By contrast, humanoid faces are mostly rigid, operating with relatively few degrees of freedom, and their lip movement is choreographed according to rigid, predefined rules. The resulting motion is stilted, unnatural, and uncanny. In this study, the researchers overcame these hurdles by developing a richly actuated, flexible face and then allowing the robot to learn how to use its face directly by observing humans. First, they placed a robotic face equipped with 26 motors in front of a mirror so that the robot could learn how its own face moves in response to motor activity. Like a child making faces in a mirror for the first time, the robot made thousands of random facial expressions and lip gestures. Over time, it learned how to move its motors to achieve particular facial appearances, an approach called a "vision-to-action" language model (VLA). Then, the researchers placed the robot in front of recorded videos of humans talking and singing, giving the AI that drives the robot an opportunity to learn how exactly humans' mouths moved in the context of various sounds they emitted. With these two models in hand, the robot's AI could now translate audio directly into lip motor action. The researchers tested this ability using a variety of sounds, languages, and contexts, as well as some songs. Without any specific knowledge of the audio clips' meaning, the robot was then able to move its lips in sync. The researchers acknowledge that the lip motion is far from perfect. "We had particular difficulties with hard sounds like 'B' and with sounds involving lip puckering, such as 'W'. But these abilities will likely improve with time and practice," Lipson said. More important, however, is seeing lip sync as part of a more holistic robot communication ability.
"When the lip sync ability is combined with conversational AI such as ChatGPT or Gemini, the effect adds a whole new depth to the connection the robot forms with the human," explained Yuhang Hu, who led the study for his PhD. "The more the robot watches humans conversing, the better it will get at imitating the nuanced facial gestures we can emotionally connect with." "The longer the context window of the conversation, the more context-sensitive these gestures will become," he added. The researchers believe that facial affect is the 'missing link' of robotics. "Much of humanoid robotics today is focused on leg and hand motion, for activities like walking and grasping," said Lipson. "But facial affection is equally important for any robotic application involving human interaction." Lipson and Hu predict that warm, lifelike faces will become increasingly important as humanoid robots find applications in areas such as entertainment, education, medicine, and even elder care. Some economists predict that over a billion humanoids will be manufactured in the next decade. "There is no future where all these humanoid robots don't have a face. And when they finally have a face, they will need to move their eyes and lips properly, or they will forever remain uncanny," Lipson estimates. "We humans are just wired that way, and we can't help it. We are close to crossing the uncanny valley," added Hu. This work is part of Lipson's decade-long quest to find ways to make robots connect more effectively with humans, through mastering facial gestures such as smiling, gazing, and speaking. He insists that these abilities must be acquired by learning, rather than being programmed using stiff rules. "Something magical happens when a robot learns to smile or speak just by watching and listening to humans," he said. "I'm a jaded roboticist, but I can't help but smile back at a robot that spontaneously smiles at me." 
Hu explained that human faces are the ultimate interface for communication, and we are beginning to unlock their secrets. "Robots with this ability will clearly have a much better ability to connect with humans because such a significant portion of our communication involves facial body language, and that entire channel is still untapped," Hu said. The researchers are aware of the risks and controversies surrounding granting robots greater ability to connect with humans. "This will be a powerful technology. We have to go slowly and carefully, so we can reap the benefits while minimizing the risks," Lipson said.
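For contrast, the press release describes conventional humanoid faces whose lip movement is "choreographed according to rigid, predefined rules." Below is a hypothetical Python sketch of that older style. A hand-written table maps each phoneme, written in ARPAbet-style symbols, to a fixed lip pose that is held for a fixed number of frames. The pose values, the three-number pose format and the `choreograph` function are invented for illustration. Such a table has three weaknesses:

- it needs a phoneme transcript of the speech, which is language-specific;
- it snaps between poses;
- it falls back to a rest pose for anything it does not list.

This is the kind of stilted motion the learned approach is meant to replace.

```python
from typing import Dict, List, Tuple

Pose = Tuple[float, float, float]  # hypothetical (jaw_open, lip_round, lip_closed), each 0..1

# Hand-written rules: one fixed pose per phoneme (ARPAbet-style symbols).
RULES: Dict[str, Pose] = {
    "AA": (0.9, 0.1, 0.0),  # open vowel, as in "father"
    "IY": (0.3, 0.0, 0.0),  # spread vowel, as in "see"
    "UW": (0.3, 0.9, 0.0),  # rounded vowel, as in "blue"
    "B": (0.0, 0.2, 1.0),   # lips pressed shut
    "M": (0.0, 0.1, 1.0),
    "W": (0.2, 1.0, 0.0),   # puckered lips
}
REST: Pose = (0.0, 0.0, 0.0)


def choreograph(phonemes: List[str], frames_per_phoneme: int = 5) -> List[Pose]:
    """Rule-based lip animation: snap to each phoneme's pose and hold it.

    Every phoneme gets the same duration, transitions are instantaneous, and
    unknown phonemes fall back to REST, so the result looks stiff.
    """
    timeline: List[Pose] = []
    for p in phonemes:
        timeline.extend([RULES.get(p, REST)] * frames_per_phoneme)
    return timeline


# "bomb" transcribed by hand as B AA M (the transcript itself is language-specific).
frames = choreograph(["B", "AA", "M"])
print(len(frames), frames[0], frames[5])  # 15 (0.0, 0.2, 1.0) (0.9, 0.1, 0.0)
```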


Columbia University researchers developed Emo, a lip-syncing robot that masters realistic facial lip movements by watching YouTube videos. Using AI and 26 facial motors beneath silicone skin, the robot learned to synchronize its lip movements with speech across multiple languages, addressing the uncanny valley effect that makes human-robot interaction uncomfortable.

Columbia University Develops Lip-Syncing Robot That Learns from YouTube

Researchers at Columbia University have unveiled Emo, a lip-syncing robot that learns realistic facial lip movements by watching hours of YouTube videos. Led by robotics PhD student Yuhang Hu and Professor Hod Lipson at Columbia's Creative Machines Lab, the project addresses a critical challenge in human-robot interaction: the uncanny valley effect that makes nearly-human robots feel unsettling when their lip movements don't match their speech [1][3].

The robotic face features silicone skin covering 26 tiny motors that enable complex robot facial expressions. These motors allow Emo to form lip shapes covering 24 consonants and 16 vowels, creating the foundation for natural speech and singing capabilities [1][3]. The research, published in Science Robotics, demonstrates how machines can now acquire complex human behaviors through an observational learning process rather than following pre-programmed instructions [2][3].

How the Observational Learning Process Works

The training methodology unfolds in carefully designed stages. First, researchers placed Emo in front of a mirror where it made thousands of random facial expressions, learning which motor commands produce which visual movements. This self-supervised learning approach, known as a vision-to-action or VLA language model, allowed the robot to understand its own face [3][4].

Next, the AI model analyzed hours of YouTube footage showing people talking and singing, studying how real mouths move with specific vocal sounds [2][5]. A facial action transformer then converted these learned patterns into real-time motor commands that synchronize robot lip movements with audio [1]. Crucially, the system analyzes the sounds of language rather than meaning, allowing Emo to speak and sing in languages it wasn't trained on, including French, Chinese, and Arabic [1][5].

Bridging the Uncanny Valley for Natural Human-Robot Communication

Humans dedicate nearly half their attention during face-to-face conversations to watching mouth movements, making accurate lip synchronization essential for comfortable humanoid robot communication [4]. "We are aiming to solve this problem, which has been neglected in robotics," Hod Lipson explained, noting that mismatched lip movements create the unsettling feeling known as the uncanny valley [1].

A 2024 study from Berlin involving 157 participants found that a robot's ability to express empathy and emotion through verbal communication is critical for effective human-robot interaction [1]. Another 2024 study, from Italy, found that active speech is important for human-robot collaboration on complex tasks such as assembly [1]. These findings underscore why natural human-robot communication extends beyond functional necessity into the realm of social acceptance.

Future Applications in Conversational AI and Robotics

The technology still faces challenges with certain sounds. "We had particular difficulties with hard sounds like 'B' and with sounds involving lip puckering, such as 'W'," Lipson acknowledged, though these abilities should improve with continued practice [4]. As the audio-visual learning system trains on more examples, it will likely handle these tricky cases more effectively.

Yuhang Hu sees significant potential when combining this capability with conversational AI platforms. "When the lip sync ability is combined with conversational AI such as ChatGPT or Gemini, the effect adds a whole new depth to the connection the robot forms with the human," Hu explained [3][4]. The more the robot watches humans conversing, the better it becomes at imitating nuanced facial gestures that create emotional connections, with longer conversation context windows enabling more context-sensitive gestures [3][4].

With some economists predicting that over a billion humanoid robots could be manufactured in the next decade, the pressure for machines to feel natural will intensify [4]. This research arrives as interest in home and workplace robots climbs, with recent demonstrations at CES 2026 showcasing everything from Boston Dynamics' Atlas humanoid to household-focused robots from SwitchBot and LG [5]. Lipson notes that while much of robotics focuses on leg and hand motion for walking and grasping, facial affection is equally important for any robotic application involving human interaction [4].

Summarized by Navi

Related Stories

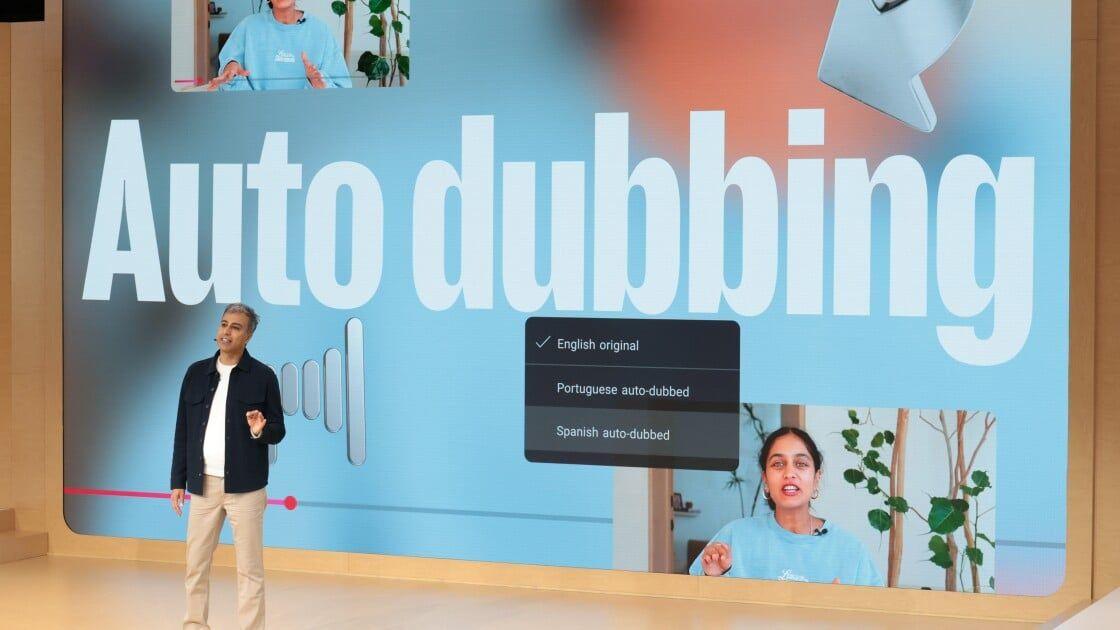

YouTube's AI Lip-Sync Technology: Revolutionizing Auto-Dubbing for Global Content

14 Oct 2025•Technology

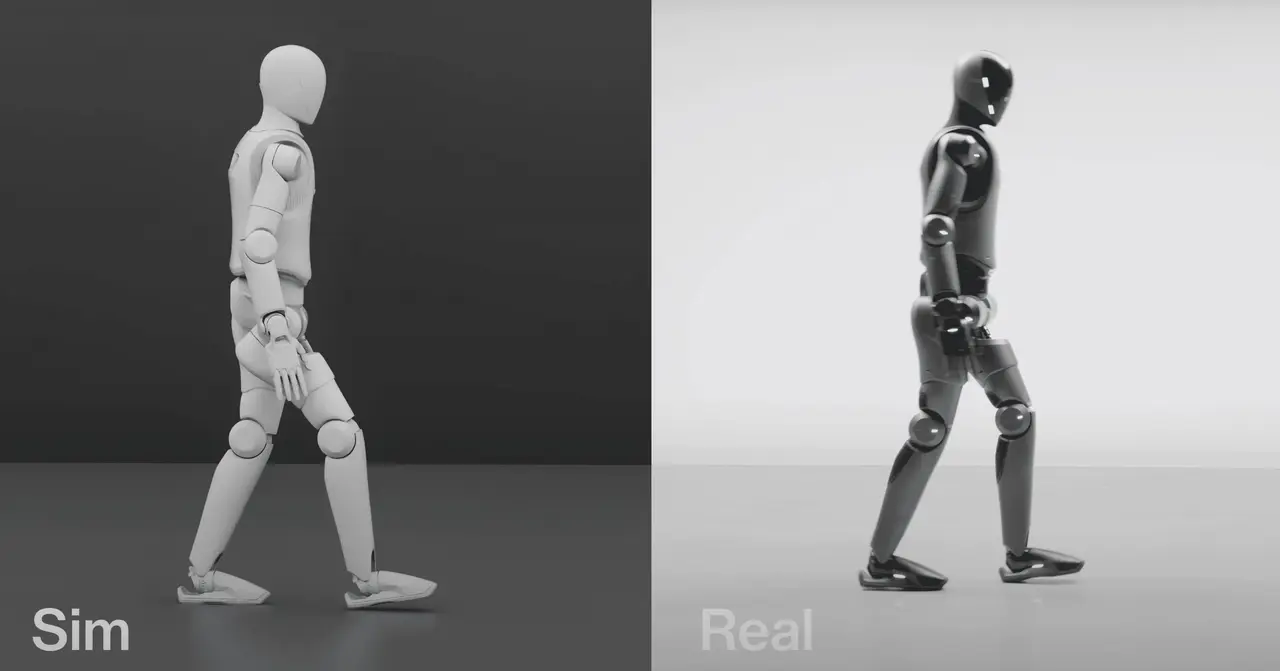

Humanoid Robot Masters Waltz and More Through AI-Powered Human Movement Mirroring

17 Jan 2025•Technology

RHyME: Revolutionary AI System Enables Robots to Learn from a Single Video

23 Apr 2025•Technology

Recent Highlights

1

ByteDance's Seedance 2.0 AI video generator triggers copyright infringement battle with Hollywood

Policy and Regulation

2

Demis Hassabis predicts AGI in 5-8 years, sees new golden era transforming medicine and science

Technology

3

Nvidia and Meta forge massive chip deal as computing power demands reshape AI infrastructure

Technology