Deepfake Detection Challenge: Only 0.1% of Participants Succeed in Identifying AI-Generated Content

3 Sources

[1]

2000 people took a deepfake test -- only 2 got it right

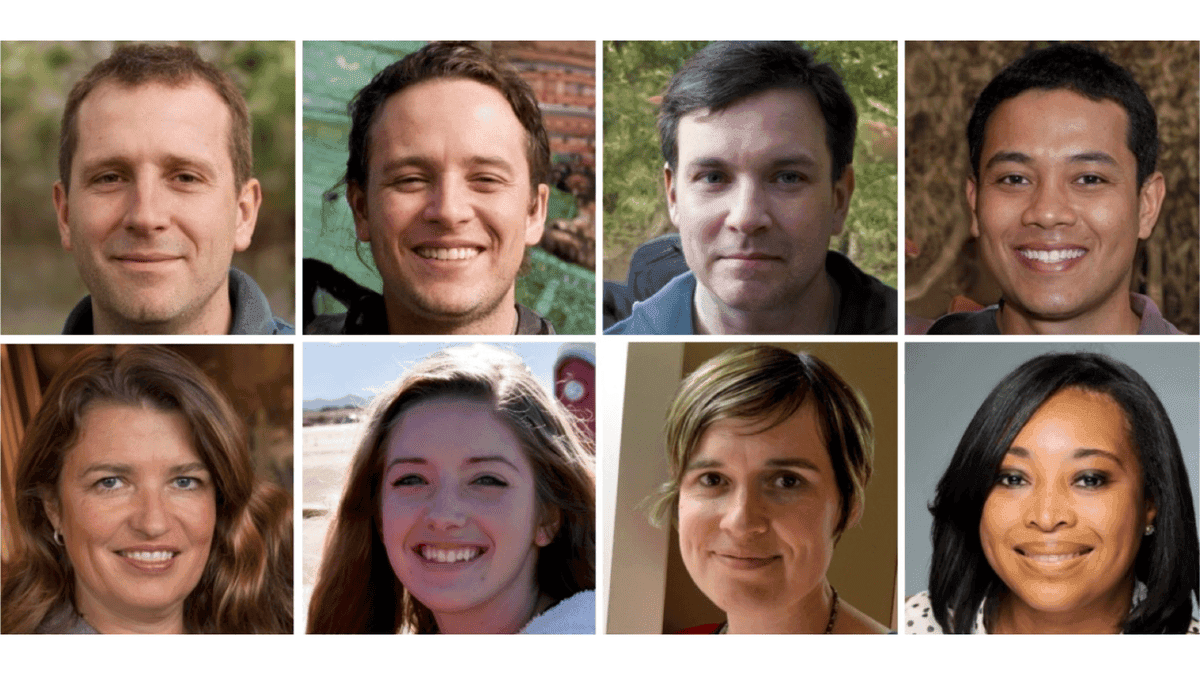

As deepfake technology advances, concerns over misinformation and identity theft are rising, highlighted by a recent iProov study revealing that most people struggle to distinguish real from AI-generated content. The study exposed 2,000 participants across the UK and US to a mix of genuine and deepfake images and videos. Alarmingly, only 0.1% of participants, just two individuals, could accurately differentiate between the real and deepfake stimuli.

Older adults emerged as particularly vulnerable to AI-generated deception. Approximately 30% of participants aged 55-64 and 39% of those over 65 reported that they had never heard of deepfakes before the study. Although younger participants (aged 18-34) were more confident in their ability to detect deepfakes, their actual performance was no better.

Deepfake videos proved significantly harder to detect than images: participants were 36% less likely to correctly identify a synthetic video than a synthetic image, raising concerns about video-based fraud such as impersonation during video calls.

Social media platforms were identified as major sources of deepfake content. Nearly half of the participants (49%) cited Meta platforms, including Facebook and Instagram, as the most common places to encounter deepfakes, while 47% pointed to TikTok.

Andrew Bud, founder and CEO of iProov, said the findings show how vulnerable both organizations and consumers are to identity fraud in the deepfake era. "Criminals are exploiting consumers' inability to distinguish real from fake imagery, putting personal information and financial security at risk," he said. Even when people suspect a deepfake, most take no action: only 20% of respondents said they would report a suspected deepfake online.
As deepfakes become increasingly sophisticated, iProov argues that human perception alone is insufficient for reliable detection. Bud emphasized the need for biometric security solutions with liveness detection to counter ever more convincing deepfake material, saying organizations must protect their customers by implementing robust security measures. He believes facial biometrics with liveness detection offer a trustworthy authentication factor that prioritizes both security and individual control.

According to the study, 22% of consumers had never heard of deepfakes before participating. Many also showed significant overconfidence in their detection skills: over 60% believed they could identify deepfakes, even though most performed poorly. This false sense of security was especially prevalent among younger adults.

Awareness of deepfakes also eroded trust: 49% of participants reported that their trust in social media platforms declined after learning about deepfakes. Meanwhile, 74% expressed concern about the societal ramifications of deepfakes, and the spread of misinformation was a top concern for 68% of respondents. This worry was notably strong among older generations, with up to 82% of those aged 55 and above fearing the dissemination of false information.

Nearly a third of those surveyed (29%) said they would take no action upon encountering a suspected deepfake. This lack of engagement stems partly from the 48% of respondents who said they do not know how to report deepfakes, while a quarter admitted they are indifferent to suspected deepfakes. Only 11% critically analyze sources and context to determine whether information is authentic, leaving many people highly susceptible to deception.
Professor Edgar Whitley, a digital identity expert, warned that organizations cannot rely solely on human judgment to detect deepfakes and must explore alternative methods of user authentication. The growing prevalence of deepfakes poses significant challenges in the digital landscape. iProov's 2024 Threat Intelligence Report indicated a staggering 704% increase in face swaps, emphasizing their role as tools for cybercriminals seeking unauthorized access to sensitive data. This trend highlights the urgent need for enhanced awareness and technological solutions to thwart deepfake-related threats.

[2]

In a test, 2000 people were shown deepfake content, and only two of them managed to get a perfect score

As deepfake technology continues to advance, concerns over misinformation, fraud, and identity theft are growing, compounded by startlingly low literacy in AI tools. A recent iProov study claims most people struggle to distinguish deepfake content from reality: after exposing 2,000 participants from the UK and US to a mix of real and AI-generated images and videos, it found that only 0.1% of participants, a grand total of two people, correctly distinguished between real and deepfake stimuli.

The study found older adults are particularly susceptible to AI-generated deception. Around 30% of those aged 55-64, and 39% of those over 65, had never heard of deepfakes before. While younger participants were more confident in their ability to detect deepfakes, their actual performance in the study was no better.

Deepfake videos were significantly harder to detect than images, the study added, as participants were 36% less likely to correctly identify a fake video than a fake image, raising concerns about video-based fraud and misinformation. Social media platforms were highlighted as major sources of deepfake content. Nearly half of the participants (49%) identified Meta platforms, including Facebook and Instagram, as the most common places where deepfakes are found, while 47% pointed to TikTok.

"[This underlines] how vulnerable both organizations and consumers are to the threat of identity fraud in the age of deepfakes," said Andrew Bud, founder and CEO of iProov. "Criminals are exploiting consumers' inability to distinguish real from fake imagery, putting personal information and financial security at risk." Bud added that even when people suspect a deepfake, most take no action. Only 20% of respondents said they would report a suspected deepfake if they encountered one online.
With deepfakes becoming increasingly sophisticated, iProov believes that human perception alone is no longer reliable for detection, and Bud emphasized the need for biometric security solutions with liveness detection to combat the threat of ever more convincing deepfake material. "It's down to technology companies to protect their customers by implementing robust security measures," he said. "Using facial biometrics with liveness provides a trustworthy authentication factor and prioritizes both security and individual control, ensuring that organizations and users can keep pace with these evolving threats."

[3]

It's Almost Impossible to Spot Deepfakes Now... as This Quiz Proves

While many people like to think that they're immune from being fooled by artificial intelligence, it turns out that the vast majority of people cannot accurately determine what's real and what's fake. So, can you do any better?

Only 0.1% of People Can Detect Deepfakes

A company called iProov, which describes itself as "the world's leading provider of science-based solutions for biometric identity verification," recently conducted a study to determine how many people can identify deepfakes. And the results are astounding.

For the uninitiated, deepfakes are AI-generated images and videos designed to be so realistic that they fool people into thinking they're real. They've been used to make people think they're talking to celebrities, or that politicians have said things they never actually said.

iProov's study saw 2,000 people from the US and the UK determining whether images and videos were real or deepfaked. And, according to its press release, just two people, or 0.1 percent of the participants, achieved a perfect score.

Reacting to the study, Andrew Bud, founder and CEO of iProov, said: "Just 0.1% of people could accurately identify the deepfakes, underlining how vulnerable both organizations and consumers are to the threat of identity fraud in the age of deepfakes. And even when people do suspect a deepfake, our research tells us that the vast majority of people take no action at all. Criminals are exploiting consumers' inability to distinguish real from fake imagery, putting our personal information and financial security at risk."

Before writing this article, I took iProov's quiz myself. To be perfectly frank, not only did I do pretty well, scoring 9 out of 10, but I found it pretty easy, as my time of just 44 seconds shows. Then again, I did get one wrong, proving the fallibility of humans when it comes to detecting deepfakes.

Can You Tell What's Real, and What's Fake?

You can take the iProov deepfake quiz yourself to see how you fare.
And if you manage to correctly classify all 10 as real or fake, you will not only outscore me, you'll outscore 99.9 percent of the study's participants.

However, regardless of how well you do, or how badly the original 2,000 participants did, deepfakes are a serious issue. They're already out there, being used to spread misinformation and scam people out of money, and the technology is only going to get better.

So, it's not only on us to improve our understanding of artificial intelligence in order to better tell what's real from what's fake; the technology companies at the forefront of this revolution must also offer greater protections.

A recent study by iProov reveals that only 2 out of 2,000 participants could accurately distinguish between real and AI-generated deepfake content, highlighting the growing threat of misinformation and identity fraud in the digital age.

iProov Study Reveals Alarming Inability to Detect Deepfakes

A recent study conducted by iProov, a leading provider of biometric identity verification solutions, has uncovered a startling reality about the public's ability to detect deepfakes. The research, involving 2,000 participants from the UK and US, found that only 0.1% of individuals could accurately differentiate between real and AI-generated content [1].

Key Findings of the Study

The study exposed participants to a mix of genuine and deepfake images and videos. The results were concerning:

- Only two out of 2,000 participants achieved a perfect score in identifying deepfakes [2].
- Older adults were particularly vulnerable, with 30% of those aged 55-64 and 39% of those over 65 having never heard of deepfakes before the study [1].
- Younger participants (18-34) showed more confidence in their ability to detect deepfakes but did not perform significantly better [1].
- Detecting deepfake videos proved more challenging than identifying synthetic images, with participants 36% less likely to accurately identify fake videos than fake images [2].

Social Media and Deepfake Prevalence

The study highlighted social media platforms as major sources of deepfake content:

- 49% of participants identified Meta platforms (Facebook and Instagram) as common sources of deepfakes [1].
- 47% pointed to TikTok as another significant platform for deepfake content [1].

Implications and Concerns

Andrew Bud, founder and CEO of iProov, emphasized the vulnerability of both organizations and consumers to identity fraud in the age of deepfakes [2]. The study revealed several alarming trends:

- 22% of consumers had never heard of deepfakes before participating in the study [1].
- Over 60% of participants believed they could identify deepfakes, despite poor performance in the test [1].
- 49% reported decreased trust in social media platforms after learning about deepfakes [1].
- 74% expressed concerns about the societal impact of deepfakes, with 68% worried about the spread of misinformation [1].

Response and Recommendations

The study's findings underscore the need for enhanced awareness and technological solutions:

- iProov suggests that human perception alone is insufficient for reliable deepfake detection [2].
- Bud emphasizes the necessity for biometric security solutions with liveness detection to combat sophisticated deepfake threats [2].
- Organizations are urged to implement robust security measures to protect their customers [2].
- Professor Edgar Whitley, a digital identity expert, warns against relying solely on human judgment for deepfake detection [1].

The Growing Threat of Deepfakes

iProov's 2024 Threat Intelligence Report indicated a 704% increase in face swaps, highlighting the escalating use of deepfakes by cybercriminals seeking unauthorized access to sensitive data [1]. This trend emphasizes the urgent need for improved detection methods and increased public awareness to combat deepfake-related threats in our increasingly digital world.

References

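Summarized by Navi

[1] 2000 people took a deepfake test -- only 2 got it right

[2] In a test, 2000 people were shown deepfake content, and only two of them managed to get a perfect score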
