Over 800 Public Figures Call for Ban on AI Superintelligence Development

28 Sources

[1]

A long list of public figures are calling for a ban on superintelligent AI.

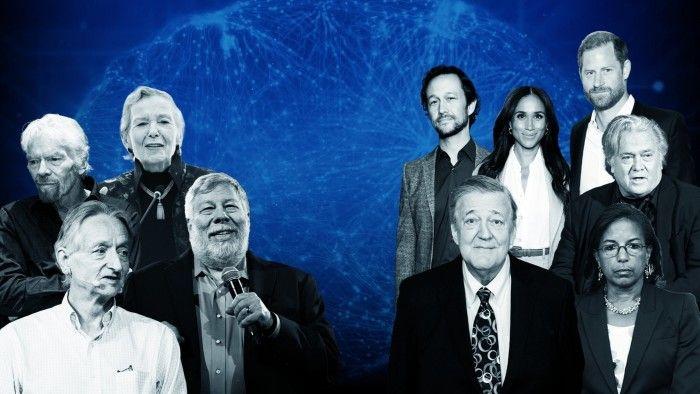

The initiative, announced Wednesday by the Future of Life Institute, put together an open letter signed by public figures from Nobel Laureates and national security experts to prominent AI researchers and religious leaders -- calling for "a prohibition on the development of superintelligence until the technology is reliably safe and controllable, and has public buy-in - which it sorely lacks." Signatories included actor Joseph Gordon-Levitt; musician will.i.am; leading computer scientist Geoffrey Hinton; billionaire investor Richard Branson; and Apple co-founder Steve Wozniak.

[2]

Prince Harry, Geoffrey Hinton Call for Ban on AI Superintelligence

Prince Harry and Meghan, the Duke and Duchess of Sussex, Steve Bannon and artificial intelligence pioneer Geoffrey Hinton are part of a group calling for a ban on AI superintelligence until that technology can be deployed safely. In a statement organized by the nonprofit the Future of Life Institute, the group of scientists and other public figures advocated for a prohibition on the development of superintelligence -- or AI that is vastly more capable than humans -- until there is "broad scientific consensus that it will be done safely and controllably." Other notable signatories include Apple Inc. co-founder Steve Wozniak, economist Daron Acemoglu, and former National Security Adviser Susan Rice.

[3]

Steve Bannon and Meghan Markle among 800 public figures calling for AI 'superintelligence' ban

Steve Bannon, Meghan Markle and Stephen Fry have joined a group of public figures calling for a "prohibition" on the development of so-called superintelligence in an unlikely alliance against advanced artificial intelligence systems. More than 800 people including AI scientists, politicians, celebrities and religious leaders have signed a statement to prevent the creation of "superintelligence", AI systems that are more intelligent than most humans. "We call for a prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in," the statement said. Its signatories include the godfathers of AI Geoffrey Hinton and Yoshua Bengio, former Ireland president Mary Robinson and Prince Harry. The Future of Life Institute (FLI), a non-profit campaign group, published the letter on Wednesday along with a poll showing 5 per cent of Americans support "the current status quo of unregulated development". Nearly three-quarters of respondents were in favour of robust regulation, according to the survey conducted by the campaign group. The Institute's president Max Tegmark told the Financial Times: "It is our humanity that brings us all together here . . . More and more people are starting to think that the biggest threat isn't the other company or even the other country but maybe the machines we are building." Several leading Big Tech groups and AI start-ups including OpenAI, Meta and Google are locked in a fierce competition to be the first to develop "superintelligence" or artificial general intelligence. Both terms generally refer to AI systems that can outperform humans on most tasks. In March 2023, five months after the launch of ChatGPT, tech experts including Elon Musk released a similar statement the FLI organised, which called for a six-month moratorium on all AI development. However, Musk's xAI continues to build AI systems. 
The latest statement is narrower and is "absolutely not calling for a pause on AI development", Tegmark said. "You don't need superintelligence for curing cancer, for self-driving cars, or to massively improve productivity and efficiency," Tegmark added. Several prominent Chinese scientists have signed the statement, including Andrew Yao and Ya-Qin Zhang, former president of Baidu. Other signatories include former government officials Susan Rice, national security adviser under then-president Barack Obama, and Mike Mullen, chairman of the joint chiefs of staff in the Obama and George W Bush administrations. Tegmark said: "Loss of control is something that is viewed as a national security threat both by the West and in China. They will be against it for their own self-interests, so they don't need to trust each other at all." Other signatories include Apple co-founder Steve Wozniak and Virgin co-founder Richard Branson, as well as faith leaders across religions. The global regulatory landscape for AI is moving slowly, with the most advanced legislation, the EU AI Act, being rolled out in stages despite fierce criticism from industry. In the US, states including California, Utah and Texas have enacted specific laws on AI. A proposed 10-year moratorium on AI regulation was pulled from the federal budget bill in July.

[4]

Steve Wozniak, Prince Harry and 800 others want a ban on AI 'superintelligence'

More than 800 public figures including Steve Wozniak and Prince Harry, along with AI scientists, former military leaders and CEOs signed a statement demanding a ban on AI work that could lead to superintelligence, The Financial Times reported. "We call for a prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in," it reads. The signers include a wide mix of people across sectors and political spectrums, including AI researcher and Nobel prize winner Geoffrey Hinton, former Trump aide Steve Bannon, one-time Joint Chiefs of Staff Chairman Mike Mullen and rapper Will.i.am. The statement comes from the Future of Life Institute, which said that AI developments are occurring faster than the public can comprehend. "We've, at some level, had this path chosen for us by the AI companies and founders and the economic system that's driving them, but no one's really asked almost anybody else, 'Is this what we want?'" the institute's executive director, Anthony Aguirre, told NBC News. Artificial general intelligence (AGI) refers to the ability of machines to reason and perform tasks as well as a human can, while superintelligence would enable AI to do things better than even human experts. That potential ability has been cited by critics (and the culture in general) as a grave risk to humanity. So far, though, AI has proven itself to be useful only for a narrow range of tasks and consistently fails to handle complex tasks like self-driving. Despite the lack of recent breakthroughs, companies like OpenAI are pouring billions into new AI models and the data centers needed to run them. Meta CEO Mark Zuckerberg recently said that superintelligence was "in sight," while X CEO Elon Musk said superintelligence "is happening in real time" (Musk has also famously warned about the potential dangers of AI). 
OpenAI CEO Sam Altman said he expects superintelligence to happen by 2030 at the latest. None of those leaders, nor anyone notable from their companies, signed the statement. It's far from the only call for a slowdown in AI development. Last month, more than 200 researchers and public officials, including 10 Nobel Prize winners and multiple artificial intelligence experts, released an urgent call for a "red line" against the risks of AI. However, that letter referred not to superintelligence, but dangers already starting to materialize like mass unemployment, climate change and human rights abuses. Other critics are sounding alarms around a potential AI bubble that could eventually pop and take the economy down with it.

[5]

Over 800 public figures, including Apple co-founder Steve Wozniak and Virgin's Richard Branson urge AI 'superintelligence' ban

The statement from various public figures calls for a prohibition on superintelligence development until it can be done safely and controllably. A group of prominent figures, including artificial intelligence and technology experts, has called for an end to efforts to create 'superintelligence' -- a form of AI that would surpass human intellect. More than 800 people, including Apple cofounder Steve Wozniak and former U.S. National Security Advisor Susan Rice, signed a statement published Wednesday calling for a pause on the development of superintelligence. The list of signatories notably includes prominent AI leaders, including scientists like Yoshua Bengio and Geoff Hinton, who are widely considered "godfathers" of modern AI. Leading AI safety researchers like UC Berkeley's Stuart Russell also signed on. Superintelligence has become a buzzword in the AI world, as companies from xAI to OpenAI compete to release more advanced large language models. Meta notably has gone so far as to name its LLM division the 'Meta Superintelligence Labs.' But signatories of the recent statement warn that the prospect of superintelligence has "raised concerns, ranging from human economic obsolescence and disempowerment, losses of freedom, civil liberties, dignity, and control, to national security risks and even potential human extinction." The statement calls for a prohibition on superintelligence development until strong public buy-in and a broad scientific consensus that it can be done safely and controllably are reached. In addition to the AI figures, the names behind the statement come from a broad coalition of academics, media personalities, religious leaders and ex-politicians. 
Other prominent names include Virgin's Richard Branson, former chairman of the Joint Chiefs of Staff Mike Mullen, and British royal family member Meghan Markle. Prominent media allies of U.S. President Donald Trump, including Steve Bannon and Glenn Beck, also signed on. As of Wednesday, the list of signatories was still growing.

[6]

Prince Harry, Meghan join call for ban on development of AI 'superintelligence'

Prince Harry and his wife Meghan have joined prominent computer scientists, economists, artists, evangelical Christian leaders and American conservative commentators Steve Bannon and Glenn Beck to call for a ban on AI "superintelligence" that threatens humanity. The letter, released Wednesday by a politically and geographically diverse group of public figures, is squarely aimed at tech giants like Google, OpenAI and Meta Platforms that are racing each other to build a form of artificial intelligence designed to surpass humans at many tasks. The 30-word statement says: "We call for a prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in." In a preamble, the letter notes that AI tools may bring health and prosperity, but alongside those tools, "many leading AI companies have the stated goal of building superintelligence in the coming decade that can significantly outperform all humans on essentially all cognitive tasks. This has raised concerns, ranging from human economic obsolescence and disempowerment, losses of freedom, civil liberties, dignity, and control, to national security risks and even potential human extinction." Prince Harry added in a personal note that "the future of AI should serve humanity, not replace it. I believe the true test of progress will be not how fast we move, but how wisely we steer. There is no second chance." Signing alongside the Duke of Sussex was his wife Meghan, the Duchess of Sussex. "This is not a ban or even a moratorium in the usual sense," wrote another signatory, Stuart Russell, an AI pioneer and computer science professor at the University of California, Berkeley. "It's simply a proposal to require adequate safety measures for a technology that, according to its developers, has a significant chance to cause human extinction. Is that too much to ask?" 
Also signing were AI pioneers Yoshua Bengio and Geoffrey Hinton, co-winners of the Turing Award, computer science's top prize. Hinton also won a Nobel Prize in physics last year. Both have been vocal in bringing attention to the dangers of a technology they helped create. But the list also has some surprises, including Bannon and Beck, in an attempt by the letter's organizers at the nonprofit Future of Life Institute to appeal to President Donald Trump's Make America Great Again movement even as Trump's White House staff has sought to reduce limits to AI development in the U.S. Also on the list are Apple co-founder Steve Wozniak; British billionaire Richard Branson; the former Chairman of the U.S. Joint Chiefs of Staff Mike Mullen, who served under Republican and Democratic administrations; and Democratic foreign policy expert Susan Rice, who was national security adviser to President Barack Obama. Former Irish President Mary Robinson and several British and European parliamentarians signed, as did actors Stephen Fry and Joseph Gordon-Levitt, and musician will.i.am, who has otherwise embraced AI in music creation. "Yeah, we want specific AI tools that can help cure diseases, strengthen national security, etc.," wrote Gordon-Levitt, whose wife Tasha McCauley served on OpenAI's board of directors before the upheaval that led to CEO Sam Altman's temporary ouster in 2023. "But does AI also need to imitate humans, groom our kids, turn us all into slop junkies and make zillions of dollars serving ads? Most people don't want that." The letter is likely to provoke ongoing debates within the AI research community about the likelihood of superhuman AI, the technical paths to reach it and how dangerous it could be. "In the past, it's mostly been the nerds versus the nerds," said Max Tegmark, president of the Future of Life Institute and a professor at the Massachusetts Institute of Technology. 
"I feel what we're really seeing here is how the criticism has gone very mainstream." Confounding the broader debates is that the same companies that are striving toward what some call superintelligence and others call artificial general intelligence, or AGI, are also sometimes inflating the capabilities of their products, which can make them more marketable and have contributed to concerns about an AI bubble. OpenAI was recently met with ridicule from mathematicians and AI scientists when its researcher claimed ChatGPT had figured out unsolved math problems -- when what it really did was find and summarize what was already online. "There's a ton of stuff that's overhyped and you need to be careful as an investor, but that doesn't change the fact that -- zooming out -- AI has gone much faster in the last four years than most people predicted," Tegmark said. Tegmark's group was also behind a March 2023 letter -- still in the dawn of a commercial AI boom -- that called on tech giants to pause the development of more powerful AI models temporarily. None of the major AI companies heeded that call. And the 2023 letter's most prominent signatory, Elon Musk, was at the same time quietly founding his own AI startup to compete with those he wanted to take a 6-month pause. Asked if he reached out to Musk again this time, Tegmark said he wrote to the CEOs of all major AI developers in the U.S. but didn't expect them to sign. "I really empathize for them, frankly, because they're so stuck in this race to the bottom that they just feel an irresistible pressure to keep going and not get overtaken by the other guy," Tegmark said. "I think that's why it's so important to stigmatize the race to superintelligence, to the point where the U.S. government just steps in."

[7]

Over 800 public figures, including "AI godfathers" and Steve Wozniak, sign open letter to ban superintelligent AI

A hot potato: Tech companies are in a race to create superintelligent AI, regardless of what the consequences of creating something much smarter than humans might be. It's led to hundreds of public figures from the world of tech, politics, media, education, religion, and even royalty signing an open letter calling for a ban on the development of superintelligence until certain conditions are met. Among the 800+ signatories are two of the "Godfathers of AI," Geoffrey Hinton and Yoshua Bengio. Apple co-founder Steve Wozniak and Virgin Group founder Richard Branson are on the list, too. Former chief strategist to Donald Trump, Steve Bannon, former Joint Chiefs of Staff Chairman Mike Mullen, Glenn Beck, Joseph Gordon-Levitt, and musicians Will.I.am and Grimes also signed the letter. Even Prince Harry and Meghan, the Duke and Duchess of Sussex, feel strongly about the subject. The letter calls for a prohibition on the development of superintelligent AI until there is both broad scientific consensus that it will be done safely and controllably and there is strong public support. The statement, organized by the AI safety group Future of Life Institute (FLI), acknowledges that AI tools may have benefits such as unprecedented health and prosperity, but the companies' goal of creating superintelligence in the coming decade that can significantly outperform all humans on essentially all cognitive tasks has raised concerns. The usual worries were cited: AI taking so many jobs that it leads to human economic obsolescence, disempowerment, loss of freedom, civil liberties, dignity, and control, and national security risks. The potential for total human extinction is also mentioned. Last week, the FLI said that OpenAI had issued subpoenas to it and its president as a form of retaliation for calling for AI oversight. 
According to a US poll that surveyed 2,000 adults, most AI companies' mantra of "move fast and break things" when it comes to developing the technology is supported by just 5% of people. Almost three quarters of Americans want robust regulation on advanced AI, and six out of ten agree that it should not be created until it is proven to be safe or controllable. A recent Pew Center survey found that in the US, 44% of people trust the government to regulate AI and 47% are distrustful. Sam Altman recently gave another prediction on when superintelligence will arrive. The OpenAI boss said it will be here by 2030, adding that up to 40% of tasks that happen in the economy today will be taken over by AI in the not very distant future. Meta is also chasing superintelligence. CEO Mark Zuckerberg said it is close and will "empower" individuals. The company recently split its Meta Superintelligence Labs into four smaller groups, so the technology might be further away than Zuckerberg predicted. Ultimately, it's unlikely the letter will prompt AI companies to slow their superintelligence development. A similar letter in 2023, which was signed by Elon Musk, had little to no effect.

[8]

Prince Harry and Steve Bannon Join Forces Against Superintelligence Development

A ragtag group of public figures has united under one message: the big tech world should not rush towards AI superintelligence. Superintelligence (also known as AGI) is a hypothetical AI system that could outperform human intelligence on virtually all scales. It is the holy grail of the AI industry, and big tech companies have spent enormous amounts of money and resources to be the first to achieve it. Meta, for example, has a whole division and a multibillion-dollar spending spree dedicated to this goal. Meta CEO Mark Zuckerberg claims superintelligence is "in sight," but other experts are more skeptical of the timeline or even the potential for the technology to ever reach that level of sophistication. But even those who think superintelligence is achievable don't agree with the way AI is evolving towards it. That includes the more than 1,300 and counting signatories to the "Statement on Superintelligence," put forth by the Future of Life Institute. "We call for a prohibition on the development of superintelligence, not lifted before there is 1. broad scientific consensus that it will be done safely and controllably, and 2. strong public buy-in," the statement reads. Both those conditions are currently lacking. Many top computer scientists were among the signatories of this letter raising concerns about the safe development of superintelligence. Chief among them were Apple co-founder Steve Wozniak, Geoffrey Hinton and Yoshua Bengio, two scientists who are deemed "the godfathers of AI," and Stuart Russell, a computer science professor at UC Berkeley who is considered one of the most respected figures in the AI world. "This is not a ban or even a moratorium in the usual sense. It's simply a proposal to require adequate safety measures for a technology that, according to its developers, has a significant chance to cause human extinction. Is that too much to ask?" Russell said in a public statement accompanying the letter. There is also limited public buy-in. 
According to a Pew Research survey published last week, public concern about AI's increased use in daily life outweighs excitement globally. When broken down into countries, Americans were the most concerned. The signatories are not against AI; the statement even applauds the many benefits it can bring to society. But they say that the tech industry's rush to build superintelligence raises concerns "ranging from human economic obsolescence and disempowerment, losses of freedom, civil liberties, dignity, and control, to national security risks and even potential human extinction." The statement has brought together an eclectic group of people from all industries and both sides of the political divide. Among the signatories are right-wing media figures and Trump allies Steve Bannon and Glenn Beck, and Obama-era national security advisor and Biden-era Domestic Policy Council director Susan Rice. There are former congressmen from both sides of the aisle, the former UN High Commissioner for Human Rights Mary Robinson, the Pope's AI advisor, Friar Paolo Benanti, and even the Duke and Duchess of Sussex, Prince Harry and Meghan. The signatories also include actors like Joseph Gordon-Levitt, who published an op-ed against Meta's AI chatbots with the New York Times, musicians like Will.I.am and Grimes, and authors like Yuval Noah Harari. "Superintelligence would likely break the very operating system of human civilization - and is completely unnecessary," Harari said. "If we instead focus on building controllable AI tools to help real people today, we can far more reliably and safely realize AI's incredible benefits." It's not the first letter in which public figures have come together to warn the public and the industry of the dangers of AI. 
Back in 2023, a group of AI executives, including OpenAI CEO Sam Altman and Anthropic CEO Dario Amodei, signed a letter asking governments around the world to make the mitigation of the risk of extinction from AI a top priority in the same way pandemics and nuclear war are treated. Another 2023 open letter, also by the Future of Life Institute, had more than 33 thousand signatories, including the likes of Elon Musk, calling for a six-month pause on AI experiments that were training models more powerful than GPT-4. The pause was ignored, and OpenAI released GPT-4o last year and GPT-5 earlier this year. Both models were at the center of controversy this year when users revolted in grief after GPT-5 replaced GPT-4o, a model that has been criticized for evoking emotional reliance and addictive behavior in users. Pointedly, this new Statement on Superintelligence includes comments from notable people in the AI industry who did not sign. Those names are Altman, Amodei, Mustafa Suleyman (CEO of Microsoft AI), David Sacks (White House AI and Crypto Czar), and Elon Musk (you know who he is). The AI industry is innovating at breakneck speed and hurtling towards superintelligence with no regulatory guardrails. Even many leading figures who haven't signed the statement on superintelligence have themselves warned of the potential risks associated with this. "Development of superhuman machine intelligence (SMI) is probably the greatest threat to the continued existence of humanity," OpenAI CEO Sam Altman said in a blog post from 2015.

[9]

Nobel winners and celebrities challenge Silicon Valley's vision for the future

[Photo: Prince Harry, the Duke of Sussex, speaks at a Project Healthy Minds event on Oct. 10 in New York. (Evan Agostini/Invision/AP)]

SAN FRANCISCO -- The tech industry is spending billions of dollars in a drive to upgrade today's artificial intelligence tools into so-called superintelligence, a fuzzy term used by companies like Meta and OpenAI to invoke future software they hope will outperform humans in every way. On Wednesday, a loose coalition of Nobel laureates, policymakers, celebrities and British royalty said that work on the hypothetical technology must be halted. "We call for a prohibition on the development of superintelligence," said a public statement with more than 700 signatories, including prominent AI researchers, Prince Harry, former Trump adviser Stephen K. Bannon and the Obama administration's national security adviser Susan Rice. The ban should be lifted only after "strong public buy-in" and scientific consensus that the technology can be made safe, the statement said, without specifying how either could be measured. Most AI experts in industry and academia do not think such technology is imminent, despite moves like Meta CEO Mark Zuckerberg spending about $15 billion to launch a new "superintelligence lab" this summer. Tech firms and national governments are competing to speed up AI development, making any ban unlikely. But the statement, coordinated by the nonprofit Future of Life Institute, comes at a time of renewed debate in Washington, Silicon Valley and across the nation about whether the potentially disruptive effects of existing AI technology require new regulations. Half of Americans are more concerned about AI than they are excited about it, according to a spring survey by the Pew Research Center. A Gallup poll from around the same time found that 80 percent of Americans supported rules for AI even if they slowed down its technological development. 
The Trump administration has aligned itself with tech figures who favor minimal regulation, rolling back AI rules introduced by President Joe Biden and supporting a proposed federal moratorium on state AI regulation. But federal and state lawmakers have launched dozens of efforts to regulate AI technology. More than half of U.S. states have passed AI-related laws, creating a patchwork of regulations across the country. In response, Meta and venture capital firm Andreessen Horowitz in recent months set up political action committees to support candidates that oppose strict AI regulation. "The state of AI regulation is rapidly evolving. As public use of AI tools surges, policymakers are digging in," said Katy Milner, a partner at law firm Hogan Lovells who works with technology companies. "Congress and the states have introduced hundreds of AI-related bills on issues such as data privacy and government use, with a few already enacted and many more bills likely to follow." Few of those bills are likely to focus on the kind of speculative AI technology targeted in Wednesday's statement released by the Future of Life Institute. The nonprofit organized an open letter in 2023 in which some business leaders and AI researchers called for a six-month "pause" on the training of more capable AI, but that did not appear to slow down the tech industry. "Many people want powerful AI tools for science, medicine, productivity and other benefits. But the path AI corporations are taking, of racing toward smarter-than-human AI that is designed to replace people, is wildly out of step with what the public wants," said Anthony Aguirre, co-founder and executive director of the Future of Life Institute. Wednesday's statement was also signed by actor Joseph Gordon-Levitt and several Nobel laureates in physics, including Geoffrey Hinton, an AI researcher widely seen as laying the groundwork for today's AI tools. 
Hinton left Google in 2023 to speak more freely about his concerns about AI technology. States like California have focused on regulations on potential harms of AI tools in daily use such as chatbots or requiring more transparency from companies developing AI. In September, California Gov. Gavin Newsom (D) signed a law that requires AI companies to publish safety reports detailing potential "catastrophic risks" of their technology -- such as enabling hackers to break encryption software or terrorists to make new bioweapons. This month, Newsom signed the nation's first law regulating how AI chatbots can interact with minors, after three U.S. families alleged in separate lawsuits that an AI chatbot had contributed to a teen's death by suicide. In Washington, some Republican politicians continue to fight against AI regulation at both the federal and state level. Proponents of unfettered AI development nearly passed a 10-year moratorium on most state-level AI laws as part of Republicans' sweeping tax and immigration bill earlier this year. It ultimately failed in the Senate after opponents of the measure including labor and children's safety advocates joined state lawmakers and attorneys general from both major parties in criticizing the moratorium. They alleged it was an infringement on states' rights and a giveaway to Big Tech. In a bid to resurrect the idea of a federal shield for AI firms, President Donald Trump in July released an "AI Action Plan" that called for blocking certain federal funding from states that pass AI laws deemed "burdensome." Sen. Ted Cruz (R-Texas) has introduced a bill that would allow AI companies to apply for two-year exemptions from external regulations.

[10]

Harry and Meghan join AI pioneers in call for ban on superintelligent systems

Nobel laureates also sign letter saying ASI technology should be barred until there is consensus that it can be developed 'safely' The Duke and Duchess of Sussex have joined artificial intelligence pioneers and Nobel laureates in calling for a ban on developing superintelligent AI systems. Harry and Meghan are among the signatories of a statement calling for "a prohibition on the development of superintelligence". Artificial superintelligence (ASI) is the term for AI systems, yet to be developed, that exceed human levels of intelligence at all cognitive tasks. The statement calls for the ban to stay in place until there is "broad scientific consensus" on developing ASI "safely and controllably" and once there is "strong public buy-in". It has also been signed by the AI pioneer and Nobel laureate Geoffrey Hinton, along with his fellow "godfather" of modern AI, Yoshua Bengio; the Apple co-founder Steve Wozniak; the UK entrepreneur Richard Branson; Susan Rice, a former US national security adviser under Barack Obama; the former Irish president Mary Robinson, and the British author and broadcaster Stephen Fry. Other Nobel laureates who signed include Beatrice Fihn, Frank Wilczek, John C Mather, and Daron Acemoğlu. The statement, targeted at governments, tech firms and lawmakers, was organised by the Future of Life Institute (FLI), a US-based AI safety group that called for a hiatus in developing powerful AI systems in 2023, soon after the emergence of ChatGPT made AI a political and public talking point around the world. In July, Mark Zuckerberg, the chief executive of the Facebook parent Meta, one of the big AI developers in the US, said development of superintelligence was "now in sight". However, some experts have said talk of ASI reflects competitive positioning among tech companies spending hundreds of billions of dollars on AI this year alone, rather than the sector being close to achieving any technical breakthroughs. 
Nonetheless, FLI says the prospect of ASI being achieved "in the coming decade" carries a host of threats ranging from taking all human jobs to losses of civil liberties, exposing countries to national security risks and even threatening humanity with extinction. Existential fears about AI focus on the potential ability of a system to evade human control and safety guidelines and trigger actions contrary to human interests. FLI released a US national poll showing that approximately three-quarters of Americans want robust regulation on advanced AI, with six out of 10 believing that superhuman AI should not be made until it is proven safe or controllable. The survey of 2,000 US adults added that only 5% supported the status quo of fast, unregulated development. The leading AI companies in the US, including the ChatGPT developer OpenAI and Google, have made the development of artificial general intelligence - the theoretical state where AI matches human levels of intelligence at most cognitive tasks - an explicit goal of their work. Although this is one notch below ASI, some experts also warn it could carry an existential risk by, for instance, being able to improve itself towards reaching superintelligent levels, while also carrying an implicit threat for the modern labour market.

[11]

Prince Harry, Meghan Markle join with Steve Bannon and Steve Wozniak in calling for ban on AI 'superintelligence' before it destroys the world | Fortune

Prince Harry and his wife Meghan have joined prominent computer scientists, economists, artists, evangelical Christian leaders and American conservative commentators Steve Bannon and Glenn Beck to call for a ban on AI "superintelligence" that threatens humanity. The letter, released Wednesday by a politically and geographically diverse group of public figures, is squarely aimed at tech giants like Google, OpenAI and Meta Platforms that are racing each other to build a form of artificial intelligence designed to surpass humans at many tasks. The 30-word statement says: "We call for a prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in." In a preamble, the letter notes that AI tools may bring health and prosperity, but alongside those tools, "many leading AI companies have the stated goal of building superintelligence in the coming decade that can significantly outperform all humans on essentially all cognitive tasks. This has raised concerns, ranging from human economic obsolescence and disempowerment, losses of freedom, civil liberties, dignity, and control, to national security risks and even potential human extinction." Prince Harry added in a personal note that "the future of AI should serve humanity, not replace it. I believe the true test of progress will be not how fast we move, but how wisely we steer. There is no second chance." Signing alongside the Duke of Sussex was his wife Meghan, the Duchess of Sussex. "This is not a ban or even a moratorium in the usual sense," wrote another signatory, Stuart Russell, an AI pioneer and computer science professor at the University of California, Berkeley. "It's simply a proposal to require adequate safety measures for a technology that, according to its developers, has a significant chance to cause human extinction. Is that too much to ask?" 
Also signing were AI pioneers Yoshua Bengio and Geoffrey Hinton, co-winners of the Turing Award, computer science's top prize. Hinton also won a Nobel Prize in physics last year. Both have been vocal in bringing attention to the dangers of a technology they helped create. But the list also has some surprises, including Bannon and Beck, in an attempt by the letter's organizers at the nonprofit Future of Life Institute to appeal to President Donald Trump's Make America Great Again movement even as Trump's White House staff has sought to reduce limits to AI development in the U.S. Also on the list are Apple co-founder Steve Wozniak; British billionaire Richard Branson; the former Chairman of the U.S. Joint Chiefs of Staff Mike Mullen, who served under Republican and Democratic administrations; and Democratic foreign policy expert Susan Rice, who was national security adviser to President Barack Obama. Former Irish President Mary Robinson and several British and European parliamentarians signed, as did actors Stephen Fry and Joseph Gordon-Levitt, and musician will.i.am, who has otherwise embraced AI in music creation. "Yeah, we want specific AI tools that can help cure diseases, strengthen national security, etc.," wrote Gordon-Levitt, whose wife Tasha McCauley served on OpenAI's board of directors before the upheaval that led to CEO Sam Altman's temporary ouster in 2023. "But does AI also need to imitate humans, groom our kids, turn us all into slop junkies and make zillions of dollars serving ads? Most people don't want that." The letter is likely to provoke ongoing debates between the AI research community about the likelihood of superhuman AI, the technical paths to reach it and how dangerous it could be. "In the past, it's mostly been the nerds versus the nerds," said Max Tegmark, president of the Future of Life Institute and a professor at the Massachusetts Institute of Technology. 
"I feel what we're really seeing here is how the criticism has gone very mainstream." Complicating the broader debates is that the same companies that are striving toward what some call superintelligence and others call artificial general intelligence, or AGI, are also sometimes inflating the capabilities of their products, which can make them more marketable and have contributed to concerns about an AI bubble. OpenAI was recently met with ridicule from mathematicians and AI scientists when its researcher claimed ChatGPT had figured out unsolved math problems -- when what it really did was find and summarize what was already online. "There's a ton of stuff that's overhyped and you need to be careful as an investor, but that doesn't change the fact that -- zooming out -- AI has gone much faster in the last four years than most people predicted," Tegmark said. Tegmark's group was also behind a March 2023 letter -- still in the dawn of a commercial AI boom -- that called on tech giants to pause the development of more powerful AI models temporarily. None of the major AI companies heeded that call. And the 2023 letter's most prominent signatory, Elon Musk, was at the same time quietly founding his own AI startup to compete with those he wanted to take a 6-month pause. Asked if he reached out to Musk again this time, Tegmark said he wrote to the CEOs of all major AI developers in the U.S. but didn't expect them to sign. "I really empathize for them, frankly, because they're so stuck in this race to the bottom that they just feel an irresistible pressure to keep going and not get overtaken by the other guy," Tegmark said. "I think that's why it's so important to stigmatize the race to superintelligence, to the point where the U.S. government just steps in."

[12]

Hundreds of Power Players, From Steve Wozniak to Steve Bannon, Just Signed a Letter Calling for Prohibition on Development of AI Superintelligence

Hundreds of public figures -- including multiple AI "godfathers" and a staggeringly idiosyncratic array of religious, media, and tech figures -- just signed a letter calling for a "prohibition" on the race to build AI superintelligence. Simply titled the "Statement on Superintelligence," the letter, which was put forward by the Future of Life Institute (FLI), is extremely concise: it calls for a "prohibition on the development of superintelligence," which it says should not be "lifted before there is broad scientific consensus that it will be done safely and controllably" as well as with "strong public buy-in." The letter cites recent polling from FLI, which was cofounded by the Massachusetts Institute of Technology professor Max Tegmark, showing that only five percent of Americans are in favor of the rapid and unregulated development of advanced AI tools, while more than 73 percent support "robust" regulatory action on AI. Around 64 percent, meanwhile, said they felt that until superintelligence -- or an AI model that surpasses human-level intelligence -- could be proven to be safe or controllable, it shouldn't be built. Signatories include prominent tech and business figures like Apple cofounder Steve Wozniak and Virgin founder Richard Branson; influential right-wing media voices like "War Room" host Steve Bannon and talk radio host Glenn Beck, as well as left-leaning entertainers like Joseph Gordon-Levitt; Prince Harry and Meghan, Duke and Duchess of Sussex; Mike Mullen, the retired US Navy Admiral who served as Chairman of the Joint Chiefs of Staff under former presidents George W. Bush and Barack Obama; friar Paolo Benanti, who serves as the Pope's AI advisor; and a large consortium of AI experts and scientists including Turing award winner Yoshua Bengio and Nobel Prize laureate Geoffrey Hinton, two consequential AI researchers who each hold the title "godfather of AI." 
"Frontier AI systems could surpass most individuals across most cognitive tasks within just a few years," Bengio, a Professor at the University of Montreal, said in a press release. "These advances could unlock solutions to major global challenges, but they also carry significant risks." "To safely advance toward superintelligence, we must scientifically determine how to design AI systems that are fundamentally incapable of harming people, whether through misalignment or malicious use," he added. "We also need to make sure the public has a much stronger say in decisions that will shape our collective future." Crucially, the letter takes the position that superintelligence -- a lofty, scifi-esque vision for AI's future -- is an achievable technical goal, a position that some experts are skeptical of (or at least, believe to be a long way off.) It's also worth pointing out that the letter doesn't clarify the reality that AI doesn't have to reach superintelligence to cause chaos: as it stands, generative AI tools like chatbots and image and video-creation tools -- primitive technologies when compared against imagined future superintelligent AI systems -- are upending education, transforming the web into an increasingly misinformation-prone and unreal environment, expediting the creation and dissemination of nonconsensual and illegal pornography, and sending users of all ages spinning into mental health crises and reality breaks that have resulted in outcomes like divorce, homelessness, jail, involuntary commitments, self-harm, and death. It's also interesting who didn't sign the letter. Notable missing names include OpenAI CEO Sam Altman, DeepMind cofounder and Microsoft AI CEO Mustafa Suleyman, Anthropic CEO Dario Amodei, White House AI and crypto czar David Sacks, and xAI founder Elon Musk, the latter of whom signed a previous FLI letter from 2023 calling for a pause on the development of AI models more advanced than OpenAI's GPT-4. 
(That letter, of course, pretty much did nothing: GPT-5 was released this past summer.) Altman, too, has signed similar letters calling for awareness about large-scale future AI risks, making silence at this juncture striking. Given that this is one of multiple please-stop-advanced-AI-development-until-we-regulate letters to crop up since the release of ChatGPT in late 2022, whether this one will prove to have any bite is an open question. Still, the latest FLI letter does highlight the breadth of ideologies united on the belief that AI should be regulated, and that how we build AI, and by whom, should be a democratic process. In other words, the public should have a say in what humanity's technological future looks like -- and shaping AI development shouldn't be done in a Wild West-like Silicon Valley vacuum lacking regulatory oversight and accountability. "Many people want powerful AI tools for science, medicine, productivity, and other benefits," FLI cofounder Anthony Aguirre said in a press release. "But the path AI corporations are taking, of racing toward smarter-than-human AI that is designed to replace people, is wildly out of step with what the public wants, scientists think is safe, or religious leaders feel is right." "Nobody developing these AI systems has been asking humanity if this is OK," Aguirre added. "We did -- and they think it's unacceptable."

[13]

Open Letter Calls for Ban on Superintelligent AI Development

Among the signatories are five Nobel laureates; two so-called "Godfathers of AI"; Steve Wozniak, a co-founder of Apple; Steve Bannon, a close ally of President Trump; Paolo Benanti, an adviser to the Pope; and even Harry and Meghan, the Duke and Duchess of Sussex. "We call for a prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in." The letter was coordinated and published by the Future of Life Institute, a nonprofit that in 2023 published a different open letter calling for a six-month pause on the development of powerful AI systems. Although widely circulated, that letter did not achieve its goal. Organizers said they decided to mount a new campaign, with a more specific focus on superintelligence, because they believe the technology -- which they define as a system that can surpass human performance on all useful tasks -- could arrive in as little as one to two years. "Time is running out," says Anthony Aguirre, the FLI's executive director, in an interview with TIME. The only thing likely to stop AI companies barreling toward superintelligence, he says, "is for there to be widespread realization among society at all its levels that this is not actually what we want."

[14]

Many big names in group of unlikely allies seeking ban, for now, on AI "superintelligence"

Prince Harry and his wife Meghan have joined prominent computer scientists, economists, artists, evangelical Christian leaders and American conservative commentators Steve Bannon and Glenn Beck to call for a ban on AI "superintelligence" they say could threaten humanity. The letter, released Wednesday by a politically and geographically diverse group of public figures, is squarely aimed at tech giants like Google, OpenAI and Meta Platforms that are racing each other to build a form of artificial intelligence designed to surpass humans at many tasks. The 30-word statement says, "We call for a prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in." In a preamble, the letter notes that AI tools may bring health and prosperity, but alongside those tools, "many leading AI companies have the stated goal of building superintelligence in the coming decade that can significantly outperform all humans on essentially all cognitive tasks. This has raised concerns, ranging from human economic obsolescence and disempowerment, losses of freedom, civil liberties, dignity, and control, to national security risks and even potential human extinction." Prince Harry added in a personal note that "the future of AI should serve humanity, not replace it. I believe the true test of progress will be not how fast we move, but how wisely we steer. There is no second chance." Signing alongside the Duke of Sussex was his wife Meghan, the Duchess of Sussex. "This is not a ban or even a moratorium in the usual sense," wrote another signatory, Stuart Russell, an AI pioneer and computer science professor at the University of California, Berkeley. "It's simply a proposal to require adequate safety measures for a technology that, according to its developers, has a significant chance to cause human extinction. Is that too much to ask?" 
Also signing were AI pioneers Yoshua Bengio and Geoffrey Hinton, co-winners of the Turing Award, computer science's top prize. Hinton also won a Nobel Prize in physics last year. Both have been vocal in bringing attention to the dangers of a technology they helped create. But the list also has some surprises, including Bannon and Beck, in an attempt by the letter's organizers at the nonprofit Future of Life Institute to appeal to President Trump's Make America Great Again movement even as Mr. Trump's White House staff has sought to reduce limits to AI development in the U.S. Also on the list are Apple co-founder Steve Wozniak; British billionaire Richard Branson; the former Chairman of the U.S. Joint Chiefs of Staff Mike Mullen, who served under Republican and Democratic administrations; and Democratic foreign policy expert Susan Rice, who was national security adviser to President Barack Obama. Former Irish President Mary Robinson and several British and European parliamentarians signed, as did actors Stephen Fry and Joseph Gordon-Levitt, and musician will.i.am, who has otherwise embraced AI in music creation. "Yeah, we want specific AI tools that can help cure diseases, strengthen national security, etc.," wrote Gordon-Levitt, whose wife Tasha McCauley served on OpenAI's board of directors before the upheaval that led to CEO Sam Altman's temporary ouster in 2023. "But does AI also need to imitate humans, groom our kids, turn us all into slop junkies and make zillions of dollars serving ads? Most people don't want that." The letter is likely to provoke ongoing debates between the AI research community about the likelihood of superhuman AI, the technical paths to reach it and how dangerous it could be. "In the past, it's mostly been the nerds versus the nerds," said Max Tegmark, president of the Future of Life Institute and a professor at the Massachusetts Institute of Technology. "I feel what we're really seeing here is how the criticism has gone very mainstream." 
Confounding the broader debates is that the same companies that are striving toward what some call superintelligence and others call artificial general intelligence, or AGI, are also sometimes inflating the capabilities of their products, which can make them more marketable and have contributed to concerns about an AI bubble. OpenAI was recently met with ridicule from mathematicians and AI scientists when its researcher claimed ChatGPT had figured out unsolved math problems -- when what it really did was find and summarize what was already online. "There's a ton of stuff that's overhyped and you need to be careful as an investor, but that doesn't change the fact that -- zooming out -- AI has gone much faster in the last four years than most people predicted," Tegmark said. Tegmark's group was also behind a March 2023 letter -- still in the dawn of a commercial AI boom -- that called on tech giants to temporarily pause the development of more powerful AI models. None of the major AI companies heeded that call. And the 2023 letter's most prominent signatory, Elon Musk, was at the same time quietly founding his own AI startup to compete with those he wanted to take a 6-month pause. Asked if he reached out to Musk again this time, Tegmark said he wrote to the CEOs of all major AI developers in the U.S. but didn't expect them to sign. "I really empathize for them, frankly, because they're so stuck in this race to the bottom that they just feel an irresistible pressure to keep going and not get overtaken by the other guy," Tegmark said. "I think that's why it's so important to stigmatize the race to superintelligence, to the point where the U.S. government just steps in."

[15]

Top tech figures are calling for pause in AI race to superintelligence

Public figures say the AI race to superintelligence has raised concerns ranging from human economic obsolescence to loss of freedom and human extinction. More than 850 public figures, including artificial intelligence (AI) and technology leaders, have called for a slowdown in the race to create a form of AI that surpasses human intellect, known as "superintelligence". Prominent computer scientists such as Nobel Prize winner Geoffrey Hinton and Yoshua Bengio - widely considered the 'godfathers' of AI - signed the letter, as did Apple co-founder Steve Wozniak and Virgin Group founder Richard Branson. Superintelligence is broadly defined as AI that surpasses human cognitive capabilities, which some tech experts fear could cause humans to lose control over these systems. Machines taking control is the default outcome, according to a prediction in the 1950s by the famed computer scientist Alan Turing. Global leaders say winning the AI race is critical to national security and for advancements in health, business, and technology. Meanwhile, tech companies such as Meta are using superintelligence as a buzzword to hype up their latest AI models. This year, Meta named its large language model (LLM) division Meta Superintelligence Labs. The letter says the AI race to superintelligence "has raised concerns, ranging from human economic obsolescence and disempowerment, losses of freedom, civil liberties, dignity, and control, to national security risks and even potential human extinction". It calls for a "prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in". AI leaders such as OpenAI chief executive Sam Altman have previously said that the development of superhuman machine intelligence (SMI) "is probably the greatest threat to the continued existence of humanity".
Meanwhile, Microsoft AI CEO Mustafa Suleyman said "until we can prove unequivocally that it is [safe], we shouldn't be inventing it." However, Altman and Suleyman were not on the list of signatories. Altman has also hyped artificial general intelligence (AGI), loosely defined as a type of AI that matches or surpasses human cognitive capabilities, which is seen as the step before superintelligence. But for Altman, AGI is defined as a hypothetical form of machine intelligence that can solve any human task through methods not constrained to its training. Altman has said it can "elevate humanity" and does not refer to machines taking over. Other than tech figures, signatories of the statement include academics, media personalities such as Stephen Fry and Steve Bannon, religious leaders, and former politicians.

[16]

From Prince Harry to Steve Bannon, unlikely coalition calls for ban on superintelligent AI

Hundreds of public figures, including Nobel Prize-winning scientists, former military leaders, artists and British royalty, signed a statement Wednesday calling for a ban on work that could lead to computer superintelligence, a yet-to-be-reached stage of artificial intelligence that they said could one day pose a threat to humanity. The statement proposes "a prohibition on the development of superintelligence" until there is both "broad scientific consensus that it will be done safely and controllably" and "strong public buy-in." Organized by AI researchers concerned about the fast pace of technological advances, the statement had more than 800 signatures Tuesday night from a diverse group of people. The signers include Nobel laureate and AI researcher Geoffrey Hinton, former Joint Chiefs of Staff Chairman Mike Mullen, rapper Will.i.am, former Trump White House aide Steve Bannon and U.K. Prince Harry and his wife, Meghan Markle. The statement adds to a growing list of calls for an AI slowdown at a time when AI is threatening to remake large swaths of the economy and culture. OpenAI, Google, Meta and other tech companies are pouring billions of dollars into new AI models and the data centers that power them, while businesses of all kinds are looking for ways to add AI features to a broad range of products and services. Some AI researchers believe AI systems are advancing fast enough that soon they'll demonstrate what's known as artificial general intelligence, or the ability to perform intellectual tasks as a human could. From there, researchers and tech executives believe what could follow might be superintelligence, in which AI models perform better than even the most expert humans. The statement is a product of the Future of Life Institute, a nonprofit group that works on large-scale risks such as nuclear weapons, biotechnology and AI.
Among its early backers in 2015 was tech billionaire Elon Musk, who's now part of the AI race with his startup xAI. Now, the institute says, its biggest recent donor is Vitalik Buterin, a co-founder of the Ethereum blockchain, and it says it doesn't accept donations from big tech companies or from companies seeking to build artificial general intelligence. Its executive director, Anthony Aguirre, a physicist at the University of California, Santa Cruz, said AI developments are happening faster than the public can understand what's happening or what's next. "We've, at some level, had this path chosen for us by the AI companies and founders and the economic system that's driving them, but no one's really asked almost anybody else, 'Is this what we want?'" he said in an interview. "It's been quite surprising to me that there has been less outright discussion of 'Do we want these things? Do we want human-replacing AI systems?'" he said. "It's kind of taken as: Well, this is where it's going, so buckle up, and we'll just have to deal with the consequences. But I don't think that's how it actually is. We have many choices as to how we develop technologies, including this one." The statement isn't aimed at any one organization or government in particular. Aguirre said he hopes to force a conversation that includes not only major AI companies, but also politicians in the United States, China and elsewhere. He said the Trump administration's pro-industry views on AI need balance. "This is not what the public wants. They don't want to be in a race for this," he said. He said there might eventually need to be an international treaty on advanced AI, as there is for other potentially dangerous technologies. The White House didn't immediately respond to a request for comment on the statement Tuesday, ahead of its official release. Americans are almost evenly split over the potential impact of AI, according to an NBC News Decision Desk Poll powered by SurveyMonkey this year. 
While 44% of U.S. adults surveyed said they thought AI would make their and their families' lives better, 42% said they thought it would make their futures worse. Top tech executives, who have offered predictions about superintelligence and signaled that they are working toward it as a goal, didn't sign the statement. Meta CEO Mark Zuckerberg said in July that superintelligence was "now in sight." Musk posted on X in February that the advent of digital superintelligence "is happening in real-time" and has earlier warned about "robots going down the street killing people," though now Tesla, where Musk is CEO, is working to develop humanoid robots. OpenAI CEO Sam Altman said last month that he'd be surprised if superintelligence didn't arrive by 2030 and wrote in a January blog post that his company was turning its attention there. Several tech companies didn't immediately respond to requests for comment on the statement. Last week, the Future of Life Institute told NBC News that OpenAI had issued subpoenas to it and its president as a form of retaliation for calling for AI oversight. OpenAI Chief Strategy Officer Jason Kwon wrote on Oct. 11 that the subpoena was a result of OpenAI's suspicions around the funding sources of several nonprofit groups that had been critical of its restructuring. Other signers of the statement include Apple co-founder Steve Wozniak, Virgin Group co-founder Richard Branson, conservative talk show host Glenn Beck, former U.S. national security adviser Susan Rice, Nobel-winning physicist John Mather, Turing Award winner and AI researcher Yoshua Bengio and the Rev. Paolo Benanti, a Vatican AI adviser. Several AI researchers based in China also signed the statement. Aguirre said the goal was to have a broad set of signers from across society. 
"We want this to be social permission for people to talk about it, but also we want to very much represent that this is not a niche issue of some nerds in Silicon Valley, who are often the only people at the table. This is an issue for all of humanity," he said.

[17]

Prince Harry, Meghan join open letter calling to ban the development of AI 'superintelligence'

The letter, released Wednesday by a politically and geographically diverse group of public figures, is squarely aimed at tech giants like Google, OpenAI, and Meta Platforms that are racing each other to build a form of artificial intelligence designed to surpass humans at many tasks. The 30-word statement says: "We call for a prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in."

[18]

Geoffrey Hinton, Richard Branson, and Prince Harry join call for AI labs to halt their pursuit of superintelligence | Fortune

A new open letter, signed by a range of AI scientists, celebrities, policymakers, and faith leaders, calls for a ban on the development of 'superintelligence' -- a hypothetical AI technology that could exceed the intelligence of all of humanity -- until the technology is reliably safe and controllable. The letter's more notable signatories include AI pioneer and Nobel laureate Geoffrey Hinton, other AI luminaries such as Yoshua Bengio and Stuart Russell, as well as business leaders such as Virgin founder Richard Branson and Apple co-founder Steve Wozniak. It was also signed by celebrities, including actor Joseph Gordon-Levitt, who recently expressed concerns around Meta's AI products, will.i.am, and Prince Harry and Meghan, Duke and Duchess of Sussex. Policy and national security figures as diverse as Trump ally and strategist Steve Bannon and Mike Mullen, Chairman of the Joint Chiefs of Staff under Presidents George W. Bush and Barack Obama, also appear in the list of more than 1,000 other signatories. New polling conducted alongside the open letter, which was written and circulated by the non-profit Future of Life Institute, found that the public generally agreed with the call for a moratorium on the development of superpowerful AI technology. In the U.S., the polling found that only 5% of U.S. adults support the current status quo of unregulated development of advanced AI, while 64% agreed superintelligence shouldn't be developed until it's provably safe and controllable. The poll found that 73% want robust regulation on advanced AI. "95% of Americans don't want a race to superintelligence, and experts want to ban it," Future of Life President Max Tegmark said in the statement. Superintelligence is broadly defined as a type of artificial intelligence capable of outperforming the entirety of humanity at most cognitive tasks. There is currently no consensus on when or if superintelligence will be achieved, and timelines suggested by experts are speculative. 
Some more aggressive estimates have said superintelligence could be achieved by the late 2020s, while more conservative views delay it much further or question the current tech's ability to achieve it at all. Several leading AI labs, including Meta, Google DeepMind, and OpenAI, are actively pursuing this level of advanced AI. The letter calls on these leading AI labs to halt their pursuit of these capabilities until there is a "broad scientific consensus that it will be done safely and controllably, and strong public buy-in." "Frontier AI systems could surpass most individuals across most cognitive tasks within just a few years," Yoshua Bengio, Turing Award-winning computer scientist, who along with Hinton is considered one of the "godfathers" of AI, said in a statement. "To safely advance toward superintelligence, we must scientifically determine how to design AI systems that are fundamentally incapable of harming people, whether through misalignment or malicious use. We also need to make sure the public has a much stronger say in decisions that will shape our collective future," he said. The signatories claim that the pursuit of superintelligence raises serious risks around economic displacement, disempowerment, and national security risks, as well as concerns around loss of freedoms and civil liberties. The letter accuses tech companies of pursuing this potentially dangerous technology without guardrails, oversight, and without broad public consent. "To get the most from what AI has to offer mankind, there is simply no need to reach for the unknowable and highly risky goal of superintelligence, which is by far a frontier too far. By definition, this would result in a power that we could neither understand nor control," actor Stephen Fry said in the statement.

[19]

Prince Harry, Meghan join call for ban on development of AI 'superintelligence'

Prince Harry and his wife Meghan have joined prominent computer scientists, economists, artists, evangelical Christian leaders and American conservative commentators Steve Bannon and Glenn Beck to call for a ban on AI "superintelligence" that threatens humanity. The letter, released Wednesday by a politically and geographically diverse group of public figures, is squarely aimed at tech giants like Google, OpenAI and Meta Platforms that are racing each other to build a form of artificial intelligence designed to surpass humans at many tasks. The 30-word statement says: "We call for a prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in." In a preamble, the letter notes that AI tools may bring health and prosperity, but alongside those tools, "many leading AI companies have the stated goal of building superintelligence in the coming decade that can significantly outperform all humans on essentially all cognitive tasks. This has raised concerns, ranging from human economic obsolescence and disempowerment, losses of freedom, civil liberties, dignity, and control, to national security risks and even potential human extinction." Prince Harry added in a personal note that "the future of AI should serve humanity, not replace it. I believe the true test of progress will be not how fast we move, but how wisely we steer. There is no second chance." Signing alongside the Duke of Sussex was his wife Meghan, the Duchess of Sussex. "This is not a ban or even a moratorium in the usual sense," wrote another signatory, Stuart Russell, an AI pioneer and computer science professor at the University of California, Berkeley. "It's simply a proposal to require adequate safety measures for a technology that, according to its developers, has a significant chance to cause human extinction. Is that too much to ask?" 
Also signing were AI pioneers Yoshua Bengio and Geoffrey Hinton, co-winners of the Turing Award, computer science's top prize. Hinton also won a Nobel Prize in physics last year. Both have been vocal in bringing attention to the dangers of a technology they helped create. But the list also has some surprises, including Bannon and Beck, in an attempt by the letter's organizers at the nonprofit Future of Life Institute to appeal to President Donald Trump's Make America Great Again movement even as Trump's White House staff has sought to reduce limits to AI development in the U.S. Also on the list are Apple co-founder Steve Wozniak; British billionaire Richard Branson; the former Chairman of the U.S. Joint Chiefs of Staff Mike Mullen, who served under Republican and Democratic administrations; and Democratic foreign policy expert Susan Rice, who was national security adviser to President Barack Obama. Former Irish President Mary Robinson and several British and European parliamentarians signed, as did actors Stephen Fry and Joseph Gordon-Levitt, and musician will.i.am, who has otherwise embraced AI in music creation. "Yeah, we want specific AI tools that can help cure diseases, strengthen national security, etc.," wrote Gordon-Levitt, whose wife Tasha McCauley served on OpenAI's board of directors before the upheaval that led to CEO Sam Altman's temporary ouster in 2023. "But does AI also need to imitate humans, groom our kids, turn us all into slop junkies and make zillions of dollars serving ads? Most people don't want that." The letter is likely to provoke ongoing debates within the AI research community about the likelihood of superhuman AI, the technical paths to reach it and how dangerous it could be. "In the past, it's mostly been the nerds versus the nerds," said Max Tegmark, president of the Future of Life Institute and a professor at the Massachusetts Institute of Technology. 
"I feel what we're really seeing here is how the criticism has gone very mainstream." Confounding the broader debates is that the same companies that are striving toward what some call superintelligence and others call artificial general intelligence, or AGI, are also sometimes inflating the capabilities of their products, which can make them more marketable and have contributed to concerns about an AI bubble. OpenAI was recently met with ridicule from mathematicians and AI scientists when its researcher claimed ChatGPT had figured out unsolved math problems -- when what it really did was find and summarize what was already online. "There's a ton of stuff that's overhyped and you need to be careful as an investor, but that doesn't change the fact that -- zooming out -- AI has gone much faster in the last four years than most people predicted," Tegmark said. Tegmark's group was also behind a March 2023 letter -- still in the dawn of a commercial AI boom -- that called on tech giants to pause the development of more powerful AI models temporarily. None of the major AI companies heeded that call. And the 2023 letter's most prominent signatory, Elon Musk, was at the same time quietly founding his own AI startup to compete with those he wanted to take a 6-month pause. Asked if he reached out to Musk again this time, Tegmark said he wrote to the CEOs of all major AI developers in the U.S. but didn't expect them to sign. "I really empathize for them, frankly, because they're so stuck in this race to the bottom that they just feel an irresistible pressure to keep going and not get overtaken by the other guy," Tegmark said. "I think that's why it's so important to stigmatize the race to superintelligence, to the point where the U.S. government just steps in."

[20]

Prince Harry, Meghan join call for ban on development of AI 'superintelligence'

Prince Harry and his wife Meghan have joined prominent computer scientists, economists, artists, evangelical Christian leaders and American conservative commentators Steve Bannon and Glenn Beck to call for a ban on AI "superintelligence" that threatens humanity. The letter, released Wednesday by a politically and geographically diverse group of public figures, is squarely aimed at tech giants like Google, OpenAI and Meta Platforms that are racing each other to build a form of artificial intelligence designed to surpass humans at many tasks.

The letter calls for a ban unless some conditions are met

The 30-word statement says: "We call for a prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in." In a preamble, the letter notes that AI tools may bring health and prosperity, but alongside those tools, "many leading AI companies have the stated goal of building superintelligence in the coming decade that can significantly outperform all humans on essentially all cognitive tasks. This has raised concerns, ranging from human economic obsolescence and disempowerment, losses of freedom, civil liberties, dignity, and control, to national security risks and even potential human extinction."

Who signed and what they're saying about it

Prince Harry added in a personal note that "the future of AI should serve humanity, not replace it. I believe the true test of progress will be not how fast we move, but how wisely we steer. There is no second chance." Signing alongside the Duke of Sussex was his wife Meghan, the Duchess of Sussex. "This is not a ban or even a moratorium in the usual sense," wrote another signatory, Stuart Russell, an AI pioneer and computer science professor at the University of California, Berkeley. "It's simply a proposal to require adequate safety measures for a technology that, according to its developers, has a significant chance to cause human extinction. Is that too much to ask?" Also signing were AI pioneers Yoshua Bengio and Geoffrey Hinton, co-winners of the Turing Award, computer science's top prize. Hinton also won a Nobel Prize in physics last year. Both have been vocal in bringing attention to the dangers of a technology they helped create. But the list also has some surprises, including Bannon and Beck, in an attempt by the letter's organizers at the nonprofit Future of Life Institute to appeal to President Donald Trump's Make America Great Again movement even as Trump's White House staff has sought to reduce limits to AI development in the U.S. Also on the list are Apple co-founder Steve Wozniak; British billionaire Richard Branson; the former Chairman of the U.S. Joint Chiefs of Staff Mike Mullen, who served under Republican and Democratic administrations; and Democratic foreign policy expert Susan Rice, who was national security adviser to President Barack Obama. Former Irish President Mary Robinson and several British and European parliamentarians signed, as did actors Stephen Fry and Joseph Gordon-Levitt, and musician will.i.am, who has otherwise embraced AI in music creation. "Yeah, we want specific AI tools that can help cure diseases, strengthen national security, etc.," wrote Gordon-Levitt, whose wife Tasha McCauley served on OpenAI's board of directors before the upheaval that led to CEO Sam Altman's temporary ouster in 2023. "But does AI also need to imitate humans, groom our kids, turn us all into slop junkies and make zillions of dollars serving ads? Most people don't want that."

Are worries about AI superintelligence also feeding AI hype?

The letter is likely to provoke ongoing debates within the AI research community about the likelihood of superhuman AI, the technical paths to reach it and how dangerous it could be. "In the past, it's mostly been the nerds versus the nerds," said Max Tegmark, president of the Future of Life Institute and a professor at the Massachusetts Institute of Technology. "I feel what we're really seeing here is how the criticism has gone very mainstream." Confounding the broader debates is that the same companies that are striving toward what some call superintelligence and others call artificial general intelligence, or AGI, are also sometimes inflating the capabilities of their products, which can make them more marketable and have contributed to concerns about an AI bubble. OpenAI was recently met with ridicule from mathematicians and AI scientists when its researcher claimed ChatGPT had figured out unsolved math problems -- when what it really did was find and summarize what was already online. "There's a ton of stuff that's overhyped and you need to be careful as an investor, but that doesn't change the fact that -- zooming out -- AI has gone much faster in the last four years than most people predicted," Tegmark said. Tegmark's group was also behind a March 2023 letter -- still in the dawn of a commercial AI boom -- that called on tech giants to pause the development of more powerful AI models temporarily. None of the major AI companies heeded that call. And the 2023 letter's most prominent signatory, Elon Musk, was at the same time quietly founding his own AI startup to compete with those he wanted to take a 6-month pause. Asked if he reached out to Musk again this time, Tegmark said he wrote to the CEOs of all major AI developers in the U.S. but didn't expect them to sign. "I really empathize for them, frankly, because they're so stuck in this race to the bottom that they just feel an irresistible pressure to keep going and not get overtaken by the other guy," Tegmark said. "I think that's why it's so important to stigmatize the race to superintelligence, to the point where the U.S. government just steps in."

[21]

Many big names in group of unlikely allies seeking ban, for now, on AI "superintelligence"