Google Revolutionizes App Development with Vibe Coding in AI Studio

8 Sources


[1]

Google's new vibe coding AI Studio experience lets anyone build, deploy apps live in minutes

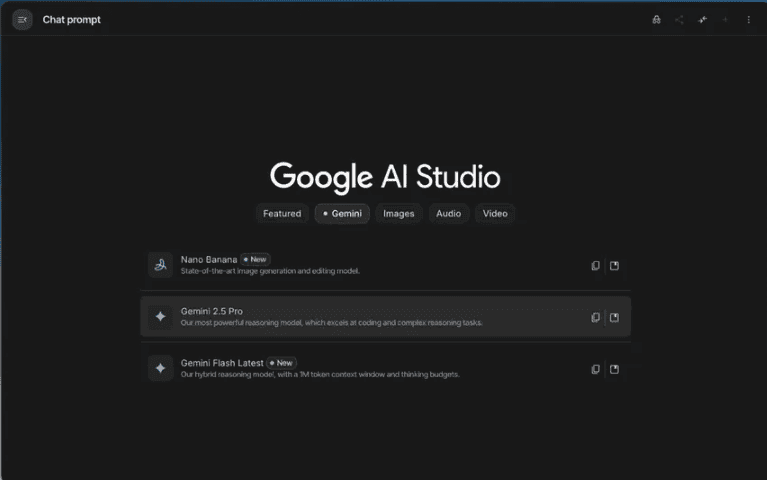

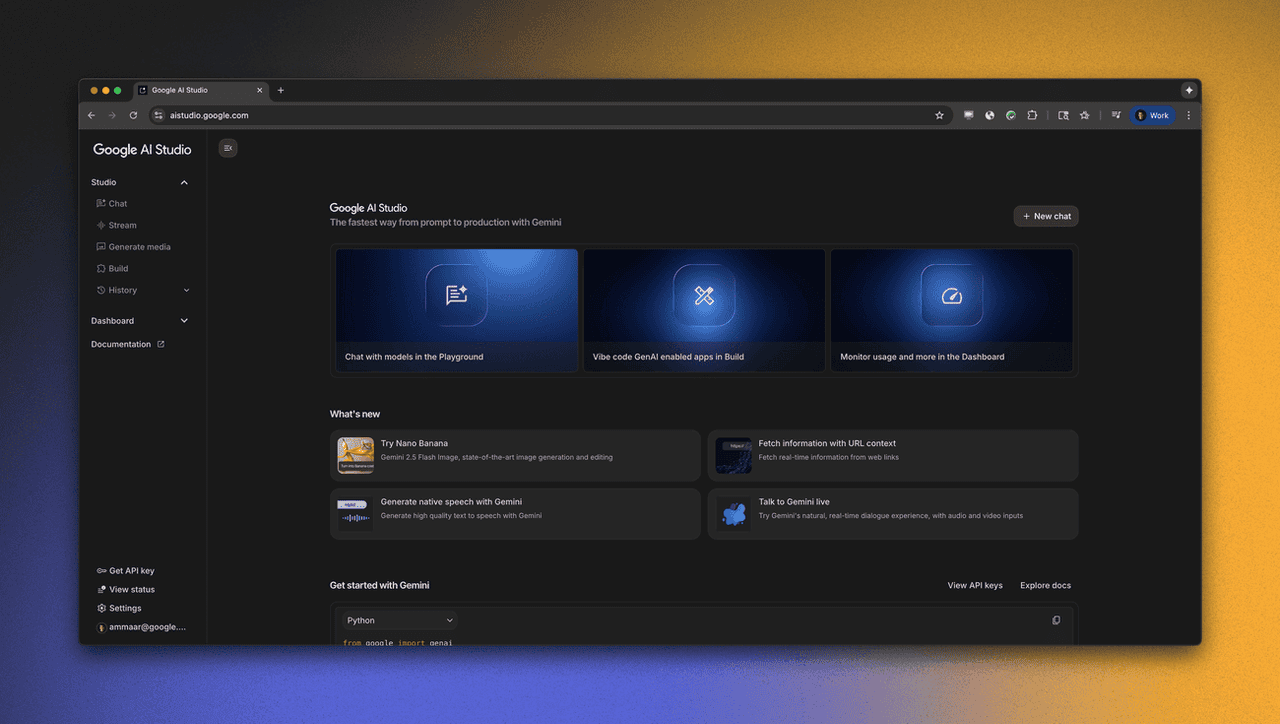

Google AI Studio has gotten a big vibe coding upgrade with a new interface, buttons, suggestions and community features that allow anyone with an idea for an app -- even complete novices, laypeople, or non-developers like yours truly -- to bring it into existence and deploy it live, on the web, for anyone to use, within minutes.

The updated Build tab is available now at ai.studio/build, and it's free to start. Users can experiment with building applications without needing to enter payment information upfront, though certain advanced features like Veo 3.1 and Cloud Run deployment require a paid API key.

The new features appear to me to make Google's AI models and offerings even more competitive with, and for many general users perhaps preferable to, products from dedicated AI startup rivals such as Anthropic's Claude Code and OpenAI's Codex, two "vibe coding" focused products that are beloved by developers but seem to have a higher barrier to entry or may require more technical know-how.

A Fresh Start: Redesigned Build Mode

The updated Build tab serves as the entry point to vibe coding. It introduces a new layout and workflow where users can select from Google's suite of AI models and features to power their applications. The default is Gemini 2.5 Pro, which is great for most cases. Once selections are made, users simply describe what they want to build, and the system automatically assembles the necessary components using Gemini's APIs.

This mode supports mixing capabilities like Nano Banana (an image generation and editing model), Veo (for video generation), Imagen (for image generation), Flash-Lite (for performance-optimized inference), and Google Search.

Patrick Löber, Developer Relations at Google DeepMind, highlighted that the experience is meant to help users "supercharge your apps with AI" using a simple prompt-to-app pipeline. In a video demo he posted on X and LinkedIn, he showed how just a few clicks led to the automatic generation of a garden planning assistant app, complete with layouts, visuals, and a conversational interface.

From Prompt to Production: Building and Editing in Real Time

Once an app is generated, users land in a fully interactive editor. On the left, there's a traditional code-assist interface where developers can chat with the AI model for help or suggestions. On the right, a code editor displays the full source of the app.

Each component -- such as React entry points, API calls, or styling files -- can be edited directly. Tooltips help users understand what each file does, which is especially useful for those less familiar with TypeScript or frontend frameworks.

Apps can be saved to GitHub, downloaded locally, or shared directly. Deployment is possible within the Studio environment or via Cloud Run if advanced scaling or hosting is needed.

Inspiration on Demand: The 'I'm Feeling Lucky' Button

One standout feature in this update is the "I'm Feeling Lucky" button. Designed for users who need a creative jumpstart, it generates randomized app concepts and configures the app setup accordingly. Each press yields a different idea, complete with suggested AI features and components.

Examples produced during demos include:

- An interactive map-based chatbot powered by Google Search and conversational AI.
- A dream garden designer using image generation and advanced planning tools.
- A trivia game app with an AI host whose personality users can define, integrating both Imagen and Flash-Lite with Gemini 2.5 Pro for conversation and reasoning.

Logan Kilpatrick, Lead of Product for Google AI Studio and Gemini AI, noted in a demo video of his own that this feature encourages discovery and experimentation. "You get some really, really cool, different experiences," he said, emphasizing its role in helping users find novel ideas quickly.

Hands-On Test: From Prompt to App in 65 Seconds

To test the new workflow, I prompted Gemini with: "A randomized dice rolling web application where the user can select between common dice sizes (6 sides, 10 sides, etc) and then see an animated die rolling and choose the color of their die as well."

Within 65 seconds (just over a minute), AI Studio returned a fully working web app featuring:

- Dice size selector (d4, d6, d8, d10, d12, d20)
- Color customization options for the die
- Animated rolling effect with randomized results
- Clean, modern UI built with React, TypeScript, and Tailwind CSS

The platform also generated a complete set of structured files, including App.tsx, constants.ts, and separate components for dice logic and controls.

After generation, it was easy to iterate: adding sound effects for each interaction (rolling, choosing a die, changing color) required only a single follow-up prompt to the built-in assistant. Gemini itself suggested this addition, by the way.

From there, the app can be previewed live or exported using built-in controls to:

- Save to GitHub
- Download the full codebase
- Copy the project for remixing
- Deploy via integrated tools

My brief, hands-on test showed just how quickly even small utility apps can go from idea to interactive prototype -- without leaving the browser or writing boilerplate code manually.

AI-Suggested Enhancements and Feature Refinement

In addition to code generation, Google AI Studio now offers context-aware feature suggestions. These recommendations, generated by Gemini's Flash-Lite capability, analyze the current app and propose relevant improvements.

In one example, the system suggested implementing a feature that displays the history of previously generated images in an image studio tab. These iterative enhancements allow builders to expand app functionality over time without starting from scratch.

Kilpatrick emphasized that users can continue to refine their projects as they go, combining both automatic generation and manual adjustments. "You can go in and continue to edit and sort of refine the experience that you want iteratively," he said.

Free to Start, Flexible to Grow

The new experience is available at no cost for users who want to experiment, prototype, or build lightweight apps. There's no requirement to enter credit card information to begin using vibe coding.

However, more powerful capabilities -- such as using models like Veo 3.1 or deploying through Cloud Run -- do require switching to a paid API key. This pricing structure is intended to lower the barrier to entry for experimentation while providing a clear path to scale when needed.

Built for All Skill Levels

One of the central goals of the vibe coding launch is to make AI app development accessible to more people. The system supports both high-level visual builders and low-level code editing, creating a workflow that works for developers across experience levels.

Kilpatrick mentioned that while he's more familiar with Python than TypeScript, he still found the editor useful because of the helpful file descriptions and intuitive layout. This focus on usability could make AI Studio a compelling option for developers exploring AI for the first time.

More to Come: A Week of Launches

The launch of vibe coding is the first in a series of announcements expected throughout the week. While specific future features haven't been revealed yet, both Kilpatrick and Löber hinted that additional updates are on the way.

With this update, Google AI Studio positions itself as a flexible, user-friendly environment for building AI-powered applications -- whether for fun, prototyping, or production deployment. The focus is clear: make the power of Gemini's APIs accessible without unnecessary complexity.

[2]

Introducing vibe coding in Google AI Studio

AI-powered apps let you build incredible things: generate videos from a script with Veo, build powerful image editing tools with a command using Nano Banana, or create the ultimate writing app that can check your sources using Google Search. Traditionally, building an app that combines these capabilities meant juggling different APIs, SDKs and services. That complexity is a major barrier between your idea and a working prototype. The new vibe coding experience in AI Studio removes that friction entirely. Now, you can describe the multi-modal app of your dreams, and AI Studio, alongside our latest Gemini models, does the heavy lifting. Want to make a magic mirror app that takes your photo and transforms it into something fantastical? Just say so. AI Studio understands the capabilities you need and automatically wires up the right models and APIs for you. We've made the process of creating a powerful, feature-rich, AI-powered app effortless. You bring the idea; we'll connect the magic. And for those moments when you need a creative spark, you can hit the "I'm Feeling Lucky" button and we'll help you get started.

[3]

Google Adds 'Vibe Coding' to its AI Studio Powered by Gemini | AIM

Google has introduced a new feature called vibe coding on its AI Studio platform to make it faster and easier for users to build AI-powered applications without handling complex backend integrations. According to Google, the redesigned experience allows developers and creators to go "from prompt to working AI app in minutes" by removing the need to manage APIs, SDKs, or service connections. "You can describe the multi-modal app of your dreams, and AI Studio, alongside our latest Gemini models, does the heavy lifting," said the company in a blog post. "We've made the process of creating a powerful, feature-rich, AI-powered app effortless." The company said users can describe what they want to build, such as a photo transformation app or a writing assistant, and AI Studio automatically identifies and connects the necessary models and APIs. A new "I'm Feeling Lucky" button has also been introduced to help users generate ideas and start projects quickly. To inspire new creations, Google has revamped the App Gallery into a visual library that lets users explore and remix existing projects. During app builds, a Brainstorming Loading Screen now displays context-aware ideas generated by Gemini, turning idle time into a creative prompt. Another addition, Annotation Mode, allows users to make app changes through direct interaction rather than editing code. The update also includes an option to add personal API keys, enabling uninterrupted development when the free quota is exhausted. Google said these changes aim to make AI app creation accessible to a broader audience. "We're lowering the barrier between a great idea and a working app with Gemini, so anyone can build with AI," the company added.

[4]

Google embraces vibe coding with latest version of AI Studio app development platform - SiliconANGLE

Google LLC said this week it's bringing a "vibe coding" experience into its AI Studio platform to facilitate easier application development for both coders and non-coders. The update means users can enter simple prompts to generate working applications and positions the AI Studio platform as a gateway to the company's broader artificial intelligence ecosystem. Google AI Studio was launched last year and is aimed at developers and non-technical users who want to create AI applications for work. It's different from the company's better known Vertex AI platform, which offers a more comprehensive environment for creating advanced models and is aimed at specialists such as data scientists and machine learning engineers. With this week's update, Google is transforming AI Studio into a platform that lowers the barrier to entry for rapid AI application prototyping and deployment. The company has integrated a comprehensive vibe coding workflow into the platform, so users can enter simple natural language prompts and higher-level instructions to create applications that cater to their needs. There are a number of new features in the platform that enable this experience. For instance, there's a new application gallery and model selector in the "Build" section of the platform. Google is also adding support for "secret variables", which make it possible to store sensitive application programming interface keys securely for production apps. Google also talked about some new modular "superpowers", which allow users to enhance their prompts with a simple click. They're designed to accelerate AI outputs and enable the underlying Gemini model to conduct deeper reasoning, media editing and other tasks. There's also a new "I'm Feeling Lucky" button that users can click on to receive random prompt suggestions, which the company said is meant to help inspire users' creativity. 
And once the basic app has been generated, there are new tools for editing specific user interface elements. Users can also instruct Gemini to change the UI in targeted ways, the company said. When users are happy with their prototype app, they can deploy it directly from the platform onto Google Cloud Run with a single click, and this will generate a live URL for testing and sharing. Google's new vibe coding experience looks to be a response to the recent launches of enhanced AI coding tools from rivals such as OpenAI, Anthropic PBC and Salesforce Inc. as well as a host of startups. For instance, OpenAI's new GPT-5-Codex tool is a new offering that's designed for "long-duration coding tasks", while Anthropic's Claude Sonnet 4.5 introduced advanced capabilities for writing software. Meanwhile, Salesforce is making a play with Agentforce Vibes, which is meant to address some of the security and reliability issues with AI-generated code. It embeds strict security and governance controls into the development process. More importantly perhaps, the update to Google AI Studio comes amid expectations of the imminent release of the company's latest large language model, Gemini 3.0. There has been increased speculation in recent days that the company is about to launch the successor to Gemini 2.5 Pro, whose capabilities appear to have been surpassed by new rival offerings. At the weekend, two powerful new LLMs appeared on the public testing platform LMArena. They're called "lithiumflow" and "orionmist", and there's no information available about who actually built them, but WinBuzzer says it's likely that they are pre-release versions of Gemini 3.0 Pro and Gemini 3.0 Flash, made available for anonymous final testing. The theory makes sense considering how good they are, with various users citing powerful new capabilities, like their advanced visual reasoning skills. 
Google Cloud Chief Executive Thomas Kurian recently talked about the company's platform-first approach to AI, saying that many companies only provide models and toolkits. "They are handing you the pieces, not the platform," he said. "They leave your teams to stitch everything together." Google meanwhile, has emphasized the need for a highly integrated ecosystem for AI tools that will lower the barrier to entry and enable everyone to build sophisticated applications, and today's release appears to position AI Studio at the forefront of that approach.

[5]

A Beloved Vibe Coding Platform Is Finally Getting Upgraded for More Casual Users

It's been a good week so far for entrepreneurs who are interested in trying their hands at vibe coding. On Monday, Anthropic released a new feature that enables vibe coding on the web and mobile devices, and on Tuesday, Google released a new vibe coding-focused update to Google AI Studio. Vibe coding, for those new to it, is a novel form of non-technical software development. Anthropic has already found major success with its own coding tool, Claude Code. The company announced on Monday that Claude Code has generated over $500 million in revenue since its release in February, and Anthropic is now bringing it to additional platforms in order to make vibe coding more accessible. Previously, using Claude Code took some technical expertise: it was only available as a command line interface within your computer terminal, or as a plugin within an integrated development environment, also known as an IDE. Terminals and IDEs are how professional software developers write and edit code, says Claude Code product manager Cat Wu, so it made sense to start there. But over time, Wu realized that non-technical people were also using Claude Code, so the team started experimenting with new form factors. "Everywhere that a developer is doing work," she says, "whether that's on web and mobile or other tools, we want Claude to be easily accessible there." Wu admits that Claude Code on web and mobile is still a fairly technical experience. For instance, users must connect to GitHub in order to create new files, and aren't able to see a live preview of their work in the app like in Claude.ai, Anthropic's consumer-facing chat platform. Wu says that her team will bring more visual elements into Claude Code for the web in the coming months to make the experience more intuitive for non-technical vibe coders. Meanwhile, Google has also put significant resources into making vibe coding more accessible. 
On Tuesday, the company released a big update to Google AI Studio, its AI-assisted coding platform, specifically aimed at vibe coders. In a video, Google AI Studio product lead Logan Kilpatrick explained that in this new "vibe coding experience," users can write out the idea for their app, and then select the specific AI-powered elements that they want to include in their app, like generating images, integrating an AI chatbot, and prioritizing low-latency responses. When vibe coding through the platform, Kilpatrick said, Google AI Studio will generate suggestions for next steps in the form of clickable buttons. The platform also makes it easy for users to deploy their apps to the internet, either through Google Cloud or GitHub. According to Kilpatrick, Google AI Studio is free to use, but will charge for access to its most advanced AI models. Anthropic and Google aren't the only tech companies offering vibe coding tools. If you're looking to get into the vibe coding game, check out recent tools from companies like OpenAI, Replit, and Lovable.

[6]

Vibe Coding Is Now Coming to the Google AI Studio

Google AI Studio lets users access Veo 3 and Nano Banana to make apps

Google is introducing vibe coding capability to its AI Studio platform. Now, developers can simply share their artificial intelligence (AI)-powered app idea in natural language text, and the chatbot will write the code for the app and share a preview of it. With this major push for vibe coding, the Mountain View-based tech giant has also made its Veo 3 video generation model and the Nano Banana image editing model available to developers. Interestingly, users can also send the app for deployment directly from the platform.

Google AI Studio Embraces Vibe Coding

Vibe coding, a pop culture term for AI-assisted coding, has gained popularity in recent days. In simple terms, the software-development style puts the developer in the shoes of an orchestrator while the AI system does the hard work of writing the code. The human mainly focuses on describing the idea clearly, making iterative changes via text prompts, and sharing natural language feedback. One of the main reasons why vibe coding has gained popularity is that it has lowered the barrier to entry in software development. The speed of code generation has also resulted in many major corporations, including Google itself, opting for vibe coding in their workplaces. Now, the tech giant is bringing the capability to all the developers who use the Google AI Studio. Users can generate AI-powered apps of their choice with simple prompts, and can also develop multimodal apps by leveraging Veo 3 and Nano Banana. Underneath the simplicity is the complex algorithm of the AI Studio that automatically understands the context and the intent of the user to connect the right AI models and application programming interfaces (APIs) to let the coding development occur. There is also an "I'm Feeling Lucky" button that generates a random app to act as a point of inspiration for developers. 
Google has also added a revamped App Gallery that acts as a visual library of what is possible with the Gemini chatbot. Users can check project ideas, preview them, learn from starter code, and remix apps into their own creations. Notably, while AI Studio does have a free quota, in case a developer hits it, they can add their API key to continue app development via Vibe coding.

[7]

Google AI Studio gets new Vibe Coding interface, Annotation Mode, and revamped App Gallery

Google has rolled out a redesigned "Vibe Coding" experience in AI Studio, allowing users to go from a single prompt to a working AI-powered app within minutes. The update removes the need to manage API keys or manually link multiple models, simplifying the entire development process. With the new Vibe Coding workflow, users can describe any app concept -- from generating videos with Veo, creating image-editing tools using Nano Banana, to building writing assistants that verify sources via Google Search. AI Studio, powered by the latest Gemini models, automatically connects the required models and APIs. Users can also tap the "I'm Feeling Lucky" button to instantly explore new app ideas and prototypes without starting from scratch. The App Gallery has been redesigned into a visual library showcasing what can be created using Gemini. Users can browse example projects, preview them instantly, access starter code, and remix existing apps into their own. A new Brainstorming Loading Screen also appears while apps are building, cycling through context-aware ideas from Gemini to help spark new concepts during wait time. The update adds a new Annotation Mode, allowing users to make visual edits directly within the interface. Instead of modifying lines of code, they can highlight any element and instruct Gemini with natural language commands such as "Make this button blue," "Change the style of these cards," or "Animate this image from the left." This visual editing method enables intuitive, conversational adjustments while maintaining creative flow. If users reach the free-tier limit, AI Studio now allows them to temporarily add their own API key to continue working seamlessly. Once the free quota renews, the platform automatically switches back, ensuring uninterrupted development. Google says the goal behind these updates is to make AI app development more accessible. 
By integrating AI throughout the process -- from ideation to iteration -- the new Vibe Coding experience enables anyone, including non-developers, to build feature-rich applications using Gemini models. All the new Vibe Coding features are available now in Google AI Studio. Google has also released a YouTube playlist featuring tutorials that demonstrate how to use the updated experience and start building with Gemini.

[8]

Google AI Studio gets unified Playground, Maps grounding, and smarter workflow tools

Google has introduced a new set of updates to Google AI Studio, aimed at simplifying development workflows, unifying creative tools, and improving overall control. Based on user feedback, these upgrades remove friction, create a single workspace, and offer clearer visibility across projects. The latest update brings a single, integrated Playground where developers can access Google's Gemini, GenMedia (featuring the new Veo 3.1 capabilities), Text-to-Speech (TTS), and Live models in one place. The new setup removes the need to switch tabs or contexts, allowing users to move seamlessly between text, image, video, and voice outputs. Google has also refined the Chat UI for consistent controls and a smoother interaction experience. Several platform improvements have been made to help users get started quickly and work with more context: Two highly requested features have been added to provide more flexibility and save time: Google describes this update as a step toward strengthening AI Studio's foundation. The company also announced that a "week of vibe coding" is coming next, which will introduce a faster way to turn a single idea into a functional AI-powered app.


Google introduces a new 'vibe coding' experience in AI Studio, allowing users to create AI-powered applications with simple prompts. This update aims to make app development accessible to both developers and non-technical users.

Google Unveils Vibe Coding in AI Studio

Google has introduced a groundbreaking 'vibe coding' experience in its AI Studio platform, revolutionizing the way users can create AI-powered applications. This new feature allows both developers and non-technical users to build sophisticated apps using simple natural language prompts, significantly lowering the barrier to entry for AI app development [1][2].

Streamlined App Development Process

The updated Build tab in AI Studio now offers a redesigned interface that simplifies the app creation process. Users can select from Google's suite of AI models, with Gemini 2.5 Pro as the default option. By describing their desired app, the system automatically assembles the necessary components using Gemini's APIs [1].


The platform supports a mix of capabilities, including:

- Nano Banana for image generation and editing

- Veo for video generation

- Imagen for image generation

- Flash-Lite for performance-optimized inference

- Google Search integration

Once an app is generated, users can access a fully interactive editor with a code-assist interface and a complete source code display. The platform also offers tooltips to help users understand each component's function [1].

Key Features and Innovations

- 'I'm Feeling Lucky' Button: This feature generates randomized app concepts and configurations, sparking creativity and encouraging experimentation [1][2].

- Visual App Gallery: The revamped App Gallery serves as a visual library for users to explore and remix existing projects, inspiring new creations [3].

- Annotation Mode: This allows users to make app changes through direct interaction rather than editing code, making it more accessible for non-developers [3].

- Deployment Options: Apps can be saved to GitHub, downloaded locally, shared directly, or deployed within the Studio environment or via Cloud Run for advanced scaling [1].


Real-World Application and Testing

In a hands-on test, the platform demonstrated its efficiency by creating a fully functional randomized dice rolling web application within 65 seconds. The app featured dice size selection, color customization, and an animated rolling effect, all built with React, TypeScript, and Tailwind CSS [1].
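To make the hands-on test more concrete, here is a minimal TypeScript sketch of the kind of dice logic such an app needs. It is not the code AI Studio generated: the names `DICE_SIZES`, `RollResult`, and `rollDie` are hypothetical, and the only details taken from the test are the six dice sizes and the user-chosen color.

```typescript
// Hypothetical sketch; identifiers are illustrative, not from the generated app.

// Dice sizes listed in the hands-on test: d4, d6, d8, d10, d12, d20.
const DICE_SIZES = [4, 6, 8, 10, 12, 20] as const;
type DiceSize = (typeof DICE_SIZES)[number];

interface RollResult {
  sides: DiceSize; // which die was rolled
  value: number;   // face value, an integer from 1 to `sides`
  color: string;   // user-chosen die color, e.g. a CSS color string
}

// Rolls one die. `rng` must return a number in [0, 1); it defaults to
// Math.random and can be swapped out to make the result deterministic.
function rollDie(
  sides: DiceSize,
  color: string,
  rng: () => number = Math.random
): RollResult {
  const value = Math.floor(rng() * sides) + 1;
  return { sides, value, color };
}

// Example usage: roll a d20 with a red die.
const result = rollDie(20, "#e11d48");
console.log(`d${result.sides} rolled ${result.value}`);
```

Passing the random source as a parameter keeps the roll logic separate from the UI and animation, so it can be checked without rendering anything.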

Industry Impact and Competition

Google's vibe coding experience positions AI Studio as a formidable competitor to other AI-powered coding tools in the market. It offers a more accessible alternative to platforms like Anthropic's Claude Code and OpenAI's Codex, which may require more technical expertise [1][4]. This update aligns with Google's platform-first approach to AI, emphasizing the need for a highly integrated ecosystem that enables everyone to build sophisticated applications [4]. As the AI development landscape continues to evolve, Google's AI Studio is poised to play a significant role in democratizing AI app creation.

Future Developments and Expectations

The introduction of vibe coding in AI Studio comes amid speculation about the imminent release of Google's latest large language model, Gemini 3.0. This update, along with potential new model releases, suggests that Google is actively working to maintain its competitive edge in the rapidly advancing field of AI-assisted development [4].

Summarized by Navi
