Google unveils Android XR updates with 2D to 3D conversion and AI glasses partnerships

4 Sources


[1]

Google just stealth announced a huge new Android XR feature -- everything you see can go from 2D to 3D

Google posted a new edition of its Android Show today (Dec. 8) with a special focus on its Android XR platform. The show covered a variety of announcements, including confirmation that the Xreal Project Aura smart glasses will launch in 2026. However, the biggest news in our minds was a blink-and-you'll-miss-it moment that lasts 17 seconds in the 31-minute video. At around the 11:50 mark, Austin Lee, VP of XR UG Group at Samsung, and Katherine Erdman, a Google XR product manager, gave a "sneak peek" of system-level autospatialization. The feature essentially takes all of your 2D content, from games and images to YouTube videos and apps, and converts it into 3D. It's a surprising announcement because it's largely buried in this video and came out of nowhere. However, we think this feature will be huge when it launches in 2026.

As mentioned, this was just a sneak peek, and Erdman says the feature won't launch for XR devices until "next year." What we can see from the video is that you can tap a button in an app's settings menu to turn what you're looking at from 2D to 3D. "You can turn regular 2D content into 3D in real time," Lee stated. He goes on to ask viewers to imagine what it would be like if every game, YouTube video, and even the web were immersive. Immersion and 3D content are among the main selling points of VR and XR headsets. Hopefully, we'll learn more about how autospatialization will work in Android XR soon.

A significant chunk of the video is meant for developers creating apps and content for the Android XR platform. However, there are some teases. We got one of our first looks at the Xreal Project Aura in action. Google briefly showed off hand gestures for controlling menus and opening apps on the virtual display. Aura will run on a puck that also acts as a trackpad. It's more than we've seen of the upcoming spatial glasses, and we finally have a clearer idea of how Project Aura might work when it launches next year.
Google also showcased a partnership with the fashion brand Gentle Monster for high-end AI smart glasses. Other announced or teased features hint at glasses similar to Meta's Ray-Ban smart glasses. Tom's Guide Global Editor-in-Chief Mark Spoonauer just went hands-on with the Google Android XR glasses, calling them Google Glass done right.

[2]

Google Set to Host Android XR Event on December 8: Here's What to Expect

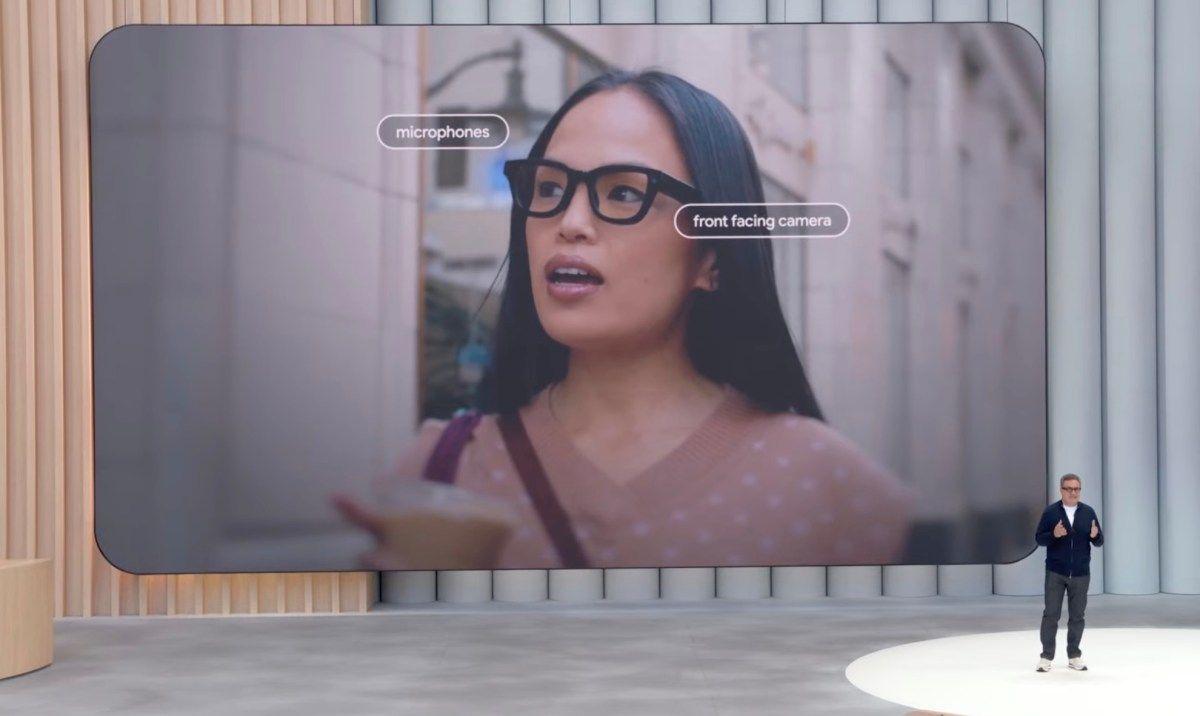

Google is all set to host a special edition of The Android Show next week. The search giant has also confirmed that the spotlight will be on its Android XR platform. Google is expected to showcase new Android XR features and tools for smart glasses and headsets during the show. The company has created a short video and a dedicated landing page on its website, offering insights about the event. This announcement comes weeks after the launch of the Galaxy XR headset in October.

Google's Event Will Focus on Android XR Advancements

Google has scheduled a new edition of The Android Show on December 8 at 10:00am PT (11:30pm IST). The event has the tagline 'exciting XR updates are coming in'. Google says, 'from glasses to headsets and everything in between, get ready for the latest on Android XR', indicating that it will reveal Android XR advancements during the event. The teaser video posted by Google shows two Android bots: one appears to be wearing the Galaxy XR headset, and the other seems to be wearing the rumoured XR smart glasses. This suggests that Google could preview both its headset and smart glasses platforms at the upcoming event.

The event will be livestreamed via the Android Developers' YouTube channel. A teaser page on android.com features a countdown and an option to sign up for notifications about the event. The description also mentions, "Hear how with Gemini by your side, you are able to have a more conversational, contextual and helpful experience," indicating integration of Google's Gemini AI. The page also displays Samsung's Galaxy XR headset, suggesting Google's continued collaboration with Samsung.

The new edition of The Android Show comes close to a month after the debut of the Samsung Galaxy XR headset. The wearable, which runs on Google's Android XR operating system, costs $1,799 (roughly Rs. 1,58,000) in the US for the 16GB RAM + 256GB storage variant.
Google recently showcased a prototype of the Android XR smart glasses at the Future Investment Initiative event in Riyadh in association with Magic Leap. The prototype has thick frames, a camera, and microphones, along with an in-lens display and speakers. The company initially teased the Android XR glasses at its I/O developer conference in California in May this year. The company has joined hands with Samsung, Gentle Monster, and Warby Parker to build smart glasses.

[3]

Google's Android XR Showcase on December 8, Key Announcements to Watch

Google Gears Up for Android XR Spotlight Event on December 8: Top Announcements to Expect

Google's Android XR promises to change the way people engage with digital content through immersive technologies. It combines augmented, virtual, and mixed-reality tools into a single open ecosystem. The platform is also closely integrated with Google's AI assistant, Gemini, enabling natural, voice- and vision-based conversations with context awareness. The December 8 showcase has already generated considerable hype among tech enthusiasts and industry observers. Early glimpses of the reveal point to next-generation hardware and software that combine AI, vision, and voice capabilities, opening the door to immersive technology that merges the digital and physical worlds.

[4]

Android Show XR Edition announces new Galaxy XR features and first look at Project Aura glasses

Google is also working with Samsung, Gentle Monster and Warby Parker on AI glasses.

Google used The Android Show: XR Edition to outline the next phase of its Android XR strategy, confirming new features for the Samsung Galaxy XR headset and offering an early look at upcoming devices from partners. The updates show how Google wants to broaden XR beyond a single headset and prepare for a mix of lightweight AI glasses, immersive wearables and hybrid systems like XReal's Project Aura.

The Galaxy XR headset is receiving three new features: PC Connect, Travel mode and Likeness. PC Connect links a Windows PC to the headset, allowing users to bring their desktop or individual windows into the XR space and place them alongside native apps. The feature is designed to expand productivity and gaming, especially for users who want a wider workspace than a laptop screen. PC Connect is rolling out in beta. Travel mode stabilises the headset's view, which helps when using the device on flights or in other situations with motion. The idea is to let users watch content or work with multiple windows without discomfort in cramped environments. Likeness is Google's take on a real-time digital avatar. Users can create a realistic representation of their face that mirrors expressions, mouth movements and gestures during video calls. The feature aims to keep communication personal even when the user's face is obscured by the headset. Likeness enters beta today. These features continue Google's broader push to integrate AI, spatial computing and cross-device workflows into Android XR.

The company also detailed its work with Samsung, Gentle Monster and Warby Parker on AI glasses. Two models are planned. The first focuses on screen-free assistance, using microphones, speakers and cameras to let users interact with Gemini and take photos.
The second adds an in-lens display that can show information such as navigation prompts or translation captions. The first glasses are expected next year.

Google then confirmed that Android XR will support wired XR glasses, a category aimed at users who want immersive, large-canvas visuals without a full headset. The company showcased Project Aura from XReal, which features optical see-through lenses and a 70-degree field of view. Project Aura allows AR-based digital windows to appear over the real world, creating a large private workspace suitable for productivity or entertainment. Google highlighted everyday use cases, such as following recipe videos or step-by-step guides while staying aware of the physical environment. Project Aura is scheduled for launch next year.

On the developer side, Google released Developer Preview 3 of the Android XR SDK. The update opens development for AI glasses, offering APIs for augmented assistance and early examples from partners like Uber and GetYourGuide. It also adds tools for richer spatial experiences on headsets and wired XR glasses. Shahram Izadi, VP and GM of XR at Google, said the goal is to deliver devices that fit naturally into users' daily routines. He highlighted the need for varied form factors that balance comfort, immersion and style, and stressed that hardware is only the starting point for the next wave of XR experiences.

The Galaxy XR launched in October 2025 as the first commercial headset built on Google's revived XR platform, placing it in direct competition with Meta's Quest line and Apple's Vision Pro. The new features bring the headset closer to offering a full cross-device workflow, which has become a key differentiator in the premium XR segment. PC Connect also mirrors trends in portable PCs and handheld gaming, where users increasingly expect seamless transitions between screens.
Meanwhile, the focus on AI glasses reflects Google's push to deliver lighter, more wearable hardware as part of its Gemini-integrated ecosystem. With multiple partners involved, the company appears to be positioning Android XR as an open alternative to vertically integrated systems from Apple and Meta. As the ecosystem expands, support from developers will be critical. The new SDK preview aims to give app makers the tools to design spatial interfaces that work across headsets, glasses and hybrid devices like Project Aura. The announcements at The Android Show signal Google's attempt to define the next phase of XR as a mix of AI, spatial interfaces and multimodal hardware, with the Galaxy XR, upcoming AI glasses and XREAL's Project Aura forming the first wave of that strategy.


Google showcased major Android XR advancements during The Android Show on December 8, revealing a system-level autospatialization feature that converts 2D content into 3D in real time. The company also announced new features for the Samsung Galaxy XR headset, detailed AI glasses partnerships with Samsung, Gentle Monster, and Warby Parker, and provided the first detailed look at the Xreal Project Aura smart glasses launching in 2026.

Google Reveals Autospatialization for Real-Time 2D to 3D Conversion

Google quietly unveiled one of its most significant Android XR capabilities during The Android Show on December 8: a system-level autospatialization feature that transforms 2D content into 3D in real time [1]. The feature appeared as a brief 17-second segment at the 11:50 mark of the 31-minute video, where Austin Lee, VP of XR UG Group at Samsung, and Katherine Erdman, a Google XR product manager, gave a sneak peek of the capability [1]. Users will be able to tap a button in an app's settings menu to switch what they are viewing from 2D to 3D, covering games, YouTube videos, images, and web content [1]. Lee emphasized the potential impact, asking viewers to imagine every game, video, and web page becoming immersive [1]. The feature won't launch for XR devices until 2026 [1], but it could help address a persistent challenge for spatial computing platforms such as Android XR: the shortage of native 3D content.


Samsung Galaxy XR Receives Three Major Updates

The Samsung Galaxy XR headset, which launched in October 2025 at $1,799 in the US for the 16GB RAM + 256GB storage variant, is receiving three new features that expand its capabilities [2][4]. PC Connect links a Windows PC to the headset, bringing the desktop or individual windows into the XR space alongside native apps to create a larger workspace for productivity and gaming; it is rolling out in beta [4]. Travel mode stabilizes the headset's view during flights or other situations with motion, allowing users to watch content or work with multiple windows without discomfort [4]. Likeness introduces a real-time digital avatar that mirrors facial expressions, mouth movements, and gestures during video calls, keeping communication personal even when the user's face is obscured by the headset [4]. These features extend Google's push into cross-device workflows as the Galaxy XR competes with Meta's Quest line and Apple's Vision Pro [4].

AI Glasses Partnerships Expand Google's Wearable Strategy

Google detailed collaborations with Samsung, Gentle Monster, and Warby Parker on two distinct AI glasses models [4]. The first focuses on screen-free assistance, using microphones, speakers, and cameras to let users interact with Google's Gemini AI and take photos [4]. The second adds an in-lens display that can show information such as navigation prompts and translation captions [4]. The first glasses are expected to launch next year, and with multiple partners involved, Google appears to be positioning Android XR as an open alternative to vertically integrated systems from Apple and Meta [4]. The platform integrates Gemini to deliver conversational, voice- and vision-based experiences with context awareness, as highlighted on the event's teaser page [2][3]. Google previously showcased an Android XR smart glasses prototype at the Future Investment Initiative event in Riyadh in association with Magic Leap, featuring thick frames, a camera, microphones, an in-lens display, and speakers [2].



Xreal Project Aura Brings Wired XR Glasses to Market

Google confirmed Android XR support for wired XR glasses and showcased Xreal Project Aura, scheduled for launch in 2026 [1][4]. Project Aura features optical see-through lenses with a 70-degree field of view, allowing AR-based digital windows to appear over the real world for productivity or entertainment [4]. The glasses will run on a puck that doubles as a trackpad, and Google demonstrated hand gestures for controlling menus and opening apps on the virtual display [1]. Google highlighted practical use cases such as following recipe videos or step-by-step guides while maintaining awareness of the physical environment [4]. This category targets users seeking immersive, large-canvas visuals without a full headset [4]. Separately, Tom's Guide Global Editor-in-Chief Mark Spoonauer went hands-on with Google's Android XR glasses and called them "Google Glass done right" [1].


Developer Tools Expand Android XR Capabilities

Google released Developer Preview 3 of the Android XR SDK, opening development for AI glasses with APIs for augmented assistance and early examples from partners like Uber and GetYourGuide [4]. The update also adds tools for richer spatial experiences on headsets and wired XR glasses [4]. Shahram Izadi, VP and GM of XR at Google, said the goal is to deliver devices that fit naturally into users' daily routines, emphasizing varied form factors that balance comfort, immersion, and style [4]. The Android Show, which aired at 10:00am PT on December 8 and was livestreamed via the Android Developers' YouTube channel, signals Google's attempt to define the next phase of XR as a mix of AI, spatial interfaces, and multimodal hardware [2][4]. Together, autospatialization, a broad set of hardware partners, and new developer tools could help close the content gap that has held back XR adoption and position Android XR as a comprehensive platform for spatial computing.

Summarized by Navi
