Google Launches Private AI Compute: Cloud-Based AI Processing with On-Device Level Privacy

19 Sources

[1]

Google says new cloud-based "Private AI Compute" is just as secure as local processing

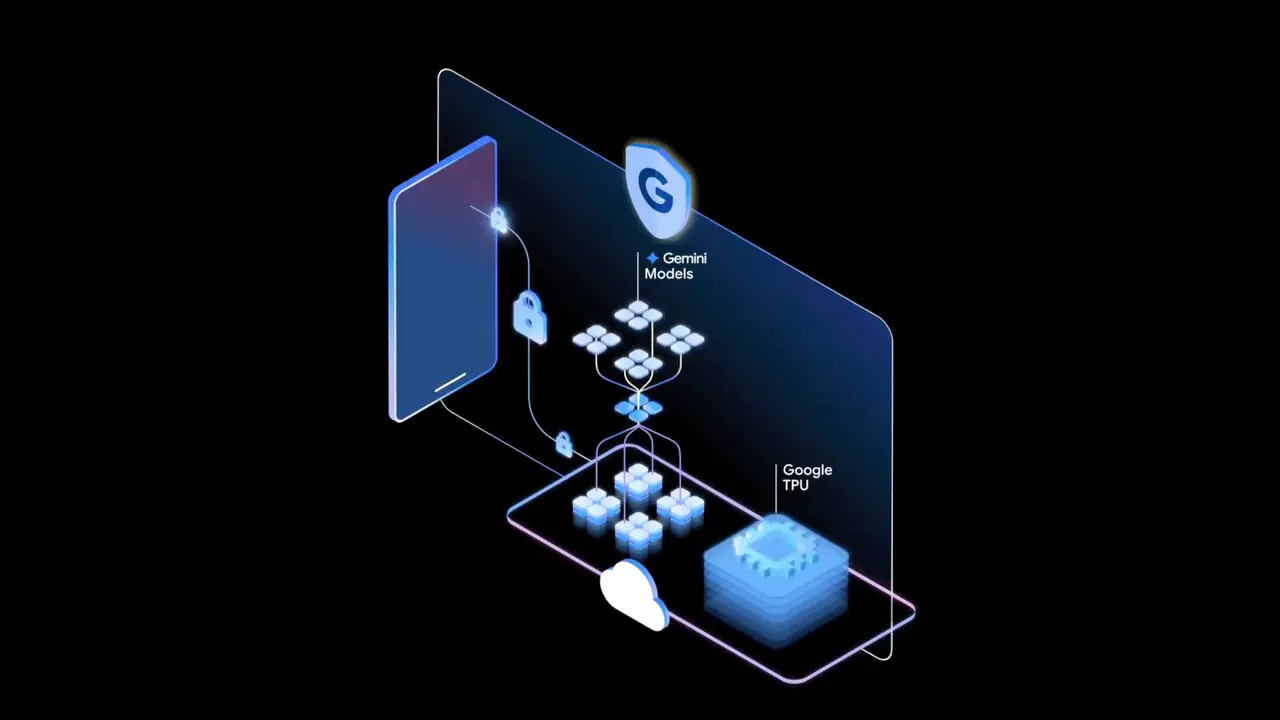

Google's current mission is to weave generative AI into as many products as it can, getting everyone accustomed to, and maybe even dependent on, working with confabulatory robots. That means it needs to feed the bots a lot of your data, and that's getting easier with the company's new Private AI Compute. Google claims its new secure cloud environment will power better AI experiences without sacrificing your privacy. The pitch sounds a lot like Apple's Private Cloud Compute.

Google's Private AI Compute runs on "one seamless Google stack" powered by the company's custom Tensor Processing Units (TPUs). These chips have integrated secure elements, and the new system allows devices to connect directly to the protected space via an encrypted link. Google's TPUs rely on an AMD-based Trusted Execution Environment (TEE) that encrypts and isolates memory from the host. Theoretically, that means no one else -- not even Google itself -- can access your data. Google says independent analysis by NCC Group shows that Private AI Compute meets its strict privacy guidelines.

According to Google, the Private AI Compute service is just as secure as using local processing on your device. However, Google's cloud has a lot more processing power than your laptop or phone, enabling the use of Google's largest and most capable Gemini models.

Edge vs. Cloud

As Google has added more AI features to devices like Pixel phones, it has talked up the power of its on-device neural processing units (NPUs). Pixels and a few other phones run Gemini Nano models, allowing the phone to process AI workloads securely on "the edge" without sending any of your data to the Internet. With the release of the Pixel 10, Google upgraded Gemini Nano to handle even more data with the help of researchers from DeepMind.

NPUs can't do it all, though. While Gemini Nano is getting more capable, it can't compete with models that run on massive, high-wattage servers. 
That might be why some AI features, like the temporarily unavailable Daily Brief, don't do much on the Pixels. Magic Cue, which surfaces personal data based on screen context, is probably in a similar place. Google now says that Magic Cue will get "even more helpful" thanks to the Private AI Compute system. Google has also released a Pixel feature drop today, but there aren't many new features of note (unless you've been hankering for Wicked themes). As part of the update, Magic Cue will begin using the Private AI Compute system to generate suggestions. The more powerful model might be able to tease out more actionable details from your data. Google also notes the Recorder app will be able to summarize in more languages thanks to the secure cloud. So what Google is saying here is that more of your data is being offloaded to the cloud so that Magic Cue can generate useful suggestions, which would be a change. Since launch, we've only seen Magic Cue appear a handful of times, and it's not offering anything interesting when it does. There are still reasons to use local AI, even if the cloud system has "the same security and privacy assurances," as Google claims. An NPU offers superior latency because your data doesn't have to go anywhere, and it's more reliable, as AI features will still work without an Internet connection. Google believes this hybrid approach is the way forward for generative AI, which requires significant processing even for seemingly simple tasks. We can expect to see more AI features reaching out to Google's secure cloud soon.
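
The trade-off described above (local processing for latency and offline reliability, cloud processing for larger models) can be pictured as a simple routing decision. The following is only a toy sketch: the `Request` fields, the `choose_backend` function, and the token threshold are hypothetical and are not drawn from anything Google has published about how Pixel features pick a backend.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """A hypothetical AI request; both fields are illustrative assumptions."""
    prompt_tokens: int
    needs_large_model: bool


def choose_backend(req: Request, online: bool, local_token_limit: int = 2048) -> str:
    """Pick "on-device" or "cloud" for a request under an assumed policy."""
    if not online:
        # Local models keep working without an Internet connection.
        return "on-device"
    if req.needs_large_model or req.prompt_tokens > local_token_limit:
        # Work that exceeds the assumed local budget goes to the cloud.
        return "cloud"
    # Otherwise stay local: the data never has to leave the device.
    return "on-device"


print(choose_backend(Request(200, False), online=True))   # on-device
print(choose_backend(Request(200, True), online=True))    # cloud
print(choose_backend(Request(200, True), online=False))   # on-device
```

In this sketch an offline device always falls back to the local model, even for requests that would otherwise go to the cloud, which mirrors the reliability argument for keeping an NPU path available.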

[2]

Google's Private AI Compute promises good-as-local privacy in the Gemini cloud

The new service could make agentic AI on phones possible and private. Google is all in on AI, and with its latest advancement, it promises to offer local-level security and privacy, while also harnessing the power of the cloud. This new service, called Private AI Compute, aims to deliver a more helpful, personal, and proactive AI experience, with safety and responsibility at its core. Google's pitch sounds a little like Apple's Private Cloud Compute, doesn't it? With AI rapidly gaining ground in both capability and popularity, it's evolving from traditional queries into the realm of completing tasks for users. This level of AI is capable of anticipating needs and handling tasks, which requires advanced reasoning and computational power. The problem is, these advanced features are far beyond what on-device processing can handle. That's where Private AI Compute comes into play. According to Google, the new service will not only unlock all the speed and power that agentic AI requires, but also ensure that your personal data remains private. As Google notes in its press release, "Private AI Compute allows you to get faster, more helpful responses, making it easier to find what you need, get smart suggestions and take action." But how does Private AI Compute protect your data? Essentially, Google is applying the security and privacy safeguards embedded in devices like Pixel phones, guided by its Secure AI Framework, to its more robust cloud servers. All user data will remain isolated, private, and protected by an additional layer of security and privacy. 
That multi-layered system was designed from the ground up around Google's core security and privacy principles. With these principles combined, features like Magic Cue (which automatically surfaces relevant information and actions within apps) will become even more helpful and timely on the latest Pixel 10 phones. As well, the Pixel Recorder app will be able to summarize transcriptions across a wider range of languages. Based on the press release, here's my understanding of Google's plans: The company recognizes that agentic AI tasks require significantly more power than a phone can offer, and building phones with sufficient capacity to achieve full agentic capabilities would be unaffordable for the general public. To that end, it appears that Google is planning to utilize Private AI Compute to offload some of its AI functionality back to the cloud, while maintaining the same level of privacy as on-device computing. As someone who doesn't generally trust the privacy claims of companies building AI, I tend to stick with local-level processing (as you can achieve with a locally installed Ollama, for instance). Yes, Google claims that it won't be able to view the data transmitted to the Private AI Compute servers, but does that mean it also won't be building in the technology that can be used to generate profiles for users in order to push targeted ads? I certainly want to believe this, but I'm hesitant to do so. And if Google plans to offload even more AI processing to the cloud, I might be inclined to either migrate to a different brand of phone or do my best to avoid AI (especially agentic AI) on my phones. Let's hope Google stands by its word and delivers on the promise of privacy and security. 
If it can do that, this new Private AI Compute could open up Pixel phones to some seriously powerful AI processing that would otherwise be impossible. With that more powerful processing, the goal of delivering agentic AI to phones will be within reach.

[3]

Google is introducing its own version of Apple's private AI cloud compute

Google is rolling out a new cloud-based platform that lets users unlock advanced AI features on their devices while keeping data private. The feature, virtually identical to Apple's Private Cloud Compute, comes as companies reconcile users' demands for privacy with the growing computational needs of the latest AI applications. Many Google products run AI features like translation, audio summaries, and chatbot assistants, on-device, meaning data doesn't leave your phone, Chromebook, or whatever it is you're using. This isn't sustainable, Google says, as advancing AI tools need more reasoning and computational power than devices can supply. The compromise is to ship more difficult AI requests to a cloud platform, called Private AI Compute, which it describes as a "secure, fortified space" offering the same degree of security you'd expect from on-device processing. Sensitive data is available "only to you and no one else, not even Google." Google said the ability to tap into more processing power will help its AI features go from completing simple requests to giving more personal and tailored suggestions. For example, it says Pixel 10 phones will get more helpful suggestions from Magic Cue, an AI tool that contextually surfaces information from email and calendar apps, and a wider range of languages for Recorder transcriptions. "This is just the beginning," Google said.

[4]

Google touts Private AI Compute for cloud confidentiality

Google, perhaps not the first name you'd associate with privacy, has taken a page from Apple's playbook and now claims that its cloud AI services will safeguard sensitive personal data handled by its Gemini model family. The Chocolate Factory has announced Private AI Compute, which is designed to extend the trust commitments embodied by Android's on-device Private Compute Core to services running in Google datacenters. It's conceptually and architecturally similar to Private Cloud Compute from Apple, which historically has used privacy as a big selling point for its devices and services, unlike Google, which is fairly open about collecting user data to serve more relevant information and advertisements. "Private AI Compute is a secure, fortified space for processing your data that keeps your data isolated and private to you," said Jay Yagnik, VP of AI innovation and research, in a blog post. "It processes the same type of sensitive information you might expect to be processed on-device." Since the generative AI boom began, experts have advised keeping sensitive data away from large language models, for fear that such data may be incorporated into them during the training process. Threat scenarios since then have expanded as models have been granted varying degrees of agency and access to other software tools. Now, providers are trying to convince consumers to share personal info with AI agents so that they can take action that requires credentials and payment information. Without greater privacy and security assurances, the agentic pipe dreams promoted by AI vendors look unlikely to take shape. Among the 39 percent of Americans who haven't adopted AI, 71 percent cite data privacy as a reason why, according to a recent Menlo Ventures survey. The paranoids have reason to be concerned. 
According to a recent Stanford study, six major AI companies - Amazon (Nova), Anthropic (Claude), Google (Gemini), Meta (Meta AI), Microsoft (Copilot), and OpenAI (ChatGPT) - "appear to employ their users' chat data to train and improve their models by default, and that some retain this data indefinitely." If every AI prompt could be handled by an on-device model that didn't phone home with user data, many of the privacy and security concerns would be moot. But so far, the consensus appears to be that frontier AI models must run in the cloud. So model vendors have to allay concerns about insiders harvesting sensitive stuff from the tokens flowing between the street and the data center. Google's solution, Private AI Compute, is similar to Apple's Private Cloud Compute in that both data isolation schemes rely on Trusted Execution Environments (TEE) or Secure Enclaves. These notionally confidential computing mechanisms encrypt and isolate memory and processing from the host. For AI workloads on its Tensor Processing Unit (TPU) hardware, Google calls its computational safe room Titanium Intelligence Enclave (TIE). For CPU workloads, Private AI Compute relies on AMD's Secure Encrypted Virtualization - Secure Nested Paging (SEV-SNP), a secure computing environment for virtual machines. Where Private AI Compute jobs require analytics, Google claims that it relies on confidential federated analytics, "to ensure that only anonymous statistics (e.g. differentially private aggregates) are visible to Google." And the system incorporates various defenses against insiders, Google claims. Data is processed during inference requests in protected environments and then discarded when the user's session ends. There's no administrative access to user data and no shell access on hardened TPUs. As a first step toward making its claims verifiable, Google has published cryptographic digests (e.g. SHA2-256) of application binaries used by Private AI Compute servers. 
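
To make the idea of published digests concrete, here is a minimal sketch of how anyone holding a binary could check it against a published SHA-256 value. The byte string and the "published" digest below are stand-ins created for the example; the article does not describe Google's actual publication format or verification tooling, so the function names are assumptions.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as lowercase hex."""
    return hashlib.sha256(data).hexdigest()


def matches_published_digest(binary: bytes, published_hex: str) -> bool:
    """Compare a binary's digest with a published one in constant time."""
    return hmac.compare_digest(sha256_hex(binary), published_hex.strip().lower())


# Hypothetical stand-ins: real use would read the server binary from disk
# and take the digest from the vendor's published list.
binary = b"example server binary contents"
published = sha256_hex(binary)

print(matches_published_digest(binary, published))            # True
print(matches_published_digest(binary + b"\x00", published))  # False
```

A matching digest only shows that two copies of a binary are identical; it says nothing about what the binary does, which is why the digests are described as a first step rather than full verification.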
Looking ahead, Google plans to let experts inspect its remote attestation data, to undertake further third-party audits, and to expand its Vulnerability Rewards Program to cover Private AI Compute. That may attract more interest from security researchers, some of whom recently found flaws in AMD SEV-SNP and other trusted computing schemes. Kaveh Ravazi, assistant professor in the department of information technology and electrical engineering at ETH Zürich, told The Register in an email that, while he's not an expert on privacy preserving analytics, he's familiar with TEEs. "There have been attacks in the past to leak information from SEV-SNP for a remote attacker and compromise the TEE directly for an attacker with physical access (e.g., Google itself)," he said. "So while SEV-SNP raises the bar, there are definitely ways around it." As for the hardened TPU platform, that looks more opaque, Ravazi said. "They say things like there is no shell access and the security of the TPU platform itself has definitely been less scrutinized (at least publicly) compared to a TEE like SEV-SNP," he said. "Now in terms of what it means for user data privacy, it is a bit hard for me to say since it is unclear how much user data actually goes to these nodes (except maybe the prompt, but maybe they also create user-specific layers, but I do not really know)." He added, "Google seems to be a bit more open about their security architecture compared to other AI-serving cloud companies as far as this whitepaper goes, and while not perfect, I see this (partial) openness as a good thing." An audit conducted by NCC Group concludes that Private AI Compute mostly keeps AI session data safe from everyone except Google. 
"Although the overall system relies upon proprietary hardware and is centralized on Borg Prime, NCC Group considers that Google has robustly limited the risk of user data being exposed to unexpected processing or outsiders, unless Google, as a whole organization, decides to do so," the security firm's audit concludes.

[5]

Google Launches 'Private AI Compute' -- Secure AI Processing with On-Device-Level Privacy

Google on Tuesday unveiled a new privacy-enhancing technology called Private AI Compute to process artificial intelligence (AI) queries in a secure platform in the cloud. The company said it has built Private AI Compute to "unlock the full speed and power of Gemini cloud models for AI experiences, while ensuring your personal data stays private to you and is not accessible to anyone else, not even Google." Private AI Compute has been described as a "secure, fortified space" for processing sensitive user data in a manner that's analogous to on-device processing but with extended AI capabilities. It's powered by Trillium Tensor Processing Units (TPUs) and Titanium Intelligence Enclaves (TIE), allowing the company to use its frontier models without sacrificing on security and privacy. In other words, the privacy infrastructure is designed to take advantage of the computational speed and power of the cloud while retaining the security and privacy assurances that come with on-device processing. Google's CPU and TPU workloads (aka trusted nodes) rely on an AMD-based hardware Trusted Execution Environment (TEE) that encrypts and isolates memory from the host. The tech giant noted that only attested workloads can run on the trusted nodes, and that administrative access to the workloads is cut off. Furthermore, the nodes are secured against potential physical data exfiltration attacks. The infrastructure also supports peer-to-peer attestation and encryption between the trusted nodes to ensure that user data is decrypted and processed only within the confines of a secure environment and is shielded from broader Google infrastructure. "Each workload requests and cryptographically validates the workload credentials of the other, ensuring mutual trust within the protected execution environment," Google explained. "Workload credentials are provisioned only upon successful validation of the node's attestation against internal reference values. 
Failure of validation prevents connection establishment, thus safeguarding user data from untrusted components." The overall process flow works like this: A user client establishes a Noise protocol encryption connection with a frontend server and establishes bi-directional attestation. The client also validates the server's identity using an Oak end-to-end encrypted attested session to confirm that it's genuine and not modified. Following this step, the server sets up an Application Layer Transport Security (ALTS) encryption channel with other services in the scalable inference pipeline, which then communicates with model servers running on the hardened TPU platform. The entire system is "ephemeral by design," meaning an attacker who manages to gain privileged access to the system cannot obtain past data, as the inputs, model inferences, and computations are discarded as soon as the user session is completed. Google has also touted the various protections baked into the system to maintain its security and integrity and prevent unauthorized modifications. NCC Group, which conducted an external assessment of Private AI Compute between April and September 2025, said it was able to discover a timing-based side channel in the IP blinding relay component that could be used to "unmask" users under certain conditions. However, Google has deemed it low risk because the multi-user nature of the system introduces a "significant amount of noise" and makes it challenging for an attacker to correlate a query to a specific user. The cybersecurity company also said it identified three issues in the implementation of the attestation mechanism that could result in a denial-of-service (DoS) condition, as well as various protocol attacks. Google is currently working on mitigations for all of them. "Although the overall system relies upon proprietary hardware and is centralized on Borg Prime, [...] 
Google has robustly limited the risk of user data being exposed to unexpected processing or outsiders, unless Google, as a whole organization, decides to do so," it said. "Users will benefit from a high level of protection from malicious insiders." The development mirrors similar moves from Apple and Meta, which have released Private Cloud Compute (PCC) and Private Processing to offload AI queries from mobile devices in a privacy-preserving way. "Remote attestation and encryption are used to connect your device to the hardware-secured sealed cloud environment, allowing Gemini models to securely process your data within a specialized, protected space," Jay Yagnik, Google's vice president for AI Innovation and Research, said. "This ensures sensitive data processed by Private AI Compute remains accessible only to you and no one else, not even Google."
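
The attestation gate Google describes in this article (credentials issued only when a node's attestation matches reference values, and a connection allowed only when each workload validates the other) can be sketched in a few lines. This is an illustrative model under loud assumptions: the reference list, the `Credential` type, and every function name are hypothetical, and a shared-key HMAC stands in for the signatures a real system would use. It is not Google's protocol.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass
from typing import Optional

# Hypothetical reference values: digests of workload builds allowed to run.
REFERENCE_MEASUREMENTS = {
    hashlib.sha256(b"frontend-build-1").hexdigest(),
    hashlib.sha256(b"model-server-build-1").hexdigest(),
}

# Stand-in for the credential issuer's signing key (illustration only).
_ISSUER_KEY = secrets.token_bytes(32)


@dataclass(frozen=True)
class Credential:
    measurement: str
    tag: bytes  # MAC over the measurement, standing in for a signature


def _mac(measurement: str) -> bytes:
    return hmac.new(_ISSUER_KEY, measurement.encode(), hashlib.sha256).digest()


def provision_credential(measurement: str) -> Optional[Credential]:
    """Issue a credential only if the attested measurement is on the list."""
    if measurement not in REFERENCE_MEASUREMENTS:
        return None
    return Credential(measurement, _mac(measurement))


def credential_is_valid(cred: Optional[Credential]) -> bool:
    """Re-check the MAC, as a peer would verify a signed credential."""
    if cred is None:
        return False
    return hmac.compare_digest(_mac(cred.measurement), cred.tag)


def connect(a: Optional[Credential], b: Optional[Credential]) -> bool:
    """Allow a connection only when each side validates the other."""
    return credential_is_valid(a) and credential_is_valid(b)


frontend = provision_credential(hashlib.sha256(b"frontend-build-1").hexdigest())
model = provision_credential(hashlib.sha256(b"model-server-build-1").hexdigest())
patched = provision_credential(hashlib.sha256(b"frontend-build-1-patched").hexdigest())

print(connect(frontend, model))  # True
print(connect(patched, model))   # False
```

The modified build never receives a credential because its measurement is not on the reference list, so the connection is refused before any user data could be exchanged.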

[6]

Google just gave Pixel AI a major boost without sacrificing your privacy

The tech uses strong encryption and a secure hardware environment to ensure that your information remains private. Google is making its AI features on Pixel phones smarter and more secure with the help of a new technology called Private AI Compute. The company explains that the tech enables your phone to leverage Google's advanced Gemini AI models in the cloud, while maintaining the same level of privacy and security as on-device processing. That means while some of the AI processing now happens in the cloud, your personal data remains private and inaccessible to anyone, including Google. "Private AI Compute is like running AI on your device, but with the power of our best cloud models," the company wrote in a blog post. The system uses strong encryption and a secure hardware environment called Titanium Intelligence Enclaves to ensure that your information remains private. According to the company, Magic Cue on the Pixel 10 series will now provide more timely and accurate suggestions, thanks to the additional cloud processing power. The Pixel Recorder app can also now generate summaries of transcribed recordings in more languages, including English, Mandarin Chinese, Hindi, Italian, French, German, and Japanese, starting with Pixel 8 and newer models. In essence, Private AI Compute allows Google to run more powerful models that might be too large or demanding for a smartphone's hardware, giving users smarter AI features without sacrificing their privacy. Google says this is just the start. The company plans to bring Private AI Compute to more products and experiences in the future.

[7]

Google makes Pixel 10 Magic Cue 'more timely' with cloud-based 'Private AI Compute'

Alongside the November 2025 Feature Drop, Google announced Private AI Compute to make Magic Cue on the Pixel 10 more powerful while remaining secure. Private AI Compute is Google's new "AI processing platform" that combines the power of cloud models with on-device processing's privacy. Specifically, it is a "secure, fortified space for processing" sensitive user data that Google cannot access. This system takes advantage of Google's end-to-end AI stack including CPUs and Cloud TPUs. Your phone is connected to the "hardware-secured sealed cloud environment" via encryption and remote attestation. This is a joint effort from Google's Platform and Devices (the hardware team), DeepMind, and Cloud divisions. In general, Google says AI that "can anticipate your needs with tailored suggestions or handle tasks for you at just the right moment" needs "advanced reasoning and computational power that at times goes beyond what's possible with on-device processing." Google has also published a more detailed technical brief. This is similar in concept to Apple Intelligence's Private Cloud Compute. Magic Cue is now using Private AI Compute for "more timely suggestions" thanks to Gemini models in the cloud, though on-device Gemini Nano is still leveraged. Magic Cue will continue to appear when you open a Google Messages conversation, on Phone by Google's calling screen, the Pixel Weather homepage when you have an upcoming event, and in the Gboard suggestions row. Additionally, Pixel Recorder is using Private AI Compute for transcription summaries in more languages. Pixel users will be able to see when Private AI Compute is called. To access the log, enable Developer options and go to Settings app > Security & Privacy > More security & privacy > Android System Intelligence > Network Usage log > Log network activity. 
Google teases how Private AI Compute "opens up a new set of possibilities for helpful AI experiences now that we can use both on-device and advanced cloud models for the most sensitive use cases."

[8]

Google Announces Its Own Version of Apple's Private Cloud Compute

Google this week announced Private AI Compute, a new cloud-based system designed to deliver AI capabilities using its Gemini models while maintaining strict data privacy controls, a framework that closely parallels Apple's own Private Cloud Compute technology. According to Google, the service enables AI tasks that exceed the processing capacity of local hardware to be handled securely in the cloud without exposing personal data to the company or third parties.

"For decades, Google has developed privacy-enhancing technologies (PETs) to improve a wide range of AI-related use cases. Today, we're taking the next step in building helpful experiences that keep users safe with Private AI Compute in the cloud, a new AI processing platform that combines our most capable Gemini models from the cloud with the same security and privacy assurances you expect from on-device processing. It's part of our ongoing commitment to deliver AI with safety and responsibility at the core."

Private AI Compute is built using custom Tensor Processing Units (TPUs) with integrated Titanium Intelligence Enclaves (TIE). These hardware-secured enclaves form an isolated, "fortified space" where AI workloads can be processed without direct access to raw user data. Devices connect to the environment using remote attestation and encrypted channels, ensuring that all data transferred remains inaccessible to Google's engineers or infrastructure administrators.

The system will first power new AI experiences on Pixel 10 devices, such as enhancements to Magic Cue, an AI assistant that provides contextually aware suggestions, and an upgraded Recorder app capable of summarizing transcriptions in additional languages. Both rely on Gemini's larger models in the cloud, which require significantly greater computing resources than on-device NPUs can provide.

Private AI Compute is extremely similar to Apple's Private Cloud Compute, which Apple launched last year. Apple's system supports Apple Intelligence features and uses custom servers containing Apple silicon chips that operate as verifiable, sealed environments for processing AI tasks.

[9]

Google's Private AI Compute will let users securely run even the most powerful AI models on your smartphone

Google follows Apple in launching its own Private AI Compute

* Private AI Compute is built to power Gemini models for your smartphone
* Pixel 10's Magic Cue could be the first feature to benefit from this
* Google wants to be transparent and accountable

Google has launched Private AI Compute, its own cloud-based AI processing platform, in a move to handle heavy processing tasks off-device. Private AI Compute will enable access to advanced AI capabilities without having to limit them to on-device processing - the cloud parts will include strong data privacy protections. In a company blog post, AI Innovation and Research VP Jay Yagnik explained that the data processed through Private AI Compute would be available only to the user, not even to Google.

"[AI's] progression in capability requires advanced reasoning and computational power that at times goes beyond what's possible with on-device processing," Yagnik explained. Google says Private AI Compute unlocks the "full speed and power" of Gemini models - something that would only be possible on-device with costly chips. Google says that models process data within a "specialized, protected space." Core technologies include Google's proprietary Tensor Processing Units (TPUs) for compute power and Titanium Intelligence Enclaves (TIE) to cover the privacy and security fronts.

A great example of where Private AI Compute can offer benefits is with Pixel 10's Magic Cue, which generates contextually-aware suggestions like which apps you may want to open and which actions you may want to take. On a similar note, Apple's Private Cloud Compute (PCC), which was announced in mid-2024, extends on-device-level privacy to the cloud. Like Google's take on the secure cloud, Apple uses its own proprietary silicon, Secure Enclave and Secure Boot. "This is just the beginning," Yagnik explained, suggesting that the product could still be in development and may continue to improve. 
Google's technical brief on Private AI Compute reveals plans for a bug bounty program to deepen accountability, as well as more options for security developers to inspect code and verify remote attestation.

[10]

Google launches Private AI Compute for privacy-centric AI users

Google just announced a new system called Private AI Compute, describing it as a way to run its most advanced Gemini models while keeping user data locked down. Apple already offers a similar feature, introduced in 2024, called Private Cloud Compute. In a blog post, Google said the feature is designed to balance performance and privacy. Private AI Compute would allow AI models to draw on cloud processing power without giving Google (or anyone else) access to the information being analyzed. According to Google, Private AI Compute operates within a hardware-sealed, verified environment that encrypts all data transfers between devices and its cloud infrastructure. What's the point of services like Private AI Compute? Customers who are wary of their personal conversations, personal emails, or sensitive company data being used for AI training often prefer to perform AI processes on device, which ensures privacy. That way, their data never leaves their computer or phone. However, that's not always possible, as many devices weren't built for complex AI processing. "This approach delivers the benefits of powerful cloud models with the privacy protections of on-device processing," the company said in its announcement. It builds on Google's Secure AI Framework and utilizes its custom TPUs, along with new Titanium Intelligence Enclaves, to enforce end-to-end encryption and security standards, Google said. Private AI Compute will debut in select Google products, starting with Magic Cue on the Pixel 10 and updated Recorder app features that utilize cloud models to generate smarter summaries and suggestions, while maintaining conversation privacy. The system's goal, according to Google, is to support "helpful, personal and proactive" AI experiences without requiring users to trade away sensitive information. 
As more of Google's AI features move beyond simple commands toward proactive assistance, Private AI Compute represents what the company calls a "next step in responsible innovation."

[11]

Google just upgraded Magic Cue on your Pixel 10 with advanced Gemini models, without compromising privacy

Its new Private AI Compute platform combines the speed and smarts of cloud models with the privacy of on-device processing.

What's happened?

Google today released a surprise Pixel Drop update for its Pixel lineup, bringing a bunch of new features to Pixel 6 and newer models. Alongside the new features, the company announced a new AI processing platform for the Pixel 10 series that promises the same level of privacy and security as on-device processing, even when using advanced Gemini cloud models. The new platform, called Private AI Compute, is designed to deliver the full speed and power of Gemini models in the cloud, while ensuring users' personal data stays private. It will allow on-device features to offer more advanced capabilities without compromising privacy, starting with an upgrade for the Pixel 10 series' Magic Cue feature, which can now offer "more timely suggestions." Google is also using this platform to power transcription summaries in a wider range of languages in the Pixel Recorder app.

Why is this important?

With the Private AI Compute platform, Google aims to reassure users that their sensitive data will remain private, even if they use AI features powered by Gemini models in the cloud. For users, this should result in a significant performance boost in supported AI features without any privacy or security trade-offs. The platform also lets Google unlock new capabilities, like more proactive suggestions from Magic Cue and expanded language support for transcription summaries in the Recorder app, without compromising user privacy.

Why should I care?

Since cloud-based advanced Gemini models are more capable than their on-device counterparts, features powered by Private AI Compute should deliver noticeable performance gains without compromising your privacy. If you have a Pixel 10 series phone, you can expect more timely and helpful suggestions from the Magic Cue feature. You'll also get transcription summaries in more languages in the Recorder app, which currently only supports summaries for transcriptions in English, French, German, Japanese, Chinese (Mandarin), Hindi, and Italian.

What's next?

These Private AI Compute-powered features mark the start of a new wave of AI experiences that combine on-device and advanced cloud models for sensitive use cases. Google is expected to utilize the platform to enhance existing AI features on Pixel devices and introduce new features, allowing you to do more while keeping your data private.

[12]

Google Introduces Private AI Compute for On-Device Gemini Capabilities | AIM

The platform uses Gemini cloud models for faster responses and improved features in Google products, all within a secure and privacy-focused framework. Google is introducing "Private AI Compute," a new cloud-based platform that allows users to access advanced AI features on their devices while maintaining data privacy. This feature, which closely resembles Apple's Private Cloud Compute, emerges as companies strive to strike a balance between users' privacy demands and the growing computational requirements of modern AI applications. As stated by the company, Google developed Private AI Compute to harness the complete speed and power of Gemini cloud models for AI experiences. The new feature offers quicker, more useful responses, simplifying the process of finding what you need, receiving intelligent suggestions, and taking action. Private AI Compute allows on-device capabilities to operate with enhanced features while upholding privacy assurances. With this technology, Magic Cue is becoming even more beneficial, offering timely suggestions on the latest Pixel 10 devices. Additionally, with the assistance of the platform, the Recorder app on Pixel can now summarise transcriptions across a broader array of languages. Many Google products utilise AI features, such as translation and chatbots, directly on devices, keeping user data local. However, Google acknowledges that this method is unsustainable, as more advanced AI tools need greater computational power than devices can offer. Private AI Compute is a secure, multi-layered system designed with core principles of privacy. It operates on a seamless Google tech stack powered by custom Tensor Processing Units (TPUs) and integrates world-class privacy through Titanium Intelligence Enclaves (TIE). Remote attestation and encryption ensure your device connects to a hardware-secured cloud environment, allowing Gemini models to process your data in a protected space. 
"This ensures sensitive data processed by Private AI Compute remains accessible only to you and no one else, not even Google," it said in the blog.

[13]

Google details cloud-based Private AI Compute system for securing Pixel data - SiliconANGLE

Google LLC today detailed Private AI Compute, a cloud-based system it uses to power its Pixel handsets' artificial intelligence features. Some of the AI models used by the latest Pixel 10 smartphone series are too large to run on-device. As a result, Google hosts them in its cloud. Private AI Compute is designed to protect the user data that Pixel 10 phones send to the search giant's cloud-based AI models.

The models run on servers equipped with Google's custom TPU machine learning accelerators. The company debuted its newest TPU, Ironwood, in April. It runs in clusters that contain up to 9,216 chips with 42.5 exaflops of aggregate performance. The TPUs that power Private AI Compute are installed in specialized, hardened servers. Google has disabled the machines' shell access, a capability that enables administrators to modify sensitive software components. Many cyberattacks exploit shell access to install malware.

Pixel devices don't connect to TPUs directly, but rather go through intermediary servers powered by central processing units from Advanced Micro Devices Inc. Google uses a feature called SEV-SNP that AMD ships with its CPUs to reduce the risk of breaches. SEV-SNP splits a server's memory into encrypted segments that can only be accessed by the virtual machines that use them. As a result, the memory can't be decrypted by the hypervisor or operating system. That means the operator of the underlying infrastructure, in this case Google, has no way of viewing users' data. SEV-SNP also includes mitigations against side channel attacks. Those are cyberattacks that attempt to extract sensitive data by analyzing physical server properties such as fluctuations in a machine's power consumption.

Pixel phones connect to Google's AMD-powered intermediary servers via encrypted connections.
Before establishing a connection, the search giant verifies the servers using a technique called attestation. The method uses technical data about a system to check that it's not malicious. Google routes Private AI Compute network traffic through systems called IP blinding relays. The purpose of the systems is to hide Pixel users' IP addresses. Without an IP address, a hacker seeking to eavesdrop on a specific user has no way of distinguishing the user's traffic from other network data, which makes cyberattacks impractical to carry out. Google is using Private AI Compute to power Pixel's Recorder transcription app. According to the search giant, the system enables Recorder to provide transcription summaries in more languages than would otherwise be possible. Private AI Compute also underpins Magic Cue, a new set of Pixel features that help users find data stored in Google services.
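
The attestation step described above can be illustrated with a simplified sketch. Real attestation relies on a hardware-signed report; the example below only compares a reported software digest against a list of trusted digests and refuses the connection on a mismatch. The names `TRUSTED_DIGESTS`, `is_trusted`, and `connect` are hypothetical and are not part of any Google API.

```python
import hashlib
import hmac

# Hypothetical stand-in for a server build the client is willing to trust.
TRUSTED_BUILD = b"example-server-build-v1"
TRUSTED_DIGESTS = {hashlib.sha256(TRUSTED_BUILD).hexdigest()}


def is_trusted(reported_digest: str) -> bool:
    """Return True only if the reported digest matches a trusted one."""
    # compare_digest does a constant-time comparison of the two hex strings.
    return any(hmac.compare_digest(reported_digest, d) for d in TRUSTED_DIGESTS)


def connect(reported_digest: str) -> str:
    """Refuse to open a session unless the server passes the check."""
    if not is_trusted(reported_digest):
        raise ConnectionRefusedError("attestation failed: unknown server build")
    return "session established"
```

In this sketch a server reporting the digest of `TRUSTED_BUILD` is accepted, while any other digest causes `connect` to raise before any user data would be sent.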

[14]

Google's New Private AI Compute Takes Gemini Tasks to the Cloud in...

As a behind-the-scenes part of today's Pixel Feature Drop, Google is introducing Private AI Compute. This new AI processing platform aims to take Gemini tasks that would typically happen on-device and move them to the cloud to give them more power while keeping them private. Google has an entire blog post explaining what Private AI Compute is, but really, the idea here is that making AI more helpful, speedy, and proactive requires more computational power than your device can offer. That's where the cloud and Google's vast network of data centers can come into play. Google believes it can trigger Gemini tasks through the cloud in private to process on-device-like tasks in a hurry and be more helpful. For now, the power of Private AI Compute will show up in Magic Cue on Pixel 10 devices, where Google hopes to show even "more timely" suggestions in the apps it integrates with. Google is also using Private AI Compute to summarize transcriptions for a wider range of languages in the Recorder app. And that's it at this time, but you can imagine they'll push more and more to the cloud over time. On a privacy note, since the word "Private" is in the name, Google says this is a "secure, fortified space for processing your data that keeps your data isolated and private to you." This all supposedly happens within a "trusted boundary" that houses your personal info, your unique insights, and how you use them under "an extra layer of security and privacy." Google is saying specifically that all of the sensitive data processed by Private AI Compute is accessible only to you, and not even to Google.

[15]

Google Says Private AI Compute Will Keep Your Conversations With Gemini Secure

Recorder app now uses this platform to summarise transcriptions

Google has introduced a new cloud-backed platform designed to enable advanced AI features on devices without compromising user privacy. Similar to Apple's Private Cloud Compute, this new system, dubbed Private AI Compute, allows data processing in a secure, encrypted environment, ensuring sensitive information stays protected. Google's Private AI Compute feature debuts with the Pixel 10's Magic Cue and Pixel Recorder apps. It allows Google AI features to use Gemini cloud models for the AI experience.

Google Brings Private AI Compute With Privacy in Focus

In a blog post on Tuesday, Google announced Private AI Compute, a new cloud platform that blends the power of its Gemini AI models with the privacy and security typically associated with on-device processing. The company says this approach allows users to benefit from advanced AI capabilities while keeping their data secure and private. Google claims its new Private AI Compute platform ensures that personal data remains accessible only to the user, and that not even Google can access it. The system enables faster, AI-driven responses by handling tasks in the cloud. It's designed to help users find what they need, receive smart suggestions, and take action. Private AI Compute runs on Google's stack, powered by the company's custom Tensor Processing Units (TPUs) with Titanium Intelligence Enclaves (TIE). The company states that this design enables Google AI features to use its most capable and intelligent Gemini models in the cloud, while maintaining privacy. Google confirmed that with Private AI Compute, Magic Cue on the latest Pixel 10 phones is getting improvements. The on-device AI feature is confirmed to provide more timely and relevant suggestions with this upgrade. Additionally, the Recorder app now uses this platform to summarise transcriptions in more languages, enhancing its usability for a global audience.
Google says this is just the beginning as Private AI Compute unlocks new possibilities for delivering new AI experiences by combining on-device processing with advanced cloud models. As mentioned, the Private AI Compute feature closely mirrors Apple's Private Cloud Compute, which offers similar privacy-focused cloud-based AI processing.

[16]

Google 'Private AI Compute' offers Enhanced Data Privacy in Cloud AI Processing

Google has been actively developing privacy-enhancing technologies (PETs) to improve a wide array of AI-related applications. The company has now announced its next step in this commitment with the launch of Private AI Compute in the cloud, a new AI processing platform. This platform is designed to combine the power of capable Gemini models from the cloud with security and privacy assurances typically associated with on-device processing. Google states that this initiative is part of its ongoing efforts to deliver AI with safety and responsibility at the core. As AI evolves to become more helpful, personal, and proactive -- moving beyond simple requests to anticipating needs and handling tasks -- it increasingly requires advanced reasoning and computational power. According to Google, this demand sometimes exceeds the capacity of on-device processing alone. Private AI Compute was developed to address this challenge, aiming to unlock the full speed and power of cloud-based Gemini models for AI experiences. Crucially, the company emphasizes that the platform ensures personal data remains private to the user and is not accessible to any other party, not even Google. The goal is to provide users with faster, more helpful responses, smarter suggestions, and easier actions. Google positions Private AI Compute as its next evolution in AI processing technology, building upon existing security and privacy safeguards guided by the company's Secure AI Framework, AI Principles, and Privacy Principles. The platform is described as a secure, fortified space for processing sensitive data, ensuring the data remains isolated and private to the user. This trusted boundary processes the same type of sensitive information users might expect to be handled on-device, protected by an additional layer of security and privacy on top of existing AI safeguards. Private AI Compute enables on-device features to perform with extended capabilities while maintaining their privacy assurance. 
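The company highlights two initial applications of this technology: Magic Cue on Pixel 10 phones, which can offer more timely suggestions, and the Recorder app on Pixel, which can summarize transcriptions in a wider range of languages. Google concludes that this platform "opens up a new set of possibilities for helpful AI experiences" by enabling the use of both on-device and advanced cloud models for sensitive use cases.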

[17]

Google's Private AI Compute Merges Cloud Power with Total Privacy

Google Reveals Private AI Compute: Cloud-Level Gemini Intelligence with On-Device Privacy Assurance

Google is introducing Private AI Compute, a cloud-based AI processing platform that promises the power of Gemini models with the privacy of on-device processing. It's a defining step in Google's long drive to balance the capability of AI with the protection of users' data. The tech giant, in its announcement, assures that the platform will enable AI to become much more personal, anticipatory of user needs, and able to offer personalized suggestions, while keeping private data protected. Leveraging the cloud brings advanced reasoning and computational strength that traditional on-device models can't achieve.

[18]

Private AI Compute explained: How Google plans to make powerful AI private

Verified attestation ensures Google can't access or track private computations.

For years, artificial intelligence has wrestled with a paradox: the most capable models need vast cloud power, but users want the privacy of local computing. Google's new initiative, Private AI Compute, aims to dissolve that trade-off by reengineering how computation itself happens. This isn't a new product or setting; it's an architectural rethink. The idea is simple but radical: run powerful AI models in the cloud, but make it mathematically and technically impossible for Google or anyone else to see what's inside.

At the heart of this system are secure enclaves, isolated hardware environments built into Google's custom Tensor Processing Units (TPUs). Whenever your device sends data for an AI task, say, summarising a conversation or generating a response, it doesn't go to a general cloud server. It enters a Titanium Intelligence Enclave (TIE), a sealed section of the chip that's cryptographically locked. Only your device holds the keys to encrypt or decrypt the data. Even Google's engineers can't access the content inside. The AI model runs within that bubble, produces the result, and the data is deleted immediately after processing. This means that the power of large Gemini models can be used without the exposure risks of traditional cloud processing - a crucial step toward scalable, private AI.

To prove this privacy isn't just theoretical, Google uses remote attestation. Each request sent to Private AI Compute must pass a cryptographic check that verifies the enclave is genuine, secure, and unmodified. If anything about the environment is tampered with, the computation won't start. In other words, users don't have to trust Google's word; the system proves its own integrity. It's privacy that's verifiable by design, not promised by policy.

Private AI Compute also redefines how devices and the cloud share responsibility. Your phone remains the controller - holding your identity, keys, and permissions - while the enclave performs the heavy lifting. This ensures that personal identifiers never leave the device. What travels to the cloud is the task, not the user. For example, when a Pixel phone uses features like Magic Cue or the Recorder summariser, the Gemini model might process voice data remotely, but the link between your voice and your Google account stays local and protected.

Google's design embeds protection across multiple levels. This layered architecture means privacy isn't an add-on; it's built into every stage of computation. Traditional privacy-preserving computation often slows AI to a crawl. Google's innovation lies in maintaining performance: the AI doesn't need to process encrypted data, it processes inside an already private zone. That allows the same speed and reasoning depth of large Gemini models while meeting high privacy standards. It's the cloud, reimagined as a private vault.

Private AI Compute is Google's attempt to reconcile the growing need for powerful AI with society's demand for privacy. By combining cryptographic verification, secure enclaves, and layered security, it creates a path where intelligence and confidentiality can coexist. If successful, it could redefine how AI systems are trusted, not just by promising privacy, but by proving it in code and silicon. And that, more than any new app or feature, might be Google's most transformative innovation yet.

[19]

Google steps up AI privacy game with Private AI Compute: What it is and why it matters

Private AI Compute ensures that your personal data stays private to you and is not accessible to anyone else, not even Google.

Google has announced Private AI Compute, a new platform that promises to bring powerful AI features to users without compromising their privacy. The idea is to combine the strength of Google's Gemini AI models in the cloud with the same security and privacy protections users expect from on-device processing. AI is becoming more personal and proactive, helping users not just respond to requests, but also anticipate needs, offer suggestions, and take actions automatically. However, this level of intelligence often needs more computing power than what's available on a phone or laptop. That's where Private AI Compute comes in: it lets devices connect with cloud models securely, giving faster and smarter responses while ensuring that personal data remains private.

Google describes Private AI Compute as a "secure, fortified space for processing your data that keeps your data isolated and private to you." According to the tech giant, it ensures that your personal data stays private to you and is not accessible to anyone else, not even Google. The system uses encryption and remote attestation to ensure that data stays protected from the moment it leaves your device to when it's processed in the cloud. Google is already using Private AI Compute to improve features on its devices. For example, Magic Cue on Pixel 10 phones will now offer more timely and relevant suggestions, and the Recorder app can summarize transcripts in more languages.

"This is just the beginning. Private AI Compute opens up a new set of possibilities for helpful AI experiences now that we can use both on-device and advanced cloud models for the most sensitive use cases," Google explains.


Google unveils Private AI Compute, a secure cloud platform that promises local-level privacy while harnessing cloud computing power for advanced AI features. The system uses Trusted Execution Environments and hardware security measures to process sensitive data.

Google's Answer to Privacy-First Cloud AI

Google has unveiled Private AI Compute, a new cloud-based platform designed to deliver advanced AI capabilities while maintaining the privacy and security standards of on-device processing [1]. The service represents Google's attempt to reconcile users' growing privacy concerns with the computational demands of increasingly sophisticated AI applications [3].

The platform bears striking similarities to Apple's Private Cloud Compute, reflecting an industry-wide shift toward privacy-preserving cloud AI solutions [2]. According to Google, Private AI Compute creates a "secure, fortified space" that processes sensitive user data with the same privacy guarantees as local processing while unlocking the full computational power of Google's Gemini cloud models [5].

Source: Digit

Technical Architecture and Security Measures

Private AI Compute operates on Google's custom Tensor Processing Units (TPUs) with integrated secure elements, creating what the company calls Titanium Intelligence Enclaves (TIE) [1]. The system employs AMD-based Trusted Execution Environments (TEE) that encrypt and isolate memory from the host system, theoretically preventing access to user data by anyone, including Google itself [4].

The platform incorporates multiple layers of security protection. User devices connect directly to the protected environment via encrypted links, with the system supporting peer-to-peer attestation and encryption between trusted nodes [5]. The infrastructure is designed to be "ephemeral," meaning user inputs, model inferences, and computations are discarded immediately after each session completes, preventing potential data recovery by attackers who might gain privileged access [5].
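
The "ephemeral" session lifecycle can be pictured with a toy sketch: data lives in a scratch buffer for the duration of one request and is overwritten when the request ends. This is only an illustration of the lifecycle under that assumption. Python gives no guarantees about wiping memory, and `ephemeral_session` and `count_words` are hypothetical names, with `count_words` standing in for model inference.

```python
from contextlib import contextmanager


@contextmanager
def ephemeral_session():
    """Yield a scratch buffer that is zeroed and emptied when the session ends."""
    scratch = bytearray()
    try:
        yield scratch
    finally:
        # Overwrite the contents in place, then drop them.
        for i in range(len(scratch)):
            scratch[i] = 0
        scratch.clear()


def count_words(text: bytes) -> int:
    """Stand-in for inference: count space-separated words in the input."""
    with ephemeral_session() as scratch:
        scratch.extend(text)
        result = scratch.count(b" ") + 1
    # Only the result leaves the session; the input buffer is already empty.
    return result
```

The design choice shown here is that only the computed result survives the `with` block, while the input held in the buffer does not.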

Source: Android Authority

Addressing the Edge Computing Limitation

The introduction of Private AI Compute addresses a fundamental limitation in current AI deployment strategies. While Google has emphasized the power of on-device neural processing units (NPUs) in devices like Pixel phones, these chips cannot match the computational capabilities required for advanced AI features [1].

Google's hybrid approach recognizes that agentic AI features, which anticipate user needs and complete complex tasks, require significantly more processing power than mobile devices can provide [2]. Features like Magic Cue, which contextually surfaces information from email and calendar apps, and enhanced language support for the Recorder app will benefit from this increased computational capacity [3].

Source: Droid Life


Security Assessment and Expert Concerns

NCC Group conducted an independent security assessment of Private AI Compute between April and September 2025, discovering several potential vulnerabilities [5]. The assessment identified a timing-based side channel in the IP blinding relay component that could potentially "unmask" users under specific conditions, though Google considers this low risk due to the multi-user system's inherent noise.

Security experts remain cautiously optimistic about the technology while noting potential concerns. Kaveh Razavi from ETH Zürich highlighted that previous attacks have successfully leaked information from AMD SEV-SNP systems and compromised TEEs with physical access [4]. The hardened TPU platform, while potentially more secure, has received less public security scrutiny compared to established TEE implementations.

Industry Context and Privacy Implications

The launch comes amid growing consumer skepticism about AI privacy practices. According to a recent Menlo Ventures survey, 71 percent of Americans who haven't adopted AI cite data privacy concerns as their primary reason [4]. A Stanford study revealed that six major AI companies, including Google, appear to use user chat data for model training by default and retain this data indefinitely [4].

Google's move toward privacy-preserving cloud AI reflects broader industry recognition that consumer trust is essential for AI adoption. The company has committed to publishing cryptographic digests of application binaries used by Private AI Compute servers and plans to expand its Vulnerability Rewards Program to cover the new platform [4].

References

Summarized by

Navi

[1]

[4]
