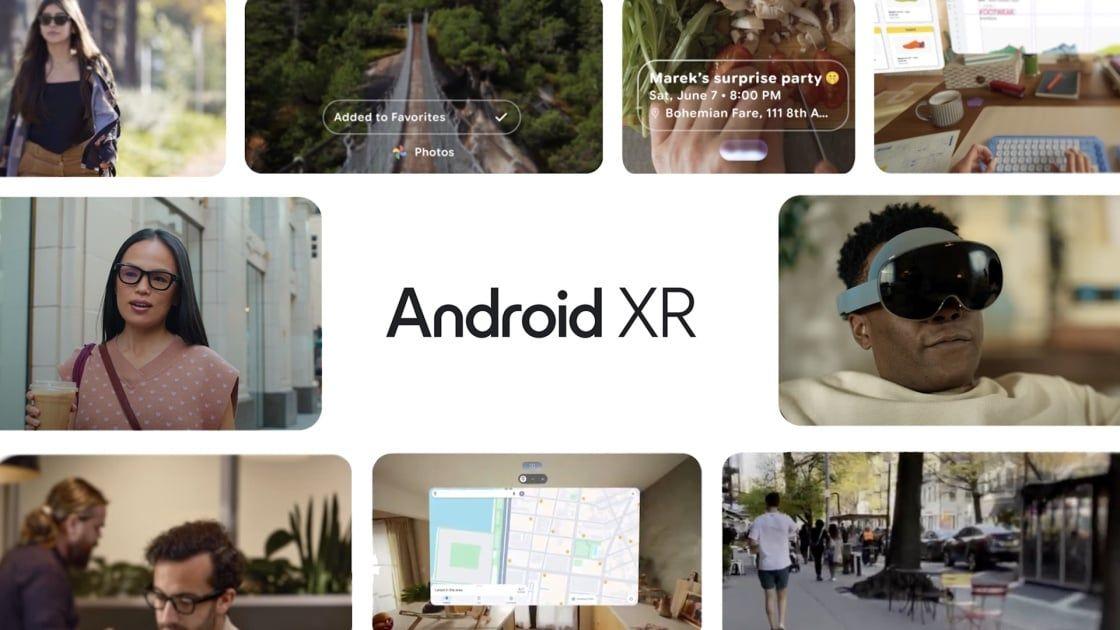

Google's Android XR Glasses: A Glimpse into the Future of Smart Eyewear

8 Sources

[1]

I Hated Smart Glasses, but Google's Android XR Let Me See a New Future

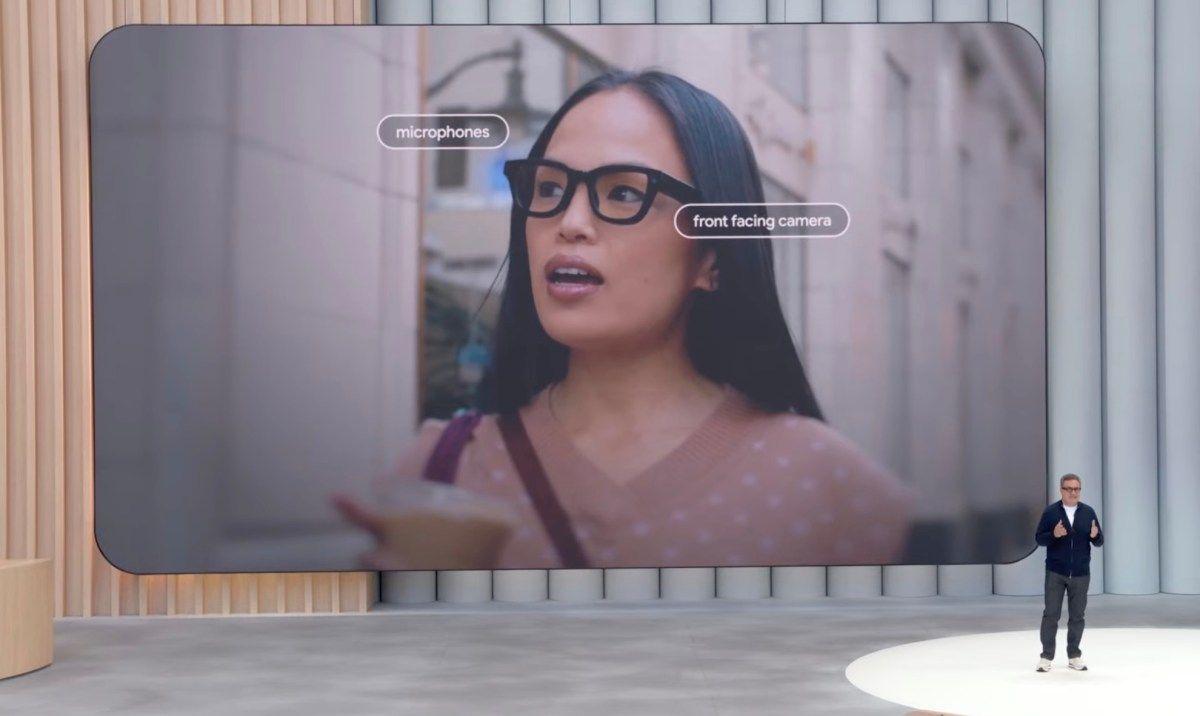

Patrick's play The Cowboy is included in the Best American Short Plays 2011-12 anthology. He co-wrote and starred in the short film Baden Krunk that won the Best Wisconsin Short Film award at the Milwaukee Short Film Festival.

For 5 minutes on Tuesday, I felt like Benji in Mission: Impossible -- Rogue Nation. But instead of wearing smart glasses at the Vienna State Opera and hearing Tom Cruise's voice, I was standing in a 5-by-5-foot wooden shed at Google's I/O developer conference with Gemini AI in my ear. I got to test out a pair of Android XR glasses that look like something Poindexter from Revenge of the Nerds would wear. In the right lens, there's tiny white text displaying the time and weather: 2:24 p.m., 73 degrees. If this were all that smart glasses could do, I'd be happy. But that was just the home screen. Next, I used the prototype frames to take a photo and view a full-color preview, right on one of the lenses. It was wild to simultaneously see a picture and then the actual world through it. The futuristic frames are a very early prototype of the Android XR glasses that Google is making in partnership with Samsung and Qualcomm. In nearly every way, these frames look like normal prescription glasses. In fact, Google announced it'll work with Warby Parker (my go-to glasses brand for years) and swanky South Korean eyeglass brand Gentle Monster (Beyoncé and Rihanna wear them) for actual frame designs when they ship. Unlike regular glasses, these frames are loaded with tech. There's a microphone and speaker that can be used to dictate prompts for Gemini and listen to the responses. On the top edge of the temple, there's a physical camera shutter button. You can interact with the sides of the frames. And the glasses are packed with sensors that interpret your movements as input, so that Google Maps, for instance, shows you directions, no matter which direction you're looking. 
Until now, I've shrugged off other smart glasses like an annoying invite to a friend's improv comedy show. They don't seem worth the effort and price. However, these may be the turning point. Google is striving to make Android XR frames stir up interest beyond just early adopters looking for an alternative to Meta's Ray-Ban smart glasses. During my brief encounter, I left thinking that there's definitely potential for wider appeal here, especially if Google can get more people to try them. The controls seemed intuitive, like a natural extension of an Android phone. Years from now, I envision going to the eye doctor and being asked if you want the optional Android XR and Gemini addition -- in much the way we are now asked if we want a coating for blue light. The standout feature to me is the tiny display on the right lens of Google's glasses. But describing how the display works on these frames approaches Defcon 1 levels of technical mastery, so I enlisted some help. As Luke Skywalker has Yoda, I have CNET's Scott Stein, who's seen it all when it comes to AR and VR glasses and headsets. Turns out he got to try a similar prototype of these glasses last year. "These glasses have a single display in the right lens, projected in via a Micro LED chip on the arm onto etched waveguides on a small square patch on the lens glass," Stein told me. This display is where the magic happens. Not only can it show an Android XR interface and animations, but it does so in color -- even with the few photos that I got to take. I was also impressed with how the UI disappeared when I wasn't directly using it. Google's Gemini is built into XR, so I can ask my glasses to give me more information on whatever I'm looking at. In the Lilliputian-size demo shed, I asked Gemini about a couple of paintings on the wall. I could hear responses via the speaker that's nestled in the glasses' temple, but those around me couldn't hear anything. It definitely made me feel like a spy. 
Then I got to try Google Maps. I never knew getting directions could be so enjoyable. I saw a circular map with street names and an arrow pointing in the direction I should go. As I moved, the little map rotated. It felt a bit like moving the camera in a video game with a controller to get a better view. And that was it. Any initial skepticism I had for smart glasses was wiped away. But I do have questions. How long does a pair of these glasses last on a single charge? Am I supposed to use these as my everyday frames? Should I have a second regular pair of glasses to wear when I charge them so that I can see? And how much is a pair going to cost me? Will there be a rise in people walking into walls because they're distracted by whatever they're interacting with on their lenses? Google doesn't have much to share with me yet, so I guess we'll find out more in the coming months and years. I spent about 5 minutes in the frames, but if you want to know more, check out this more in-depth look at Android XR by CNET's resident smart glasses expert, Scott Stein.

[2]

I wore Google's XR glasses, and they already beat my Ray-Ban Meta in 3 ways

Google unveiled a slew of new AI tools and features at I/O, dropping the term Gemini 95 times and AI 92 times. However, the best announcement of the entire show wasn't an AI feature; rather, the title went to one of the two hardware products announced -- the Android XR glasses. For the first time, Google gave the public a look at its long-awaited smart glasses, which pack Gemini's assistance, in-lens displays, speakers, cameras, and mics into the form factor of traditional eyeglasses. I had the opportunity to wear them for five minutes, during which I ran through a demo of using them to get visual Gemini assistance, take photos, and get navigation directions. As a Meta Ray-Bans user, I couldn't help but notice the similarities and differences between the two smart glasses -- and the features I now wish my Meta pair had. The biggest difference between the Android XR glasses and the Meta Ray-Bans is the inclusion of an in-lens display. The Android XR glasses have a display that is useful in any instance involving text, such as when you get a notification, translate audio in real time, chat with Gemini, or navigate the streets of your city. The Meta Ray-Bans do not have a display, and although other smart glasses such as Hallidays do, the interaction involves looking up at the optical module placed on the frame, which makes for a more unnatural experience. The display is limited in what it can show, as it is not a vivid display. The ability to see elements beyond text adds another dimension to the experience. For example, my favorite part of the demo was using the smart glasses to take a photo. After clicking the button on top of the lens, I was able to take a photo in the same way I do with the Meta Ray-Bans. 
However, the difference was that after taking the picture, I could see the results on the lens in color and in pretty sharp detail. Although being able to see the image wasn't particularly helpful, it did give me a glimpse of what it might feel like to have a layered, always-on display integrated into your everyday eyewear, and all the possibilities. Google has continually improved its Gemini Assistant by integrating its most advanced Gemini models, making it an increasingly capable and reliable AI assistant. While the "best" AI assistant ultimately depends on personal preference and use case, in my experience testing and comparing different models over the years, I've found Gemini to outperform Meta AI, the assistant currently used in Meta's Ray-Ban smart glasses. My preference is based on several factors, including Gemini's more advanced tools, such as Deep Research, advanced code generation, and more nuanced conversational abilities, which are areas where Gemini currently holds an advantage over Meta AI. Another notable difference is in content safety. For example, Gemini has stricter guardrails around generating sensitive content, such as images of political figures, whereas Meta AI is looser. It's still unclear how many of Gemini's features will carry over to the smart glasses, but if the full experience is implemented, I think it would give the Android smart glasses a competitive edge. Although visually they do not look very different from the Meta Ray-Bans in the Wayfarer style, Google's take on XR glasses felt noticeably lighter than Meta's. As soon as I put them on, I was a bit shocked by how much lighter they were than I expected. While a true comfort test would require wearing them for an entire day, and there is also the possibility that by the time the glasses reach production, they become heavier, at this moment, it seems like a major win. 
If the glasses can maintain their current lightweight design, it will be much easier to fully take advantage of the AI assistance they offer in daily life. Wearing them for extended periods wouldn't sacrifice comfort, especially around the bridge of the nose and behind the ears. Ultimately, these glasses act as a bridge between AI assistance and the physical world. That connection only works if you're willing and able to wear them consistently.

[3]

Android XR Could Be the Future of Smart Glasses -- But Where Are the Products?

I've been PCMag's home entertainment expert for over 10 years, covering both TVs and everything you might want to connect to them. I've reviewed more than a thousand different consumer electronics products including headphones, speakers, TVs, and every major game system and VR headset of the last decade. I'm an ISF-certified TV calibrator and a THX-certified home theater professional, and I'm here to help you understand 4K, HDR, Dolby Vision, Dolby Atmos, and even 8K (and to reassure you that you don't need to worry about 8K at all for at least a few more years). When Google announced Android XR six months ago, I was excited. An Android-powered platform for augmented reality (AR), virtual reality (VR), and mixed reality (XR) offering similar functionality to the Apple Vision Pro but open to third-party developers across a wide range of devices? Sounds great! I still think it does, and could be exactly what the industry needs, but after the Google I/O keynote this week, it's pretty clear any real product using the platform won't be coming out for a while. Google's developer conference was predictably Gemini-focused, but some Android XR news also came out of it. Actually, most of that news had to do with AI, too. The Google I/O keynote showed off features and use cases for Gemini and other AI tools on Android XR. It'll be able to analyze your surroundings, translate languages -- all of that useful stuff your phone can do -- but that's not why Android XR matters. It's a big deal because it's a platform for different devices made by different companies. Right now, VR headsets and smart glasses are in a tough spot, and a unified platform could be just what they need. Let's look at why that's the case, how Android XR may be able to help, and what kind of timeline we're looking at for this effort. 

The Fractured State of VR Headsets and Smart Glasses

I've been following VR/XR headsets for more than a decade, ever since the Oculus Rift brought VR into broad public attention. I've also been reviewing smart glasses for the last several years as they've steadily evolved from clunky headsets made for enterprise and industrial work into comfortable personal displays. Both of those categories have seen very uneven progress. Full VR/XR headsets have fully matured with options for everyone from budget shoppers (the $300 Meta Quest 3S) to early adopters willing to shell out a ton of cash for the most advanced technology available (the $3,500 Apple Vision Pro). There are multiple systems to choose from, each with large libraries of games and software. These headsets have seen a lull over the last year, though. When the Apple Vision Pro was met with a less-than-enthusiastic response from the public (mostly due to very understandable sticker shock, but partly because its all-metal body and less-than-ideally-balanced head strap made it uncomfortable to wear for long periods), headset makers seem to have gotten a bit more cagey. Even though it's fully developed as a technology, VR is still very much a niche novelty. Just the act of putting a big visor over your face and clearing out enough space in your home to fumble around without tripping on furniture means it requires some physical dedication that most other electronics don't demand. Moreover, if you want to use VR, you're probably not stepping foot outside the house for a while, or at least until eye or neck strain kicks in. These headsets are for enthusiasts, and despite Apple and Meta trying their best, almost no one looks at them as multi-purpose devices for work, play, and communication. Then there are smart glasses. 
The term covers so wide and amorphous a range of devices that following every kind is like the technological version of herding cats, but for simplicity, I'm going to mostly be talking about what I call video smart glasses or wearable displays, which are often referred to as AR glasses. They're glasses that use angled lenses to project a big virtual screen across your eyes, and include the XReal One and the Viture Pro. Unlike headsets, their displays cover a limited field of view and look more like a huge TV or theater screen rather than an all-encompassing picture that replaces the outside world. They're also much less bulky and easier to put on and take off. I use AR glasses much more often than I use VR/XR headsets, including for work. They work great as portable, private monitors you can slip onto your face and use to watch a movie, play a game, or write an article through whatever phone or computer it's connected to. The problem with them is that that isn't actually AR. Augmented reality requires reality actually being a factor, like overlaying useful information about whatever you're looking at or making a virtual object appear as if it's right in front of you relative to your surroundings. Some smart glasses do have motion sensors or even cameras to implement these features, but they're very underbaked and I've yet to find a pair that doesn't feel like a limited tech demo built into what's otherwise just a wearable monitor. There's also a growing subcategory of waveguide-based AR glasses like the Even Realities G1 and the Vuzix Z100 with transparent lenses that offer genuine augmented reality and are lighter and more comfortable than video smart glasses. The trade-off is that their displays are much, much more limited in field of view and resolution. More importantly, if video glasses' AR features feel a bit half-baked, waveguide glasses have only just been put in the oven. 
In other words, there are several different types of wearable displays with different levels of maturation, and each product currently on the market runs entirely off of whatever system its manufacturer developed specifically for that device. Put simply, it's kind of a mess, and the lighter and more convenient the design is, the less polished the experience is. That's what makes Android XR so important.

Android XR: The Great Unifier?

The big reason those smart glasses I mentioned are in a very unpolished state is that each manufacturer is doing the bulk of its development in-house. Even Realities, Viture, Vuzix, and XReal are all doing their own thing to enable AR features. Even Realities and Vuzix models have completely different smartphone apps, voice and touch controls, and display formats, even when they're doing similar things like translating a language or giving directions. Meanwhile, Viture and XReal are making devices that are effectively just Android media players with different head-tracking AR interfaces bolted on them (the XReal Beam Pro is an example). Everyone is doing their own thing their own way, and that's a lot of redundant effort to get similar smart glasses to do similar things. It's a nightmare for third-party developers, too, since apps need to be developed separately (or modified heavily) to work on all of these different platforms. Android XR is a unified system developed to work on VR/XR headsets and smart glasses of different builds and form factors. It's a framework that standardizes many software and hardware functions across the board, like Android does with smartphones. That's a big deal, for the same reason that Android was a huge deal for smartphones: It ensures that different devices and apps from multiple manufacturers and developers all function with some level of consistency. Before Android, non-iPhone smartphones were heavily fragmented, with almost every phone maker coming up with their own interface. 
Android provided a consistent, stable base for phone makers not based in Cupertino to build on. That's something smart glasses have fundamentally lacked, and while VR/XR headsets have more mature ecosystems, they're still largely locked to the manufacturer. That's what Android XR can be. Consistency between devices doesn't mean much to buyers, but software does, and Android XR means the potential for a proper app store. Developers will be able to make one app and be confident that it will work in multiple headsets or smart glasses with the right hardware components. That's a bigger benefit than you might think; keep in mind that smartphones as a concept didn't really explode until the App Store opened on the iPhone 3G, only months before Android's equivalent (Android Market, now Google Play) opened. When a ton of software is available and the user can count on it running on their device, no matter who might have built it, that's a huge deal.

As For Hardware, Don't Expect Much This Year

For all of this promise, you probably won't be reaping the benefits of Android XR with a shiny new headset or smart glasses anytime soon. Google announced some new Android XR manufacturing partners during its I/O keynote, but nothing in the way of an actual device slated for release this year, at least by my standards. The big hardware announcement was smart glasses company XReal revealing its own Android XR prototype and development kit called Project Aura. That's the second big Android XR headset to be announced after Samsung's Project Moohan, and while Moohan is clearly taking a run at the Apple Vision Pro, XReal's Aura looks to have a much more streamlined form factor, like a pair of traditional glasses. Neither of them is really intended for the average buyer, though. They're development kits, which manufacturers and software developers will reference to make other headsets, glasses, and apps for those headsets and glasses. 
To be fair, you'll still technically be able to order at least one of them (Project Moohan is slated to go on sale later this year), but that's often the case for development kits. If you're willing to spend the likely hefty price tag, you can order these first-generation devices. You'll just be running into an almost nonexistent app ecosystem, more of a development playground that will hopefully, eventually be filled with easy-to-use software to take advantage of all that hardware. That was the case for the first Oculus Rift, Microsoft HoloLens, and is also true for Vuzix's innovative but unpolished Z100 smart glasses. I would be shocked if Project Moohan is sold for less than $2,000, and even more shocked if it has an app store with more than 40 true XR apps (not just 2D Android apps projected in front of you, a trick we saw with the Apple Vision Pro). It'll be an early adopter toy. Google also announced its partnership with glasses manufacturers Gentle Monster and Warby Parker to make Android XR wearables. No products were mentioned at all, and considering they're mostly frame-and-lens folk and the displays of smart glasses are incredibly complex to build, I see Android XR implementation going one of two ways. One: Google does the overwhelming bulk of hardware development, including the lenses for the display, and the resulting smart glasses are ultimately Google devices with a Gentle Monster or Warby Parker veneer on them. Or, two: They go audio-only, using the Android XR framework as a front-end for basically just Gemini through built-in microphones and speakers. Maybe a camera for snapshots and video will be added, but that's it. That's a much easier technical ask, and we've seen that approach done already by Carrera with its now-discontinued Amazon Echo Frames collaboration, and by Ray-Ban with its Meta-powered smart glasses. No screen, just sound and, for the Ray-Ban Metas, a camera. 
At that point, any augmented reality is thrown out the window, so I wouldn't consider it a real demonstration of what Android XR could mean for smart glasses. Some sort of display is necessary, because that's a fundamental part of AR: It visually augments the reality you're looking at. I would love to see AR smart glasses get the same kind of huge jump in usability that Android afforded for smartphones, because right now they're too nascent to be compelling to the average person. I think Android XR is the best chance for that to happen, and if it also manages to pull VR/XR headsets out of the enthusiast-only ditch they're currently lodged in, that would be all for the better. It's just not happening this year.

[4]

Making Android XR Glasses That Don't Suck Is Going to Be Very Hard

Google has its work cut out if it's going to make the smart glasses we've been waiting for. I'll be honest with you: I think smart glasses are awesome. I don't mean to say they work well all the time, or that they don't do half of what they should do at this stage, or that they don't need a massive dose of computing power. Those things are all true in my honest opinion. But even despite having to scream at my pair of Meta Ray-Bans to play Elton John this morning, only for them to repeatedly try and play John Prine instead, I love them for one thing: their potential. And Google -- if I/O 2025 is any indication -- sees that same potential, too. It spent quite some time during its keynote laying out its vision for what smart glasses might be able to do via its Android XR smart glasses platform and supporting hardware made with Xreal. The possibilities are enticing. With Android XR and smart eyewear like Project Aura glasses, the form factor could actually get to the next level, especially with optical passthrough that can superimpose stuff like turn-by-turn navigation in front of your eyeballs. If you were watching the live demo yesterday, it probably looked like we were all right on the cusp of being able to walk around with the futuristic pair of smart glasses we've been waiting for, and partly that impression is true. Smart glasses -- ones that do all the fun stuff we want them to do -- are imminent, but unfortunately for everyone, Google included, they're likely going to be a lot harder to perfect than tech companies would have us believe. When it comes to glasses, there are still constraints, and quite a few of them. For one, if smart glasses are going to be the future gadget we want them to be, they need some kind of passthrough. Technologically speaking, we're already there. 
While Gizmodo's Senior Editor, Consumer Tech, Ray Wong, only got about a minute to try Project Aura at Google I/O he can attest to the fact that the glasses do indeed have an optical display that can show maps and other digital information. The problem isn't the screen, though; it's what having a screen might entail. I'm personally curious about what having optical passthrough might do to battery life. Size is still the biggest issue when it comes to functional smart glasses, which is to say that it's difficult to cram all of that architecture we need into frames that don't feel much heavier than a regular pair of glasses. Think about it: you need a battery, compute power, drivers for speakers, etc. All of those things are relatively small nowadays, but they add up. And if your glasses can suddenly do a lot more than they used to, you're going to need a battery that reflects those features, especially if you're using optical passthrough, playing audio, and querying your onboard voice assistant all at once. A bigger battery means more weight and a bulkier look, however, and I don't really care to walk around with Buddy Holly-lookin' frames on my face all day. Then there's the price. Meta's Ray-Ban smart glasses are already relatively expensive ($300+, depending on the model) despite not even having a screen in them. I don't have the projected bill of materials for Project Aura or Google's prototype Android XR glasses, obviously, but I am going to assume that these types of optical displays are going to cost a little bit more than your typical pair of sunglasses. That's compounded by the fact that the supply chain and manufacturing infrastructure around making those types of displays likely isn't very robust at this point, given people really only started buying smart glasses in the mainstream about five minutes ago. I sound like I'm naysaying here, and maybe I am a little bit. 
Shrinking tech down, historically speaking, has never been easy, but these are some of the most powerful and resourced companies in the world making this stuff, and I don't for a second doubt that they can figure it out. It's not the acumen that would prevent them; it's the commitment. I want to believe that the level of focus is there, given all of the time Google devoted to showcasing Project Aura yesterday, but it's hard to say for sure. Google, despite the behemoth it is, is being pulled in various directions -- for example, AI, AI, and AI. I can only hope in this case that Google actually puts its money where its futuristic spy glasses are and actually powers Project Aura through to fruition. C'mon, Google, daddy needs a HUD-enabled map for biking around New York City.

[5]

Google's upcoming XR glasses are the smart eyewear I've been waiting for

Google Glass debuted in 2013. If you asked me over a decade ago, I would've sworn that glasses would be the predominant wearable technology. However, like many projects before and since, the company abandoned Google Glass, and I've been waiting for a suitable replacement ever since. The Apple Vision Pro and other AR wearables are impractical, and if Android XR is going to catch on, it won't be with goggle-style products like Samsung's upcoming Project Moohan. Thankfully, Google gave us a glimpse of the future toward the end of its Google I/O 2025 keynote event. Google XR glasses are the AI technology I've been waiting for. They combine the potential power of AI with a form factor we'll actually use. They bridge the practical gap that's frustrated me about all these fancy AI gimmicks. XR glasses can work, but Google must stick with them this time.

It's finally practical AI for the everyday

Project Astra has been around for a year, but Google's XR glasses are the first implementation I'm excited about. I have my misgivings about AI for multiple reasons. No company has convinced me it's a positive addition to the user experience and that it provides any value, certainly not the $20 a month Google wants to charge us. However, Android XR on Google's XR glasses demonstrates the blueprint for AI success. I want an AR overlay of my environment. I want conversations translated in front of my eyes in real time, and my XR device to show me directions to my next destination. 
I don't love the idea that my smart glasses will remember things I viewed earlier or will keep track of where I'm going, but at least it's a convenience, and I know I've wanted to remember a sign or phone number I saw earlier but didn't think of writing it down. I could glance at a business card or information for a restaurant and have my Google XR glasses remember the contact's phone number or help me make a reservation. If I'm giving up privacy for AI, I want it to be useful, and the Google XR glasses are the first time I've thought about making a compromise.

Form factor matters
No one wants to wear a tablet on their head

I am impressed by the technology in the Apple Vision Pro, and I'm sure that Samsung's Project Moohan will be an interesting headset, but I fear they'll share a similar fate. No one wants to walk around or be stuck with a large headset on them for any length of time, and no one wants to be connected to a battery pack. I get the entertainment and productivity possibilities for them, but they'll remain marginal products because they aren't a natural extension of the human experience -- technology should enhance, not intrude. Glasses that function normally when I don't need the technology but can provide an AI experience when I do are the form factor I've been waiting for. As a glasses wearer, it's a natural transition. Even if you don't wear prescription lenses, I'm sure you've worn a pair of sunglasses. It's the same reason why flip phones are superior to book-style, larger folding devices. I don't need to change how I use a smartphone to enjoy a flip phone, and I wouldn't need to change how I wear glasses or go about my day to use the Google XR glasses -- when adoption is easier, sales are greater.

AI still has to work
We need to see plenty of improvement

Of course, a map overlay is only good if it points me in the right direction, and a real-time translation only provides value if it's accurate. 
I don't yet have the faith in AI that I'd need for Google's XR glasses to work. Every Google I/O 2025 demo went off without a hitch, but as any current Google Gemini user will tell you, the reality is a mixed bag. I get numerous wrong answers weekly from Gemini Live, and AI assistants on multiple platforms still need to be rigorously double-checked. I hold my breath when I ask any AI model for information I need to act on, and if I'm going to trust AI to provide me with an overlay of the world I see, I will need greater accuracy. Nothing will ever be perfect, and mistakes will always creep into any model, but if Google wants me to treat its various agentic AI features as a personal assistant with personal context, then I need to trust it. It's the same standard I'd hold to a human assistant or friend, and if Google wants me to offload things I'd usually handle myself, I need to know it's up to the task. I'm excited about Google XR glasses, but reliability is vital.

We're on the right track

Plenty of questions remain unanswered. Google's glasses can't have a minuscule battery life or a terrible Bluetooth connection, but at least I approve of the direction. The technology might take a while to catch up. Still, Android XR makes me believe we're headed towards a usable, valuable AI experience, which is something I can't say about Samsung's Galaxy AI or other Google Gemini functions. We're close to the future; I just hope Google doesn't give up.

[6]

Google takes a second shot on smart glasses

Why it matters: Augmented reality glasses are shaping up to be a key interface for AI-powered computing.

Driving the news: At Google I/O, the company offered more details on its prototype Android XR glasses and announced partnerships with Samsung and Warby Parker.

How it works: Google's XR glasses connect via a nearby smartphone, while Aura glasses tether to a small custom computer powered by a Qualcomm processor.

Flashback: Introduced in 2013, the "explorer edition" of Google Glass cost a whopping $1,500 despite its limited function and awkward design, including a small display that was housed in a prominent acrylic block. Those who bought the device were often mocked, with some dubbing wearers "glassholes."

Between the lines: Reflecting on Google Glass, Sergey Brin said the product was too expensive and too distracting, among other flaws. Hassabis said modern AI gives the glasses a purpose.

Zoom in: I got to try both the prototype Android XR glasses and Project Moohan, and both felt like a glimpse of the future and solid competitors to the products on the market.

Yes, but: Google's augmented reality glasses aren't coming this year, while Meta is expected to offer a version of its Ray-Bans with a small screen included.

[7]

Google's second swing at smart glasses seems a lot more sensible

The standard spec for Android XR glasses covers devices with and without an in-lens screen. Google didn't go into details about the display technology involved, but it's the most obvious path to a functional improvement over the current Meta Ray-Bans. Lately I've been using Gemini with Google's Pixel Buds Pro 2 -- supposedly "built for Gemini AI" -- and while it works well for what it is, I think AI chat interfaces are a lot less compelling when you can't read the responses. Beyond Gemini, the ability to see notifications, Maps directions and real-time language translations could make a huge difference to the smart glasses experience. Design is obviously critical to any wearable technology, and Meta made a strong move by tying up dominant eyewear company EssilorLuxottica -- parent of Ray-Ban and many other brands -- to a long-term partnership. The Meta Ray-Bans would not be anywhere near as popular if they weren't Ray-Bans. In response, Google has partnered with U.S. retailer Warby Parker and hip South Korean brand Gentle Monster for the initial batch of Android XR glasses. No actual designs have been shown off yet, and it'll be hard to compete with the ubiquitous Wayfarer, but the announcement should ensure a solid range of frames that people will actually want to wear.

[8]

Google Android XR Glasses: Feature Real-Time Translation and 3D Navigation

What if your glasses could do more than just help you see? Imagine walking through a bustling foreign market, effortlessly reading signs in another language, or navigating an unfamiliar city with directions projected seamlessly into your field of view. This isn't science fiction -- it's the promise of Google's new Android XR glasses. Powered by the innovative Google Gemini AI, these wearable devices aim to transform how we interact with the world around us. With features like real-time translation, contextual memory, and 3D navigation, they blur the line between the digital and physical worlds. But as new as they seem, these glasses also spark pressing questions: Can they balance innovation with privacy? Will their battery life keep up with their ambitions? And perhaps most importantly, are we ready for a future where AI is literally in our line of sight?

AI Grid explores how Google's Android XR glasses could redefine wearable technology, offering a glimpse into a future where AI-powered wearables are as ubiquitous as smartphones. From the sleek, fashion-forward design to the fantastic potential of features like hands-free notifications and object recognition, these glasses promise to enhance both personal convenience and professional efficiency. Yet, challenges like cost, accessibility, and ethical concerns loom large. Whether you're a tech enthusiast curious about the next big thing or a skeptic questioning the implications of such advancements, this deep dive into Google's latest innovation will leave you pondering the possibilities -- and the pitfalls -- of a world where AI is always within view.

The Android XR glasses are available in three distinct models, each designed to meet the diverse needs of users. All models are compatible with prescription lenses, ensuring accessibility for users with vision needs. The design emphasizes subtlety, with a lightweight frame that closely resembles traditional eyewear. Collaborations with fashion-forward brands such as Gentle Monster and Warby Parker add a stylish dimension, appealing to both tech enthusiasts and casual users. This combination of functionality and aesthetics ensures the glasses fit seamlessly into everyday life.

At the core of the Android XR glasses lies Google Gemini AI, which powers an array of intelligent features designed to enhance your daily experiences. These features aim to streamline your interactions with technology, offering convenience while minimizing distractions. By integrating these capabilities into a wearable device, Google seeks to enhance productivity and connectivity in a way that feels natural and unobtrusive.

The Android XR glasses use the power of Google Gemini AI for advanced contextual understanding and object recognition. To maintain a lightweight design and optimize battery life, much of the processing is offloaded to a connected Android smartphone. This approach ensures the glasses remain comfortable for extended wear without compromising performance.

Google has partnered with Samsung to develop the Android XR platform, ensuring seamless integration with the broader Android ecosystem. This collaboration underscores the glasses' potential to serve as a cornerstone of wearable technology, bridging hardware and software to deliver a cohesive and user-friendly experience. By combining innovative AI with a lightweight and practical design, the glasses aim to strike a balance between innovation and usability.

The Android XR glasses are designed to address a wide range of everyday challenges, offering practical solutions that enhance both personal and professional life. Beyond personal use, the glasses hold significant potential for various industries. In healthcare, surgeons could access real-time data during procedures, while educators might use augmented reality to visualize complex concepts in 3D. Logistics professionals could benefit from hands-free navigation and inventory management. These applications highlight the glasses' versatility and their potential to transform workflows across multiple fields.

Despite their promise, the Android XR glasses face several challenges that could impact their widespread adoption. These challenges emphasize the importance of thoughtful design and transparent communication as Google prepares for a broader release. Addressing these concerns will be critical to ensuring the glasses' success in the market.

The Android XR glasses represent a significant advancement in wearable AI technology. By addressing real-world problems with innovative solutions, they have the potential to redefine how you interact with your environment. Developer access and prototype testing are already underway, signaling that the glasses are nearing readiness for broader adoption. While challenges such as privacy, battery life, and cost remain, the glasses' combination of style, functionality, and innovative technology positions them as a promising product. As Google continues to refine the design and expand its partnerships, the Android XR glasses could pave the way for the future of AI-powered wearables, offering a glimpse into a more connected and intelligent world.

Google unveils prototype Android XR glasses at I/O 2025, showcasing AI-powered features and potential to revolutionize smart eyewear, despite challenges ahead.

Google Unveils Android XR Glasses Prototype

At the Google I/O 2025 developer conference, the tech giant unveiled its highly anticipated Android XR glasses prototype, showcasing a potential future for smart eyewear [1]. The glasses, developed in partnership with Samsung and Qualcomm, aim to integrate artificial intelligence and augmented reality into a familiar form factor [1][2].

Source: Geeky Gadgets

Features and Functionality

The Android XR glasses boast several impressive features:

- In-lens Display: A tiny display on the right lens projects information using Micro LED technology and etched waveguides [1][2].
- AI Integration: Google's Gemini AI is built into the glasses, allowing users to ask questions about their surroundings [1].
- Camera and Photo Capabilities: Users can take photos and view full-color previews directly on the lens [1].
- Navigation: Google Maps integration provides an intuitive, heads-up navigation experience [1][2].
- Real-time Translation: The glasses offer the potential for real-time language translation [4].

Design and Comfort

Google has prioritized a design that closely resembles traditional eyewear:

- Lightweight Construction: Early prototypes felt noticeably lighter than existing smart glasses options [2].
- Partnerships: Google announced collaborations with Warby Parker and Gentle Monster for frame designs [1].
- Integrated Technology: The frames include microphones, speakers, and various sensors [1].

Challenges and Future Development

Despite the promising prototype, several challenges remain:

- Battery Life: Questions persist about the glasses' battery life and charging solutions [3][5].
- AI Reliability: Current AI models still struggle with accuracy, which is crucial for AR applications [5].
- Price Point: The cost of advanced optical displays may impact affordability [3].
- Privacy Concerns: The always-on nature of smart glasses raises potential privacy issues [5].

Industry Impact and Competition

Google's entry into the smart glasses market could have significant implications:

- Platform Unification: Android XR aims to provide a unified platform for various AR/VR devices [3].
- Competition: The glasses will compete with offerings from Meta, Apple, and others in the growing wearable display market [2][3].
- Developer Opportunities: Google is opening up the platform to third-party developers, potentially spurring innovation [3].

Source: PC Magazine

Public Reception and Expectations

Initial reactions to the Android XR glasses have been largely positive:

- Potential Game-Changer: Many see the glasses as a possible turning point for smart eyewear adoption [1][2].
- Natural Integration: The familiar form factor is viewed as a key advantage for everyday use [4][5].
- Cautious Optimism: While excitement is high, there's recognition that perfecting the technology will take time [4][5].

Source: Android Police

As Google continues to refine its Android XR glasses, the tech world eagerly anticipates further developments in this space. The success of these smart glasses could mark a significant shift in how we interact with AI and augmented reality in our daily lives.

Summarized by Navi
