Google's Gemini 2.0 Flash: A Game-Changer in AI Image Generation and Editing

9 Sources

[1]

Farewell Photoshop? Google's new AI lets you edit images by asking

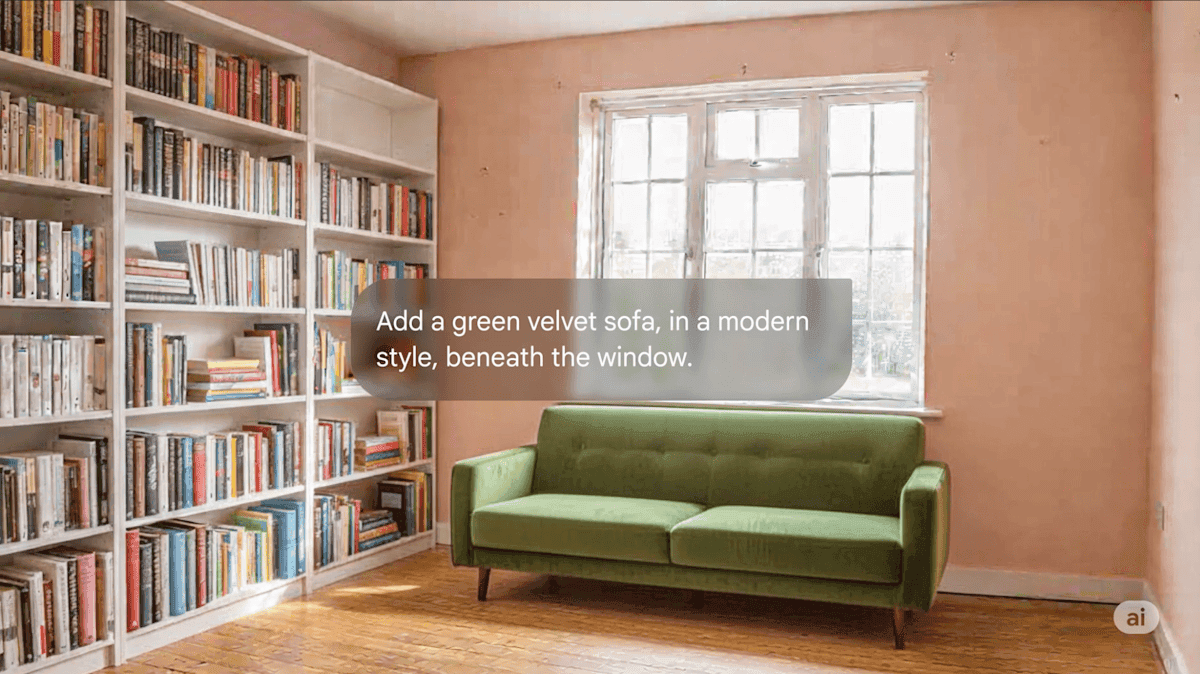

There's a new Google AI model in town, and it can generate or edit images as easily as it can create text -- as part of its chatbot conversation. The results aren't perfect, but it's quite possible everyone in the near future will be able to manipulate images this way. Last Wednesday, Google expanded access to Gemini 2.0 Flash's native image generation capabilities, making the experimental feature available to anyone using Google AI Studio. Previously limited to testers since December, the multimodal technology integrates both native text and image processing capabilities into one AI model. The new model, titled "Gemini 2.0 Flash (Image Generation) Experimental," flew somewhat under the radar last week, but it has been garnering more attention over the past few days due to its ability to remove watermarks from images, albeit with artifacts and a reduction in image quality. That's not the only trick. Gemini 2.0 Flash can add objects, remove objects, modify scenery, change lighting, attempt to change image angles, zoom in or out, and perform other transformations -- all to varying levels of success depending on the subject matter, style, and image in question. To pull it off, Google trained Gemini 2.0 on a large dataset of images (converted into tokens) and text. The model's "knowledge" about images occupies the same neural network space as its knowledge about world concepts from text sources, so it can directly output image tokens that get converted back into images and fed to the user. Incorporating image generation into an AI chat isn't itself new -- OpenAI integrated its image generator DALL-E 3 into ChatGPT last September, and other tech companies like xAI followed suit. But until now, every one of those AI chat assistants called on a separate diffusion-based AI model (which uses a different synthesis principle than LLMs) to generate images, which were then returned to the user within the chat interface. 
In this case, Gemini 2.0 Flash is both the large language model (LLM) and AI image generator rolled into one system. Interestingly, OpenAI's GPT-4o is capable of native image output as well (and OpenAI President Greg Brockman teased the feature at one point on X last year), but that company has yet to release true multimodal image output capability. One possible reason is that true multimodal image output is very computationally expensive, since each image either inputted or generated is composed of tokens that become part of the context that runs through the image model again and again with each successive prompt. And given the compute needs and size of the training data required to create a truly visually comprehensive multimodal model, the output quality of the images isn't necessarily as good as diffusion models just yet.

Another reason OpenAI has held back may be "safety"-related: In a similar way to how multimodal models trained on audio can absorb a short clip of a sample person's voice and then imitate it flawlessly (this is how ChatGPT's Advanced Voice Mode works, with a clip of a voice actor it is authorized to imitate), multimodal image output models are capable of faking media reality in a relatively effortless and convincing way, given proper training data and compute behind it. With a good enough multimodal model, potentially life-wrecking deepfakes and photo manipulations could become even more trivial to produce than they are now.

Putting it to the test

So, what exactly can Gemini 2.0 Flash do? Notably, its support for conversational image editing allows users to iteratively refine images through natural language dialogue across multiple successive prompts. You can talk to it and tell it what you want to add, remove, or change. It's imperfect, but it's the beginning of a new type of native image editing capability in the tech world. We gave Gemini 2.0 Flash a battery of informal AI image-editing tests, and you'll see the results below.
For example, we removed a rabbit from an image in a grassy yard. We also removed a chicken from a messy garage. Gemini fills in the background with its best guess. No need for a clone brush -- watch out, Photoshop!

Removing a rabbit from a photograph with Gemini 2.0 Flash. Google / Benj Edwards

Removing a chicken from a photograph with Gemini 2.0 Flash. Google / Benj Edwards

We also tried adding synthesized objects to images. Being always wary of the collapse of media reality called the "cultural singularity," we added a UFO to a photo the author took from an airplane window. Then we tried adding a Sasquatch and a ghost. The results were unrealistic, but this model was also trained on a limited image dataset (more on that below).

Adding a UFO to a photograph with Gemini 2.0 Flash. Google / Benj Edwards

Adding a sasquatch to a photograph with Gemini 2.0 Flash. Google / Benj Edwards

Adding a ghost to a photograph with Gemini 2.0 Flash. Google / Benj Edwards

We then added a video game character to a photo of an Atari 800 screen (Wizard of Wor), resulting in perhaps the most realistic image synthesis result in the set. You might not see it here, but Gemini added realistic CRT scanlines that matched the monitor's characteristics pretty well.
Gemini can also warp an image in novel ways, like "zooming out" of an image into a fictional setting or giving an EGA-palette character a body, then sticking him into an adventure game.

"Zooming out" on an image with Gemini 2.0 Flash. Google / Benj Edwards

Giving the author a much-needed body with Gemini 2.0 Flash. Google / Benj Edwards

Inserting the embodied author into a simulated adventure game with Gemini 2.0 Flash. Google / Benj Edwards

And yes, you can remove watermarks. We tried removing a watermark from a Getty Images image, and it worked, although the resulting image is nowhere near the resolution or detail quality of the original. Ultimately, if your brain can picture what an image is like without a watermark, so can an AI model. It fills in the watermark space with the most plausible result based on its training data.

And finally, we know you've likely missed seeing barbarians beside TV sets (as per tradition), so we gave that a shot. Originally, Gemini didn't add a CRT TV set to the barbarian image, so we asked for one. Then we set the TV on fire.

All in all, it doesn't produce images of pristine quality or detail, but we literally did no editing work on these images other than typing requests. Adobe Photoshop currently lets users manipulate images using AI synthesis based on written prompts with "Generative Fill," but it's not quite as natural as this. We could see Adobe adding a more conversational AI image editing flow such as this one in the future.
Multimodal output opens up new possibilities

Having true multimodal output opens up interesting new possibilities in chatbots. For example, Gemini 2.0 Flash can play interactive graphical games or generate stories with consistent illustrations, maintaining character and setting continuity throughout multiple images. It's far from perfect, but character consistency is a new capability in AI assistants. We tried it out and it was pretty wild -- especially when it generated a view of a photo we provided from another angle.

Creating a multi-image story with Gemini 2.0 Flash, part 1. Google / Benj Edwards

Creating a multi-image story with Gemini 2.0 Flash, part 2. Notice the alternative angle of the original photo. Google / Benj Edwards

Creating a multi-image story with Gemini 2.0 Flash, part 3. Google / Benj Edwards

Text rendering represents another potential strength of the model. Google claims that internal benchmarks show Gemini 2.0 Flash performs better than "leading competitive models" when generating images containing text, making it potentially suitable for creating content with integrated text. From our experience, the results weren't that exciting, but they were legible.

Despite Gemini 2.0 Flash's shortcomings so far, the emergence of true multimodal image output feels like a notable moment in AI history because of what it suggests if the technology continues to improve.
If you imagine a future, say 10 years from now, where a sufficiently complex AI model could generate any type of media in real time -- text, images, audio, video, 3D graphics, 3D-printed physical objects, and interactive experiences -- you basically have a holodeck, but without the matter replication. Coming back to reality, it's still "early days" for multimodal image output, and Google recognizes that. Recall that Flash 2.0 is intended to be a smaller AI model that is faster and cheaper to run, so it hasn't absorbed the entire breadth of the Internet. All that information takes a lot of space in terms of parameter count, and more parameters means more compute. Instead, Google trained Gemini 2.0 Flash by feeding it a curated dataset that also likely included targeted synthetic data. As a result, the model does not "know" everything visual about the world, and Google itself says the training data is "broad and general, not absolute or complete." That's just a fancy way of saying that the image output quality isn't perfect -- yet. But there is plenty of room for improvement in the future to incorporate more visual "knowledge" as training techniques advance and compute drops in cost. If the process becomes anything like we've seen with diffusion-based AI image generators like Stable Diffusion, Midjourney, and Flux, multimodal image output quality may improve rapidly over a short period of time. Get ready for a completely fluid media reality.
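The article's point about compute cost (every image becomes tokens that stay in the context and are re-read on each later prompt) can be made concrete with a little arithmetic. The following is a rough sketch, not a description of how Gemini actually accounts for tokens: the function name and the per-prompt and per-image token counts are hypothetical, and it assumes the full context is re-read on every turn with no caching.

```python
def total_tokens_processed(turns, text_tokens_per_turn, image_tokens_per_image):
    """Estimate how many context tokens a model reads over a multi-turn image chat.

    Assumptions (hypothetical, for illustration only): each turn adds one text
    prompt and one generated image to the context, and the whole context is
    re-read on every turn.
    """
    context = 0  # tokens currently held in the conversation context
    total = 0    # cumulative tokens read across all turns
    for _ in range(turns):
        context += text_tokens_per_turn    # the new prompt joins the context
        total += context                   # the model reads everything so far
        context += image_tokens_per_image  # the generated image joins the context
    return total


# Hypothetical numbers: 50 tokens per prompt, 1,000 tokens per image.
with_images = total_tokens_processed(3, 50, 1000)
text_only = total_tokens_processed(3, 50, 0)
print(with_images, text_only)
```

Under these made-up numbers, three editing turns read 3,300 tokens with images in the context versus 300 without, which illustrates why long image-editing conversations get expensive quickly.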

[2]

Google's native multimodal AI image generation in Gemini 2.0 Flash impresses with fast edits, style transfers

Google's latest open source AI model Gemma 3 isn't the only big news from the Alphabet subsidiary today. No, in fact, the spotlight may have been stolen by Google's Gemini 2.0 Flash with native image generation, a new experimental model available for free to users of Google AI Studio and to developers through Google's Gemini API. It marks the first time a major U.S. tech company has shipped multimodal image generation directly within a model to consumers. Most other AI image generation tools were diffusion models (image specific ones) hooked up to large language models (LLMs), requiring a bit of interpretation between two models to derive an image that the user asked for in a text prompt. By contrast, Gemini 2.0 Flash can generate images natively within the same model that the user types text prompts into, theoretically allowing for greater accuracy and more capabilities -- and the early indications are this is entirely true.

Gemini 2.0 Flash, first unveiled in December 2024 but without the native image generation capability switched on for users, integrates multimodal input, reasoning, and natural language understanding to generate images alongside text. The newly available experimental version, gemini-2.0-flash-exp, enables developers to create illustrations, refine images through conversation, and generate detailed visuals based on world knowledge.

* Text and Image Storytelling: Developers can use Gemini 2.0 Flash to generate illustrated stories while maintaining consistency in characters and settings. The model also responds to feedback, allowing users to adjust the story or change the art style.
* Conversational Image Editing: The AI supports multi-turn editing, meaning users can iteratively refine an image by providing instructions through natural language prompts. This feature enables real-time collaboration and creative exploration.
* World Knowledge-Based Image Generation: Unlike many other image generation models, Gemini 2.0 Flash leverages broader reasoning capabilities to produce more contextually relevant images. For instance, it can illustrate recipes with detailed visuals that align with real-world ingredients and cooking methods.
* Improved Text Rendering: Many AI image models struggle to accurately generate legible text within images, often producing misspellings or distorted characters. Google reports that Gemini 2.0 Flash outperforms leading competitors in text rendering, making it particularly useful for advertisements, social media posts, and invitations.

Initial examples show incredible potential and promise

Googlers and some AI power users took to X to share examples of the new image generation and editing capabilities offered through Gemini 2.0 Flash experimental, and they were undoubtedly impressive. Google DeepMind researcher Robert Riachi showcased how the model can generate images in a pixel-art style and then create new ones in the same style based on text prompts. AI news account TestingCatalog News reported on the rollout of Gemini 2.0 Flash Experimental's multimodal capabilities, noting that Google is the first major lab to deploy this feature. User @Angaisb_ aka "Angel" showed in a compelling example how a prompt to "add chocolate drizzle" modified an existing image of croissants in seconds -- revealing Gemini 2.0 Flash's fast and accurate image editing capabilities via simply chatting back and forth with the model. YouTuber Theoretically Media pointed out that this incremental image editing without full regeneration is something the AI industry has long anticipated, demonstrating how easy it was to ask Gemini 2.0 Flash to edit an image to raise a character's arm while preserving the entire rest of the image.
Former Googler turned AI YouTuber Bilawal Sidhu showed how the model colorizes black-and-white images, hinting at potential historical restoration or creative enhancement applications. These early reactions suggest that developers and AI enthusiasts see Gemini 2.0 Flash as a highly flexible tool for iterative design, creative storytelling, and AI-assisted visual editing.

The swift rollout also contrasts with OpenAI's GPT-4o, which previewed native image generation capabilities in May 2024 -- nearly a year ago -- but has yet to release the feature publicly, allowing Google to seize an opportunity to lead in multimodal AI deployment. As user @chatgpt21 aka "Chris" pointed out on X, OpenAI has in this case "los[t] the year + lead" it had on this capability for unknown reasons. The user invited anyone from OpenAI to comment on why.

My own tests revealed some limitations with the aspect ratio -- it seemed stuck in 1:1 for me, despite asking in text to modify it -- but it was able to switch the direction of characters in an image within seconds.

A significant new tool for developers and enterprises

While much of the early discussion around Gemini 2.0 Flash's native image generation has focused on individual users and creative applications, its implications for enterprise teams, developers, and software architects are significant.

AI-Powered Design and Marketing at Scale: For marketing teams and content creators, Gemini 2.0 Flash could serve as a cost-efficient alternative to traditional graphic design workflows, automating the creation of branded content, advertisements, and social media visuals. Since it supports text rendering within images, it could streamline ad creation, packaging design, and promotional graphics, reducing the reliance on manual editing.

Enhanced Developer Tools and AI Workflows: For CTOs, CIOs, and software engineers, native image generation could simplify AI integration into applications and services.
By combining text and image outputs in a single model, Gemini 2.0 Flash allows developers to build:

Since the model also supports conversational image editing, teams could develop AI-driven interfaces where users refine designs through natural dialogue, lowering the barrier to entry for non-technical users.

New Possibilities for AI-Driven Productivity Software: For enterprise teams building AI-powered productivity tools, Gemini 2.0 Flash could support applications like:

How to deploy and experiment with this capability

Developers can start testing Gemini 2.0 Flash's image generation capabilities using the Gemini API. Google provides a sample API request to demonstrate how developers can generate illustrated stories with text and images in a single response:

By simplifying AI-powered image generation, Gemini 2.0 Flash offers developers new ways to create illustrated content, design AI-assisted applications, and experiment with visual storytelling.
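Google's sample request is not reproduced in this text. As a stand-in, here is a minimal sketch of how such a request body might be assembled for the model named above, gemini-2.0-flash-exp. The endpoint pattern, the `responseModalities` field, the helper name `build_story_request`, and the prompt are assumptions for illustration and should be checked against Google's current Gemini API documentation. The sketch only builds the request and does not send it or handle API keys.

```python
import json

# Assumed endpoint pattern for the Gemini REST API; verify against current docs.
API_URL = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    "{model}:generateContent"
)


def build_story_request(prompt, model="gemini-2.0-flash-exp"):
    """Return (url, json_body) for a combined text-and-image generation request.

    Hypothetical helper: it only assembles the payload and performs no network I/O.
    """
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        # Assumption: requesting both modalities lets the model interleave
        # story text and illustrations in a single response.
        "generationConfig": {"responseModalities": ["Text", "Image"]},
    }
    return API_URL.format(model=model), json.dumps(body)


url, body = build_story_request(
    "Write a short illustrated story about a lighthouse keeper. "
    "For each scene, generate an image."
)
print(url)
print(body)
```

To actually call the service, the body would be sent as an HTTP POST with an API key from Google AI Studio, and any returned image parts would need to be decoded before display.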

[3]

I tried Gemini's new AI image generation tool - here are 5 ways to get the best art from Google's Flash 2.0

AI art generation has been evolving at a wild pace, and Google just threw another big contender into the mix through its Gemini Flash 2.0. You can play with the new image creation tool in Google's AI Studio. Gemini Flash is, as the name suggests, very fast, notably faster than DALL-E 3 and other image creators. That speed might mean lower quality images, but that's not the case here, especially because of all the changes and upgrades to the model's image production ability. Still, if you want really good results, you must know how to talk to the AI. After plenty of trial and error, I've put together five tips for getting the absolute best art out of Gemini Flash 2.0. Some of these may seem similar to advice about other AI art creators, because they are, but that doesn't make them less useful in this context.

The most interesting new feature for Gemini Flash's image creation is that it isn't just good for one-off illustrations; it can actually help you create a visual story by generating a series of related images with consistent style, settings, and moods. To get started, you just have to ask it to tell you a story and how often you want an illustration to go with the action. The result will include those images accompanying the text. For my project, I asked the AI to "Generate a story of a heroic baby dragon who protected a fairy queen from an evil wizard in a 3d cartoon animation style. For each scene, generate an image." I saw the above start to appear. And, if there's an issue, you can rewrite any of the bits of the story and the model will regenerate the image accordingly.

If you tell Gemini to make "a dog in a park," you might get a blurry golden retriever sitting somewhere vaguely green. But if you say, "A fluffy golden retriever sitting on a wooden bench in Central Park during autumn, with red and orange leaves scattered on the ground" -- you get exactly what you're picturing. AI models thrive on detail. The more you provide, the better your image will be.
So for the image above, instead of just asking for a futuristic looking city, I requested "A retro-futuristic cityscape at sunset, with neon signs glowing in pink and blue, flying cars in the sky, and people walking in retro-future style outfits." Seven seconds later, the result came in. One of my favorite things about the new Gemini Flash is that you can get conversational with it without losing much of the speed. That means you don't have to get everything right in one go. After generating an image, you can literally chat with the AI to make edits. Want to change the colors? Add a character? Make the lighting moodier? Just ask. In the image set above, I started by asking for "A cozy reading nook with a fireplace, bookshelves filled with novels, and a big comfy armchair." I then refined it by asking it to "Make it nighttime with soft, warm lighting," then followed up by asking it to "Add a sleeping cat on the armchair," and finished by requesting the AI "Give the room a vintage, Victorian aesthetic." The final result on the left looks almost exactly like what I imagined, and makes Gemini feel like an art assistant, one capable of adjusting to what I want without starting over from scratch every time. Google has boasted that Gemini is full of real-world knowledge, which means you can get historical accuracy, realistic cultural details, and true-to-life imagery if you ask for it. Of course, that requires being specific. For example, if you prompt it for "a Viking warrior," you might get something that looks more like a Game of Thrones character. But if you say, "A historically accurate Viking warrior from the 9th century, wearing detailed chainmail armor, a round wooden shield, and a traditional Norse helmet" -- you'll get something much more precise. As a test I asked the AI to make "An ancient Mayan city at sunrise, with towering stone pyramids, lush jungle surroundings, and people dressed in traditional Mayan garments." 
It's not perfect, but it looks a lot more like the real thing than previous versions, which would sometimes come back with almost an Egyptian pyramid.

Most AI image models have long struggled with rendering text, turning words into illegible scribbles. Even the better models today that can do so take a while, and getting it right can take a few tries. But Gemini Flash is shockingly good at integrating text into images quickly and legibly. Being very specific can help, though. That's how I generated the image above by asking the AI to "Make a vintage-style travel poster that says 'Visit London' in bold, retro typography, featuring a stylized illustration of the city."
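The conversational editing workflow described above (generate an image, then refine it with follow-up requests) amounts to sending the model a growing history of prompts and returned images. Below is a minimal sketch of how that history might be structured, using the reading-nook prompts from this article. The `role`/`parts`/`inlineData` layout is assumed from the Gemini REST API's general message format, the helper names are hypothetical, and the image bytes are placeholders, not real model output.

```python
import base64


def user_turn(text):
    """One user message containing a text instruction."""
    return {"role": "user", "parts": [{"text": text}]}


def model_image_turn(image_bytes, mime_type="image/png"):
    """One model message carrying a previously returned image (base64-encoded)."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "model",
        "parts": [{"inlineData": {"mimeType": mime_type, "data": encoded}}],
    }


def build_edit_history(prompts, returned_images):
    """Interleave prompts with the images returned so far.

    The final prompt has no image yet; it is the pending edit request.
    """
    history = []
    for i, prompt in enumerate(prompts):
        history.append(user_turn(prompt))
        if i < len(returned_images):
            history.append(model_image_turn(returned_images[i]))
    return history


prompts = [
    "A cozy reading nook with a fireplace, bookshelves filled with novels, "
    "and a big comfy armchair.",
    "Make it nighttime with soft, warm lighting",
    "Add a sleeping cat on the armchair",
    "Give the room a vintage, Victorian aesthetic.",
]
placeholder_images = [b"placeholder image bytes"] * 3  # stand-ins for real outputs
history = build_edit_history(prompts, placeholder_images)
print(len(history), history[-1]["role"])
```

Because every earlier image stays in the history, the model can adjust the existing picture instead of starting over, which matches the "art assistant" behavior the article describes.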

[4]

Google's New AI Model Might Make Photoshop Redundant for Beginners

Google may have just dealt a death blow to Adobe. With the launch of Gemini's native image generation, Gemini 2.0 Flash combines multimodal input, advanced reasoning, and natural language processing (NLP) to generate high-quality visuals. According to Google, the model's key capabilities include text and image generation, conversational image editing, and text rendering.

Since its launch on Google AI Studio, many users have expressed excitement about Gemini's multimodal capabilities. They have praised its ability to generate and edit images using simple text prompts, such as creating passport photos from existing pictures, adding decorations to a house, or changing a person's clothing in an image. Some are even using it as a virtual try-on for clothes to help reduce returns.

"You can now design your house with AI. I asked Google Gemini, 'Make the furniture go away,' and then, 'Decorate it with a modern chic aesthetic.' It did it on the first try," said Deedy Das from Menlo Ventures, who recently played with the model. Das added that an interior designer would have charged $5,000-$10,000 for this in the US. "You can get infinite reps for free." He even went on to say that Google's new AI image editing model "is going to kill 99% of Photoshop".

Moreover, many users are turning to it for watermark removal. "Gemini 2.0 Flash, now available in Google's AI Studio, makes editing images incredibly easy with just a text prompt," said Tanay Jaipuria, partner at Wing Venture Capital. He added that it also can remove watermarks from images.

Similarly, another user on X commented that Gemini 2.0 Flash might just be the "death of professional image editing". He said the new Gemini 2.0 Flash has eliminated the need for hours of Photoshop learning, costly design software, and advanced technical skills. It removes creative limitations and reduces reliance on professional editors, making high-quality image editing faster, easier, and more accessible than ever.
The best part is that Gemini can generate perfect text in images. This means it can be used to create invitation cards and posters. Moreover, it will have numerous applications in the advertising industry, where companies can use it to edit and tweak product visuals effortlessly. AIM tested Gemini by creating a birthday invitation card, and the model generated one with perfectly formatted text. "The level of scene understanding the new Gemini model exhibits is wild. You'd need multiple control nets to get this result, and now it's just a text prompt," said Bilawal Sidhu, a scout at a16z. Gemini can now also be used to teach new concepts to children through visuals. "Mind blown! Gemini 2.0 Flash interleaved image generation is a game changer. I explained AlphaFold to my two-year-old daughter's class for science week on Friday. Prompt: 'Write an illustrated story about AlphaFold for toddlers.' Instant magic!" said Oriol Vinyals, VP of research and deep learning lead at Google DeepMind. At the same time, Adobe is going all in on generative AI. In response to the growing influence of AI-driven tools, the company has been integrating its own generative AI features, powered by Firefly, into Photoshop. These include capabilities like Generative Fill and Generative Expand, which allow users to seamlessly add, remove, or extend elements in images using text prompts. During the recent earnings call, Adobe shared that its AI-first standalone products and add-on features brought in over $125 million by the end of the quarter. "AI represents a generational opportunity to reimagine our technology platforms to serve an increasingly large and diverse customer universe," said Shantanu Narayen, CEO of Adobe. Adobe's recent moves also include expanding Photoshop's accessibility beyond the desktop. In February 2025, Adobe launched a full-fledged Photoshop app for iPhone, bringing many of its desktop-like features to mobile users. 
This app, which includes Firefly-powered AI tools, aims to cater to both professional designers and casual users, offering a free tier with basic functionality and a premium plan for advanced features.

[5]

Google Outpaces OpenAI with Native Image Generation in Gemini 2.0 Flash

Gemini 2.0 Flash integrates multimodal input, reasoning, and natural language processing to generate images. Google has announced the availability of native image output in Gemini 2.0 Flash for developer experimentation. Initially introduced to trusted testers in December, this feature is now accessible across all regions supported by Google AI Studio. "Developers can now test this new capability using an experimental version of Gemini 2.0 Flash (gemini-2.0-flash-exp) in Google AI Studio and via the Gemini API," Google said. OpenAI also announced the same feature for GPT-4o last year, but the company hasn't shipped it yet. Notably, Google isn't using Imagen 3 for generating images, it is fully native Gemini. Gemini 2.0 Flash integrates multimodal input, reasoning, and natural language processing to generate images. According to Google, the model's key capabilities include text and image generation, conversational image editing, and text rendering. "Use Gemini 2.0 Flash to tell a story, and it will illustrate it with pictures while maintaining consistency in characters and settings," the company explained. The model also supports interactive editing, allowing users to refine images through natural language dialogue. Another feature is its ability to use world knowledge for realistic image generation. Google claims this makes it suitable for applications such as recipe illustrations. Moreover, the model offers improved text rendering, addressing common issues found in other image-generation tools. Internal benchmarks indicate that Gemini 2.0 Flash outperforms leading models in rendering long text sequences, making it useful for advertisements and social media content. Google has invited developers to experiment with the model and provide feedback. "We're eager to see what developers create with native image output," the company said. Feedback from this phase will contribute to finalising a production-ready version. 
Google recently also launched Gemma 3, the next iteration in the Gemma family of open-weight models. It is a successor to the Gemma 2 model released last year. The small model comes in a range of parameter sizes -- 1B, 4B, 12B and 27B. The model also supports a longer context window of 128k tokens. It can analyse videos, images, and text, supports 35 languages out of the box, and provides pre-trained support for 140 languages.

[6]

Create Stunning Visuals in Seconds with Google Gemini 2.0

Imagine being able to bring your wildest creative visions to life without ever picking up a paintbrush or mastering complex design software. Whether it's crafting a stunning visual for a presentation, designing a personalized birthday card, or illustrating an entire storybook, the process often feels daunting -- especially if you're not a professional artist or designer. But what if there were a way to skip the steep learning curve and dive straight into creating? That's where Google's Gemini 2.0 steps in, offering an intuitive and accessible solution that transforms your ideas into images with just a few words. At its core, Gemini 2.0 is more than just a tool -- it's a creative partner that listens to your ideas and helps you shape them into something tangible. Using innovative text-to-image generation and seamless editing features, it enables anyone, regardless of skill level, to produce professional-grade visuals in record time. Whether you're a seasoned designer looking to streamline your workflow or someone who's always wished they could translate their imagination into art, this platform opens up a world of possibilities. At the heart of Gemini 2.0 lies its text-to-image generation capability, which allows you to transform written descriptions into vivid, high-quality visuals. By simply typing a prompt such as "a serene lake surrounded by autumn trees at sunset," the AI generates an image that matches your description. If the result doesn't fully align with your vision, you can refine it by adjusting or expanding your prompts. This feature eliminates the need for advanced design skills, making professional-grade image creation accessible to everyone. For those who may lack technical expertise, this tool bridges the gap between imagination and execution, allowing you to bring your ideas to life without the steep learning curve traditionally associated with design software. 
Gemini 2.0 extends beyond image generation by offering robust and user-friendly image editing tools. With simple commands, you can modify existing visuals to suit your needs. For example, you can upload a photo and instruct the AI to "add a rainbow in the background" or "remove the text from the corner." These tools allow you to adjust layouts, change colors, or incorporate new elements effortlessly. This functionality is particularly valuable for tasks such as retouching photos, creating marketing materials, or enhancing personal projects. By streamlining the editing process, Gemini 2.0 saves you time and effort, allowing you to focus on the creative aspects of your work rather than the technical details. One of the standout features of Gemini 2.0 is its ability to support visual storytelling, making it an ideal tool for creating illustrated content such as children's books, graphic novels, or educational materials. By generating sequential images based on your prompts, the AI helps you bring your narratives to life with cohesive and visually engaging scenes. You can guide the AI step by step, making sure that the visuals align with the tone, style, and progression of your story. This feature simplifies the creative process, allowing you to focus on developing compelling narratives while the AI handles the technical execution of the visuals. Whether you're an author, educator, or content creator, this tool offers a streamlined approach to storytelling. Gemini 2.0 enables you to create custom designs that reflect your unique vision. Whether you're designing a poster, a personalized gift, or a social media graphic, the platform allows you to specify details such as color schemes, themes, and text. 
For instance, you can request "a minimalist poster with a mountain silhouette and motivational text," and the AI will generate a polished design tailored to your specifications. This feature is particularly beneficial for small businesses, educators, and hobbyists who need efficient and cost-effective design solutions. By offering a high degree of customization, Gemini 2.0 ensures that your creations are not only visually appealing but also aligned with your specific goals and preferences. To enhance your creative workflow, Gemini 2.0 provides a library of sample media that you can use as a starting point. Alternatively, you can upload your own images for further customization. This flexibility allows you to blend AI-generated content with personal assets, resulting in truly unique creations. For example, you could upload a family photo and instruct the AI to transform it into a watercolor-style painting. This capability enables you to combine technology with personal touches, making your projects more meaningful and distinctive. Gemini 2.0 offers adjustable creativity settings that give you control over the AI's output. By modifying parameters such as "temperature," you can influence the style and complexity of the generated content. Increasing the temperature encourages more abstract and imaginative results, while lowering it ensures precision and consistency. This level of customization allows you to tailor the tool's behavior to suit the specific requirements of your project. Whether you're aiming for bold experimentation or refined accuracy, Gemini 2.0 adapts to your creative vision, providing the flexibility needed for diverse applications. To promote ethical and responsible use, Gemini 2.0 incorporates robust safety features designed to filter inappropriate or harmful outputs. These safeguards make the platform suitable for a wide range of users, including educators, families, and professionals. 
By prioritizing accountability and inclusivity, Google ensures that the platform fosters a safe environment for creativity and innovation. These safety measures not only protect users but also reinforce the platform's commitment to ethical AI development, making it a reliable tool for both personal and professional use. For those eager to push creative boundaries, Gemini 2.0 offers access to experimental AI models. These advanced tools allow you to explore innovative rendering techniques, experiment with unconventional styles, and discover new creative possibilities. Whether you're a professional innovator or a curious hobbyist, these models open doors to fresh ideas and new projects. By providing access to experimental features, Gemini 2.0 encourages exploration and innovation, allowing you to stay at the forefront of AI-driven creativity. Google's Gemini 2.0 represents a significant advancement in visual content creation, combining text-to-image generation, advanced editing tools, and customizable design features into a single, user-friendly platform. By simplifying complex creative processes and offering a wide range of functionalities, it caters to a diverse audience, from professionals to casual users. With its adjustable creativity settings, safety features, and experimental models, Gemini 2.0 enables you to bring your ideas to life efficiently and responsibly. Whether you're crafting a visual story, designing a custom project, or exploring uncharted artistic territory, this platform equips you with the tools to unlock your creative potential and achieve your goals with confidence.
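
The "temperature" setting mentioned in this article can be illustrated with a small, generic sketch. This is not Gemini's implementation; it only assumes the common convention that a model's raw scores (logits) are divided by the temperature before a softmax turns them into probabilities. The function name and the example scores are hypothetical.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a sampling temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate outputs.
scores = [2.0, 1.0, 0.1]
low = softmax_with_temperature(scores, 0.5)   # sharper distribution
high = softmax_with_temperature(scores, 2.0)  # flatter distribution

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

A lower temperature concentrates probability on the top-scoring option, which corresponds to more consistent output; a higher temperature spreads probability toward less likely options, which corresponds to more varied output.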

[7]

I Tried Out Gemini's New Native Image Gen Feature, and It's Fricking Nuts

We have been hearing the term 'natively multimodal' in the AI space for over a year, but companies were slow in unlocking full multimodal capabilities of their AI models until now. Google has finally released its latest "Gemini 2.0 Flash Experimental" model with the ability to generate and edit images natively. Now, you might be wondering, what is the big deal with image generation? AI image generation has been available with all major AI chatbots like ChatGPT for quite some time. Well, when we generate AI images on ChatGPT or Gemini, the prompt is routed to a specialized diffusion-based model like DALL-E 3 or Imagen 3. These models are trained on images and designed only to generate images; they are like an extension to the main AI model and not part of it. However, language-vision models like Gemini are natively multimodal, meaning they can inherently understand, generate, and modify both text and images. Until now, no tech company had made this capability available to users. OpenAI demonstrated its native image generation feature with GPT-4o in 2024, but again, it was never released. With native image generation, you get better consistency as multimodal models are trained on a large dataset of different modalities. As a result, such models boast better understanding of concepts and exhibit broader world knowledge. Beyond image generation, you can seamlessly edit images with simple prompts. For example, you can upload an image and ask the model to add sunglasses, insert legible text, remove objects, and more to the image. And unlike diffusion models, which regenerate the whole image with each new prompt, natively multimodal models maintain consistency across multiple modifications. Currently, the native image generation feature is not available to general users. The Gemini 2.0 Flash Experimental model with native image generation is only available on Google's AI Studio, where it is free to use. 
After previewing the model on AI Studio, it will be released on Gemini for everyone to use in the near future. However, I tried out the new Gemini model with native image generation, and it was quite the exciting experience. First, I started with a visual guide to showcase the consistency of Gemini's native image generation capability. I asked Gemini to create a visual guide on how to make an omelet, generating an image for each step of the process. As you can notice, the results are highly consistent across images with no glitches. Even the bowl is the same in the second image. Finally, you can download the images in 1024 x 680 resolution. This way, you can create a visual guide on anything you want. Next, I asked Gemini to create an aesthetic table and then told it to show the table from the center camera angle. It did a perfect job. After that, I prompted Gemini to add a PlayStation to the table and give me a closer look. Again, Gemini nailed it. The AI model, as you see below, also included a reflection of the PS5 in the mirror behind it. To demonstrate native image editing, I uploaded an image from my gallery and asked Gemini 2.0 to remove the wine glass from the table. Following that, I told Gemini to add mushrooms to the pizza, and it did a wonderful job. Then, I prompted Gemini to add a croissant and there you have AI image editing in full glory, thanks to Gemini's native multimodal capability. Next, I uploaded an image of mine, and asked Gemini to add sunglasses and then add the "Beebom" text on my t-shirt. Both were done quite well. Lastly, I asked Gemini to colorize an image, and it worked really well too. I mean, the image came out more beautiful than it was before, without any weird glitches, artifacting, or part of the image missing. There are many such use cases that you can try with Gemini's new multimodal capability. 
Google has done a commendable job with native image generation and editing, and I'm planning to use it more rigorously in the coming weeks to test its limits. After the release of Veo 2 for video generation and Imagen 3 for specialized image generation, it appears Google has outclassed OpenAI in many areas; not just AI text generation. So, it would be interesting to see what OpenAI does next to reclaim the top spot with ChatGPT.

[8]

Gemini 2.0 Flash AI Image Generator and Editing Guide

Google has unveiled Gemini 2.0 Flash, an innovative AI model that is reshaping the landscape of image generation and editing. Designed to cater to both professionals and enthusiasts, this advanced tool enables users to create, modify, and enhance visuals effortlessly using simple text prompts. Its multimodal capabilities allow seamless integration into diverse workflows, offering a versatile and efficient solution for image manipulation. Whether you are a designer, marketer, or content creator, Gemini 2.0 Flash provides the tools to streamline your creative process and elevate your projects. At its core, Gemini 2.0 Flash is more than just a tool -- it's a creative partner. With its ability to generate, edit, and enhance images using simple text prompts, this innovative AI model opens up a world of possibilities for professionals and hobbyists alike. From applying artistic styles to maintaining character consistency across projects, Gemini 2.0 Flash is packed with features designed to make your creative process not only faster but also more enjoyable. In this overview, Prompt Engineering provides more insight into the new capabilities of this AI model and explores how it's setting a new standard for what's possible in visual storytelling and design. Gemini 2.0 Flash introduces a suite of innovative tools that simplify and enhance image editing. These features are designed to meet the needs of users across various industries, ensuring precision and creativity in every project: These features make Gemini 2.0 Flash a powerful tool for a wide range of creative and professional applications, from producing marketing materials to crafting compelling visuals for storytelling. Gemini 2.0 Flash goes beyond traditional image editing, offering practical solutions for a variety of real-world scenarios. Its capabilities are tailored to meet the demands of industries such as design, advertising, education, and research. 
Here are some of its most impactful applications: These applications demonstrate the versatility of Gemini 2.0 Flash, making it an indispensable tool for professionals seeking to enhance their workflows and achieve high-quality results. Gemini 2.0 Flash is designed with user accessibility and technical integration in mind, so that it can be seamlessly incorporated into existing workflows. The model is available through Google AI Studio and supports API access, making it suitable for both individual users and businesses. Key technical highlights include: These features make Gemini 2.0 Flash a practical and scalable choice for users seeking advanced AI tools that are both powerful and user-friendly. Its integration capabilities ensure that it can adapt to the needs of diverse industries and workflows. Gemini 2.0 Flash is part of Google's broader ecosystem of AI advancements, which includes a range of complementary tools and models designed to enhance productivity and creativity. Some of the most notable innovations include: These developments highlight Google's commitment to advancing AI technology while ensuring practical applications for users across various domains. By integrating these tools into its ecosystem, Google provides a comprehensive suite of solutions that cater to diverse needs. Google's approach to AI development emphasizes a balance between innovation and user accessibility. By offering a diverse range of models and features, the company aims to meet the needs of both individual creators and large enterprises. Key pillars of this strategy include: This strategy positions Google as a leader in the AI space, delivering solutions that are both innovative and practical. By prioritizing usability and versatility, Google ensures that its AI tools can empower users to achieve their creative and professional goals.
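
Since the article mentions API access, here is a minimal sketch of what a request body for a text-prompted image edit might look like. It only builds the JSON payload and sends nothing. The field names (`contents`, `parts`, `inlineData`, `generationConfig`, `responseModalities`) follow our understanding of the public Gemini REST API and should be checked against Google's current documentation. The helper name `build_edit_request` and the placeholder image bytes are hypothetical.

```python
import base64
import json


def build_edit_request(prompt, image_bytes=None, mime_type="image/png", temperature=1.0):
    """Build a JSON-serializable request body (assumed shape, not verified here)."""
    parts = [{"text": prompt}]
    if image_bytes is not None:
        # Images are assumed to travel as base64-encoded inline data.
        encoded = base64.b64encode(image_bytes).decode("ascii")
        parts.append({"inlineData": {"mimeType": mime_type, "data": encoded}})
    return {
        "contents": [{"role": "user", "parts": parts}],
        "generationConfig": {
            "temperature": temperature,
            # Ask for both text and image output (assumed option name).
            "responseModalities": ["TEXT", "IMAGE"],
        },
    }


# Placeholder bytes stand in for a real image file.
payload = build_edit_request("Remove the text from the corner",
                             image_bytes=b"placeholder-image-bytes")
print(json.dumps(payload, indent=2))
```

Keeping payload construction separate from the network call makes the request shape easy to inspect and adjust before it is sent with any HTTP client.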

[9]

Google Unveils Gemini Flash 2.0: Faster, Smarter AI Image Generation

Google has introduced an upgraded AI image generation tool known as Gemini Flash 2.0. This model generates images faster than its competitors. Despite the speed, it still maintains high-quality results. Users can refine outputs and create visually consistent stories. The tool allows generating images in sequence. This feature helps in creating visual stories with consistent styles and settings. Instead of single illustrations, Gemini Flash 2.0 builds a connected narrative. Detailed prompts improve image quality. A simple prompt like "a dog in a park" produces a generic image. A more detailed request, such as "a fluffy golden retriever sitting on a wooden bench in Central Park during autumn," results in a precise image.

Google introduces Gemini 2.0 Flash, a revolutionary AI model that combines native image generation and editing capabilities, potentially challenging traditional image editing software and other AI image generators.

Google Unveils Gemini 2.0 Flash with Native Image Generation

Google has introduced Gemini 2.0 Flash, a groundbreaking AI model that integrates native image generation and editing capabilities directly into its large language model (LLM). This development marks a significant advancement in AI technology, potentially revolutionizing the way we create and manipulate images [1][2].

Key Features and Capabilities

Gemini 2.0 Flash boasts several impressive features:

- Native Image Generation: Unlike other AI chatbots that rely on separate diffusion models, Gemini 2.0 Flash can generate images directly within its neural network [1].
- Conversational Image Editing: Users can iteratively refine images through natural language dialogue, making the editing process more intuitive and accessible [2].
- World Knowledge-Based Image Generation: The model leverages its broad understanding to create contextually relevant images, particularly useful for applications like recipe illustrations [2].
- Improved Text Rendering: Gemini 2.0 Flash outperforms competitors in generating legible text within images, making it ideal for creating advertisements, social media posts, and invitations [2].

Practical Applications

The model's versatility opens up numerous possibilities:

- Visual Storytelling: Gemini 2.0 Flash can generate illustrated stories while maintaining consistency in characters and settings [2].
- Design and Marketing: It offers a cost-efficient alternative to traditional graphic design workflows, potentially streamlining content creation for marketing teams [2].
- Image Editing: Users can perform complex edits like removing objects, changing lighting, or adding elements simply by describing the desired changes [1][3].

Comparison with Competitors

Google's release of Gemini 2.0 Flash puts it ahead of competitors like OpenAI, which has yet to release its native image generation capability for GPT-4o [2][5]. This move could potentially challenge the dominance of specialized image editing software like Adobe Photoshop, especially for beginners and casual users [4].

Implications and Concerns

While the technology is impressive, it raises some concerns:

- Deepfake Potential: The ease of manipulating images could make the creation of convincing deepfakes more accessible [1].
- Impact on Creative Industries: The tool's capabilities may disrupt traditional graphic design and image editing professions [4].

User Experiences and Reactions

Early users have reported positive experiences with Gemini 2.0 Flash:

- Rapid editing of home interiors and furniture arrangements [4].
- Easy creation of vintage-style posters with legible text [3].
- Generation of historically accurate images based on detailed prompts [3].

Future Prospects

As Gemini 2.0 Flash is still in its experimental phase, Google is actively seeking developer feedback to refine the model further [5]. The technology's potential applications span various industries, from advertising and e-commerce to education and entertainment. This advancement in AI technology represents a significant step towards more intuitive and accessible image creation and manipulation tools, potentially democratizing complex design processes and opening new avenues for creative expression.

Summarized by

Navi
