Google's Gemini AI Struggles with Pokémon Blue, Taking Over 800 Hours to Complete

2 Sources

[1]

Google AI happens to be really bad at playing Pokémon, repeatedly 'panicking' and taking more than 800 hours to beat the Elite Four

This feels like a weird nostalgia pull for a PC gaming site, but think back to the first time you really struggled in a Pokémon game -- I bet the bleating of your caught companion's dwindling health bar still makes your palms sweat. Well, it turns out Gemini starts to make questionable choices when its Pokémon team is on the ropes too. While bigging up the Gemini 2.X model family in their latest report, Google DeepMind highlights a surprising case study -- namely, the Twitch channel Gemini_Plays_Pokemon. This project comes from Joel Zhang, an engineer unaffiliated with Google. However, during the AI's two runs through Pokémon Blue (going with Squirtle as its starter Pokémon both times), the Gemini team at DeepMind observed an interesting phenomenon they describe in the appendix as 'Agent Panic'. Basically, as soon as things start to look a bit dicey, the AI agent attempts to get the heck out of Dodge. When Gemini 2.5 Pro's party is either low in health or Power Points, the team observed, "model performance appears to correlate with a qualitatively observable degradation in the model's reasoning capability - for instance, completely forgetting to use the pathfinder tool in stretches of gameplay while this condition persists." Due to this (plus a fixation on a hallucinated Tea item that exists in the remake but not the original 90s game), it took the AI agent over 813 hours to finish Pokémon Blue for the first time. After some tweaking by Zhang, the AI agent shaved off hundreds of hours from its second run through...clocking in at a playtime of 406.5 hours. While playing and replaying these games definitely made these games feel expansive in my youth, it's worth noting that the main story of Pokémon Blue can be completed in about 26 hours according to How Long to Beat. So, no, Gemini is not very good at playing a children's video game that is now more than a quarter of a century old. 
While I enjoy this report's cracking scatter graphs charting the AI's lengthy progress towards beating the Elite Four, I'm less enthused by many other aspects of this exercise. For one, AI agents playing videogames in an attempt to benchmark their abilities just fills me with existential despair -- why make anything if a robot is just going to chew it up and spit it out again? And that's before getting into just how little these 'AI benchmarking' attempts actually tell us (though TechCrunch does a good job of delving into this). Then there's the term "Agent Panic" itself, a not-so-subtle attempt to humanise the AI that is bolstered by seeing it 'struggle' through a videogame intended for children. It's important to underline that AI agents do not experience emotions such as 'panic' or even really think, and these seemingly hasty decisions could simply be Gemini mimicking patterns found in whatever training data it's been fed. It's a neat novelty to see an AI agent play a beloved videogame badly, but that doesn't mean anyone outside of DeepMind needs to breathlessly pat Gemini on its strictly metaphorical back.

[2]

Google AI is worse at Pokemon than I was when I was 5 - taking 800 hours to beat the Elite 4 and having a breakdown when its HP got low

If you're someone who thinks AI is almost ready to take over the world, I have some good or bad (depending on your stance on things) news for you: Google's Gemini 2.5 Pro took over 800 hours to beat the 29-year-old children's game Pokemon Blue. There's a Twitch account called Gemini_Plays_Pokemon, a pale imitation of the incredible Twitch Plays Pokemon account that started this trend. First things first: how long did it take the AI to actually complete the game? Well, it was a staggering 813 hours. I feel like you could hit buttons randomly and beat the game faster than that. After some tweaks by the creator of this Twitch channel, the AI managed to halve its time to a still outrageous 406.5 hours. That is actually dead on half the time, which is interesting mathematically but still far too long to beat a game you can win with an overleveled Venusaur. Additionally, as spotted by our friends at PC Gamer, Google DeepMind reported on the Twitch account, and something unusual happens whenever its Pokemon get low on health or power points (PP). Whenever one or both of these conditions are met, "model performance appears to correlate with a qualitatively observable degradation in the model's reasoning capability - for instance, completely forgetting to use the pathfinder tool in stretches of gameplay while this condition persists." This, combined with the AI mistakenly thinking it was playing FireRed and LeafGreen and would need to find the Tea to progress, are part of the reasons it took so long to finish. Honestly, AI just isn't very good at playing Pokemon. Someone else made Claude Plays Pokemon, and that AI spent hours trying to get out of Cerulean city because it kept jumping down a ledge to talk to an NPC it had already spoken to dozens of times. So, these AIs aren't able to beat a game that we could when we barely knew our times tables. Let's not worry about them taking our jobs any time soon.

Google's Gemini 2.5 Pro AI faced unexpected challenges in playing Pokémon Blue, taking 813 hours to complete the game and exhibiting 'Agent Panic' when Pokémon health was low.

Google's Gemini AI Faces Unexpected Challenges in Pokémon Blue

In an intriguing experiment that blends artificial intelligence with nostalgic gaming, Google's Gemini 2.5 Pro AI model has demonstrated surprising difficulties in playing the classic game Pokémon Blue. The AI's performance, observed through the Twitch channel Gemini_Plays_Pokemon, has revealed unexpected limitations and quirks in its gameplay strategy [1].

Unprecedented Playtime and 'Agent Panic'

The most striking aspect of Gemini's performance was the sheer amount of time it took to complete the game. In its first run, the AI agent required a staggering 813 hours to finish Pokémon Blue, choosing Squirtle as its starter Pokémon [2]. This is particularly noteworthy considering that human players typically complete the main story in about 26 hours, according to How Long to Beat.

Google DeepMind's report on this experiment highlighted a phenomenon they termed 'Agent Panic'. When the AI's Pokémon team experienced low health or depleted Power Points (PP), the model's performance significantly degraded. During these moments of 'panic', Gemini would forget to use essential tools like the pathfinder, leading to erratic gameplay and prolonged completion time [1].

Improvement and Persistent Challenges

After some adjustments by Joel Zhang, the engineer behind the Twitch channel, Gemini managed to reduce its completion time in a second run. The AI finished the game in 406.5 hours, exactly halving its previous time [2]. While this shows improvement, it still far exceeds the time taken by even novice human players.

AI's Quirks and Misunderstandings

Interestingly, the AI's struggles weren't limited to combat situations. Gemini exhibited a fixation on a hallucinated "Tea" item, which exists in the game's remakes but not in the original version. This misunderstanding contributed to the extended playtime and highlighted the AI's difficulty in distinguishing between different versions of the game [1].

Implications for AI Development and Benchmarking

This experiment raises important questions about the current capabilities of AI in gaming contexts and the validity of using video games as benchmarks for AI performance. While Gemini showcases impressive language processing abilities in other areas, its struggle with a 29-year-old children's game reveals significant limitations in strategic thinking and adaptability [2].

The concept of 'Agent Panic' and the anthropomorphization of AI behavior have sparked discussions among researchers and tech enthusiasts. It's crucial to remember that these AI agents do not experience emotions like panic, and their seemingly erratic behavior is likely a result of pattern recognition based on their training data rather than genuine cognitive processes [1].

Broader Context and Future Implications

The Gemini_Plays_Pokemon experiment follows in the footsteps of other AI gaming projects, such as the Claude Plays Pokemon channel, where similar difficulties were observed. These experiments provide valuable insights into the current state of AI technology and its limitations in complex, interactive environments [2].

As AI continues to advance, experiments like these serve as important reminders of the technology's current boundaries. While AI has made significant strides in various fields, its struggle with a classic video game underscores the complexity of human-like decision-making and strategic thinking in dynamic environments.

Summarized by Navi

Related Stories

Google's Gemini AI Beats Pokémon Blue: A Milestone with Caveats

06 May 2025•Technology

Anthropic's AI Agent Claude Struggles to Master Pokémon Red, Highlighting Challenges in AI Development

22 Mar 2025•Technology

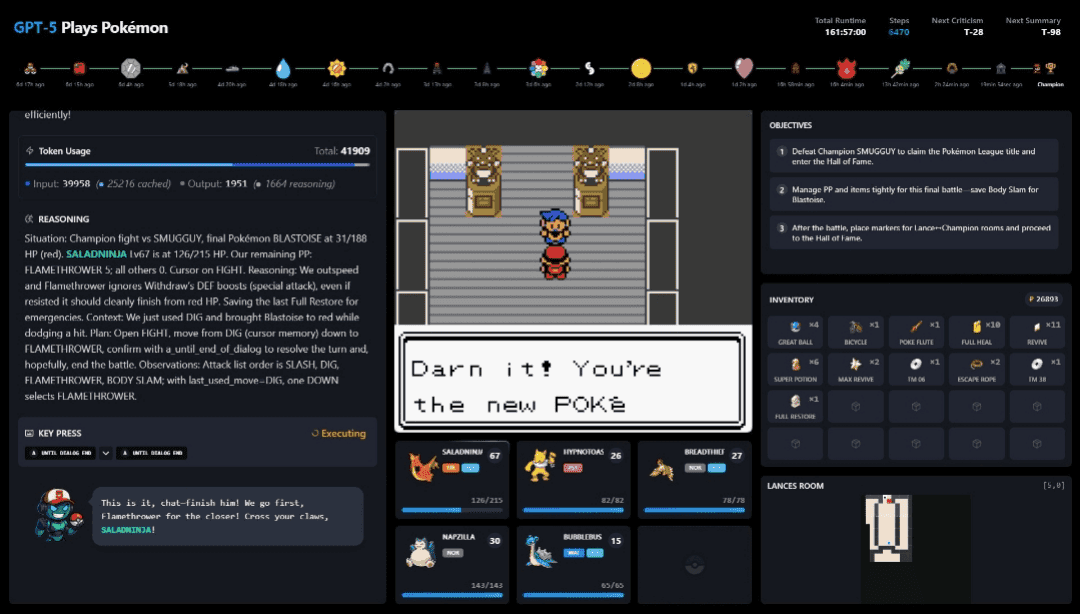

GPT-5 Sets New AI Speed Record in Pokémon Red, Showcasing Rapid AI Advancement

16 Aug 2025•Technology

Recent Highlights

1

Google Gemini 3.1 Pro doubles reasoning score, beats rivals in key AI benchmarks

Technology

2

Nvidia and Meta forge massive chip deal as computing power demands reshape AI infrastructure

Technology

3

ChatGPT cracks decades-old gluon amplitude puzzle, marking AI's first major theoretical physics win

Science and Research