Google Unveils Android XR: A New Era of AI-Powered Smart Glasses and XR Headsets

21 Sources

[1]

Glass redux: Google aims to avoid past mistakes as it brings Gemini to your face

MOUNTAIN VIEW, Calif. -- Get ready to see Android in a new-ish way. It's been 13 years since Google announced its Google Glass headset and 10 years since it stopped selling the device to consumers. There have been other attempts to make smart glasses work, but none of them have stuck. As simpler devices like the Meta Ray-Ban glasses have slowly built a following, Google is getting back into the smart glasses game. After announcing Android XR late last year, the first usable devices were on site at Google I/O. And you're not going to believe this, but the experience is heavily geared toward Gemini. As Google is fond of pointing out, Android XR is its first new OS developed in the "Gemini era." The platform is designed to run on a range of glasses and headsets that make extensive use of Google's AI bot, but there were only two experiences on display at I/O: an AR headset from Samsung known as Project Moohan and the prototype smart glasses. Moohan is a fully enclosed headset, but it defaults to using passthrough video when you put it on. If you've worn an Apple Vision Pro or a Meta Quest with newer software, you'll be vaguely familiar with how Moohan works. Indeed, the interactions are consistent and intuitive. You can grab, move, and select items with the headset's accurate hand tracking. With Android XR, you also get access to the apps and services you've come to know from Google. Outside of games and video experiences, content has been a problem on other headsets. Wearing Google Glass(es) Google's smart glasses are the more interesting of the pair. While Moohan is not as hefty as the Vision Pro or Quest 3, it's not something you'd want to wear all day. The glasses, on the other hand, could blend pretty seamlessly into your life. Google stressed at every opportunity that the hardware was a prototype, but the glasses felt and looked good. 
Unlike the Meta Ray-Bans, Google's glasses have a display embedded in the right lens (you can just make out the size and location of the display in Sergey's glasses above). Google Glass projected its UI toward the corner of your vision, but the Android XR specs place the UI right in the middle. It's semi-transparent and can be a little hard to focus on at first, in part because the information it shows is much more minimal than what you get with Moohan. The glasses have a camera, microphone, and open-ear speakers. Everything blends well into a body that barely looks any different than a pair of normal eyeglasses. The only hint of "smarts" are the chunky temples that house the silicon and battery. They connect to a phone wirelessly, leveraging the same Gemini Live that you can access with the Gemini app. The experience is different when it's on your face, though. The XR glasses have a touch-sensitive region on the temple, which you can long-press to activate Gemini. A tap pauses and unpauses the AI assistant, which is important because it's very verbose. The demo area for the Android XR glasses was a maximalist playground with travel books, art, various tchotchkes, and an espresso machine. Because Gemini is seeing everything you do, you can ask it things on the fly -- Who painted this? Where was this photo taken? Does that bridge have a name? How do I make coffee with this thing? Gemini answers those questions (mostly) correctly, displaying text in the lens display when appropriate. The glasses also support using the camera to snap photos, which are previewed in the XR interface. That's definitely something you can't do with the Meta Ray-Bans. AI for your face, later this year As Google announced at I/O, everyone now has access to Gemini live on their phone. So you can experience many of the same AI interactions that are possible with the prototype specs. 
But having Gemini ready hands-free to share your perspective on the world feels different, allowing for smoother access to AI features. Google showed Gemini in Android XR remembering minute details from earlier in the day, sending messages, controlling phone settings and displaying a floating Google Maps frame for live navigation. Googlers briefly engaged in a "very risky" demo of real-time language translation, too. Google has done things like this before with phones and earbuds, but it looked more effortless with these compact smart glasses. The presenters both spoke their mother tongue (Farsi and Hindi), which was detected and translated in real-time to English. However, the magic didn't last, with one of the units freezing after a minute. Google said we'll see hardware later this year, but it probably won't look exactly like the demo version at I/O. Hardware is often where Google's more ambitious projects stumble, and that was certainly the case for Glass. Google cofounder Sergey Brin spoke about that during an I/O appearance, noting that he "made a lot of mistakes with Glass." He specifically cited his lack of knowledge on consumer electronics supply chains, which contributed to the hefty $1,500 price tag for Glass. Things should be better for Android XR glasses, Brin said. He says Google is working closely with partners to ensure its glasses are better out of the gate than Glass was. As for Project Moohan, Samsung has the scale and supply chain access to make a competitive piece of hardware. Its issue with past AR and VR products was the software, which it's relying on Google to handle. Of course, no one will want to wear smart glasses that look bad -- Meta's glasses have been successful in part because they look very close to standard Ray-Bans. Google says it's working with Gentle Monster and Warby Parker to create Android XR glasses you won't mind having on your face. As for whether or not you'll want Gemini there, it's hard to say. 
Google's live generative AI has come a long way, but churning through video footage of your entire life is going to be expensive and a potential privacy nightmare.

[2]

Google's About to Tell Us More About Its Android XR Plans for Glasses

Google's future of AI looks like it's going through glasses and we should know more as soon as next week. A little tease by Android head Sameer Samat, where he put on a pair of smart glasses at the end of Google's Android 16 showcase this week, pointed toward more to come at the company's AI-focused I/O developer conference next week. The glasses are part of Android XR, Google's upcoming platform for VR, AR and AI on glasses and headsets. But what should we expect, and what do we think this really is all about? Let's recap what we already know. Android XR is Google's return to an AR/VR space it left years ago when it had Google Daydream. I demoed Android XR and spoke to the platform heads last December, where it became clearer that this new initiative is very AI-focused: Gemini's presence on XR devices is what Google sees as the platform's unique advantage over what Meta and Apple offer. Android XR is also cross-compatible with Google Play. Android XR comes in two flavors so far: a mixed reality VR headset made by Samsung called Project Moohan, and an assortment of smart glasses made by Google (and, it looks like, Samsung, Xreal and others to come). Moohan is very much a headset similar to Apple's Vision Pro, with a high-res display and passthrough cameras that seem to blend the virtual and real, and runs 2D apps that float onscreen. The glasses come with and without displays, and have always-on AI modes that can see and hear what you're looking at and saying. Gemini Live can look at your world, or the apps on-screen, and analyze and describe (or even remember) what you're seeing. That vision-enabled generative AI is exactly what Meta's exploring as well on its glasses and on devices to come, and Apple is likely to enter that space too in next-gen versions of Apple Intelligence on Vision Pro. 
Project Moohan could be revealed in more detail at Google I/O but it also may come later this year. Samsung and Google said the hardware's release date is sometime in 2025 but it's still unknown whether it's really more of a developer kit than a consumer product. Meanwhile, Google's glasses efforts look to be next on deck, perhaps readying for release in 2026 instead of 2025. Google's likely to get into much more detail now about how AI tools could work on these headsets and glasses. A developer-focused Android XR event last December began making preview software available but it's time to get even more proactive about how existing Gemini tools on phones, tablets and elsewhere could run on XR and how these could dovetail. Google's deep sets of AI features across all its products could make Android XR a lot more intriguing than anything Meta's shown off so far on Ray-Bans but that also depends on how much Google makes available. We'll know more soon, but Google's presence in the XR race should make the next wave of headsets and glasses a lot more competitive and interesting for sure.

[3]

Google's upcoming AI smart glasses may finally convince me to switch to a pair full-time

At Google I/O 2025, the company shared more about how Android XR will be integrated into the smart glasses form factor, including practical Gemini features. Google I/O 2025, like the few years before it, dove deep into AI and the various ways it's being integrated across the Search giant's products and services. Among the recipients is Android XR, Google's extended reality operating system that underpins the emerging product category of headsets and smart glasses. Android XR was first unveiled last December, but the company mainly covered the general software experience for XR and VR headsets. In fact, Google showcased most of its spatial computing features on a headset it created with Samsung and Qualcomm, codenamed Project Moohan. We're expecting to learn more about that project later this year. At I/O this week, Google shifted its focus toward Android XR glasses, everyday wearables that can leverage cameras, microphones, and speakers to interpret what you see and assist with Gemini. Many big tech companies, including Meta and Apple, share this same vision of wearables, but Google may have the upper hand. That's because we've already seen how Gemini works in the multimodal sense, with Gemini Live interacting and communicating with you as if it's looking at the world from your phone camera. With Android XR and a configurable in-lens display, Google demonstrated how users will be able to pull up a directional system when navigating city streets, similar to how HUD screens work on some cars. You'll also be able to visualize incoming text messages and respond, translate conversations in real time, and capture photos via voice commands, reducing the friction of taking your phone out of your pocket. Google says its Android XR glasses will work in tandem with your phone, whether tethered to the device or paired wirelessly, to sync contacts, messages, notifications, and more. 
This integration should significantly reduce the power load and, most importantly, the weight of the glasses, as the bulk of the computing is executed and managed from your phone. To attract a wider audience, Google is partnering with popular eyewear brands like Gentle Monster and Warby Parker to create more stylish smart glasses. That strategy is similar to Meta, which partners with EssilorLuxottica to create and market the Ray-Ban smart glasses. As for when we can expect Android XR glasses to hit the market, Google says they'll come later this year.

[4]

We tried on Google's prototype AI smart glasses

Victoria Song is a senior reporter focusing on wearables, health tech, and more with 13 years of experience. Before coming to The Verge, she worked for Gizmodo and PC Magazine. Here in sunny Mountain View, California, I am sequestered in a teeny tiny box. Outside, there's a long line of tech journalists, and we are all here for one thing: to try out Project Moohan and Google's Android XR smart glasses prototypes. (The Project Mariner booth is maybe 10 feet away and remarkably empty.) While nothing was going to steal AI's spotlight at this year's keynote -- 95 mentions! -- Android XR has been generating a lot of buzz on the ground. But the demos we got to see here were notably shorter, with more guardrails than what I got to see back in December. Probably, because unlike a few months ago, there are cameras everywhere and these are "risky" demos. First up is Project Moohan. Not much has changed since I first slipped on the headset. It's still an Android-flavored Apple Vision Pro, albeit much lighter and more comfortable to wear. Like Oculus headsets, there's a dial in the back that lets you adjust the fit. If you press the top button, it brings up Gemini. You can ask Gemini to do things, because that is what AI assistants are here for. Specifically, I ask it to take me to my old college stomping grounds in Tokyo in Google Maps without having to open the Google Maps app. Natural language and context, baby. But that's a demo I've gotten before. The "new" thing Google has to show me today is spatialized video. As in, you can now get 3D depth in a regular old video you've filmed without any special equipment. (Never mind that the example video I'm shown is most certainly filmed by someone with an eye for enhancing dramatic perspectives.) Because of the clamoring crowd outside, I'm then given a quick run-through of Google's prototype Android XR glasses. Emphasis on prototype. 
They're simple; it's actually hard to spot the camera in the frame and the discreet display in the right lens. When I slip them on, I can see a tiny translucent screen showing the time and weather. If I press the temple, it brings up -- you guessed it -- Gemini. I'm prompted to ask Gemini to identify one of two paintings in front of me. At first, it fails because I'm too far away. (Remember, these demos are risky.) I ask it to compare the two paintings, and it tells me some obvious conclusions. The one on the right uses brighter colors, and the one on the left is more muted and subdued. On a nearby shelf, there are a few travel guidebooks. I tell Gemini a lie -- that I'm not an outdoorsy type, so which book would be the best for planning a trip to Japan? It picks one. I'm then prompted to take a photo with the glasses. I do, and a little preview pops up on the display. Now that's something the Ray-Ban Meta smart glasses can't do -- and arguably, one of the Meta glasses' biggest weaknesses for the content creators that make up a huge chunk of its audience. The addition of the display lets you frame your images. It's less likely that you'll tilt your head for an accidental Dutch angle or have the perfect shot ruined by your ill-fated late-night decision to get curtain bangs. These are the safest demos Google can do. Though I don't have video or photo evidence, the things I saw behind closed doors in December were a more convincing example of why someone might want this tech. There were prototypes with not one, but two built-in displays, so you could have a more expansive view. I got to try the live AI translation. The whole "Gemini can identify things in your surroundings and remember things for you" demo felt both personalized, proactive, powerful, and pretty dang creepy. But those demos were on tightly controlled guardrails -- and at this point in Google's story of smart glasses redemption, it can't afford a throng of tech journalists all saying, "Hey, this stuff? 
It doesn't work." Meta is the name that Google hasn't said aloud with Android XR, but you can feel its presence loom here at the Shoreline. You can see it in the way Google announced stylish eyewear brands like Gentle Monster and Warby Parker as partners in the consumer glasses that will launch... sometime, later. This is Google's answer to Meta's partnership with EssilorLuxottica and Ray-Ban. You can also see it in the way Google is positioning AI as the killer app for headsets and smart glasses. Meta, for its part, has been preaching the same for months -- and why shouldn't it? It's already sold 2 million units of the Ray-Ban Meta glasses. The problem is even with video, even with photos this time. It is so freakin' hard to convey why Silicon Valley is so gung-ho on smart glasses. I've said it time and time again. You have to see it to believe it. Renders and video capture don't cut it. Even then, even if in the limited time we have, we could frame the camera just so and give you a glimpse into what I see when I'm wearing these things -- it just wouldn't be the same.

[5]

I tried Samsung's Project Moohan XR headset at I/O 2025 - and couldn't help but smile

From the more comfortable design to the AI-driven software experience, Project Moohan feels like a more polished XR headset even before it's released. Putting on Project Moohan, an upcoming XR headset developed by Google, Samsung, and Qualcomm, for the first time felt strangely familiar. From twisting the head strap knob on the back to slipping the standalone battery pack into my pants pocket, my mind was transported back to February of 2024, when I tried on the Apple Vision Pro during launch day. Only this time, the headset was powered by Android XR, Google's newest operating system built around Gemini, the same AI model that dominated the Google I/O headlines this week. The difference in software was immediately noticeable, from the starting home grid of Google apps like Photos, Maps, and YouTube (which VisionOS still lacks) to prompting for Gemini instead of Siri with a long press of the headset's multifunctional key. While my demo with Project Moohan lasted only about ten minutes, it gave me a fundamental understanding of how it's challenging Apple's Vision Pro and how Google, Samsung, and Qualcomm plan to convince the masses that the future of spatial computing does, in fact, live in a bulkier, space helmet-like device. For starters, there's no denying that the industrial designers of Project Moohan drew some inspiration from the Apple Vision Pro. I mentioned a few of the hardware similarities already, but the general aesthetic and hand feel of the XR headset would easily pass as one made in Cupertino. Only it's much better than the Vision Pro. Google wouldn't share the exact materials being used to shape the headset, but a few taps, brushes, and squeezes around the perimeter suggested to me that Project Moohan is made mostly of plastic and hard metals. 
That's a good thing, as the headset felt much lighter in the hand and around my head than what I remembered the Vision Pro feeling like. And if I can get a headset with better weight distribution and a potentially cheaper price tag, I won't complain. Project Moohan comes tethered with a portable power pack, which the Google reps told me should keep the system running for two to three hours, depending on usage. I don't have any strong feelings toward the accessory, but its presence suggests to me that the headset will be best used when you're sitting down or standing still -- like how I was when going through the demo. For more outdoorsy activities, you should probably stick with Google's XR glasses instead. Using Android XR for the first time felt very intuitive. Navigation gestures, like pinches, dragging, and taps, reminded me of how I'd use Vision Pro or Meta Quest 3, which was great to see. For most of the demonstrations, which included sightseeing in a 3D expanded view of Google Maps, watching immersive YouTube videos, and talking to Gemini, I quickly picked up how to work the software. Save for a few times when I accidentally clicked out of a floating window or minimized a video player, using Android XR felt like using a more dynamic version of Android on my phone. It's like multitasking with split-screen mode and floating apps, but with an infinitely sized display. Notably, you can tell Gemini to clean up your screen layout whenever there are too many things everywhere all at once. Such conversational interactions with the AI assistant are Project Moohan's (and other future Android XR devices) biggest advantage compared to the Vision Pro. 
The ability to call on a reliable and versatile AI assistant to help you navigate and manage the software is especially crucial when you don't want to use your eyes and hands to control the headset. I found the passthrough on Project Moohan to be just OK. The renderings appeared slightly blurry and in a cooler (blueish) tone, though not warped significantly. Perhaps it's the fact that I tested the headset with prescription inserts made just minutes before, but I definitely wouldn't call the passthrough a seamless blend of digital and real world. It helps that you can use the XR headset without the light blockers (usually magnetically attached to the bottom half of the visor), so there was always a sense of depth and proximity as I was in the immersive experience. The biggest question mark with Project Moohan is pricing. Sure, Samsung will likely undercut Apple's $3,500 Vision Pro, but by how much? What will repairs and insurance look like? And exactly how many apps will be optimized for Android XR, a platform that's only months old, when the headset launches? We'll get a clearer picture later this year, but for now, I'm staying hopeful based on the product that I've seen so far.

[6]

I tried Google's XR glasses and they already beat my Meta Ray-Bans in 3 ways

Google unveiled a slew of new AI tools and features at I/O, dropping the term Gemini 95 times and AI 92 times. However, the best announcement of the entire show wasn't an AI feature; rather, the title went to one of the two hardware products announced -- the Android XR glasses. For the first time, Google gave the public a look at its long-awaited smart glasses, which pack Gemini's assistance, in-lens displays, speakers, cameras, and mics into the form factor of traditional eyeglasses. I had the opportunity to wear them for five minutes, during which I ran through a demo of using them to get visual Gemini assistance, take photos, and get navigation directions. As a Meta Ray-Bans user, I couldn't help but notice the similarities and differences between the two smart glasses -- and the features I now wish my Meta pair had. The biggest difference between the Android XR glasses and the Meta Ray-Bans is the inclusion of an in-lens display. The Android XR glasses have a display that is useful in any instance involving text, such as when you get a notification, translate audio in real time, chat with Gemini, or navigate the streets of your city. The Meta Ray-Bans do not have a display, and although other smart glasses such as Hallidays do, the interaction involves looking up at the optical module placed on the frame, which makes for a more unnatural experience. The display is limited in what it can show, as it is not a vivid display. The ability to see elements beyond text adds another dimension to the experience. For example, my favorite part of the demo was using the smart glasses to take a photo. After clicking the button on top of the lens, I was able to take a photo in the same way I do with the Meta Ray-Bans. 
However, the difference was that after taking the picture, I could see the results on the lens in color and in pretty sharp detail. Although being able to see the image wasn't particularly helpful, it did give me a glimpse of what it might feel like to have a layered, always-on display integrated into your everyday eyewear, and all the possibilities. Google has continually improved its Gemini Assistant by integrating its most advanced Gemini models, making it an increasingly capable and reliable AI assistant. While the "best" AI assistant ultimately depends on personal preference and use case, in my experience testing and comparing different models over the years, I've found Gemini to outperform Meta AI, the assistant currently used in Meta's Ray-Ban smart glasses. My preference is based on several factors, including Gemini's more advanced tools, such as Deep Research, advanced code generation, and more nuanced conversational abilities, which are areas where Gemini currently holds an advantage over Meta AI. Another notable difference is in content safety. For example, Gemini has stricter guardrails around generating sensitive content, such as images of political figures, whereas Meta AI is looser. It's still unclear how many of Gemini's features will carry over to the smart glasses, but if the full experience is implemented, I think it would give the Android smart glasses a competitive edge. Although visually they do not look very different from the Meta Ray-Bans in the Wayfarer style, Google's take on XR glasses felt noticeably lighter than Meta's. As soon as I put them on, I was a bit shocked by how much lighter they were than I expected. While a true comfort test would require wearing them for an entire day, and there is also the possibility that by the time the glasses reach production they become heavier, at this moment it seems like a major win. 
If the glasses can maintain their current lightweight design, it will be much easier to take full advantage of the AI assistance they offer in daily life. You wouldn't be sacrificing comfort, especially around the bridge of the nose and behind the ears, to wear them for extended periods. Ultimately, these glasses act as a bridge between AI assistance and the physical world. That connection only works if you're willing and able to wear them consistently.

[7]

Google XR glasses hands-on: Lightweight but with a limited field of view

One of the biggest reveals of Google I/O was that the company is officially back in the mixed reality game with its own . It's been years since we've seen anything substantial from the search giant on the AR/VR/XR front, but with a swath of hardware partners to go with its XR platform it seems that's finally changing. Following the keynote, Google showed off a very short demo of the prototype device we saw onstage. I only got a few minutes with the device so my impressions are unfortunately very limited, but I was immediately impressed with how light the glasses were compared with Meta's Orion prototype and Snap's augmented reality Spectacles. While both of those are quite chunky, Google's prototype device was lightweight and felt much more like a normal pair of glasses. The frames were a bit thicker than what I typically wear, but not by a whole lot. At the same time, there are some notable differences between Google's XR glasses and what we've seen from Meta and Snap. Google's device only has a display on one side -- the right lens, you can see it in the image at the top of this article -- so the visuals are more "glanceable" than fully immersive. I noted during Google's demo onstage at I/O that the field of view looked narrow and I can confirm that it feels much more limited than even Snap's 46-degree field of view (Google declined to share specifics on how wide the field of view is on its prototype.) Instead, the display felt a bit similar to how you might use the front display of a foldable phone. You can get a quick look at the time and notifications and small snippets of info from your apps, like what music you're listening to. Obviously, Gemini is meant to play a major role in the Android XR ecosystem and Google walked me through a few demos of the assistant working on the smart glasses. I could look at a display of books or some art on the wall and ask Gemini questions about what I was looking at. 
It felt very similar to multimodal capabilities we've seen with Project Astra and elsewhere. There were some bugs, though, even in the carefully orchestrated demo. Gemini started to tell me about what I was looking at before I had even finished my question to it, which was followed by an awkward moment where we both paused and interrupted each other. One of the more interesting use cases Google was showing was Google Maps in the glasses. You can get a heads-up view of your next turn, much like Google augmented reality walking directions, and look down to see a little section of map on the floor. However, when I asked Gemini how long it would take to drive to San Francisco from my location it wasn't able to provide an answer. (It actually said something like "tool output," and my demo ended very quickly after.) As with so many other mixed reality demos I've seen, it's obviously still very early days. Google was careful to emphasize that this is prototype hardware meant to show off what Android XR is capable of, not a device it's planning on selling anytime soon. So any eventual smart glasses we get from Google or the company's hardware partners could look very different. What my few minutes with Android XR was able to show, though, was how Google is thinking about bringing AI and mixed reality together. It's not so different from Meta, which sees smart glasses as key to long-term adoption of its AI assistant too. But now that Gemini is coming to just about every Google product that exists, the company has a very solid foundation to actually accomplish this.

[8]

I Waited One Hour to Try Google's Android XR Smart Glasses and Only Had 90 Seconds With Them

I was promised 5 minutes with Google's AR glasses prototype, but only got 90 seconds to use them. Well, that didn't go well. After enduring two hours of nonstop Gemini AI announcement after announcement at Google I/O, I waited one hour in the press lounge to get a chance to try out either a pair of Android XR smart glasses or Samsung's Project Moohan mixed reality headset. Obviously, I went for the Android XR smart glasses to see how they compare to Meta's $10,000 Orion concept and Google Glass before it. Is Android XR the holy grail of smart glasses we've been waiting over a decade for? Unfortunately, Google only let me try them out for 90 seconds. I was promised five minutes with the Android XR headset prototype and only had three minutes total, half of which a product rep spent explaining to me how the smart glasses worked. Ninety seconds in, I was told to tap on the right side of the glasses to invoke Gemini. The AI's star-shaped icon appeared in the right lens (this pair of Android XR glasses only had one tiny transparent display) slightly below its center point. I was instructed to just talk to Gemini. I turned around and looked at a painting hung on the wall and asked it what I was looking at, who painted it, and asked for the art style. Gemini answered confidently; I have no idea if the answers were correct. I looked over at a bookshelf and asked Gemini to tell me the names of the books -- and it did. Then the rep used a phone that was paired to the glasses to load up Google Maps. He told me to look down at my feet, and I saw a small portion of a map; I looked back up, and there Gemini pulled up a turn-by-turn navigation. Then the door in the 10 x 10-foot wooden box I was in slid open, and I was told I was done. The whole thing was incredibly rushed, and honestly, I barely even got to get a sense for how well Gemini worked. The AI constantly spoke over the rep while he was explaining the Android XR demo to me. 
I'm not sure if it was a false activation or a bug or what. When I asked about the painting and books, I didn't need to keep tapping on the side of the glasses -- Gemini kept listening and just switched gears. That part was neat. Compared to Meta's Orion smart glasses -- which are also a prototype concept at this stage -- the Android XR glasses don't even compare. You can see more and do more with Orion and through its waveguide lenses with silicon carbide. Orion runs multiple app windows like Instagram and Facebook Messenger, and even has "holographic" games like a Pong knockoff that you can play another person wearing their own pair of the AR glasses. Versus Snapchat's latest AR "Spectacles" and their super narrow field of view, I'd say the Android XR prototype and its singular display might actually be better. If you're going to have less powerful hardware, lean into its strength(s). As for the smart glasses themselves -- they felt like any pair of thick sunglasses, and they felt relatively light. They did slide off my nose a bit, but that's only because I have a flat and wider Asian nose. They didn't appear to slip off the nose of my friend and Engadget arch-nemesis, Karissa Bell. (I'm just kidding; I love Karissa.) There was no way for me to check battery life in 90 seconds. So that's my first impression of the first pair of Android XR smart glasses. It's not a lot, but also not nothing. Part of me is wondering why the hell Google chose to limit demo time like this. My spidey sense tells me that it may not be as far along as it appeared in the I/O keynote demo. What I saw feels like a better version of Google Glass, for sure, with the screen resembling a super-tiny heads-up display that's located in the center of the right lens instead of above your right eye on Glass. But with only 90 seconds, there's no way for me to form a firm opinion. I need to see a lot more, and what I saw was not even a sliver of a sliver. Google, you got my number -- call me!

[9]

Google Is Gunning for Meta’s Ray-Ban Smart Glasses

Smart glasses are hot right now, and Google is poised to turn that temperature up even further. In a very brief teaser on Friday, Google's President of the Android Ecosystem, Sameer Samat, gave the tiniest of glimmers for what the company may have in store on the smart glasses front. At the end of Google's I/O edition of its Android Show series, Samat can be seen very coyly taking out a pair of glasses and sliding them onto his face while proclaiming that Google will have "a few more really cool Android demos" in store for viewers who tune into I/O next week. If we're to read between the very obvious, billboard-sized lines, that means Google is going to show off some smart glasses -- and soon. For me, personally, that's very exciting, but I can think of one company that might not receive Google's teaser so well. I'll give you a hint: they own a little social media platform called Facebook. As nascent as smart glasses may be as a device category, they already have a clear poster child, and it's Mark Zuckerberg's Meta. As an owner of Meta's Ray-Ban smart glasses, I can say for sure that, despite not having many competitors, the smart eyewear has earned the frontrunner title. Meta's Ray-Ban glasses are stylish, they take surprisingly nice pictures, and they have a voice assistant that is more adept than most (ahem, Siri). They also deliver shockingly good audio for phone calls and music playback, which makes them a perfect gadget for when you want to listen to something but you don't want to wall yourself off with ANC earbuds or over-ear headphones. In my opinion, they're the one device that you don't think you need, but as soon as you try a pair, you'll likely want them. But as bullish as I am on Meta's Ray-Ban glasses, I'm also fully aware that the field for smart glasses is entirely wide open. 
Meta, as I alluded to previously, has the benefit of being a big fish in a relatively small pond, but that arrangement is destined for a disruption, and that shakeup could come by the hands of several companies -- Google included. Google's hardware, though we don't really know much of anything about it at this point, might not have the classic Ray-Ban silhouette if and when it's released, but it would have one thing that Meta doesn't: Google's ecosystem. Paired with a Pixel device, for example, Google's smart glasses could go places that Meta has only dreamed of. Imagine tight integration with messaging on your phone, phone calls, audio recording apps, or the camera app. Those are little things on the surface, but having photos or videos taken with your smart glasses appear directly in your photos app on your phone are quality-of-life improvements that could be the deciding factor for lots of people. As great as Meta's Ray-Ban glasses are, they'll never have full, native integration with say, an iPhone. Google on the other hand? Well, the lane is wide open. To make matters worse, Google may also have a leg up on AI. If there's one thing I don't love about Meta's Ray-Ban glasses, it's Meta AI. Though Meta AI does well for basic voice assistant commands like "take a video," when it comes to the beefier, more complex tasks, I find it to be more volatile. During a recent excursion on the beach in Florida, for example, Meta AI very confidently told me that almost every shell I was holding was a shark's tooth or that the car I was looking at was an "In-fin-ee-tee" instead of an Infiniti. It's hard to say if Google's Gemini would perform better in those tasks, but worth noting that it has been around for slightly longer in the generative AI game and has lots of different models to throw at a pair of smart glasses. 
And again, with tight integration between phone and glasses, Gemini (especially an agentic Gemini) might be able to squeeze out even more functionality and give Google's smart glasses an edge over Meta's Ray-Bans. Google already teased a little of what its smart glasses might be able to do with AI last year during I/O 2024. Project Astra, as Google calls it, is a smart glasses UI that leans on computer vision to do all sorts of things. In a controlled demo, Google showed off how Astra could read lines of computer code off a screen and give advice or even remember objects in your environment in case you lose them. It's a compelling, if potentially far-off, vision of what a pair of Google-made smart glasses could bring to the table, and one that we might get a better look at next week. No matter what transpires at I/O in a few days, though, time is of the essence for Google and Meta. While neither Samsung nor Apple have officially announced their own smart glasses yet, both are almost certainly devising their own gadgets that would have all of the same benefits of a Google device (tight integration and AI acumen), plus an even larger ecosystem of augmentative products -- phones, TVs, speakers, etc. Though, to give Meta some credit, it might not be overshadowed by Google, Samsung, or Apple just yet if it can actually bring its Orion prototype to fruition. Orion, for the uninitiated, is a pair of real-deal AR glasses that can actually superimpose digital objects (texts, pictures, videos, games) onto your vision, and Meta's goal is to shrink them down to a form factor that's about the size of a regular pair of glasses. According to Meta, it could have Orion ready for market by 2027, but a product that can do as much as Meta has suggested would be nothing short of game-changing. 
When it comes to Google, almost everything is conjecture at this point, but the possibilities for a pair of Google-made smart glasses are definitely vast, and hopefully, we'll have a better look at what that potential is very soon. Here's to hoping this goes a lot more smoothly than Google Glass.

[10]

After Trying the Google Android XR Glasses, I'm Ready to Ditch My Meta Ray-Bans

Google wrapped up its I/O 2025 keynote today with an extensive demo of its Android XR glasses, showcasing Gemini-powered translation, navigation, and messaging features. Although the on-stage showcase was cool, we didn't really see anything new. You could say I was unimpressed. But then I got to use the glasses for five minutes, and I can't wait to buy a pair. I've worn the Meta Ray-Ban smart glasses for more than a year now, and while I don't regret my purchase, they're primarily used as sunglasses with built-in Bluetooth headphones while walking my dog. The camera actually takes great photos and the AI features could be useful for the right person, but there isn't much else to them. On paper, Google's Android XR glasses are very similar. It has a built-in camera, speakers, and can interact with AI. But the moment I put on the glasses and saw the AR display light up, I was prepared to throw out my Meta glasses. Looking around the Android XR glasses, controls and buttons are nearly identical to other smart glasses. There's a touch-sensitive area on the right arm to activate or pause Gemini, a camera button on the right temple, speakers located near your ears, and a USB-C charging port in the left arm. Google isn't sharing specs, but their weight felt equal to or lighter than my Meta Ray-Bans. I expressed my shock when I first put them on and noticed how comfortable they felt. Google's choice to use a monocular display was the one thing that threw me for a loop. Instead of projecting the interface on both lenses, there's a single display in the right lens. 
This might not bother everyone, but my initial response was to try shifting the frames because something felt off and the interface looked out of focus. I wasn't seeing double, but it took my brain a moment to adjust. I was first greeted with the time and weather. It was nothing but white text, but I was impressed by how bright and legible everything was. Of course, I was in a dimly lit room, so it probably would have looked different if I had been outside under the sun. The Google employee giving me the demo then had me touch the right arm to launch Gemini. The rest of my demo was basically a recreation of what we saw on the I/O stage. Using what's basically already available through Gemini Live on your smartphone, I looked at several books on a shelf and asked the glasses for information about the author. I then used the shutter button on the frame to take a picture, which was instantly transferred to the employee's Pixel. The Googler also launched walking directions, which gave me a moment to try an interface concept new to me. When you're looking straight ahead, Google Maps shows basic navigation instructions like "Walk north on Main Street." But if you look down, the display switches over to a map, with an arrow showing your current trajectory. Splitting the two views ensures there's minimal information floating in front of you as you walk, but if you need more context, it's a glance away. It's this ability to see Gemini's responses and interact with different applications that makes these smart glasses so impressive. Having a full-color display that's easy to read also makes Google's Android XR glasses feel more futuristic than other options from companies like Even Realities that use single-color waveguide technology. There was no way for me to capture the display during my short demo, so you'll have to rely on the keynote demo embedded below to get an idea of what I was looking at. 
It should be noted that Google's Android XR glasses currently serve as a tech demo to showcase the in-development operating system, with no word on if the company plans to actually sell them. They're still pretty buggy, with Gemini having a hard time distinguishing my instructions from background conversations, but I wasn't expecting perfection. Also, most of the compute appeared to be happening on the paired Pixel handset, which is good for battery life on the glasses, but you'll notice slight delays while information is transferred between the two devices. Thankfully, even if Google doesn't launch "Pixel Glasses," third-parties like XREAL are already working on their own spectacles. And, of course, Samsung is working on the co-developed Project Moohan AR headset that's set to launch later this year. But given that developers still don't have access to Android XR to begin building apps for the OS, I wouldn't expect Google's potential offerings to hit until 2027 at the earliest.

[11]

I just tried Google's smart glasses built on Android XR -- and Gemini is the killer feature

I don't know if smart glasses are the device that's going to make us put away our smartphones for good, as some in the tech world do. But if smart glasses do enjoy their moment, it's going to be because they come equipped with a pretty good on-board assistant for helping you navigate the world. I came to that conclusion after trying out a prototype of some smart glasses that Google built on its Android XR platform. The glasses themselves are pretty ordinary devices -- instead, the standout feature is the AI-powered Gemini assistant that adds enough functionality to even win over smart glass skeptics like me. Google announced its AI-powered Android XR smart glasses during the company's Google I/O keynote yesterday (May 20). The inclusion of AI in the form of a Gemini assistant isn't exactly a surprise -- a week ago, Google outlined plans to bring Gemini to more devices, including smart glasses and mixed reality headsets. But Google planning on its own pair of glasses equipped with an on-board assistant who can see what you see and answer your questions is certainly noteworthy. There's no timeframe for Google releasing its glasses. The company says the prototypes are with testers, who will provide feedback on the design and feature set that Google brings to the market. That means the finished product could be a lot different from what I briefly got the chance to wear in a demo area at the Google I/O conference. But the real takeaway here is not what Google's glasses might look like and how they might compare to rival products, some of which will also be built on the Android XR platform. Instead, what I chose to focus on from my demo was what Gemini brings to the table when you try on a pair of smart glasses. That said, I should spend a little bit of time talking about the glasses themselves. 
For a prototype design, they're not terribly bulky -- certainly the frames didn't look as thick as the Meta Orion AR glasses I tried out last year nor the Snap Spectacles AR glasses I took for a test drive. I don't wear glasses, save for the occasional pair of cheaters at night when I'm enjoying a book, but Google's effort, while thicker than those, didn't feel like ones you'd be embarrassed to wear in public. My demo time didn't leave a big window to talk specs -- instead, I got a rundown of the controls. A button on top of the right side of the frame takes photos when you press it, while a button on the bottom turns the display off. There's also a touch pad on the side of the frame that you use to summon Gemini with a long press. When I put on the glasses, my attention was drawn to a little info area down in the lower right of the frames, showing the time and temperature. This is the heads-up display, as Google calls it, and it's not so far out of the way that you can't see the information without breaking eye contact with people. That said, I found my eyes drawn to the area with text, though that might be something I'd be less inclined to look at over time. Like I mentioned, I really didn't get a specs rundown from Google, and I'm not sure that it matters if Google winds up fine-tuning its glasses based on tester feedback. But the field of view seems narrow -- decidedly more so than the 70-degree FOV that Orion provides. If I had to guess, I'd say that's so that there's no question about what you're looking at should you ask Gemini to provide you with more information or actions. Once you tap and hold on the frame -- it took me a moment to find the right spot, though I imagine I'd get used to that with more time -- the AI logo appears and Gemini announces itself. You can immediately start asking it questions, and I decided to focus on some of the books Google had left around our demo room. 
Gemini correctly identified the title of the first book and its subject matter when I asked it to name the book I was looking at. But when I asked how long the book was, the assistant thought I wanted to look up its price. OK, I decided, I'm game -- how much does the book cost? Gemini then wanted to know where I was -- maybe for currency purposes? -- but my response that I was in the United States led Gemini to conclude that I was asking it to confirm whether the U.S. was one of the places featured in the book. So that was a fruitless conversation. Things improved when I tried another book, this one with sumptuous pictures of various Japanese dishes. Gemini correctly identified a photo of sushi, then offered to look up nearby restaurants when I asked if there were any places nearby that served sushi. That turned out to be a much more rewarding interaction. Gemini could also identify a painting hanging in the demo area, correctly telling me that it was an example of pointillism and even identifying the artist and the year he painted it. Using the button on the top of the frame, I was able to snap a picture, and a preview of the image I captured floated up before my eyes. I do wonder if in the process of snapping the photo, I also pressed on the bottom button that turns off the display, because for a couple queries, Gemini was unable to see what I was seeing. Tapping and holding on the frame put things right again, but maybe this is an instance where Google might want to think about the placement of buttons. Or perhaps this was just one of those things that can happen when you're trying out a product prototype. Even though I don't have the best hearing in the world, Gemini came through loud and clear on the speakers that appeared to be located in the frames. Even more impressive, my colleague Kate Kozuch was recording video of my demo and tells me that she couldn't hear any audio spillover -- which means at least one end of your Gemini conversations should stay private. 
I could dwell on some of the mishaps with Gemini during my demo with Google's smart glasses, but I think it's fair to chalk those up to early demo jitters. There's a long way to go before these glasses are anywhere close to ready, and a lot can change for Google's AI in a short amount of time. I look at how much Project Astra has improved in the year since its Google I/O 2024 debut, at least based on the video Google showed during Tuesday's keynote. Instead, the thing with Google's glasses that impressed me was that I was interacting more or less completely with my voice, save for a button tap here or a frame press there. I didn't have to learn a set of new pinching gestures and hope that the cameras on my glasses picked those up. Instead, I could just ask questions -- and in a comfortably natural way, too. I imagine the finished version of Google's XR glasses with Gemini is going to look and perform very differently from what I saw this week when it eventually hits the market, and I'll judge the product on those merits then. But whatever does happen, I bet that Gemini will be at the heart of this product, and that strikes me as a solid base to build on.

[12]

Everything Interesting Google Announced at I/O Today

Google today held its annual I/O developer conference, where it shared a number of new features and tools that are coming to its products in the coming weeks and months. There was a heavy focus on AI capabilities, and Google is deeply integrating Gemini and other AI tools into its software. Gemini is Google's AI product, equivalent to Anthropic's Claude and OpenAI's ChatGPT. Apple has no equivalent at the current time, but Gemini could soon be integrated into iOS like ChatGPT. There are multiple new capabilities coming to Gemini, some of which are available for iPhone users this week. Gemini is being integrated more deeply into search, starting with a dedicated AI Mode that's rolling out in the U.S. this week. Gmail, Chrome, and Meet are all getting new Gemini capabilities that are rolling out starting today. Google already announced Android XR as a platform for companies that are building VR headsets, but today, Google said that it's also developing Android XR for augmented reality glasses. Google last tried this kind of product with Google Glass, but it didn't go over so well and Google Glass was discontinued after several years. Google showed off a set of lightweight glasses that incorporate an in-lens display. On stage, Google demonstrated the glasses offering a live translation feature with words that appeared on the lenses, and providing turn-by-turn directions. The glasses have cameras, microphones, and speakers, and are connected to Gemini. The AI is able to see and hear what the wearer hears to answer questions, offer image recognition capabilities, provide tailored directions, and more. The smart glasses could compete with Apple's future smart glasses, as Apple is rumored to be working on a pair of lightweight augmented reality glasses that could eventually replace the iPhone. Apple is still far off from being able to release AR glasses, so the Android XR version is likely to come out first. 
Gentle Monster and Warby Parker are partnering with Google for Android XR glasses that are lightweight and stylish. Samsung's XR headset will still be the first device that runs Android XR, and it's launching later this year. Samsung will also build Android XR glasses.

[13]

Forget Android XR smart glasses -- 3 reasons why I'm more excited about the Project Astra upgrades

If you tuned into the Google I/O 2025 keynote, you probably were overloaded by all of the AI announcements Google made this week. Then came the end with a big reveal around its Android XR powered smart glasses, which certainly proves the case for having Gemini in yet another gadget. Although I'm as intrigued as anyone else about wearing a pair of smart glasses powered by Android XR, I'm not convinced it's going to be as widely accessible. In fact, I think the biggest announcement out of Google I/O 2025 is the new Project Astra upgrades. Even though there were many other promising AI features attached to Gemini, the new stuff that Google showed off at the keynote around Project Astra is much more promising for many reasons. Here's why. Google didn't mention a time frame on when we'll actually see consumer-ready Android XR smart glasses like the ones that it demoed at its keynote. Instead, the company simply mentioned some of the partners it's working with that will be running its new platform. Likewise, Google didn't reveal when these new Project Astra features will become available -- but it won't prevent some of these features from trickling out sooner in other ways. In a recent update to its Gemini app, Gemini Advanced subscribers using a Pixel 9 or Galaxy S25 device were given access to Gemini Live Video and screensharing. These features are somewhat indirectly tied to Project Astra, as Gemini Live Video allows you to interact with the AI agent to perform complex tasks using the phone's camera. I've tried this out myself with my Pixel 9a to analyze a hallway in my home I want to fill with appropriate artwork, taking into consideration the lighting conditions and length of the room. Gemini took all of those details and gave me appropriate responses, including suggestions on installing track lights. 
What I'm getting at here is that some of the Project Astra upgrades could eventually make their way in some capacity to our phones, probably much sooner than these Android XR smart glasses. Early adopters who are adamant on trying these Android XR smart glasses could be in for a shock. I'm referring to their cost, which could end up being pretty high. I highly doubt that these smart glasses are going to be priced like the Ray-Ban Meta smart glasses, largely due to the fact that they employ new technologies that haven't been widely circulated. I wouldn't be shocked if the first set of Android XR smart glasses end up costing $500 at the very least. Just a quick search on some of the best smart glasses reveals how the most premium models fetch for around $500 -- so it's only logical to pay more for glasses powered by a robust platform with Gemini at the driver's seat. And considering how Android XR is an entirely new operating system for extended reality devices, I imagine that it's going to be far more functional and fleshed out than some of the software in current smart glasses on the market. I'm really not sold on the idea of constantly wearing glasses on my face, which also is the same reason why I think smartphones will continue to be the de facto device that we interact with the most on a daily basis for the foreseeable future. I won't deny the promise of these immersive experiences with Android XR smart glasses, but they still face a lot of challenges -- like how long would the battery last. I think the new Project Astra upgrades will have a more profound impact on real-world applications. I'm particularly interested in how Gemini could take the duty of taking phone calls for you to accomplish a specific task. During a video demo shown at Google I/O, the company featured the AI assistant calling up bike stores to inquire about a specific part -- and then following up with you when those queries are completed. 
This reminds me a lot of another underrated Google AI feature, Call Screen, which uses AI to take phone calls when you're busy. Not only would you see the live conversation between the caller and the assistant, but the assistant will provide you with contextual responses based on what it hears. These Project Astra upgrades are meant to be helpful and have an impact on your productivity, and not just those who would willingly wear smart glasses.

[14]

Hands on: I tried Google's Android XR prototype and they can't do much but Meta should still be terrified

The Google Android XR can't do very much... yet. At Google I/O 2025, I got to wear the new glasses and try some key features - three features exactly - and then my time was up. These Android XR glasses aren't the future, but I can certainly see the future through them, and my Meta Ray Ban smart glasses can't match anything I saw. The Android XR glasses I tried had a single display, and it did not fill the entire lens. The glasses projected onto a small frame in front of my vision that was invisible unless filled with content. To start, a tiny digital clock showed me the time and local temperature, information drawn from my phone. It was small and unobtrusive enough that I could imagine letting it stay active at the periphery. The first feature I tried was Google Gemini, which is making its way onto every device Google touches. Gemini on the Android XR prototype glasses is already more advanced than what you might have tried on your smartphone. I approached a painting on the wall and asked Gemini to tell me about it. It described the pointillist artwork and the artist. I said I wanted to look at the art very closely and I asked for suggestions on interesting aspects to consider. It gave me suggestions about pointillism and the artist's use of color. The conversation was very natural. Google's latest voice models for Gemini sound like a real human. The glasses also did a nice job pausing Gemini when somebody else was speaking to me. There wasn't a long delay or any frustration. When I asked Gemini to resume, it said 'no problem' and started up quickly. That's a big deal! The responsiveness of smart glasses is a metric I haven't considered before, but it matters. My Meta Ray Ban Smart Glasses have an AI agent that can look through the camera, but it works very slowly. 
It responds slowly at first, and then it takes a long time to answer the question. Google's Gemini on Android XR was much faster and that made it feel more natural. Then I tried Google Maps on the Android XR prototype. I did not get a big map dominating my view. Instead, I got a simple direction sign with an arrow telling me to turn right in a half mile. The coolest part of the whole XR demo was when the sign changed as I moved my head. If I looked straight down at the ground, I could see a circular map from Google with an arrow showing me where I am and where I should be heading. The map moved smoothly as I turned around in circles to get my bearings. It wasn't a very large map - about the size of a big cookie (or biscuit for UK friends) in my field of view. As I lifted my head, the cookie-map moved upward. The Android XR glasses don't just stick a map in front of my face. The map is an object in space. It is a circle that seems to remain parallel with the floor. If I look straight down, I can see the whole map. As I move my head upward, the map moves up and I see it from a diagonal angle as it lifts higher and higher with my field of view. By the time I am looking straight ahead, the map has entirely disappeared and has been replaced by the directions and arrow. It's a very natural way to get an update on my route. Instead of opening and turning on my phone, I just look towards my feet and Android XR shows me where they should be pointing. The final demo I saw was a simple photograph using the camera on the Android XR glasses. After I took the shot, I got a small preview on the display in front of me. It was about 80% transparent, so I could see details clearly, but it didn't entirely block my view. Sadly that was all the time Google gave me with the glasses today, and the experience was underwhelming. In fact, my first thought was to wonder if the Google Glass I had in 2014 had the exact same features as today's Android XR prototype glasses. It was pretty close. 
My old Google Glass could take photos and video, but it did not offer a preview on its tiny, head-mounted display. It had Google Maps with turn directions, but it did not have the animation or head-tracking that Android XR offers. There was obviously no conversational AI like Gemini on Google Glass, and it could not look at what you see and offer information or suggestions. What makes the two similar? They both lack apps and features. Should developers code for a device that doesn't exist? Or should Google sell smart glasses even though there are no developers yet? Neither. The problem with AR glasses isn't just a chicken and egg problem of what comes first, the software or the device. That's because AR hardware isn't ready to lay eggs. We don't have a chicken or eggs, so it's no use debating what comes first. Google's Android XR prototype glasses are not the chicken, but they are a fine looking bird. The glasses are incredibly lightweight, considering the display and all the tech inside. They are relatively stylish for now, and Google has great partners lined up in Warby Parker and Gentle Monster. The display itself is the best smart glasses display I've seen, by far. It isn't huge, but it has a better field of view than the rest; it's positioned nicely just off-center from your right eye's field of vision; and the images are bright, colorful (if translucent), and flicker-free. When I first saw the time and weather, it was a small bit of text and it didn't block my view. I could imagine keeping a tiny heads-up display on my glasses all the time, just to give me a quick flash of info. This is just the start, but it's a very good start. Other smart glasses haven't felt like they belonged at the starting line, let alone on retail shelves. Eventually, the display will get bigger, and there will be more software. Or any software, because the feature set felt incredibly limited. 
Still, with just Gemini's impressive new multi-modal capabilities and the intuitive (and very fun) Google Maps on XR, I wouldn't mind being an early adopter if the price isn't terrible. Of course, Meta Ray Ban Smart Glasses lack a display, so they can't do most of this. The Meta Smart Glasses have a camera, but the images are beamed to your phone. From there, your phone can save them to your gallery, or even use the Smart Glasses to broadcast live directly to Facebook. Just Facebook - this is Meta, after all. With its Android provenance, I'm hoping whatever Android XR smart glasses we get will be much more open than Meta's gear. It must be. Android XR runs apps, while Meta's Smart Glasses are run by an app. Google intends Android XR to be a platform. Meta wants to gather information from cameras and microphones you wear on your head. I've had a lot of fun with the Meta Ray Ban Smart Glasses, but I honestly haven't turned them on and used the features in months. I was already a Ray Ban Wayfarer fan, so I wear them as my sunglasses, but I never had much luck getting the voice recognition to wake up and respond on command. I liked using them as open ear headphones, but not when I'm in New York City and the street noise overpowers them. I can't imagine that I will stick with my Meta glasses once there is a full platform with apps and extensibility - the promise of Android XR. I'm not saying that I saw the future in Google's smart glasses prototype, but I have a much better view of what I want that smart glasses future to look like.

[15]

I finally tried Samsung's Project Moohan Android XR headset, and it was Google Gemini that stole the show

Google and Samsung's Project Moohan Android XR headset isn't entirely new - my colleague Lance Ulanoff already broke down what we knew about it back in December 2024. But until now, no one at TechRadar had the chance to try it out. That changed shortly after Sundar Pichai stepped off the Google I/O 2025 stage. I had a brief but revealing seven-minute demo with the headset. After my prescription lenses were scanned and matched with a compatible set from Google, that set was inserted into the Project Moohan headset, and I was quickly immersed in a fast-paced demonstration. It wasn't a full experience - more a quick taste of what Google's Android XR platform is shaping up to be, and very much on the opposite end of the spectrum compared to the polished demo of the Apple Vision Pro I experienced at WWDC 2023. Project Moohan itself feels similar to the Vision Pro in many ways, though it's clearly a bit less premium. But one aspect stood out above all: the integration of Google Gemini. Just as with Gemini Live on an Android phone like the Pixel 9, Google's AI assistant takes center stage in Project Moohan. The launcher includes two rows of core Google apps - Photos, Chrome, YouTube, Maps, Gmail, and more - with a dedicated icon for Gemini at the top. You select icons by pressing your thumb and forefinger together, mimicking the Apple Vision Pro's main control. Once activated, the familiar Gemini Live bottom bar appears. Thanks to the headset's built-in cameras, Gemini can see what you're seeing. In the press lounge at the Shoreline Amphitheatre, I looked at a nearby tree and asked, "Hey Gemini, what tree is this?" It quickly identified a type of sycamore and provided a few facts. The whole interaction felt smooth and surprisingly natural. You can also grant Gemini access to what's on your screen, turning it into a hands-free controller for the XR experience.
I asked it to pull up a map of Asbury Park, New Jersey, then launched into immersive view - effectively dropping into a full 3D rendering akin to Google Earth. Lowering my head gave me a clear view below, and pinching and dragging helped me navigate around. I jumped to a restaurant in Manhattan, asked Gemini to show interior photos, and followed up by requesting reviews. Gemini responded with relevant YouTube videos of the eatery. It was a compelling multi-step AI demo - and it worked impressively well. That's not to say everything was flawless. There were a few slowdowns, but Gemini was easily the highlight of the experience. I came away wanting more time with it. Though I only wore the headset briefly, it was evident that while it shares some design cues with the Vision Pro, Project Moohan is noticeably lighter - though not as high-end in feel. After inserting the lenses, I put the headset on like a visor -- the screen in front, and the back strap over my head. A dial at the rear let me tighten the fit easily. Pressing the power button on top adjusted the lenses to my eyes automatically, with an internal mechanism that subtly repositioned them within seconds. From there, I used the main control gesture - rotating my hand and tapping thumb to forefinger - to bring up the launcher. That gesture seems to be the primary interface for now. Google mentioned eye tracking will be supported, but I didn't get to try it during this demo. Instead, I used hand tracking to navigate, which, as someone familiar with the Vision Pro, felt slightly unintuitive. I'm glad eye tracking is on the roadmap. Google also showed off a depth effect for YouTube videos that gave motion elements -- like camels running or grass blowing in the wind - a slight 3D feel. However, some visual layering (like mountain peaks floating oddly ahead of clouds) didn't quite land. The same effect was applied to still images in Google Photos, but these lacked emotional weight unless the photos were personal. 
The standout feature so far is the tight Gemini integration. It's not just a tool for control - it's an AI-powered lens on the world around you, which makes the device feel genuinely useful and exciting. Importantly, Project Moohan didn't feel burdensome to wear. While neither Google nor Samsung has confirmed its weight - and yes, there's a corded power pack I slipped into my coat pocket - it remained comfortable during my short time with it. There's still a lot we need to learn about the final headset. Project Moohan is expected to launch by the end of 2025, but for now, it remains a prototype. Still, if Google gets the pricing right and ensures a strong lineup of apps, games, and content, this could be a compelling debut in the XR space. Unlike Google's earlier Android XR glasses prototype, Project Moohan feels far more tangible, with an actual launch window in sight. I briefly tried those earlier glasses, but they were more like Gemini-on-your-face in a prototype form. Project Moohan feels like it has legs. Let's just hope it lands at the right price point.

[16]

"See you on May 20": Is this Google I/O promo a sneak peek at Android smart glasses?

Google may have just given us its biggest hint yet about a pair of Android smart glasses that could make an appearance at Google I/O next week. It's been a couple of years since Google finally shuttered its ill-fated Google Glass AR glasses, but it looks like Google isn't giving up yet. An Android Show video streamed Tuesday on the Android YouTube channel may have included a sneaky tease of Google's rumored Android-powered smart glasses. Here's a look at what we know so far about Google's potential return to smart glasses and the pitfall Google will need to watch out for this time. The live stream unpacked some recent Android updates, but the most interesting part of the show came near its end. Sameer Samat, Android Ecosystem President, closed things out with a short promo for Google I/O, stating: "Join us for an exciting Google I/O in just a few days. We'll have deep dives from developers, the latest on Google Gemini, and maybe even a few more really cool Android demos. See you on May 20." As Samat says, "A few more really cool Android demos," he slips on a pair of black sunglasses. The frames are chunky black plastic, and there appear to be circular indents on each side -- in the exact spots where cameras would be on a pair of smart glasses. This couldn't have been a more obvious hint if Samat had ended by winking directly into the camera. The implication is clear: one of the "really cool Android demos" coming up at Google I/O could very well be a pair of Android-powered smart glasses. This hint comes after rumors of a private Google smart glasses demo last month, and as Google's rival Meta goes all-in on smart glasses. There is a smart glasses showdown brewing. Google's potential return to the smart glasses market is no coincidence.
Meta is pouring attention into its Ray-Ban AI smart glasses, which are expected to get a big refresh later this year. At the same time, there are rumors that Apple is developing a chip for a pair of smart glasses. It's no surprise that Google is returning to smart glasses despite its failure with Google Glass. The glasses Samat briefly wore in the most recent Android Show livestream certainly look strikingly similar to Meta's Ray-Ban smart glasses. While it's nothing exciting, the design is definitely a step up from the blocky Matrix-like look of Google Glass. The question is, what else has Google changed this time around? Considering we got this sneak peek on the Android Show, it seems likely that this pair of smart glasses will be running on Android, or some sort of modified version of it. Additionally, I hope Google is paying attention to the controversy surrounding privacy on Meta's Ray-Ban glasses. Privacy is a natural concern when you're walking around wearing a device that can record audio and video at the sound of a simple activation phrase. Meta took things a step further recently by tweaking its privacy policy to require users to allow Meta to store certain audio and video data in the cloud to improve and train its AI. While Google isn't exactly a paragon of data privacy, hopefully it will pay attention to the concerns over Meta's move with its smart glasses. If Google commits to respecting user privacy and lets users control where and how data from their glasses is stored, it could give Google's new Android-powered smart glasses an edge on Meta. Either way, we'll soon find out exactly what Google envisions for its Android smart glasses at Google I/O, which will kick off on May 20.

[17]

Google Unveils Android XR Glasses with Gemini AI Integration - Decrypt

Google unveiled Android XR, a new extended reality platform designed to integrate its Gemini AI into wearable devices such as smart glasses and headsets. During its 2025 I/O developer conference on Tuesday, the tech giant showcased the Android XR glasses, the company's first eyewear since it discontinued the ill-fated Google Glass smart glasses in 2023. During the presentation, Shahram Izadi, Vice President and General Manager at Android XR, highlighted the need for portability and quick access to information without relying on a phone. "When you're on the go, you'll want lightweight glasses that can give you timely information without reaching for your phone," he said. "We built Android XR together as one team with Samsung and optimized it for Snapdragon with Qualcomm." Google first announced Android XR in December 2024. The reveal arrived eight months after Meta released the latest version of its Ray-Ban Meta AI glasses -- a sign of growing competition in the wearable AI space. Like Meta's AI glasses, the Android XR glasses include a camera, microphones, and speakers and can connect to an Android device. Google's flagship AI, Gemini, provides real-time information and language translation, and an optional in-lens display shows information when needed. During the presentation, Google also showed off the Android XR glasses' live-streaming capabilities, as well as their ability to take photos, receive text messages, and display Google Maps. Google also demonstrated how Gemini can complement exploration and navigation through immersive experiences. "With Google Maps in XR, you can teleport anywhere in the world simply by asking Gemini to take you there," Izadi said. "You can talk with your AI assistant about anything you see and have it pull up videos and websites about what you're exploring." While Google did not announce a release date or price, Izadi said the glasses would be available through partnerships with South Korean eyewear brand Gentle Monster and U.S. brand Warby Parker, adding that a developer platform for Android XR is in development. "We're creating the software and reference hardware platform to enable the ecosystem to build great glasses alongside us," Izadi said. "Our glasses prototypes are already being used by trusted testers, and you'll be able to start developing for glasses later this year."

[18]

What it's like to wear Google's Gemini-powered AI glasses

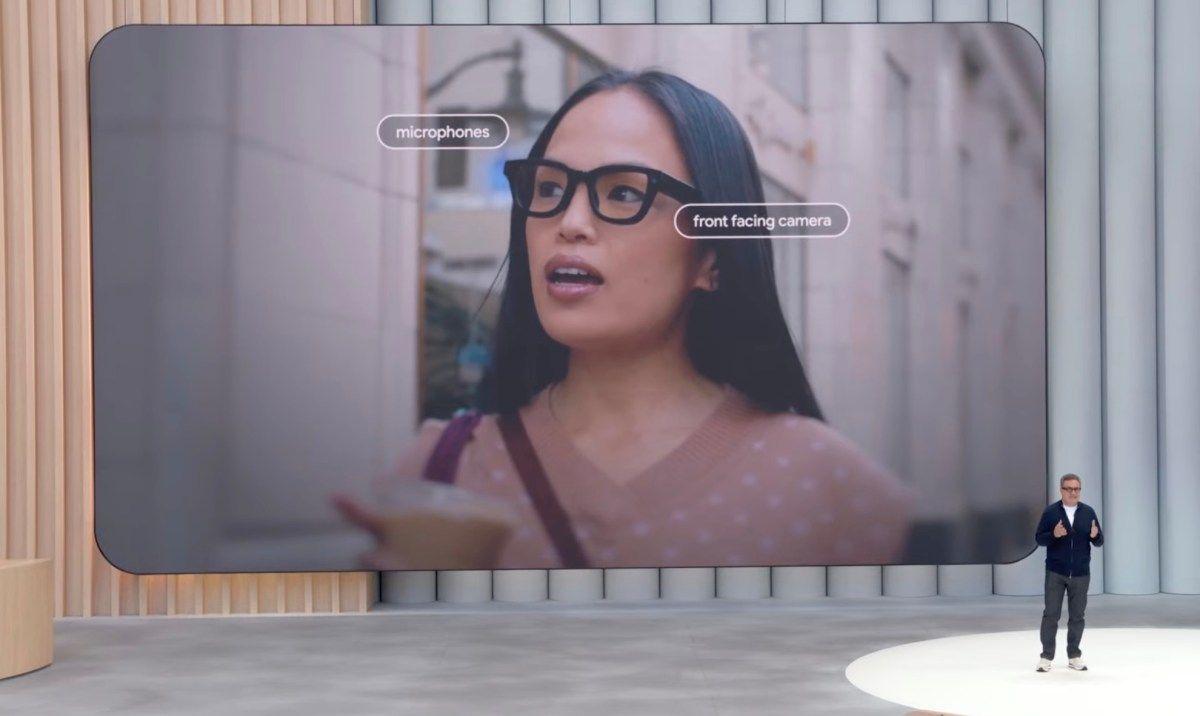

Google wants to give people access to its Gemini AI assistant with the blink of an eye: The company has struck a partnership with eyeglasses makers Warby Parker and Gentle Monster to make AI smart glasses, it announced at its Google I/O developer conference in Mountain View Tuesday. These glasses will be powered by Google's new Android XR platform, and are expected to be released in 2026 at the earliest. To show what Gemini-powered smart glasses can do, Google has also built a limited number of prototype devices in partnership with Samsung. These glasses use a small display in the right lens to show live translations, directions and similar lightweight assistance. They also feature an integrated camera that gives Gemini a real-time view of your surroundings and can be used to capture photos and videos. "Unlike Clark Kent, you can get superpowers when you put your glasses on," joked Android XR GM and VP Shahram Izadi during Tuesday's keynote presentation.

Going hands- (and eyes-) on

Google demonstrated its prototype device to reporters Tuesday afternoon. Compared to a regular pair of glasses, Google's AI device still features notably thicker temples. These house microphones, a touch interface for input, and a capture button to take photos. Despite all of that, the glasses do feel light and comfortable, similar to Meta's Ray-Ban smart glasses.

[19]

Android XR is Coming Soon for Headsets and Glasses