Google to Implement AI-Generated Image Labeling in Search Results

2 Sources

[1]

Google Search to start labelling AI-generated images

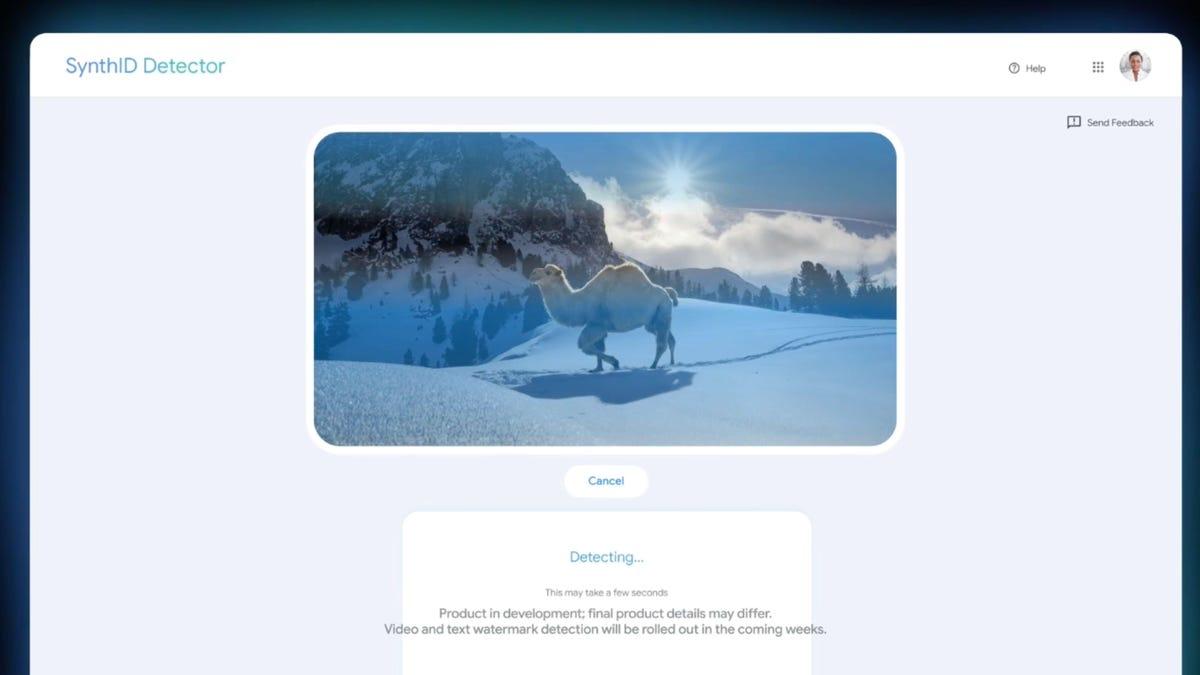

Google has announced that it will roll out a new feature in Search that helps users distinguish between AI-generated images and images taken by humans. The move comes after the tech giant joined the Coalition for Content Provenance and Authenticity (C2PA), a group of major brands that developed the latest Content Credentials standard to prevent misinformation from spreading online. Google said that image metadata will now include information such as the origin of the image and where and how it was created. These labels can now be seen across Google apps like Search, Images and Lens. When users click on the 'About this image' feature, they can see whether an image was created or edited by AI.

Besides this, the company is also working on bringing AI content labelling to its ad platforms so that they comply with C2PA policies when featuring AI-generated content. YouTube is also reportedly looking at ways to identify and label videos created or edited by AI and is expected to announce news around this later in the year.

However, some AI platforms have declined to follow the C2PA standard, such as Black Forest Labs, the AI image and video generating startup behind Flux, the model that X's Grok uses. Google is also working on a watermarking tool called SynthID, created by Google DeepMind, to help identify AI-generated text, images, audio and videos online.

Published - September 19, 2024 11:47 am IST

[2]

Google is preparing a system to label all AI-generated content - Softonic

The company will integrate the C2PA standard into many of its services and systems, including advertising.

Google has announced the implementation of a content authentication technology in its products. Over the coming months, the company will integrate the Coalition for Content Provenance and Authenticity (C2PA) standard into its search, advertising, and potentially YouTube services. The new measure aims to help users differentiate between images created by humans and those generated by artificial intelligence.

Developed by a consortium of tech companies since 2019, the C2PA technology aims to combat the growing amount of AI-generated content by allowing the origin of content and its editing history to be tracked through metadata. At Google, it will be integrated into features like "About this image" in Search, Circle to Search and Lens, showing whether an image has been edited with AI.

Laurie Richardson, Vice President of Trust and Safety at Google, acknowledged the challenges of establishing content provenance in such a vast digital environment. "There is no magic solution for all online content," she stated, while emphasizing the importance of collaborating with other industry players to create sustainable solutions.

With this initiative, Google aligns with other AI transparency measures, such as SynthID, a watermarking technology created by Google DeepMind. The C2PA standard will also be implemented in Google's advertising systems as part of its transparency policies. In the long term, it could extend to platforms like YouTube, especially for content captured with a camera.

However, the adoption of this technology is not without problems. Many devices and editing tools are still not compatible with C2PA, making it difficult to preserve the metadata. Additionally, AI image generators would need to adapt to include this information, which could leave out open-source models.

Google announces plans to label AI-generated images in search results, aiming to enhance transparency and help users distinguish between human-created and AI-generated content.

Google's Initiative to Label AI-Generated Images

In a significant move towards transparency in the digital realm, Google has announced plans to implement a labeling system for AI-generated images in search results. This development is part of the tech giant's ongoing efforts to address the challenges posed by the rapid advancement of artificial intelligence technology [1].

The Labeling Mechanism

Google's new system will use C2PA metadata embedded in images to identify those created or edited by AI. Users will be able to see this information through the "About this image" feature in Search, Lens and Circle to Search [1][2]. The feature is expected to roll out over the coming months [2], as Google works to keep pace with the evolving landscape of digital content creation.

Broader Implications for AI-Generated Content

While the initial focus is on images, Google plans to extend C2PA-based labeling beyond Search to its advertising systems and potentially YouTube [2]. This broader approach reflects the growing need to distinguish between human-created and AI-generated content across various media types.

Collaboration with Content Creators and Platforms

To make the labels effective, Google is working with other industry players, notably as a member of the C2PA. It is also encouraging adoption of the Content Credentials metadata standard, which enables AI-generated content to be identified [1][2]. This collaborative effort aims to create a more transparent online environment where users can make informed decisions about the content they consume.
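The articles describe labels driven by metadata but do not explain how software turns that metadata into a label. The following Python sketch is a rough illustration only and is not Google's implementation. It assumes three prior steps have already happened: the C2PA manifest has been extracted from the image file, its signature has been validated, and it has been simplified into a plain dict. The extraction and validation steps need a dedicated C2PA library and are omitted here. The function `classify_image`, the dict layout, and the label strings are hypothetical. The IPTC digital source type URIs are the vocabulary that C2PA action assertions use to record AI involvement.

```python
from typing import Optional

# IPTC "digital source type" terms that C2PA action assertions can reference.
TRAINED_ALGORITHMIC = (
    "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)
COMPOSITE_WITH_TRAINED = (
    "http://cv.iptc.org/newscodes/digitalsourcetype/"
    "compositeWithTrainedAlgorithmicMedia"
)


def classify_image(manifest: Optional[dict]) -> str:
    """Return a coarse provenance label for one image (hypothetical helper).

    `manifest` is ASSUMED to be an already-extracted, already-validated
    C2PA manifest, simplified to a dict with an "assertions" list whose
    items carry a "label" and a "data" dict holding an "actions" list.
    """
    if manifest is None:
        # No provenance metadata at all: this does NOT prove a human made it.
        return "unknown"
    edited_with_ai = False
    for assertion in manifest.get("assertions", []):
        # Also accept versioned labels such as "c2pa.actions.v2".
        if not assertion.get("label", "").startswith("c2pa.actions"):
            continue
        for action in assertion.get("data", {}).get("actions", []):
            source = action.get("digitalSourceType")
            if source == TRAINED_ALGORITHMIC:
                # Media created by a model trained on sampled content.
                return "ai_generated"
            if source == COMPOSITE_WITH_TRAINED:
                # Media combining AI-generated parts with other content.
                edited_with_ai = True
    return "ai_edited" if edited_with_ai else "no_ai_signal"


# Example: a simplified manifest recording an image created by a generative model.
generated = {
    "assertions": [
        {
            "label": "c2pa.actions",
            "data": {
                "actions": [
                    {"action": "c2pa.created",
                     "digitalSourceType": TRAINED_ALGORITHMIC}
                ]
            },
        }
    ]
}
print(classify_image(generated))  # ai_generated
print(classify_image(None))       # unknown
```

The `unknown` result is deliberate. Many devices and editing tools do not yet preserve C2PA metadata [2], so an image without a manifest cannot be treated as human-made.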

User Empowerment and Digital Literacy

By implementing this labeling system, Google aims to give users the information they need to critically evaluate the content they encounter online. This initiative aligns with broader efforts to promote digital literacy and combat the spread of misinformation [1][2]. As AI-generated content becomes increasingly sophisticated, such transparency measures become crucial to maintaining user trust and platform integrity.

Challenges and Future Developments

While Google's initiative is a step in the right direction, it faces several challenges. The rapidly evolving nature of AI technology may require continuous updates to the labeling system. Many devices and editing tools do not yet support C2PA, so metadata can be lost as images are shared and edited. AI image generators would also need to adopt the standard for their output to be labeled, which could leave out open-source models [2]. Some AI companies, such as Black Forest Labs, have already declined to follow the C2PA standard [1]. These gaps highlight the need for verification mechanisms beyond metadata, such as Google DeepMind's SynthID watermarking [1][2].

As this technology develops, it is likely to spark further discussion about the ethical implications of AI-generated content and the responsibilities of tech companies in managing its proliferation. Google's move may set a precedent for other platforms, potentially reshaping how we interact with and perceive digital content in the future.

Summarized by Navi