Google reveals Glimmer design system for Android XR AI glasses ahead of 2026 launch

3 Sources

[1]

Google on what to expect from Android XR transparent displays

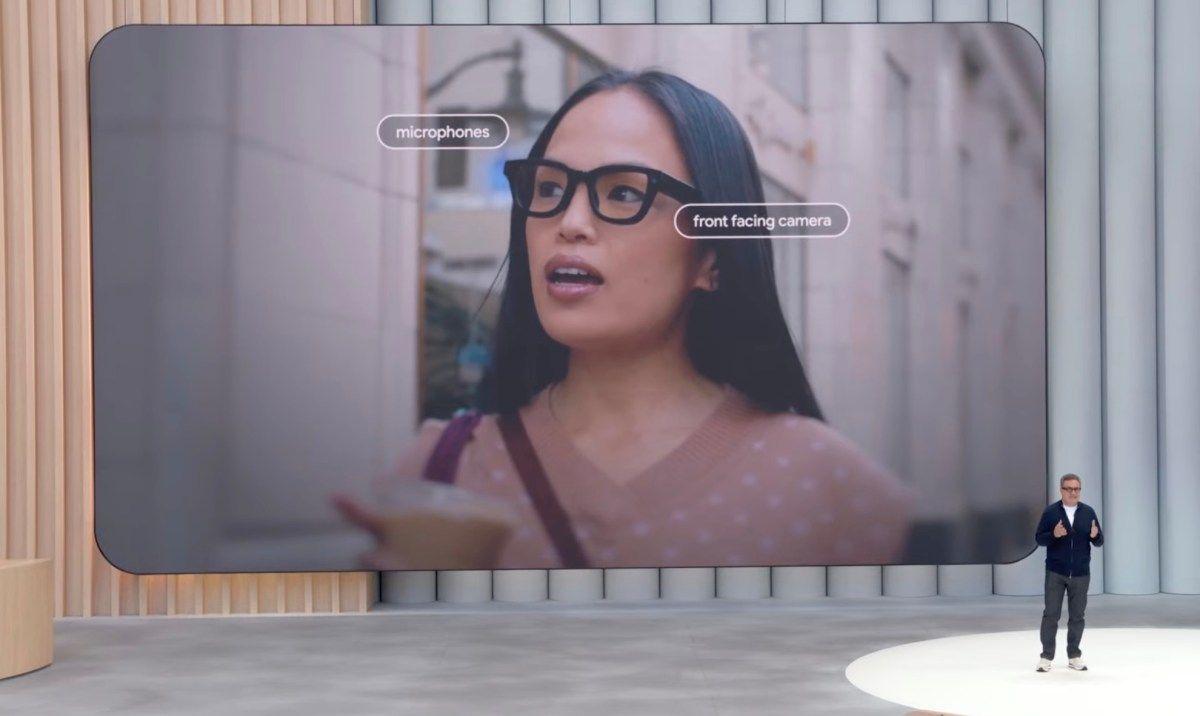

Google Design has an interesting article this week about "Designing for Transparent Screens" and the work that went into Jetpack Compose Glimmer, Android XR's design system for Display AI Glasses. For those who haven't used smart glasses with displays before, the first thing to know is that the "interface doesn't actually appear on the surface of the lens itself." Rather, it is "projected to a perceived depth of about one meter away." Additionally, the display area is a square. You can get an idea of what to expect by "placing your hand in front of you at arm's length and focusing on your fingers." As you do this, the "environment behind and around your hand goes out of focus." Google explains why that matters:

"This was a really pivotal realization for our Android XR design team early on. It sounds like a small detail, but it means that to read any content, a user has to consciously shift their focus. They're moving their gaze from the real world -- say, a friend's face, a stunning sunset, or the street ahead -- to this one-meter focal plane. It's not a passive glance; it's an active, physical choice to engage with the UI, even if just for a millisecond."

Motion on this type of display cannot be "distracting or extraneous." Google's work on notifications found that a "typical motion transition of around 500 milliseconds" appeared too quickly:

"Instead of slowly drawing someone's attention, they would essentially 'blink' and a notification would suddenly be in view."

The implementation Android XR settled on has incoming notification transitions spanning about two seconds, during which a circle (a badged profile avatar) expands into a pill. For text, Google Sans Flex's optical size axis is used to improve readability at that one-meter distance:

"...letters like a and e have larger counters (the openings inside the letter), and the dots on j's and i's are further away from the letter body."

Bold typography and increased letter spacing are also recommended, with text measured in visual angle (degrees) instead of pixels or points:

"Think of it like driving along a highway. You see exit signs or speed-limit signs with text and graphics printed at a fixed size. But how big that text appears to you changes dramatically based on your distance. Far away, it's tiny; as you get closer, it grows. That's visual angle in action."

These glasses use an additive display that can only add light. It cannot create black, which appears as 100% transparent; Google compares this to "how a home movie projector can't project black." That became a problem when Google initially tried to port existing Material components:

"Material Design, with its tactile metaphors of paper and layers, relies heavily on bright, opaque surfaces and subtle shadows. On an additive display, those 'surfaces' turned into large, bright blocks of light, creating distracting glare and rapidly draining battery life."

"We quickly discovered another significant problem: halation. Halation is that effect where bright light sources bleed into adjacent darker areas, creating a blurry, halo-like fringe. On these displays, our bright surfaces would bleed into transparent content, making text completely illegible."

In Google's solution, "surfaces use black to provide a legible 'clean plate' foundation for your content." This is paired with a "new depth system that casts dark, rich shadows to convey a sense of occlusion and space." Additionally, "highly saturated colors we'd typically use on a phone simply 'disappear' against the real world." As such, Android XR UI is "neutral by default" to "harmonize with the diverse colors of the real world." Color is used sparingly to draw attention to buttons and other important elements.
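To make the additive-display point concrete, here is a toy model in Python. It assumes an idealized display that simply adds its light to the light already arriving from the world, per linear RGB channel, clipped at 1.0. Real optics, halation, and Google's actual rendering pipeline are not modelled, and `see_through` and `alpha_blend` are hypothetical helper names, not part of any Android XR API.

```python
from typing import Tuple

# Linear-light RGB channels in the range [0.0, 1.0].
RGB = Tuple[float, float, float]


def see_through(world: RGB, display: RGB) -> RGB:
    """Idealized additive see-through display (an assumption, not Google's model):
    the display adds its light to the light already coming from the world,
    and each channel saturates at 1.0."""
    return tuple(min(w + d, 1.0) for w, d in zip(world, display))


def alpha_blend(world: RGB, ui: RGB, alpha: float) -> RGB:
    """Conventional opaque-screen compositing, for comparison: at alpha=1.0
    the UI pixel completely replaces what is behind it."""
    return tuple(alpha * u + (1.0 - alpha) * w for w, u in zip(world, ui))


street = (0.30, 0.35, 0.25)  # hypothetical view through the lens
sky = (0.80, 0.85, 0.95)     # hypothetical bright background
black = (0.0, 0.0, 0.0)
white = (1.0, 1.0, 1.0)
red = (1.0, 0.0, 0.0)

# A "black" UI pixel adds no light, so the world shows through unchanged.
print(see_through(street, black))       # (0.3, 0.35, 0.25)
# On a phone-style opaque screen, the same black pixel hides the world.
print(alpha_blend(street, black, 1.0))  # (0.0, 0.0, 0.0)
# A white Material-style surface floods every channel: a block of light.
print(see_through(street, white))       # (1.0, 1.0, 1.0)
# Saturated red over a bright sky only tints it pink.
print(see_through(sky, red))            # (1.0, 0.85, 0.95)
```

Under this simplified model, "black is transparent" and "saturated colors disappear" both fall out of the same addition: a display that can only add light has no way to darken or strongly recolor a bright background.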

[2]

Google Introduces Glimmer UI for AI Glasses

The industry is racing to build the next generation of UI for AI glasses, one that blends into the real world. Google has introduced Glimmer, a new design language built for AI-powered display glasses. It's part of the Android XR initiative, and it prioritizes voice, gesture, and eye-tracking input with transparent UI elements. The Glimmer design system focuses on "glanceable, transient elements that appear only when needed."

Glimmer's UI appears on optical see-through AI glasses at a perceived distance of about one meter, rather than on the lens itself. It's optimized for transparent displays, so the UI is legible, low-distraction, and works with voice, gesture, and eye input. Google has published Jetpack Compose Glimmer, which provides components, theming, and behaviors, along with a Figma kit, so Android developers can build Glimmer-styled UIs for AI glasses. UI elements have rounded corners, and floating tiles support multitasking. To stay readable against real-world backgrounds, Google has gone with dark surfaces and bright content for contrast. Sharp corners are discouraged throughout the UI because they are displeasing to the eye.

Google says the Glimmer UI must "harmonize" with reality instead of pulling the user's attention away from it. In a way, it's Google's ambient UI for the coming wave of AI glasses under the Android XR initiative. Google further notes that the UI must earn the user's attention without dominating it. On these AI glasses, the display is additive, meaning it can only add light, so true black does not exist: black renders as 100% transparent. Highly saturated colors also disappear against real-world backgrounds, so Google has opted for a neutral, desaturated color palette with dark surfaces and bright content. It's a break from Google's Material Design principles and a move toward a physics-aware UI philosophy where perception matters more than the screen.

[3]

Google details how users will control and navigate Android XR glasses ahead of 2026 launch

New 'Glimmer' design language guidelines are set for optical see-through displays. Google has been releasing detailed design documentation and development tools for its forthcoming Android XR glasses platform. 9to5Google has found updated instructions that describe how users will control and navigate these glasses. Read on to find out what to expect from their hardware controls, software navigation, display behaviour and thermal dissipation. This should give you an idea of what the overall Android XR experience will look like.

Google has officially named the two hardware categories:

Importantly, users can turn off the display at any time. This means you can have visuals when needed or enjoy voice-only interaction when preferred. It also indicates that Google sees voice and AI interaction, likely powered by Gemini, as central to the platform rather than just an add-on.

Google is standardising key physical controls across all Android XR glasses. Every device must include:

Models with displays will also feature a separate display button, typically placed on the underside of the stem. It lets users wake or sleep the screen, effectively switching between visual and audio-only modes.

The camera button supports multiple actions. A single tap captures a photo, while a long press records video. Pressing again stops recording, and a double press launches the Camera app.

You can use the touchpad through these gestures:

On display models, swipes also handle scrolling, focus movement and button selection inside apps.

Each device will include two LEDs, one visible to the wearer and one to bystanders. These indicate system states, such as whether the device is on or off and whether it is recording. Developers cannot modify these LEDs, so in effect Google is standardising privacy cues across the ecosystem.

On Display AI Glasses, users will see a home screen that Google compares to a smartphone lockscreen. At the bottom sits a persistent system bar showing time, weather, notifications, alerts and Gemini feedback. Above it, users can view contextual, glanceable information, suggested shortcuts to likely next actions, and ongoing activities when multitasking. Notifications appear as pill-shaped chips that you can select to expand.

Google's 'Glimmer' design language for Android XR glasses includes guidelines for soft corners, colour choices based on power and heat efficiency, Material Symbols Rounded as the standard icon set, and a stack layout that shows one piece of content at a time. So you can expect consistency across brands that adopt the Android XR platform.

Google's approach combines physical buttons, touch gestures and voice control in a lightweight glasses format. By requiring apps to function without a display, Google is prioritising AI-driven, voice-first interaction as a core experience. Let's see how the end products pan out.
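The camera-button and display-button behaviour described above maps naturally onto a small state machine. This is a hypothetical Python sketch, not an Android XR API: the `Press` event types, action strings, and class names are illustrative. It assumes that distinguishing single, long, and double presses (which needs press timing) happens upstream, and that any press during recording stops it, since the source only says "pressing again."

```python
from enum import Enum, auto


class Press(Enum):
    SINGLE = auto()
    LONG = auto()
    DOUBLE = auto()


class CameraButton:
    """Hypothetical model of the described camera-button behaviour."""

    def __init__(self) -> None:
        self.recording = False

    def handle(self, press: Press) -> str:
        if self.recording:
            # Assumption: any press while recording stops the video.
            self.recording = False
            return "stop_video"
        if press is Press.SINGLE:
            return "capture_photo"
        if press is Press.LONG:
            self.recording = True
            return "start_video"
        return "launch_camera_app"  # Press.DOUBLE


class DisplayButton:
    """Hypothetical model of the display button: toggles the screen on and off."""

    def __init__(self) -> None:
        self.display_on = True

    def press(self) -> str:
        self.display_on = not self.display_on
        return "visual" if self.display_on else "audio_only"


camera = CameraButton()
print([camera.handle(p) for p in (Press.SINGLE, Press.LONG, Press.SINGLE, Press.DOUBLE)])
# ['capture_photo', 'start_video', 'stop_video', 'launch_camera_app']

display = DisplayButton()
print([display.press(), display.press()])  # ['audio_only', 'visual']
```

Keeping the recording flag inside the camera-button model reflects the fact that the same physical press means different things depending on whether a video is already being captured.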


Google has detailed its Glimmer design language for Android XR AI glasses, introducing a physics-aware UI philosophy built specifically for transparent displays. The system prioritizes voice-first interaction, eye-tracking, and glanceable transient UI elements that appear only when needed, marking a departure from traditional Material Design principles.

Google Introduces Glimmer Design Language for Android XR AI Glasses

Google has unveiled Glimmer, a comprehensive design system created specifically for AI-powered display glasses running Android XR, which rethinks how users interact with wearable technology [1][2]. The Glimmer design language prioritizes voice-first interaction, gesture control, and eye-tracking, with transparent UI elements that harmonize with the real world rather than dominate the user's attention. Google Design has released detailed documentation alongside Jetpack Compose Glimmer, giving Android developers the tools to build applications for this emerging platform ahead of the 2026 launch [3].

Source: Digit

Understanding Optical See-Through Displays and Visual Perception

The interface on these AI glasses doesn't appear on the lens surface itself; it is projected to a perceived depth of approximately one meter away [1]. This fundamental characteristic shaped Google's entire approach to the design system. The display area is square, and users must consciously shift their focus from the real world to the UI, which makes reading it an active, physical choice rather than a passive glance. Google's design team called this realization pivotal, since it meant designing glanceable, transient UI that doesn't disrupt the user's connection to their environment. Motion transitions also required significant adjustment, as typical 500-millisecond animations appeared too abrupt on transparent displays. Android XR settled on notification transitions spanning approximately two seconds, during which a circle (a badged profile avatar) expands into a pill-shaped element, gradually drawing attention instead of startling users.
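Why a longer transition reads as gentler can be sketched numerically. The Python below is a hypothetical model, not Glimmer's implementation: it assumes a standard cubic ease-in-out curve, a 60 fps frame rate, and a pill that grows from one to four avatar diameters, then compares the largest per-frame size change for a 500 ms and a 2,000 ms transition.

```python
def ease_in_out_cubic(p: float) -> float:
    """Standard cubic ease-in-out: slow start, faster middle, slow finish."""
    return 4 * p ** 3 if p < 0.5 else 1 - (-2 * p + 2) ** 3 / 2


def pill_width(t_ms: float, duration_ms: float, start: float = 1.0, end: float = 4.0) -> float:
    """Width (in hypothetical avatar diameters) of a circle growing into a pill."""
    p = min(max(t_ms / duration_ms, 0.0), 1.0)
    return start + (end - start) * ease_in_out_cubic(p)


def max_step_per_frame(duration_ms: float, fps: int = 60) -> float:
    """Largest width change between two consecutive frames of the transition."""
    frames = round(duration_ms * fps / 1000)
    widths = [pill_width(i * duration_ms / frames, duration_ms) for i in range(frames + 1)]
    return max(b - a for a, b in zip(widths, widths[1:]))


for duration in (500, 2000):
    print(f"{duration} ms: largest per-frame change = {max_step_per_frame(duration):.3f}")
```

Under these assumptions, stretching the same change from 500 ms to two seconds spreads it over four times as many frames, so the largest single-frame jump shrinks to roughly a quarter. The easing curve is an illustrative choice; the sources do not say which curve Android XR uses.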

Source: 9to5Google

Technical Challenges with Additive Displays and Material Design

The optical see-through displays use additive technology that can only add light, so true black appears 100% transparent [1][2]. Google compared this limitation to how a home movie projector cannot project black. When the team initially tried to port existing Material Design components, they ran into significant problems. The bright, opaque surfaces that define Material Design turned into distracting blocks of light that caused glare and drained battery life rapidly. More critically, the team encountered halation, an effect where bright light sources bleed into adjacent darker areas and create blurry halos; on these displays, bright surfaces bled into transparent content and made text completely illegible. This pushed Google away from traditional Material Design principles toward a physics-aware UI philosophy in which perception matters more than conventional screen design. The solution uses black surfaces as a legible "clean plate" foundation for content, paired with a new depth system that casts dark, rich shadows to convey occlusion and space.

Neutral UI Palette and Readability Optimizations

Google adopted a neutral, desaturated color palette with dark surfaces and bright content to keep the UI readable against real-world backgrounds [1][2]. Highly saturated colors that work well on smartphones simply disappear when overlaid on diverse real-world environments. The Android XR UI is therefore neutral by default, using color sparingly to draw attention to buttons and other important elements. For text, Google Sans Flex's optical size axis improves readability at the one-meter distance: letters like 'a' and 'e' get larger counters (the openings inside the letter), and the dots on 'i' and 'j' sit farther from the letter body. Bold typography and increased letter spacing are recommended throughout, with text measured in visual angle (degrees) rather than pixels or points. Google explains visual angle with a highway analogy: road signs are printed at a fixed size, but how large their text appears depends on how far away you are. Specifying UI text in degrees fixes its apparent size to the eye rather than its physical size.
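The visual-angle idea reduces to standard trigonometry: an object of height h at distance d subtends an angle θ = 2·atan(h / 2d), so h = 2·d·tan(θ/2). The Python sketch below applies those formulas; the 1° text size and the 0.3 m sign letter are hypothetical values chosen for illustration, not figures from Google's guidelines.

```python
import math


def size_for_angle(angle_deg: float, distance_m: float) -> float:
    """Height (m) that subtends angle_deg at distance_m: h = 2 * d * tan(theta / 2)."""
    return 2 * distance_m * math.tan(math.radians(angle_deg) / 2)


def angle_for_size(height_m: float, distance_m: float) -> float:
    """Visual angle (degrees) of an object of height_m at distance_m."""
    return math.degrees(2 * math.atan(height_m / (2 * distance_m)))


# Hypothetical 1-degree text at the ~1 m perceived focal plane of the glasses.
print(f"{size_for_angle(1.0, 1.0) * 100:.2f} cm")  # 1.75 cm

# The highway analogy: the same 0.3 m sign letter seen from 300 m and from 30 m.
print(f"{angle_for_size(0.3, 300.0):.3f} deg")  # 0.057 deg
print(f"{angle_for_size(0.3, 30.0):.3f} deg")   # 0.573 deg
```

The sign letter never changes physical size, yet its visual angle grows tenfold as the distance drops tenfold; sizing UI text in degrees targets that perceived size directly.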

Hardware Controls and User Interaction Plans

Google has standardized hardware controls across all Android XR glasses, which fall into two categories: Display AI Glasses and Audio AI Glasses [3]. Every device must include a camera button and a touchpad for touch gestures such as swipes and taps; on display models, swipes also handle scrolling, focus movement, and button selection. Display models add a separate display button on the underside of the stem that wakes or sleeps the screen, switching between visual and audio-only modes. Because users can turn the display off at any time, this design signals that voice interaction, likely powered by Gemini, sits at the platform's core rather than serving as a supplementary feature. The camera button supports multiple actions: a single tap captures a photo, a long press records video, pressing again stops recording, and a double press launches the Camera app. Each device includes two LEDs for system indicators, one visible to the wearer and one to bystanders, creating standardized privacy cues that developers cannot modify.

Multitasking and Navigation Architecture

Display AI Glasses present a home screen comparable to a smartphone lockscreen, with a persistent system bar at the bottom showing time, weather, notifications, alerts, and Gemini feedback [3]. Above this bar, users see contextual, glanceable information, suggested shortcuts to likely next actions, and ongoing activities when multitasking. Notifications appear as pill-shaped chips that users can select to expand. The Glimmer design language calls for soft, rounded corners throughout the interface, since sharp corners are considered displeasing to the eye. UI elements use floating tiles for multitasking, with Material Symbols Rounded serving as the standard icon set. Google has also published a Figma kit alongside Jetpack Compose Glimmer, enabling designers and developers to create consistent experiences across brands that adopt the Android XR platform. By requiring apps to function without a display, Google positions AI-driven, voice-first interaction as the fundamental experience rather than an optional mode, potentially reshaping how users think about wearable computing as the 2026 launch approaches.
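The requirement that apps work without a display suggests keeping one notification model and choosing its presentation at render time. The Python sketch below is hypothetical: `Notification`, `render`, the 20-character preview limit, and the text formats are illustrative assumptions rather than Jetpack Compose Glimmer APIs, and the audio-only branch only produces a string that a text-to-speech engine would speak.

```python
from dataclasses import dataclass


@dataclass
class Notification:
    sender: str
    text: str


def render(n: Notification, display_on: bool, expanded: bool = False) -> str:
    """Hypothetical presenter: one notification model, two output modes."""
    if not display_on:
        # Audio-only mode: a phrase that a text-to-speech engine could read out.
        return f"Message from {n.sender}: {n.text}"
    if not expanded:
        # Collapsed pill-shaped chip: sender plus a short preview.
        preview = n.text if len(n.text) <= 20 else n.text[:19] + "…"
        return f"({n.sender}: {preview})"
    # Expanded view after the user selects the chip.
    return f"{n.sender}\n{n.text}"


note = Notification("Ana", "Running ten minutes late, order without me")
print(render(note, display_on=True))   # (Ana: Running ten minutes…)
print(render(note, display_on=False))  # Message from Ana: Running ten minutes late, order without me
print(render(note, display_on=True, expanded=True))
```

Because the content lives in one model, turning the display off changes only how it is delivered, not whether the user receives it.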

Source: Beebom

Summarized by Navi
