AI tools create fake Epstein photos in seconds, fueling wave of disinformation targeting politicians

6 Sources

[1]

Grok's Analysis of Whether Mamdani Is Related to Epstein May Be the Single Most Amazing AI Response We've Ever Seen

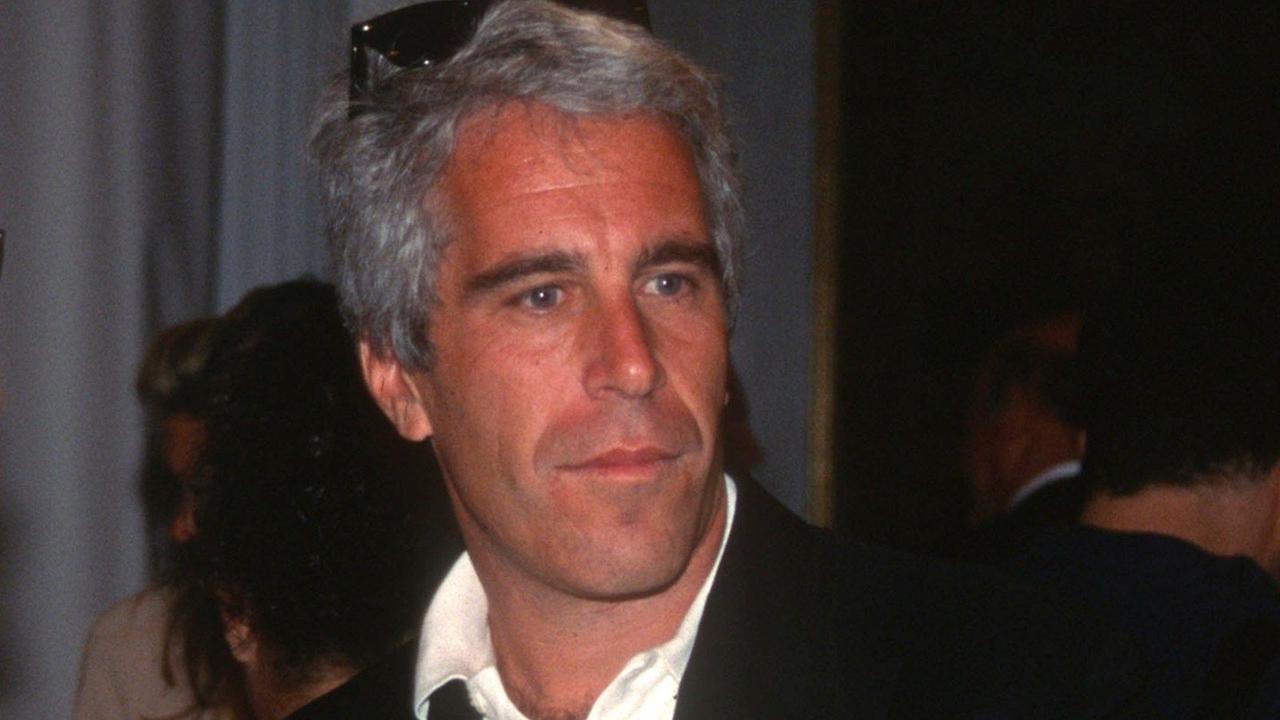

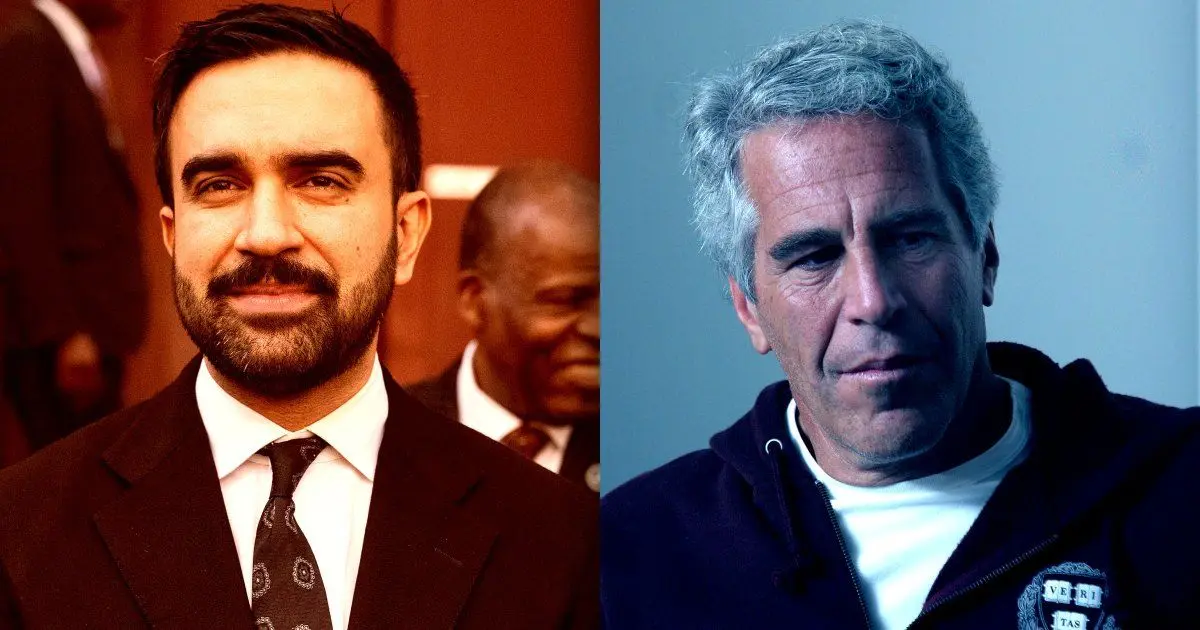

Along with being used to generate nonconsensual sexual images of women and girls, spread racist rhetoric, and doxx the home addresses of both celebrities and non-public figures, Elon Musk's AI chatbot Grok is struggling to get a grip on reality. The mercurial billionaire's social media platform X, which was folded into his AI startup xAI last year -- and subsequently acquired by SpaceX this week -- has once again been thrust into the center of the ongoing scandal surrounding the Justice Department's release of millions of files pertaining to its investigation into the late sex offender Jeffrey Epstein. Musk has been in full damage control mode, struggling to reconcile his attempts to downplay his personal relationship with the notorious predator with some damning emails that suggest he was actively trying to invite himself to Epstein's island in the Caribbean.

Adding to the chaos is Musk's xAI chatbot Grok, which he's advertised as "maximally truth-seeking" -- but which is instead sowing confusion around the explosive revelations about Epstein. The result may be one of the most amazing AI responses we've ever seen.

No, seriously: when an X account asked Grok to calculate the "probability" that New York City mayor Zohran Mamdani is somehow related to Epstein, based on perceived similarities in the shapes of their eyebrows and mouths, the AI chatbot had a downright baffling answer. The account showed Grok headshots of the two men side by side, with arrows pointing to their eyebrows and circles around their mouths, as if to suggest they were genetically related. The odd request may have been related to recently released documents mentioning Mamdani's mother, Mira Nair, who attended a 2009 afterparty at the house of convicted sex trafficker and Epstein's close collaborator Ghislaine Maxwell. However, there's currently no evidence to suggest that Nair knew about or was involved in any criminal activity.
All that flew far above the AI chatbot's head, though, as it failed to even accurately identify Mamdani. "Based on the image, these seem to be [late-night TV host] Jimmy Kimmel (left) and Jeffrey Epstein (right)," the chatbot responded. "They share minor similarities in eyebrow arch and mouth shape, but differences in age, hair, and features are clear." Yes, that's right: the chatbot that Musk has deployed to untold millions of users on X, which now operates as a de facto fact-checking machine used to settle arguments on the chaotic site, can't even tell the difference between Zohran Mamdani and Jimmy Kimmel, who look nothing alike. At least it admitted its error -- though only after prodding. "Correction: That's actually Zohran Mamdani on the left, not Jimmy Kimmel -- my bad on the initial ID," the chatbot conceded. "Similarities in eyebrows and smiles are there, but no known family ties to Epstein. Probability of relation: under one percent based on public info." The chatbot's gaffe shows yet again how even a seemingly straightforward task like identifying a well-known figure, who has been photographed many thousands of times, from a well-lit headshot appears to be beyond the tech's capabilities -- despite xAI pouring billions of dollars into its development. Coincidentally, Mamdani used his powers as New York City's mayor to shut down a "functionally unusable" AI chatbot just last week. The chatbot was set up by disgraced former mayor Eric Adams to help small business owners navigate local regulations, costing the administration "around half a million dollars," per Mamdani. Not unlike Grok, Adams' chatbot was shown to be incredibly unreliable, often contradicting actual labor law.

[2]

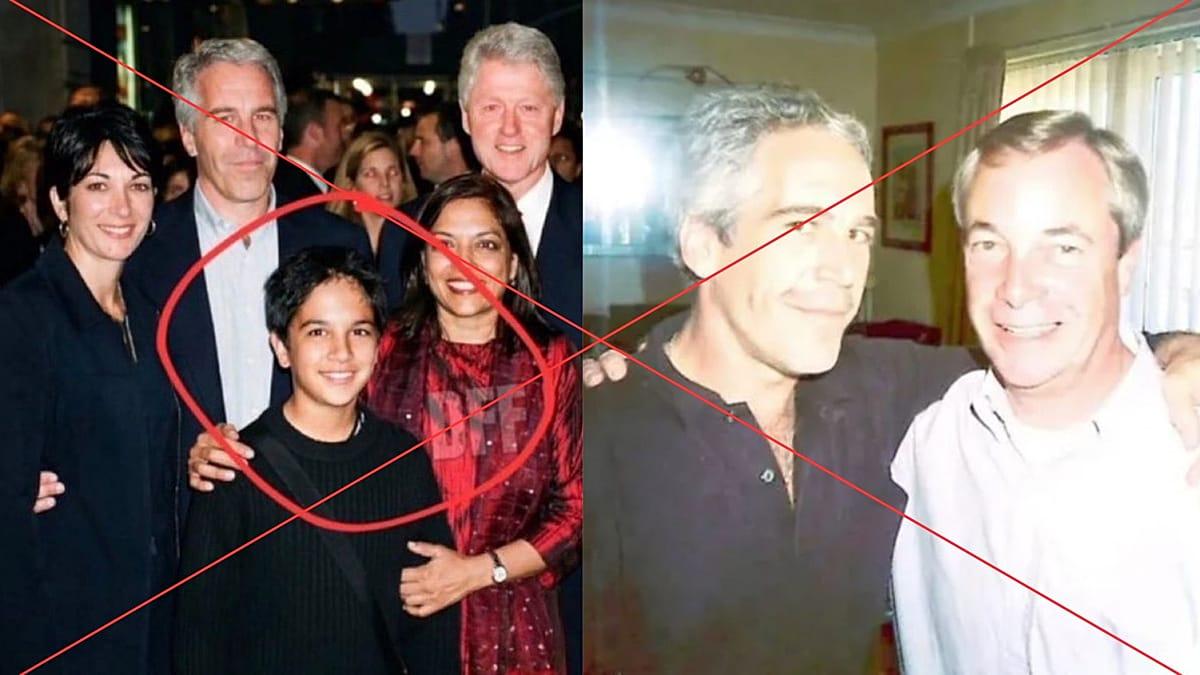

Fake photos of European and US politicians with Epstein spread online

The latest tranche of files related to the investigation into convicted sex offender Jeffrey Epstein has set the internet ablaze with AI-generated and manipulated images supposedly depicting him with politicians. Not all are real. The US Department of Justice's release of an extra 3 million pages of files related to Epstein has opened the door to intense speculation about his vast network of rich and powerful contacts. The documents, which contain images, videos, text messages and emails, have also triggered a wave of disinformation, including AI-generated images of a young Zohran Mamdani, New York's mayor, alongside Epstein.

In one doctored image, Mamdani is pictured as a child in a photograph with his mother, Mira Nair, as well as Epstein's collaborator Ghislaine Maxwell, Jeffrey Epstein, Bill Clinton, Bill Gates and Jeff Bezos. Euronews' fact-checking team, The Cube, ran the image through Google Gemini after spotting inconsistencies in it. The AI chatbot detected a SynthID on the image -- an invisible watermark developed by Google to identify AI-generated content. The photo also carries a visible "DFF" watermark. The Cube conducted a reverse image search and matched it with an X account called @DumbFckFinder, which has a "parody account" disclaimer on it. The account has spread multiple images of politicians pictured with Epstein in unrealistic contexts, such as one depicting Epstein and the late English theoretical astrophysicist Stephen Hawking, with Hawking scuba diving into a "secret tunnel".

Along with the images, some social media users shared outlandish theories, for instance suggesting that Epstein was Mamdani's father while seeking to draw similarities between their facial features. Mamdani's name was mentioned in the Epstein files five times. However, these mentions were connected to newspaper clippings rather than any potential wrongdoing. There is no evidence that Epstein ever corresponded with or wrote about Mamdani.
Many of these AI images were shared on social media alongside an email sent by US publicist Peggy Siegal to Epstein in October 2009, which featured in the files. In her message, Siegal referenced Mamdani's mother while describing the afterparty for Nair's film "Amelia", which was hosted at Ghislaine Maxwell's New York townhouse in 2009. The messages suggest that Epstein did not attend the screening. Mamdani would have been 17 at the time of the screening, not a baby, as depicted in some of the AI-altered images. The parody account also seemed to acknowledge the number of views the images garnered online as they went viral: "Damn you guys failed. I purposefully made him a baby." On 4 February, Mamdani responded to the images, stating, "at a personal level, it is incredibly difficult to see images that you know to be fake, that are patently photoshopped and AI-generated, and yet can reach across the entirety of the world in an era of misinformation."

Another image claims to show Epstein with British politician and leader of the far-right Reform UK, Nigel Farage, and was picked up by the Wrexham Labour Party group on X after it was shared widely online. The image shows Epstein and Farage with their arms around each other in a living room setting. It was shared on X and Threads with captions including "I won't be voting for Farage or Reform" and "A picture paints a thousand words." The Wrexham Labour Party group has since deleted the image. There are no reports of Epstein and Farage meeting or directly corresponding in the files. Mentions of Farage are limited to newspaper clippings and discussions of him in the context of UK politics by Steve Bannon, a former White House strategist who regularly conversed with Epstein about Europe's far-right parties, according to the tranche of documents. Farage told Sky News Australia that he "never met Epstein and never went to the island".
He was referring to Little Saint James, commonly nicknamed "Epstein Island" -- the private island in the US Virgin Islands owned by Epstein, which the financier allegedly used as a base of operations for underage sex trafficking. AI detection tools were unable to conclusively determine whether the image is AI-generated. The Cube ran the photograph through Google Gemini to look for traces of a SynthID but found no watermark. Meanwhile, other AI detection tools did not offer a clear-cut response. Indicators of AI generation include lighting that does not match the shadows on Epstein's and Farage's faces, as well as the way their shirts align unnaturally.

Macron targeted by Russian bots

Elsewhere, the Ukrainian Center for Countering Disinformation, a body affiliated with the National Security and Defence Council of Ukraine, identified the "Matryoshka" bot network spreading doctored French newspaper covers linking French President Emmanuel Macron to Epstein. One cover mimicking French daily Libération asks, "What was Emmanuel Macron doing 18 times on Epstein's island when he was France's Minister of the Economy". There is no evidence of Libération publishing this story. Although Macron is named in the files, there is also no evidence that he and Epstein ever communicated directly; most of the mentions of him are references by third parties. There is no evidence he was implicated in Epstein's sex crimes.

[3]

Images of NYC Mayor Mamdani with Jeffrey Epstein are AI-generated. Here's how we know

Multiple AI-generated photos falsely claiming to show New York City Mayor Zohran Mamdani as a child and his mother, filmmaker Mira Nair, with disgraced financier Jeffrey Epstein and his confidant Ghislaine Maxwell, along with other high-profile public figures, were shared widely on social media Monday. The images originated on an X account labeled as parody after a huge tranche of new Epstein files was released by the Justice Department on Friday. They are clearly watermarked as AI, and other elements they contain do not add up. Here's a closer look at the facts.

CLAIM: Images show Mamdani as a child and his mother with Jeffrey Epstein and other public figures linked to the disgraced financier.

THE FACTS: The images were created with artificial intelligence. They all contain a digital watermark identifying them as such and first appeared on a parody X account that says it creates "high-quality AI videos and memes." In one of the images, Mamdani and Nair appear in the front of a group photo with Maxwell, Epstein, former President Bill Clinton, Amazon founder Jeff Bezos and Microsoft founder Bill Gates. They seem to be posing at night on a crowded city street. Mamdani looks to be a preteen or young teenager. Another image supposedly shows the same group of people, minus Nair, in what appears to be a tropical setting. Epstein is pictured holding Clinton in his arms, while Maxwell has her arm around Mamdani, who appears slightly younger. Other AI-generated images circulating online depict Mamdani as a baby being held by Nair while she poses with Epstein, Clinton, Maxwell and Bezos. None of Epstein's victims have publicly accused Clinton, Gates or Bezos of being involved in his crimes. Google's Gemini app detected SynthID, a digital watermarking tool for identifying content that has been generated or altered with AI, in all the images described above. This means they were created or edited, either entirely or in part, by Google's AI models.
The X account that first posted the images describes itself as "an AI-powered meme engine" that uses "AI to create memes, songs, stories, and visuals that call things exactly how they are -- fast, loud, and impossible to ignore." An inquiry sent to the account went unanswered. However, a post by the account seems to acknowledge that it created the images. "Damn you guys failed," it reads. "I purposely made him a baby which would technically make this pic 34 years old. Yikes." The photos began circulating after an email emerged in which a publicist, Peggy Siegal, wrote to Epstein about seeing a variety of luminaries, including Clinton, Bezos and Nair, an award-winning Indian filmmaker, at a 2009 afterparty for a film held at Maxwell's townhouse. While Mamdani appears as a baby or young child in all of the images, he was 18 in 2009, when Nair is said to have attended the party.

The images have spawned related falsehoods online. For example, one claims that Epstein is Mamdani's father. This is not true -- Mamdani's father is Mahmood Mamdani, an anthropology professor at Columbia University. Another alleges that Nair's first marriage was to a relative of Epstein. It was not. She was previously married to photographer Mitch Epstein, but a search of public records and existing reporting about the two men reveals no evidence of familial ties. Epstein is a common last name among Jewish families. The NYC Mayor's Office did not respond to a request for comment. A representative for Mitch Epstein declined to comment.

[4]

AI tools fabricate Epstein images 'in seconds,' study says

Social media users have amplified AI-generated images purporting to show Jeffrey Epstein socializing with politicians such as New York Mayor Zohran Mamdani and his mother, award-winning filmmaker Mira Nair, AFP's fact-checkers have previously reported. In a new study, US disinformation watchdog NewsGuard prompted three leading image generators to create photos of Epstein with five politicians including President Donald Trump, Israeli Prime Minister Benjamin Netanyahu and French President Emmanuel Macron. Grok Imagine, a tool developed by Elon Musk's xAI, produced "convincing fakes in seconds" with all five, the study said. That included a fake but lifelike image purporting to show a younger Trump and Epstein surrounded by young girls. Trump has been photographed with Epstein at multiple social events but there is no publicly known picture of the pair in the presence of underage girls. Google's Gemini declined to generate an image depicting Epstein with Trump but produced realistic photos of the late sex offender with four other politicians -- Netanyahu and Macron as well as Ukrainian President Volodymyr Zelensky and UK Prime Minister Keir Starmer, the study said. The fabricated photos purported to show Epstein with the politicians at parties, aboard a private jet and relaxing on a beach. "The findings demonstrate the ease with which bad actors can use AI imaging tools to generate realistic-seeming viral fakes -- and why fake images have become so routine that it's difficult to tell authentic images from AI-generated images," NewsGuard said. When the watchdog prompted OpenAI's ChatGPT, it declined to produce any images showing Epstein with the politicians. In its response, ChatGPT said it is "not able to create images involving real people with sexualized depictions of minors or scenarios that imply sexual abuse."

Detecting fakes

There was no immediate response to AFP's request for comment from xAI.
Reviewing the fake images of Epstein with Mamdani and Nair -- which racked up millions of views on X -- researchers including those at AFP detected a SynthID, an invisible watermark meant to identify content created using Google's AI. A Google spokesman told AFP: "We make it easy to determine if content is made with Google AI by embedding an imperceptible SynthID watermark." The study comes after the Justice Department last week released the latest cache of so-called Epstein files -- more than three million documents, photos and videos related to its investigation into Epstein, who died from what was determined to be suicide while in custody in 2019. The Epstein affair has entangled high-profile figures across the globe, from Britain's former prince Andrew to renowned American intellectual Noam Chomsky and Norway's Crown Princess Mette-Marit. But it has also prompted a wave of disinformation. This week, a fake Trump social media post circulated across platforms, AFP's fact-checkers reported. The fabricated post purported to show Trump pledging to drop all tariffs against Canada if Prime Minister Mark Carney admitted to involvement with Epstein. AFP's review of the references to Carney in the files does not indicate any involvement with Epstein's alleged crimes.

[5]

AI tools fabricate Epstein images 'in seconds,' study says

A study released Thursday found that AI image tools can easily create convincing fake pictures of Jeffrey Epstein with world leaders, adding to a rise in manipulated images linking politicians to the convicted sex offender. AI tools can easily fabricate convincing images of Jeffrey Epstein with world leaders, a study showed Thursday, following a surge of manipulated photos falsely linking prominent politicians to the convicted sex offender. Social media users have amplified AI-generated images purporting to show the convicted sex offender socializing with politicians such as New York Mayor Zohran Mamdani and his mother, award-winning filmmaker Mira Nair, AFP's fact-checkers have previously reported. In a new study, US disinformation watchdog NewsGuard prompted three leading image generators to create photos of Epstein with five politicians including President Donald Trump, Israeli Prime Minister Benjamin Netanyahu and French President Emmanuel Macron. Grok Imagine, a tool developed by Elon Musk's xAI, produced "convincing fakes in seconds" with all five, the study said. That included a fake but lifelike image purporting to show a younger Trump and Epstein surrounded by young girls. Trump has been photographed with Epstein at multiple social events but there is no publicly known picture of the pair in the presence of underage girls. Google's Gemini declined to generate an image depicting Epstein with Trump but produced realistic photos of the late sex offender with four other politicians -- Netanyahu and Macron as well as Ukrainian President Volodymyr Zelensky and UK Prime Minister Keir Starmer, the study said. The fabricated photos purported to show Epstein with the politicians at parties, aboard a private jet and relaxing on a beach. 
"The findings demonstrate the ease with which bad actors can use AI imaging tools to generate realistic-seeming viral fakes -- and why fake images have become so routine that it's difficult to tell authentic images from AI-generated images," NewsGuard said. When the watchdog prompted OpenAI's ChatGPT, it declined to produce any images showing Epstein with the politicians. In its response, ChatGPT said it is "not able to create images involving real people with sexualized depictions of minors or scenarios that imply sexual abuse." There was no immediate response to AFP's request for comment from xAI. Reviewing the fake images of Epstein with Mamdani and Nair -- which racked up millions of views on X -- researchers including those at AFP detected a SynthID, an invisible watermark meant to identify content created using Google's AI. A Google spokesman told AFP: "We make it easy to determine if content is made with Google AI by embedding an imperceptible SynthID watermark." The study comes after the Justice Department last week released the latest cache of so-called Epstein files -- more than three million documents, photos and videos related to its investigation into Epstein, who died from what was determined to be suicide while in custody in 2019. The Epstein affair has entangled high-profile figures across the globe, from Britain's former prince Andrew to renowned American intellectual Noam Chomsky and Norway's Crown Princess Mette-Marit. But it has also prompted a wave of disinformation. This week, a fake Trump social media post circulated across platforms, AFP's fact-checkers reported. The fabricated post purported to show Trump pledging to drop all tariffs against Canada if Prime Minister Mark Carney admitted to involvement with Epstein. AFP's review of the references to Carney in the files does not indicate any involvement with Epstein's alleged crimes.

[6]

Zohran Mamdani Is The Latest Victim of Epstein Files AI Slop

If you happened to check social media in the aftermath of the Trump administration's latest drop of Epstein files, you may have encountered the following claim: New York City's recently elected socialist mayor, Zohran Mamdani, is actually the secret, biological son of convicted sex offender Jeffrey Epstein. Supposed photos depicting Mamdani at various ages, his mother (filmmaker Mira Nair), and Epstein were shared tens of thousands of times, including by prominent right-wing influencers. Sandy Hook conspiracy theorist Alex Jones even posted on X that there was a "major investigation" underway to determine the mayor's parentage. It was all bullshit -- a dumb, AI-fueled conspiracy theory apparently based on an email between a publicist and Epstein that mentioned Nair as an attendee at a promotional screening afterparty for her 2009 film Amelia, which was hosted at the home of Ghislaine Maxwell. Mayor Mamdani was born in 1991.

The episode laid bare the newest, most rapidly evolving frontier in news misinformation: the collision between the changing manner in which global audiences consume information and an artificial intelligence revolution that allows any individual to generate increasingly detailed and realistic content in just a few seconds. Nowhere has this been more apparent than throughout the Epstein files saga. For the better part of a year, the Trump administration has attempted to delay, defer, and deny public access to materials related to the case. Since the administration was forced by an act of Congress to release the files, the material has come out in dumps of hundreds of thousands, even millions of documents at a time -- including six million of them last week. The onslaught of emails, business logs, photos, testimony, videos, court documents, and personal correspondence has spread coverage of the files so thin that it's nearly impossible to parse.
Adding to the confusion, those already-muddy waters are regularly being stirred up by AI misinformation slop. In March of last year, researchers showed that a photo purporting to depict Canadian Prime Minister Mark Carney, actor Tom Hanks, and Epstein's convicted accomplice Ghislaine Maxwell on the beach together was AI-generated. In January, a viral audio clip presented as a recording of Trump berating former Rep. Marjorie Taylor Greene over her support for the release of the files was actually generated by OpenAI's video-generation software Sora. After the release of a tranche of Epstein documents containing mentions of both Trump and former President Bill Clinton, an AI-generated video of Trump patting and kissing Clinton's crotch went viral on several social media platforms. A slew of celebrities, including Oprah Winfrey, former Vice President Kamala Harris, and OpenAI CEO Sam Altman, have been caught up in the AI slop vortex swirling around the release of the Epstein files.

In the case of Mamdani, major conservative figures circulated AI-generated images purporting to show him as an infant on a beach vacation, in his mother's arms, with Epstein looming behind them. Other AI-generated images claimed to show a young Mamdani alongside his mother, Epstein, and other prominent figures named in the files. According to BBC Verify, the latter images seem to have originated from the parody account "@DumbFckFinder." Although the photo contained an AI watermark, X's AI chatbot Grok told at least one user that it was authentic. "Somebody has to say it. There is a very real possibility Zohran Mamdani is Jeffrey Epstein's biological son," a pro-Trump account wrote in an X post with over 2 million engagements and 90 thousand likes. "Grok says this photo of the young future, New York City mayor Zohran Mamdani with Jeffrey Epstein, Bill Gates, Bill Clinton and others is real," Alex Jones wrote in one of his posts. "I'm about to break huge news on this topic in the next few hours."
No news was broken, and fact-checking notes later added to his posts clarified that the images had been made "with Google Nano Banana." The episode is not particularly surprising given the extent to which the Trump administration and the MAGA movement have used generative AI to create the reality they wish existed. The impulse has been particularly visible in Minneapolis, where in just the past few weeks the administration has -- through its official social media accounts -- distributed a manipulated image of lawyer and activist Nekima Levy Armstrong during her arrest, falsely showing her in tears. The Department of Homeland Security, ICE, and Border Patrol regularly share AI-generated content depicting immigration agents as jingoistic -- decidedly Nordic-looking -- warriors for the motherland. The pattern is repeated across the administration, and Trump's near-daily barrage of Truth Social posts and reposts is typically clogged with AI slop depicting him as a king, a superhero, or literally dumping shit on his rivals.

The integration of AI into how the public engages with news events amounts to a multi-front misinformation war on people who just want the news. The fronts range from Google AI summaries serving up ludicrously incorrect answers to queries, to news outlets publishing fake books and authors after using AI to create a reading list, to the ease with which AI can create newscasts out of thin air or manipulate the image of a news personality into saying something completely fake. There is no need to invent personalities, or connections, or photos related to the Epstein files; the case itself is horrifying enough. The testimonies given by survivors are harrowing and contain plenty of leads worthy of investigation, as do the now-public communications between Epstein and his wide network of contacts and associates.
The Epstein saga as a whole calls for a sober, independent, detailed investigation into the multiple failures of the American criminal justice system that allowed him and his accomplices to continue abusing young women years after he was publicly known as a predator. No AI hallucination can even begin to imitate those horrors.


Following the Justice Department's release of over 3 million pages of Epstein files, AI-generated images have flooded social media with convincing fakes showing the convicted sex offender with world leaders. A NewsGuard study reveals how tools like Grok and Google Gemini produce realistic fabrications in seconds, while fact-checkers struggle to help users distinguish real from fake images.

AI Image Generators Produce Convincing Fake Images of Jeffrey Epstein

The Justice Department's release of over 3 million pages of documents related to convicted sex offender Jeffrey Epstein has triggered a wave of AI-generated images falsely depicting him with prominent politicians. Social media platforms, particularly X, have been flooded with fabricated photos showing Epstein alongside figures including New York City Mayor Zohran Mamdani, his mother, filmmaker Mira Nair, and other high-profile individuals [1]. The doctored images have racked up millions of views, and some accounts, including prominent right-wing influencers, have shared them as supposed evidence of ties to the late financier.

Source: Rolling Stone

A recent NewsGuard study exposed how easily AI image generators can fabricate realistic photos of Jeffrey Epstein with world leaders. The disinformation watchdog tested three leading tools by prompting them to create images of Epstein with five politicians, including President Donald Trump, Israeli Prime Minister Benjamin Netanyahu, and French President Emmanuel Macron [4]. Elon Musk's xAI tool Grok Imagine produced convincing fake images with all five politicians in seconds, including a lifelike photo purporting to show a younger Trump and Epstein surrounded by young girls, a scenario for which no publicly known photograph exists [5].

Source: Euronews

How Different AI Tools Handle Fabrication Requests

Google Gemini demonstrated mixed safeguards in the NewsGuard study. While it declined to generate an image depicting Epstein with Trump, it produced realistic photos of the late sex offender with four other politicians: Netanyahu, Macron, Ukrainian President Volodymyr Zelensky, and UK Prime Minister Keir Starmer. These fabricated photos showed Epstein with the politicians at parties, aboard a private jet, and relaxing on a beach [4]. OpenAI's ChatGPT took the most restrictive approach, declining to produce any images showing Epstein with the politicians and stating that it is "not able to create images involving real people with sexualized depictions of minors or scenarios that imply sexual abuse."

Grok's Bizarre Misidentification Reveals Deeper AI Flaws

Beyond creating fabricated content, Elon Musk's xAI chatbot Grok has shown alarming failures in basic image recognition. When an X account asked it to calculate the probability that Zohran Mamdani was related to Epstein based on facial similarities, Grok initially misidentified the NYC mayor as late-night TV host Jimmy Kimmel, even though the two look nothing alike [1]. Only after being prodded did the chatbot acknowledge its error: "Correction: That's actually Zohran Mamdani on the left, not Jimmy Kimmel -- my bad on the initial ID." The gaffe highlights how even a straightforward task like identifying a well-known figure from a well-lit headshot appears to be beyond a system that Musk has promoted as "maximally truth-seeking," despite xAI pouring billions of dollars into its development.

Source: Futurism


Methods to Detect Fabricated Images Emerge

Fact-checkers have relied on several techniques to distinguish real from fake images in the wake of this AI-powered misinformation wave. When examining the viral photos of Epstein with Mamdani and Nair, which accumulated millions of views on X, researchers detected SynthID, an invisible digital watermark embedded by Google to identify content created using its AI models [3]. The images originated from an X account labeled as parody that describes itself as "an AI-powered meme engine" [3]. Google's Gemini app detected SynthID in multiple circulating images, giving fact-checkers a quick way to confirm that they were made with Google's AI.

However, not all fabricated images can be easily identified. When fact-checkers examined a photo claiming to show Epstein with British politician Nigel Farage, which the Wrexham Labour Party group shared before deleting it, AI detection tools could not conclusively determine whether it was AI-generated [2]. Gemini found no SynthID watermark in it, yet indicators of manipulation included lighting that did not match the shadows on the two men's faces and unnaturally aligned shirts.

Why This Disinformation Wave Matters Now

The timing of these fabrications coincides with legitimate revelations from the Epstein files, creating a toxic information environment in which authentic evidence becomes harder to trust. Mamdani's name appeared five times in the released documents, but only in connection with newspaper clippings rather than any wrongdoing [2]. An email from publicist Peggy Siegal to Epstein mentioned Nair attending a 2009 afterparty for her film "Amelia" at Ghislaine Maxwell's townhouse, though the messages suggest Epstein did not attend the screening. Yet AI-generated images depicted Mamdani as a baby alongside Epstein, even though he was a teenager at the time of the 2009 party, and outlandish theories claimed Epstein was his biological father.

NewsGuard's findings "demonstrate the ease with which bad actors can use AI imaging tools to generate realistic-seeming viral fakes -- and why fake images have become so routine that it's difficult to tell authentic images from AI-generated images." Misinformation campaigns have also targeted other figures: a fabricated Trump social media post purported to pledge dropping tariffs on Canada if Prime Minister Mark Carney admitted to involvement with Epstein [4], and doctored French newspaper covers linking Emmanuel Macron to Epstein circulated widely. The Ukrainian Center for Countering Disinformation identified the "Matryoshka" bot network as spreading the Macron covers [2].

Mamdani addressed the personal toll on February 4, stating: "at a personal level, it is incredibly difficult to see images that you know to be fake, that are patently photoshopped and AI-generated, and yet can reach across the entirety of the world in an era of misinformation" [2]. The episode underscores urgent questions about platform accountability, the need for robust detection tools, and whether current safeguards can keep pace with rapidly advancing AI capabilities that let anyone fabricate damaging evidence in seconds.

Summarized by Navi
