Instagram Implements Mandatory Privacy Settings and Parental Controls for Teen Accounts

15 Sources

[1]

Instagram to automatically put teens into private accounts with increased restrictions and parental controls

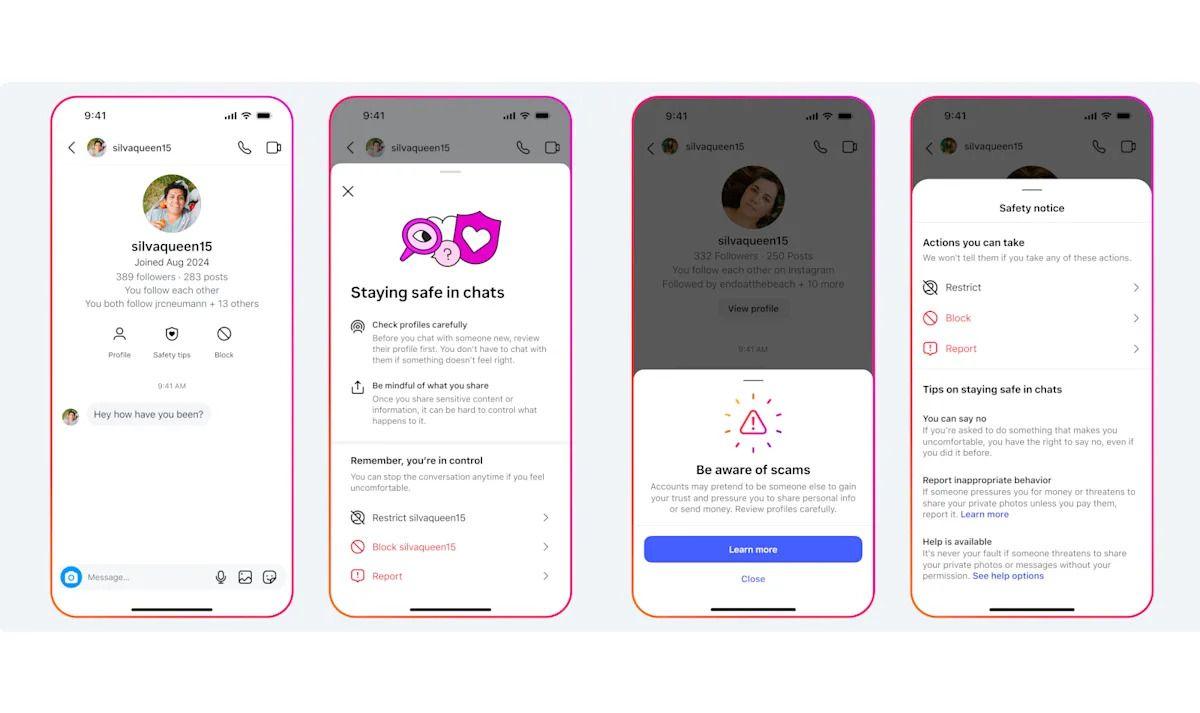

Teenagers on Instagram will soon be automatically placed in a new type of account with built-in privacy restrictions that give parents more control. On Tuesday, Meta, the social media platform's parent company, will begin rolling out its "teen accounts" feature, which aims to put all teens -- including those who may try to lie about their ages -- into private accounts that can be messaged, tagged or mentioned only by people they already follow. It's the company's most significant move yet to manage how minors use Instagram.

The feature is Meta's latest effort to combat child safety issues across its platforms. In January, at a Senate online child safety hearing, Meta CEO Mark Zuckerberg apologized to parents who said Instagram contributed to their children's suicides or exploitation. In recent years, the company has released a variety of features and opt-in restrictions aimed at teen users, including parental supervision controls. But so far, they've been applied only sporadically. Naomi Gleit, head of product at Meta, said the company has been working to bundle new and existing tools into a more standardized package.

"Everyone under 18, creators included, will be put into teen accounts," Gleit told NBC News. "They can remain public if their parent is involved and gives them permission and is supervising the account. But these are pretty big changes that we need to get right." The company has "gotten a lot of feedback from parents, mostly about a few things," Gleit said. "One, it can be simpler and easier to use, and so that's one of the goals of this launch," she said. "Two, there's some inconsistencies in the current settings that we do have. ... And then the third thing is really just wanting to have more control and tools to help their teen online."

The rollout won't be immediate, however. New users will be defaulted into teen accounts upon sign-up if they are under 18, but existing teen users may not see immediate changes.
Many around the world won't be placed into teen accounts until next year, according to a Meta fact sheet. Aside from the new privacy limits, such accounts will also be placed in the most restrictive content setting, which limits potentially sensitive content from accounts they don't follow. The accounts will have the Hidden Words feature on as well, meaning offensive words or phrases should be automatically filtered from any comments or direct messages they receive. Users under 16 will need parents' or other guardians' permission to change their new teen account settings, whereas teens 16 and older will be able to adjust them on their own unless their accounts are still linked to parental supervisors.

Meta expects teens to try to find workarounds, Gleit said, which is why it plans to test a slew of measures to prevent them from changing their ages or creating new accounts with adult birthdays. "If you have one account and then you try to create a new account on the same phone, we will ask you to age-verify, so asking for a government ID or asking for a video selfie to prove your age," Gleit said. Meta might also be able to trace teens who use different devices to create adult accounts if they, for example, register with the same email addresses or phone numbers as their original teen accounts. "And we're also working on a technology to try to predict, for people that have a stated age as an adult, do we think they're lying and they might actually be a teen," Gleit said. "For those people, we also want to ask them to age-verify and put them in teen accounts, as well."

The technology, which uses artificial intelligence and which Meta claims is a first for the industry, aims to help Instagram predict whether users are over or under age 18 -- even if their accounts list adult birthdays. The age prediction tools, scheduled to go into testing in the U.S. early next year, will scrutinize behavioral signals such as when an account was created, what kind of content and accounts it interacts with, and how the user writes. Those who Meta deems could be teens will then be asked to verify their ages. Gleit declined to give more in-depth details about how the technology will work, saying the company wants to keep teens from figuring out exactly how to circumvent its detection tools.

Parents who set up supervision controls, which require both the guardians and their teens to opt in, will now be able to see whom their teens have been messaging -- although they won't be able to read the actual conversations. They will also be able to see which topics their teens have expressed interest in, as teen accounts will be able to select specific topics they'd like to see more of on their Explore pages.

And for teen accounts, a new "Sleep Mode" (which replaces the existing Quiet Mode and Night Nudges features) will silence notifications and send autoreplies from 10 p.m. to 7 a.m. The accounts will also receive new "Daily Limit" prompts encouraging them to close Instagram after 60 minutes of use. Parental supervisors will be able to further customize which hours they wish to block their teens' access to the app or set maximum time limits for each day.

But just as teens are likely to lie about their ages on social media, Meta is similarly preparing for those who may try to hijack their parental supervision controls. Teen accounts won't be able to supervise other teen accounts, Gleit said, and there will be a limit to how many teen accounts one person can supervise. "Our approach really is to give parents control," Gleit said. "We think parents know their teens best, and so I don't think that all of those controls are necessarily right for everyone, but we want to give parents the options to choose what's right for their child."
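
The age-based rules Gleit describes can be summarized as a small decision function. This is a hypothetical model written only to illustrate the reported policy, not Meta's code: the `TeenAccount` class, its `supervised` flag and the `can_loosen_settings` function are invented names, and the sketch assumes that a linked parental supervisor is what lets a parent approve a change.

```python
from dataclasses import dataclass


@dataclass
class TeenAccount:
    """Hypothetical account record; field names are illustrative only."""
    age: int
    supervised: bool  # True if the teen has linked the account to a parent supervisor


def can_loosen_settings(account: TeenAccount, parent_approved: bool) -> bool:
    """Decide whether a request to relax the default teen settings may go through.

    Models the reported policy, not Meta's implementation:
    - under 16: a parent must approve;
    - 16 or 17 with supervision turned on: a parent must approve;
    - 16 or 17 without supervision: the teen may decide alone.
    """
    if account.age >= 18:
        # Adults are not placed in teen accounts, so no teen restriction applies.
        return True
    if account.age < 16 or account.supervised:
        # The decision rests with the supervising parent.
        return parent_approved
    # Unsupervised 16- and 17-year-olds can adjust the settings themselves.
    return True


# Usage: a 15-year-old without approval is blocked; an unsupervised 17-year-old is not.
print(can_loosen_settings(TeenAccount(age=15, supervised=False), parent_approved=False))  # False
print(can_loosen_settings(TeenAccount(age=17, supervised=False), parent_approved=False))  # True
```

Checking the supervision flag alongside age reflects the detail that turning on supervision gives parents approval rights over older teens too.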

[2]

Instagram makes 'Teen Accounts' private by default - and AI will be checking your age

Meta is restricting content, enforcing time limits, and handing control back to parents. Will the changes make younger users safer on social media?

In 2021, a Wall Street Journal investigation detailed research findings that exposed Meta's role -- particularly its Instagram platform -- in exacerbating mental health issues in teenagers. The social giant's internal documents became the subject of a Senate Judiciary Committee hearing called "Big Tech and the Online Child Exploitation Crisis" as Instagram came under intense scrutiny over the correlation between increased eating disorders, depression, and self-harm among teen users who frequent Instagram.

Since then, Meta CEO Mark Zuckerberg has been pressured to find solutions that improve online child and teen safety across his social media platforms. On Tuesday, Meta began rolling out its Teen Accounts feature for users under the age of 18. This new account type is private by default and employs a set of restrictions for minors. Users under 16 can loosen these settings only with a parent's permission, while 16- and 17-year-olds can change some of them on their own. The goal: Transform the way young people navigate and use social media. The new built-in protections and limitations, Instagram head Adam Mosseri told the New York Times, aim to address "parents' top concerns about their children online, including limiting inappropriate contact, inappropriate content, and too much screen time."

According to the Meta Newsroom, Teen Accounts are designed "to better support parents" and "give parents control" by granting them a supervisor role over their teen's accounts -- specifically users under the age of 16. However, Meta added, "If parents want more oversight over their older teen's (16+) experiences, they simply have to turn on parental supervision. Then, they can approve any changes to these settings, irrespective of their teen's age."
Moreover, the new protections include "Messaging restrictions" that place the strictest available messaging settings on young users' accounts, "so they can only be messaged by people they follow or are already connected to." "Sensitive content restrictions" will automatically limit the type of content -- such as violent content or content promoting cosmetic procedures -- that teens see in Explore and Reels. Accounts will also notify users with time limit reminders that "tell them to leave the app after 60 minutes each day," while a new "Sleep mode" will silence notifications and send auto-replies from 10 p.m. to 7 a.m.

Meta stated that the most restrictive version of its anti-bullying feature will be turned on automatically for teen users, and offensive words and phrases will be hidden from their comment sections and DM requests.

Meta also announced that it will deploy artificial intelligence (AI) to weed out those who are lying about their ages. These age prediction tools -- scheduled for testing in the US early next year -- "will scrutinize behavioral signals such as when an account was created, what kind of content and accounts it interacts with, and how the user writes. Those who Meta deems could be teens will then be asked to verify their ages." The rollout won't be immediate for all -- new users will be directed into teen accounts upon signing up if they are under 18, but existing teen users may not see immediate changes. On a global scale, many users outside the US won't see changes in their accounts until next year, according to a Meta fact sheet.
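
Because Sleep mode runs from 10 p.m. to 7 a.m., its quiet window crosses midnight, which a naive `start <= now < end` comparison gets wrong. The sketch below shows one way to test for such a window and for the 60-minute reminder. The function names and constants are assumptions made for illustration, not Instagram's implementation; the `start` and `end` parameters stand in for the custom hours that supervising parents can reportedly set.

```python
from datetime import time

# Defaults taken from the reported Sleep mode hours and daily reminder threshold.
SLEEP_START = time(22, 0)  # 10 p.m.
SLEEP_END = time(7, 0)     # 7 a.m.
DAILY_REMINDER_MINUTES = 60


def in_sleep_window(now: time, start: time = SLEEP_START, end: time = SLEEP_END) -> bool:
    """Return True if `now` falls inside the quiet window [start, end)."""
    if start <= end:
        # Window within a single day, e.g. 13:00-15:00.
        return start <= now < end
    # Window wraps past midnight, e.g. 22:00-07:00: late evening OR early morning.
    return now >= start or now < end


def needs_time_reminder(minutes_used_today: int, limit: int = DAILY_REMINDER_MINUTES) -> bool:
    """Return True once today's usage reaches the reminder threshold."""
    return minutes_used_today >= limit


print(in_sleep_window(time(23, 30)))  # True
print(in_sleep_window(time(12, 0)))   # False
print(needs_time_reminder(75))        # True
```

Splitting the check into same-day and overnight cases keeps a parent-chosen daytime block (for example, school hours) working with the same function.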

[3]

Instagram 'teen accounts' with parental controls will be mandatory for kids under 16

Parents can view who their kids are messaging with and control their privacy settings.

After years of scrutiny over its handling of teen safety on its platform, Meta is introducing a new type of account that will soon be required for all teens under 16 on Instagram. The new "teen accounts" add more parental supervision tools and automatically opt teens into stricter privacy settings that can only be adjusted with parental approval. The changes are unlikely to satisfy Meta's toughest critics, who have argued that the company puts its own profits ahead of teens' safety and wellbeing. But the changes will be significant for the app's legions of younger users who will face new restrictions on how they use the app.

With teen accounts, kids younger than 16 will be automatically opted into Instagram's strictest privacy settings. Many of these settings, like automatically private accounts, the inability to message strangers and the limiting of "sensitive content," have already been in place for teenagers on Instagram. But younger teens will now be unable to change these settings without approval from a parent. And once a parent has set up Instagram's in-app supervision tools, they'll be able to monitor which accounts their kids are exchanging messages with (parents won't see the contents of those DMs, however) as well as the types of topics their children are seeing posts about in their feeds. Parents will also have the ability to limit the amount of time their kids spend in the app by setting up "sleep mode" -- which will mute notifications or make the app inaccessible entirely -- or reminders to take breaks.

The changes, according to Meta, are meant to "give parents greater oversight of their teens' experiences." While the company has had some parental supervision features since 2022, the features were optional and required teens to opt in to the controls.
Teen accounts, on the other hand, will be mandatory for all teens younger than 16, and their more restrictive settings, such as keeping an account private, can't be adjusted without parent approval. The company says it also has a plan to find teens who have already lied about their age when setting up their Instagram account. Beginning next year, the company will use AI to detect signs an account may belong to a teen, like the age of other linked accounts and the ages on the accounts they frequently interact with, to find younger users trying to avoid its new restrictions. The app will then prompt users to verify their age. In the meantime, Meta will start designating new accounts created by 13- to 15-year-olds as "teen accounts" beginning today. The company will start switching existing teens over to the accounts over the next two months in the US, Canada, UK and Australia, with a wider rollout in the European Union planned for "later this year." Teen accounts will be available in other countries and on Meta's other apps beginning in 2025.

[4]

What are Instagram Teen Accounts? Here's what to know about the new accounts with tighter restrictions

Instagram has officially launched its new Teen Accounts feature, marking one of Meta's biggest efforts to date to bolster safety for its youngest users online. The new accounts, automatically assigned to any new users under the age of 18, place limits on what users can see and on who can message and interact with them, and enable parents to exercise more control over their teens' social media use.

Meta promised the rollout of additional safety features earlier this year after it came under fire in both the United States and Europe over allegations that its apps are addictive and have fueled a youth mental health crisis. Last October, more than 40 states filed a lawsuit in federal court claiming that the social media company profited from advertising revenue by intentionally designing features on Instagram and Facebook to maximize the time teens and children spent on the platforms. Meta said in a statement at the time that it shares the "commitment to providing teens with safe, positive experiences online," adding, "We're disappointed that instead of working productively with companies across the industry to create clear, age-appropriate standards for the many apps teens use, the attorneys general have chosen this path." Now, users will start to see some of the promised changes.

What is an Instagram Teen Account?

Every new Instagram user under the age of 18 will automatically be signed up for a Teen Account, which regulates how they view and interact with the app and gives parents specific controls over their teen's experience on the app. These accounts come with unique features designed to limit teen activity on the app, including messaging and inappropriate content restrictions, automatic private accounts and time limits set by parents. The accounts can be changed to have less strict settings, but teens under 16 will need their parent's permission to make this change.

"We know parents want to feel confident that their teens can use social media to connect with their friends and explore their interests, without having to worry about unsafe or inappropriate experiences," Instagram said in a press release. "This new experience is designed to better support parents, and give them peace of mind that their teens are safe with the right protections in place."

The content that's viewable via the Teen Account is filtered by the most stringent settings, hiding content classified as "sensitive" even when shared by someone they follow. The accounts also tell the app not to recommend anything marked as potentially sensitive in the first place. This applies not only to traditional posts but also to Reels and suggested accounts. Sensitive posts can include things like sexually suggestive content, content discussing suicide, self-harm or disordered eating, images of violence including fights caught on camera and posts discussing plastic surgery procedures. Teens will also get access to a new Explore feature that allows them to select topics they want to see more posts about, allowing them to further tailor what comes across their pages.

Messaging is also restricted by default on Teen Accounts, meaning teens can only be messaged by people they follow or are already connected to. Teen Accounts can also only be tagged or mentioned by people they follow and come equipped with Instagram's tightest anti-bullying settings, meaning offensive words and phrases will be filtered out of comments and DM requests.

How will Instagram enforce Teen Accounts?

Instagram also plans to enforce these age restrictions even when teens attempt to circumvent them by lying about their birth dates or attempting to gain parental control over each other's accounts. Account creators will be asked to verify their age using ID, and additional verification steps will be added.
Since 2022, teens who attempt to change their birthday from under the age of 18 to over 18 have been required to prove their age with a video selfie or ID check. Further steps will include using information about a teen's original account to prevent them from using a new account with an adult age, and preventing teens from linking their accounts to accounts with adult ages. Likewise, Instagram is working on an AI model that can detect whether someone is likely to be underage even if they entered an adult birthday at signup. By using clues such as the accounts and types of content an account interacts with, the AI tech, which has yet to be rolled out, may automatically switch an account over to a teen version, though account holders will be able to change this setting.

Parental control features

Parental controls are a major part of the new Teen Accounts, as Instagram said its inspiration for the new features came from feedback on parents' most common concerns. Parents can set up parental supervision on their teens' accounts, which enables them to approve or deny their teens' requests to change settings, or to allow teens to manage their settings themselves, and gives parents access to optional monitoring tools. Using these tools, parents can see the topics their teens are looking at and who they are chatting with. While parents can't read their children's messages or see which posts they view directly, they can see who their teen has messaged in the past seven days and what topics they have opted to see more of on their feeds. Other parental tools let parents limit the time their teens spend on Instagram, for example by setting a daily time limit or blocking access during set hours, such as overnight.

How to get an Instagram Teen Account

Instagram began placing all teens under 18 who sign up for a new account into Teen Accounts starting Tuesday, but existing accounts belonging to teenagers will not automatically transfer quite yet. According to the company, Instagram plans to move existing accounts owned by teenagers over to Teen Accounts within the next 60 days in the US, UK, Canada and Australia, and elsewhere starting in January.
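
The supervision view described in this report shows parents whom a teen has messaged in the past seven days but not what was said. A minimal sketch of that kind of content-free summary might look like the following. The `Message` record and `recent_contacts` function are hypothetical, the sample messages are invented, and only the seven-day window comes from the reporting.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Message:
    """Hypothetical message record; `body` is never read by the summary below."""
    recipient: str
    sent_at: datetime
    body: str


def recent_contacts(messages: list[Message], now: datetime, days: int = 7) -> list[str]:
    """Return the sorted, de-duplicated accounts messaged within the last `days` days.

    Only recipients and timestamps are inspected, so message contents stay private.
    """
    cutoff = now - timedelta(days=days)
    return sorted({m.recipient for m in messages if m.sent_at >= cutoff})


now = datetime(2024, 1, 15, 12, 0)
log = [
    Message("friend_a", now - timedelta(days=1), "see you at practice"),
    Message("friend_b", now - timedelta(days=3), "homework?"),
    Message("friend_a", now - timedelta(days=2), "thanks!"),
    Message("old_contact", now - timedelta(days=10), "hi"),
]
print(recent_contacts(log, now))  # ['friend_a', 'friend_b']
```

Returning a set of names rather than the messages themselves is the design choice that matters here: the parent-facing summary never touches the `body` field.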

[5]

Instagram's latest child safety effort: 'Teen accounts'

New rules streamline previous attempts to give parents more oversight into what their younger adolescents are doing online.

Instagram is revamping its youth safety strategy by giving parents more oversight over what their teenagers are doing online, Meta said Tuesday, as the social media giant seeks to assuage critics who say its services compromise adolescents' well-being. Meta, which owns Instagram, said it would bolster efforts to limit the time teenagers spend on the social networking site, what content they see and which strangers are able to find their accounts and talk to them. The new safety measures -- introduced by Meta as part of a new "teen accounts" program -- will also give parents more insight into the people their children are talking to and the types of posts they are consuming, while still offering the ability to set limits on their accounts.

"We are changing the experience for millions of teens on our app," Antigone Davis, Meta's global head of safety, said in an interview. "We're reimagining that parent-child relationship online in response to what we heard from parents about how they parent, or want to be able to parent."

The new rules won't immediately apply to Meta's original social network, Facebook, which is larger than Instagram but less popular among American teenagers. Meta, which also owns WhatsApp, said it would put other apps under similar new rules in the coming months. Meta's new default protections for teens are meant to help address long-standing allegations that the design of Instagram intentionally keeps teens addicted to its services while hurting their well-being. But some experts say teens may find ways around the new standards, which they say leave some safety issues unaddressed. Some critics earlier suggested that tougher rules for teens on Instagram could infringe on their privacy and free speech.
"All this is better than it was before," said Zvika Krieger, a former director of Meta's responsible innovation team who now works as a consultant for technology companies. "I don't want to say that it's worthless or cosmetic, but I do think that it doesn't solve all the problems." Instagram's new tools arrive as concerns are rising among political leaders of both major parties that social media sites are contributing to a youth mental health crisis in the United States, and that tech companies are prioritizing keeping younger users engaged over their safety and well-being. Last year, 41 states and D.C. sued Meta, alleging that the company harms children by building addictive features into Instagram and Facebook while exposing them to harmful content. School districts and families have also sued Meta over how its services have affected young people. Dozens of states have proposed or passed bills aimed at protecting kids online in recent years, including legislation barring minors from joining social media sites without their parents' consent or requiring that tech companies verify that account creators are not underage. Several states have also sought to cut down on screen time by restricting access to smartphones during school hours or displaying pop-up notifications to young users warning of mental health risks. The U.S. Senate in July passed a sweeping proposal that would require companies to take "reasonable" steps to prevent harm to children on their services such as cyberbullying, sexual exploitation or harassment. The Kids Online Safety Act would also force tech platforms to give parents and legal guardians the ability to manage minors' privacy settings and monitor or restrict how much time they spend on the sites. The federal bill has not yet passed the House and many of the state laws have been challenged and halted in court in line with industry groups' First Amendment arguments. 
Meta and other social media giants have long struggled to gain traction for their parental control tools. Meta's own internal research has demonstrated that parents often lack the time and technological understanding to properly supervise their kids' online activities. Child safety critics have argued the companies impose weak default settings for teens, while forcing parents to do the heavy lifting to protect their kids. By the end of 2022, fewer than 10 percent of teens on Instagram had enabled the parental supervision setting, The Washington Post has reported. Meta has repeatedly declined to offer statistics on how many teens are being supervised by their parents on Instagram. Other companies' parental control adoption rates are also low. Earlier this year, Snapchat CEO Evan Spiegel told Congress that 20 million teens in the United States use the ephemeral messaging app but only 400,000 have linked their accounts to their parents, representing a 2 percent adoption rate.

Under Meta's new approach, the company will force both new sign-ups and existing users under 18 into the new "teen accounts" with stricter default safety standards. Meta, which has often shied away from changing the default settings of existing teen users, expects millions of teenagers will end up in the new supervised accounts. All "teen accounts" will be set to private by default. Users who are 16 and 17 years old can make those accounts public, but users under 16 won't be able to do so without parental approval. By requiring younger teens to obtain supervision before changing any of their safety settings, the company expects more teen accounts will be supervised by their parents. "What we heard in talking to parents is how their parenting ... and involvement shifts as a teen matures," Davis said. "This is really designed to reflect that shift of the parent-child relationship."
The company plans to place teens in the new restricted accounts within 60 days in the United States, U.K., Canada and Australia, and in other regions in January. Meta also said it plans to use artificial intelligence to proactively find teens it suspects of lying about their age. As part of that effort, the company says it will more often ask suspected teenagers to verify their identity through an outside contractor, Yoti, one of several companies that ask users to take video selfies or hold up government IDs to verify their ages. Critics have expressed concerns about privacy lapses and fairness issues for youth who might not have an ID. For users who end up in Instagram's new teen accounts, the company will stop notifications between the hours of 10 p.m. and 7 a.m. That so-called Sleep Mode replaces earlier features that reminded teens to close Instagram at night. That change might not quell parents' concerns that teenagers will simply keep checking the app when they should be sleeping, Krieger said. The company will also start reminding teens to get off the app after an hour of usage a day, streamlining its existing approach to time limit reminders. Parents will be allowed to block their teens' Instagram usage after a certain amount of time, or within certain windows of time. The company is also asking teens to proactively choose the topics -- such as the arts or sports -- they favor for recommended content. Supervising parents will be able to see what topics their teens have chosen to see. Parents will now also be able to see with whom their child has been recently messaging but not the messages themselves. Previously, they could only see lists of whom their children follow, who follows them and blocked accounts. Meta's new teen accounts largely streamline and build on its other teen safety efforts. In recent years, the company has tightened its standards on showing teens less "sensitive content" such as posts that are violent or sexually suggestive.
The company also restricted adults' ability to contact them and pre-checked the private account option for new teen sign-ups.

[6]

Instagram Launches New 'Teen Accounts' with Privacy Controls Amid Growing Concerns

As the Meta-owned company comes under criticism and is accused of failing to protect children on the app, Instagram announced tighter privacy settings for younger users on Tuesday. These restrictions include mandatory 'Teen Accounts' and parental controls that limit what children can view. All Instagram accounts belonging to users under the age of 18 in the United States, United Kingdom, Canada, and Australia will become 'Teen Accounts' within 60 days and be set to private by default. Among other new privacy settings and limitations, Teen Accounts will only allow messages from users the teen follows or is already connected to. They will also put users in sleep mode between 10 p.m. and 7 a.m. to encourage sleep, and will place users in the most restrictive tier of the app's settings for viewing sensitive content.

Users who are 16 or 17 years old can adjust their settings, but the company states that users under 16 must have parental consent via their parent's Instagram account. Along with additional monitoring tools, parents will be able to see which accounts their children are messaging, though they won't be able to read the messages. Instagram said it will use artificial intelligence to proactively find teens who try to trick the platform by changing their birthday and put them into more restricted accounts.

Adam Mosseri, the head of Instagram, told the New York Times that the changes are likely to affect how teens use the app, saying the company is willing to take risks to move forward and make progress, even though the changes are definitely going to hurt teen growth and teen engagement. The new limitations will go into effect globally beginning early in 2025, and in the European Union later this year.

For years, Instagram has suggested setting limits for its younger user base. The company previously proposed a version of the app called 'Instagram Kids' intended for users under the age of 13; however, it was reportedly abandoned in 2021. In the same year, Instagram announced that accounts created by users under the age of 16 would be private by default, and that these users would not be able to change their account type to public without authorization. All users under the age of 13 are already prohibited from using the social media platform, and Instagram deletes accounts belonging to these underage users when it discovers them.

What are 'Teen Accounts'?

Teen Accounts are special user accounts for those under the age of 18, designed to provide a safer online experience. They have default privacy settings that restrict who can message them and what content they can view.

Can parents keep an eye on their adolescent's Instagram life?

Yes. Parents can keep an eye on their adolescent's activities, including whom they message, even though they are unable to read the messages themselves. For users under 16, parents also have control over specific account settings.

[7]

Instagram launches 'teen account' with enhanced parental supervision options By Invezz

Invezz.com - Meta, the parent company of Instagram, is introducing a new account setting specifically designed for users under the age of 18 in a bid to improve safety on its platform. Beginning Tuesday, teenagers in the US, UK, Canada, and Australia will automatically be placed in a restricted "teen account" with enhanced parental supervision options when signing up for Instagram. Existing accounts held by users under 18 will transition to this new setting over the next 60 days. Meta plans to roll out similar changes to teen accounts in the European Union later this year.

This move comes amidst increasing public backlash over social media's influence on young people's mental health, with lawmakers, parents, and advocacy groups criticizing tech companies for failing to protect children from harmful content and online predators. In January, the Mark Zuckerberg-led social media giant announced that it would implement new content guidelines to ensure teenagers using the platform get a secure and age-appropriate digital environment as advised by experts. However, in June, a Wall Street Journal investigation revealed that the platform was continuing to recommend adult content to underage users.

One of the most significant updates to the new teen accounts is the enhanced parental supervision options. Parents will now have the ability to oversee their children's Instagram usage by setting time limits, blocking app access during nighttime hours, and monitoring who their teens are messaging. Teens under the age of 16 will need parental permission to change their account settings, while 16 and 17-year-olds will be allowed to modify certain restrictions independently. "The three concerns we're hearing from parents are that their teens are seeing content that they don't want to see, that they're getting contacted by people they don't want to be contacted by, or that they're spending too much time on the app," explained Naomi Gleit, Meta's head of product. "Teen accounts are really focused on addressing those three concerns."

In addition to the monitoring tools, these accounts will limit "sensitive content," such as videos of violent behavior or cosmetic procedures. Meta will also implement a feature that reminds teens if they've been on Instagram for more than 60 minutes and introduces a "sleep mode," which disables notifications and sends auto-replies to messages between 10 p.m. and 7 a.m. This feature is designed to help teens manage their time on the app and avoid excessive use at night. While these restrictions are enabled by default for all teens, those aged 16 and 17 will have the option to turn them off. However, kids under 16 will need a parent's consent to adjust the settings.

The introduction of teen accounts coincides with ongoing legal battles Meta (NASDAQ:META) is facing, as dozens of US states have sued the company, accusing it of deliberately designing addictive features on Instagram and Facebook that harm young users. The lawsuits claim that Meta's platforms contribute to the worsening youth mental health crisis, with teens exposed to unhealthy amounts of screen time, harmful content, and online bullying. US Surgeon General Vivek Murthy voiced concerns last year about the pressures being placed on parents to manage their children's online experiences without adequate support from tech companies. "We're asking parents to manage a technology that's rapidly evolving, that fundamentally changes how their kids think about themselves, how they build friendships, and how they experience the world."

Meta's latest effort to improve online safety for teens follows a series of prior attempts, many of which were criticized for not going far enough. For instance, teens will still be able to bypass the 60-minute time notification if they wish to keep scrolling, unless parents enable stricter parental controls through the "parental supervision" mode.
Nick Clegg, Meta's president of global affairs, acknowledged last week that parental control features have been underutilized, saying, "One of the things we do find ... is that even when we build these controls, parents don't use them." Unlike some of Meta's other recent actions, such as the ability for EU users to opt out of having their data used to train AI models (a feature not yet available in other regions), the teen accounts are part of a global strategy. In addition to the US, UK, Canada, and Australia, Meta plans to introduce these changes across the European Union by the end of the year. Antigone Davis, Meta's director of global safety, emphasized that this new feature was driven by parental concerns rather than government mandates. "Parents everywhere are thinking about these issues," Davis told Guardian Australia. "The technology at this point is pretty much ubiquitous, and parents are thinking about it. From the perspective of youth safety, it really does make the most sense to be thinking about these kinds of things globally." The timing of Meta's announcement aligns with broader governmental efforts to regulate children's access to social media platforms. Just a week prior, the Australian government proposed new legislation to raise the age at which teens can access social media platforms to somewhere between 14 and 16. If enacted, this law would place Australia among the first countries to enforce such a ban, with other nations like the UK monitoring its progress closely. As countries like Australia and the UK explore further restrictions on social media for teens, Meta's new teen accounts reflect a growing global awareness of the need for greater online protections for young users. With its new features, Meta hopes to strike a balance between empowering parents and keeping Instagram a safe space for teens.

[8]

How to set up monitored 'teen accounts' on social media

Instagram is once again rolling out safety features for underage users -- this time, automatic "teen accounts." Those under 18 will automatically be subject to limitations on who can message them, how much "sensitive" content is recommended and who can see their profiles. Users under 16 won't be able to change these settings without permission from a parent account -- unless they successfully misrepresent their age at sign-up. (Parents will receive a notification about the attempted change, which they can approve or deny.) It's the latest in a number of incremental changes to the app's privacy settings and algorithmic recommendations in the name of child safety -- some of which debuted just days before congressional hearings about risks to kids online. But Meta-owned Instagram isn't the only app that claims to be beefing up its protections for young users. Snapchat, YouTube and Spotify have also recently introduced new settings for parents to keep an eye on their teens. Many of these features require some setup, so parents must take the initiative to turn them on. It's still unclear how effective these features are at minimizing negative experiences for young people on social media -- and how often teens can work around them. In 2022, for instance, Instagram added an age verification step when underage accounts tried to change their birthdays to appear older -- but kids could still choose whatever birthday they wanted when setting up a new account. In conjunction with the new teen accounts, Meta says it will require an ID check any time teens try to use an adult account, which could stop them from spinning up fake parents. Here's how to use the latest teen safety features from Instagram, Snapchat and YouTube.

Teen accounts on Instagram

Your teen's account will automatically be subject to age-based restrictions -- Instagram even claims it will use artificial intelligence to scan accounts for teens lying about their age.
(This may also help advertisers, who can target teen accounts based on age and geography.) But you'll need to visit Meta's Family Center (familycenter.meta.com) if you want to tweak any of the settings or prevent your 16- or 17-year-old from opting out of the restrictions. For example: Teen accounts come with a new "sleep mode" that mutes notifications automatically between 10 p.m. and 7 a.m., but your teen can still scroll away at night if you don't select the "block teen from Instagram" option under Time Management. You can adjust the time windows when the app is blocked or set daily time limits for Instagram use. Now, Family Center will also show which accounts your teen has been direct messaging on Instagram, though not the content of those messages.

Public posting on Snapchat

This month, Snapchat started letting 16- and 17-year-old users post publicly, rather than only in private stories and messages to friends. They can create public posts from their stories or post short-form "Spotlight" videos. With the change come some built-in limitations (teens' public posts won't get recommended to people they don't share any social connections with, the company says) as well as oversight options for parents. To use them, you'll need to create an account in the Snapchat app. Then, go to the main tab and tap the Settings gear icon in the top right corner. Scroll down to the cluster of options under "Privacy Controls" and select Family Center. Tap Continue and invite your teen to link accounts. Once they accept, you'll be able to go to the Content & Privacy section of Family Center and see whether your teen has been posting publicly. The idea is to talk with them about the benefits and risks of sharing publicly, including possible ramifications for school sports, college admissions and future jobs.
Public posting on YouTube

This month, YouTube, the most popular app among teens, according to 2023 data from Pew Research, launched linked accounts that give parents more visibility into their teens' activity on the app. The update is still rolling out, so you might have to wait a few weeks before it shows up. The new feature focuses mainly on teens who share their own videos on YouTube, allowing parents to see the number of video uploads, subscriptions and comments on teens' accounts. It will also alert parents if teens start a livestream or post a new video. Parents still can't see which videos their teens watch, though last year the app said it would cut back on recommending videos that "could be problematic" if viewed repeatedly, such as content comparing different bodies. To set up linked accounts, you'll need to create a YouTube account, go to Settings in the main menu, then scroll down to Family Center.

[9]

Instagram is restricting teen accounts -- and blocking sneaky workarounds

Starting this week, Instagram will begin automatically making youth accounts private, with the most restrictive settings. Younger teens won't be able to get around the restrictions by changing settings or creating adult accounts with fake birth dates. Account restrictions for teens include direct messaging only with people they follow or are already connected to, a reduction in adult-oriented content, automatic muting during nighttime hours and more. Building on changes to teen accounts it announced earlier this year -- and following years of criticism about child safety -- the Meta Platforms-owned social network said it would shift 100 million teenagers in the U.S. and around the world into the guardrailed accounts. The move applies to all accounts with an under-18 birth date, though teens 16 and older will be able to change their settings without parental approval. Any new teen accounts will be similarly restricted starting Tuesday. Parents will no longer have to manually enter those settings using Instagram's parental supervision tool. Teens are unlikely to be happy with the changes. Instagram is expecting to lose "some meaningful amount of teen growth and teen engagement," Instagram head Adam Mosseri said in an interview. "I have to believe earning some trust from parents and giving parents peace of mind will help business in the long run, but it will certainly hurt in the short term." Instagram plans to go even further starting next year: using artificial intelligence, it said, it will identify children who are lying about their age and automatically place them into the restricted teen accounts. Instagram and other social-media companies are under pressure from lawmakers and parents to protect their youngest users. Social media has contributed to bullying, eating disorders, and anxiety and depression stemming from social comparisons, according to parents, doctors and researchers. The U.S. Surgeon General last year issued an advisory about the effects social media has on youth mental health. Mosseri said the changes aren't in response to legal or regulatory pressure, but are happening because Instagram has arrived at what it feels is the right approach to teen safety. With the new accounts, teens won't be able to see sensitive content, such as posts or videos that show people fighting or that promote cosmetic procedures -- and Instagram's algorithm won't recommend sexually suggestive content or content about suicide and self-harm. A Wall Street Journal investigation earlier this summer revealed that sexual videos were being recommended to teen accounts. Mosseri said Instagram has worked hard to ensure that the platform doesn't show teens such content. The new teen default settings should significantly reduce the chances of that, he added. Teen accounts will receive notifications telling them to close the app after an hour. (They can ignore them.) Sleep mode, which mutes notifications overnight, will be automatically enabled. Teens 15 and under will need a parent's permission -- via the parental supervision tool -- to change the more-restrictive settings. "If you want, you can override the default settings," Mosseri said of parents. "But if you don't have time to do that, you don't have to do anything." If teens try to bypass the changes by creating new accounts with an older birth date, they will be prompted to either show an ID or upload a video selfie for Instagram's face-based age-prediction tool. The company already requires age verification when young people attempt to change the birth date on their accounts to say they are over 18. Teens 16 and older will be able to change the restrictive settings themselves, unless their account is already under parental supervision. Instagram's reasoning is that older teens are more mature and need more autonomy (which is one reason I have said teens should wait until 16 to be on social media).
The changes will roll out to teens in the U.S., the U.K., Canada and Australia within the next two months and to teens in Europe later this year. The changes will apply to teens in the rest of the world starting in January. Early next year in the U.S., Meta plans to use its adult classifier AI model to determine which Instagram account holders really are teens. The company, which also owns Facebook and WhatsApp, has already used that model to prevent teens from accessing such adult features as Facebook Dating. Verifying users' ages has been considered one answer to safety-related problems on social media. Mosseri said he still believes Apple and Alphabet's Google -- which make the operating systems for most phones -- should provide age verification at the device level. Apple has said that social-media companies are best positioned to verify age and that sharing its users' ages with third-party apps could go against privacy expectations. Mosseri said AI models that predict age aren't perfect. Meta's AI is trained on an account's interactions with other users and content, among other signals, to determine whether the birth date is false. When the model begins policing, it will look for accounts held by people who are likely under 18. Those accounts will be placed under the teen restrictions, an Instagram spokeswoman said. If the AI gets it wrong, the user will have an opportunity to appeal, which will also help further train the AI. Instagram and other social-media platforms prohibit children under 13 from using their services -- but it is an open secret that many kids still sign up. Mosseri said he hopes the AI model will eventually be able to identify those underage users. If they can't prove their eligibility, Instagram will disable their accounts, he said.

[10]

Instagram makes all teen accounts private, in a highly scrutinized push for child safety

Image: Two of the features in Instagram's Teen Accounts, including the ability to set daily use limits and a tool that lets parents see whom their teens are messaging. (Provided by Meta)

Instagram on Tuesday unveiled a round of changes that will make the accounts of millions of teenagers private, enhance parental supervision and set messaging restrictions as the default in an effort to shield kids from harm. Meta said users under 16 will now need a parent's approval to change the restricted settings, dubbed "Teen Accounts," which filter out offensive words and limit who can contact them. "It's addressing the same three concerns we're hearing from parents around unwanted contact, inappropriate contact and time spent," said Naomi Gleit, Meta's head of product, in an interview with NPR. Because all teen accounts are being switched to private, teens can only be messaged or tagged by people they follow. Content from accounts they don't follow will be in the most restrictive setting, and the app will send periodic screen time reminders under a revamped "take a break" feature. Instagram, which is used by more than 2 billion people globally, has been under intensifying scrutiny over its failure to adequately address a broad range of harms, including the app's role in fueling the youth mental health crisis and the promotion of child sexualization. States have sued Meta over Instagram's "dopamine manipulating" features that authorities say have led to an entire generation becoming hooked on the app. In January, Meta chief executive Mark Zuckerberg stood up during a Congressional hearing and apologized to parents of kids who died of causes related to social media, like those who died by suicide following online harassment, a dramatic moment that underscored the escalating pressure the CEO has faced over child safety concerns.
The new features announced on Tuesday follow other child safety measures Meta has recently released, including in January, when the company said content involving self-harm, eating disorders and nudity would be blocked for teen users. Meta's push comes as Congress dithers on passing the Kids Online Safety Act, or KOSA, a bill that would require social media companies to do more to prevent bullying, sexual exploitation and the spread of harmful content about eating disorders and substance abuse. The measure passed in the Senate but hit a snag in the House over concerns that the regulation would infringe on the free speech of young people, although the effort has been championed by child safety advocates. If it passes, KOSA would be the first new Congressional legislation to protect kids online since the 1990s. Meta has opposed parts of the bill. Jason Kelley with the Electronic Frontier Foundation said the new Instagram policies seem intended to head off the introduction of additional regulations at a time when bipartisan support has coalesced around holding Big Tech to account. "This change is saying, 'We're already doing a lot of the things KOSA would require,'" Kelley said. "A lot of the time, a company like Meta does the legislation's requirements on their own, so they wouldn't be required to by law." Meta requires users to be at least 13 years old to create an account. Social media researchers, however, have long noted that young people can lie about their age to get on the platform and may have multiple fake accounts, known as "finstas," to avoid detection by their parents. Officials at Meta say they have built new artificial intelligence systems to detect teens who lie about their age. This is in addition to working with British company Yoti, which analyzes someone's face from their photos and estimates an age. Meta has partnered with the company since 2022.
Since then, Meta has required teens to prove their age by submitting a video selfie or a form of identification. Now, Meta says, if a young person tries to log into a new account with an adult birthday, it will place them in the teen protected settings. In January, the Washington Post reported that Meta's own internal research found that few parents used parental controls, with under 10 percent of teens on Instagram using the feature. Child safety advocates have long criticized parental controls, which other apps like TikTok, Snapchat and Google also make available, because they put the onus on parents, not the companies, to assume responsibility for the platform. While parental supervision on Instagram still requires both a teen and parent to opt in, the new policies add a feature that allows parents to see who their teens have been recently messaging (though not the content of the messages) and what subjects they are exploring on the app. Meta is hoping to avoid one worrisome situation: someone who is not a parent finding a way to oversee a teen's account. "If we determine a parent or guardian is not eligible, they are blocked from the supervision experience," Meta wrote in a white paper about Tuesday's new child safety measures. But misuse is still possible among rightful parents, said Kelley with the Electronic Frontier Foundation. He said if parents are abusive or try to prevent their kids from searching for information about their political beliefs, religion or sexual identity, having more options to snoop could cause trouble. "I think that definitely could lead to a lot of problems, especially for young people in abusive households who may require them to have these parentally supervised accounts, and young people who are exploring their identities," Kelley said. "In already-problematic situations, it could raise the risk for young people."
Meta points out that parents will be limited to viewing about three dozen topics that their teens are interested in, including things like outdoor activities, animals and music. Meta says the topic-viewing is less about parents surveilling kids and more about learning about a child's curiosities. Still, some of the new Instagram features for teens will be aimed at filtering out sensitive content from the app's Explore Page and on Reels, the app's short-form video service. Teens have long mastered ways of avoiding detection by algorithms. Kelley points out that many use what's known as "algospeak," or ways of evading automated take-down systems, like writing "unalive" to refer to a death, or "corn" as a way of discussing pornography. "Kids are savvy, and algospeak will continue to evolve," Kelley said. "It will continue to be an endless cat-and-mouse game."

[11]

How to set up monitored 'teen accounts' on social media

How to manage a new Instagram "teen account" and other tips for Snapchat and YouTube.

Instagram is once again rolling out safety features for underage users -- this time, automatic "teen accounts." Those under 18 will automatically be subject to limitations on who can message them, how much "sensitive" content is recommended and who can see their profiles. Users under 16 won't be able to change these settings without permission from a parent account -- unless they successfully misrepresent their age at sign-up. (Parents will receive a notification about the attempted change, which they can approve or deny.) It's the latest in a number of incremental changes to the app's privacy settings and algorithmic recommendations in the name of child safety -- some of which debuted just days before congressional hearings about risks to kids online. But Meta-owned Instagram isn't the only app that claims to be beefing up its protections for young users. Snapchat, YouTube and Spotify have also recently introduced new settings for parents to keep an eye on their teens. Many of these features require some setup, so parents must take the initiative to turn them on. It's still unclear how effective these features are at minimizing negative experiences for young people on social media -- and how often teens can work around them. In 2022, for instance, Instagram added an age verification step when underage accounts tried to change their birthdays to appear older -- but kids could still choose whatever birthday they wanted when setting up a new account. In conjunction with the new teen accounts, Meta says it will require an ID check any time teens try to use an adult account, which could stop them from spinning up fake parents. Here's how to use the latest teen safety features from Instagram, Snapchat and YouTube.
Teen accounts on Instagram

Your teen's account will automatically be subject to age-based restrictions -- Instagram even claims it will use artificial intelligence to scan accounts for teens lying about their age. (This may also help advertisers, who can target teen accounts based on age and geography.) But you'll need to visit Meta's Family Center if you want to tweak any of the settings or prevent your 16- or 17-year-old from opting out of the restrictions. For example: Teen accounts come with a new "sleep mode" that mutes notifications automatically between 10 p.m. and 7 a.m., but your teen can still scroll away at night if you don't select the "block teen from Instagram" option under Time Management. You can adjust the time windows when the app is blocked or set daily time limits for Instagram use. Now, Family Center will also show which accounts your teen has been direct messaging on Instagram, though not the content of those messages.

Public posting on Snapchat

This month, Snapchat started letting 16- and 17-year-old users post publicly, rather than only in private stories and messages to friends. They can create public posts from their stories or post short-form "Spotlight" videos. With the change come some built-in limitations (teens' public posts won't get recommended to people they don't share any social connections with, the company says) as well as oversight options for parents. To use them, you'll need to create an account in the Snapchat app. Then, go to the main tab and tap the Settings gear icon in the top right corner. Scroll down to the cluster of options under "Privacy Controls" and select Family Center. Tap Continue and invite your teen to link accounts. Once they accept, you'll be able to go to the Content & Privacy section of Family Center and see whether your teen has been posting publicly.
The idea is to talk with them about the benefits and risks of sharing publicly, including possible ramifications for school sports, college admissions and future jobs.

Public posting on YouTube

This month, YouTube, the most popular app among teens, according to 2023 data from Pew Research, launched linked accounts that give parents more visibility into their teens' activity on the app. The update is still rolling out, so you might have to wait a few weeks before it shows up. The new feature focuses mainly on teens who share their own videos on YouTube, allowing parents to see the number of video uploads, subscriptions and comments on teens' accounts. It will also alert parents if teens start a live stream or post a new video. Parents still can't see which videos their teens watch, though last year the app said it would cut back on recommending videos that "could be problematic" if viewed repeatedly, such as content comparing different bodies. To set up linked accounts, you'll need to create a YouTube account, go to Settings in the main menu, then scroll down to Family Center.

[12]

Instagram Introduces 'Teen Accounts' To Be The Safety Net For Young Users, Restricting DMs, Limiting App Usage And More

Many companies are working to monitor teenagers' social media use as parents grow more concerned about their children's safety online. Instagram has been working on automatic protections for teen users to put parents at ease. The platform has introduced a new safety net that places users under 18 into more restrictive, private accounts, whether they joined recently or have been on the platform for a while. Instagram has gained massive popularity as a social media platform, including among young people, and parents of teenagers have expressed concerns about monitoring their children's usage and how much of the platform they can access. To address this, Instagram is taking a holistic approach by introducing Teen Accounts for users under 18, along with a set of privacy features. The restrictions are automatically applied to young users, and all teenagers' accounts are private by default. Stricter messaging settings also prevent strangers from sending DMs to young users. A Sleep Mode feature mutes notifications at night, from 10 PM to 7 AM, to limit teens' social media use. Teens will be able to select only age-appropriate topics, which then appear on the Explore page and in the platform's recommendations. Alerts will also remind young users to use Instagram sparingly and to close the app. The company is tightening controls on the teen side while giving more oversight to parents who want to keep tabs on their kids' activity on the app. Parents will be able to see who their child has been messaging in the last week and the topics they have been viewing.
Teenagers aged 16 and 17 can change some of the settings, but younger teens need their parents' permission to make any changes. Instagram's teen accounts are being rolled out to users in the UK, U.S., Canada and Australia. To keep young users from relying on workarounds, Instagram now has more ways to verify age, such as uploading an ID card or a video selfie. Instagram will also use AI to estimate a user's age from other information on the platform, though this requires a multi-layered approach to ensure accuracy.

[13]

Instagram is rolling out Teen Accounts by default. It's about time.

The teen settings will automatically apply to newly made accounts and existing ones. Nick Clegg, head of global policy at Meta, said that just offering parents the option to use content controls hasn't worked. "Even when we build these controls, parents don't use them. So we have a bit of a behavioral issue," Clegg said at an event last week. "We as an engineering company might build these things, and then we say at events like this: 'Oh, we've given parents choices to restrict the amount of time kids are [online]' -- parents don't use it." It's not hard to imagine why the existing parental controls aren't popular. They're buried deep in the app settings, and parents have to know they exist in the first place. Well-meaning parents who care deeply about their children's safety and mental health may have simply never known it was possible to set up these kinds of controls. Although some of these features already existed in some form, this is a big change: it rolls all these piecemeal parental control features into one thing. Crucially, it's turned on by default. Teen Accounts will have "Sleep Mode" at night -- and at all times of the day, the accounts will show a reminder to take a break after an hour of use. Accounts will default to private settings and won't allow strangers to message or tag them. For younger teens, parents can see whom their children direct message with -- but won't be able to read their DMs. Teens under 16 will need parental permission to opt out of these more restrictive settings. Accounts of 16- and 17-year-olds will automatically default to Teen Accounts, but they'll be able to change the settings on their own (unless a parent has already signed onto parental controls).
And although age is self-reported, Meta already has been using AI to help detect if someone is under 18. It says it is building tools to find accounts where kids lied about their age at signup. Meta certainly is aware that it has a perception problem with teen safety. Instagram head Adam Mosseri said in an internal email that was unearthed in recent lawsuits filed by a group of states against the company: "Social comparison is to Instagram [what] election interference is to Facebook." (Meta has long believed that the election interference of 2016 was overblown in public perception -- my interpretation of Mosseri's statement is that he thinks this issue is overblown by the media, but that the controversy is sticking around.) Last year, Meta published a blog post saying that it wanted regulators to force Apple and Google to be in charge of creating parental permissions at the app store level. That would relieve some of Meta's burden -- but Apple reportedly was firmly against this, sending lobbyists to fight off proposed state laws requiring the company to age-verify users. The US Surgeon General recently suggested that social media should come with a warning label for teens. And the series of lawsuits filed against Meta by various state attorneys general have highlighted the potential harms that can come to teens using social media. There's a burst of interest in banning phones from schools. Making a huge, sweeping, default change to how Instagram handles teen users is a smart move for Meta, which has the burden of needing to prove to parents and lawmakers that it is making real change.

[14]

Meta to introduce 'teen accounts' to Instagram as governments consider social media age limits

Meta says teen accounts will apply to new users under 16 and restrictions will eventually be extended to existing accounts used by teenagers

Meta is putting Instagram users under the age of 18 into new "teen accounts" to give parents greater control over their activities, including the ability to block children from using the app at night. In an announcement made a week after the Australian government proposed restricting children from accessing such platforms, Meta says it is launching teen accounts for Instagram that will apply to new users. The setting will then be extended to existing accounts held by teenagers over time. Changes under the teen account setting include giving parents the ability to set daily time limits for using the app, block teens from using Instagram at certain times, see the accounts their child is messaging and view the content categories their child is viewing. Teenagers signing up to Instagram are already placed by default into the strictest privacy settings, which include barring adults from messaging teens who don't follow them and muting notifications at night. However, under the new "teen account" feature, users under the age of 16 will now need parental permission to change those settings, while 16- and 17-year-olds defaulted into the new features will be able to change them independently. Once an under-16 tries to change their settings, the parental supervision features will allow adults to set new time limits, block access at night and view whom their child is exchanging messages with. The announcement came after the Australian government last week announced plans to introduce legislation to parliament by the end of the year to raise the minimum age at which children can access social media to an as-yet-undefined age between 14 and 16. 
But unlike other actions the company has taken recently - including allowing EU users to opt out of having their data used to train its AI model but not offering a similar option in Australia - Meta's move is global and will apply to the US, UK and Canada as well as Australia. Meta's director of global safety, Antigone Davis, said that the decision to introduce teen accounts was driven by parents and not by any government legislation or proposals. "Parents everywhere are thinking about these issues," Davis told Guardian Australia. "The technology at this point is pretty much ubiquitous, and parents are thinking about it. From the perspective of youth safety, it really does make the most sense to be thinking about these kinds of things globally and addressing parents' concerns globally." Davis did not rule out bringing the changes to Facebook in the future but said the company would examine what measures could make sense for different apps. The Australian prime minister, Anthony Albanese, said the key motivation for raising the age at which teenagers can access social media was to give them "real experiences". "Well what we want to [do] is to get our kids off their devices and on to the footy fields or the netball courts, to get them interacting with real people, having real experiences," he told Channel Seven's Sunrise program. "And we know that social media is doing social harm." But Davis said teenagers see social media as also providing "real" experiences for them. "For the teen who plays soccer and is on the soccer team and is trying to perfect a particular kick or a particular pass, they're going to use social media to figure that out, and in a way that we might not have done, and in some ways that's the real value," she said. "I think they move much more fluidly through these apps and their online and offline world. I don't think they make this that separation." 
If the Australian proposal goes ahead, the country could be one of the first to bring a ban into effect. The UK technology secretary, Peter Kyle, last week said he was keeping a close eye on how the Australian model may work and had an open mind about whether the UK may follow in the future. Existing private account settings for teens that will carry over into the new teen account feature include under-18s needing to accept new followers, being placed into the most restrictive sensitive content settings and having offensive words and phrases filtered out of posts and messages. The changes also come after Nick Clegg, a senior executive at Instagram's parent, Meta, said parents don't use parental supervision features. "One of the things we do find ... is that even when we build these controls, parents don't use them," he said last week.

[15]

Instagram Is Finally Doing Right by Teens

After years of pressure and criticism, the app is making major changes. Teenagers are said to live on their phones, and one of the places where they spend the most time is Instagram. For many years, the perception has been that they are totally unsupervised there, much to their detriment. That may be changing: Meta, which owns Instagram, announced today that teenagers who use the app will be subject to a slew of new restrictions, as well as increased parental oversight. Under the new policy, accounts made or owned by anyone under the age of 18 will have limited functionality by default -- a bid, the company says, to give parents "peace of mind that their teens are safe with the right protections in place." These changes, many would argue, are long overdue. For years, people have worried about the effects that unfettered and unsupervised social-media use may have on young people -- that these platforms may contribute to depression, anxiety, severe body-image issues, and even suicide risk. Meta has been under the microscope particularly since the former Facebook data scientist Frances Haugen leaked a trove of internal documents in 2021, some of which had to do with the experiences of teens on Instagram and Facebook. Subsequently, Meta and other social-media companies were hit by a wave of lawsuits related to alleged damage that the platforms have done to adolescents; politicians on both the right and the left suggested it might be a good idea to require parental consent for children to use algorithmic feeds, or to prohibit social-media use for younger teenagers altogether. "Facebook is not interested in making significant changes to improve kids' safety on their platforms," Marsha Blackburn, a Republican senator from Tennessee, said during one of Haugen's congressional appearances. "At least, nothing that would result in losing eyeballs on posts or decreasing their ad revenue." Now Meta is trying to prove otherwise. 
The new safeguards will almost certainly make for a less engaging Instagram for minors. The new "Teen" accounts are private by default, meaning that their posts cannot be viewed by anyone who is not an approved follower, and they can receive messages only from accounts they follow "or are already connected to." Teens will also receive prompts to close the app after 60 minutes of use, and their accounts will automatically be in "sleep mode," which mutes notifications, from 10 p.m. to 7 a.m. (The app would still be usable during these hours, however, and teens can choose to check their direct messages at any time.) Additionally, these accounts will be subject to Instagram's "most restrictive" content filter by default, and those under 16 will be unable to change the setting without parental permission. (Meta already sets this filter for younger users, though until now, those users have been free to change it themselves.) In a press release, Meta notes that the filter should limit teens' exposure to content showing "people fighting" or promoting "cosmetic procedures," for example. Some flexibility is built into the new system. Although Instagram will enroll all teenage users under 18 into this new program, those who are 16 and older can change their default settings -- disabling sleep mode, say. (Quite obviously, teenagers could simply lie about their age when creating an account in the first place -- more on that in a minute.) If younger teens want to make changes, they will have to add a parent or guardian through the app's settings and make any tweak with their agreement. 
Separately, an expanded parental-supervision tool -- which both parent and teen have to opt into -- allows parents and guardians to see whom their teenager has messaged in the past seven days (though they can't read the content of those messages), set daily time limits on the app (to be enforced with either a pop-up reminder or a hard shutdown), and block Instagram for preset periods during the day (such as school hours). "On the face of it, it's what a lot of people have advocated for a long time," Candice L. Odgers, the associate dean for research and a professor of psychological science and informatics at UC Irvine, told me. (Earlier this year, Odgers wrote an article for The Atlantic about her research on children's use of digital technology, which argued that extreme rhetoric about the supposed harmful effects of social media may be damaging in itself.) I gave her only a brief summary of Instagram's update, details of which were not yet public when we spoke; she commented that the default settings may be the most significant development here. "For a long time, what we've said is it's too much of an onus put on parents, adolescents, and caregivers," she told me. "The closer we can get to safety by design ... the better it's going to be for everybody." Jonathan Haidt, a social psychologist at NYU's Stern School of Business and one of the most well-known and influential voices on the topic of teenage social-media use, has argued that these apps are out-and-out "dangerous" for young people. His latest book, titled The Anxious Generation: How the Great Rewiring of Childhood Is Causing an Epidemic of Mental Illness, makes this argument at length and is a best seller. (Haidt is also a regular contributor to The Atlantic.) But even he saw today's changes as a "big step in the right direction" and told me he is "very encouraged" by them. "Meta is the big fish," he said. 
"For Meta to move first, I think, is a very good sign, and it's likely to encourage other platforms to treat teens differently." (He did qualify his optimism, saying that young people will probably still be on their phone way too much. But "at least it would remove some of the worst things, like being contacted by strange men.") Liza Crenshaw, a spokesperson on the youth and well-being team at Meta, told me that the company was focused on responding to feedback from parents when it created these features. "I don't really know that we're trying to solve for anything else other than what we hear from parents that they want," she told me. She said the company had interviewed parents who had asked for more ways to be involved in their teens' use of the app, and wanted to see safety features turned on by default. Odgers praised that approach in theory, noting that as a parent herself, it's better to have the most restrictive settings in place and then talk about lifting some of them over time and through negotiation. But, she added, "the devil is in the details." The most obvious issue with Instagram's new approach is age verification. The company has already experimented with using facial-analysis software to estimate users' ages and apply some restrictions to younger users' accounts. The next step is to use it to help them place accounts in the "Teen" category in situations where a younger teen may be trying to skirt restrictions. If the system thinks a user is lying about their age, it can ask them to verify their age with an ID or via a "facial estimation" tool, which involves an AI analysis of someone's "biometric selfie." Jonathan Haidt: Get phones out of schools now There are other wrinkles. To prevent a situation in which, for example, a 20-year-old signs up to be the parent-guardian giving permission for a dozen of their 16-year-old sibling's friends to turn off restrictive settings, Meta says it will cap the number of accounts that one person can supervise. 
Crenshaw declined to be more specific about what that cap will be, saying that Meta won't be sharing all of the ways it will combat circumvention of the parental-supervision tool. She also acknowledged that the company will have to be careful not to punish teens who don't have traditional parent-guardians in their lives, but said she couldn't give details on how Instagram will make these distinctions. Still, many concerned parents may see these features as cause for celebration. Meta certainly does. This morning, the company is holding a three-hour announcement event at its New York office hosted by the actor Jessica Alba, and on Thursday night, there will be a party at Public Records, a popular music venue in Brooklyn. The celebrations could be in honor of common sense prevailing: Scientists broadly agree that there is enough evidence to cause concern about the relationship between social-media use and depression and anxiety, particularly among younger teenage girls. They have disagreed on what to do about it, but today's measures represent a good-faith effort to do something.


Instagram announces significant changes to protect teen users, including automatic private accounts, increased restrictions, and mandatory parental controls for users under 16. The move comes amid growing concerns about online safety for young users.

Instagram's New Teen Safety Measures

In a significant move to enhance online safety for younger users, Instagram has announced a series of changes that will automatically place teen accounts into more restrictive settings. The social media giant, owned by Meta, will now make all accounts for users under 18 private by default and implement mandatory parental controls for users under 16 [1].

Automatic Private Accounts and Increased Restrictions

Under the new policy, teen accounts will be set to private automatically, limiting who can see their content and interact with them. The change applies to new accounts for users under 18 immediately and will be extended to existing teen accounts over time. Additionally, Instagram will implement stricter controls on direct messages, so that teens can be messaged only by people they already follow [2].

Mandatory Parental Controls

Perhaps the most significant change is the introduction of mandatory parental controls for users under 16, who will need a parent's or guardian's permission to change their default settings. Parents or guardians will also be able to monitor their teen's activity on the platform, including setting time limits and receiving notifications about potentially harmful content [3].

AI-Powered Age Verification

To ensure compliance with these new measures, Instagram will employ artificial intelligence to verify users' ages. This technology will analyze various factors, including user behavior and content, to determine whether an account belongs to a teen [2].

Response to Growing Concerns

These changes come in response to increasing scrutiny from lawmakers, regulators, and child safety advocates regarding the impact of social media on young users. Meta, Instagram's parent company, has faced criticism for not doing enough to protect teens from harmful content and online predators [4].

Industry-Wide Implications

Instagram's move is likely to set a precedent for other social media platforms. As concerns about online safety for young users continue to grow, other companies may feel pressure to implement similar measures [5].

Balancing Safety and User Experience