Intel Unveils New Xeon 6 CPUs with Advanced AI Performance Features for Nvidia's DGX B300 Systems

6 Sources

[1]

Intel Announces New Xeon 6 CPU Models With SST-TF & Priority Core Turbo "PCT"

"The introduction of PCT, paired with Intel SST-TF, marks a significant leap forward in AI system performance. PCT allows for dynamic prioritization of high-priority cores (HP cores), enabling them to run at higher turbo frequencies. In parallel, lower-priority cores (LP cores) operate at base frequency, ensuring optimal distribution of CPU resources. This capability is critical for AI workloads that demand sequential or serial processing, feeding GPUs faster and improving overall system efficiency."

[2]

Intel launches three new Xeon 6 P-Core CPUs, will debut in Nvidia DGX B300 AI systems

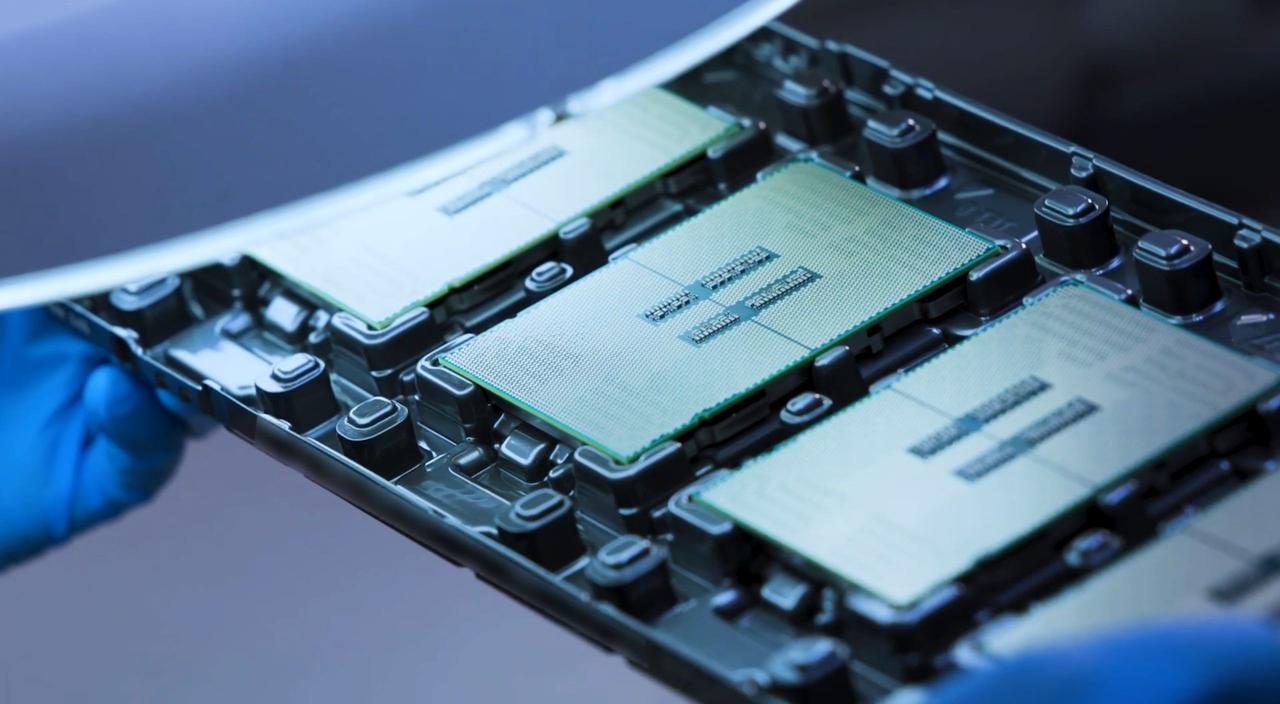

The new processors, replete with Intel's Performance-cores, also feature new Intel Priority Core Turbo (PCT) and Intel Speed Select Technology - Turbo Frequency, which the company claims delivers customizable CPU core frequencies to improve GPU performance for demanding AI workloads. All three are available now, and the Intel Xeon 6776P also comes integrated in the Nvidia DGX B300, the company's latest AI-accelerated systems. Intel says that the introduction of PCT and Intel SST-TF as a pairing marks "a significant leap forward in AI system performance." PCT should allow for the dynamic prioritization of high-priority cores, enabling higher turbo frequencies. Meanwhile, lower-priority cores operate at base frequency in parallel to optimize resource distribution. PCT can reportedly run up to eight, high-priority cores at elevated turbo frequencies, according to Intel. Intel's Xeon 6 CPUs include up to 128 P-cores per CPU and 20% more PCIe lanes than previous-generation Xeon processors, with up to 192 PCIe lanes per 2S server. Intel also claims Xeon 6 offers 30% faster memory speeds compared to the competition (specifically the latest AMD EPYC processors), thanks to Multiplexed Rank DIMMs (MRDIMMs) and Compute Express Link, and up to 2.3x higher memory bandwidth compared to the previous generation. Intel says its P-Core Xeon 6 processors have 2 DIMMs per channel (2DPC), and says the 2DPC configuration supports up to 8TB of system memory. It also says MRDIMMs boost bandwidth and performance, all while reducing latency. The new CPUs also feature up to 504 MB L3 cache for faster data retrieval. Intel Xeon 6 processors also feature Intel AMX, which can offload certain tasks to the CPU. Intel confirmed AMX now features support for FP16 precision arithmetic, which enables efficient data pre-processing and critical CPU tasks in AI workloads. Alongside its three new P-Core processors, Intel has also added a B-variant 6716P-B. 
The latter features just 40 cores and draws less power. Intel says its Xeon 6 processors with P-cores "provide the ideal combination of performance and energy efficiency" to handle the increasing demands of AI computing. This week, at Computex 2025, the company also unveiled its brand new $299 Arc Pro B50 with 16GB of memory, as well as 'Project Battlematrix' workstations with 24GB Arc Pro B60 GPUs.

[3]

Nvidia taps Intel's Xeon 6 CPUs for DGX B300 boxes

AI-optimized CPUs promise 4.6GHz clocks, at least for one in eight cores

Computex: When Nvidia first teased its Arm-based Grace CPU back in 2021, many saw it as a threat to Intel and AMD. Four years later, the Arm-based silicon is at the heart of the GPU giant's most powerful AI systems, but it has not yet replaced x86 entirely. At Computex on Thursday, Intel revealed three new Xeon 6 processors, including one that will serve as the host CPU in Nvidia's DGX B300 platform announced at GTC back in March. According to Intel, each B300 system will feature a pair of its 64-core Xeon 6776P processors, which will be responsible for feeding the platform's 16 Blackwell Ultra GPUs with numbers to crunch. (In case you missed it, Nvidia is counting GPU dies as individual accelerators now.) However, the chips announced Thursday aren't your typical Xeons. Unlike the rest of the Xeon 6 lineup, these ones were optimized specifically to babysit GPUs. While most people largely associate generative AI with power-hungry graphics cards, there is still plenty of work for the CPU to do. Things like key-value caches -- the model's short term memory -- often need to be shuttled from system memory to HBM as AI sessions are fired up and time out, while workloads, such as vector databases used in retrieval augmented generation (RAG) pipelines, are commonly run on CPU cores. The Xeon 6776P is one of three CPUs equipped with Intel's priority core turbo (PCT) and speed select technology turbo frequency (SST-TF) tech. The idea behind these technologies is that by limiting most of the cores to their base frequencies, the remaining cores can boost higher more consistently even when the chip is fully loaded up. Milan Mehta, a senior product planner for Intel's Xeon division, told El Reg that the tech will enable up to eight cores per socket to run at 4.6GHz -- 700MHz beyond the chip's max rated turbo -- while the remaining 48 cores are pinned at their base frequency of 2.3GHz.
If this sounds familiar, Intel has employed a similar strategy on its desktop chips going back to Alder Lake, which offloaded background tasks to the chips' dedicated efficiency cores, freeing up its performance cores for higher priority workloads. But because Intel's 6700P-series chips don't have e-cores, the functionality has to be achieved through clock pinning. "We found that having this mix of some cores high, some cores low, helps with driving data to the GPUs," Mehta said. "It's not going to make a 3x difference, but it's going to improve overall GPU utilization and overall AI inference and training performance." While the CPUs have been optimized for AI host duty this generation, the B300 itself follows a fairly standard DGX config. Each CPU is connected to four dual-GPU Blackwell Ultra SXM modules via an equal number of ConnectX-8 NICs in a sort of daisy chain arrangement. If you're curious, here's a full rundown of the new Xeons announced this week: Nvidia's decision to tap Intel for its next-gen DGX boxen isn't all that surprising. Chipzilla's fourth and fifth-gen Xeons were used in Nvidia's DGX H100, H200, and B200 platforms. The last time Nvidia tapped AMD for its DGX systems was in 2020, when the A100 made its debut. Nvidia isn't the only one with an affinity for Intel's CPUs, at least when it comes to AI. When AMD debuted its competitor to the H100 in late 2023, the House of Zen wasn't above strapping the chips to an Intel part if it meant beating Nvidia. Nvidia is also sticking with x86 for its newly launched RTX Pro Servers, but since this is more of a reference design than a first party DGX offering, it'll be up to Lenovo, HPE, Dell, and others to decide whose CPUs they'd like to pair with the system's eight RTX Pro 6000 Server GPUs. With the introduction of NVLink Fusion this week, Nvidia will soon extend support to even more CPU platforms, including upcoming Arm-based server chips from Qualcomm and Fujitsu.
As we discussed last week, the tech will enable third-party CPU vendors to use the GPU giant's speedy 1.8 TBps NVLink interconnect fabric to communicate with Nvidia graphics directly. In a more surprising move, Nvidia will also support tying third-party AI accelerators to its own Grace CPUs. Even as Nvidia opens the door to new CPU platforms, the chip biz continues to invest in its own, homegrown Arm-based silicon. At GTC in March, Nvidia offered the best look yet at its upcoming Vera CPU platform. Named after American astronomer Vera Rubin, the CPU is set to replace Grace next year. The chip will feature 88 "custom Arm cores" with simultaneous multithreading pushing thread count to 176 per socket along with Nvidia's latest 1.8 TBps NVLink-C2C interconnect. Despite the chip's higher core count, its 50W TDP suggests those cores may be stripped down to the bare minimum necessary to keep the GPUs humming along. While that might sound like a strange idea, many of these AI systems may as well be appliances that you interact with via an API. Vera is set to debut alongside Nvidia's 288 GB Rubin GPUs next year. ®

[4]

Intel Offers new Xeon 6 CPUs to Maximize GPU-Accelerated AI Performance

Intel today unveiled three new additions to its Intel Xeon 6 series of central processing units (CPUs), designed specifically to manage the most advanced graphics processing unit (GPU)-powered AI systems. These new processors with Performance-cores (P-cores) include Intel's innovative Priority Core Turbo (PCT) technology and Intel Speed Select Technology - Turbo Frequency (Intel SST-TF), delivering customizable CPU core frequencies to boost GPU performance across demanding AI workloads.

The new Xeon 6 processors are available today, with one of the three currently serving as the host CPU for the NVIDIA DGX B300, the company's latest generation of AI-accelerated systems. The NVIDIA DGX B300 integrates the Intel Xeon 6776P processor, which plays a vital role in managing, orchestrating and supporting the AI-accelerated system. With robust memory capacity and bandwidth, the Xeon 6776P supports the growing needs of AI models and datasets.

Maximizing AI Performance with Priority Core Turbo

The introduction of PCT, paired with Intel SST-TF, marks a significant leap forward in AI system performance. PCT allows for dynamic prioritization of high-priority cores, enabling them to run at higher turbo frequencies. In parallel, lower-priority cores operate at base frequency, ensuring optimal distribution of CPU resources. This capability is critical for AI workloads that demand sequential or serial processing, feeding GPUs faster and improving overall system efficiency.

Looking more broadly, Intel Xeon 6 processors with P-cores deliver industry-leading features for any AI system, including:

- High Core Counts and Exceptional Single-Threaded Performance: With up to 128 P-cores per CPU, these processors ensure balanced workload distribution for intensive AI tasks.
- 30% Faster Memory Speeds: When compared to the competition, Intel Xeon 6 offers superior memory performance at high-capacity configurations and supports leading-edge memory bandwidth with MRDIMMs and Compute Express Link.
- Enhanced I/O Performance: With up to 20% more PCIe lanes than previous Xeon processors, these CPUs enable faster data transfer for I/O-intensive workloads.
- Unmatched Reliability and Serviceability: These processors are built for maximum uptime with robust reliability, availability and serviceability features that minimize business disruptions.
- Intel Advanced Matrix Extensions: These CPUs support FP16 precision arithmetic, enabling efficient data preprocessing and critical CPU tasks in AI workloads.

As enterprises modernize their infrastructure to handle the increasing demands of AI, Intel Xeon 6 processors with P-cores provide the ideal combination of performance and energy efficiency. These processors support a wide range of data center and network applications, solidifying Intel's position as the leader in AI-optimized CPU solutions.

Source: Intel

[5]

Intel: New Xeon 6 CPU Boosts GPU Performance In Nvidia's DGX B300 System

The semiconductor giant says the new Xeon 6776P processor serving as the host CPU for Nvidia's Blackwell Ultra-based DGX B300 system features its new Priority Core Turbo technology as well as Intel Speed Select - Turbo Frequency to enable 'customizable CPU core frequencies' for the purpose of maximizing GPU performance.

Intel said one of its three newly revealed Xeon 6 processors designed to "boost GPU performance across demanding AI workloads" will serve as the host CPU for Nvidia's new Blackwell Ultra-based DGX B300 system. The Santa Clara, Calif.-based company announced the three new Xeon 6 processors with performance cores on Thursday, saying that they feature the company's new Priority Core Turbo technology as well as Intel Speed Select - Turbo Frequency to enable "customizable CPU core frequencies" for the purpose of maximizing GPU performance. Intel made the announcement as CEO Lip-Bu Tan tries to chart a new path forward for the company's AI strategy, which includes the Gaudi 3 accelerator chips that didn't meet its modest sales goal for the product last year. While Intel continues to push Gaudi 3 as a more affordable alternative to Nvidia's GPUs and develops a future rack-scale platform based on its next-generation Jaguar Shores chips, the company has also made it a priority to position Xeon as the best host CPU for AI servers. "These new Xeon SKUs demonstrate the unmatched performance of Intel Xeon 6, making it the ideal CPU for next-gen GPU-accelerated AI systems," said Karin Eibschitz Segal, interim head of Intel's Data Center Group, in a statement. "We're thrilled to deepen our collaboration with Nvidia to deliver one of the industry's highest-performing AI systems, helping accelerate AI adoption across industries," she added.
Intel said the new Xeon 6776P processor will serve as the host CPU for Nvidia's DGX B300 system, with each node consisting of two Xeon chips and eight Blackwell Ultra GPUs. "With robust memory capacity and bandwidth, the Xeon 6776P supports the growing needs of AI models and datasets," the company said. The new Priority Core Turbo feature in these new Xeon processors "allows for dynamic prioritization of high-priority cores, enabling them to run at higher turbo frequencies," according to Intel. "In parallel, lower-priority cores operate at base frequency, ensuring optimal distribution of CPU resources," the company added. "This capability is critical for AI workloads that demand sequential or serial processing, feeding GPUs faster and improving overall system efficiency," Intel said. Revealed at Nvidia's GTC 2025 event in March, the DGX B300 is the x86-based complement to the AI infrastructure giant's flagship GB300 NVL72 platform, which includes its Arm-based Grace CPUs and Blackwell Ultra GPUs to provide optimal AI performance. With a single DGX B300 capable of 72 petaflops of 8-bit floating-point (FP8) and 144 petaflops of 4-bit floating-point performance, the system serves as the building block for the B300-based DGX SuperPod cluster. This SuperPod provides 11 times faster inference on large language models, seven times more compute and four times larger memory compared to a Hopper-based DGX cluster, according to Nvidia.
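As a rough back-of-the-envelope check on the system-level figures quoted above, the sketch below divides the stated FP8 and FP4 throughput across the eight GPUs in a node. The `SystemThroughput` class and its field names are illustrative only, and the even split across GPUs is an assumption rather than something Nvidia or Intel states here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemThroughput:
    """Quoted peak throughput for one node (illustrative helper, not a vendor API)."""
    gpus: int
    fp8_petaflops: float
    fp4_petaflops: float

    def per_gpu(self) -> tuple[float, float]:
        # Assumption: throughput is split evenly across the GPUs in the node.
        return (self.fp8_petaflops / self.gpus, self.fp4_petaflops / self.gpus)

    def fp4_to_fp8_ratio(self) -> float:
        # How much the quoted figure grows when precision is halved.
        return self.fp4_petaflops / self.fp8_petaflops


# Figures as quoted in the article: eight GPUs, 72 PF FP8, 144 PF FP4.
DGX_B300 = SystemThroughput(gpus=8, fp8_petaflops=72.0, fp4_petaflops=144.0)

if __name__ == "__main__":
    fp8, fp4 = DGX_B300.per_gpu()
    print(f"per GPU: {fp8} PF FP8, {fp4} PF FP4")
    print(f"FP4/FP8 ratio: {DGX_B300.fp4_to_fp8_ratio()}")
```

Under that even-split assumption, the quoted totals work out to 9 petaflops of FP8 and 18 petaflops of FP4 per GPU, with FP4 at exactly twice the FP8 figure.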

[6]

Intel's Newly Launched Xeon 6 P-Core CPUs Bring Game Changer Priority Core Turbo And SST-TF For GPU-Fed AI Systems

Intel's new Xeon 6 processors have been launched for the NVIDIA DGX B300 platform, enhancing AI performance by bringing innovative features. CPU-side bottlenecks, which some GPU-powered systems have to face, will no longer throttle performance with Intel's newly launched Xeon 6 Performance Core processors. Intel has today lifted the embargo on three of its latest Xeon 6 P-core processors, including the Intel Xeon 6776P, a 64-core/128-thread chip that can easily take care of the demands of high-performance GPUs. NVIDIA's Blackwell-based DGX B300 system is equipped with the Xeon 6776P, and hence, its GPUs will now have access to a powerful CPU that will result in a noticeable performance boost, directly helping users with AI workloads. "These new Xeon SKUs demonstrate the unmatched performance of Intel Xeon 6, making it the ideal CPU for next-gen GPU-accelerated AI systems. We're thrilled to deepen our collaboration with NVIDIA to deliver one of the industry's highest-performing AI systems, helping accelerate AI adoption across industries." - Karin Eibschitz Segal, Corporate VP and Interim GM, DCG, Intel. With the launch of these Xeon 6 processors, Intel introduced two features, which it calls "PCT" and "SST-TF", or Priority Core Turbo and Speed Select Technology - Turbo Frequency, respectively. PCT increases the frequency of selected high-priority cores on the CPU during critical workloads, ensuring the GPU gets enough instructions for quicker processing. SST-TF is a feature that tunes the core frequencies according to the loads, ensuring better performance and efficiency. Intel claims that the Xeon 6 offers 30% faster memory speeds compared to the competition. If you are familiar with AI workloads, then you might be aware of how crucial high-speed, high-capacity memory is for faster execution. With support for MRDIMMs and CXL, AI systems based on the Intel Xeon 6 processors can access high-bandwidth memory to speed up workloads.
The Xeon 6 also offers 20% more PCIe lanes than its predecessors, which opens up more paths for faster, higher-volume data transfer to and from the GPU, ensuring users achieve faster I/O performance. With improvements in multiple areas, the Xeon 6 processors look like a powerful solution for enterprises. Through features such as PCT and SST-TF, the Xeon 6 is intended to speed up training and inference of large AI models, helping businesses to be more efficient than before.

Intel introduces three new Xeon 6 processors with Priority Core Turbo and Speed Select Technology, optimized for AI workloads and featured in Nvidia's latest DGX B300 AI systems.

Intel's New Xeon 6 CPUs: Powering Next-Gen AI Systems

Intel has unveiled three new Xeon 6 processors, specifically designed to manage advanced GPU-powered AI systems. These new CPUs, featuring Performance-cores (P-cores), incorporate innovative technologies aimed at boosting AI workload performance [1][2].

Source: Wccftech

Key Features and Technologies

The standout features of these new processors include:

- Priority Core Turbo (PCT): This technology allows for dynamic prioritization of high-priority cores, enabling them to run at higher turbo frequencies. PCT can run up to eight high-priority cores at elevated turbo frequencies [2].
- Intel Speed Select Technology - Turbo Frequency (Intel SST-TF): This feature works in tandem with PCT to deliver customizable CPU core frequencies, optimizing GPU performance for demanding AI workloads [1].
- High Core Count: The new Xeon 6 CPUs offer up to 128 P-cores per CPU, ensuring balanced workload distribution for intensive AI tasks [4].
- Enhanced Memory and I/O Performance: These processors provide 30% faster memory speeds compared to competitors, support up to 8TB of system memory, and offer up to 20% more PCIe lanes than previous-generation Xeon processors [2][4].
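To illustrate how software might take advantage of a small set of high-priority cores like those described in the list above, here is a minimal Python sketch that pins the current process to a preferred set of logical CPUs on Linux. The CPU IDs in `ASSUMED_HP_CPUS` are purely hypothetical: which logical CPUs actually receive the higher turbo frequency depends on platform configuration, which the sources here do not describe. The helper names are likewise illustrative; only `os.sched_getaffinity` and `os.sched_setaffinity` are real standard-library calls, and they are available on Linux only.

```python
import os
from typing import Iterable, Optional, Set

# Hypothetical: logical CPU IDs assumed to be configured as high-priority cores.
ASSUMED_HP_CPUS = range(8)


def pick_priority_cpus(
    available: Iterable[int], preferred: Iterable[int], limit: int = 8
) -> Set[int]:
    """Choose up to `limit` preferred CPUs that are actually available.

    Falls back to every available CPU if none of the preferred ones can be used.
    """
    available_set = set(available)
    chosen = sorted(available_set & set(preferred))[:limit]
    return set(chosen) if chosen else available_set


def pin_to_priority_cpus(
    preferred: Iterable[int] = ASSUMED_HP_CPUS,
) -> Optional[Set[int]]:
    """Pin the current process to the preferred CPUs; return None if unsupported."""
    if not hasattr(os, "sched_setaffinity"):
        return None  # Not Linux: the affinity calls are unavailable.
    target = pick_priority_cpus(os.sched_getaffinity(0), preferred)
    os.sched_setaffinity(0, target)
    return target


if __name__ == "__main__":
    print("pinned to:", pin_to_priority_cpus())
```

A data-loading or request-dispatch worker could call `pin_to_priority_cpus()` at startup so that its serial work lands on the faster cores, while bulk background tasks stay on the rest. Whether that helps in practice would need to be measured on the target system.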

Xeon 6776P: Powering Nvidia's DGX B300

One of the three new processors, the Intel Xeon 6776P, has been selected to serve as the host CPU for Nvidia's DGX B300, the company's latest generation of AI-accelerated systems [1][3]. Each DGX B300 node will feature two Xeon 6776P processors paired with eight Blackwell Ultra GPUs [5].

The Xeon 6776P boasts 64 cores and can achieve clock speeds up to 4.6GHz on up to eight cores per socket, while the remaining 48 cores operate at a base frequency of 2.3GHz [3]. This configuration is designed to optimize data flow to the GPUs, improving overall AI inference and training performance [3].

Impact on AI Workloads

The introduction of PCT and SST-TF marks a significant advancement in AI system performance. These technologies are particularly crucial for AI workloads that require sequential or serial processing, as they enable faster data feeding to GPUs and improve overall system efficiency [1][4].

Intel's Advanced Matrix Extensions (AMX) in these CPUs now support FP16 precision arithmetic, facilitating efficient data preprocessing and critical CPU tasks in AI workloads [2][4].
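To put the PCT clock figures in perspective, the short sketch below works out the clock ratios implied by the numbers reported by The Register [3]: a 4.6GHz priority-core clock, described as 700MHz above the chip's maximum rated turbo, and a 2.3GHz base clock. Treating a serial task's runtime as inversely proportional to clock speed is a simplifying assumption, so these ratios are best read as upper bounds on any per-thread gain, not measured results. The function and constant names are illustrative.

```python
# Figures as reported in the article, in MHz to keep the arithmetic exact.
PCT_BOOST_MHZ = 4600          # priority-core clock under PCT
UPLIFT_OVER_TURBO_MHZ = 700   # reported margin above the max rated turbo
BASE_MHZ = 2300               # clock of the lower-priority cores


def rated_turbo_mhz(boost_mhz: int, uplift_mhz: int) -> int:
    """Max rated turbo implied by the boost clock and the reported uplift."""
    return boost_mhz - uplift_mhz


def clock_ratio(fast_mhz: int, slow_mhz: int) -> float:
    """Ratio of two clocks; an idealised bound on serial speedup (assumption)."""
    if fast_mhz <= 0 or slow_mhz <= 0:
        raise ValueError("clock frequencies must be positive")
    return fast_mhz / slow_mhz


if __name__ == "__main__":
    turbo = rated_turbo_mhz(PCT_BOOST_MHZ, UPLIFT_OVER_TURBO_MHZ)
    print(f"implied max rated turbo: {turbo} MHz")
    print(f"PCT vs rated turbo: {clock_ratio(PCT_BOOST_MHZ, turbo):.3f}x")
    print(f"PCT vs base clock:  {clock_ratio(PCT_BOOST_MHZ, BASE_MHZ):.3f}x")
```

On these numbers the implied rated turbo is 3.9GHz, so a priority core runs roughly 18% faster than the rated turbo and twice as fast as a core held at base frequency. That is consistent with Intel's own framing in [3] that the benefit is an improvement in GPU utilization, not a multiple-fold speedup.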

Industry Implications

This development showcases a deepening collaboration between Intel and Nvidia in the AI hardware space. Despite Nvidia's development of its own Arm-based Grace CPUs, the company continues to rely on Intel's x86 architecture for certain high-performance AI systems [3][5].

The new Xeon 6 processors are positioned to support a wide range of data center and network applications, reinforcing Intel's role in providing AI-optimized CPU solutions [4]. As enterprises modernize their infrastructure to handle increasing AI demands, these processors aim to provide an ideal combination of performance and energy efficiency [4].

Conclusion

Intel's new Xeon 6 processors represent a significant step forward in CPU technology for AI applications. By optimizing performance for GPU-accelerated systems and offering features tailored to AI workloads, Intel is strengthening its position in the rapidly evolving AI hardware market.

Source: The Register

Summarized by Navi