Jensen Huang says AI doomer narrative is hurting society and damaging the industry

12 Sources


[1]

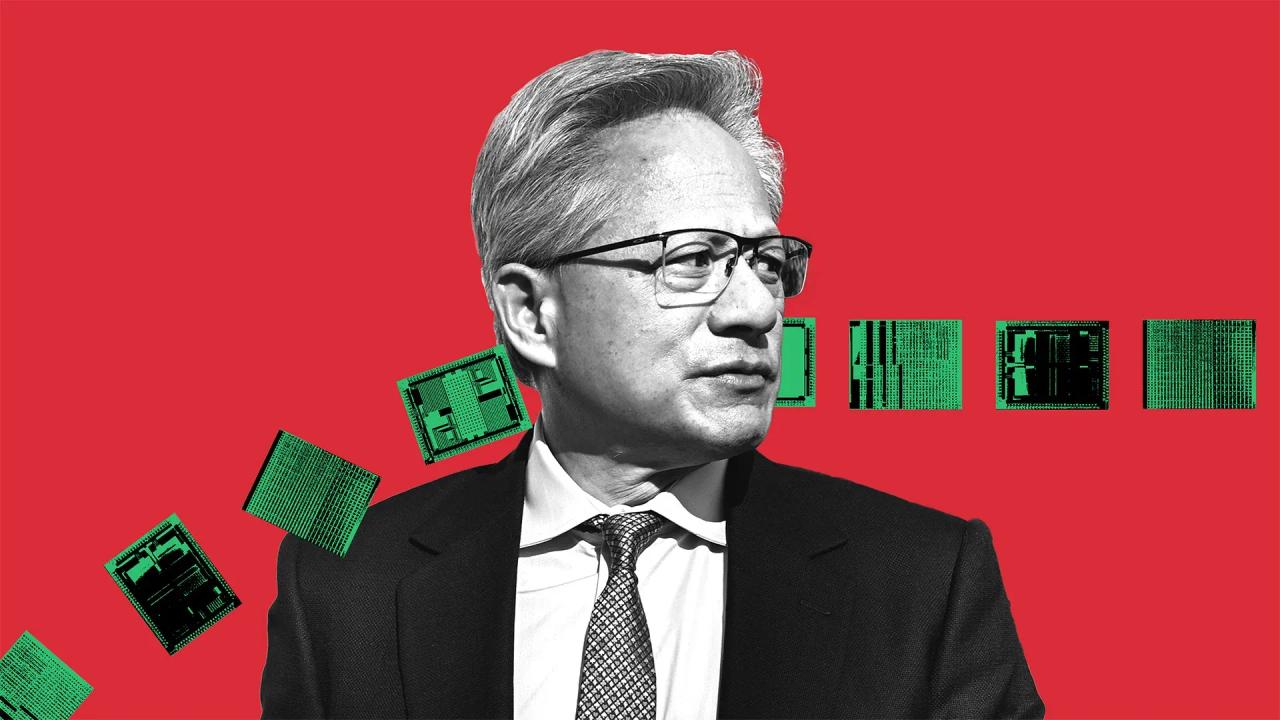

Jensen Huang claims that 'god AI' is a myth -- Nvidia chief says 'doomer narrative' is 'extremely hurtful'

Nvidia CEO Jensen Huang went on the No Priors podcast to discuss current advances in AI technology and to refute claims about AI that he believes are wrong. The multi-billionaire made his feelings about the current climate around AI very clear, saying that doom-and-gloom influencers have negatively impacted the AI industry. He also claims that we are far away from "god AI" becoming a reality.

A third of the way into the podcast, Jensen said that someday we might have god AI, but clarified that "someday" will likely be on a biblical or galactic scale. Huang said, "I don't see any researchers having any reasonable ability to create god AI. The ability [for AI] to understand human language, genome language, and molecular language and protein language and amino-acid language and physics language all supremely well. That god AI just doesn't exist." Huang further clarified that he does not believe god AI is coming "next week." In the meantime, before this prophetic god AI shows up, he believes AI should be used to advance humanity as much as possible. He also does not want a god-level AI to exist: "I think that the idea of a monolithic, gigantic company/country/nation-state is just... super unhelpful, it's too extreme. If you want to take it to that level, we should just stop everything..."

Moving on, Jensen fired shots at influencers who paint AI in a negative light: "...extremely hurtful frankly, and I think we've done a lot of damage lately with very well respected people who have painted a doomer narrative, end of the world narrative, science fiction narrative. And I appreciate that most of us grew up enjoying science fiction, but it's not helpful. It's not helpful to people, it's not helpful to the industry, it's not helpful to society, it's not helpful to the governments." Huang wants AI to succeed as a tool that people can use to make their work more efficient.
One use case that Huang addressed last week is the ongoing labor shortage, noting that robots can act as "AI immigrants". However, the real-world effectiveness of AI paints a different picture. Stanford University reported last year that job listings dropped 13% in three years due to AI. Furthermore, Fortune reported that 95% of AI implementations have no impact on P&L. But that is not stopping the tech industry from increasing AI capacity worldwide. Meta just announced a 6-gigawatt-capable nuclear power plant aimed at powering AI datacenters, following in the footsteps of The Stargate Project from OpenAI.

[2]

Jensen Huang says relentless negativity around AI is hurting society and has "done a lot of damage"

A hot potato: Ever since ChatGPT was released to the masses, there's been a lot of negativity around generative AI, from the number of jobs it's set to make obsolete to more extreme concerns like an apocalyptic event. Nvidia's Jensen Huang isn't happy about it, claiming that this "doomer narrative" is "not helpful to society." It's hardly a surprising view from the CEO of the company whose hardware powers the AI industry.

Huang made his feelings about AI skeptics, haters, and doom-mongers clear on a recent episode of the No Priors podcast. The boss of the world's most valuable company said "the battle of the narratives" between those who think AI will benefit society and those who believe it will degrade or even destroy it was one of his biggest takeaways from 2025.

Huang did admit that "it's too simplistic" to dismiss either of these views entirely, but he believes some naysayers' views are having a detrimental effect. "I think we've done a lot of damage with very well-respected people who have painted a doomer narrative, end of the world narrative, science fiction narrative," Huang said. "And I appreciate that many of us grew up and enjoyed science fiction, but it's not helpful. It's not helpful to people. It's not helpful to the industry. It's not helpful to society. It's not helpful to the governments."

Huang never named names, but he has spoken out against those who've warned about AI's consequences in the past. Last June, soon after Anthropic leader Dario Amodei said that AI could wipe out about half of all entry-level white-collar jobs in the next five years, leading to unemployment spikes of up to 20%, Huang said he "pretty much disagree[d] with almost everything" his fellow CEO said. Huang appeared to reference Amodei again during the No Priors podcast. He said no company should ask governments for more AI regulation.

"Their intentions are clearly deeply conflicted, and their intentions are clearly not completely in the best interest of society," he said. "I mean, they're obviously CEOs, they're obviously companies, and obviously they're advocating for themselves."

In May 2025, Huang and Amodei clashed over the AI Diffusion Rules that restrict the export of advanced AI technologies to countries such as China. Anthropic has argued for tighter controls and enforcement, and highlighted some of the unusual cases of people smuggling chips into the Asian nation. Nvidia hit back, stating its chips have never been smuggled into China via the likes of fake pregnant bellies or alongside live lobsters, despite Chinese customs documenting these cases.

Huang also claimed that the amount of negativity surrounding AI could make some skeptics' worst fears a reality. "When 90% of the messaging is all around the end of the world and the pessimism, and I think we're scaring people from making the investments in AI that makes it safer, more functional, more productive, and more useful to society," he said.

This isn't the first case of a rich executive whose company is hugely invested in the AI industry complaining that people don't love the tech as much as they should. Microsoft's Satya Nadella recently complained that the conversation around AI needs to move beyond "slop." There was also Mustafa Suleyman, CEO of Microsoft's AI group, who called public criticism of AI "mind-blowing" in November.

As a reminder, it's now estimated that more than 20% of YouTube's feed can be defined as slop, and the number of people who lose their jobs due to AI or related technologies keeps growing. It's unlikely that the negativity is going to go away just because it hurts a few executives' feelings.

[3]

Jensen Huang Is Begging You to Stop Being So Negative About AI

Nvidia CEO Jensen Huang, who has seen his net worth skyrocket by nearly $100 billion since the AI boom started a couple of years ago, would really appreciate it if you would stop talking about the potential harms of the technology that's supercharged his fortune. It's really harshing his vibe. In an appearance on the No Priors podcast hosted by Elad Gil and Sarah Guo, Huang took aim at people who have suggested AI may have some significant, detrimental impact, from job displacement to expanding the surveillance state. "[It's] extremely hurtful, frankly, and I think we've done a lot of damage with very well-respected people who have painted a doomer narrative," he said. According to Huang, considering the potential existential risks of unleashing AI on society may do more harm than good. "It's not helpful. It's not helpful to people. It's not helpful to the industry. It's not helpful to society. It's not helpful to the governments," he said. He particularly took issue with other people in the industry going to the government and asking for regulation and mandatory safeguards. "You have to ask yourself, you know, what is the purpose of that narrative and what are their intentions," he asked rhetorically. "Why are they talking to governments about these things to create regulations to suffocate startups?" Huang isn't totally off-base about some of what he's suggesting. Regulatory capture is a real risk, especially as multi-billion-dollar companies look to lock in their lead by using their absurd wealth to sway politicians and cement favorable policy. And there's no doubt that AI players have been getting into the lobbying business. According to the Wall Street Journal, Silicon Valley firms have already poured more than $100 million into new Super PACs to push pro-AI messaging in the lead-up to midterm elections in 2026. 
There is also zero doubt that industry players use societal-scale risks as a marketing tactic: it makes their product seem full of endless potential, and it suggests they need to maintain control of it to keep everyone safe rather than letting this powerful tool fall into the wrong hands or be controlled by some government regulator. But just being optimistic doesn't mitigate some of the very real risks that AI presents. "When 90% of the messaging is all around the end of the world and the pessimism, and I think we're scaring people from making the investments in AI that makes it safer, more functional, more productive, and more useful to society," Huang said, without explaining how pouring more money into AI infrastructure makes us safer, other than to suggest more is better. Huang doesn't have a solution for the very real risk of job displacement, not necessarily because AI is so powerful that it's replacing human labor, but because companies are so eager to chase the next big thing that they're pulling the ladder up on would-be entry-level employees, even though early AI investments have been more of a money suck than a profit generator. Nor does he have a solution for misinformation, abuse, or the ongoing mental health crisis being exacerbated by AI. We are all simply beta testers on the path to answers. The only apparent solution is to speed up investment and development with the belief that, at the other end, there will be a superintelligence that solves all those problems. If the doomers have a hidden agenda of control, it's hard to look at Huang's position and not see an ulterior motive, too: padding his bottom line.

[4]

Nvidia's Jensen Huang slams doomsday AI hype

AI and robots could address labor shortages as digital workforce supplements

Nvidia CEO Jensen Huang has addressed the ongoing debate around advanced artificial intelligence, arguing the idea of a so-called "god AI" is not remotely feasible today. Huang explained that no researcher currently has any reasonable ability to develop AI that fully understands human language, molecular structures, protein sequences, or physics at a comprehensive level. Huang emphasized that such a system sits far beyond current technology and will not appear in the near future, dismissing extreme claims of imminent AI omnipotence.

Huang criticized influencers and commentators who promote a "doomer" narrative, saying that exaggerated fears have been "extremely hurtful" to the industry and society at large. "I don't see any researchers having any reasonable ability to create god AI... That god AI just doesn't exist," Huang said. He argued that framing AI as an existential threat or likening it to science fiction scenarios does not help governments, workers, or technology companies address realistic challenges. Instead, he suggested that the focus should remain on practical applications where AI can improve human productivity rather than spreading fear about a hypothetical, god-level intelligence.

The Nvidia head highlighted several real-world ways AI can be applied responsibly. For example, he described how AI and robots could help address labor shortages, functioning like "AI immigrants" that support industries struggling to find workers. Huang also framed AI as a complement to productivity tools, offering capabilities that enhance human output and efficiency rather than replacing it entirely. Despite these potential benefits, reports from Stanford University and Fortune indicate that AI has had a limited impact on job listings over the past few years. Many AI tools have produced little measurable effect on business operations or financial performance.
Huang made it clear that he does not want a god-level AI to exist, calling the concept of a single monolithic system controlled by a company, nation, or other entity "super unhelpful." He stressed that the tech industry should focus on incremental improvements that benefit humans today. Even as companies like Meta expand AI infrastructure globally, building massive data centers powered by nuclear energy, Huang insisted that responsible development and realistic expectations matter more than hype. Via Tom's Hardware

[5]

Nvidia CEO Says Everyone Should Stop Being So Negative About AI

Commander of multi-trillion dollar computing hardware empire Jensen Huang thinks everyone should stop being so negative about AI and all the ways it could potentially upend civilization, because it's a "doomer narrative" that's "not helpful to society," TechSpot reports.

The Nvidia chief made these remarks during a recent episode of the No Priors podcast, in which he more or less dismissed dire predictions for AI's future as nothing but science fiction. "I think we've done a lot of damage with very well-respected people who have painted a doomer narrative, end of the world narrative, science fiction narrative," Huang said. "And I appreciate that many of us grew up and enjoyed science fiction, but it's not helpful. It's not helpful to people. It's not helpful to the industry. It's not helpful to society. It's not helpful to the governments."

"Doomer messages causes policy, and that policy may affect the industry in some way," he added.

Scrambling his take somewhat, Huang then caveated that he doesn't totally dismiss everything that AI critics have to say. "It's too simplistic to say that everything that the doomers are saying are irrelevant," Huang said. "That's not true. A lot of very sensible things are being said," he added, neglecting to provide any examples.

Nvidia's chips are essential for training AI models, and the rabid demand for them has catapulted the chipmaker to a nearly $5 trillion valuation. To keep the gravy train going, Huang has sometimes been even more of an AI booster than the actual AI companies themselves. When Anthropic CEO Dario Amodei warned that AI could erase half of entry-level white collar jobs in the next five years, Huang countered that Amodei was just fearmongering to make it seem like Anthropic was the only company responsible enough to build AI. And to be fair to Huang, it's a good point, with a lot of apocalyptic-sounding warnings of AI risks tending to distract from its more mundane issues.
Nonetheless, Huang has made plenty more outlandish AI claims himself, such as reportedly telling his employees that they're "insane" if they don't use AI to do everything, never mind his out-of-touch proclamation that AI won't take your job, but will instead make you work even harder.

[6]

Nvidia CEO Jensen Huang critiques 'well respected people who have painted a doomer narrative' around AI right after saying 'I guess someday we will have God AI'

But he does admit "A lot of very sensible things are being said" by doomers.

You know Nvidia, the gaming hardware company that managed to hit a $5 trillion valuation last year. The AI boom has led to ever-increasing investment into the company, and so it's really in Nvidia's interest for the world to be all in on the potential benefits of AI.

Huang reflects on the AI naysayers in a recent interview with No Priors (via Business Insider). The Nvidia leader and No Priors hosts Elad Gil and Sarah Guo venture down a lot of paths during the nearly 80-minute conversation, including what happens to jobs in an AI future, and when we might see a 'god AI'. One key point is the various narratives around the ethics of the machine learning tech.

Huang argues the world of the future is one where AI leads to more jobs: "People say, 'gosh, all of these robots that we're talking about. It's going to take away jobs'. As we know very clearly, we don't have enough factory workers. Our economy is actually limited by the number of factory workers we have." Huang therefore argues that robotics will lead to a huge repair industry, and that will naturally lead to more jobs.

Huang elaborates that, in the new age of AI, tasks and jobs are different things. He clarifies this point with a few different analogies throughout the chat, explaining that a waiter's job isn't just to collect orders but to give customers "a great experience". He says the goal of Nvidia software engineers is to solve problems, "and we have so many undiscovered problems". Huang adds that nothing would give him more joy than if none of his software engineers were coding, explaining: "If Nvidia was more productive, it doesn't result in layoffs. It results in us doing more things."

However, it's not just job concerns that Huang gestures at here. He says, "There are... many people in the government who obviously aren't as familiar with, as comfortable with the technology, and when PhDs of this and CEOs of that goes to governments and explain and describe these end-of-the-world scenarios and extremely, extremely dystopian future, you have to ask yourself, 'What is the purpose of that narrative and what are their intentions and what do they hope? Why are they talking to governments about these things to create regulations to suffocate startups?'"

Elad Gil asks if Huang believes the point of this is regulatory capture (an attempt to stop startups from competing effectively through regulation), but Huang says he doesn't know. Huang instead admits "a lot of very sensible things are being said" by doomers (i.e., those who are pessimistic about the future of AI), but he also clarifies that "When 90% of the messaging is all around the end of the world and the doom and the pessimism and you know, I think we're scaring people from making investments in AI that make it safer, more functional, more productive, and more useful to society." Huang says, "I think we've done a lot of damage with very well respected people who have painted a doomer narrative. End of the world narrative. Science fiction narrative."

As with much of the AI industry, there's a bit of a 'better to ask forgiveness than permission' narrative here, from the likes of Meta and OpenAI scraping as much material as possible, to entirely ignoring copyright, to claims that AI will solve the global warming problems that are partially caused by AI's explosive growth. There's also a rapid build-out of infrastructure and trillions of dollars invested in the technology that's been setting off some folks' 'tech bubble' spidey sense for some time.

This isn't helped by the volume of buzzwords bandied around the tech, either. Mere minutes before talking about naysayers, Huang says, "I guess someday we will have God AI. That someday is probably on biblical scales, you know, I think galactic scales." In a basic sense, he's describing one monolithic model that does everything. But even Huang has his doubts: "I don't think any company practically believes they're anywhere near God AI, and nor do I see any researchers having any reasonable ability to create God AI. The ability to understand human language and genome language and molecular language and protein language and amino acid language, and physics language all supremely well. That God AI just doesn't exist."

Well, if God AI is coming, I don't think I'll be a believer.

[7]

Jensen Huang rejects 'god AI' fears, criticizes AI doomer narratives

Nvidia CEO Jensen Huang used a recent appearance on the No Priors podcast to push back against two themes he says are distorting how the public discusses artificial intelligence: the idea that "god AI" is close at hand, and the growing influence of doomsday-style messaging around the technology. Huang's argument is not that AI is trivial, but that the debate has drifted toward extreme conclusions that do not reflect the current technical reality, and that those extremes are shaping policy and perception in unproductive ways.

Huang described "god AI" as something far beyond today's generative models. In his framing, it would be an AI that can deeply understand and operate across multiple complex domains at once, including human language as well as scientific and biological systems such as genomics, molecular and protein interactions, amino-acid behavior, and physics. He stated that he does not see researchers having a realistic ability to create that kind of system anytime soon, and he emphasized that it is not something arriving in the near-term. The point he's making is straightforward: an all-encompassing AI with unified mastery across these fields is not an imminent engineering milestone.

He also criticized what he called the doomer narrative surrounding AI, arguing that well-known voices have leaned too heavily on end-of-the-world and science-fiction framing. According to Huang, this approach is not helpful to people, the industry, governments, or society, and it damages the quality of the discussion by pushing it toward fear-driven conclusions.

Huang further rejected the idea that the conversation should revolve around a monolithic scenario where a single company or nation-state ends up with a dominant "god-level" AI. In his view, framing the debate around that extreme is counterproductive and can lead to calls to halt progress rather than govern real deployments.

Huang's preferred framing is to treat AI as applied technology aimed at improving productivity.
He has pointed to labor constraints as a practical motivation for automation, including robotics, describing robots as a way to supplement workforces where hiring supply does not meet demand. However, the wider market picture remains mixed. Some research suggests parts of the job market, particularly at entry-level, are being affected as automation expands. At the same time, enterprise AI rollouts often struggle to translate experimentation into measurable financial outcomes, particularly when integration requires process change, training, and organizational redesign rather than simple tool deployment. Despite the uneven results, the AI capacity race continues. Large technology firms are still increasing data center footprint and securing long-duration power, including nuclear-related agreements and multi-gigawatt planning, reflecting expectations that AI training and inference demand will keep rising. That ongoing infrastructure build signals that the industry is treating AI compute as a strategic resource, even as debate continues about the pace of capability advances and the near-term economic impact of deployments.

[8]

NVIDIA's Jensen Huang wants the AI doom and gloom to stop as it's 'extremely hurtful'

TL;DR: NVIDIA CEO Jensen Huang addressed AI doomsday fears, emphasizing that negative narratives harm societal acceptance and innovation. He highlighted concerns over calls for strict AI regulations potentially stifling startups and urged a balanced view on AI's impact, focusing on its role in solving labor shortages and advancing technology responsibly.

Before NVIDIA CEO Jensen Huang unveiled the Vera Rubin AI computing platform at CES 2026, he sat down for an interview in which he discussed the doom-speak surrounding AI and its potential impact on humanity.

In an interview with No Priors, Huang discussed many topics stemming from AI, such as the biggest surprises of 2025, how AI will influence jobs, solving labor shortages with robotics, and the AI "doomer" narrative, along with regulation.

Since ChatGPT's explosion in popularity and the billions of dollars that have been thrown into the development of new and more sophisticated AI models, some researchers and industry experts have warned about the potential impact on humanity when all-encompassing AI models emerge. Some experts have warned that AI has the potential to destroy people's lives, while others have raised privacy concerns about an increasingly encroaching surveillance state. But, according to Huang, these concerns aren't needed, and have actually done irreversible harm to society's acceptance of AI.

"[It's] extremely hurtful, frankly, and I think we've done a lot of damage with very well-respected people who have painted a doomer narrative," said Huang. "It's not helpful. It's not helpful to people. It's not helpful to the industry. It's not helpful to society. It's not helpful to the governments," added Huang.

The NVIDIA CEO went on to highlight a particular concern: people in the technology industry are going to the government to request regulation of AI, as well as mandatory safeguards for its development.
"You have to ask yourself, you know, what is the purpose of that narrative and what are their intentions. Why are they talking to governments about these things to create regulations to suffocate startups?"

[9]

Nvidia CEO Jensen Huang slams "doomsday" AI narratives

Nvidia CEO Jensen Huang has sharply criticized the prevalence of "doomsday" warnings regarding artificial intelligence, arguing that such pessimism discourages necessary investment in AI safety and harms public discourse. Speaking on the "No Priors" podcast, Huang observed that 2025 was defined by a "battle of narratives" where negative science-fiction scenarios dominated, which he described as unhelpful to the industry, governments, and society at large. Huang also warned against "regulatory capture," suggesting that tech CEOs who advocate for heavy government oversight are likely acting out of self-interest rather than the public good. Although he did not name specific individuals, his comments appear to target figures like OpenAI's Sam Altman and Elon Musk, who have previously called for strict AI regulation. Huang has also publicly disagreed with Anthropic CEO Dario Amodei regarding predictions that AI will eliminate significant numbers of white-collar jobs. According to Huang, the overwhelming focus on "end of the world" scenarios is counterproductive because it scares people away from investing in the very technologies that would make AI systems safer and more useful. His comments align with similar sentiments from Microsoft CEO Satya Nadella, who recently called for a new equilibrium in how humans interact with cognitive tools. A spokesperson for Nvidia declined to provide further comment on Huang's statements.

[10]

Jensen Huang Has Had it With Your AI Slander

In a recent No Priors podcast episode, hosted by Elad Gil and Sarah Guo, Huang argued that ruminating on potential risks of AI will inevitably elicit more harm than good. "It's not helpful. It's not helpful to people. It's not helpful to the industry. It's not helpful to society. It's not helpful to the governments," he said. What Huang did not explain, however, is how increased investment alone would make AI meaningfully safer or more socially beneficial. Nor did he offer concrete answers to some of the most pressing concerns critics raise, including job displacement, the expansion of the surveillance state, the spread of misinformation, or AI's growing links to mental health harms.

[11]

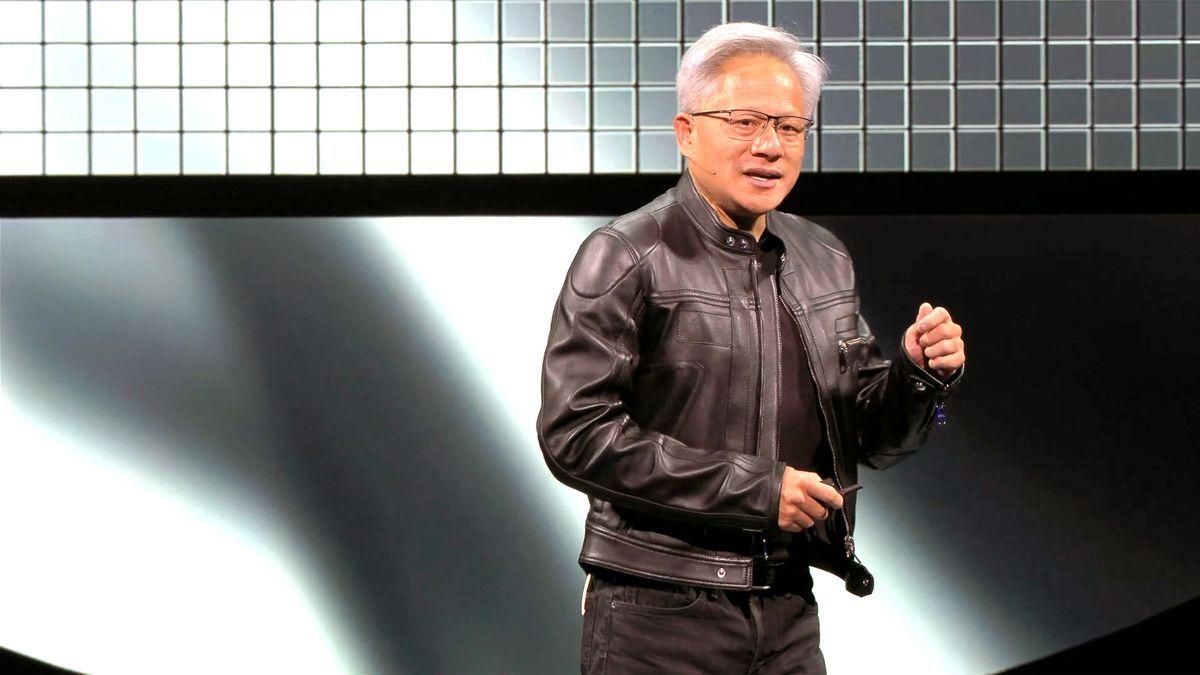

Nvidia CEO Jensen Huang pushes back on AI doomsday talk: Says, 'we grew up enjoying science fiction, but it's not helpful'

Nvidia CEO Jensen Huang has criticised "doomer" narratives that portray artificial intelligence as an apocalyptic threat, arguing such fear distorts public understanding and policy. Speaking on the No Priors podcast, he warned that science-fiction framing can lead to restrictive regulation and may serve rival corporate interests. Huang urged focusing instead on real engineering challenges, system reliability and practical safety, as AI steadily becomes more predictable, monitored and widely deployed.

By reframing artificial intelligence as a tool still struggling to perfect basic reliability rather than a force poised to end humanity, Nvidia chief executive Jensen Huang has reopened the debate on how the world should talk about its fastest-growing technology. In a recent appearance on the No Priors podcast, later reported by TechSpot, Huang criticised what he called an exaggerated culture of fear around AI, arguing that dramatic predictions are distorting public understanding and policy making.

Huang said influential voices have helped popularise an image of AI as an unstoppable threat, borrowing heavily from the language of science fiction. "I think we've done a lot of damage with very well-respected people who have painted a doomer narrative, end-of-the-world narrative, science fiction narrative," he said on the podcast. "We grew up enjoying science fiction, but it's not helpful. It's not helpful to people, to the industry, to society or to governments."

According to TechSpot, Huang also cautioned that such messaging often shapes regulation. Alarmist scenarios, he argued, can encourage governments to draft restrictive policies that may slow innovation or disadvantage smaller companies trying to enter the AI ecosystem. During the discussion, Huang suggested that some of the loudest warnings about AI risks may come from leaders with competing commercial interests.
He raised concerns about regulatory capture, a situation where established firms influence rules in ways that limit competition. While stopping short of naming individuals, Huang said intentions can become "deeply conflicted" when technology executives lobby governments to impose strict controls on emerging players.

At the same time, he acknowledged that not all criticism of AI is misguided. "It's too simplistic to say everything the doomers are saying is irrelevant," he said, adding that several concerns are sensible, even if he did not detail them.

Rather than focusing on hypothetical future catastrophes, Huang urged attention on present-day technical challenges. He compared AI safety to automobile design. The first measure of safety, he argued, is whether a system works as intended. "The first part of safety is performance," Huang said. "That it works as advertised." Over the past two to three years, he noted, the industry has invested heavily in improving reasoning, research capability and accuracy, making AI systems more predictable and useful. He also pointed to rising investment in synthetic data, cybersecurity applications and monitoring tools that allow AI systems to supervise one another, reducing the likelihood of unchecked behaviour. As AI becomes cheaper to deploy, Huang believes oversight will increase rather than disappear, with multiple systems watching and correcting each other.

Huang's views carry weight not only because of his long tenure but because of Nvidia's central role in the AI boom. The Taiwanese American entrepreneur co-founded Nvidia in 1993 and has led it since. Under his leadership, the company pioneered the graphics processing unit in 1999 and later transformed those chips into the backbone of modern AI training through its CUDA software platform. By October 2025, Nvidia became the first company to cross a $5 trillion market valuation, driven largely by demand for its AI hardware.
As of January 2026, Huang's personal net worth is estimated at about $164 billion, placing him among the world's richest individuals. His position has often made him one of AI's most vocal optimists. Last year, when Anthropic chief executive Dario Amodei warned that AI could eliminate half of entry-level white-collar jobs within five years, Huang dismissed the claim as fear-driven positioning. He has also drawn criticism for encouraging employees to use AI tools extensively and for suggesting that AI would change jobs rather than replace them, often by increasing workloads.

[12]

NVIDIA's CEO Says You Shouldn't Talk Bad About AI, Saying That Those Fueling the "Doomer Narrative" Are Deeply Conflicted People

NVIDIA's CEO has spoken out against those who spread negativity about AI, saying their intentions are not in the "best interests of society". While AI developments have generated optimism across the tech industry, largely because the technology has transformed many human workloads, there is still widespread skepticism among the broader public that AI will lead to unemployment by replacing many forms of human labor. In an interview with No Priors, NVIDIA's CEO called this sentiment the "doomer narrative", claiming that such talk about AI does no good. More importantly, he said that those pushing this narrative aren't ordinary people but CEOs lobbying governments.

"And so it sounds like it's really important to have this conversation. Extremely hurtful, frankly. And I think we've done a lot of damage with very well-respected people who have painted a doomer narrative, end of the world narrative, science fiction narrative. And I appreciate that many of us grew up and enjoyed science fiction, but it's not helpful. It's not helpful to people. It's not helpful to the industry. It's not helpful to society. It's not helpful to the governments. There are a lot of people in the government who obviously aren't as familiar with, as comfortable with, the technology." - NVIDIA's CEO

Jensen didn't mention any names when discussing this topic, but he did say that companies pushing for AI regulations have gotten it all wrong. Judging by this, NVIDIA's CEO is likely referring to Anthropic's CEO, Dario Amodei, who has voiced support for AI regulations and warned that the technology could take "half of white collar jobs". Both CEOs have openly criticized each other in public, and since Jensen specifically mentioned CEOs being involved, it seems fair to say his remarks were aimed at Amodei.
NVIDIA's CEO argues that AI regulations ultimately hinder progress, whether in the form of chip export controls or other barriers to AI advancement. He says that while elements within the industry have tried to slow down AI progress, the technology is now helping on several mainstream fronts, and he therefore argues that further developing AI is now a necessity.

"Remember, just two years ago, people were talking about slowing the industry down. But as we advance quickly, what did we solve? We solved grounding. We solved reasoning. We solved research. All of that technology was applied for good, improving the functionality of the AI. The end has not come. It's become more useful. It's become more functional. It's become able to do what we ask it to do, you know? And so the first part of the safety of a product is that it performs as advertised. The first part of safety is performance. Like, the first part of safety of a car isn't that some person is going to jump into the car and use it as a missile. The first part of the car is it works as advertised."

There's no doubt that AI has become increasingly mainstream, driven by substantial investments, data center buildouts, and, of course, compute advancements from the likes of NVIDIA and AMD. AI is gradually moving into a world where it is divided into multiple layers, such as generative, agentic, physical and others. Viewed from a wider perspective, the primary objective of these advancements is to automate elements of human labor and improve efficiency. Or at least, that is the narrative put forward by NVIDIA's Jensen Huang.


Nvidia CEO Jensen Huang criticized what he calls the AI doomer narrative in a recent podcast appearance, arguing that negativity around AI has done significant damage to society and the industry. Huang dismissed fears of god AI as science fiction while advocating for practical applications that enhance human productivity.

Jensen Huang Takes Aim at Negativity Around AI

Nvidia CEO Jensen Huang has launched a pointed critique of what he terms the doomer narrative surrounding AI, arguing that widespread pessimism about the technology is causing real harm. Speaking on the No Priors podcast, Huang stated that "extremely hurtful" messaging from "very well-respected people" has painted AI in an unnecessarily dark light. The billionaire CEO, whose company powers much of the AI industry through its specialized chips, said the relentless focus on end-of-the-world scenarios is "not helpful to people, not helpful to the industry, not helpful to society, not helpful to the governments" [2].


Huang's comments come as concerns about AI's potential harms have intensified, ranging from job displacement to misinformation and expanded surveillance. His net worth has surged by nearly $100 billion since the AI boom began, and Nvidia has become one of the world's most valuable companies, with a valuation approaching $5 trillion [3]. The CEO acknowledged that "it's too simplistic" to dismiss all criticism entirely, but maintained that the science fiction narrative around AI has done substantial damage to progress and investment in the technology [5].

The Myth of God AI

Huang directly addressed fears about superintelligent systems, declaring that no researcher currently has "any reasonable ability to create god AI." He defined this hypothetical system as one that could supremely understand human language, genome language, molecular language, protein language, amino-acid language, and physics language simultaneously. "That god AI just doesn't exist," Huang stated flatly, adding that such technology won't arrive "next week" and may remain on a biblical or galactic timescale [4].


The Nvidia chief expressed strong opposition to the idea of a monolithic AI system controlled by a single company, country, or nation-state, calling it "super unhelpful" and "too extreme." He suggested that if concerns reach that level, "we should just stop everything," but emphasized his belief that current technology remains far from such scenarios.

Clashes Over AI Regulation and Industry Direction

Huang took particular issue with companies seeking AI regulation from governments, suggesting their intentions are "clearly deeply conflicted" and "not completely in the best interest of society." Without naming names, he appeared to reference Anthropic CEO Dario Amodei, who has advocated for tighter controls on AI technology [2]. Last June, Huang said he "pretty much disagreed with almost everything" Amodei had said about AI potentially eliminating half of entry-level white-collar jobs within five years and pushing unemployment as high as 20% [2].

The two executives had also clashed in May 2025 over AI chip export restrictions to countries like China. While Anthropic argued for tighter enforcement and highlighted unusual smuggling cases, Nvidia pushed back against claims that its chips had been smuggled in fake pregnant bellies or alongside live lobsters [2]. Concerns about regulatory capture have grown as Silicon Valley firms have poured more than $100 million into new Super PACs pushing pro-AI messaging ahead of the 2026 midterm elections, according to the Wall Street Journal [3].

Focus on Human Productivity and Practical Applications

Huang argued that AI should function as a tool to enhance human productivity rather than replace workers entirely. He highlighted potential applications like "AI immigrants", robots that could address ongoing labor shortages in various industries [4]. The CEO suggested that negative messaging scares people away from making investments that could make AI "safer, more functional, more productive, and more useful to society" [2].


However, real-world data presents a more complex picture. Stanford University reported that job listings dropped 13% over three years due to AI, while Fortune found that 95% of AI implementations have had no measurable impact on profit and loss statements. Despite these findings, tech companies continue expanding AI infrastructure globally. Meta recently announced a 6-gigawatt-capable nuclear power plant aimed at powering AI datacenters, following OpenAI's Stargate Project.

Estimates now suggest more than 20% of YouTube's feed consists of AI-generated slop, while the number of people losing jobs to AI-related technologies continues to grow [2]. Huang isn't alone among tech executives complaining about public perception: Microsoft's Satya Nadella recently urged moving the conversation beyond "slop," while Mustafa Suleyman, CEO of Microsoft's AI group, called public criticism "mind-blowing" in November [2]. Critics note that dismissing concerns about misinformation, job displacement, and mental health impacts while advocating for accelerated development raises questions about whether the push for optimism serves society or primarily benefits those profiting from the technology [3].

References

Summarized by

Navi

[1]

[2]

[4]
