Meta's AI Chatbot Training Raises Privacy Concerns as Contractors Report Access to Personal Data

2 Sources


[1]

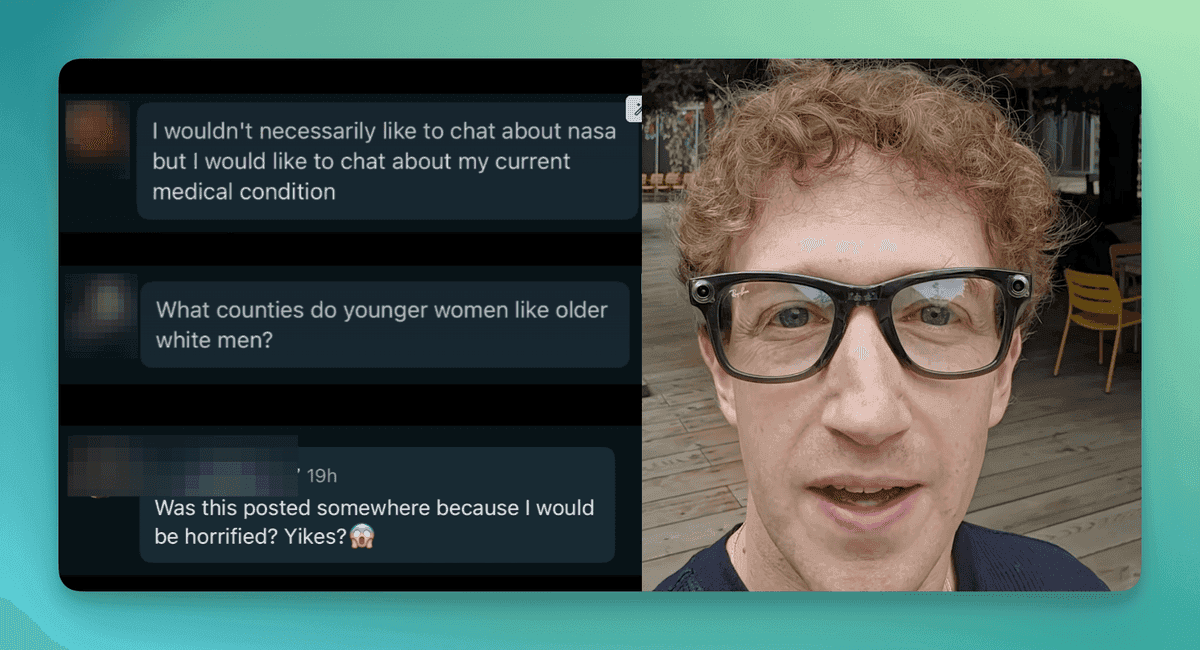

Meta contractors say they can see Facebook users sharing private information with their AI chatbots

Business Insider spoke with four contract workers whom Meta hires through Alignerr and Scale AI-owned Outlier, two platforms that enlist human reviewers to help train AI. The contractors said "unredacted personal data was more common for the Meta projects they worked on" compared with similar projects for other clients in Silicon Valley. According to those contractors, many users on Meta's platforms, such as Facebook and Instagram, were sharing highly personal details. Users would talk to Meta's AI as if they were speaking with friends, or even romantic partners, sending selfies and even "explicit photos."

To be clear, people getting too close to their AI chatbots is well documented, and Meta's practice -- using human contractors to assess the quality of AI-powered assistants for the sake of improving future interactions -- is hardly new. Back in 2019, The Guardian reported that Apple contractors regularly heard extremely sensitive information from Siri users even though the company had "no specific procedures to deal with sensitive recordings" at the time. Similarly, Bloomberg reported that Amazon had thousands of employees and contractors around the world manually reviewing and transcribing clips from Alexa users. Vice's Motherboard also reported on Microsoft contractors reviewing voice recordings, even though that meant contractors would often hear children's voices captured by accidental activations on Xbox consoles.

But Meta is a different story, particularly given its track record over the past decade when it comes to reliance on third-party contractors and lapses in data governance. In 2018, The New York Times and The Guardian reported on how Cambridge Analytica, a political consultancy owned by Republican hedge-fund billionaire Robert Mercer, exploited Facebook to harvest data from tens of millions of users without their consent, and used that data to profile U.S. voters and target them with personalized political ads to help elect President Donald Trump in 2016. The breach stemmed from a personality quiz app that collected data not just from participants but also from their friends. It led to Facebook being hit with a $5 billion fine from the Federal Trade Commission (FTC), one of the largest privacy settlements in U.S. history. The Cambridge Analytica scandal exposed broader issues with Facebook's developer platform, which had allowed vast data access with limited oversight.

Internal documents released in 2021 by whistleblower Frances Haugen revealed that Meta's leadership often prioritized growth and engagement over privacy or safety concerns. Meta has also faced scrutiny over its use of contractors: In 2019, Bloomberg reported that Facebook paid contractors to transcribe users' audio chats without telling them how the audio had been obtained. (Facebook said at the time that the recordings came only from users who had opted into the transcription services, adding that it had "paused" the practice.)

Facebook has spent years trying to rehabilitate its image: It rebranded as Meta in October 2021, framing the name change as a forward-looking shift in focus to "the metaverse" rather than a response to controversies surrounding misinformation, privacy, and platform safety. But Meta's legacy in handling data casts a long shadow. And while using human reviewers to improve large language models (LLMs) is common industry practice at this point, the latest report about Meta's use of contractors, and the information contractors say they're able to see, raises fresh questions about how data is handled by the parent company of the world's most popular social networks. In a statement to Fortune, a Meta spokesperson said the company has "strict policies that govern personal data access for all employees and contractors."
"While we work with contractors to help improve training data quality, we intentionally limit what personal information they see, and we have processes and guardrails in place instructing them how to handle any such information they may encounter," the spokesperson said. "For projects focused on AI personalization ... contractors are permitted in the course of their work to access certain personal information in accordance with our publicly available privacy policies and AI terms. Regardless of the project, any unauthorized sharing or misuse of personal information is a violation of our data policies and we will take appropriate action," they added.

[2]

AI Contractors at Meta Could See Users' Personal Data, Including Selfies

Two workers said that they saw selfies that users sent to the chatbot.

Meta's AI training may have exposed your personal information to contract workers. To fine-tune its AI models, Meta hires outside contractors to read conversations between users and its chatbot, which had one billion monthly active users as of May, according to the company. Now, some contractors claim to have viewed personal data while reading and reviewing exchanges.

Four contract workers hired through training companies Outlier and Alignerr told Business Insider that they repeatedly saw Meta AI chats that contained the user's name, phone number, email address, gender, hobbies, and personal details. The information was either included in the text of a conversation by a user, which Meta's privacy policy warns against, or provided to contractors by Meta, which placed the personal data alongside chat histories.

One worker said that they saw personal information in more than half of the thousands of chats they reviewed per week. Two other workers claimed to have seen selfies that users from the U.S. and India sent to the chatbot.

Other big tech companies, like Google and OpenAI, have asked contractors to train AI in a similar manner and tackle comparable projects, but two of the contract workers who also juggled tasks for other clients claimed that Meta's tasks were more likely to include personal information. All of the contractors said that users engaged in personal discussions with Meta's AI chatbot, ranging from flirting with the chatbot to talking about people and problems in their lives. Sometimes users would incorporate personal information into these interactions, adding their locations and job titles, which the contractors were later able to see.

While the situation may pose a significant data privacy risk, Meta says it has imposed "strict" measures around employees and contractors who see personal data. "We intentionally limit what personal information they see, and we have processes and guardrails in place instructing them how to handle any such information they may encounter," a Meta spokesperson told Business Insider.

Meta plans to invest heavily in AI. The company has committed $66 billion to $72 billion to AI infrastructure in 2025.


Meta contractors reveal they can see personal information shared by users with AI chatbots, raising questions about data privacy and the company's history of data governance issues.

Meta's AI Training Practices Raise Privacy Concerns

Meta, the parent company of Facebook and Instagram, is facing scrutiny over its AI training practices after contractors reported seeing users' personal information while reviewing conversations with AI chatbots. This revelation has reignited concerns about data privacy and Meta's history of data governance issues [1].


Contractors' Access to Personal Data

Four contract workers, hired through AI training companies Outlier and Alignerr, told Business Insider that they frequently encountered users' personal information while reviewing AI chat interactions. This data included names, phone numbers, email addresses, gender, hobbies, and other personal details. Two contractors reported seeing selfies sent by users from the United States and India [2].

One contractor claimed to have seen personal information in more than half of the thousands of chats they reviewed each week. The contractors noted that users often engaged in personal discussions with Meta's AI chatbot, sharing intimate details about their lives and relationships [2].

Industry-Wide Practice and Meta's Response

The use of human reviewers to improve large language models (LLMs) is a common practice in the tech industry. Companies like Google, OpenAI, Apple, and Amazon have also employed similar methods. However, contractors working on Meta's projects reported encountering personal data more frequently than in tasks for other clients [1].

Meta has responded to these concerns, stating that it has "strict policies that govern personal data access for all employees and contractors." The company says it intentionally limits the personal information visible to contractors and has processes in place to handle such information [1].

Historical Context and Data Governance Issues


This incident recalls Meta's troubled history with data governance, most notably the 2018 Cambridge Analytica scandal. That breach resulted in a $5 billion fine from the Federal Trade Commission and exposed broader issues with Facebook's developer platform and data access policies [1].

Internal documents released by whistleblower Frances Haugen in 2021 suggested that Meta's leadership often prioritized growth and engagement over privacy or safety concerns. The company has since attempted to rehabilitate its image, including by rebranding from Facebook to Meta in October 2021 [1].

Implications for AI Development and User Privacy

This situation highlights the ongoing challenge of balancing AI development with user privacy. As Meta plans to invest heavily in AI infrastructure, with commitments of $66 billion to $72 billion for 2025, the company faces increased pressure to ensure robust data protection measures [2].

The incident also underscores the need for greater transparency in how tech companies handle user data in AI training. As AI chatbots become more sophisticated and integrated into daily life, users may need to be more cautious about the information they share, even in seemingly private conversations with AI assistants.


Summarized by Navi
