Microsoft Unveils Mu: A Small Language Model Powering AI-Enhanced Windows 11 Settings

5 Sources

[1]

Meet Mu, the small language model in charge of Microsoft's Settings AI agent

In brief: Small language models are generally more compact and efficient than LLMs, as they are designed to run on local hardware or edge devices. Microsoft is now bringing yet another SLM to Windows 11, as users apparently need a few AI-powered hints to help them find specific OS settings and customize their PC experience.

Microsoft recently announced Mu, a new small language model designed to integrate with the Windows 11 UI experience. Mu will work alongside Phi Silica - the language model previously introduced on Copilot+ PCs - to power the AI agent feature in Windows Settings that the company is currently developing. Like Phi Silica, Mu will run entirely on the system's neural processing unit, delivering responses at over 100 tokens per second, which should provide a smooth user experience in Settings.

Microsoft describes Mu as an efficient 330-million-parameter model optimized for small-scale deployments. It is based on a transformer encoder-decoder architecture, where the encoder converts user input into a fixed-length latent representation, and the decoder generates output tokens from that representation. Microsoft designed Mu as a highly optimized "sibling" to Phi Silica, using several techniques to reduce the parameter count and improve efficiency.

The SLM was trained using an unspecified number of A100 GPUs on the Azure Machine Learning platform, progressing through multiple phases and applying techniques that Redmond developed during work on the Phi models. After fine-tuning the model, the developers found that Mu delivers performance nearly comparable to Phi-3.5-mini, despite being just one-tenth its size. Furthermore, Microsoft collaborated with silicon partners Intel, AMD, and Qualcomm to ensure that Mu is fully optimized for running on local NPU-powered machines.

What was the point of all these optimization efforts? According to Redmond, Mu will power the new "agentic" experience in the upcoming AI-enhanced Settings app. The AI and cloud giant aims to provide a new "chat" feature that lets Windows users ask settings-related questions in natural language and receive effective answers, even when they barely know what they're looking for. Agentic AI in the Settings app is supposed to improve Windows' ease of use.

Microsoft acknowledged that training an SLM capable of handling (and modifying) hundreds of system settings was a challenge, and the company says it welcomes user feedback on the new feature. Personally, I never experienced any usability or discoverability issues with Settings during eight years of using Windows 10, yet Microsoft remains bullish about transforming Windows into an "AI-first" platform.
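As a rough sanity check on the figures quoted above, the short Python sketch below works through the size ratio and the decode time implied by the stated throughput. The 3.8-billion-parameter figure for Phi-3.5-mini is an assumption taken from that model's published size, not from this article, and the helper name `generation_time_ms` is purely illustrative. It also ignores first-token latency, so it is only an approximation.

```python
# Back-of-the-envelope figures. Only the constants marked "source" come from the article.
MU_PARAMS = 330e6            # source: 330 million parameters
PHI_35_MINI_PARAMS = 3.8e9   # assumption: Phi-3.5-mini's published size, not stated above
TOKENS_PER_SECOND = 100      # source: "over 100 tokens per second"


def generation_time_ms(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a steady decode rate, ignoring first-token latency."""
    return 1000.0 * num_tokens / tokens_per_second


size_ratio = MU_PARAMS / PHI_35_MINI_PARAMS
print(f"Mu is about {size_ratio:.1%} the size of Phi-3.5-mini")
print(f"20 tokens at 100 tok/s: {generation_time_ms(20, TOKENS_PER_SECOND):.0f} ms")
```

Under these assumptions the ratio comes out a little under one-tenth, which is consistent with the "one-tenth its size" description, and a short 20-token reply would take roughly a fifth of a second to decode.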

[2]

Microsoft's 'Mu' Will Power More Windows 11 Improvements

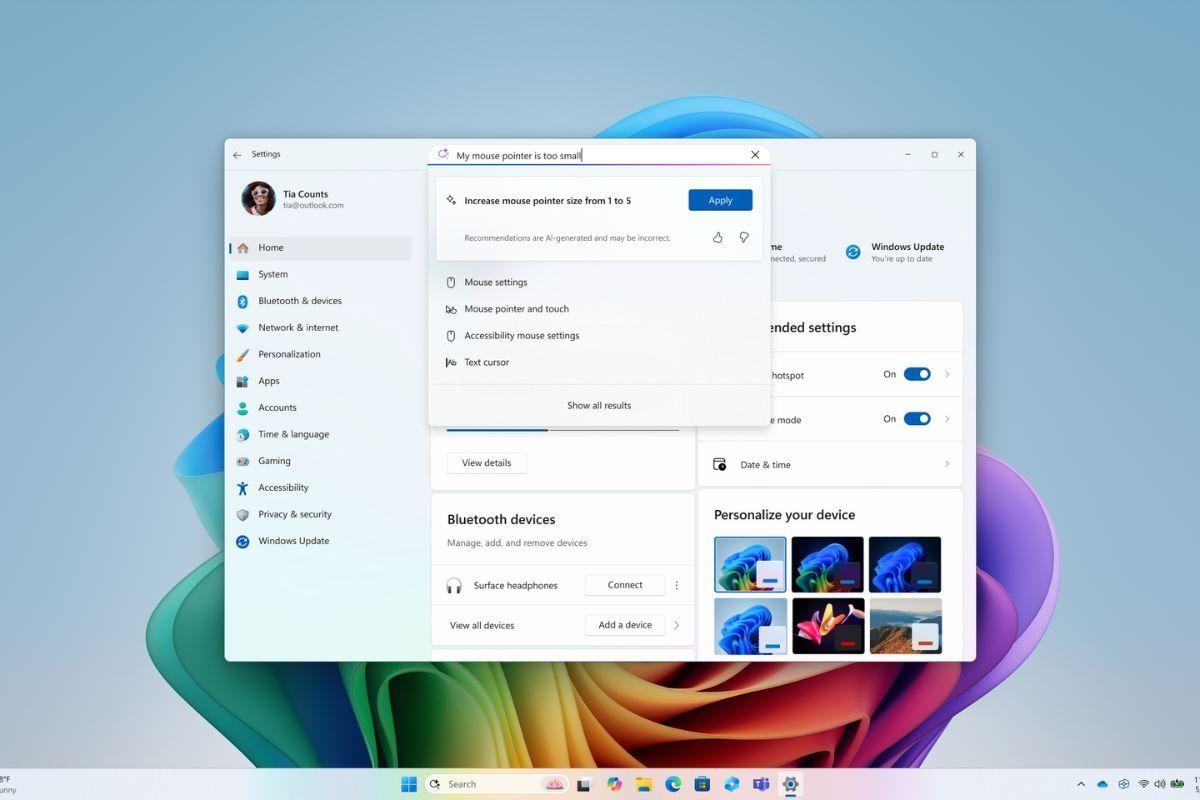

Large language models (LLMs) are the talk of the town, but small language models are also important for certain tasks, especially on power-limited devices like phones and laptops. Microsoft just revealed its new Mu language model, and it's already powering some Windows 11 features.

Microsoft already uses a small language model called Phi Silica in Windows 11, allowing Copilot+ PC features to work without slowdowns on chipsets like the Snapdragon X Plus. Popular AI chatbots like ChatGPT, Copilot, and Gemini use more advanced LLMs that require powerful GPUs, but smaller models like Phi Silica and Mu can achieve similar results with a fraction of the processing power, at the cost of less versatility.

Mu is a "micro-sized, task-specific language model" designed to run efficiently on a Neural Processing Unit, or NPU, like the ones found in recent Copilot+ PC computers. Microsoft used many different optimization techniques to achieve high performance on limited power, including a transformer encoder-decoder architecture, weight sharing in certain components to reduce the total parameter count, and only using hardware-accelerated operations. Microsoft says Mu can run at more than 200 tokens per second on a Surface Laptop 7, which is a faster response than you'd typically get from the free versions of ChatGPT or Gemini in a web browser.

The Mu model is being used first for the search bar in the Windows 11 Settings app, which rolled out recently to Windows Insiders on Snapdragon PCs. It can understand prompts like "how to control my PC by voice" or "my mouse pointer is too small" and locate the correct setting. It's not clear if Mu will be used for other Copilot+ PC features.

Microsoft said in a blog post, "Managing the extensive array of Windows settings posed its own challenges, particularly with overlapping functionalities. For instance, even a simple query like 'Increase brightness' could refer to multiple settings changes - if a user has dual monitors, does that mean increasing brightness to the primary monitor or a secondary monitor? To address this, we refined our training data to prioritize the most used settings as we continue to refine the experience for more complex tasks."

Lightweight language models that run locally are some of the best uses for generative AI, since responsiveness and data privacy are much easier to achieve when there are no cloud servers involved. That didn't stop Recall from nearly being a security disaster, though.

Source: Windows Blog

[3]

Microsoft Launches Mu, a Small Language Model That Runs Locally on Copilot+ PCs | AIM

The 330 million parameter model was trained using Azure's A100 GPUs and fine-tuned through a multi-phase process.

Microsoft has introduced Mu, a compact on-device language model designed to run entirely on neural processing units (NPUs) in Copilot+ PCs. It is already powering the AI agent in the Windows Settings app for users in the Dev Channel under the Windows Insider Program.

Mu is built on a Transformer encoder-decoder architecture, enabling faster, more efficient inference by separating input and output tokens. According to Microsoft, the model achieves over 100 tokens per second and reduces first-token latency by 47% compared to similarly sized decoder-only models. "This model addresses scenarios that require inferring complex input-output relationships," the company said in a blog post. "It delivers high performance while running locally."

Mu uses features like dual LayerNorm, rotary positional embeddings, and grouped-query attention to improve inference speed and accuracy within constrained edge-device budgets. To meet device-specific requirements, Microsoft applied post-training quantisation, converting model weights from floating-point to lower-precision formats, such as 8-bit and 16-bit integers. These optimisations were carried out in collaboration with hardware partners including AMD, Intel, and Qualcomm. On Surface Laptop 7, Mu can generate over 200 tokens per second.

One of Mu's first applications was as an agent in Windows Settings. It maps natural language queries to system actions, allowing users to adjust system settings using everyday language. The agent is integrated into the Settings search box and activates for multi-word queries, while short or vague inputs continue to trigger traditional search responses.

While a larger Phi LoRA model initially met accuracy benchmarks, its latency exceeded acceptable limits. Mu, with task-specific fine-tuning, managed to meet both performance and latency goals. Microsoft said it scaled the training dataset to 3.6 million samples and expanded support from 50 to hundreds of system settings, using automated labelling, prompt tuning, and noise injection to improve model precision.

"We observed that the model performed best on multi-word queries that conveyed clear intent, as opposed to short or partial-word inputs, which often lack sufficient context for accurate interpretation," the blog noted. In cases where a user query may imply multiple settings, such as "increase brightness" on a dual-monitor setup, training data was prioritised to reflect the most common user scenarios.

Mu's development builds on earlier work with the Phi and Phi Silica models and is expected to serve as a foundation for future AI agents on Windows devices.
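The post-training quantisation mentioned above can be illustrated with a minimal Python sketch. The article only says that weights were converted from floating-point to 8-bit and 16-bit integers. The symmetric, per-tensor scheme shown here is an assumption chosen for simplicity, and the function names `quantize_int8` and `dequantize` are hypothetical, not Microsoft's.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one scale for the whole tensor.

    Assumed scheme: symmetric, per-tensor. Real toolchains may use per-channel
    scales, zero points, or calibration data instead.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


weights = [0.12, -0.5, 0.33, 1.0, -0.98]   # made-up example values
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The rounding error per weight is bounded by half a quantisation step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, f"max error = {max_error:.5f}")
```

The trade-off this sketch makes visible is that each weight shrinks from a 32-bit float to an 8-bit code at the cost of a small, bounded rounding error, which is the general reason quantised models fit more comfortably on NPUs.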

[4]

This AI Model Is Behind the Agents Feature in Windows 11 Settings

The Mu AI model responds at over 100 tokens per second. Mu has an encoder-decoder architecture. The AI model comes with 330 million parameters.

Microsoft has introduced Mu, a new artificial intelligence (AI) model that can run locally on a device. Last week, the Redmond-based tech giant released new Windows 11 features in beta, among which was the new AI agents feature in Settings. The feature allows users to describe what they want to do in the Settings menu, and uses AI agents to either navigate to the option or autonomously perform the action. The company has now confirmed that the feature is powered by the Mu small language model (SLM).

In a blog post, the tech giant detailed its new AI model. It is currently deployed entirely on-device in compatible Copilot+ PCs, and it runs on the device's neural processing unit (NPU). Microsoft has worked on the optimisation and latency of the model and claims that it responds at more than 100 tokens per second to meet the "demanding UX requirements of the agent in Settings scenario."

Mu is built on a transformer-based encoder-decoder architecture featuring 330 million parameters, making the SLM a good fit for small-scale deployment. In such an architecture, the encoder first converts the input into a fixed-length latent representation, which is then analysed by the decoder, which also generates the output. Microsoft said this architecture was preferred due to its high efficiency and optimisation, which is necessary when functioning with limited computational bandwidth. To keep the model aligned with the NPU's restrictions, the company also tuned the layer dimensions and optimised the parameter distribution between the encoder and decoder.

Distilled from the company's Phi models, Mu was trained using A100 GPUs on Azure Machine Learning. Typically, distilled models exhibit higher efficiency compared to the parent model. Microsoft further improved its efficiency by pairing the model with task-specific data and fine-tuning via low-rank adaptation (LoRA) methods. Interestingly, the company claims that Mu performs at a level similar to Phi-3.5-mini despite being one-tenth the size.

The tech giant also had to solve another problem before the model could power AI agents in Settings: it needed to be able to handle input and output tokens to change hundreds of system settings. This required not only a vast knowledge network but also low latency to complete tasks almost instantaneously. Hence, Microsoft massively scaled up its training data, going from 50 settings to hundreds, and used techniques like synthetic labelling and noise injection to teach the AI how people phrase common tasks. After training with more than 3.6 million examples, the model became fast and accurate enough to respond in under half a second, the company claimed.

One important challenge was that Mu performed better with multi-word queries than with shorter or vague phrases. For instance, typing "lower screen brightness at night" gives it more context than just typing "brightness." To solve this, Microsoft continues to show traditional keyword-based search results when a query is too vague.

Microsoft also observed a language-based gap in instances where a query could apply to more than one setting (for instance, "increase brightness" could refer to the device's screen or an external monitor). To address this gap, the AI model currently focuses on the most commonly used settings. This is something the tech giant continues to refine.
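The fallback behaviour described above, where multi-word queries go to the agent and short or vague ones get traditional keyword results, could be sketched roughly as follows. This is only an illustration: the function name `route_query`, the return labels, and the two-word threshold are all hypothetical, and the article does not say how Windows actually decides when a query is too vague.

```python
def route_query(query: str, min_words: int = 2) -> str:
    """Pick a handler for a Settings search query.

    Assumption: a simple word count stands in for "conveys clear intent".
    The real feature very likely uses richer signals than this.
    """
    words = query.split()
    if len(words) >= min_words:
        return "agent"            # hand the query to the Mu-powered agent
    return "keyword_search"       # fall back to traditional search results


for example in ["lower screen brightness at night", "brightness"]:
    print(f"{example!r} -> {route_query(example)}")
```

Keeping the keyword path as the default for sparse input means a user who types a single word still gets the familiar search results rather than a low-confidence guess from the model.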

[5]

What is Microsoft's Mu: A small AI model for Windows 11 Settings

Windows 11 is getting a new kind of assistant, not one that floats in a sidebar or takes over your screen, but one that subtly reshapes how you interact with your computer's settings. It's called Mu, a small AI model developed by Microsoft, and it lives quietly inside the Settings app on Copilot+ PCs. Built to understand natural language commands and help users navigate system preferences, Mu may be small in size, but it marks a big shift in how operating systems are adapting to AI.

Unlike Microsoft's more publicized Copilot integrations powered by large cloud-based language models, Mu is entirely on-device. Think of it as the tech-savvy librarian behind the counter who can instantly point you to the exact book you're looking for, or better yet, fetch it for you. It's trained to understand requests like "make text easier to read" or "turn on battery saver," and then either guide users to the correct setting or apply the change directly (with permission). No menus. No tutorials. Just a few words typed or spoken in natural language.

Mu is part of a new breed of artificial intelligence called Small Language Models (SLMs). These are scaled-down versions of the giants like GPT-4 or Claude, optimized to run efficiently on devices with limited computing resources. At just 330 million parameters, Mu is a featherweight compared to its larger counterparts, but it's been specifically trained to handle real-time Windows user tasks. It runs on dedicated Neural Processing Units (NPUs) found in new Copilot+ PCs, especially those powered by Qualcomm's Snapdragon X chips, with blazing fast response times under 500 milliseconds.

The technology behind Mu is built on Microsoft's Phi family of models, internal research projects focused on maximizing AI performance within tight computational constraints. By distilling the capabilities of larger transformer models into a smaller encoder-decoder format, Mu is able to maintain a conversational feel while being nimble enough to run directly on your laptop. This local processing also means that no user data needs to leave your device, which is a significant privacy benefit at a time when AI is often associated with cloud surveillance and data scraping.

For now, Mu's reach is modest. It only lives inside the Settings app and only on Copilot+ PCs. But that modesty is intentional. Microsoft isn't aiming to wow users with flashy conversations or personality-driven chatbots. Instead, Mu is part of a quiet revolution in user experience, making Windows feel more intuitive, more responsive, and more helpful without getting in your way. It's a utility, not a show.

The rollout is currently limited to Windows Insiders. Users on the Dev Channel and Beta Channel with compatible hardware can already test the Mu-powered Settings agent. It's still early days, but the feedback so far indicates the feature is fast, effective, and surprisingly human-like in its understanding.

And that's what sets Mu apart. It doesn't try to be everything. It tries to be useful. In an era of increasingly bloated AI features, Mu is refreshingly focused. It's a tool you forget exists, until you need it, and it works.

Looking ahead, Microsoft plans to expand Mu's capabilities with support for additional languages and deployment across AMD and Intel Copilot+ PCs once those devices become widely available. There's also the potential for Mu-like models to quietly show up in other corners of the Windows experience, maybe File Explorer, maybe Settings Sync, maybe Accessibility tools.

For now, though, Mu is exactly where it needs to be: behind the scenes, making your system feel smarter, without showing off. And that's the kind of AI upgrade most users won't even notice, but they'll never want to live without.

Microsoft introduces Mu, a compact on-device language model designed to run on neural processing units in Copilot+ PCs, powering an AI agent in Windows 11 Settings for improved user experience.

Microsoft Introduces Mu: A Compact AI Model for Windows 11

Microsoft has unveiled Mu, a new small language model (SLM) designed to enhance the Windows 11 user experience. This 330 million parameter model is specifically created to run entirely on neural processing units (NPUs) in Copilot+ PCs, marking a significant step in Microsoft's efforts to integrate AI into its operating system [1][2].

Mu's Architecture and Capabilities

Mu is built on a transformer encoder-decoder architecture, which enables faster and more efficient inference by separating input and output tokens. This design allows Mu to achieve impressive performance, generating over 100 tokens per second and reducing first-token latency by 47% compared to similarly sized decoder-only models [3].

The model was trained using Azure's A100 GPUs and underwent a multi-phase fine-tuning process. Microsoft employed various optimization techniques, including dual LayerNorm, rotary positional embeddings, and grouped-query attention, to improve inference speed and accuracy within the constraints of edge devices [3].

Integration with Windows 11 Settings

One of Mu's primary applications is powering the AI agent feature in the Windows 11 Settings app. This integration allows users to interact with system settings using natural language queries, making it easier to locate and modify specific options [1][4].

Microsoft faced challenges in training Mu to handle hundreds of system settings while maintaining low latency. The company scaled up its training dataset to 3.6 million samples and expanded support from 50 to hundreds of system settings. Techniques such as automated labelling, prompt tuning, and noise injection were used to improve the model's precision [3][4].

Performance and Optimization

Microsoft claims that Mu delivers performance nearly comparable to Phi-3.5-mini despite being just one-tenth its size. The company collaborated with silicon partners Intel, AMD, and Qualcomm to ensure that Mu is fully optimized for running on local NPU-powered machines [1][2].

To meet device-specific requirements, Microsoft applied post-training quantization, converting model weights from floating-point to lower-precision formats, such as 8-bit and 16-bit integers [3].

User Experience and Privacy Considerations

Mu's on-device processing capabilities offer significant privacy benefits, as no user data needs to leave the device for AI-powered interactions [5]. The model is designed to understand multi-word queries that convey clear intent, providing more accurate responses to specific user requests [3][4].

Microsoft acknowledges that the model performs best with detailed queries rather than short or vague inputs. To address this, traditional keyword-based search results are still shown when a query lacks sufficient context [4].

Future Developments and Implications

While Mu is currently limited to the Settings app on Copilot+ PCs, its development builds on earlier work with the Phi and Phi Silica models. Microsoft envisions Mu as a foundation for future AI agents on Windows devices, potentially expanding to other areas of the operating system [3][5].

As Microsoft continues to refine the Mu model and its applications, the company is actively seeking user feedback on this new AI-enhanced feature in Windows 11. This development represents a significant step towards Microsoft's goal of transforming Windows into an "AI-first" platform, although some users may question the necessity of such AI integration for basic system settings [1][5].

Summarized by

Navi
