Viral Call Recording App Neon Taken Down After Major Security Breach

17 Sources

[1]

Exclusive: Neon takes down app after exposing users' phone numbers, call recordings, and transcripts

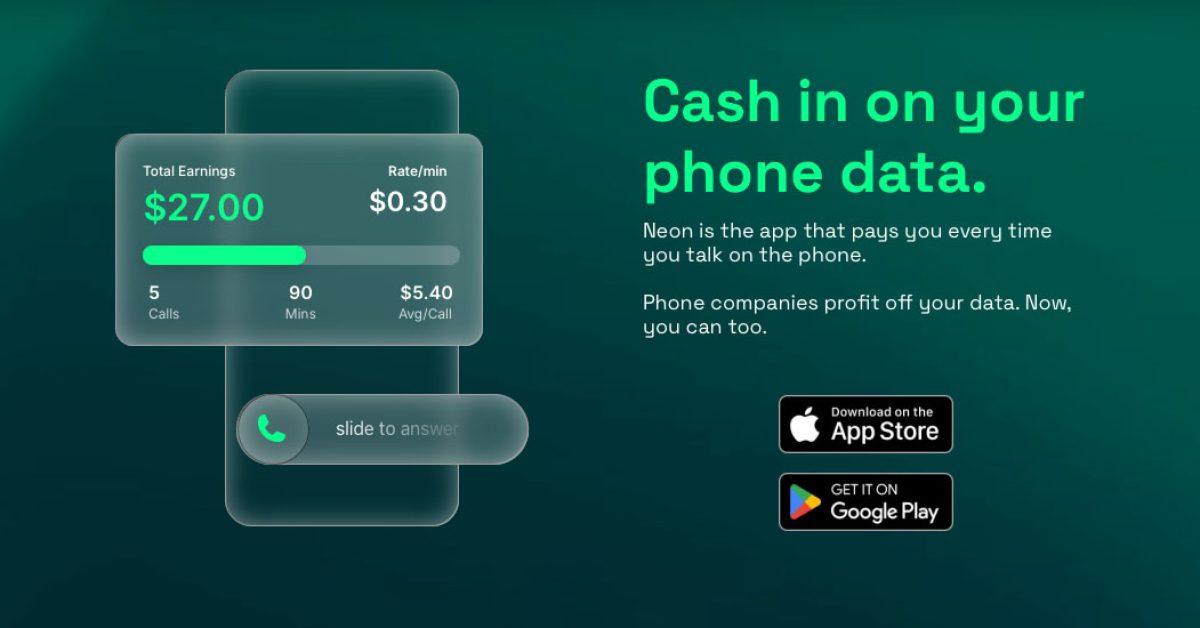

A viral app called Neon, which offers to record your phone calls and pay you for the audio so it can sell that data to AI companies, has rapidly risen to the ranks of the top-five free iPhone apps since its launch last week. The app already has thousands of users and was downloaded 75,000 times yesterday alone, according to app intelligence provider Appfigures. Neon pitches itself as a way for users to make money by providing call recordings that help train, improve, and test AI models. But now Neon has gone offline, at least for now, after a security flaw allowed anyone to access the phone numbers, call recordings, and transcripts of any other user, TechCrunch can now report. TechCrunch discovered the security flaw during a short test of the app on Thursday. We alerted the app's founder, Alex Kiam (who previously did not respond to a request for comment about the app), to the flaw soon after our discovery. Kiam told TechCrunch later Thursday that he took down the app's servers and began notifying users about pausing the app, but fell short of informing his users about the security lapse. The Neon app stopped functioning soon after we contacted Kiam. At fault was the fact that the Neon app's servers were not preventing any logged-in user from accessing someone else's data. TechCrunch created a new user account on a dedicated iPhone and verified a phone number as part of the sign-up process. We used a network traffic analysis tool called Burp Suite to inspect the network data flowing in and out of the Neon app, allowing us to understand how the app works at a technical level, such as how the app communicates with its back-end servers. After making some test phone calls, the app showed us a list of our most recent calls and how much money each call earned. But our network analysis tool revealed details that were not visible to regular users in the Neon app. 
These details included the text-based transcript of the call and a web address to the audio files, which anyone could publicly access as long as they had the link. For example, here you can see the transcript from our test call between two TechCrunch reporters confirming that the recording worked properly. But the backend servers were also capable of spitting out reams of other people's call recordings and their transcripts. In one case, TechCrunch found that the Neon servers could produce data about the most recent calls made by the app's users, as well as providing public web links to their raw audio files and the transcript text of what was said on the call. (The audio files contain recordings of just those who installed Neon, not those they contacted.) Similarly, the Neon servers could be manipulated to reveal the most recent call records (also known as metadata) from any of its users. This metadata contained the user's phone number and the phone number of the person they're calling, when the call was made, its duration, and how much money each call earned. A review of a handful of transcripts and audio files suggests some users may be using the app to make lengthy calls that covertly record real-world conversations with other people in order to generate money through the app. Soon after we alerted Neon to the flaw on Thursday, the company's founder, Kiam, sent out an email to customers alerting them to the app's shutdown. "Your data privacy is our number one priority, and we want to make sure it is fully secure even during this period of rapid growth. Because of this, we are temporarily taking the app down to add extra layers of security," the email, shared with TechCrunch, reads. Notably, the email makes no mention of a security lapse or that it exposed users' phone numbers, call recordings, and call transcripts to any other user who knew where to look. 
It's unclear when Neon will come back online or whether this security lapse will gain the attention of the app stores. Apple and Google have not yet responded to TechCrunch's requests for comment about whether or not Neon was compliant with their respective developer guidelines. However, this would not be the first time that an app with serious security issues has made it onto these app marketplaces. Recently, a popular mobile dating companion app, Tea, experienced a data breach, which exposed its users' personal information and government-issued identity documents. Popular apps like Bumble and Hinge were caught in 2024 exposing their users' locations. Both stores also have to regularly purge malicious apps that slip past their app review processes. When asked, Kiam did not immediately say if the app had undergone any security review ahead of its launch, and if so, who performed the review. Kiam also did not say, when asked, if the company has the technical means, such as logs, to determine if anyone else found the flaw before us or if any user data was stolen. TechCrunch additionally reached out to Upfront Ventures and Xfund, which Kiam claims in a LinkedIn post have invested in his app. Neither firm has responded to our requests for comment as of publication.
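
The flaw reported above, in which the servers did not stop a logged-in user from requesting someone else's data, belongs to a class of bug often called broken access control. Neon's server code is not public, so the following is only a minimal sketch of the kind of per-record ownership check whose absence produces this behavior. Every name in it (`CallRecord`, `CALLS`, `get_call`, `list_recent_calls`, `Forbidden`) is hypothetical and chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CallRecord:
    call_id: str
    owner_id: str    # the account that made the call
    transcript: str


class Forbidden(Exception):
    """Raised when a user requests a record they do not own."""


# Hypothetical in-memory store standing in for a back-end database.
CALLS = {
    "c1": CallRecord("c1", "alice", "test call one"),
    "c2": CallRecord("c2", "bob", "test call two"),
}


def get_call(requesting_user_id: str, call_id: str) -> CallRecord:
    """Return a call record only if the requester owns it."""
    record = CALLS.get(call_id)
    # Being logged in is not enough: the record must belong to the requester.
    # Missing and foreign records are treated alike so IDs cannot be probed.
    if record is None or record.owner_id != requesting_user_id:
        raise Forbidden(call_id)
    return record


def list_recent_calls(requesting_user_id: str) -> List[CallRecord]:
    """List calls scoped to the requester, never to a caller-supplied user ID."""
    return [r for r in CALLS.values() if r.owner_id == requesting_user_id]
```

In this sketch the user ID comes from the authenticated session rather than from a request parameter, so changing a value in the request cannot widen what the server returns.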

[2]

Neon, the No. 2 social app on the Apple App Store, pays users to record their phone calls and sells data to AI firms | TechCrunch

A new app offering to record your phone calls and pay you for the audio so it can sell the data to AI companies is, unbelievably, the No. 2 app in Apple's U.S. App Store's Social Networking section. The app, Neon Mobile, pitches itself as a money-making tool offering "hundreds or even thousands of dollars per year" for access to your audio conversations. Neon's website says the company pays 30¢ per minute when you call other Neon users and up to $30 per day maximum for making calls to anyone else. The app also pays for referrals. The app first ranked No. 476 in the Social Networking category of the U.S. App Store on September 18, but jumped to No. 10 at the end of yesterday, according to data from app intelligence firm Appfigures. On Wednesday, Neon was spotted in the No. 2 position on the iPhone's top free charts for social apps. Neon also became the No. 7 top overall app or game earlier on Wednesday morning, and became the No. 6 top app. According to Neon's terms of service, the company's mobile app can capture users' inbound and outbound phone calls. However, Neon's marketing claims to only record your side of the call unless it's with another Neon user. That data is being sold to "AI companies," the company's terms of service state, "for the purpose of developing, training, testing, and improving machine learning models, artificial intelligence tools and systems, and related technologies." The fact that such an app exists and is permitted on the app stores is an indication of how far AI has encroached into users' lives and areas once thought of as private. Its high ranking within the Apple App Store, meanwhile, is proof that there is now some subsection of the market seemingly willing to exchange their privacy for pennies, regardless of the larger cost to themselves or society. 
Despite what Neon's privacy policy says, its terms include a very broad license to its user data, where Neon grants itself a: "...worldwide, exclusive, irrevocable, transferable, royalty-free, fully paid right and license (with the right to sublicense through multiple tiers) to sell, use, host, store, transfer, publicly display, publicly perform (including by means of a digital audio transmission), communicate to the public, reproduce, modify for the purpose of formatting for display, create derivative works as authorized in these Terms, and distribute your Recordings, in whole or in part, in any media formats and through any media channels, in each instance whether now known or hereafter developed." That leaves plenty of wiggle room for Neon to do more with users' data than it claims. The terms also include an extensive section on beta features, which have no warranty and may have all sorts of issues and bugs. Though Neon's app raises many red flags, it may be technically legal. "Recording only one side of the phone call is aimed at avoiding wiretap laws," Jennifer Daniels, a partner at the law firm Blank Rome's Privacy, Security & Data Protection Group, tells TechCrunch. "Under [the] laws of many states, you have to have consent from both parties to a conversation in order to record it... It's an interesting approach," says Daniels. Peter Jackson, cybersecurity and privacy attorney at Greenberg Glusker, agreed -- and tells TechCrunch that the language around "one-sided transcripts" sounds like it could be a backdoor way of saying that Neon records users' calls in their entirety, but may just remove what the other party said from the final transcript. In addition, the legal experts pointed to concerns about how anonymized the data may really be. Neon claims it removes users' names, emails, and phone numbers before selling data to AI companies. But the company doesn't say how AI partners or others it sells to could use that data. 
Voice data could be used to make fake calls that sound like they're coming from you, or AI companies could use your voice to make their own AI voices. "Once your voice is over there, it can be used for fraud," says Jackson. "Now, this company has your phone number and essentially enough information -- they have recordings of your voice, which could be used to create an impersonation of you and do all sorts of fraud." Even if the company itself is trustworthy, Neon doesn't disclose who its trusted partners are or what those entities are allowed to do with users' data further down the road. Neon is also subject to potential data breaches, as any company with valuable data may be. In a brief test by TechCrunch, Neon did not offer any indication that it was recording the user's call, nor did it warn the call recipient. The app worked like any other voice-over-IP app, and the Caller ID displayed the inbound phone number, as usual. (We'll leave it to security researchers to attempt to verify the app's other claims.) Neon founder Alex Kiam didn't return a request for comment. Kiam, who is identified only as "Alex" on the company website, operates Neon from a New York apartment, a business filing shows. A LinkedIn post indicates Kiam raised money from Upfront Ventures a few months ago for his startup, but the investor didn't respond to an inquiry from TechCrunch as of the time of writing. There was a time when companies looking to profit from data collection through mobile apps handled this type of thing on the sly. When it was revealed in 2019 that Facebook was paying teens to install an app that spies on them, it was a scandal. The following year, headlines buzzed again when it was discovered that app store analytics providers operated dozens of seemingly innocuous apps to collect usage data about the mobile app ecosystem. There are regular warnings to be wary of VPN apps, which often aren't as private as they claim. 
There are even government reports detailing how agencies regularly purchase personal data that's "commercially available" on the market. Now, AI agents regularly join meetings to take notes, and always-on AI devices are on the market. But at least in those cases, everyone is consenting to a recording, Daniels tells TechCrunch. In light of this widespread usage and sale of personal data, there are likely now those cynical enough to think that if their data is being sold anyway, they may as well profit from it. Unfortunately, they may be sharing more information than they realize and putting others' privacy at risk when they do. "There is a tremendous desire on the part of, certainly, knowledge workers -- and frankly, everybody -- to make it as easy as possible to do your job," says Jackson. "And some of these productivity tools do that at the expense of, obviously, your privacy, but also, increasingly, the privacy of those with whom you are interacting on a day-to-day basis."

[3]

Founder of Viral Call-Recording App Neon Says Service Will Come Back, With a Bonus

The Neon app provides a monetary incentive for providing phone recordings as training data to AI companies. A controversial app that claims to pay people for recordings of their phone calls, which are then used to train AI models, could soon return after being disabled due to a significant security flaw. Alex Kiam, the founder of Neon, emailed app users on Tuesday to inform them that their payments are still in place, despite the app going dark. "Your earnings have not disappeared -- when we're back online, we'll pay you everything you've earned, plus a little bonus to thank you for your patience!" Kiam said in the email. He promised Neon would be back "soon" and apologized. He did not respond to a request for further comment. Neon was recently among the top five free iOS app downloads. However, it no longer appears on that list since it stopped functioning on Sept. 25, after TechCrunch reported on a significant security bug. According to TechCrunch, a flaw in the app allowed people to access calls from other users, transcripts and metadata about calls. Per Neon's terms of service, users who submit their phone recordings grant the company the right and license to "sell, use, host, store, transfer" as well as publicly display, reproduce and distribute the information "in any media formats and through any media channels." Neon founder Alex Kiam had confirmed the exposed data in an email to CNET last week. "We took down the servers as soon as TechCrunch informed us," he said. At the time, Neon stated that it was pausing the app to "add extra layers of security." An email to users said: "You will not be able to make calls or cash out, and the app will temporarily display $0 in your account, but your money has not disappeared. The app will be back online soon." Before the app went offline, a legal expert warned against using it. 
David Hoppe, founder and managing partner of Gamma Law, which advises clients on technological issues, told CNET that because some states have consent rules on recording phone calls, anyone who uses Neon should be cautious or avoid it entirely. If users don't know if using the app is legal on both ends of a call, he warned, "Do not use this app." Neon is still available for download on iOS and Android. According to its website, the company records outgoing phone calls and pays you up to $30 a day for regular calls or 30 cents a minute if the call is to another Neon user. Calls to non-Neon users pay 15 cents a minute. The app also offers $30 for referrals. A Neon app FAQ says: "Once redeemed, payouts are typically processed within three business days, though timing may occasionally be shorter or longer." According to TechCrunch, the iOS version reached as high as the No. 2 spot in social-networking apps before the flaw was announced. Its rating in Apple's App Store has diminished significantly over the past several days, with some reviews referring to it as a scam. The Android app only has a 1.8-star rating, and some user comments report network errors when trying to cash out. According to the company's FAQ, the call data it collects is anonymized and used to train AI voice assistants. "This helps train their systems to understand diverse, real-world speech," it says. AI companies need increasing amounts of data to train their models. "The industry is hungry for real conversations because they capture timing, filler words, interruptions and emotions that synthetic data misses, which improves the quality of AI models," said Zahra Timsah, CEO of i-Gentic AI, which works in AI compliance. "But that doesn't give apps a pass on privacy or consent," Timsah said. Neon promises it only draws from recording one side of the phone conversation, the caller's, which appears to be a way of skirting state laws that prohibit recording phone calls without permission. 
Many states only require one person on a call to be aware that a call is being recorded. But others, including California, Florida and Maryland, have laws requiring all phone call parties to consent to recording. It's unclear how Neon functions with calls to those states. For Neon-to-Neon calls, two-party consent would presumably be implied. The app purportedly doesn't record regular phone calls, only those made within the Neon app or received from another person using Neon. TechCrunch, one of the first sites to write about the app, pointed out that sharing voice data can be a security risk, even if a company promises to remove identifying information from the data. Neon could be pushing its luck, especially across states and countries, regarding privacy and IP laws or regulations, depending on how it handles consent and where the data ends up. "We don't know if there are sufficient safeguards to exclude the person on the other end of the conversation, but some level of consent would be required, and informing them of it being provided," said Valence Howden, a data governance expert and advisory fellow at Info-Tech Research Group. Howden said that even if the data is anonymized, AI might not have difficulty retroactively discovering who is on the line in a Neon conversation. "AI can infer a lot, correct or otherwise, to fill in gaps in what it receives, and may be able to provide direct links if names or personal information are part of the exchange," he said. Putting aside the requirements the Neon app had to meet to be included in Apple's App Store, it's reasonable to still have questions about the legality of recording phone calls, especially in states where all parties must consent. Hoppe said Neon's terms of service won't protect an app user if they face legal liability over recordings. And it doesn't help, legally speaking, that the person recording was paid for those recordings. 
"Imagine a user in California records a call with a friend, also in California, without telling them. That user has just violated California's penal code," Hoppe said. "They could face criminal charges and, equally scary, be sued civilly by the person they recorded." According to Hoppe, those violations could result in penalties of thousands of dollars per incident. "Unless you are absolutely certain of the consent laws in your state and the state of the person you're calling, and you have explicitly informed and received consent from every other person on the call, do not use this app," he warned.

[4]

Serious security flaw prompts take-down of popular call recording app Neon

The Neon app has a security flaw that can expose call data. The app has been taken offline for now. The developer expects the app to return in one to two weeks. People trying to earn money by sharing their personal phone conversations with the new Neon app will have to find another way to generate income, at least for now. On Thursday, the service was taken down by its developer after the discovery of a serious security flaw that let Neon users access the call recordings and other data of fellow users. TechCrunch said it found the security vulnerability during a test of the Neon app. The flaw exposed the phone numbers, call recordings, and transcripts of Neon users to anyone signed in to the app. In its research, TechCrunch learned that the servers used by Neon were failing to prevent any logged-in user from accessing another person's call data. While making test phone calls, TechCrunch's Zack Whittaker said he saw a list of his recent calls and how much money each call earned. That's the way the app is supposed to work. But using a network analysis tool, Whittaker uncovered details not available through the app, including a transcript of the call and a URL to the audio files, information anyone could view as long as they had the link. In response to the flaw, TechCrunch alerted the developer, Alex Kiam, who took down the service and notified users via the following email message: "Thanks for using the app! Your data privacy is our number one priority, and we want to make sure it is fully secure even during this period of rapid growth. Because of this, we are temporarily taking the app down to add extra layers of security. You will not be able to make calls or cash out, and the app will temporarily display $0 in your account, but your money has not disappeared. The app will be back online soon. Stay tuned!" 
In a message sent to ZDNET, Kiam cited the security vulnerability as the reason for the app's vanishing act. "We took down the server in order to protect people's privacy, given the security vulnerability," Kiam said. "So the app doesn't work right now. We are working to a) fix the vulnerability and b) do a thorough security audit. When both happen, we will relaunch. 1-2 weeks." In my testing, I was still able to find and download the app. But I was unable to kick off the registration process, which simply triggered an error message instead. Initially launched in July for iOS and Android users, Neon offers a new spin on a way to make money from your personal data. Officially known as Neon - Money Talks, the app pays you for certain phone conversations that you share with the company behind the app. Recordings of your phone calls are then sent to AI developers who use natural language to train their chatbots. Only calls made or received through the Neon app are recorded. Neon will give you 30 cents per minute when you speak with another Neon user. In that case, both sides of the conversation are recorded. You'll earn 15 cents per minute when you speak with a non-Neon user. Here, only your end of the call is recorded and shared. You can earn up to $30 a day by sharing your calls with Neon. The company will also dole out a $30 referral fee for each person you convince to use the app. Neon promises to anonymize your calls, in which case it removes names, numbers, addresses, and other PII (personally identifiable information) before the calls are shared. The call recordings are encrypted and sold only to trusted and vetted AI companies, according to Neon. Despite the assurances to protect your privacy, the developer seems to have neglected to properly test and vet the app against security weaknesses. 
The notion of sharing your private phone calls to make a few bucks seems like a slippery road to take. But the reveal of this security vulnerability raises even more red flags about the app and the value of your privacy.

[5]

This App Sells Call Recordings to AI Firms, Now Trending on US App Store

Jibin is a tech news writer based in Ahmedabad, India, who loves breaking down complex information for a broader audience. Apps that sell user data usually raise privacy concerns. But when the same apps offer money, people react differently. As TechCrunch reports, an app called Neon is trending on Apple's US App Store's Social Networking section. If you make phone calls using the app, the conversations get recorded and sold to third-party AI developers. "This helps train their systems to understand diverse, real-world speech," Neon says on its website. In exchange for recordings, you receive a fixed amount of money. You can earn $0.30 per minute when talking to a fellow Neon user, and $0.15 per minute when talking to a non-Neon user (only the Neon user's voice gets recorded). The earnings are capped at $30 a day, but you can get an additional $30 bonus for each successful referral. Why is Neon doing this? "We want to build a new model where you're not just the product, but a partner," it says. "Most companies sell your data without telling you, and they definitely don't cut you in. It's your data, and it's time you got paid for it." While it sells your recordings, it promises not to sell your personal information. All personally identifiable information, such as names, numbers, and addresses, is redacted before handing over the calls to "vetted companies," it claims on its website. However, the company's terms of service allow it to use the data however it sees fit, so use the app at your own risk. 
By submitting recordings, "you grant Neon Mobile a worldwide, exclusive, irrevocable, transferable, royalty-free, fully paid right and license (with the right to sublicense through multiple tiers) to sell, use, host, store, transfer, publicly display, publicly perform (including by means of a digital audio transmission), communicate to the public, reproduce, modify for the purpose of formatting for display, create derivative works as authorized in these Terms." According to Reddit discussions, the app pays close to $18-19 per hour. Payouts are processed 2-3 days after you earn your first 10 cents, Neon says, but some users have been complaining about lengthy delays. If you want Neon to stop recording your calls, you can just delete your account from app settings. Calls already recorded, however, may not get deleted; we couldn't spot a mention of that on the website. According to TechCrunch, the app peaked at No. 2 on Apple's US App Store. This suggests that many people are willing to trade privacy for money. Or maybe they are just trying to make a quick buck.
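
The payout figures quoted above reduce to simple arithmetic. The sketch below works through it using only the rates reported in this article ($0.30 and $0.15 per minute, capped at $30 a day, with referral bonuses treated as separate). The function name and the use of whole cents are illustrative assumptions; Neon's actual payout logic is not public.

```python
NEON_TO_NEON_CENTS_PER_MIN = 30   # reported rate for calls to another Neon user
OTHER_CENTS_PER_MIN = 15          # reported rate for calls to non-Neon users
DAILY_CAP_CENTS = 3000            # reported $30-per-day cap


def daily_earnings_cents(neon_minutes: int, other_minutes: int) -> int:
    """Estimate one day's call earnings in cents under the reported rates."""
    uncapped = (neon_minutes * NEON_TO_NEON_CENTS_PER_MIN
                + other_minutes * OTHER_CENTS_PER_MIN)
    # Earnings beyond the daily cap are assumed to be discarded.
    return min(uncapped, DAILY_CAP_CENTS)
```

Under these assumptions, one hour of Neon-to-Neon calls comes to 1,800 cents, or $18, which matches the low end of the hourly figure cited from Reddit. The daily cap is reached after 100 minutes of Neon-to-Neon calls or 200 minutes of calls to non-Neon users.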

[6]

Neon, the Popular Free App That Pays for Call Recordings, Has Been Disabled

Despite a server "pause" and a reported security flaw, Neon remains one of the top-downloaded free apps in the iOS app store. A new app that promises to pay people for their mobile phone call records, which are then used to train AI models, has been disabled after a report of a major security flaw. Neon is still in the top 10 of iOS free app downloads, but after a report in TechCrunch Thursday about a security flaw that the news site found in the service, its servers have apparently been made unavailable to users. The app can still be downloaded, but it's no longer functioning. It's unclear whether the service will return or how long it will take. Emails to Neon Mobile, the company behind the app, have not been returned. According to TechCrunch, a flaw in the app allowed people using it to access calls from other users, transcripts and metadata about calls. The company sent a notification to Neon users that it's pausing the service, but did not explicitly mention why, TechCrunch said. Before the app was disabled, a legal expert warned about trouble the app might cause, separate from potential security flaws. David B. Hoppe, the founder and managing partner of Gamma Law, which advises clients on thorny technological issues, told CNET that because some states have consent rules on recording phone calls, people using Neon should be very careful or avoid it entirely. Without certainty of its legality, he warned, "do not use this app."

Cash for calls

Neon is still available (at least for the time being) on iOS and Android. The company records users' outgoing phone calls and pays them up to $30 a day for regular calls or 30 cents a minute if the call is to another Neon user. Calls to non-Neon users pay 15 cents a minute. The app also offers $30 for referrals. 
"You can cash out as soon as you earn your first ten cents," a Neon app FAQ says, "Once redeemed, payouts are typically processed within three business days, though timing may occasionally be shorter or longer." The company promises it only draws from the recording of one side of the phone conversation, the caller's, which appears to be a way of skirting state laws that prohibit recording phone calls without permission. While many states only require one person on a call to be aware that a call is being recorded, others, including California, Florida and Maryland, have laws that require all parties on a phone call to consent to recording. It's unclear how Neon functions with calls to those states. For Neon-to-Neon calls, two-party consent would presumably be implied. The app doesn't record regular phone app calls, only those made within the Neon app or received from another person using Neon. While the iOS version has shot up in popularity -- it reached as high as the No. 2 spot this week -- the Android version appears to be having some problems, at least according to some of the most recent reviews on the Google Play Store. The Android app only has a 2.4-star rating, and some user comments report network errors when people try to cash out on the Neon app.

Training AI using your data

According to the company's FAQ, the call data is anonymized and used to train AI voice assistants. "This helps train their systems to understand diverse, real-world speech," it says. AI companies need increasing amounts of data to train their models, which may be why Neon is offering the monetary incentive. "The industry is hungry for real conversations because they capture timing, filler words, interruptions and emotions that synthetic data misses, which improves quality of AI models," said Zahra Timsah, CEO of i-Gentic AI, which works in AI compliance. "But that doesn't give apps a pass on privacy or consent," Timsah said. 

Pushing legal limits

TechCrunch, which was one of the first sites to write about the app, pointed out that sharing voice data can be a security risk, even if a company promises to remove identifying information from the data. Neon could be pushing its luck, especially across states and countries, when it comes to privacy and IP laws or regulations, depending on how it handles consent and where the data ends up. "We don't know if there are sufficient safeguards to exclude the person on the other end of the conversation, but some level of consent would be required, and informing them of it being provided," said Valence Howden, a data governance expert and advisory fellow at Info-Tech Research Group. Howden said that even if the data is anonymized, AI might not have a hard time retroactively discovering who is on the line in a Neon conversation. "AI can infer a lot, correct or otherwise, to fill in gaps in what it receives, and may be able to provide direct links if names or personal information are part of the exchange," he said.

Can I be liable for call recordings?

Putting aside the requirements the Neon app had to meet in order to be included in Apple's App Store, it's reasonable to still have questions about the legality of recording phone calls, especially in states where all parties must consent. That may be a major reason to avoid Neon, according to Hoppe, the legal expert. "In the United States, it is not legal to simply record a phone call because an app's terms of service say you can," Hoppe said. "So, imagine a user in California records a call with a friend, also in California, without telling them. That user has just violated California's penal code. They could face criminal charges and, equally scary, be sued civilly by the person they recorded." Violations, he said, could result in penalties of thousands of dollars per incident. Hoppe said Neon's terms of service won't protect an app user if they face legal liability over recordings. 
And it doesn't help, legally speaking, that the person recording was paid for doing so. "The user is the one pressing the record button," Hoppe said. "My strongest recommendation to anyone considering this would be: unless you are absolutely certain of the consent laws in your state and the state of the person you're calling, and you have explicitly informed and received consent from every other person on the call, do not use this app."

[7]

This app will pay you $30/day to record your phone calls for AI - but is it worth it?

The app sells recordings of your calls to AI companies for training. A new app is promising to give you hundreds or even thousands of dollars per year. And all you have to do is share your phone conversations for the purpose of training AI. Yep, there's always a catch. Free to iPhone owners via Apple's App Store and Android users at the Google Play Store, a new program called Neon - Money Talks will pay you for certain phone conversations that you share with the company behind the app. Recordings of your phone calls are then passed along to AI developers who use natural language to train their chatbots. Before you nix the idea of sharing your private phone calls for a few bucks, know that Neon is near the top of the charts among the most popular free apps in the App Store. That must mean a lot of people are willing to put their privacy up for sale, at least at the right price. Before I come down too hard on this whole concept, let's go through the finer points. Only calls made or received through the Neon app are recorded. Any conversations you have through the regular phone app on your iPhone or Android phone are excluded. Neon will pay you 30 cents per minute when you speak with another Neon user. In that case, both sides of the conversation are recorded. You'll get 15 cents per minute when you speak with a non-Neon user. Here, only your end of the call is recorded, or at least shared. You can earn up to $30 a day max by sharing your calls with Neon. The company will also dole out a $30 referral fee for each person you convince to use the app. As for your privacy, Neon promises that it anonymizes your calls, which means it removes names, numbers, addresses, and other PII (personally identifiable information) before the calls are shared. 
The call recordings are encrypted and sold only to trusted and vetted AI companies, according to Neon. After you install and open the app, you're asked to share your phone number for verification purposes. You're then prompted to enter your first name and email address and agree to the terms and conditions. To dive in, you'd then make a phone call through Neon just as you would with any other calling app. To qualify, though, you have to follow certain rules. You must conduct a normal phone call, meaning genuine, two-way conversations. No long silent calls with no conversation, no putting your phone on speakerphone and not talking with the other person, and no playing pre-recorded audio. To get paid, you can cash out your earnings as soon as you score your first ten cents. After you redeem a payout, you'll typically get paid within three business days. Based on the popularity of Neon, many people appear to be fine with this whole idea. You do have control over the process since only calls made through the app are recorded. Neon promises to anonymize the recordings and remove personal details. And I guess in this age of AI and social media, more people seem perfectly OK sharing private tidbits of their lives. Plus, many AIs already try to capture the natural language used in your chats to train themselves. So why not get paid for it? "Telecom companies are profiting off your data, and we think you deserve a cut," Neon says on its FAQ page. "With your consent, we process your call recordings, remove all personal info, and sell the anonymized audio to companies training AI." But I still ask the question: At what price, privacy? Do you really want some AI vacuuming up your personal phone calls, even with the data supposedly anonymous? Is that worth a few dollars a day? Those are just some of the questions you might want to ask yourself if you're thinking of giving Neon a shot.

[8]

Call-recording app Neon goes offline after security flaw uncovered

Neon is a call-recording app that pays users for access to the audio, which the app in turn sells to AI companies for training their models. Since its launch last week, it quickly rose in popularity, but the service was taken offline today. TechCrunch reported that it found a security flaw that allowed any logged-in user to access other accounts' phone numbers, the phone numbers called, call recordings and transcripts. TechCrunch said that it contacted Neon founder Alex Kiam about the issue. "Kiam told TechCrunch later Thursday that he took down the app's servers and began notifying users about pausing the app, but fell short of informing his users about the security lapse," the publication reported. The app went dark "soon after" TC contacted Kiam. Neon does not appear to have a timeline for if or when the service will resume or what additional security protections it may add. The full report from TechCrunch is here and certainly worth reading if you've used Neon.

[9]

Neon, an App That Pays to Record Your Phone Calls Hit #2 on the App Store, Taken Down Over Security Flaw

Neon wants to use the calls to train AI, but a security concern forced it to go offline, for now. After coming out of nowhere, a viral new app that pays people to record their phone calls for the purpose of training AI has been yanked offline after a security flaw allegedly exposed user data. Neon founder Alex Kiam told Gizmodo in an email that the app's servers are down while the team patches the vulnerability and conducts a security audit to ensure the issue doesn't happen again. Neon launched just last week and quickly shot to the number two spot on iPhone's top free app chart before it was taken down on Thursday. The app pays users who agree to record their calls and lets Neon sell those recordings and other data to AI companies to train their models and voice assistants. It was pitched as a way for people to earn some money from their data, which tech companies have long profited from. "Companies collect and sell your data every day. We think you deserve a cut," the company's website says. Things took a turn on Thursday after TechCrunch discovered and reported a major flaw that let nearly anyone access sensitive Neon user data, including phone numbers, call recordings, and transcripts. While testing the app, TechCrunch used the network-traffic tool Burp Suite to analyze the data coming in and out of the app. Neon's interface only shows a simple list of a user's recent calls and how much each earned. However, Burp Suite was able to get a lot more info from the app's back-end servers, like full call transcripts and public links to the raw audio files from other users' calls. Probing further, TechCrunch reporters discovered they could also access call metadata from other users. That information included both parties' phone numbers, the time and duration of a call, and how much each call earned. Kiam said the Neon team shut down the app's servers immediately after TechCrunch alerted them to the flaw. 
In an email to users, the company said it expects to be back online soon. "Your data privacy is our number one priority, and we want to make sure it is fully secure even during this period of rapid growth," the email reads. "Because of this, we are temporarily taking the app down to add extra layers of security." How Neon Works Users sign up with their phone number and grant Neon permission to record calls made via the app. Every time they place or receive a call from the app, it automatically records both sides of the conversation if the other party also uses Neon, or, in theory, just the Neon user's side if the person isn't on the app. The recordings and related data are then supposed to be anonymized -- stripped of identifying details -- and sold to vetted AI and data partners. Users earn $0.30 per minute for calls with another Neon user or $0.15 per minute when calling a non-user, capped at $30 a day.
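The payout rules quoted above reduce to simple arithmetic. The following is a minimal sketch of that calculation, assuming the per-minute rates and daily cap as reported; the function and constant names are hypothetical and are not taken from Neon's app or documentation.

```python
from decimal import Decimal

# Rates and cap as reported in the coverage; all names here are illustrative.
RATE_NEON_TO_NEON = Decimal("0.30")  # dollars per minute, both parties on Neon
RATE_NON_NEON = Decimal("0.15")      # dollars per minute, other party not on Neon
DAILY_CAP = Decimal("30.00")         # maximum call earnings per day


def daily_payout(neon_minutes: int, other_minutes: int) -> Decimal:
    """Return one day's call earnings in dollars, limited by the daily cap."""
    raw = RATE_NEON_TO_NEON * neon_minutes + RATE_NON_NEON * other_minutes
    return min(raw, DAILY_CAP)


def minutes_to_cap(rate: Decimal) -> int:
    """Return how many minutes at a single rate are needed to reach the cap."""
    return int(DAILY_CAP / rate)
```

Under these assumptions, reaching the cap takes 100 minutes of Neon-to-Neon calls or 200 minutes of calls to non-users, and any minutes beyond that earn nothing further that day. Referral bonuses are reported separately and are not modeled here.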

[10]

This Free App Pays You to Record Your Calls for AI Training and It's a Hit

Neon is one of the top-downloaded free apps on the iOS App Store. A new app that promises to pay people for their mobile phone call records, which are then used to train AI models, is getting so popular it's entered Apple's list of top-ranked free apps. As of Thursday, Neon was the fourth most popular iOS free app, ahead of Google, Temu and TikTok. It had earlier been as high as the No. 2 spot. Neon is available on iOS and on Android and the idea is that the company records the outgoing phone calls of users and pays them up to $30 a day for regular calls or 30 cents a minute if the call is to another Neon user. Calls to non-Neon users pay 15 cents a minute. The app also offers $30 for referrals. "You can cash out as soon as you earn your first ten cents," a Neon app FAQ says, "Once redeemed, payouts are typically processed within 3 business days, though timing may occasionally be shorter or longer." The company promises it only draws from the recording of one side of the phone conversation, the caller's, which appears to be a way of skirting state laws that prohibit recording phone calls without permission. While many states only require one person on a call to be aware that a call is being recorded, others including California, Florida and Maryland, have laws that require all parties on a phone call to consent to recording. It's unclear how Neon is able to function with calls to those states. For Neon-to-Neon calls, two-party consent would presumably be implied. The app does not record regular phone app calls, only those made within the Neon app or received from another person using Neon. An email to Neon Mobile, the company behind the app, was not immediately returned. While the iOS version has shot up in popularity, the Android version appears to be having some problems, at least according to some of the most recent reviews on the Google Play store. 
The Android app only has a 2.4 star rating and some user comments report network errors when people try to cash out on the Neon app. According to the company's FAQ, the call data is anonymized and used to train AI voice assistants. "This helps train their systems to understand diverse, real-world speech," it says. As pointed out by TechCrunch, one of the first sites to write about the app, sharing voice data can be a security risk, even if a company promises to remove identifying information from the data.

[11]

This new app pays you to use your call recordings for AI training - but is it worth it?


[12]

Viral call recording app taken offline after exposing user data - 9to5Mac

Earlier today, we covered the skyrocketing success of Neon, an app that pays users in exchange for recording their phone calls. Now, the app has gone offline, following the discovery of an egregious security breach. Here are the details. For the past few days, Neon Mobile has been making waves at the App Store, promising to pay "hundreds or even thousands of dollars per year" to users willing to share their audio conversations so that it can sell this data to AI companies. As of this morning, the app ranked 7th overall on the App Store's free charts, and 2nd in Social Networking. Now, it has gone dark following TechCrunch's discovery of a serious flaw: "But now Neon has gone offline, at least for now, after a security flaw allowed anyone to access the phone numbers, call recordings, and transcripts of any other user, TechCrunch can now report." As TechCrunch explains in detail in the original story, during the process of reporting about the app, their reporters decided to look into Neon's inner workings and data flows. After noticing they were able to intercept data about their own calls, they also managed to trick Neon's servers into handing over call records and metadata from any other user: "This metadata contained the user's phone number and the phone number of the person they're calling, when the call was made, its duration, and how much money each call earned." To make matters worse, TechCrunch also revealed that, based on the transcripts and call recordings it accessed, some users were trying to game the app and maximize their payouts by secretly recording real-world conversations of people who didn't know they were being recorded. After the reporters alerted Neon's founder, Alex Kiam, about the security flaw, he took the app offline and contacted users informing them that the app would be temporarily taken down. 
However, he failed to mention the data breach: "Your data privacy is our number one priority, and we want to make sure it is fully secure even during this period of rapid growth. Because of this, we are temporarily taking the app down to add extra layers of security," the email, shared with TechCrunch, reads. TechCrunch reached out to Apple and Google about the app's predicament, but hasn't heard back from either company. The site also says that it is not sure whether the app will come back online.

[13]

New social app Neon sells your recorded phone calls for AI data training -- here's why this is a terrible idea

You definitely don't want to willingly give up your personal data for a few bucks A new app called Neon Mobile, which pays users for the recorded audio from their phone calls, has skyrocketed to the number two spot in the App Store's social networking category. As reported by TechCrunch, the app refers to itself as a "moneymaking tool" and offers to pay users for access to their audio conversations, which it then turns around and sells to AI companies. The fee is 30 cents a minute for phone calls to other Neon users or 15 cents a minute for calls to non-Neon users, up to $30 a day, and the app pays for referrals as well. It captures inbound and outbound calls on the best iPhones and best Android phones, but only records your side of the call unless it's connected to another Neon user. This collected data is then sold to AI companies, according to Neon, "for the purpose of developing, training, testing, and improving machine learning models, artificial intelligence tools and systems, and related technologies." Neon says that it removes personal data like names, emails and phone numbers before this data gets handed over but doesn't specify how the AI companies it sells that data to will be using it. That's troubling in an era of deepfakes and phishing or vishing, and especially so when Neon's vague and inclusive license statement gives it so much access to further potential usage. Those terms include beta features that have no warranty, and the data set is a gold mine for a potential breach. In its reporting, TechCrunch states that while testing the app, it did not provide proper notification that the user was even being recorded during the call, and the recipient wasn't alerted about possible recording either. Given the popularity of the app, many users seem unconcerned about selling out their privacy. And given the number of data breaches that occur over the course of a year, many people may figure that data privacy is a lost cause already. 
However, there's a stark difference between having your car get broken into and leaving your keys in it. Just like with a data leak, willingly giving away huge chunks of personal information can leave you open to serious consequences. For instance, once your information is out of your control after you hand it over to someone else, it can be sold, resold, exposed in a breach, leaked to the dark web and more. AI models can reveal sensitive information, which may lead to potential issues with employment, lending or acquiring housing. Additionally, there are few safeguards or standards that are consistent across AI companies, and data breaches are becoming more and more common. Privacy issues could occur too, and if a company is sold or goes out of business, there could be legal complications. Your data is like currency, which is why I recommend going to great lengths to protect it by investing in one of the best identity theft protection services and by installing one of the best antivirus software suites on all of your devices. You also want to make sure that you don't fall for phishing attacks and don't click on unexpected links or fall for scams. Why willingly hand over this information for such a cheap price? The Neon Mobile app is climbing the rankings now but we'll see if it can maintain a top spot in Apple's App Store for long. Just like with the other security stories I cover, if something seems too good to be true, it probably is and that's the feeling I get with this new app. However, I could be wrong and Neon Mobile could be the first of many apps that pay you for your data for AI training. Only time will tell but based on what I've seen and learned so far, I'd recommend avoiding it for now.

[14]

Neon, the viral app that pays users to record calls, goes offline after exposing data

A significant security flaw has resulted in the viral app that paid users to record their phone calls going offline. Less than 24 hours after receiving attention and going viral, the Neon Mobile app has already exposed users' phone numbers, call recordings, and transcripts. Just yesterday, Mashable covered a viral new app that was rising up the App Store charts called Neon. The app paid users to record their phone calls, which Neon then provided to AI companies for training. Mashable warned users at the time to be cautious if using the app as there was too much unknown about the company, its founder, and their claims about keeping data safe and anonymous. Now, 24 hours later, Neon has gone offline after TechCrunch uncovered a security flaw that exposed users' phone numbers, call recordings, and call transcripts. "Your data privacy is our number one priority, and we want to make sure it is fully secure even during this period of rapid growth," reads an email sent to users by Neon founder Alex Kiam. "Because of this, we are temporarily taking the app down to add extra layers of security." As TechCrunch notes, while Kiam took down the app's servers and let users know about the downtime, the email failed to warn users about the specific security issue that exposed their phone numbers, call recordings, and transcripts. Also, it should be noted that it appears only the app's servers have been taken down, rendering the app itself, which remains in the App Store, available but useless. According to TechCrunch, they discovered the security flaw using a network analysis tool that showed data both being pushed into and sent out of the app. While users logged into the app itself could not access private user data, the data was exposed to anyone utilizing such a tool. This data included a URL to the recorded call's audio files, which was accessible to anyone with the link, and a text transcript of the call. 
However, it wasn't just call files and transcripts that were accessible. TechCrunch discovered that Neon's servers also exposed data concerning the most recent calls made by other users of the app. TechCrunch was able to access audio links and transcripts to those recorded calls as well. Furthermore, the metadata connected to those calls was also exposed. This metadata included the user's phone number, the phone number they called, how long the call was and what time it was made, as well as how much was earned from the call. It's not every day that a chart-topping app in the App Store is outright pulled from distribution. TechCrunch reports that app platform Appfigures tracked that Neon was downloaded 75,000 times just yesterday. If and when Neon makes a comeback, it will certainly receive increased scrutiny to be sure it addressed the issues.
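The flaw described above, where any logged-in user could retrieve another user's records, is commonly prevented by checking ownership on the server for every request. The sketch below is a generic illustration of that check, not a description of Neon's actual back end, which the reporting does not detail. The `CallRecord` type, the `CALLS` store, and the `get_call` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CallRecord:
    call_id: str
    owner_id: str      # account that made the call
    transcript: str


class Forbidden(Exception):
    """Raised when a user requests a record they do not own."""


# Hypothetical in-memory store standing in for a real database.
CALLS = {
    "call-1": CallRecord("call-1", "user-a", "hello"),
    "call-2": CallRecord("call-2", "user-b", "hi there"),
}


def get_call(requesting_user_id: str, call_id: str) -> CallRecord:
    """Return a call record only if it belongs to the requesting user."""
    record = CALLS.get(call_id)
    # Being logged in is not enough: the record must belong to the requester.
    # Missing and foreign records raise the same error so IDs cannot be probed.
    if record is None or record.owner_id != requesting_user_id:
        raise Forbidden(f"no access to call {call_id}")
    return record
```

In this sketch the requester's identity would come from the authenticated session rather than from a value the client supplies, since a client-supplied ID can be changed with a traffic-inspection tool of the kind TechCrunch used.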

[15]

iPhone app that pays to record calls is somehow outranking social media

Social media kept all your data and never paid for it. Neon Mobile says it's time you get a cut. In the age of AI, privacy experts are raising alarms about the huge appetite for user data to feed it as training material. AI companies are paying billions in lawsuits for illicitly using books and videos to train their AI, and users are also being warned about the importance of their personal data, more so than ever. But it seems if you pay people to record something as personal as their phone calls, it's a hit formula. What's the big picture? "Cash in on your phone data." That's the motto behind an app called Neon Mobile, which claims to pay users so that it can record their phone calls. "Profit from your data and choose which calls to share fully anonymously," the company says on its product page, adding that whatever is recorded is never traced back to the user. The rates are not public, but Reddit discussions suggest that the app is paying over $19 per hour of recording. The company is trying to pull off a techno-social stunt, it seems, noting that if Big Tech can collect your data and sell it, you deserve a cut for your phone calls, at least. And it seems a lot of users are okay with the idea of selling their call recordings. According to TechCrunch, the app peaked at No. 2 on the App Store in the US under the free category. To give you an idea, the app is currently ranking ahead of heavyweights such as Instagram, WhatsApp, X, Clash Royale, and Gmail. At the time of writing this article, SensorTower and AppFigures data showed that the app was still trending at No. 4 in the App Store. But why? Neon Mobile's argument is somewhat valid. Tech giants such as Meta and Google have collected user data and built an empire out of it over the years. Neon boldly claims that it will pay you outright for submitting a copy of your call recording. Why, you might ask. 
The company says the call recordings will be used to train AI models, and it holds the rights to sell them further to other interested parties. It is one of the scariest and outright dystopian disclaimers I have read in a while. Have a look: By Submitting Recordings or other information to the Service, you grant Neon Mobile a worldwide, exclusive, irrevocable, transferable, royalty-free, fully paid right and license (with the right to sublicense through multiple tiers) to sell, use, host, store, transfer, publicly display, publicly perform (including by means of a digital audio transmission), communicate to the public, reproduce, modify for the purpose of formatting for display, create derivative works as authorized in these Terms, and distribute your Recordings, in whole or in part, in any media formats and through any media channels, in each instance whether now known or hereafter developed. AI giants aren't doing anything too benevolent either. Anthropic says it will use Claude users' interactions to train AI models and will keep them on servers for five years. Following the launch of the Alexa+ assistant, Amazon ended its on-device processing option, which means all your voice data must go to Amazon's cloud servers.

[16]

Bizarre app that pays you to share your audio calls takes off

A bizarre app that invites you to record and share your audio calls so that it can sell the data to AI companies has become the second most downloaded social app in the app store. Neon Mobile says that users can sell their privacy for hundreds or even thousands of dollars per year by allowing their audio conversations to be used for AI training ... TechCrunch spotted the app's rapid climb up the App Store charts. The app first ranked No. 476 in the Social Networking category of the U.S. App Store on September 18 but jumped to No. 10 at the end of yesterday [and by] Wednesday morning had become the No. 6 top app. In its marketing materials, the company argues that AI companies use your data for training, so why shouldn't you get paid? Telecom companies are profiting off your data, and we think you deserve a cut. With your consent, we process your call recordings, remove all personal info, and sell the anonymized audio to companies training AI. The company pays users $0.15 per minute, rising to $0.30 a minute when you're calling other Neon users. It says you can earn up to $30 per day. Neon says that only your side of the call is recorded unless both people are using the app, when it will record both sides. When you make a call through the Neon app, it's recorded. If you're the only Neon user on the call, we'll only record your side. If both people are using Neon, we'll record both sides -- as long as at least one person starts the call in the app. Don't worry, our technology automatically filters out names, numbers, and other personal details. There's an interesting note in the privacy policy. If you delete the Neon app (but do not close your Neon account), your calls can still be recorded when other Neon users who have the app call you. If you want to stop call recordings with other Neon users, close your account through your profile settings. Some experts have noted that the company is very vague about only recording one side of the conversation. 
Peter Jackson, cybersecurity and privacy attorney at Greenberg Glusker, [told] TechCrunch that the language around "one-sided transcripts" sounds like it could be a backdoor way of saying that Neon records users' calls in their entirety but may just remove what the other party said from the final transcript. The company's terms and conditions also seem to allow it to do pretty much anything it likes with the data.

[17]

What is Neon? The app that pays users to record their phone calls.

A new app is paying users to record their phone calls for AI training purposes. Get paid to record your phone calls and hand them over to third parties? It may seem a bit dystopian, but this app has quickly risen to the top of the App Store charts. Neon Mobile is a new app for iOS and Android devices that's quickly growing in popularity, at least according to the mobile app charts. On Apple's App Store, Neon is currently sitting at number 2 for free Social Networking apps and is in the number 4 position in the top rankings of all free apps in the App Store. But, what is Neon and why are so many people downloading it? Here's what you need to know. It's simple: Neon records users' phone calls and then pays them for it. Why does Neon want to record your phone calls? That's simple too: To collect your data to sell to third parties. Neon is quite open about what they're using it for too. On Neon's website, the app makers say that they sell your anonymized data to "companies training AI." Neon says it removes all personal information so there's nothing identifiable being handed over to these AI companies. Neon's pitch to users is also pretty straightforward. "Telecom companies are profiting off your data, and we think you deserve a cut," Neon's website says. According to Neon, the app only records the Neon users' side of the call. The person that the Neon user calls is not recorded, unless they are also a Neon user. Neon pays users 15 cents per minute when they talk to a non-Neon user and pays 30 cents per minute when talking to another Neon user. Neon users can make a maximum of $30 per day from calls and an unlimited amount of money from referring people to Neon. Each referral pays $30. To hit that $30 per day maximum for making calls, a user would need to talk to Neon users for 100 minutes per day or talk with non-Neon users for 200 minutes per day. Neon appears to have raised money from Upfront Ventures, according to Neon founder Alex Kiam. 
As TechCrunch points out, the company seems to be run out of a New York City apartment. This alone isn't a reason to be skeptical. Many startups have been run out of small living spaces before. However, there are some red flags. Neon Mobile doesn't provide much information about the company on its website. In fact, Alex Kiam simply refers to himself as "Alex" on the site. The company also simply promises to keep your private and identifiable information safe on a "trust us" basis. However, there aren't many details about Neon or its processes for keeping that information anonymous that would enable that trust. The reviews for Neon on the App Store and Google Play store are also mixed, with users reporting problems using the app or receiving their payout. It's unclear, however, if those are issues being experienced by just a few individual users or if it's more widespread. TechCrunch also noticed that Neon's privacy policy and terms have users giving away much more than they might have thought they were when signing up for the app. For example, Neon grants itself the following rights to your content: ...worldwide, exclusive, irrevocable, transferable, royalty-free, fully paid right and license (with the right to sublicense through multiple tiers) to sell, use, host, store, transfer, publicly display, publicly perform (including by means of a digital audio transmission), communicate to the public, reproduce, modify for the purpose of formatting for display, create derivative works as authorized in these Terms, and distribute your Recordings, in whole or in part, in any media formats and through any media channels, in each instance whether now known or hereafter developed. Neon also carves out exceptions for its guarantees to users regarding any beta features due to the fact that they might contain bugs. In addition, Neon is offering conflicting payout information. 
On the App Store, Neon's description claims that the company pays 45 cents per recorded phone call minute and $25 per referral. This runs counter to the 30 cents per minute payment and the $30 per referral detailed on its website.

Users should proceed with caution regarding Neon until more is known about the company. And, even then, the company's purpose is to sell your recorded phone calls to companies for AI training. Users should consider whether that's worth the price they're being paid.
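
The payout figures quoted in this article can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function and constant names are made up for this example, the rates and the $30 daily cap are the ones the article attributes to Neon's website and App Store listing, and applying the same cap to the App Store rate is an assumption, since the article does not say whether it applies.

```python
from fractions import Fraction

# Figures as quoted in the article (US dollars). Names are illustrative only.
RATE_NEON_TO_NEON = Fraction(30, 100)    # website: $0.30 per minute, both parties on Neon
RATE_NEON_TO_OTHER = Fraction(15, 100)   # website: $0.15 per minute, other party not on Neon
RATE_APP_STORE = Fraction(45, 100)       # App Store listing: $0.45 per minute
DAILY_CAP = Fraction(30)                 # website: $30 per day from calls


def minutes_to_hit_cap(rate_per_minute, cap=DAILY_CAP):
    """Minutes of calls needed to reach the daily cap at a given rate."""
    return cap / rate_per_minute


def daily_call_earnings(minutes, rate_per_minute, cap=DAILY_CAP):
    """Earnings from calls in one day, limited by the daily cap."""
    return min(minutes * rate_per_minute, cap)


if __name__ == "__main__":
    print("Neon-to-Neon minutes to cap:", minutes_to_hit_cap(RATE_NEON_TO_NEON))
    print("Neon-to-other minutes to cap:", minutes_to_hit_cap(RATE_NEON_TO_OTHER))
    # Assumption: the same $30 cap applies to the App Store rate.
    print("App Store rate minutes to cap:", float(minutes_to_hit_cap(RATE_APP_STORE)))
```

Exact fractions are used instead of floats so that the 100-minute and 200-minute figures come out as whole numbers rather than rounded approximations.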


Neon, a popular app that pays users for recording their phone calls, has been taken offline due to a significant security flaw that exposed users' private data. The app's founder promises to return with improved security measures.

Rise and Fall of Neon: The Controversial Call Recording App

Neon, a viral app that rocketed to the top of Apple's U.S. App Store, has been taken offline following the discovery of a major security flaw. The app, which offered users money in exchange for recording their phone calls and selling the data to AI companies, quickly gained popularity but faced significant privacy concerns [1][2].

How Neon Works

Neon's business model was based on paying users for their call recordings, which were then sold to AI companies for training and improving machine learning models. The app offered 30 cents per minute for calls between Neon users and 15 cents per minute for calls to non-Neon users, with a daily cap of $30 [2][3].


The Security Breach

TechCrunch discovered a critical security vulnerability that allowed any logged-in user to access other users' phone numbers, call recordings, and transcripts. This flaw exposed sensitive personal information and conversations to potential misuse [1][4].
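
A flaw of this kind, where the server confirms that a request comes from a logged-in user but not that the requested record belongs to that user, is generally classified as broken access control. The sketch below illustrates the idea only. Neon's server code is not public, so every name here (`CallRecord`, `CALLS`, `fetch_call_unsafe`, `fetch_call`) is hypothetical, and the in-memory dictionary stands in for whatever storage a real back end would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CallRecord:
    call_id: int
    owner_id: int
    transcript: str


# Hypothetical in-memory store standing in for a real database.
CALLS = {
    1: CallRecord(call_id=1, owner_id=100, transcript="hello from user 100"),
    2: CallRecord(call_id=2, owner_id=200, transcript="hello from user 200"),
}


def fetch_call_unsafe(requester_id: int, call_id: int) -> CallRecord:
    """Vulnerable pattern: the requester is known but never compared to the owner."""
    return CALLS[call_id]


def fetch_call(requester_id: int, call_id: int) -> CallRecord:
    """Safer pattern: return the record only if the requester owns it."""
    record = CALLS.get(call_id)
    if record is None or record.owner_id != requester_id:
        # Same error for "missing" and "not yours", so record IDs are not leaked.
        raise PermissionError("call not found or not owned by requester")
    return record
```

With the unsafe version, user 100 can read call 2 simply by asking for it. The checked version raises `PermissionError` for the same request.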


Immediate Aftermath

Upon being alerted to the security issue, Neon's founder, Alex Kiam, took down the app's servers and notified users about the temporary shutdown. However, the initial communication failed to mention the security breach, citing only the need to 'add extra layers of security' [1][3].

Privacy and Legal Concerns

Legal experts raised concerns about the app's compliance with state laws regarding call recording consent. While Neon claimed to only record the user's side of non-Neon calls, questions remained about the legality and ethics of such practices [2][5].

Future of Neon

Despite the setback, Kiam has assured users that the app will return 'soon' with improved security measures. He promised that users' earnings are safe and that they will receive a bonus as compensation for the inconvenience [3][4].

Broader Implications for AI and Privacy

The Neon incident highlights the growing demand for real-world data in AI training and the ethical challenges it presents. It raises questions about the value of personal privacy in an era where data has become a valuable commodity [2][5].


As AI companies continue to seek diverse, real-world speech data for training their models, the incident serves as a cautionary tale about the potential risks and responsibilities involved in collecting and managing sensitive user information [4][5].

References

Summarized by Navi

[1]
