Neural Texture Compression: AI-Powered Solution for VRAM Efficiency in Gaming GPUs

4 Sources

[1]

VRAM-friendly neural texture compression inches closer to reality - enthusiast shows massive compression benefits with Nvidia and Intel demos

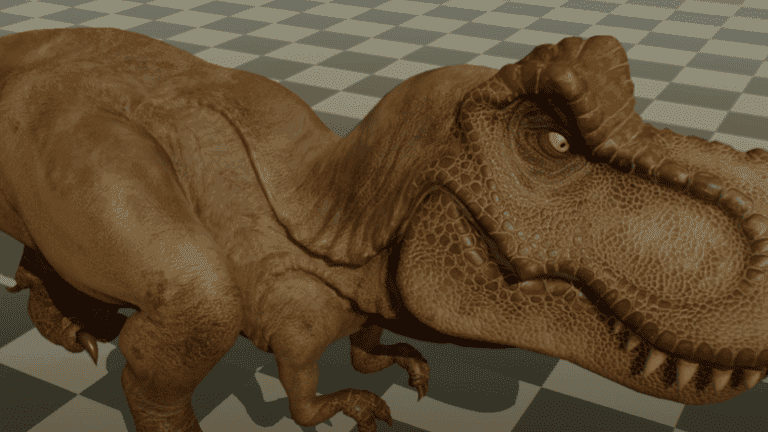

AI texture decompression promises better image quality, lower resource usage Nvidia's Blackwell architecture touts support for a raft of AI-powered "neural rendering" features. Among the more interesting of these is neural texture compression, or NTC. As developers pursue more realistic gaming experiences, texture sizes have also grown, creating more pressure on limited hardware resources like VRAM. An enthusiast has now demoed texture compression tech in action on both Nvidia and Intel test systems, demonstrating massive improvements in compression, a feature that will ultimately allow developers to do more with less VRAM, or to enable more features within the same GPU memory capacity. NTC promises to greatly reduce texture sizes on disk and in memory and to improve the image quality of rendered scenes compared to the block-based texture compression techniques widely used today. It allows developers to use a small neural network optimized for each material in a scene to decompress those textures. To enable neural rendering features like these, Nvidia, Microsoft, and other vendors have worked together to create a DirectX feature called Cooperative Vectors that gives developers fine-grained access to the matrix acceleration engines in modern Nvidia, Intel, and AMD GPUs. (Nvidia calls these Tensor Cores, Intel calls them XMX engines, and AMD calls them AI Accelerators). NTC hasn't appeared in a shipping game yet, but the pieces are coming together. A new video from YouTuber Compusemble shows that an NTC-powered future could be a bright one. Compusemble walks us through two practical demonstrations of NTC: one from Intel and the other from Nvidia. Intel's demo shows a walking T-Rex. The textures decompressed via NTC for this example are visibly crisper and sharper relative to those using the block compression method commonly employed today. The results from NTC look much closer to the native, uncompressed texture. 
At least on Compusemble's system, which includes an RTX 5090, the average pass time required increases from 0.045ms to 0.111ms at 4K, or an increase of 2.5x. Even so, that's a tiny portion of the overall frame time. Dramatically, NTC without Cooperative Vectors enabled requires a shocking 5.7 ms of pass time, demonstrating that Cooperative Vectors and matrix engines are essential for this technique to be practical. Nvidia's demo shows the benefits of NTC for VRAM usage. Uncompressed, the textures for the flight helmet in this demo occupy 272 MB. Block compression reduces that to 98 MB, but NTC provides a further dramatic reduction to 11.37 MB. As with Intel's dinosaur demo, there's a small computational cost to enabling NTC, but that tradeoff seems well worth it in exchange for the more efficient usage of fixed resources that the technique enables. Overall, these demos show that neural texture compression could have dramatic and exciting benefits for developers and gamers, whether that's reducing VRAM pressure for a given scene or increasing the visual complexity possible within a set amount of resources. We hope developers begin taking advantage of this technique soon.

[2]

Neural Texture Compression demo shows it can do wonders for VRAM usage

In context: Modern game engines can put severe strain on today's hardware. However, Nvidia's business decisions have left many GPUs with less VRAM than they should. Fortunately, improved texture compression in games helps make the most of what's available. Neural Texture Compression (NTC) is a new technique that improves texture quality while reducing VRAM usage. It relies on a specialized neural network trained to compress and decompress material textures efficiently on the fly. Nvidia has been developing the technology for years, though no developer has released a game that takes full advantage of it. Even so, PC gaming enthusiasts have shown that it works - and that it could have a significant impact on the industry. Compusemble, a YouTube channel focused on PC gaming and tech, recently shared a new video highlighting NTC's impressive capabilities. After an initial look earlier this year, the channel tested two NTC samples - one from Intel and one from Nvidia. The Intel example features a walking dinosaur, demonstrating how NTC outperforms standard block compression. The technology cuts VRAM use dramatically while maintaining nearly identical visual quality to uncompressed textures. However, both demos reveal that NTC demands significant computational power, making Cooperative Vectors essential for efficiently using a GPU's VRAM without sacrificing frame rates. Cooperative Vectors accelerate AI workloads for real-time rendering by optimizing vector operations, delivering a noticeable performance boost in both AI training and game rendering. Intel said it designed its T-Rex demo to specifically showcase Cooperative Vectors, a new feature in DirectX 12. The technology offers a standardized, cross-vendor approach to hardware-accelerated matrix multiplication units and works across different discrete GPU brands. Cooperative Vectors could help developers optimize AI-driven graphics techniques without being locked into a single GPU manufacturer's ecosystem. 
According to Compusemble's tests, Cooperative Vectors cut the average frame rendering time in Intel's dino demo at 4K (with NTC) from 5.77ms to 0.11ms - a 98-percent reduction. Nvidia's demo shows a smaller but still significant improvement, dropping from 1.44ms (4K, DLSS, NTC) to 0.74ms, a 49-percent decrease. Meanwhile, NTC reduces texture memory usage from 272MB uncompressed to just 11.37MB.

[3]

Intel has joined Nvidia with its own neural texture compression tech but it probably won't rescue your VRAM-starved 8 GB GPU any time soon

We can surely all agree it's a great pity that some otherwise decent modern GPUs are hobbled by a shortage of VRAM. I'm looking at you, Nvidia RTX 5060. So, what if there was a way to squeeze games into a much smaller memory footprint, without impacting image quality? Actually, there is, at least in part, and it's called neural texture compression (NTC). Indeed, the basic idea is nothing new, as we've been posting about NTC since the dark days of 2023. Not to overly flex, but our own Nick also got there ages ago with the Nvidia demo, which he experimented with back in February. However, as ever with a novel 3D rendering technology, the caveat was a certain green-tinged proprietary aspect. Nvidia's take on NTC is known as RTXNTC, and while it doesn't require an Nvidia GPU, it really only runs well on RTX 40 and 50 series GPUs. However, more recently, Intel has gotten in on the game with a more GPU-agnostic approach targeted at low-power GPUs that could open the technology out to a broader range of graphics cards. Now, both technologies have been combined in a video demo by Compusemble that shows off the benefits of each. And it's pretty impressive stuff. Roughly speaking, in both cases, the principles are the same, namely using AI to compress video game textures far more efficiently than conventional compression techniques. Actually, what NTC does is transform textures into a set of weights from which texture information can then be generated. But the basic idea is to maintain image quality while reducing memory size. In the simplest terms, doing that gives you two options. Either you can dramatically improve image quality in a similar memory footprint. Or you can dramatically reduce the memory footprint of textures with minimal image quality loss. The latter is particularly interesting for recent generations of relatively affordable graphics cards, many of which top out at 8 GB of VRAM, including the aforementioned RTX 5060 and its RTX 4060 predecessor. 
In modern games, there are frequent occasions when those GPUs have sufficient raw GPU power to produce decent frame rates, but insufficient VRAM to house all the 3D assets. That in turn forces the GPU to pull data in from main system memory, and performance craters. When Nick tested out the Nvidia demo, he found that the textures shrank in size by 96% at the cost of a 15% frame rate hit. The caveat to this is that he was using an RTX 4080 Super. Lower performance GPUs lack sufficient Tensor core performance to achieve such minimal frame-rate reductions, therefore requiring a different technique to maintain performance, which only shrinks the memory footprint by 64%. Well, I say only. That's still a very large reduction. Neural texture compression won't shrink all game data. Nvidia says it works best "for materials with multiple channels." That means not regular RGB textures, but materials with information about how it absorbs, reflects, emits, and refracts in the form of normal maps, opacity and occlusion. But that's actually a hefty chunk of the data in modern games, and the obvious hope is that reducing that footprint could make the difference between a game that fits in VRAM and one that doesn't. Anyway, the YouTube video is a neat little demonstration of the potential benefits of NTC. The snag is that it's not a new idea and here we are over two years from our first post on the subject and all we have to show for it are a couple of tech demos. Supporting NTC will require game devs to add it to their arsenal. The point is that it isn't something that could be added to, say, the graphics driver that can then be applied to legacy titles. So, even if game developers do begin to use it for new titles, the question is whether they'd bother to put the effort in to update existing games. There's also a chance that the gaming community might not take to NTC well, especially if it's used in a way that impacts image quality. 
Given that it's ultimately an AI tech that generates textures, it's open to accusations of "fakeness". In other words, here are some fake textures to go along with your fake frames. In the end, it will all come down to image quality and timing. The demos in the YouTube video show how it can produce impressive results. The question is how long it will take game developers to adopt it and what video card you'll have if and when they do.

[4]

AI texture decompression will breathe new life into GPUs with only 8GB of VRAM

Neural Texture Compression with DirectX 12's new Cooperative Vectors is set to be a game changer, boosting image quality and lowering the memory footprint. As an Amazon Associate, we earn from qualifying purchases. TweakTown may also earn commissions from other affiliate partners at no extra cost to you. When NVIDIA announced the new GeForce RTX 50 Series and RTX Blackwell architecture earlier this year, the company focused heavily on the new architecture's AI capabilities and how new 'neural shaders' and AI rendering tools will drive the next generation of real-time graphics. One of the latest technologies the company introduced is called RTX Neural Texture Compression, which sees a shift away from block-compressed textures to neurally compressed textures, saving up to 7X the VRAM. In the age of 4K gaming, where high-quality textures sit in VRAM, properly implementing this technology will dramatically reduce the VRAM requirement for running games at 1440p or 4K using high-quality textures. Neural texture compression is a reality in DirectX 12 thanks to a recent update introducing Cooperative Vectors for rendering. Intel also leverages this technology for neural texture compression, as seen in its walking T-Rex demonstration. YouTube channel Compusemble has put together a video of Intel and NVIDIA's AI-powered texture compression demos in action, and the results are impressive. Cooperative Vectors is the key to making this technology a reality, as with other AI rendering tools like DLSS, there's a cost in rendering time to use AI for texture compression. The good news is that in the video, the render time of traditional block compression increases only slightly, going from 0.045ms to 0.111ms when shifting to neural texture compression. Even better, the AI approach improves texture quality and detail. Without Cooperative Vectors, the render cost increases dramatically to 5.7ms. 
We're now at the proof of concept stage for Cooperative Vectors, and with these results, it's likely only a matter of time before we start implementing neural texture compression support. In the NVIDIA demo, the VRAM requirement decreases from 98MB to 11.37MB, which is enough to breathe new life into capable GPUs currently held back by VRAM limitations - namely, 8GB cards like the GeForce RTX 5060, RTX 5060 Ti 8GB, and Radeon RX 9060 XT 8GB.

Neural Texture Compression (NTC) emerges as a promising AI-driven technology to significantly reduce VRAM usage in GPUs while maintaining or improving texture quality, potentially revolutionizing game development and graphics rendering.

The Promise of Neural Texture Compression

Neural Texture Compression (NTC) is emerging as a game-changing technology in the world of computer graphics, particularly for gaming. This AI-powered technique promises to significantly reduce the memory footprint of textures while maintaining or even improving image quality. As modern games push the limits of graphics hardware, NTC could be the key to unlocking better performance and visual fidelity, especially for GPUs with limited VRAM [1][2].

How NTC Works

NTC uses a small neural network, optimized for each material in a scene, to decompress textures. Unlike traditional block-based compression, it transforms textures into a set of weights from which texture information is generated on the fly, which shrinks textures both on disk and in memory [3].
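The decode step described above can be pictured with a toy example. This is only a sketch under stated assumptions, not Nvidia's or Intel's actual implementation: it assumes a material is stored as a small grid of latent feature vectors plus the weights of a tiny two-layer network, and that sampling a texel means fetching the nearest latent vector and running it through that network. Every name, layer size, and weight value here (`decode_texel`, `LATENT_DIM`, the random weights standing in for trained ones) is hypothetical.

```python
import math
import random

def make_layer(n_in, n_out, rng):
    """Build one dense layer. Random weights stand in for trained values."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def relu(value):
    return max(0.0, value)

def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))

def dense(layer, inputs, activation):
    """Matrix-vector product plus bias, followed by an activation."""
    weights, biases = layer
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def decode_texel(latents, network, u, v):
    """Fetch the nearest latent vector at (u, v) in [0, 1) and decode it."""
    height, width = len(latents), len(latents[0])
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    features = latents[y][x] + [u, v]  # hypothetical: coordinates as extra inputs
    hidden = dense(network[0], features, relu)
    return dense(network[1], hidden, sigmoid)  # one value per material channel

# Hypothetical sizes: 4 latent features, 16 hidden units, 8 material channels.
LATENT_DIM, HIDDEN, CHANNELS = 4, 16, 8
rng = random.Random(0)
latents = [[[rng.uniform(-1.0, 1.0) for _ in range(LATENT_DIM)]
            for _ in range(8)] for _ in range(8)]
network = [make_layer(LATENT_DIM + 2, HIDDEN, rng),
           make_layer(HIDDEN, CHANNELS, rng)]

texel = decode_texel(latents, network, 0.3, 0.7)
print(len(texel), "channel values decoded for one texel")
```

In this toy version, what gets stored is the latent grid and the network weights rather than the full-resolution texels, and the per-texel cost is a couple of small matrix-vector products. That is the kind of work the source articles describe matrix acceleration engines as speeding up.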

Impressive Demonstrations

Two demos, one from Intel and one from Nvidia, tested by YouTube channel Compusemble, showcase the potential of NTC:

- Intel's demo featuring a walking T-Rex showed visibly crisper and sharper textures compared to standard block compression methods [1].
- Nvidia's demo highlighted dramatic VRAM savings, reducing texture memory usage for a flight helmet from 272 MB uncompressed (98 MB with block compression) to just 11.37 MB with NTC [1][2].
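To put the flight helmet figures in perspective, the short calculation below works out the compression ratios. It is plain arithmetic on the numbers reported in [1] (272 MB uncompressed, 98 MB block-compressed, 11.37 MB with NTC); the helper names are illustrative only.

```python
# Texture sizes for Nvidia's flight helmet demo, as reported in [1].
UNCOMPRESSED_MB = 272.0
BLOCK_COMPRESSED_MB = 98.0
NTC_MB = 11.37

def ratio(before_mb, after_mb):
    """How many times smaller the texture set becomes."""
    return before_mb / after_mb

def percent_saved(before_mb, after_mb):
    """Share of the original footprint that is freed, in percent."""
    return 100.0 * (before_mb - after_mb) / before_mb

for label, before in (("uncompressed", UNCOMPRESSED_MB),
                      ("block-compressed", BLOCK_COMPRESSED_MB)):
    print(f"NTC vs {label}: {ratio(before, NTC_MB):.1f}x smaller, "
          f"{percent_saved(before, NTC_MB):.1f}% saved")
```

Relative to uncompressed textures, NTC is roughly 24x smaller (about 96% saved), in line with the 96% figure quoted in [3]. Relative to block compression it is roughly 8.6x smaller (about 88% saved).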

The Role of Cooperative Vectors

A crucial element enabling NTC is DirectX 12's Cooperative Vectors feature, which gives developers fine-grained access to the matrix acceleration engines in modern GPUs from Nvidia, Intel, and AMD [1][3]. Cooperative Vectors significantly reduce the computational cost of NTC:

- In Intel's demo, it cut the average pass time at 4K from 5.77ms to 0.11ms, a 98% reduction [1][2].
- In Nvidia's demo, the time decreased from 1.44ms to 0.74ms, a 49% improvement [2].
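The percentages above can be checked with a few lines of arithmetic. The sketch below also expresses each timing as a share of a frame budget. The 60 fps target (about 16.7 ms per frame) is an assumption chosen for illustration and does not come from the sources.

```python
# Assumption for illustration only: a 60 fps target, i.e. ~16.67 ms per frame.
FRAME_BUDGET_60FPS_MS = 1000.0 / 60.0

def percent_reduction(before_ms, after_ms):
    """Relative time saved by enabling Cooperative Vectors, in percent."""
    return 100.0 * (before_ms - after_ms) / before_ms

def budget_share(pass_ms, budget_ms=FRAME_BUDGET_60FPS_MS):
    """Share of the assumed frame budget consumed, in percent."""
    return 100.0 * pass_ms / budget_ms

# (without Cooperative Vectors, with Cooperative Vectors), in ms, from [2].
measurements = {
    "Intel T-Rex demo (4K)": (5.77, 0.11),
    "Nvidia demo (4K, DLSS)": (1.44, 0.74),
}

for name, (without_cv, with_cv) in measurements.items():
    print(f"{name}: {percent_reduction(without_cv, with_cv):.1f}% faster, "
          f"{budget_share(without_cv):.1f}% -> {budget_share(with_cv):.1f}% "
          f"of a 60 fps frame")
```

Under that assumed budget, Intel's 0.11ms figure is well under 1% of a frame, while the 5.77ms figure without Cooperative Vectors would take up about a third of it. This is why [1] describes the matrix engines as essential for making the technique practical.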

Potential Impact on Gaming

The implementation of NTC could have far-reaching effects on game development and player experience:

- VRAM Efficiency: Games could run more smoothly on GPUs with limited VRAM, like the 8GB models that are common in mid-range cards [4].
- Enhanced Visual Quality: Developers could pack more high-quality textures into the same memory footprint, leading to richer, more detailed game worlds [1][3].
- Cross-Vendor Optimization: The standardized approach of Cooperative Vectors could help developers optimize AI-driven graphics techniques across different GPU brands [2].

Challenges and Considerations

Despite its promise, NTC faces some hurdles:

- Adoption by Developers: The technology requires integration into game engines and workflows, which may take time [3].
- Performance Trade-offs: While the memory savings are significant, there is a small computational cost that needs to be balanced [1].
- Community Acceptance: Some gamers might be skeptical of AI-generated textures, potentially viewing them as "fake" [3].

Future Outlook

As NTC matures, it could become a standard feature in game development, potentially extending the lifespan of GPUs with lower VRAM and enabling more complex graphics in future games. However, its widespread adoption will depend on how quickly game developers embrace the technology and how well it performs across a range of hardware configurations [3][4].

Neural Texture Compression represents a significant step forward in graphics technology, leveraging AI to address the growing demands of modern gaming. As the industry continues to push the boundaries of visual fidelity, technologies like NTC may prove crucial in delivering immersive experiences while managing hardware limitations.

Summarized by Navi
