Neurophos Raises $110M to Build Optical AI Chips That Challenge Nvidia's GPU Dominance

5 Sources

[1]

From invisibility cloaks to AI chips: Neurophos raises $110M to build tiny optical processors for inferencing

Twenty years ago, a Duke University professor, David R. Smith, used artificial composite materials called "metamaterials" to make a real-life invisibility cloak. While this cloak didn't really work like Harry Potter's, exhibiting limited ability to conceal objects from the light of a single microwave wavelength, those advances in material science did eventually trickle down to electromagnetism research. Today, Austin-based Neurophos, a photonics startup spun out of Duke University and Metacept (an incubator run by Smith), is taking that research further to solve what may be the biggest problem facing AI labs and hyperscalers: how to scale computing power while keeping power consumption in check. The startup has come up with "metasurface modulators" with optical properties that enable it to serve as a tensor core processor for doing matrix vector multiplication -- math that is at the heart of a lot of AI work (particularly inferencing), currently performed by specialized GPUs and TPUs that use traditional silicon gates and transistors. By fitting thousands of these modulators on a chip, Neurophos claims, its "optical processing unit" is significantly faster than the silicon GPUs currently used en masse at AI data centers, and far more efficient at inferencing (running trained models), which can be a fairly expensive task. To fund the development of its chips, Neurophos has just raised $110 million in a Series A round led by Gates Frontier (Bill Gates' venture firm), with participation from Microsoft's M12, Carbon Direct, Aramco Ventures, Bosch Ventures, Tectonic Ventures, Space Capital, and others. Now, photonic chips are nothing new. In theory, photonic chips offer higher performance than traditional silicon because light produces less heat than electricity, it can travel faster, and is far less susceptible to changes in temperature and electromagnetic fields.
But optical components tend to be much larger than their silicon counterparts, and can be difficult to mass-produce. And photonic chips also need converters to transform data from digital to analog and back, which can be large and take up a lot of power. Neurophos, however, posits that the metasurface it has developed can solve all of those problems in one swoop because it is about "10,000 times" smaller than traditional optical transistors. The small size, the startup claims, enables it to fit thousands of units on a chip, which results in far more efficiency than traditional silicon because the chip can do many more calculations at once. "When you shrink the optical transistor, you can do way more math in the optics domain before you have to do that conversion back to the electronics domain," Dr. Patrick Bowen, CEO and co-founder of Neurophos, told TechCrunch. "If you want to go fast, you have to solve the energy efficiency problem first. Because if you're going to take a chip and make it 100 times faster, it burns 100 times more power. So you get the privilege of going fast after you solve the energy efficiency problem." The result, Neurophos claims, is an optical processing unit that can wildly outperform Nvidia's B200 AI GPU. The startup says its chip can run at 56 GHz, yielding a peak 235 Peta Operations per Second (POPS) and consuming 675 watts, compared to the B200, which can deliver 9 POPS at 1,000 watts. Bowen says Neurophos has already signed multiple customers, and companies including Microsoft are "looking very closely" at the startup's products. Still, the startup is entering a crowded market that's dominated by Nvidia, the world's most valuable public company, whose products have more or less underpinned the entire AI boom. There are also other companies working on photonics, though some, like Lightmatter, have pivoted to focusing on interconnects.
And Neurophos is still a few years away from production, expecting its first chips to hit the market by mid-2028. But Bowen is confident the performance and efficiency advances of the metasurface will prove a sufficient moat. "What everyone else is doing is, and this includes Nvidia, in terms of the fundamental physics of the silicon, it's really evolutionary rather than revolutionary, and it's tied to the progress of TSMC. If you look at the improvement of TSMC nodes, on average, they improve in energy efficiency about 15%, and that takes a couple years," he said. "Even if we chart out Nvidia's improvement in architecture over the years, by the time we come out in 2028, we still have massive advantages over everyone else in the market because we're starting with a 50x over Blackwell in both energy efficiency and raw speed." And to address the manufacturing issue optical chips have traditionally faced, Neurophos says its chips can be made with standard silicon foundry materials, tools, and processes. The fresh funding will be used for the development of the company's first integrated photonic compute system, including datacenter-ready OPU modules, a full software stack, and early-access developer hardware. The company is also opening a San Francisco engineering site and expanding its HQ in Austin, Texas. "Modern AI inference demands monumental amounts of power and compute," Dr. Marc Tremblay, corporate vice president and technical fellow of core AI infrastructure at Microsoft, said in a statement. "We need a breakthrough in compute on par with the leaps we've seen in AI models themselves, which is what Neurophos' technology and high-talent density team is developing."

[2]

Bill Gates-backed silicon photonics startup develops optical transistors 10,000x smaller than current tech -- optical chip can process 1,000 x 1,000 multiplication matrices

Will this let us break free from the limitations of current silicon technology? Neurophos, an AI chip startup based in Austin, Texas and backed by Bill Gates' Gates Frontier Fund, says that it has developed an optical processing unit (OPU) that the company claims is ten times more powerful than Nvidia's latest Vera Rubin NVL72 AI supercomputer in FP4 / INT4 compute workloads, while still consuming a similar amount of power. According to The Register, the company achieves this by using a larger matrix and a much higher clock speed. "On chip, there is a single photonic tensor core that is 1,000 by 1,000 in size," Neurophos CEO Patrick Bowen told the publication. This is about 15 times larger than the usual 256 x 256 matrix used in most AI GPUs. Despite that, the company was able to make its optical transistor around 10,000 times smaller than what's currently available. "The equivalent of the optical transistor that you get from Silicon Photonics factories today is massive. It's like 2 mm long," Bowen added. "You just can't fit enough of them on a chip in order to get a compute density that remotely competes with digital CMOS today." The company's first-generation accelerator will have "the optical equivalent" of one tensor core, at around 25 square mm in size. This pales in comparison with Nvidia's Vera Rubin chip, which is reported to have 576 tensor cores, but the difference is how Neurophos is using the photonic die. But aside from its larger 1,000 x 1,000 matrix tile size, the startup's first OPU, which it calls the Tulkas T100, will operate at a cool 56 GHz -- much higher than the 9.1 GHz world record achieved on an Intel Core i9-14900KF and the 2.6 GHz boost clock on the Nvidia RTX Pro 6000. This allows it to beat Nvidia's AI GPUs despite appearing to be underpowered on paper.
More importantly, Bowen says that it built its optical transistors using current semiconductor fabrication technologies, so it could potentially tap fabs like Intel or TSMC to mass produce them. Nevertheless, the chips are still in the testing phase and are not expected to enter volume production until 2028. It also needs to address challenges, like the need for massive amounts of vector processing units and static memory (SRAM). Photonics is a new frontier that many companies are paying attention to. Nvidia already uses Spectrum-X Ethernet photonics switch systems in its Rubin platform, while AMD is set to develop a $280 million hub, specifically geared toward researching silicon photonics. Either way, it appears that this latest development is just a new wrinkle on the photonics frontier, and we should expect many more developments to come as the technology matures.

[3]

Neurophos bets on optical transistors to bend Moore's Law

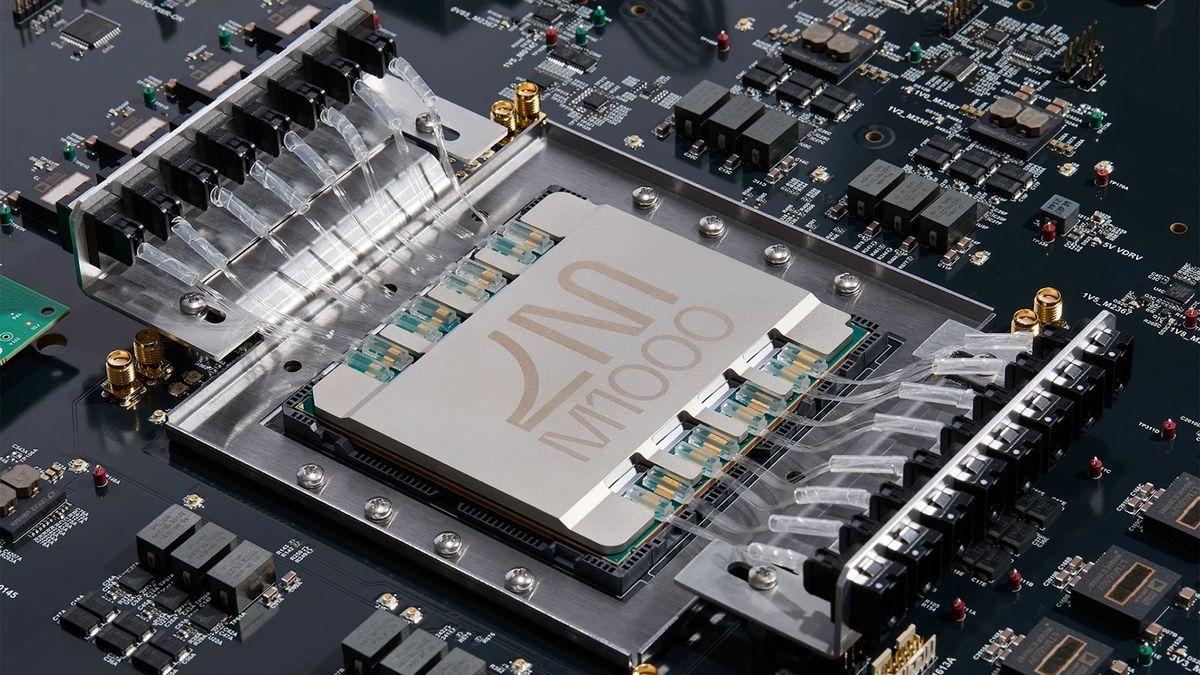

Neurophos is developing a massive optical systolic array clocked at 56GHz good for 470 petaFLOPS of FP4 compute

As Moore's Law slows to a crawl and the amount of energy required to deliver generational performance gains grows, some chip designers are looking to alternative architectures for salvation. Neurophos is among those trying to upend Moore's Law and make good on analog computing's long-promised yet largely untapped potential. The Austin, Texas-based AI chip startup says it's developing an optical processing unit (OPU) that in theory is capable of delivering 470 petaFLOPS of FP4 / INT4 compute -- about 10x that of Nvidia's newly unveiled Rubin GPUs -- while using roughly the same amount of power. Neurophos CEO Patrick Bowen tells El Reg this is possible in part because of the micron-scale metamaterial optical modulators, essentially photonic transistors, that the company has spent the past several years developing. "The equivalent of the optical transistor that you get from Silicon Photonics factories today is massive. It's like 2 mm long. You just can't fit enough of them on chip in order to get a compute density that remotely competes with digital CMOS today," he explained. Neurophos' optical transistors, Bowen says, are roughly 10,000x smaller. "We got our first silicon back in May demonstrating that we could do that with a standard CMOS process, which means it's compatible with existing foundry technologies." Using these transistors, Neurophos claims to have developed the optical equivalent of a tensor core. "On chip, there is a single photonic tensor core that is 1,000 by 1,000 [processing elements] in size," he said. This is quite a bit bigger than what's typically seen in most AI accelerators and GPUs, which employ matrix multiplication engines that are at most 256x256 processing elements in size. However, rather than having dozens or even hundreds of these tensor cores, like we see in Nvidia's GPUs, Neurophos only needs one.
Bowen tells us the tensor core on its first-gen accelerator will occupy roughly 25 square mm. The rest of the reticle-sized chip is "the boondoggle of what it takes to support this insane tensor core," Bowen said. Specifically, Neurophos needs a whack-ton of vector processing units and SRAM to keep the tensor core from starving for data. This is because the tensor core itself -- and yes, again, there'll only be one of them on the entire reticle-sized die -- is operating at around 56 gigahertz. But because the matrix-matrix multiplication is done optically, Bowen notes that the only power consumed by the tensor core is what's needed to drive the opto-electrical conversion from digital to analog and back again. Neurophos says its first OPU, codenamed the Tulkas T100, will feature a dual reticle design equipped with 768 GB of HBM that's capable of 470 petaOPS while consuming 1 to 2 kilowatts of power under load. As impressive as all this sounds, it's important to remember that these figures are more like goal posts at this point. The chip is still in active development with full production not expected to begin until mid-2028. Even then, Bowen doesn't expect it to ship in large volumes. "We're talking thousands of chips. Not tens of thousands of chips." While Neurophos believes its optical tensor cores can address a broad array of AI inference workloads, it expects its first chip will be used primarily as a prefill processor. As we've previously discussed, LLM inference can be broken into two phases: a compute intensive prefill stage in which input tokens are processed, and a memory bandwidth bound stage in which output tokens are generated. Over the past year or so, we've seen chip designers like Nvidia disaggregate prefill and decode into separate pools of GPUs. For its latest generation of GPUs, Nvidia has developed a dedicated prefill accelerator that it calls Rubin CPX. Bowen envisions the Tulkas T100 filling a similar role as Rubin CPX.
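The split between a compute-intensive prefill stage and a memory-bandwidth-bound decode stage can be illustrated with a rough arithmetic-intensity estimate. The sketch below is a simplified model, not anything published by Neurophos or The Register: it considers a single square weight matrix stored in FP4 (half a byte per weight), counts only weight traffic, and ignores activations, attention, and batching across requests. The function name, the 8,192-wide layer, and the 4,096-token prompt are hypothetical values chosen for illustration.

```python
def arithmetic_intensity(tokens, d_model, bytes_per_weight=0.5):
    """Operations performed per byte of weights read, for one d_model x d_model layer.

    Simplifying assumptions (illustrative only): weights are read once per pass,
    FP4 storage (0.5 bytes per weight), activation and KV-cache traffic ignored.
    """
    ops = 2 * tokens * d_model * d_model           # one multiply and one add per weight per token
    weight_bytes = bytes_per_weight * d_model * d_model
    return ops / weight_bytes

# Prefill: the whole prompt (hypothetically 4,096 tokens) shares one read of the weights.
prefill = arithmetic_intensity(tokens=4096, d_model=8192)   # 16384.0 ops per byte

# Decode: each step produces one new token, so the weights are re-read for very little math.
decode = arithmetic_intensity(tokens=1, d_model=8192)       # 4.0 ops per byte

print(f"prefill: {prefill:.0f} ops/byte, decode: {decode:.0f} ops/byte")
```

Under these assumptions prefill performs thousands of times more math per byte fetched than decode, which is why a very fast matrix engine is a more natural fit for prefill, while decode is limited mainly by how quickly memory can deliver weights.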
"The current vision, which is subject to change, is basically we would put one rack of ours, which is 256 of our chips, and that would be paired with something like an NVL576 rack," he said. Long-term, Bowen aims to tackle the decode phase as well, but notes that a variety of technologies, including co-packaged optics, will need to be developed before the startup is ready to take on token generation. While the Tulkas T100 won't ship until at least 2028, Bowen says the company is actively working on a proof of concept (PoC) chip to validate the compute and power densities it's claiming. This week, Neurophos completed a $110 million Series-A funding round led by Gates Frontier, with participation from Microsoft's venture fund and other investors, which Bowen says will fund development of this PoC. ®

[4]

Bill Gates' VC Fund Leads $110 Million Funding for Chip Upstart

Bill Gates' VC fund, Microsoft Corp.'s investment arm and Saudi Arabia's Aramco Ventures are investing $110 million in Neurophos Inc., a chip company that aims to develop a new technology capable of outperforming accelerators used to run AI models. The funding round was led by Gates Frontier, the venture investment arm of Microsoft's co-founder, the company told Bloomberg News. Microsoft's venture unit, M12, also participated in the round along with Aramco Ventures, the venture arm of Saudi Arabia's state-owned oil producer. Other investors included Bosch Ventures, Tectonic Ventures and Space Capital. Austin-based Neurophos is developing what it calls an optical processing unit, or OPU. It's a chip that's made up of one million micron-scale optical processing elements that rely on photons or light to transmit data. The more conventional graphics processing unit, or GPU, used for training and running AI models relies on electrons, or simply electricity, to transmit data. The market for chips that train and run AI models is dominated by Nvidia Corp., which has a market capitalization of more than $4 trillion. There are multiple companies chasing it, with technology pitched as better specifically for running the models as opposed to training them. Those include players like Advanced Micro Devices Inc. as well as Cerebras Systems Inc., which is in talks to raise fresh funds at a $22 billion valuation, Bloomberg reported last week. Neurophos claims its technology will provide 100 times more performance and energy efficiency than current leading chips.

[5]

Chip startup Neurophos gets $110M to replace electrons with photons and accelerate AI compute

Another day, and another artificial intelligence chipmaker is raking in millions of dollars in funding. Today it's a startup called Neurophos Inc., which has just closed on an oversubscribed early-stage funding round worth $110 million, bringing its total amount raised to $118 million to date. The Series A round was led by Gates Frontier and saw the participation of a laundry list of other investors including Microsoft Corp.'s venture capital arm M12, Carbon Direct Capital, Aramco Ventures, Bosch Ventures, Tectonic Ventures, Space Capital, DNX Ventures, Geometry, MetaVC Partners, Morgan Creek Capital, Silicon Catalyst Ventures, Gaingels and more. Like many other chip startups, Neurophos says it's looking to solve the growing shortage of compute capacity that will be required to fuel the adoption of artificial intelligence technologies in the coming years. It explains that data centers struggle with critical limitations in terms of the total compute power and scalability they can provide, as well as the enormous energy they require, and it argues that the current trend of racing to build even more of them isn't the solution. Neurophos' answer is an entirely new kind of AI accelerator chip, called an "optical processing unit" or OPU, which integrates more than a million micron-scale optical processing elements on a single chip. The company says its prototype chips deliver up to 100 times the performance of existing AI processors, and will eventually provide data center operators with a vastly more powerful drop-in replacement for the graphics processing units they rely on today. Neurophos is led by its co-founder and Chief Executive Patrick Bowen, who says that the AI industry cannot afford to wait for Moore's Law to keep up with its humongous demands for compute capacity. "Our breakthrough in photonics unlocks an entirely new dimension of scaling by packing massive optical parallelism onto a single chip," he said.
"This physics-level shift means both efficiency and raw speed improve as we scale up, breaking free from the power walls that constrain traditional GPUs." The startup's key innovation is the development of proprietary micron-scale metamaterial optical modulators, which are 10,000 times smaller than existing photonic elements, enabling photonic computing to become a reality for the first time. It integrates these modulators with compute in-memory technology to overcome traditional hardware bottlenecks by merging memory and processing to speed up the matrix multiplications central to AI, as well as reduce data movement. Neurophos' chips use photons to bypass the speed and energy limitations of electrons and achieve clock speeds of more than 100 gigahertz. In other words, it's developing an entirely new kind of AI accelerator that promises immense efficiency gains over GPUs, and it has already demonstrated an impressive performance of more than 300 trillion operations per second per watt in early tests, far surpassing existing standards. The design of its chips also results in a significant reduction in power consumption, the company said. The startup, which is partnering with the Norwegian data center operator Terakraft to launch a real-world pilot of its optical AI accelerator in 2027, says it's hopeful it can manufacture its first complete systems by early 2028, before gearing up production later that year. M12 Managing Partner Michael Stewart said the timeline is realistic enough that he's more than happy to underwrite it. Holger Mueller of Constellation Research said investors are willing to throw huge amounts of money at promising chip startups because the anticipated demand for AI compute is expected to become an unprecedented opportunity, and Nvidia can't fulfill it alone. Indeed, the analyst said, many companies are looking for faster and more affordable ways to run their AI models, as the scarcity of Nvidia's GPUs keeps on driving prices up. 
"There's a lot of innovation coming from the startup camp, and Neurophos is a great example of this, trying to squeeze millions of optical processing units onto its silicon chips," Mueller said. "The funding will help Neurophos to try and reach its next major milestone and show that it can produce and operate its OPUs in commercial quantities. The success of these efforts will help to determine if the OPU acronym becomes a household name in the same way that GPU has already become." Microsoft has emerged as one of the startup's biggest fans. It's not just backing it financially, but actively exploring the benefits of its OPUs. "Modern AI inference demands monumental amounts of power and compute," said Marc Tremblay, Microsoft's corporate vice president and technical fellow of core AI infrastructure. "We need a breakthrough in compute on par with the leaps we've seen in AI models themselves, which is what Neurophos' technology and high-talent density team is developing." Neurophos said the funds from today's round will accelerate the delivery of its first integrated photonic compute system, including data center-ready OPU modules, complete with a full software stack and early access developer hardware. In addition, it's planning to expand its Austin, Texas-based headquarters and open a new engineering center in San Francisco, where it'll be able to demonstrate its technology to prospective buyers.

Austin-based Neurophos has raised $110 million in Series A funding led by Bill Gates' Gates Frontier to develop optical processing units that promise 100x better performance than current AI chips. The startup's breakthrough uses metamaterial optical transistors 10,000 times smaller than existing photonic elements, aiming to address AI's mounting energy and compute challenges.

Neurophos Secures $110 Million Funding to Revolutionize AI Hardware

Neurophos, an Austin, Texas-based AI chip startup, has closed a $110 million Series A funding round led by Gates Frontier, Bill Gates' venture firm, with participation from Microsoft's M12, Aramco Ventures, Bosch Ventures, and others [1]. The company, spun out of Duke University and Metacept, aims to tackle what may be the biggest challenge facing AI labs and hyperscalers: scaling computing power while keeping energy consumption in check [1]. The round brings the startup's total funding to $118 million to date [5].

Optical Processing Unit Built on Breakthrough Photonics Technology

At the heart of Neurophos' innovation is its optical processing unit, which integrates more than one million micron-scale optical processing elements on a single chip [5]. The company has developed proprietary metasurface modulators with optical properties that enable the chip to serve as a tensor core processor for matrix-vector multiplication, the math at the heart of AI inference work currently performed by specialized GPUs and TPUs [1]. These optical transistors are approximately 10,000 times smaller than the optical components available from silicon photonics factories today [2][3].
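For readers unfamiliar with the operation, matrix-vector multiplication is a grid of multiply-accumulate steps, one per matrix element. The sketch below is plain Python for illustration only and says nothing about how the optical hardware performs the computation. The helper names are hypothetical; the 1,000 by 1,000 and 256 x 256 tile sizes are the ones quoted in sources [2] and [3].

```python
def matvec(weights, x):
    """Multiply a matrix (a list of rows) by a vector using multiply-accumulate steps."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def macs_per_pass(rows, cols):
    """Multiply-accumulate operations in one rows x cols matrix-vector product."""
    return rows * cols

weights = [[1, 2],
           [3, 4]]
x = [10, 20]
y = matvec(weights, x)                       # [1*10 + 2*20, 3*10 + 4*20] = [50, 110]

optical_tile = macs_per_pass(1000, 1000)     # tile size Neurophos quotes for its tensor core
gpu_tile = macs_per_pass(256, 256)           # largest tile cited for current accelerators
tile_ratio = optical_tile / gpu_tile         # about 15.3

print(y, optical_tile, round(tile_ratio, 1))
```

A 1,000 by 1,000 tile holds one million multiply-accumulate positions, about 15 times as many as a 256 x 256 tile, which matches the "15 times larger" comparison in the coverage.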

CEO and co-founder Patrick Bowen explained the significance: "When you shrink the optical transistor, you can do way more math in the optics domain before you have to do that conversion back to the electronics domain" [1]. The startup claims its approach addresses the fundamental challenge that has plagued photonic computing: optical components have traditionally been much larger than silicon counterparts and difficult to mass-produce.

Replace Electrons With Photons for Massive Performance Gains

Neurophos' first-generation chip, the Tulkas T100, features a single photonic tensor core measuring 1,000 by 1,000 processing elements, roughly 15 times as many as the 256 x 256 matrix engines used in most AI GPUs [2][3]. The chip is designed to operate at 56 GHz; Neurophos says it yields a peak of 235 peta operations per second (POPS) while consuming 675 watts, compared with 9 POPS at 1,000 watts for Nvidia's B200 [1]. The company claims its dual reticle design will be capable of 470 petaFLOPS of FP4/INT4 compute, about 10 times that of Nvidia's newly unveiled Rubin GPUs, while using roughly the same amount of power [3].
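The B200 comparison can be turned into per-watt figures with simple arithmetic. The sketch below only restates the numbers quoted in source [1] (235 POPS at 675 watts versus 9 POPS at 1,000 watts); the function name is hypothetical, and the inputs are company claims rather than independent measurements.

```python
def ops_per_watt(peta_ops, watts):
    """Convert a peak throughput in peta-operations per second into operations per second per watt."""
    return peta_ops * 1e15 / watts

opu = ops_per_watt(235, 675)       # claimed Neurophos figures from source [1]
b200 = ops_per_watt(9, 1000)       # B200 figures as quoted in source [1]

throughput_ratio = 235 / 9         # about 26x raw throughput
efficiency_ratio = opu / b200      # about 39x operations per watt

print(f"OPU:  {opu / 1e12:.0f} TOPS/W")
print(f"B200: {b200 / 1e12:.0f} TOPS/W")
print(f"throughput x{throughput_ratio:.1f}, efficiency x{efficiency_ratio:.1f}")
```

On these inputs the claimed chip works out to roughly 348 trillion operations per second per watt, in line with the "more than 300 trillion operations per second per watt" reported in source [5]. The implied ratios (about 26x throughput and 39x efficiency) are lower than the 50x Bowen cites against Blackwell, which may rest on a baseline the sources do not spell out.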

The chip uses photons instead of electrons to transmit data, which Neurophos says lets it bypass the speed and energy limitations of traditional electrical systems. According to the company, early tests have demonstrated more than 300 trillion operations per second per watt, far surpassing existing standards for energy-efficient AI hardware [5].

Challenging Moore's Law and Nvidia's Market Dominance

Bowen argues that the AI industry cannot afford to wait for Moore's Law to keep pace with demand. "What everyone else is doing, including Nvidia, in terms of the fundamental physics of the silicon, it's really evolutionary rather than revolutionary," he stated [1]. He noted that TSMC node improvements average about 15% in energy efficiency and take a couple of years each, while Neurophos claims it will still hold a large lead at its 2028 launch because it is starting from a 50x advantage over Nvidia's Blackwell architecture in both energy efficiency and raw speed [1].

The startup enters a market dominated by Nvidia, the world's most valuable public company, with a market capitalization exceeding $4 trillion [1][4]. Other photonics companies, such as Lightmatter, have pivoted to focusing on interconnects [1], while AI chip rivals such as Cerebras Systems are reportedly in talks to raise funds at a $22 billion valuation [4].
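Bowen's contrast between roughly 15% efficiency gains per process node and a claimed 50x head start can be put into rough numbers. The sketch below is a back-of-envelope illustration under strong assumptions that are not from the sources: gains compound at a constant 15% per node, each node takes two years ("a couple years" in the quote), and architectural improvements, which Bowen acknowledges separately, are ignored. The function name is hypothetical.

```python
import math

def generations_to_close(gap, gain_per_generation):
    """Smallest number of compounding generations whose cumulative gain reaches `gap`."""
    return math.ceil(math.log(gap) / math.log(1 + gain_per_generation))

gens = generations_to_close(gap=50, gain_per_generation=0.15)   # 28 node generations
years = gens * 2                                                # assuming two years per node

print(f"{gens} generations, about {years} years")
```

Under these assumptions, process scaling alone would need 28 node generations to deliver a 50x efficiency gain. That is the shape of the argument Bowen is making, although real GPU roadmaps also gain from architecture, packaging, and number formats.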

Path to Production and Early Customer Adoption

Neurophos expects its first chips to hit the market by mid-2028; the Tulkas T100 is slated to carry 768 GB of HBM and consume 1 to 2 kilowatts of power under load [3]. The company is actively working on a proof-of-concept chip to validate its claimed compute and power densities [3]. Initial production volumes are expected to be in the thousands rather than tens of thousands of chips [3].

The startup envisions its first chip being used primarily as a prefill processor for LLM inference, the compute-intensive stage in which input tokens are processed [3]. Bowen suggests pairing one rack of 256 Neurophos chips with something like an NVL576 rack from Nvidia [3]. He says the company has already signed multiple customers and that Microsoft is "looking very closely" at its products [1]. The startup is also partnering with Norwegian data center operator Terakraft to launch a real-world pilot of its optical AI accelerator in 2027 [5].

Manufacturing Advantage Through Standard Processes

A critical advantage for Neurophos is that, according to the company, its chips can be manufactured using standard silicon foundry materials, tools, and processes [1]. Bowen said the company received its first silicon back in May, demonstrating compatibility with standard CMOS processes and existing foundry technologies [3]. This addresses a historical challenge with photonic chips, which have been difficult to mass-produce. The company could potentially tap fabs like Intel or TSMC for production [2].

Microsoft's Marc Tremblay, corporate vice president and technical fellow of core AI infrastructure, noted: "Modern AI inference demands monumental amounts of power and compute. We need a breakthrough in compute on par with the leaps we've seen in AI models themselves" [5]. The fresh funding will accelerate development of the company's first integrated photonic compute system, including datacenter-ready OPU modules and a full software stack [1].

Summarized by Navi
