Nvidia and Memory Giants Collaborate on SOCAMM: A New Standard for AI-Focused Memory Modules

2 Sources

[1]

Nvidia, SK Hynix, Samsung and Micron reportedly working on new SOCAMM memory standard

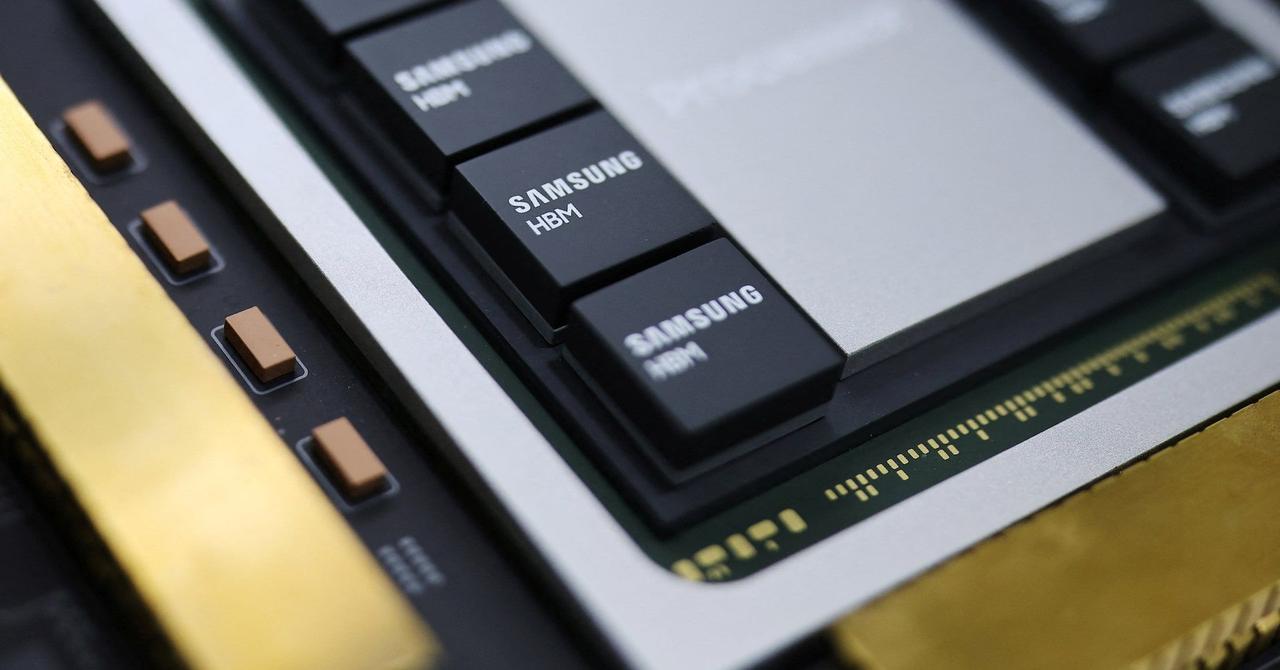

Nvidia is reportedly teaming up with memory manufacturers SK hynix, Micron and Samsung to create a new memory standard that is both small in size and big on performance, according to a report via SEDaily. The new standard, named System On Chip Advanced Memory Module (SOCAMM), is in the works with all three large-scale memory makers.

The report claims (via machine translation): 'Nvidia and memory companies are currently exchanging SOCAMM prototypes to conduct performance tests'. We could also see the new standard sooner rather than later, as the report adds that 'mass production could be possible as early as later this year'. SOCAMM is suspected to be destined for the next-generation successor to Nvidia's Project Digits AI computers, which were announced at CES 2025.

SOCAMM is expected to be a sizable upgrade over Low-Power Compression Attached Memory Modules (LPCAMM) and traditional DRAM, thanks to several factors. The first is that SOCAMM is set to be more cost-effective than traditional DRAM that uses the SO-DIMM form factor. The report further details that SOCAMM may place LPDDR5X memory directly onto the substrate, offering further power efficiency. Secondly, SOCAMM is reported to feature significantly more I/O ports than LPCAMM and traditional DRAM modules: up to 694, compared with LPCAMM's 644 and traditional DRAM's 260.

The new standard is also reported to use a 'detachable' module, which may offer easy upgradability further down the line. The module also has a small hardware footprint (which SEDaily reports is around the size of an adult's middle finger), making it smaller than traditional DRAM modules, which could potentially enhance total capacity. Finer details about SOCAMM remain under wraps, as Nvidia appears to be developing the standard without any input from the Joint Electron Device Engineering Council (JEDEC).
This could end up being a big deal, as Nvidia is seemingly venturing out on its own to create and refine a new standard, with the key memory makers in tow. The reason likely comes down to the company's focus on AI workloads: running local AI models demands a large amount of DRAM, and Nvidia would be wise to push for more I/O, more capacity and more configurability. SO-DIMM's days have been numbered for a while in any case, as the CAMM Common Spec was set to supersede it. Jensen Huang made it clear at CES 2025 that making AI mainstream is a big focus for the company, though that push has not gone without criticism. While Nvidia's initial Project Digits AI computers go on sale in May this year, the advent of SOCAMM may be a good reason for many to wait.

[2]

NVIDIA & Memory Partners Rumored To Be Developing "Compact" SOCAMM Modules, Focused Towards Personal AI Supercomputers

NVIDIA is rumored to be developing exclusive, compact "SOCAMM" memory modules, which it hopes will take personal AI supercomputers like Project DIGITS to new levels. It seems Team Green has decided to open up the next big market opportunity for memory manufacturers.

According to a report by the South Korean media outlet Sedaily, NVIDIA is in discussion with Samsung Electronics, SK Hynix, and Micron to develop a specialized DRAM module that would offer not only a compact size but also much higher performance than existing standards like LPCAMM.

"NVIDIA and memory companies are currently exchanging SOCAMM prototypes and conducting performance tests. It is expected that mass production will be possible by the end of this year at the earliest." - Sedaily

For now, details about the SOCAMM memory standard are scarce, except that it is said to be a much more cost-effective implementation and has the advantage of being a lower-power module. Additionally, it is claimed that SOCAMM features up to 694 I/O ports, more than both PC DRAM modules and LPCAMM, which suggests its performance will lead among DRAM products. Thanks to this high I/O port count, SOCAMM is said to relieve the data bottleneck between the processor and memory.

Interestingly, SOCAMM is detachable, meaning a module can be swapped out for an upgraded version without much trouble. Given the small size, manufacturers can squeeze the optimal number of DRAM chips onto the modules, ensuring high capacities. This project is mainly led by NVIDIA and its partners for now, and the standard is said to be targeted at compact AI supercomputers like Project DIGITS. Given that the industry is focused on putting AI compute in the palm of users' hands, standards like SOCAMM are likely to see massive market adoption, potentially making them the next source of revenue for memory makers.

We are expected to see the standard in action by the end of the year.

Nvidia is reportedly working with SK Hynix, Samsung, and Micron to develop SOCAMM (System On Chip Advanced Memory Module), a new memory standard designed for high-performance AI applications. This compact and efficient module could revolutionize personal AI supercomputers.

Nvidia Spearheads Development of SOCAMM Memory Standard

Nvidia, in collaboration with memory manufacturing giants SK Hynix, Samsung, and Micron, is reportedly developing a new memory standard called System On Chip Advanced Memory Module (SOCAMM). The technology is designed to be compact yet powerful, potentially revolutionizing the landscape of personal AI supercomputers [1][2].

Key Features of SOCAMM

The SOCAMM standard boasts several advantages over existing memory technologies:

- Compact Size: SOCAMM modules are reportedly about the size of an adult's middle finger, significantly smaller than traditional DRAM modules [1].
- High Performance: With up to 694 I/O ports, SOCAMM exceeds both LPCAMM (644 ports) and traditional DRAM (260 ports), potentially easing data bottlenecks between processors and memory [1][2].
- Cost-Effectiveness: SOCAMM is expected to be more cost-effective than traditional DRAM using the SO-DIMM form factor [1].
- Power Efficiency: The new standard may place LPDDR5X memory directly onto the substrate, offering improved power efficiency [1].
- Upgradability: SOCAMM features a "detachable" module design, allowing for easy future upgrades [1][2].
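To put the reported I/O figures in proportion, the short Python sketch below computes how much larger SOCAMM's reported port count is than the other two. The three counts come from the SEDaily report as relayed by the sources; the dictionary and the `percent_more` helper are illustrative names only. A higher port count does not by itself determine bandwidth, which also depends on the per-pin data rate, and that figure has not been reported.

```python
# I/O port counts as reported by SEDaily via the source articles.
# The labels and the helper below are illustrative, not part of any spec.
IO_PORTS = {"SOCAMM": 694, "LPCAMM": 644, "traditional DRAM": 260}


def percent_more(new: int, old: int) -> float:
    """Return how many percent larger `new` is than `old`."""
    return (new - old) / old * 100


socamm = IO_PORTS["SOCAMM"]
for name, ports in IO_PORTS.items():
    if name != "SOCAMM":
        extra = percent_more(socamm, ports)
        print(f"SOCAMM vs {name}: {extra:.1f}% more I/O ports")
```

On these figures the gap to LPCAMM is modest (about 8%), while the gap to traditional DRAM modules is much larger (roughly 2.7 times the port count).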

Implications for AI Computing

SOCAMM is anticipated to be a significant upgrade over Low-Power Compression Attached Memory Modules (LPCAMM) and traditional DRAM. Its development aligns with Nvidia's focus on AI workloads, which demand large amounts of DRAM [1].

The new memory standard is expected to be used in Nvidia's next-generation successor to the Project Digits AI computers, announced at CES 2025. This move underscores Nvidia's commitment to making AI mainstream, as emphasized by CEO Jensen Huang [1].

Industry Impact and Timeline

Nvidia's development of SOCAMM without input from the Joint Electron Device Engineering Council (JEDEC) marks a significant departure from traditional industry practices [1]. This independent approach, coupled with collaboration from major memory manufacturers, could reshape the memory market.

According to reports, Nvidia and its partners are currently exchanging SOCAMM prototypes and conducting performance tests. Mass production could begin as early as the end of this year, potentially making SOCAMM-equipped devices available in the near future [1][2].

Market Potential

As the tech industry increasingly focuses on bringing AI computational capabilities to personal devices, standards like SOCAMM are expected to see widespread adoption. This could create a new revenue opportunity for memory manufacturers and further solidify Nvidia's position in the AI hardware market [2].

While the finer details of SOCAMM remain undisclosed, its potential to enhance AI computing capabilities in compact devices makes it a development worth watching for industry professionals and tech enthusiasts alike.

Summarized by Navi

