NVIDIA and Stability AI Optimize Stable Diffusion 3.5 for RTX GPUs, Reducing VRAM Usage by 40%

2 Sources

[1]

NVIDIA TensorRT Boosts Stable Diffusion 3.5 Performance on NVIDIA GeForce RTX and RTX PRO GPUs

Performance on Stable Diffusion doubled with 40% less VRAM; plus, new TensorRT for RTX software development kit now available for developers.

Generative AI has reshaped how people create, imagine and interact with digital content. As AI models continue to grow in capability and complexity, they require more VRAM, or video random access memory. The base Stable Diffusion 3.5 Large model, for example, uses over 18GB of VRAM -- limiting the number of systems that can run it well.

By applying quantization to the model, noncritical layers can be removed or run with lower precision. NVIDIA GeForce RTX 40 Series and the Ada Lovelace generation of NVIDIA RTX PRO GPUs support FP8 quantization to help run these quantized models, and the latest-generation NVIDIA Blackwell GPUs also add support for FP4.

NVIDIA collaborated with Stability AI to quantize its latest model, Stable Diffusion (SD) 3.5 Large, to FP8 -- reducing VRAM consumption by 40%. Further optimizations to SD3.5 Large and Medium with the NVIDIA TensorRT software development kit (SDK) double performance.

In addition, TensorRT has been reimagined for RTX AI PCs, combining its industry-leading performance with just-in-time (JIT), on-device engine building and an 8x smaller package size for seamless AI deployment to more than 100 million RTX AI PCs. TensorRT for RTX is now available as a standalone SDK for developers.

RTX-Accelerated AI

NVIDIA and Stability AI are boosting the performance and reducing the VRAM requirements of Stable Diffusion 3.5, one of the world's most popular AI image models. With NVIDIA TensorRT acceleration and quantization, users can now generate and edit images faster and more efficiently on NVIDIA RTX GPUs.

To address the VRAM limitations of SD3.5 Large, the model was quantized with TensorRT to FP8, reducing the VRAM requirement by 40% to 11GB. This means five GeForce RTX 50 Series GPUs can run the model from memory instead of just one.

SD3.5 Large and Medium models were also optimized with TensorRT, an AI backend for taking full advantage of Tensor Cores. TensorRT optimizes a model's weights and graph -- the instructions on how to run a model -- specifically for RTX GPUs.

Combined, FP8 TensorRT delivers a 2.3x performance boost on SD3.5 Large compared with running the original models in BF16 PyTorch, while using 40% less memory. And in SD3.5 Medium, BF16 TensorRT provides a 1.7x performance increase compared with BF16 PyTorch. The optimized models are now available on Stability AI's Hugging Face page.

NVIDIA and Stability AI are also collaborating to release SD3.5 as an NVIDIA NIM microservice, making it easier for creators and developers to access and deploy the model for a wide range of applications. The NIM microservice is expected to be released in July.

Previously, developers needed to pre-generate and package TensorRT engines for each class of GPU -- a process that would yield GPU-specific optimizations but required significant time. With the new version of TensorRT, developers can create a generic TensorRT engine that's optimized on device in seconds. This JIT compilation approach can be done in the background during installation or when they first use the feature.

The easy-to-integrate SDK is now 8x smaller and can be invoked through Windows ML -- Microsoft's new AI inference backend in Windows. Developers can download the new standalone SDK from the NVIDIA Developer page or test it in the Windows ML preview.

GTC Paris runs through Thursday, June 12, with hands-on demos and sessions led by industry leaders. Whether attending in person or joining online, there's still plenty to explore at the event.

Each week, the RTX AI Garage blog series features community-driven AI innovations and content for those looking to learn more about NVIDIA NIM microservices and AI Blueprints, as well as building AI agents, creative workflows, digital humans, productivity apps and more on AI PCs and workstations.

[2]

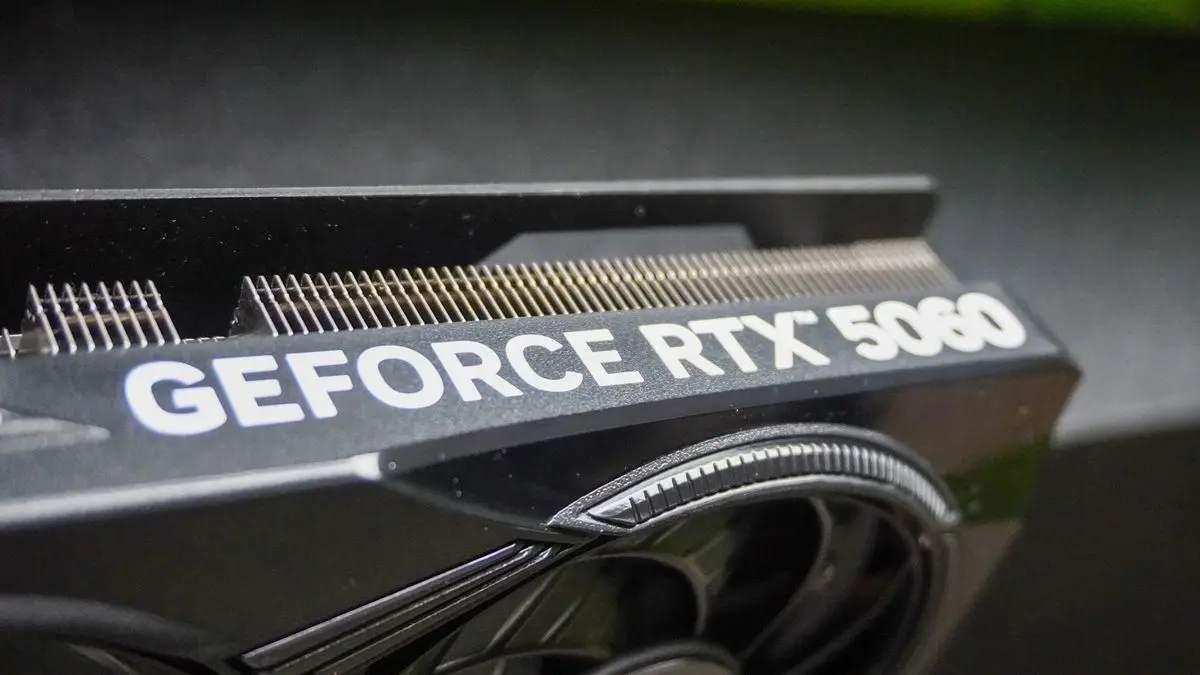

Stable Diffusion 3.5 VRAM requirement reduced by 40% to run on more GeForce RTX GPUs

VRAM capacity is a live debate in the PC gaming space. The consensus is that in 2025, you will need more than 8GB of VRAM for high-end 1440p and 4K gaming. VRAM is also a crucial component for running local AI, and the demand for more memory is growing alongside the arrival of more complex models.

The powerful Stable Diffusion 3.5 Large text-to-image model uses over 18GB of VRAM. Within the GeForce RTX 50 Series, that limits it to the flagship GeForce RTX 5090. Well, not anymore, as NVIDIA has collaborated with Stability AI to quantize the model to FP8, reducing the VRAM requirement by 40% to 11GB. Alongside optimizations with TensorRT to double performance, this now means that five GeForce RTX 50 Series GPUs (the RTX 5060 Ti 16GB, RTX 5070, RTX 5070 Ti, RTX 5080, and RTX 5090) can run the model locally.

According to NVIDIA, the TensorRT optimizations for Stable Diffusion 3.5 allow the model to fully take advantage of the Tensor Cores inside GeForce RTX hardware. The FP8 TensorRT version delivers a 2.3x performance boost over running the model in BF16 PyTorch while using 40% less memory. The new optimized models are available via Stability AI's Hugging Face portal. In July, NVIDIA and Stability AI plan to release the model as an NVIDIA NIM microservice for creators looking to deploy it in a wide range of apps.

Clever optimizations like this for new large AI models are fantastic to see. With the GeForce RTX 50 Series supporting FP4, we'll probably see more of these types of updates that will allow powerful generative AI tools to run locally on more GPUs, especially with NVIDIA announcing that there are now over 100 million RTX AI PCs worldwide.
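The VRAM arithmetic behind the "five GPUs instead of one" claim can be checked with a few lines of Python. This is a rough sketch, not a sizing tool: it uses the 18GB and 40% figures quoted above and each card's published memory capacity, and it assumes a model "fits" whenever total VRAM is at least the quoted requirement, ignoring whatever the OS and other applications already occupy.

```python
# Figures quoted in the article: SD3.5 Large needs "over 18GB" in BF16,
# and FP8 quantization cuts that by 40%.
BASE_VRAM_GB = 18.0
FP8_REDUCTION = 0.40

# Published VRAM capacity (GB) of the five RTX 50 Series cards named above.
RTX_50_VRAM_GB = {
    "RTX 5060 Ti 16GB": 16,
    "RTX 5070": 12,
    "RTX 5070 Ti": 16,
    "RTX 5080": 16,
    "RTX 5090": 32,
}


def quantized_vram_gb(base_gb: float, reduction: float) -> float:
    """Return the VRAM requirement after a fractional reduction."""
    return base_gb * (1.0 - reduction)


def gpus_that_fit(required_gb: float, gpus: dict) -> list:
    """Simplifying assumption: a GPU fits if total VRAM >= requirement."""
    return [name for name, vram_gb in gpus.items() if vram_gb >= required_gb]


fp8_vram = quantized_vram_gb(BASE_VRAM_GB, FP8_REDUCTION)
print(f"FP8 requirement: about {fp8_vram:.1f} GB")
print("Fits in BF16:", gpus_that_fit(BASE_VRAM_GB, RTX_50_VRAM_GB))
print("Fits in FP8: ", gpus_that_fit(fp8_vram, RTX_50_VRAM_GB))
```

Under these assumptions only the 32GB RTX 5090 clears the BF16 requirement, while all five listed cards clear the roughly 11GB FP8 requirement. The 12GB RTX 5070 has the least headroom.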

NVIDIA collaborates with Stability AI to optimize Stable Diffusion 3.5, reducing VRAM requirements and doubling performance on RTX GPUs. This breakthrough enables more widespread use of the powerful AI image generation model.

NVIDIA and Stability AI Collaborate on Stable Diffusion 3.5 Optimization

NVIDIA has partnered with Stability AI to significantly enhance the performance and accessibility of Stable Diffusion 3.5, one of the world's most popular AI image generation models. This collaboration has resulted in substantial improvements in both efficiency and VRAM usage, making the model more accessible to a wider range of NVIDIA RTX GPUs [1][2].

Reducing VRAM Requirements

Source: TweakTown

The base Stable Diffusion 3.5 Large model initially required over 18GB of VRAM, limiting its use to high-end GPUs. Through quantization techniques, NVIDIA and Stability AI have managed to reduce the VRAM requirement by 40%, bringing it down to 11GB [1]. This optimization allows the model to run on five GeForce RTX 50 Series GPUs instead of just one, significantly expanding its potential user base [2].

Performance Enhancements with TensorRT

In addition to VRAM reduction, NVIDIA has applied its TensorRT software development kit (SDK) to optimize Stable Diffusion 3.5 Large and Medium models. These optimizations take full advantage of the Tensor Cores in RTX GPUs, resulting in the following performance gains [1]:

- SD3.5 Large: 2.3x performance boost with FP8 TensorRT compared to BF16 PyTorch

- SD3.5 Medium: 1.7x performance increase with BF16 TensorRT over BF16 PyTorch
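To put the speedup factors in concrete terms, the short sketch below converts them into time per image. The 10-second baseline is a hypothetical placeholder, not a measured figure from either source. Only the 2.3x and 1.7x factors come from the article.

```python
# Speedup factors reported by NVIDIA, relative to BF16 PyTorch.
SPEEDUP_VS_BF16_PYTORCH = {
    "SD3.5 Large (FP8 TensorRT)": 2.3,
    "SD3.5 Medium (BF16 TensorRT)": 1.7,
}

# Hypothetical baseline time per image in seconds (NOT a measured value).
BASELINE_SECONDS = 10.0


def optimized_seconds(baseline_seconds: float, speedup: float) -> float:
    """Time per image after applying a throughput speedup factor."""
    return baseline_seconds / speedup


def time_saved_percent(speedup: float) -> float:
    """Percentage of the baseline time eliminated by the speedup."""
    return 100.0 * (1.0 - 1.0 / speedup)


for name, speedup in SPEEDUP_VS_BF16_PYTORCH.items():
    seconds = optimized_seconds(BASELINE_SECONDS, speedup)
    saved = time_saved_percent(speedup)
    print(f"{name}: {seconds:.2f} s per image ({saved:.1f}% less time)")
```

Note that a 2.3x speedup removes a little over half of the generation time rather than 230% of it. The percentage saved is 1 - 1/speedup.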

TensorRT for RTX: Reimagined for AI PCs

NVIDIA has also introduced a new version of TensorRT specifically designed for RTX AI PCs. This updated SDK offers several key improvements [1]:

- Just-in-time (JIT) on-device engine building

- 8x smaller package size

- Seamless AI deployment to over 100 million RTX AI PCs
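The JIT idea, building a GPU-specific engine on the user's machine the first time it is needed and reusing it afterwards, can be illustrated with a small caching sketch. Everything here is hypothetical: `build_engine_for_gpu` and `EngineCache` are stand-ins invented for illustration and are not part of the TensorRT for RTX API, and the "engine" is just a byte string.

```python
import hashlib
import tempfile
from pathlib import Path


def build_engine_for_gpu(model_bytes: bytes, gpu_name: str) -> bytes:
    """Hypothetical stand-in for on-device engine compilation."""
    model_digest = hashlib.sha256(model_bytes).hexdigest()
    return f"ENGINE|{gpu_name}|{model_digest}".encode()


class EngineCache:
    """Builds an engine on first use for a (model, GPU) pair, then reuses it."""

    def __init__(self, cache_dir: Path):
        self.cache_dir = cache_dir
        self.cache_dir.mkdir(parents=True, exist_ok=True)
        self.builds = 0  # number of times compilation actually ran

    def _path_for(self, model_bytes: bytes, gpu_name: str) -> Path:
        # Key the cache on both the model contents and the GPU name.
        model_digest = hashlib.sha256(model_bytes).hexdigest()
        key = hashlib.sha256(f"{model_digest}|{gpu_name}".encode()).hexdigest()
        return self.cache_dir / f"{key}.engine"

    def get(self, model_bytes: bytes, gpu_name: str) -> bytes:
        path = self._path_for(model_bytes, gpu_name)
        if path.exists():
            return path.read_bytes()  # cache hit: no rebuild
        engine = build_engine_for_gpu(model_bytes, gpu_name)
        self.builds += 1
        path.write_bytes(engine)
        return engine


cache = EngineCache(Path(tempfile.mkdtemp()) / "engines")
generic_model = b"generic-model-placeholder"
cache.get(generic_model, "GeForce RTX 5070")  # first use: builds
cache.get(generic_model, "GeForce RTX 5070")  # second use: cached
print("Engine builds performed:", cache.builds)
```

The design point is that the developer ships one generic artifact and the per-GPU work happens once on the device, rather than pre-generating and packaging an engine for every GPU class.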

The new TensorRT for RTX is now available as a standalone SDK for developers, allowing for easier integration and optimization of AI models on RTX hardware [1].

Implications for AI Development and Deployment

These advancements have significant implications for AI developers and end-users:

- Wider accessibility: More GPUs can now run complex AI models like Stable Diffusion 3.5

- Improved efficiency: Reduced VRAM usage and increased performance allow for faster and more resource-efficient AI operations

- Easier deployment: The new TensorRT for RTX SDK simplifies the process of optimizing and deploying AI models on RTX hardware

Future Developments

NVIDIA and Stability AI are planning to release Stable Diffusion 3.5 as an NVIDIA NIM microservice in July, further simplifying access and deployment for creators and developers across various applications [1].

As AI models continue to grow in complexity and capability, optimizations like these will play a crucial role in making advanced AI tools more accessible to a broader range of users and devices. With NVIDIA's announcement of over 100 million RTX AI PCs worldwide, the potential impact of these improvements is substantial [2].

Summarized by Navi
