Nvidia Collaborates with Major Memory Makers on New SOCAMM Format for AI Servers

2 Sources

[1]

Intel, AMD left out as Nvidia convinces Samsung, SK Hynix and Micron to develop new proprietary memory format for its own AI servers

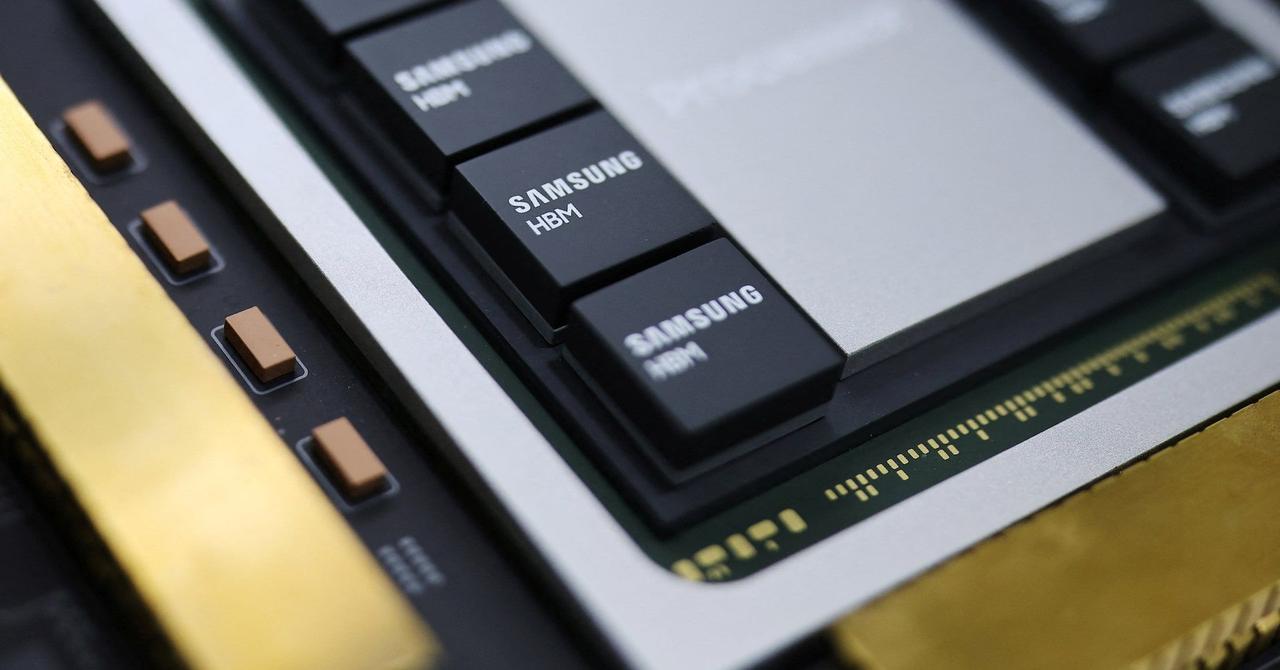

SK Hynix plans production of SOCAMM as AI infrastructure demand grows

At the recent Nvidia GTC 2025, memory makers Micron and SK Hynix took the wraps off their respective SOCAMM solutions. This new modular memory form factor is designed to unlock the full potential of AI platforms and has been developed exclusively for Nvidia's Grace Blackwell platform.

SOCAMM, or Small Outline Compression Attached Memory Module, is based on LPDDR5X and intended to address growing performance and efficiency demands in AI servers. The form factor reportedly offers higher bandwidth, lower power consumption, and a smaller footprint compared to traditional memory modules such as RDIMMs and MRDIMMs. SOCAMM is specific to Nvidia's AI architecture and so can't be used in AMD or Intel systems.

Micron announced it will be the first to ship SOCAMM products in volume and its 128GB SOCAMM modules are designed for the Nvidia GB300 Grace Blackwell Ultra Superchip. According to the company, the modules deliver more than 2.5 times the bandwidth of RDIMMs while using one-third the power. The compact 14x90mm design is intended to support efficient server layouts and thermal management.

"AI is driving a paradigm shift in computing, and memory is at the heart of this evolution," said Raj Narasimhan, senior vice president and general manager of Micron's Compute and Networking Business Unit. "Micron's contributions to the Nvidia Grace Blackwell platform yield performance and power-saving benefits for AI training and inference applications."

SK Hynix also presented its own low-power SOCAMM solution at GTC 2025 as part of a broader AI memory portfolio. Unlike Micron, the company didn't go into too much detail about it, but said it is positioning SOCAMM as a key offering for future AI infrastructure and plans to begin mass production "in line with the market's emergence".

"We are proud to present our line-up of industry-leading products at GTC 2025," SK Hynix's President & Head of AI Infra Juseon (Justin) Kim said. "With a differentiated competitiveness in the AI memory space, we are on track to bring our future as the Full Stack AI Memory Provider forward."

[2]

Micron and NVIDIA collaborate on new modular LPDDR5X memory solution, for GB300 Blackwell Ultra

TL;DR: Micron's SOCAMM, developed with NVIDIA, is a modular LPDDR5X memory solution for the GB300 Grace Blackwell Superchip.

Micron's new SOCAMM, a modular LPDDR5X memory solution, was developed in collaboration with NVIDIA to support the GB300 Grace Blackwell Superchip announced at GTC 2025 this week.

Micron's new modular SOCAMM memory modules provide over 2.5x higher bandwidth at the same capacity as RDIMMs, and they're super-small, occupying just one-third the size of the industry-standard RDIMM form factor. Thanks to LPDDR5X, the new SOCAMM modules use one-third the power of regular DDR5 DIMMs, while SOCAMM placements of 16-die stacks of LPDDR5X memory enable a 128GB memory module: the highest-capacity LPDDR5X memory solution, which is well suited to training large AI models and serving more concurrent users on inference workloads.

Raj Narasimhan, senior vice president and general manager of Micron's Compute and Networking Business Unit, explained: "AI is driving a paradigm shift in computing, and memory is at the heart of this evolution. Micron's contributions to the NVIDIA Grace Blackwell platform yield significant performance and power-saving benefits for AI training and inference applications. HBM and LP memory solutions help unlock improved computational capabilities for GPUs."

SOCAMM: a new standard for AI memory performance and efficiency

Micron's SOCAMM solution is now in volume production. The modular SOCAMM solution enables accelerated data processing, superior performance, unmatched power efficiency and enhanced serviceability to provide high-capacity memory for increasing AI workload requirements. Micron SOCAMM is the world's fastest, smallest, lowest-power and highest capacity modular memory solution, designed to meet the demands of AI servers and data-intensive applications.

This new SOCAMM solution enables data centers to get the same compute capacity with better bandwidth, improved power consumption and scaling capabilities to provide infrastructure flexibility.

Nvidia partners with Samsung, SK Hynix, and Micron to develop SOCAMM, a new proprietary memory format for AI servers, offering higher performance and efficiency compared to traditional memory modules.

Nvidia Introduces SOCAMM: A New Memory Standard for AI Servers

In a significant development for the AI hardware industry, Nvidia has partnered with major memory manufacturers Samsung, SK Hynix, and Micron to create a new proprietary memory format called SOCAMM (Small Outline Compression Attached Memory Module). This collaboration, unveiled at Nvidia GTC 2025, aims to enhance the performance and efficiency of AI servers [1][2].

SOCAMM: Technical Specifications and Advantages

SOCAMM is based on LPDDR5X technology and is designed specifically for Nvidia's Grace Blackwell platform. The new memory format offers several advantages over traditional memory modules like RDIMMs and MRDIMMs:

- Higher bandwidth: SOCAMM delivers more than 2.5 times the bandwidth of RDIMMs at the same capacity [2].
- Lower power consumption: It uses only one-third of the power compared to regular DDR5 DIMMs [2].
- Smaller footprint: The compact 14x90mm design occupies just one-third the size of standard RDIMM form factors [1][2].
- Improved efficiency: SOCAMM is optimized for efficient server layouts and thermal management [1].
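As a rough sanity check on the ratios in the list above, the following Python sketch computes the board area of the quoted 14x90mm outline and the combined bandwidth-per-watt gain implied by the 2.5x bandwidth and one-third power figures. The RDIMM outline of 133.35 mm x 31.25 mm is an assumption (a nominal full-size DIMM) and does not come from either source; the bandwidth-per-watt figure also assumes both vendor claims hold at the same time, which the sources do not state.

```python
# Figures quoted in the article.
SOCAMM_MM = (14.0, 90.0)      # module outline in mm
BANDWIDTH_RATIO = 2.5         # "more than 2.5 times" RDIMM bandwidth (lower bound)
POWER_RATIO = 1.0 / 3.0       # "one-third the power"

# Assumption, NOT from the article: nominal full-size RDIMM outline in mm.
RDIMM_MM = (133.35, 31.25)


def area_mm2(outline):
    """Return the rectangular board area in mm^2 for a pair of side lengths."""
    side_a, side_b = outline
    return side_a * side_b


def footprint_ratio(module=SOCAMM_MM, reference=RDIMM_MM):
    """Module area as a fraction of the reference module's area."""
    return area_mm2(module) / area_mm2(reference)


def bandwidth_per_watt_gain(bandwidth_ratio=BANDWIDTH_RATIO, power_ratio=POWER_RATIO):
    """Relative bandwidth per unit power, assuming both ratios apply together."""
    return bandwidth_ratio / power_ratio


if __name__ == "__main__":
    print(f"SOCAMM area: {area_mm2(SOCAMM_MM):.0f} mm^2")
    print(f"Footprint vs assumed RDIMM outline: {footprint_ratio():.2f}")
    print(f"Bandwidth per watt vs RDIMM: at least {bandwidth_per_watt_gain():.1f}x")
```

Under the assumed RDIMM outline, the footprint ratio comes out at about 0.30, which is in line with the "one-third the size" claim, and the two headline ratios together would imply at least 7.5x the bandwidth per watt.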

Micron's SOCAMM Implementation

Micron has taken the lead in SOCAMM production, announcing that it will be the first to ship these products in volume. Key features of Micron's SOCAMM modules include:

- 128GB capacity: Achieved through 16-die stacks of LPDDR5X memory [2].
- Compatibility: Designed specifically for the Nvidia GB300 Grace Blackwell Ultra Superchip [1][2].
- AI optimization: Tailored for training large AI models and supporting more concurrent users on inference workloads [2].
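The capacity figure above can be related to die density with simple arithmetic. The sources give the module capacity (128GB) and the stack height (16 dies) but not the number of stack placements per module, so the sketch below treats the placement count as a hypothetical parameter and only shows what per-die density each choice would imply. The function name `per_die_gbit` is illustrative.

```python
MODULE_GB = 128       # module capacity, from the article
DIES_PER_STACK = 16   # dies per LPDDR5X stack, from the article


def per_die_gbit(placements, module_gb=MODULE_GB, dies_per_stack=DIES_PER_STACK):
    """Return the per-die density in Gbit implied by a given placement count.

    `placements` is a hypothetical input: the article does not say how many
    16-die stacks sit on one module.
    """
    if placements <= 0:
        raise ValueError("placements must be a positive integer")
    total_dies = placements * dies_per_stack
    return module_gb * 8 / total_dies  # 8 Gbit per GB


if __name__ == "__main__":
    for placements in (1, 2, 4, 8):  # hypothetical placement counts
        density = per_die_gbit(placements)
        print(f"{placements} placement(s): {density:g} Gbit per die")
```

For example, if a module carried four placements, each die would need to hold 16 Gbit (2 GB) to reach 128GB; with two placements the requirement would double to 32 Gbit per die.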

Industry Impact and Future Prospects

The introduction of SOCAMM represents a significant shift in the AI hardware landscape:

- Exclusive to Nvidia: SOCAMM is specific to Nvidia's AI architecture and cannot be used in AMD or Intel systems [1].
- Market positioning: SK Hynix is positioning SOCAMM as a key offering for future AI infrastructure [1].
- Production plans: While Micron has already begun volume production, SK Hynix plans to start mass production "in line with the market's emergence" [1].

Expert Opinions

Industry leaders have expressed optimism about the potential of SOCAMM:

Raj Narasimhan, SVP at Micron, stated, "AI is driving a paradigm shift in computing, and memory is at the heart of this evolution. Micron's contributions to the Nvidia Grace Blackwell platform yield performance and power-saving benefits for AI training and inference applications" [1][2].

Juseon Kim, President at SK Hynix, commented, "We are proud to present our line-up of industry-leading products at GTC 2025. With a differentiated competitiveness in the AI memory space, we are on track to bring our future as the Full Stack AI Memory Provider forward" [1].

As the AI industry continues to evolve rapidly, SOCAMM represents a significant advancement in memory technology, potentially reshaping the landscape of AI server architecture and performance.

Summarized by Navi
