NVIDIA Expands Omniverse with Generative AI for Physical AI Applications

4 Sources

[1]

NVIDIA Expands Omniverse With Generative Physical AI

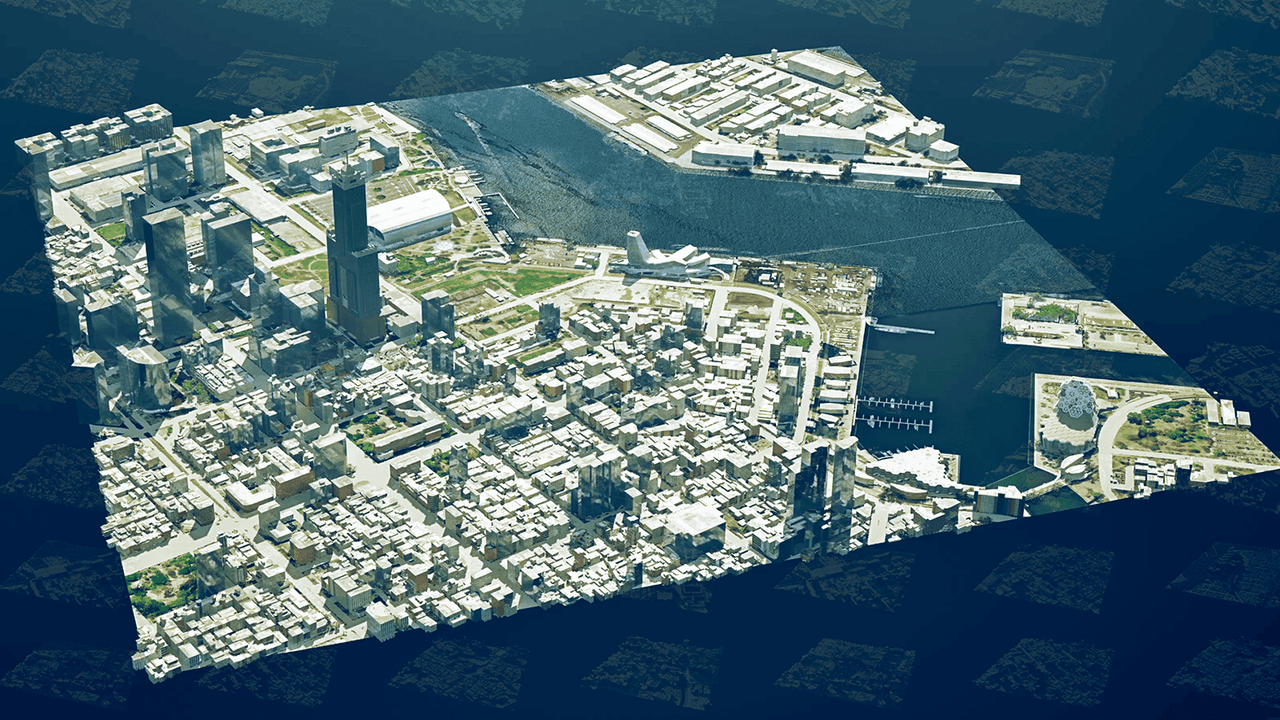

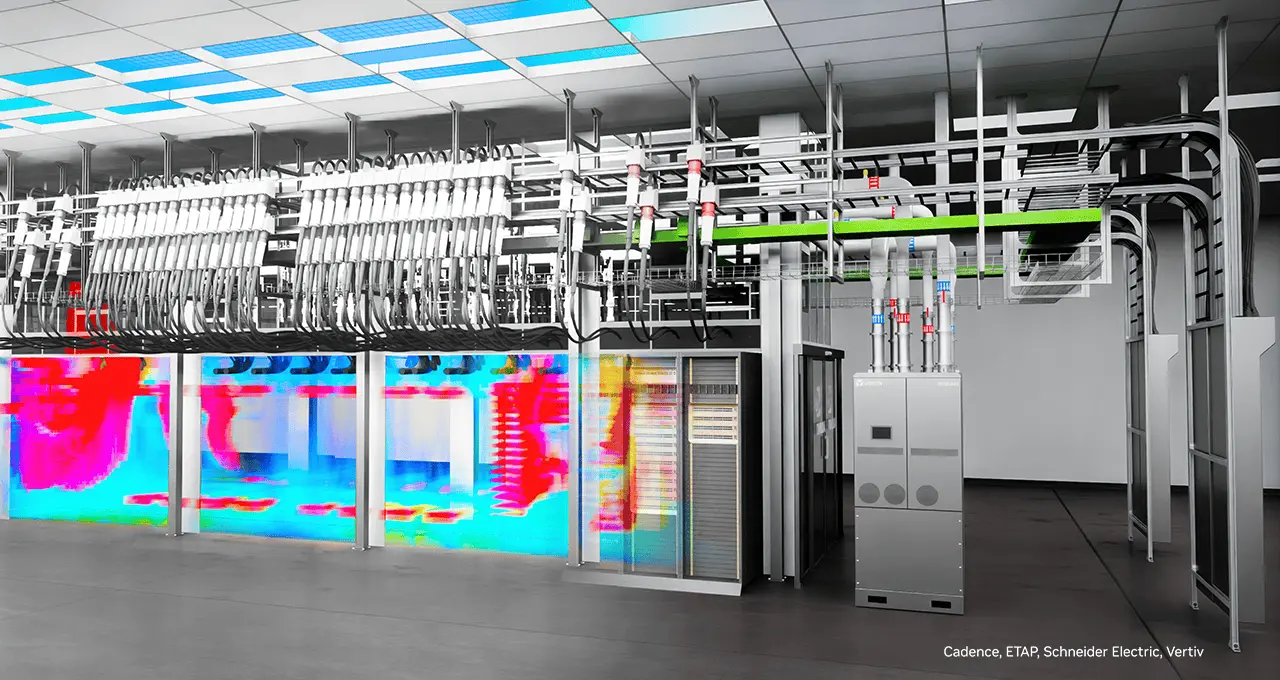

* New Models, Including Cosmos World Foundation Models, and Omniverse Mega Factory and Robotic Digital Twin Blueprint Lay the Foundation for Industrial AI
* Leading Developers Accenture, Altair, Ansys, Cadence, Microsoft and Siemens Among First to Adopt Platform Libraries

CES -- NVIDIA today announced generative AI models and blueprints that expand NVIDIA Omniverse™ integration further into physical AI applications such as robotics, autonomous vehicles and vision AI.

Global leaders in software development and professional services are using Omniverse to develop new products and services that will accelerate the next era of industrial AI. Accenture, Altair, Ansys, Cadence, Foretellix, Microsoft and Neural Concept are among the first to integrate Omniverse into their next-generation software products and professional services.

Siemens, a leader in industrial automation, announced today at the CES trade show the availability of Teamcenter Digital Reality Viewer -- the first Siemens Xcelerator application powered by NVIDIA Omniverse libraries.

"Physical AI will revolutionize the $50 trillion manufacturing and logistics industries. Everything that moves -- from cars and trucks to factories and warehouses -- will be robotic and embodied by AI," said Jensen Huang, founder and CEO at NVIDIA. "NVIDIA's Omniverse digital twin operating system and Cosmos physical AI serve as the foundational libraries for digitalizing the world's physical industries."

New Models and Frameworks Accelerate World Building for Physical AI

Creating 3D worlds for physical AI simulation requires three steps: world building, labeling the world with physical attributes and making it photoreal. NVIDIA offers generative AI models that accelerate each step. The USD Code and USD Search NVIDIA NIM™ microservices are now generally available, letting developers use text prompts to generate or search for OpenUSD assets.
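
To make "an OpenUSD asset labeled with physical attributes" concrete, here is a minimal sketch in plain Python that writes a small asset in OpenUSD's human-readable text format. It is not output from the USD Code microservice or any NVIDIA model. The asset name `Crate`, its mass and its size are hypothetical values chosen for illustration, and the helper `make_usda` is invented for this example. The schema names (`PhysicsRigidBodyAPI`, `PhysicsMassAPI`, `PhysicsCollisionAPI`) and the `physics:mass` attribute come from OpenUSD's physics schema.

```python
def make_usda(name: str, mass_kg: float, size_m: float) -> str:
    """Return the text of a tiny OpenUSD layer (.usda) describing one asset.

    The geometry is the "world building" part; the physics schemas and the
    mass attribute are the "labeling with physical attributes" part.
    All concrete values are illustrative assumptions.
    """
    return f'''#usda 1.0
(
    defaultPrim = "{name}"
    metersPerUnit = 1
    upAxis = "Z"
)

def Xform "{name}" (
    prepend apiSchemas = ["PhysicsRigidBodyAPI", "PhysicsMassAPI"]
)
{{
    float physics:mass = {mass_kg}

    def Cube "Geom" (
        prepend apiSchemas = ["PhysicsCollisionAPI"]
    )
    {{
        double size = {size_m}
    }}
}}
'''


# Hypothetical asset: a 1 m crate weighing 12.5 kg.
usda_text = make_usda("Crate", 12.5, 1.0)
print(usda_text)
```

Saving the printed text to a file with a `.usda` extension should give a layer that OpenUSD tools can open. Visual materials, the third step named above, are left out to keep the sketch short.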
A new NVIDIA Edify SimReady generative AI model unveiled today can automatically label existing 3D assets with attributes like physics or materials, enabling developers to process 1,000 3D objects in minutes instead of over 40 hours manually.

NVIDIA Omniverse, paired with new NVIDIA Cosmos™ world foundation models, creates a synthetic data multiplication engine -- letting developers easily generate massive amounts of controllable, photoreal synthetic data. Developers can compose 3D scenarios in Omniverse and render images or videos as outputs. These can then be used with text prompts to condition Cosmos models to generate countless synthetic virtual environments for physical AI training.

NVIDIA Omniverse Blueprints Speed Up Industrial, Robotic Workflows

During the CES keynote, NVIDIA also announced four new blueprints that make it easier for developers to build Universal Scene Description (OpenUSD)-based Omniverse digital twins for physical AI. The blueprints include:

New free Learn OpenUSD courses are also now available to help developers build OpenUSD-based worlds faster than ever.

Market Leaders Supercharge Industrial AI Using NVIDIA Omniverse

Global leaders in software development and professional services are using Omniverse to develop new products and services that are poised to accelerate the next era of industrial AI.

Building on its adoption of Omniverse libraries in its Reality Digital Twin data center digital twin platform, Cadence, a leader in electronic systems design, announced further integration of Omniverse into Allegro, its leading electronic computer-aided design application used by the world's largest semiconductor companies.

Altair, a leader in computational intelligence, is adopting the Omniverse blueprint for real-time CAE digital twins for interactive computational fluid dynamics (CFD). Ansys is adopting Omniverse into Ansys Fluent, a leading CAE application. And Neural Concept is integrating Omniverse libraries into its next-generation software products, enabling real-time CFD and enhancing engineering workflows.

Accenture, a leading global professional services company, is using Mega to help German supply chain solutions leader KION by building next-generation autonomous warehouses and robotic fleets for their network of global warehousing and distribution customers.

AV toolchain provider Foretellix, a leader in data-driven autonomy development, is using the AV simulation blueprint to enable full 3D sensor simulation for optimized AV testing and validation. Research organization MITRE is also deploying the blueprint, in collaboration with the University of Michigan's Mcity testing facility, to create an industry-wide AV validation platform.

Katana Studio is using the Omniverse spatial streaming workflow to create custom car configurators for Nissan and Volkswagen, allowing them to design and review car models in an immersive experience while improving the customer decision-making process.

Innoactive, an XR streaming platform for enterprises, used the workflow to add platform support for spatial streaming to Apple Vision Pro. The solution enables Volkswagen Group to conduct design and engineering project reviews at human-eye resolution. Innoactive also collaborated with Syntegon, a provider of processing and packaging technology solutions for pharmaceutical production, to enable Syntegon's customers to walk through and review digital twins of custom installations before they are built.

[2]

Nvidia uses GenAI to integrate Omniverse virtual creations into physical AI apps

Nvidia unveiled generative AI models and blueprints that expand Nvidia Omniverse integration further into physical AI applications such as robotics, autonomous vehicles and vision AI.

As part of the CES 2025 opening keynote by Nvidia CEO Jensen Huang, the company said global leaders in software development and professional services are using Omniverse to develop new products and services that will accelerate the next era of industrial AI. Accenture, Altair, Ansys, Cadence, Foretellix, Microsoft and Neural Concept are among the first to integrate Omniverse into their next-generation software products and professional services.

Siemens, a leader in industrial automation, announced today at the CES trade show the availability of Teamcenter Digital Reality Viewer -- the first Siemens Xcelerator application powered by Nvidia Omniverse libraries.

"Physical AI will revolutionize the $50 trillion manufacturing and logistics industries. Everything that moves -- from cars and trucks to factories and warehouses -- will be robotic and embodied by AI," said Huang, in a statement. "Nvidia's Omniverse digital twin operating system and Cosmos physical AI serve as the foundational libraries for digitalizing the world's physical industries."

New models and frameworks accelerate world building for physical AI

Creating 3D worlds for physical AI simulation requires three steps: world-building, labeling the world with physical attributes and making it photoreal. Nvidia offers generative AI models that accelerate each step. The USD Code and USD Search Nvidia NIM microservices are now generally available, letting developers use text prompts to generate or search for OpenUSD assets.

A new Nvidia Edify SimReady generative AI model unveiled today can automatically label existing 3D assets with attributes like physics or materials, enabling developers to process 1,000 3D objects in minutes instead of over 40 hours manually.

Nvidia Omniverse, paired with new Nvidia Cosmos world foundation models, creates a synthetic data multiplication engine -- letting developers easily generate massive amounts of controllable, photoreal synthetic data. Developers can compose 3D scenarios in Omniverse and render images or videos as outputs. These can then be used with text prompts to condition Cosmos models to generate countless synthetic virtual environments for physical AI training.

Nvidia Omniverse blueprints speed up industrial, robotic workflows

During the CES keynote, Nvidia also announced four new blueprints that make it easier for developers to build Universal Scene Description (OpenUSD)-based Omniverse digital twins for physical AI. The blueprints include:

New free Learn OpenUSD courses are also now available to help developers build OpenUSD-based worlds faster than ever.

Market leaders supercharge industrial AI using Nvidia Omniverse

Global leaders in software development and professional services are using Omniverse to develop new products and services that are poised to accelerate the next era of industrial AI.

Building on its adoption of Omniverse libraries in its Reality Digital Twin data center digital twin platform, Cadence, a leader in electronic systems design, announced further integration of Omniverse into Allegro, its leading electronic computer-aided design application used by the world's largest semiconductor companies.

Altair, a leader in computational intelligence, is adopting the Omniverse blueprint for real-time CAE digital twins for interactive computational fluid dynamics (CFD). Ansys is adopting Omniverse into Ansys Fluent, a leading CAE application. And Neural Concept is integrating Omniverse libraries into its next-generation software products, enabling real-time CFD and enhancing engineering workflows.

Accenture, a leading global professional services company, is using Mega to help German supply chain solutions leader KION by building next-generation autonomous warehouses and robotic fleets for their network of global warehousing and distribution customers.

AV toolchain provider Foretellix, a leader in data-driven autonomy development, is using the AV Simulation blueprint to enable full 3D sensor simulation for optimized AV testing and validation. Research organization MITRE is also deploying the blueprint, in collaboration with the University of Michigan's Mcity testing facility, to create an industry-wide AV validation platform.

Katana Studio is using the Omniverse spatial streaming workflow to create custom car configurators for Nissan and Volkswagen, allowing them to design and review car models in an immersive experience while improving the customer decision-making process.

Innoactive, an XR streaming platform for enterprises, leveraged the workflow to add platform support for spatial streaming to Apple Vision Pro. The solution enables Volkswagen Group to conduct design and engineering project reviews at human-eye resolution. Innoactive also collaborated with Syntegon, a provider of processing and packaging technology solutions for pharmaceutical production, to enable Syntegon's customers to walk through and review digital twins of custom installations before they are built.

[3]

Nvidia announces early access for Omniverse Sensor RTX for smarter autonomous machines

Nvidia announced early access for Omniverse Cloud Sensor RTX software to enable smarter autonomous machines with generative AI. Nvidia made the announcement during Nvidia CEO Jensen Huang's keynote at CES 2025.

Generative AI and foundation models let autonomous machines generalize beyond the operational design domains on which they've been trained. Using new AI techniques such as tokenization and large language and diffusion models, developers and researchers can now address longstanding hurdles to autonomy.

These larger models require massive amounts of diverse data for training, fine-tuning and validation. But collecting such data -- including from rare edge cases and potentially hazardous scenarios, like a pedestrian crossing in front of an autonomous vehicle (AV) at night or a human entering a welding robot work cell -- can be incredibly difficult and resource-intensive.

To help developers fill this gap, NVIDIA Omniverse Cloud Sensor RTX APIs enable physically accurate sensor simulation for generating datasets at scale. The application programming interfaces (APIs) are designed to support sensors commonly used for autonomy -- including cameras, radar and lidar -- and can integrate seamlessly into existing workflows to accelerate the development of autonomous vehicles and robots of every kind.

Omniverse Sensor RTX APIs are now available to select developers in early access. Organizations such as Accenture, Foretellix, MITRE and Mcity are integrating these APIs via domain-specific blueprints to provide end customers with the tools they need to deploy the next generation of industrial manufacturing robots and self-driving cars.

Powering Industrial AI With Omniverse Blueprints

In complex environments like factories and warehouses, robots must be orchestrated to safely and efficiently work alongside machinery and human workers. All those moving parts present a massive challenge when designing, testing or validating operations while avoiding disruptions.

Mega is an Omniverse Blueprint that offers enterprises a reference architecture of Nvidia accelerated computing, AI, NVIDIA Isaac and NVIDIA Omniverse technologies. Enterprises can use it to develop digital twins and test AI-powered robot brains that drive robots, cameras, equipment and more for handling enormous complexity and scale.

Integrating Omniverse Sensor RTX, the blueprint lets robotics developers simultaneously render sensor data from any type of intelligent machine in a factory for high-fidelity, large-scale sensor simulation. With the ability to test operations and workflows in simulation, manufacturers can save considerable time and investment, and improve efficiency in entirely new ways.

International supply chain solutions company KION Group and Accenture are using the Mega blueprint to build Omniverse digital twins that serve as virtual training and testing environments for industrial AI's robot brains, tapping into data from smart cameras, forklifts, robotic equipment and digital humans.

The robot brains perceive the simulated environment with physically accurate sensor data rendered by the Omniverse Sensor RTX APIs. They use this data to plan and act, with each action precisely tracked with Mega, alongside the state and position of all the assets in the digital twin. With these capabilities, developers can continuously build and test new layouts before they're implemented in the physical world.

Driving AV Development and Validation

Autonomous vehicles have been under development for over a decade, but barriers in acquiring the right training and validation data and slow iteration cycles have hindered large-scale deployment.

To address this need for sensor data, companies are harnessing the Nvidia Omniverse Blueprint for AV simulation, a reference workflow that enables physically accurate sensor simulation. The workflow uses Omniverse Sensor RTX APIs to render the camera, radar and lidar data necessary for AV development and validation.

AV toolchain provider Foretellix has integrated the blueprint into its Foretify AV development toolchain to transform object-level simulation into physically accurate sensor simulation. The Foretify toolchain can generate any number of testing scenarios simultaneously. By adding sensor simulation capabilities to these scenarios, Foretify can now enable developers to evaluate the completeness of their AV development, as well as train and test at the levels of fidelity and scale needed to achieve large-scale and safe deployment. In addition, Foretellix will use the newly announced Nvidia Cosmos platform to generate an even greater diversity of scenarios for verification and validation.

Nuro, an autonomous driving technology provider with one of the largest level 4 deployments in the U.S., is using the Foretify toolchain to train, test and validate its self-driving vehicles before deployment.

In addition, research organization MITRE is collaborating with the University of Michigan's Mcity testing facility to build a digital AV validation framework for regulatory use, including a digital twin of Mcity's 32-acre proving ground for autonomous vehicles. The project uses the AV simulation blueprint to render physically accurate sensor data at scale in the virtual environment, boosting training effectiveness.

The future of robotics and autonomy is coming into sharp focus, thanks to the power of high-fidelity sensor simulation. Learn more about these solutions at CES by visiting Accenture at Ballroom F and Foretellix booth 4016 in the West Hall.

[4]

Building Smarter Autonomous Machines: NVIDIA Announces Early Access for Omniverse Sensor RTX

Organizations including Accenture and Foretellix are accelerating the development of next-generation self-driving cars and robots with high-fidelity, scalable sensor simulation.

Generative AI and foundation models let autonomous machines generalize beyond the operational design domains on which they've been trained. Using new AI techniques such as tokenization and large language and diffusion models, developers and researchers can now address longstanding hurdles to autonomy.

These larger models require massive amounts of diverse data for training, fine-tuning and validation. But collecting such data -- including from rare edge cases and potentially hazardous scenarios, like a pedestrian crossing in front of an autonomous vehicle (AV) at night or a human entering a welding robot work cell -- can be incredibly difficult and resource-intensive.

To help developers fill this gap, NVIDIA Omniverse Cloud Sensor RTX APIs enable physically accurate sensor simulation for generating datasets at scale. The application programming interfaces (APIs) are designed to support sensors commonly used for autonomy -- including cameras, radar and lidar -- and can integrate seamlessly into existing workflows to accelerate the development of autonomous vehicles and robots of every kind.

Omniverse Sensor RTX APIs are now available to select developers in early access. Organizations such as Accenture, Foretellix, MITRE and Mcity are integrating these APIs via domain-specific blueprints to provide end customers with the tools they need to deploy the next generation of industrial manufacturing robots and self-driving cars.

Powering Industrial AI With Omniverse Blueprints

In complex environments like factories and warehouses, robots must be orchestrated to safely and efficiently work alongside machinery and human workers. All those moving parts present a massive challenge when designing, testing or validating operations while avoiding disruptions.

Mega is an Omniverse Blueprint that offers enterprises a reference architecture of NVIDIA accelerated computing, AI, NVIDIA Isaac and NVIDIA Omniverse technologies. Enterprises can use it to develop digital twins and test AI-powered robot brains that drive robots, cameras, equipment and more to handle enormous complexity and scale.

Integrating Omniverse Sensor RTX, the blueprint lets robotics developers simultaneously render sensor data from any type of intelligent machine in a factory for high-fidelity, large-scale sensor simulation. With the ability to test operations and workflows in simulation, manufacturers can save considerable time and investment, and improve efficiency in entirely new ways.

International supply chain solutions company KION Group and Accenture are using the Mega blueprint to build Omniverse digital twins that serve as virtual training and testing environments for industrial AI's robot brains, tapping into data from smart cameras, forklifts, robotic equipment and digital humans.

The robot brains perceive the simulated environment with physically accurate sensor data rendered by the Omniverse Sensor RTX APIs. They use this data to plan and act, with each action precisely tracked with Mega, alongside the state and position of all the assets in the digital twin. With these capabilities, developers can continuously build and test new layouts before they're implemented in the physical world.

Driving AV Development and Validation

Autonomous vehicles have been under development for over a decade, but barriers in acquiring the right training and validation data and slow iteration cycles have hindered large-scale deployment.

To address this need for sensor data, companies are harnessing the NVIDIA Omniverse Blueprint for AV simulation, a reference workflow that enables physically accurate sensor simulation. The workflow uses Omniverse Sensor RTX APIs to render the camera, radar and lidar data necessary for AV development and validation.

AV toolchain provider Foretellix has integrated the blueprint into its Foretify AV development toolchain to transform object-level simulation into physically accurate sensor simulation. The Foretify toolchain can generate any number of testing scenarios simultaneously. By adding sensor simulation capabilities to these scenarios, Foretify can now enable developers to evaluate the completeness of their AV development, as well as train and test at the levels of fidelity and scale needed to achieve large-scale and safe deployment. In addition, Foretellix will use the newly announced NVIDIA Cosmos platform to generate an even greater diversity of scenarios for verification and validation.

Nuro, an autonomous driving technology provider with one of the largest level 4 deployments in the U.S., is using the Foretify toolchain to train, test and validate its self-driving vehicles before deployment.

In addition, research organization MITRE is collaborating with the University of Michigan's Mcity testing facility to build a digital AV validation framework for regulatory use, including a digital twin of Mcity's 32-acre proving ground for autonomous vehicles. The project uses the AV simulation blueprint to render physically accurate sensor data at scale in the virtual environment, boosting training effectiveness.

The future of robotics and autonomy is coming into sharp focus, thanks to the power of high-fidelity sensor simulation. Learn more about these solutions at CES by visiting Accenture at Ballroom F at the Venetian and Foretellix booth 4016 in the West Hall of Las Vegas Convention Center.

Learn more about the latest in automotive and generative AI technologies by joining NVIDIA at CES.

NVIDIA announces new generative AI models and blueprints for Omniverse, expanding its integration into physical AI applications like robotics and autonomous vehicles. The company also introduces early access to Omniverse Cloud Sensor RTX for smarter autonomous machines.

NVIDIA Unveils Generative AI Models for Omniverse

NVIDIA has announced a significant expansion of its Omniverse platform with new generative AI models and blueprints, aimed at revolutionizing physical AI applications such as robotics, autonomous vehicles, and vision AI [1]. This development, revealed at CES 2025, marks a major step towards integrating virtual creations into real-world AI applications.

Expanding Omniverse Integration

The expansion includes the introduction of NVIDIA Cosmos world foundation models, which create a synthetic data multiplication engine. This allows developers to easily generate massive amounts of controllable, photoreal synthetic data for physical AI training [2]. The company also announced the general availability of USD Code and USD Search NVIDIA NIM microservices, enabling developers to use text prompts to generate or search for OpenUSD assets [1].

New Blueprints for Industrial and Robotic Workflows

NVIDIA introduced four new blueprints to simplify the development of Universal Scene Description (OpenUSD)-based Omniverse digital twins for physical AI [1]. These blueprints are designed to accelerate industrial and robotic workflows, making it easier for developers to create complex simulations and environments.

Omniverse Cloud Sensor RTX for Autonomous Machines

In a related announcement, NVIDIA revealed early access to Omniverse Cloud Sensor RTX software, aimed at enabling smarter autonomous machines with generative AI [3]. This technology addresses the challenge of collecting diverse data for training, fine-tuning, and validating autonomous systems, especially in rare or hazardous scenarios [4].

Industry Adoption and Collaboration

Several industry leaders are already integrating Omniverse into their products and services:

- Siemens announced the Teamcenter Digital Reality Viewer, powered by NVIDIA Omniverse libraries [1].
- Cadence is further integrating Omniverse into Allegro, its electronic computer-aided design application [2].
- Altair and Ansys are adopting Omniverse for real-time CAE digital twins and computational fluid dynamics [2].
- Accenture is using the Mega blueprint to help KION Group build next-generation autonomous warehouses [2].

Impact on Manufacturing and Logistics

Jensen Huang, NVIDIA's founder and CEO, emphasized the transformative potential of physical AI: "Physical AI will revolutionize the $50 trillion manufacturing and logistics industries. Everything that moves -- from cars and trucks to factories and warehouses -- will be robotic and embodied by AI" [1]. This vision underscores the significant impact NVIDIA's technologies are expected to have on various industries.

Advancing Autonomous Vehicle Development

The Omniverse Blueprint for AV simulation, which uses Omniverse Sensor RTX APIs, is being adopted by companies like Foretellix to enhance autonomous vehicle development and validation [4]. This technology enables the generation of physically accurate sensor data, crucial for training and testing autonomous vehicles at scale.

As these technologies continue to evolve, they promise to accelerate the development and deployment of advanced autonomous systems across multiple industries, potentially reshaping the future of manufacturing, logistics, and transportation.

Summarized by

Navi
