NVIDIA's Vera Rubin AI systems projected to account for 9.3% of global NAND demand in 2027

2 Sources

[1]

Oh no: NVIDIA's next-gen Vera Rubin AI systems to eat up MILLIONS of terabytes of SSDs

TL;DR: NVIDIA's next-gen Vera Rubin AI systems are projected to demand up to 115.2 million terabytes of SSD NAND in 2027, potentially causing a significant global NAND supply shortage. This surge, representing 9.3% of total NAND demand, may intensify SSD price increases amid ongoing DRAM and memory crises.

The only word I heard more than "AI" at CES 2026 was "DRAM" and its ongoing crisis, but now it could get worse with reports that NVIDIA's next-gen Vera Rubin AI systems will eat up MILLIONS of terabytes of SSD capacity in the years to come. That's just Vera Rubin, let alone Rubin Ultra, let alone NVIDIA's next-gen Feynman GPU architecture after that... but in a new X post by @Jukan, we're hearing from a Citi analysis of the subject.

Citi explained: "We estimate that approximately 1,152TB of additional SSD NAND will be required per Vera Rubin server system to support NVIDIA's ICMS operations. Accordingly, assuming Vera Rubin server shipments of 30,000 units in 2026 and 100,000 units in 2027, NAND demand driven by ICMS is projected to reach 34.6 million TB in 2026 and 115.2 million TB in 2027."

"This represents 2.8% of expected global NAND demand in 2026 and 9.3% in 2027, a meaningful scale that is likely to create significant upside potential for demand. As a result, with this structural demand increase factored in, the global NAND supply shortage is expected to intensify further."

NVIDIA could use up to 16TB of NAND per GPU, which across the 72 GPUs of a Vera Rubin NVL72 rack comes to 1,152TB of SSDs in a single AI server configuration. Citi estimates that Vera Rubin shipments could scale to 100,000 units in 2027, meaning that NVIDIA alone would require 115.2 million TB, or around 9.3% of projected global NAND demand for that year. I have heard many times from industry contacts that after the DRAM crisis will come the NAND crisis, and we'll see SSDs and M.2 SSDs skyrocket in price... sigh.

[2]

NVIDIA's Next Generation of AI Systems Could Gobble Up Millions of Terabytes of NAND SSDs, Potentially Worsening Storage Shortages

NVIDIA's next-gen Vera Rubin AI systems are projected to consume millions of terabytes in SSD capacity in the upcoming years, potentially triggering a NAND supply shock.

NVIDIA's New Storage Solution Could Alone Take Up a Massive Portion of the Global NAND Output

One of the biggest bottlenecks in agentic AI environments is that query processing generates a huge temporary memory log for context building, called the KV Cache, and that data is currently stored in HBM modules. However, given how rapidly data requirements within AI clusters are scaling up, onboard HBM cannot hold it all, which is why at CES 2026 NVIDIA announced that the BlueField-4 DPUs will be connected to a new storage solution called Inference Context Memory Storage (ICMS). While this will significantly boost data processing, it could trigger a situation similar to the DRAM shortages.

According to an analysis by Citi, NVIDIA could equip roughly 16 TB of NAND per GPU in a Vera Rubin rack, which comes to 1,152 TB in a single NVL72 configuration. Citi also estimates that Vera Rubin shipments could scale up to 100,000 units in 2027, which means that demand for NAND storage from NVIDIA alone could reach 115.2 million TB, or 9.3% of projected global NAND demand for that year. Vera Rubin, equipped with ICMS, could alone create a supply shock that the NAND industry has not yet factored in.

Given that NVIDIA has projected agentic AI to be the next primary focus of the applications layer, a sufficient KV Cache pool matters for upcoming server racks, which is why demand for ICMS is expected to increase. It is also important to factor in that the NAND industry is already experiencing shortages due to the ongoing data center buildout and the inference craze, and that NVIDIA now aims to acquire a significant portion of the total global NAND output.

The NAND industry could face a situation similar to what we are witnessing with DRAM, given that AI manufacturers aren't looking to halt their pursuit of superior compute capabilities. And for the average consumer, getting access to general-purpose SSDs and storage devices could become another nightmare.

News Source: Jukan

NVIDIA's next-generation Vera Rubin AI systems are set to consume massive amounts of storage, with Citi projecting demand of 115.2 million terabytes in 2027. That would be 9.3% of expected global NAND demand for the year and could trigger significant storage shortages and SSD price increases, mirroring the ongoing DRAM crisis that has already disrupted the memory market.

NVIDIA Vera Rubin Systems Set to Drive Massive NAND Demand

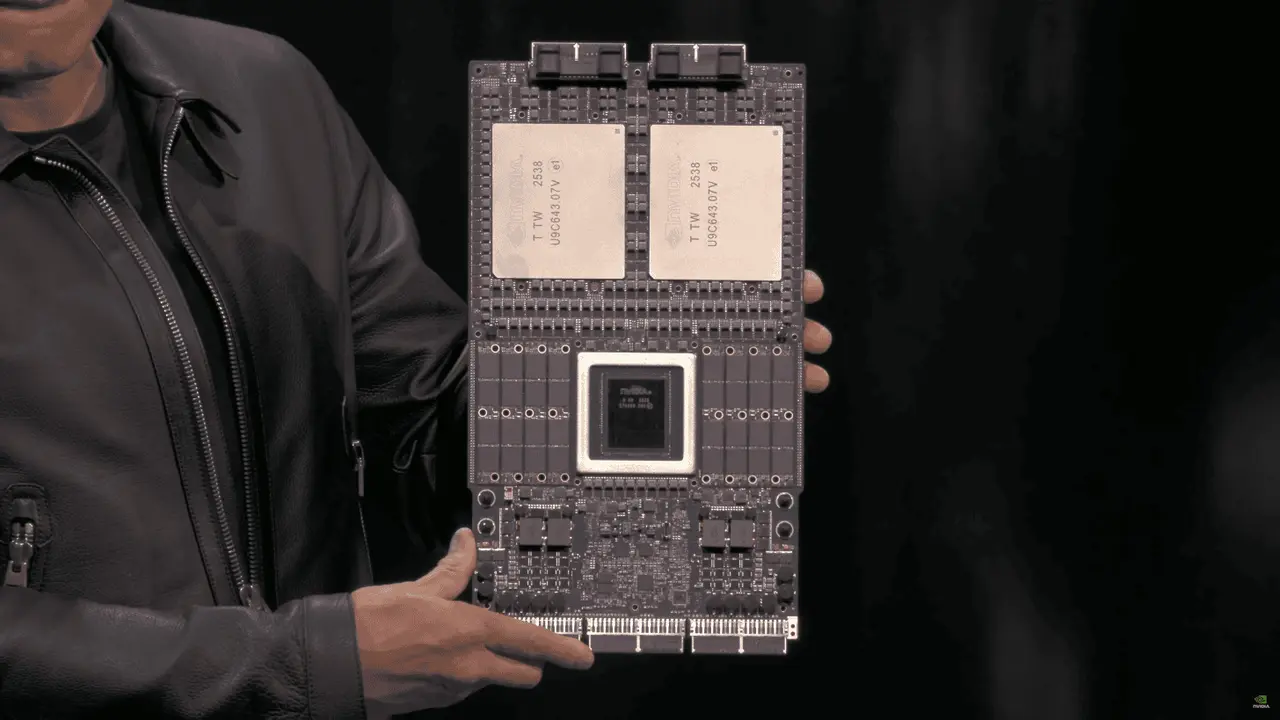

NVIDIA's next-generation Vera Rubin AI systems are poised to consume staggering amounts of storage capacity, potentially triggering a NAND supply shortage that could reshape the memory market. According to a Citi analysis, each Vera Rubin server system could require approximately 1,152TB of additional SSD NAND to support NVIDIA's Inference Context Memory Storage (ICMS) operations [1]. With projected shipments of 30,000 units in 2026 and 100,000 units in 2027, NAND demand driven by ICMS is expected to reach 34.6 million TB in 2026 and 115.2 million TB in 2027 [2]. This translates to 2.8% of expected global NAND demand in 2026 and 9.3% in 2027, a scale that Citi warns "is likely to create significant upside potential for demand" [1].

Source: Wccftech
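The Citi figures above can be re-derived with a few lines of arithmetic. The sketch below is only a sanity check on the quoted numbers: the function names are hypothetical, and the "implied global demand" is back-calculated from the rounded percentages, so it is a rough estimate and not a figure Citi published.

```python
# Figures quoted from the Citi estimate in the article.
TB_PER_SYSTEM = 1_152                       # additional SSD NAND per Vera Rubin system
SHIPMENTS = {2026: 30_000, 2027: 100_000}   # assumed server shipments per year
DEMAND_SHARE = {2026: 0.028, 2027: 0.093}   # ICMS share of global NAND demand


def icms_demand_tb(units, tb_per_system=TB_PER_SYSTEM):
    """NAND demand (TB) driven by ICMS for a given number of systems."""
    return units * tb_per_system


def implied_global_demand_tb(icms_tb, share):
    """Back out total NAND demand from the ICMS demand and its quoted share.

    Assumption: the share is ICMS demand divided by total demand. The shares
    are rounded to one decimal place, so the result is approximate.
    """
    return icms_tb / share


for year, units in SHIPMENTS.items():
    icms = icms_demand_tb(units)
    total = implied_global_demand_tb(icms, DEMAND_SHARE[year])
    print(f"{year}: ICMS {icms / 1e6:.2f}M TB, "
          f"implied global demand ~{total / 1e6:.0f}M TB")
```

The per-year totals match the article (34.56 million TB rounds to the quoted 34.6 million, and 115.2 million TB is exact). The back-calculated global demand lands near 1.2 billion TB in both years, which may simply reflect rounding in the quoted percentages.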

Understanding the Storage Architecture Behind the Demand

The surge in storage requirements stems from a fundamental shift in how agentic AI environments process data. Query processing in these systems generates massive temporary memory logs for context building, called the KV Cache, which are currently stored in HBM modules [2]. As data requirements within AI clusters scale rapidly, onboard HBM can no longer hold the necessary capacity. At CES 2026, NVIDIA announced that BlueField-4 DPUs would connect to a new storage solution called Inference Context Memory Storage (ICMS), designed to address this bottleneck [2]. Each Vera Rubin system could use approximately 16TB of NAND per GPU in a rack, totaling 1,152TB in a single NVL72 configuration [1]. While this significantly boosts data processing capabilities, it creates demand for NAND SSDs that the industry hasn't fully anticipated.

Source: TweakTown
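The per-rack figure follows directly from the per-GPU estimate. A minimal sketch, assuming the "72" in NVL72 is the GPU count that the 16TB estimate is multiplied by (consistent with 1,152 / 16 = 72); the names below are illustrative only.

```python
TB_PER_GPU = 16      # Citi's estimated NAND per GPU for ICMS
GPUS_PER_RACK = 72   # assumption: GPU count implied by the NVL72 name


def rack_nand_tb(tb_per_gpu=TB_PER_GPU, gpus=GPUS_PER_RACK):
    """Total ICMS NAND (TB) for one rack-scale system."""
    return tb_per_gpu * gpus


rack_tb = rack_nand_tb()
# Decimal units: 1 PB = 1,000 TB.
print(f"{rack_tb:,} TB per rack (~{rack_tb / 1000:.3f} PB)")
```

In other words, each NVL72 rack would carry a little over one petabyte of flash for context storage alone.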

Storage Shortages and Market Impact on the Horizon

The NAND supply shortage could intensify dramatically as this structural demand increase takes hold. The situation mirrors the ongoing DRAM crisis, with storage shortages potentially becoming the next major supply chain disruption [1]. The NAND industry already faces pressure from the ongoing data center buildout and the inference craze, and NVIDIA's acquisition of a significant portion of total global NAND output could create a supply shock that manufacturers haven't factored into their production forecasts [2]. For average consumers, general-purpose SSDs and storage devices could become increasingly difficult and expensive to buy. TweakTown's industry contacts expect a NAND crisis to follow the DRAM crisis, with SSDs, including M.2 drives, rising sharply in price [1]. The projections don't account for Rubin Ultra or NVIDIA's next-generation Feynman GPU architecture, suggesting that demand pressure could intensify beyond 2027. Given that NVIDIA has positioned agentic AI as the primary focus for future applications, the need for sufficient KV Cache pools in server systems means ICMS demand is expected to keep climbing, potentially creating long-term structural changes in the storage market [2].

Summarized by Navi
