NVIDIA Unveils Next-Gen AI Powerhouses: Rubin and Rubin Ultra GPUs with Vera CPUs

4 Sources

[1]

Nvidia announces new GPUs at GTC 2025, including Vera Rubin

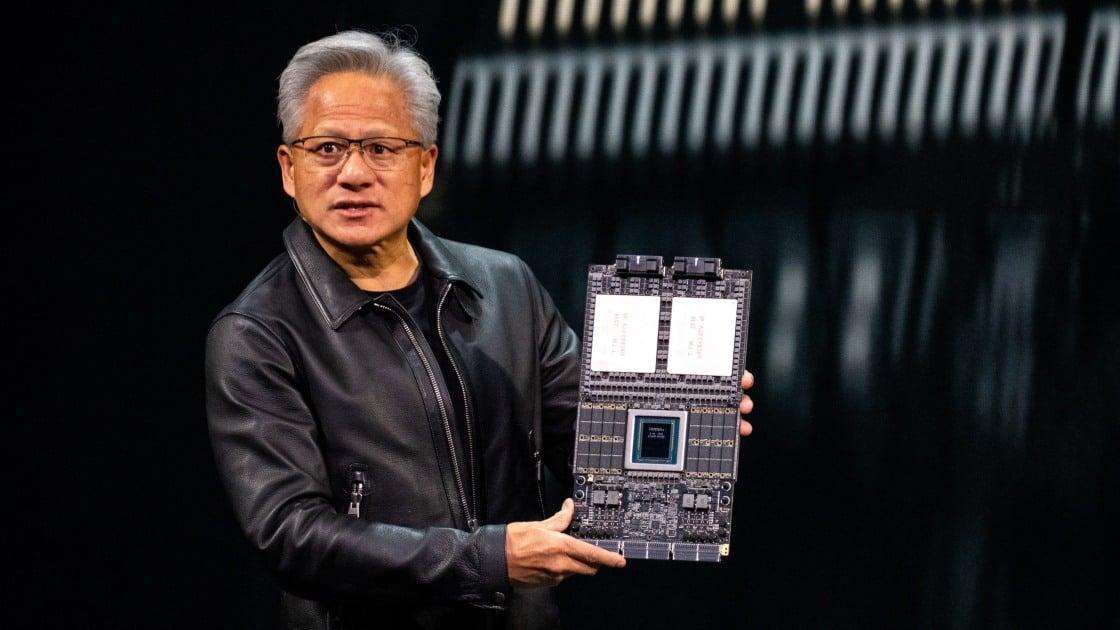

On stage at Nvidia GTC 2025 in San Jose, Jensen Huang announced a slew of new GPUs coming down the company's product pipeline in the next several months. Perhaps the most significant was Vera Rubin. Vera Rubin, which is set to be released in the second half of 2026, will pair HBM4-equipped GPUs with an 88-core CPU, with up to 75TB of fast memory per NVL144 rack. The chip delivers substantial performance uplifts compared to its predecessor, Blackwell, Nvidia claims, particularly on AI inferencing and training workloads. Rubin will be followed by Rubin Ultra in the second half of 2027.

[2]

Nvidia shows off Rubin Ultra with 600,000-Watt Kyber racks and infrastructure, coming in 2027

Nvidia showed off a mockup of its future Rubin Ultra GPUs with the NVL576 Kyber racks and infrastructure at GTC 2025. These are intended to ship in the second half of 2027, more than two years away, and yet, as an AI infrastructure company, Nvidia is already well on its way to planning how we get from where we are today to where it wants us to be in a few years. That future includes GPU servers that are so powerful that they consume up to 600kW per rack. The current Blackwell B200 server racks already use copious amounts of power, up to 120kW per rack (give or take). The first Vera Rubin solutions, slated for the second half of 2026, will use the same infrastructure as Grace Blackwell, but the next Rubin Ultra solutions intend to quadruple the number of GPUs per rack. Along with that, we could be looking at single rack solutions that consume up to 600kW, as Jensen Huang verified during a question-and-answer session, with full SuperPODs requiring multi-megawatts of power. Kyber is the name of the rack infrastructure that will be used for these platforms. There are no hard specifications yet for Rubin Ultra, but there are performance targets. As discussed during the keynote and with regard to Nvidia's data center GPU roadmap going beyond Blackwell Ultra B300, Rubin NVL144 racks will offer up to 3.6 EFLOPS of FP4 inference in the second half of next year, with Rubin Ultra NVL576 racks in 2027 delivering up to 15 EFLOPS of FP4. It's a huge jump in compute density, along with power density. Each Rubin Ultra rack will consist of four 'pods,' each of which will deliver more computational power than an entire Rubin NVL144 rack. Each pod will house 18 blades, and each blade will support up to eight Rubin Ultra GPUs, along with two Vera CPUs, presumably, though that wasn't explicitly stated. That's 144 GPUs per pod (18 × 8), and 576 per rack.
The NVLink units are getting upgrades as well and will each have three next-generation NVLink connections, whereas the current NVLink 1U rack-mount units only have two NVLink connections. Either prototypes or mockups of both the NVLink and Rubin Ultra blades were on display with the Kyber rack. No one has provided clear power numbers, but Jensen talked about data centers in the coming years potentially needing megawatts of power per server rack. That's not Kyber, but whatever comes after could very well push beyond 1MW per rack, with Kyber targeting around 600kW if it keeps with the current 1,000 to 1,400 watts per GPU of the Blackwell series.
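The rack arithmetic in this excerpt can be checked in a few lines. The sketch below uses only the pod, blade, GPU, and power figures quoted above; the variable names are illustrative, and the per-GPU power figure is a rough upper bound that assumes the entire 600kW budget goes to GPUs, which it would not in practice.

```python
# Figures quoted in the excerpt above; names are illustrative only.
PODS_PER_RACK = 4
BLADES_PER_POD = 18
GPUS_PER_BLADE = 8
RACK_POWER_W = 600_000  # "up to 600kW per rack"

gpus_per_pod = BLADES_PER_POD * GPUS_PER_BLADE
gpus_per_rack = PODS_PER_RACK * gpus_per_pod

# Assumption: every watt is attributed to GPUs. This ignores CPUs,
# NVLink switches, networking, and cooling, so it overstates the
# real per-GPU budget.
watts_per_gpu = RACK_POWER_W / gpus_per_rack

print(f"GPUs per pod:  {gpus_per_pod}")
print(f"GPUs per rack: {gpus_per_rack}")
print(f"Watts per GPU (upper bound): {watts_per_gpu:.0f}")
```

The result of roughly 1,040 watts per GPU sits at the low end of the Blackwell-era range mentioned in the excerpt, which is consistent with the 600kW target being an extrapolation of current per-GPU power.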

[3]

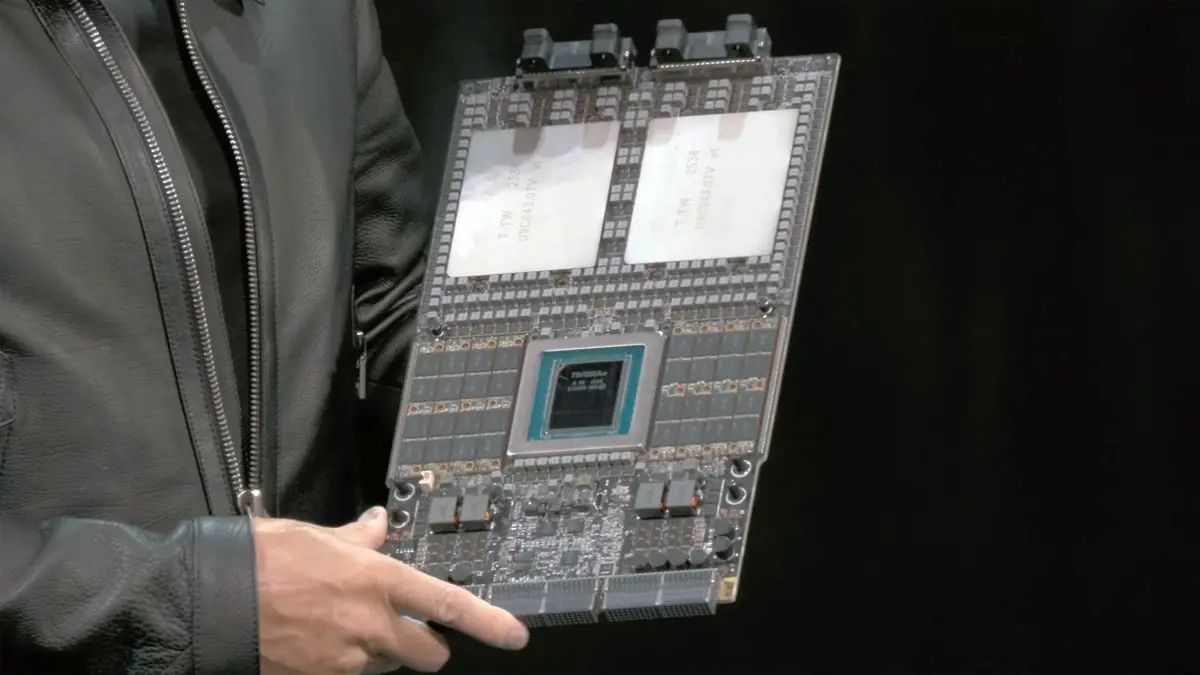

NVIDIA's next-gen Vera Rubin NVL576 AI server: 576 Rubin AI GPUs, 12672C/25344T CPU, new HBM4

TL;DR: NVIDIA's next-gen Vera Rubin NVL576 AI server platform has 1300 trillion transistors, 576 Rubin AI GPUs with 1TB of HBM4 memory, 12,672 Vera CPU cores with 25,344 Vera CPU threads, 1.5PB/sec NVLINK switch speed, and so much more. NVIDIA is hosting its GPU Technology Conference (GTC 2025) with an unveiling of its GB300 Blackwell Ultra, and a tease of its next-gen Rubin and Rubin Ultra AI GPUs, and its next-gen Vera CPUs... new ultra-fast AI platforms for AI computing deploying in 2026-2027. NVIDIA has increased performance by 50% with its new GB300 "Blackwell Ultra" AI systems over GB200 AI servers, as well as increased memory capacity to 288GB HBM3E on GB300 from 192GB of HBM3E on GB200. The new GB300 and current GB200 AI platforms are made into up to NVL72 solutions, but next-gen Rubin GPUs will scale up to a far larger NVL144 platform. The new NVIDIA Vera Rubin NVL144 platform will use two new chips, with the Rubin GPU using two reticle-sized chips with up to 50 PFLOPs of FP4 performance and 288GB of next-gen, ultra-fast HBM4 memory. Alongside these chips will be an 88-core Vera CPU with a custom Arm architecture, 176 threads in total, with up to 1.8TB/sec of NVLINK-C2C interconnect. NVIDIA will have a huge 3.3x increase in FP4 inference and FP8 training capabilities over its already-faster GB300 NVL72 AI server, with the new Vera Rubin NVL144 platform featuring up to 13TB/sec of memory bandwidth from its new HBM4 memory, 75TB of fast memory, which is a 60% uplift over GB300, and 2x the NVLINK and CX9 capabilities, which are rated at up to 260TB/sec and 28.8TB/sec, respectively. But, boy-oh-boy does the AI silicon fun not end there my friends. NVIDIA will be introducing a second Rubin AI platform in 2H 2027 with the introduction of Rubin Ultra that will scale up the NVL system from an already-higher 144 to a game-changing 576.
NVIDIA's new Rubin Ultra NVL576 AI server platform will use the same CPU architecture, but the Rubin Ultra GPUs will use 4 x reticle-sized chips with up to 100 PFLOPs of FP4 performance and a total of 1TB of next-gen HBM4 memory across 16 HBM sites. Ooooh, yeah. Now, let's move into just how much performance Rubin Ultra NVL576 has: 15 Exaflops of FP4 inference and 5 Exaflops of FP8 training capabilities, which is a 14x increase over GB300 NVL72, with 4.6PB/sec of HBM4 memory bandwidth and 365TB of fast memory. This is an 8x increase over GB300, along with 12x the NVLINK and 8x the CX9 capabilities, which are rated at up to 1.5PB/sec and 115.2TB/sec, respectively. Some of the primary features of the Vera Rubin NVL576 system include:

[4]

NVIDIA Rubin & Rubin Ultra With Next-Gen Vera CPUs Start Arriving Next Year: Up To 1 TB HBM4 Memory, 4-Reticle Sized GPUs, 100PF FP4 & 88 CPU Cores

NVIDIA has laid out the plans for its next-gen AI powerhouses, the Rubin & Rubin Ultra GPUs, along with Vera CPUs, taking the segment to new heights.

NVIDIA Rubin, Rubin Ultra GPUs & Next-Gen Vera CPUs Detailed - Superfast AI Platforms For AI Computing, Arriving in 2026-2027

This year, NVIDIA is upgrading Blackwell with its Blackwell Ultra platform, offering up to 288 GB of HBM3e memory, but next year, the Green Team is taking things to new heights with its brand-new CPU and GPU platforms, codenamed Vera and Rubin, respectively. At GTC, NVIDIA detailed the trio of its platforms launching in 2026 and 2027. Starting with the first platform, we have the Vera Rubin system which will scale the NVL72 solutions up to NVL144. These AI platforms will be arriving in the second half of 2026 and will be featured in Oberon racks with liquid cooling support. In terms of specifications, the NVIDIA Vera Rubin NVL144 platform will utilize two new chips. The Rubin GPU will make use of two reticle-sized chips, with up to 50 PFLOPs of FP4 performance and 288 GB of next-gen HBM4 memory. These chips will be equipped alongside an 88-core Vera CPU with a custom Arm architecture, 176 threads, and up to 1.8 TB/s of NVLINK-C2C interconnect. In terms of performance scaling, the NVIDIA Vera Rubin NVL144 platform will feature 3.6 Exaflops of FP4 inference and 1.2 Exaflops of FP8 training capabilities, a 3.3x increase over GB300 NVL72, 13 TB/s of HBM4 memory bandwidth with 75 TB of fast memory, a 60% uplift over GB300, and 2x the NVLINK and CX9 capabilities, rated at up to 260 TB/s and 28.8 TB/s, respectively. The second platform will be arriving in the second half of 2027 and will be called Rubin Ultra. This platform will scale the NVL system from 144 to 576. The architecture for the CPU remains the same, but the Rubin Ultra GPU will feature four reticle-sized chips, offering up to 100 PFLOPS of FP4 and a total HBM4e capacity of 1 TB spread across 16 HBM sites.
In terms of performance scaling, the NVIDIA Rubin Ultra NVL576 platform will feature 15 Exaflops of FP4 inference and 5 Exaflops of FP8 training capabilities, a 14x increase over GB300 NVL72, 4.6 PB/s of HBM4 memory bandwidth with 365 TB of fast memory, an 8x uplift over GB300, and 12x the NVLINK and 8x the CX9 capabilities, rated at up to 1.5 PB/s and 115.2 TB/s, respectively.
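The 12672C/25344T figure in the headline of source [3] can be cross-checked against the per-CPU numbers quoted here (88 cores, 176 threads). The sketch below is only a consistency check under stated assumptions: it treats the headline totals as whole-rack counts, and the layout cross-check relies on the two-CPUs-per-blade figure that source [2] describes as presumed rather than confirmed.

```python
# Per-CPU figures quoted in sources [3] and [4].
CORES_PER_CPU = 88
THREADS_PER_CPU = 176

# Headline totals from source [3], assumed here to be per-rack counts.
HEADLINE_CORES = 12_672
HEADLINE_THREADS = 25_344

cpus_from_cores = HEADLINE_CORES // CORES_PER_CPU
cpus_from_threads = HEADLINE_THREADS // THREADS_PER_CPU

# Cross-check against the Kyber layout in source [2]:
# 4 pods x 18 blades x 2 CPUs per blade (the last figure is presumed).
cpus_from_layout = 4 * 18 * 2

print(cpus_from_cores, cpus_from_threads, cpus_from_layout)
```

All three values come out to the same CPU count, so the headline core and thread totals line up with the presumed two Vera CPUs per blade.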

NVIDIA announces its upcoming Rubin and Rubin Ultra GPU platforms, along with Vera CPUs, set to revolutionize AI computing in 2026-2027 with unprecedented performance and memory capabilities.

NVIDIA's Next-Generation AI Platforms

NVIDIA has unveiled its roadmap for next-generation AI computing platforms at GTC 2025, showcasing the upcoming Rubin and Rubin Ultra GPU architectures alongside new Vera CPUs. These announcements signal a significant leap in AI processing capabilities, scheduled for deployment in 2026 and 2027 [1][2][3][4].

Vera Rubin: The First Step

Set for release in the second half of 2026, the Vera Rubin platform marks NVIDIA's initial foray into this new generation of AI hardware [1][4]:

- GPU: Two reticle-sized chips delivering up to 50 PFLOPs of FP4 performance

- Memory: 288GB of next-gen HBM4 memory

- CPU: 88-core Vera CPU with custom Arm architecture, featuring 176 threads

- Interconnect: Up to 1.8TB/sec NVLINK-C2C

The Vera Rubin NVL144 platform boasts impressive performance metrics:

- 3.6 Exaflops of FP4 inference and 1.2 Exaflops of FP8 training capabilities

- 13TB/sec of HBM4 memory bandwidth with 75TB of fast memory

- NVLINK and CX9 capabilities rated at 260TB/sec and 28.8TB/sec, respectively

Rubin Ultra: Pushing the Boundaries

Following Vera Rubin, NVIDIA plans to launch the even more powerful Rubin Ultra in the second half of 2027 [2][3][4]:

- GPU: Four reticle-sized chips offering up to 100 PFLOPs of FP4 performance

- Memory: 1TB of HBM4 memory across 16 HBM sites

- Scale: NVL system expanded from 144 to 576

The Rubin Ultra NVL576 platform promises unprecedented performance:

- 15 Exaflops of FP4 inference and 5 Exaflops of FP8 training capabilities

- 4.6PB/sec of HBM4 memory bandwidth with 365TB of fast memory

- NVLINK and CX9 capabilities increased to 1.5PB/sec and 115.2TB/sec, respectively
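To see how large the step from Vera Rubin NVL144 to Rubin Ultra NVL576 is, the quoted rack-level figures can be divided directly. This is a rough sketch: the dictionary keys are illustrative names, 1.5PB/sec is converted to 1,500TB/sec on the assumption of decimal units, and memory bandwidth is left out because the text does not make clear that the 13TB/sec and 4.6PB/sec figures are measured at the same scope.

```python
# Rack-level figures quoted in the two bullet lists above.
rubin_nvl144 = {
    "fp4_inference_ef": 3.6,
    "fp8_training_ef": 1.2,
    "fast_memory_tb": 75,
    "nvlink_tb_per_s": 260,
    "cx9_tb_per_s": 28.8,
}
rubin_ultra_nvl576 = {
    "fp4_inference_ef": 15,
    "fp8_training_ef": 5,
    "fast_memory_tb": 365,
    "nvlink_tb_per_s": 1500,  # 1.5 PB/s, assuming 1 PB = 1000 TB
    "cx9_tb_per_s": 115.2,
}

# Generation-over-generation ratio for each metric.
ratios = {
    key: round(rubin_ultra_nvl576[key] / rubin_nvl144[key], 2)
    for key in rubin_nvl144
}

for key, ratio in ratios.items():
    print(f"{key}: {ratio}x")
```

On these numbers, compute and fast memory grow somewhat more than the 4x increase in GPU count, while NVLINK throughput grows faster still.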

Infrastructure and Power Requirements

To support these advanced systems, NVIDIA is developing new infrastructure solutions [2]:

- Kyber: A new rack infrastructure designed for Rubin Ultra

- Power consumption: Up to 600kW per rack for Rubin Ultra systems

- Cooling: Liquid cooling support in the Oberon racks used for Vera Rubin NVL144

Impact on AI Computing

These announcements represent a significant advancement in AI computing capabilities:

- Vera Rubin NVL144 offers a 3.3x increase in FP4 inference and FP8 training over the GB300 NVL72 AI server [3]

- Rubin Ultra NVL576 provides a 14x increase in the same metrics compared to GB300 NVL72 [3][4]
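The two multipliers can be sanity-checked by working backwards to the GB300 NVL72 baseline they imply. The sketch below divides each platform's quoted exaflops by its quoted speedup; the baseline values are derived for illustration only and are not figures stated by NVIDIA in this article.

```python
# Quoted rack-level performance (exaflops) and speedups over GB300 NVL72.
RUBIN_FP4_EF, RUBIN_FP8_EF, RUBIN_SPEEDUP = 3.6, 1.2, 3.3
ULTRA_FP4_EF, ULTRA_FP8_EF, ULTRA_SPEEDUP = 15.0, 5.0, 14.0

# Implied GB300 NVL72 baseline, derived two independent ways.
baseline_fp4_from_rubin = RUBIN_FP4_EF / RUBIN_SPEEDUP
baseline_fp4_from_ultra = ULTRA_FP4_EF / ULTRA_SPEEDUP
baseline_fp8_from_rubin = RUBIN_FP8_EF / RUBIN_SPEEDUP
baseline_fp8_from_ultra = ULTRA_FP8_EF / ULTRA_SPEEDUP

print(f"Implied FP4 baseline: {baseline_fp4_from_rubin:.2f} vs "
      f"{baseline_fp4_from_ultra:.2f} EF")
print(f"Implied FP8 baseline: {baseline_fp8_from_rubin:.2f} vs "
      f"{baseline_fp8_from_ultra:.2f} EF")
```

Both routes land on roughly 1.1 exaflops of FP4 and 0.36 exaflops of FP8 for the baseline, so the 3.3x and 14x claims are mutually consistent to within rounding.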

NVIDIA's roadmap demonstrates the company's commitment to pushing the boundaries of AI computing, with each generation offering substantial improvements in performance, memory capacity, and interconnect speeds. These advancements are poised to enable more complex and powerful AI models, potentially revolutionizing various fields that rely on high-performance computing for AI applications.

Summarized by Navi
