Cloudflare Accuses Perplexity AI of Stealthy Web Scraping, Sparking Debate on AI Crawlers

29 Sources

[1]

AI site Perplexity uses "stealth tactics" to flout no-crawl edicts, Cloudflare says

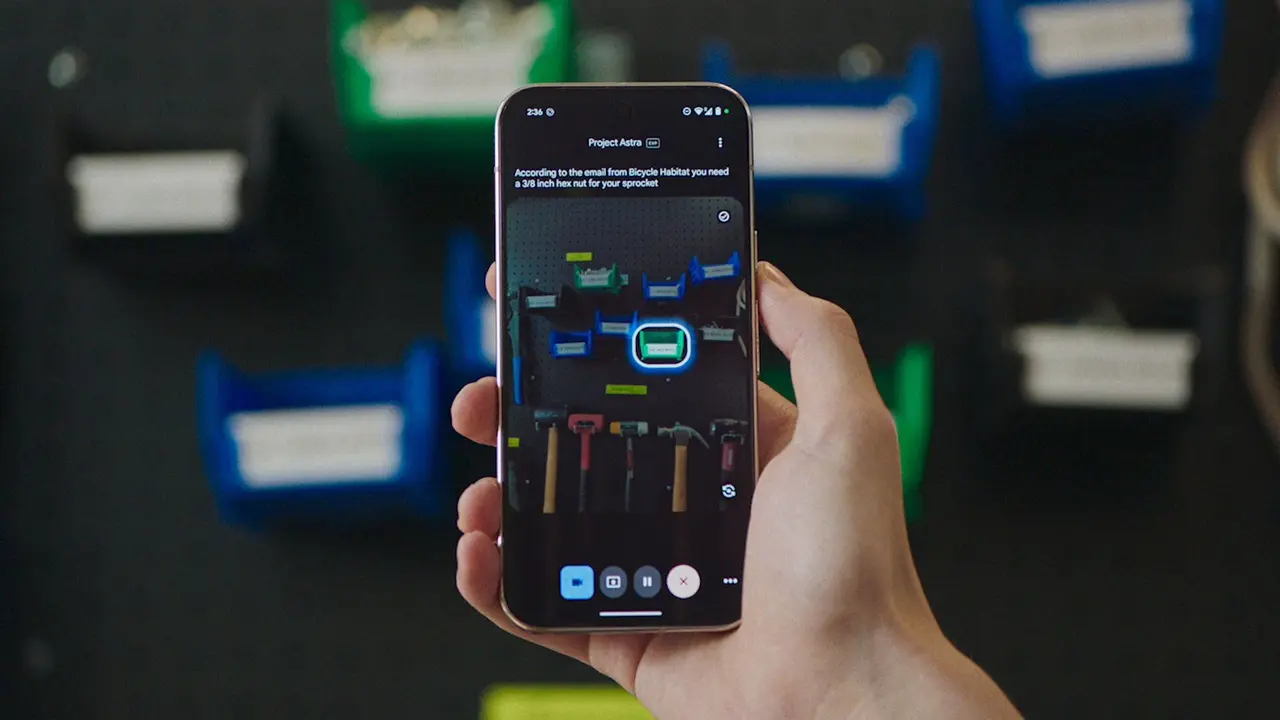

AI search engine Perplexity is using stealth bots and other tactics to evade websites' no-crawl directives, an allegation that if true violates Internet norms that have been in place for more than three decades, network security and optimization service Cloudflare said Monday. In a blog post, Cloudflare researchers said the company received complaints from customers who had disallowed Perplexity scraping bots by implementing settings in their sites' robots.txt files and through Web application firewalls that blocked the declared Perplexity crawlers. Despite those steps, Cloudflare said, Perplexity continued to access the sites' content. The researchers said they then set out to test it for themselves and found that when known Perplexity crawlers encountered blocks from robots.txt files or firewall rules, Perplexity then searched the sites using a stealth bot that followed a range of tactics to mask its activity. "This undeclared crawler utilized multiple IPs not listed in Perplexity's official IP range, and would rotate through these IPs in response to the restrictive robots.txt policy and block from Cloudflare," the researchers wrote. "In addition to rotating IPs, we observed requests coming from different ASNs in attempts to further evade website blocks. This activity was observed across tens of thousands of domains and millions of requests per day." The researchers provided a diagram to illustrate the flow of the technique they allege Perplexity used. If true, the evasion flouts Internet norms in place for more than three decades. In 1994, engineer Martijn Koster proposed the Robots Exclusion Protocol, which provided a machine-readable format for informing crawlers they weren't permitted on a given site. Sites that didn't want their content indexed installed the simple robots.txt file at the top of their homepage.
The standard, which has been widely observed and endorsed ever since, formally became a standard under the Internet Engineering Task Force in 2022. Cloudflare isn't the first to say that Perplexity violates the spirit if not the letter of the norm. Last year, Reddit CEO Steve Huffman told the Verge that stopping Perplexity -- and two other AI engines from Microsoft and Anthropic -- was "a real pain in the ass." Huffman went on to say: "We've had Microsoft, Anthropic, and Perplexity act as though all of the content on the internet is free for them to use. That's their real position." Perplexity has faced allegations from several other publishers that it plagiarized their content. Forbes, for instance, accused Perplexity of "cynical theft" after publishing a post that was "extremely similar to Forbes' proprietary article" posted a day earlier. Ars Technica sister publication Wired has leveled similar claims. It cited what it said were suspicious traffic patterns from IP addresses, likely linked to Perplexity, that were ignoring robots.txt exclusions. Perplexity was also found to have manipulated its crawling bots' ID string to bypass website blocks. The Cloudflare researchers went on to say that in response to their findings, the company is taking actions to prevent crawlers from accessing sites that use its content-delivery service. "There are clear preferences that crawlers should be transparent, serve a clear purpose, perform a specific activity, and, most importantly, follow website directives and preferences," they wrote. "Based on Perplexity's observed behavior, which is incompatible with those preferences, we have de-listed them as a verified bot and added heuristics to our managed rules that block this stealth crawling." Perplexity representatives didn't respond to an email asking if the allegations are true.
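
To make the robots.txt mechanism described above concrete, here is a minimal sketch of how a compliant crawler might consult the file before fetching a page, using Python's standard `urllib.robotparser`. The robots.txt content, the `example.com` URL, and the browser-style user-agent string are illustrative assumptions, not taken from any real site or from Cloudflare's test setup.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: disallow the two crawler names Perplexity declares,
# and allow every other client.
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /

User-agent: Perplexity-User
Disallow: /

User-agent: *
Allow: /
"""

# Illustrative browser-style user agent (an assumption for this sketch).
CHROME_LIKE_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
)


def may_fetch(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots.txt permits this user agent to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/article"
    print("PerplexityBot allowed:", may_fetch("PerplexityBot", url))
    print("Browser-style agent allowed:", may_fetch(CHROME_LIKE_UA, url))
```

The check runs entirely on the crawler's side, which is why the protocol depends on voluntary compliance: a client that skips the check, or presents a different user agent, is not stopped by robots.txt alone.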

[2]

Perplexity accused of scraping websites that explicitly blocked AI scraping | TechCrunch

AI startup Perplexity is crawling and scraping content from websites that have explicitly indicated they don't want to be scraped, according to internet infrastructure provider Cloudflare. On Monday, Cloudflare published research saying it observed the AI startup ignore blocks and hide its crawling and scraping activities. The network infrastructure giant accused Perplexity of obscuring its identity when trying to scrape web pages "in an attempt to circumvent the website's preferences," Cloudflare's researchers wrote. AI products like those offered by Perplexity rely on gobbling up large amounts of data from the internet, and AI startups have long scraped text, images, and videos from the internet, many times without permission, to make their products work. In recent times, websites have tried to fight back by using the web standard robots.txt file, which tells search engines and AI companies which pages can be indexed and which shouldn't, efforts that have seen mixed results so far. Perplexity appears to be willingly circumventing these blocks by changing its bots' "user agent," meaning a signal that identifies a website visitor by their device and version type; as well as changing their autonomous system networks, or ASN, essentially a number that identifies large networks on the internet, according to Cloudflare. "This activity was observed across tens of thousands of domains and millions of requests per day. We were able to fingerprint this crawler using a combination of machine learning and network signals," read Cloudflare's post. Perplexity spokesperson Jesse Dwyer dismissed Cloudflare's blog post as a "sales pitch," adding in an email to TechCrunch that the screenshots in the post "show that no content was accessed." In a follow-up email, Dwyer claimed the bot named in the Cloudflare blog "isn't even ours."
Cloudflare said it first noticed the behavior after its customers complained that Perplexity was crawling and scraping their sites, even after they added rules on their Robots file and for specifically blocking Perplexity's known bots. Cloudflare said it then performed tests to check and confirmed that Perplexity was circumventing these blocks. "We observed that Perplexity uses not only their declared user-agent, but also a generic browser intended to impersonate Google Chrome on macOS when their declared crawler was blocked," according to Cloudflare. The company also said that it has de-listed Perplexity's bots from its verified list and added new techniques to block them. Cloudflare has recently taken a public stance against AI crawlers. Last month, Cloudflare announced the launch of a marketplace allowing website owners and publishers to charge AI scrapers who visit their sites. Cloudflare's chief executive Matthew Prince sounded the alarm at the time, saying AI is breaking the business model of the internet, particularly publishers. Last year, Cloudflare also launched a free tool to prevent bots from scraping websites to train AI. This is not the first time Perplexity is accused of scraping without authorization. Last year, news outlets, such as Wired, alleged Perplexity was plagiarizing their content. Weeks later, Perplexity's CEO Aravind Srinivas was unable to immediately answer when asked to provide the company's definition of plagiarism during an interview with TechCrunch's Devin Coldewey at the Disrupt 2024 conference.
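
The user-agent behavior described above can be illustrated with a short sketch. It assumes a hypothetical server-side filter that blocks requests whose User-Agent header names one of the declared crawlers; the function name and both header strings are assumptions for illustration, not Cloudflare's rules or captured traffic.

```python
# Tokens for the two crawler names Perplexity declares, lowercased for matching.
DECLARED_CRAWLER_TOKENS = ("perplexitybot", "perplexity-user")


def is_declared_crawler(user_agent: str) -> bool:
    """Return True if the User-Agent header names a declared crawler."""
    ua = user_agent.lower()
    return any(token in ua for token in DECLARED_CRAWLER_TOKENS)


# Illustrative header values (assumptions for this sketch).
DECLARED_UA = "Mozilla/5.0 (compatible; PerplexityBot/1.0)"
BROWSER_LIKE_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
)

if __name__ == "__main__":
    for ua in (DECLARED_UA, BROWSER_LIKE_UA):
        action = "block" if is_declared_crawler(ua) else "allow"
        print(f"{action}: {ua[:45]}")
```

Because the header is supplied by the client, a filter like this only stops bots that identify themselves honestly. That limitation is presumably why Cloudflare says it relied on machine learning and network signals, rather than the header alone, to fingerprint the undeclared crawler.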

[3]

Some people are defending Perplexity after Cloudflare 'named and shamed' it | TechCrunch

When Cloudflare accused AI search engine Perplexity of stealthily scraping websites on Monday, while ignoring a site's specific methods to block it, this wasn't a clear-cut case of an AI web crawler gone wild. Many people came to Perplexity's defense. They argued that Perplexity accessing sites in defiance of the website owner's wishes, while controversial, is acceptable. And this is a controversy that will certainly grow as AI agents flood the internet: Should an agent accessing a website on behalf of its user be treated like a bot? Or like a human making the same request? Cloudflare is known for providing anti-bot crawling and other web security services to millions of websites. Essentially, Cloudflare's test case involved setting up a new website with a new domain that had never been crawled by any bot, setting up a robots.txt file that specifically blocked Perplexity's known AI crawling bots, and then asking Perplexity about the website's content. And Perplexity answered the question. Cloudflare researchers found the AI search engine used "a generic browser intended to impersonate Google Chrome on macOS" when its web crawler itself was blocked. Cloudflare CEO Matthew Prince posted the research on X, writing, "Some supposedly 'reputable' AI companies act more like North Korean hackers. Time to name, shame, and hard block them." But many people disagreed with Prince's assessment that this was actual bad behavior. Those defending Perplexity on sites like X and Hacker News pointed out that what Cloudflare seemed to document was the AI accessing a specific public website when its user asked about that specific website. "If I as a human request a website, then I should be shown the content," one person on Hacker News wrote, adding, "why would the LLM accessing the website on my behalf be in a different legal category as my Firefox web browser?" 
A Perplexity spokesperson previously denied to TechCrunch that the bots were the company's and called Cloudflare's blog post a sales pitch for Cloudflare. Then on Tuesday, Perplexity published a blog in its defense (and generally attacking Cloudflare), claiming the behavior was from a third-party service it uses occasionally. But the crux of Perplexity's post made a similar appeal as its online defenders did. "The difference between automated crawling and user-driven fetching isn't just technical -- it's about who gets to access information on the open web," the post said. "This controversy reveals that Cloudflare's systems are fundamentally inadequate for distinguishing between legitimate AI assistants and actual threats." Perplexity's accusations aren't exactly fair, either. One argument that Prince and Cloudflare used for calling out Perplexity's methods was that OpenAI doesn't behave in the same way. "OpenAI is an example of a leading AI company that follows these best practices. They respect robots.txt and do not try to evade either a robots.txt directive or a network level block. And ChatGPT Agent is signing http requests using the newly proposed open standard Web Bot Auth," Prince wrote in his post. Web Bot Auth is a Cloudflare-supported standard being developed by the Internet Engineering Task Force that hopes to create a cryptographic method for identifying AI agent web requests. The debate comes as bot activity reshapes the internet. As TechCrunch has previously reported, bots seeking to scrape massive amounts of content to train AI models have become a menace, especially to smaller sites. For the first time in the internet's history, bot activity is currently outstripping human activity online, with AI traffic accounting for over 50%, according to Imperva's Bad Bot report released last month. Most of that activity is coming from LLMs. But the report also found that malicious bots now make up 37% of all internet traffic.
That's activity that includes everything from persistent scraping to unauthorized login attempts. Until LLMs, the internet generally accepted that websites could and should block most bot activity given how often it was malicious by using CAPTCHAs and other services (such as Cloudflare). Websites also had a clear incentive to work with specific good actors, such as Googlebot, guiding it on what not to index through robots.txt. Google indexed the internet, which sent traffic to sites. Now, LLMs are eating an increasing amount of that traffic. Gartner predicts that search engine volume will drop by 25% by 2026. Right now humans tend to click website links from LLMs at the point they are most valuable to the website, which is when they are ready to conduct a transaction. But if humans adopt agents as the tech industry predicts they will -- to arrange our travel, book our dinner reservations, and shop for us -- would websites hurt their business interests by blocking them? The debate on X captured the dilemma perfectly: "I WANT perplexity to visit any public content on my behalf when I give it a request/task!" wrote one person in response to Cloudflare calling Perplexity out. "What if the site owners don't want it? they just want you [to] directly visit the home, see their stuff" argued another, pointing out that the site owner who created the content wants the traffic and potential ad revenue, not to let Perplexity take it. "This is why I can't see 'agentic browsing' really working -- much harder problem than people think. Most website owners will just block," a third predicted.

[4]

Perplexity says Cloudflare's accusations of 'stealth' AI scraping are based on embarrassing errors

Cloudflare now offers services to block aggressive AI crawlers. Cloudflare, a leading content delivery network (CDN) company, has accused the AI startup Perplexity of evading websites' "no crawl" directives by stealthily deploying web crawlers to scrape content from sites that have explicitly blocked its official bots. If that sounds familiar, you've heard these accusations before. Last year, WIRED and Forbes both accused Perplexity of doing the same thing to their sites. According to Cloudflare, when Perplexity's web crawler encounters a robots.txt file, which sites use to block their content from being crawled, Perplexity pretends to be an ordinary Chrome web browser on a Mac. This enables it to bypass the bot barriers. Cloudflare started investigating when it received complaints from customers who had "both disallowed Perplexity crawling activity in their robots.txt files and also created WAF [Web Application Firewall] rules to specifically block both of Perplexity's declared crawlers: PerplexityBot and Perplexity-User." The customers said their content still ended up in Perplexity, even after they had blocked it. The CDN then set up new test domains, explicitly prohibiting all automated access in its robots.txt files and through specific WAF rules that blocked crawling from Perplexity's acknowledged crawlers. Cloudflare found that Perplexity would use multiple IP addresses not listed in its official IP range and rotate through these IPs to sneak into the sites' content and records. "In addition to rotating IPs, we observed requests coming from different Autonomous System Numbers (ASNs) to evade website blocks," Cloudflare said. "This activity was observed across tens of thousands of domains and millions of requests per day." The result?
Cloudflare said it observed "Perplexity not only accessed such content but was able to provide detailed answers about it when queried by users." Moving forward, Cloudflare has claimed its bot management system can spot and block Perplexity's hidden User Agent. Any bot management customer who has an existing block rule in place is already protected. If you don't want to block such traffic on the grounds that it might be from real users, you can set up rules to challenge requests. This allows real humans to proceed. Customers with existing challenge rules are already protected. Finally, Cloudflare has added signature matches for the stealth crawler to its managed rule, which blocks AI crawling activity. This rule is available to all Cloudflare customers, including free users. Cloudflare noted that OpenAI does obey the robots.txt restrictions and doesn't try to break into websites. That said, Ziff Davis, ZDNET's parent company, filed an April 2025 lawsuit against OpenAI, alleging it infringed copyrights in training and operating its AI systems. Cloudflare has recently started offering its customers the option to automatically block all AI crawlers. To complement the move to block AI crawlers, Cloudflare has also launched its Pay Per Crawl program, enabling publishers to set rates for AI companies that want to scrape their content. This follows numerous deals in which media businesses are permitting AI companies to legally use their content to train their large language models (LLMs). Examples include The New York Times with Amazon, The Washington Post with OpenAI, and Perplexity with Gannett Publishing. In the meantime, Perplexity appears to continue to break the rules in its hunt for content. ZDNET has asked Perplexity about Cloudflare's claims, but the company has not responded.
Since then, Perplexity has publicly and loudly announced that Cloudflare has it all wrong. In a blog post, Perplexity claims: "This controversy reveals that Cloudflare's systems are fundamentally inadequate for distinguishing between legitimate AI assistants and actual threats. If you can't tell a helpful digital assistant from a malicious scraper, then you probably shouldn't be making decisions about what constitutes legitimate web traffic." Those are fighting words! Further, Perplexity states, "Technical errors in Cloudflare's analysis aren't just embarrassing -- they're disqualifying. When you misattribute millions of requests, publish completely inaccurate technical diagrams, and demonstrate a fundamental misunderstanding of how modern AI assistants work, you've forfeited any claim to expertise in this space." This fight is on. Stay tuned for what's next in this battle between an internet giant and an AI powerhouse.

[5]

Perplexity is sneaking onto websites to scrape blocked content, says Cloudflare

Cloudflare now offers services to block aggressive AI crawlers. Cloudflare, a leading content delivery network (CDN) company, has accused the AI startup Perplexity of evading websites' "no crawl" directives by stealthily deploying web crawlers to scrape content from sites that have explicitly blocked its official bots. If that sounds familiar, you've heard these accusations before. Last year, WIRED and Forbes both accused Perplexity of doing the same thing to their sites. According to Cloudflare, when Perplexity's web crawler encountered a robots.txt file, which sites use to block their content from being crawled, Perplexity pretended to be an ordinary Chrome web browser on a Mac. This enabled it to bypass the bot barriers. Cloudflare started investigating when it received complaints from customers who had "both disallowed Perplexity crawling activity in their robots.txt files and also created WAF [Web Application Firewall] rules to specifically block both of Perplexity's declared crawlers: PerplexityBot and Perplexity-User." The customers said their content still ended up in Perplexity, even after they had blocked it. The CDN then set up new test domains, explicitly prohibiting all automated access both in its robots.txt files and through specific WAF rules that blocked crawling from Perplexity's acknowledged crawlers. Cloudflare found that Perplexity would use multiple IP addresses not listed in Perplexity's official IP range and would rotate through these IPs to sneak into the sites' content and record. "In addition to rotating IPs, we observed requests coming from different Autonomous System Numbers (ASNs) to evade website blocks," Cloudflare said. "This activity was observed across tens of thousands of domains and millions of requests per day." The result?
Cloudflare said it observed "Perplexity not only accessed such content but was able to provide detailed answers about it when queried by users." Moving forward, Cloudflare has claimed its bot management system can spot and block Perplexity's hidden User Agent. Any bot management customer who has an existing block rule in place is already protected. If you don't want to block such traffic on the grounds that it might be from real users, you can set up rules to challenge requests. This allows real humans to proceed. Customers with existing challenge rules are already protected. Finally, Cloudflare has added signature matches for the stealth crawler to its managed rule, which blocks AI crawling activity. This rule is available to all Cloudflare customers, including free users. Cloudflare noted that OpenAI does obey the robots.txt restrictions and doesn't try to break into websites. That said, Ziff Davis, ZDNET's parent company, filed an April 2025 lawsuit against OpenAI, alleging it infringed copyrights in training and operating its AI systems. Cloudflare has recently started offering its customers the option to automatically block all AI crawlers. To complement the move to block AI crawlers, Cloudflare has also launched its "Pay Per Crawl" program, enabling publishers to set rates for AI companies that want to scrape their content. This follows numerous deals in which media businesses are permitting AI companies to legally use their content to train their large language models (LLMs). Examples include The New York Times with Amazon, The Washington Post with OpenAI, and Perplexity with Gannett Publishing. In the meantime, Perplexity appears to continue to break the rules in its hunt for content. ZDNET has asked Perplexity about Cloudflare's claims, but the company has not responded.
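
The point about requests arriving from addresses outside a crawler's official IP range can be sketched with Python's standard `ipaddress` module. The networks below are reserved documentation ranges standing in for a published crawler range; they and the function name are assumptions for illustration, not Perplexity's actual addresses or Cloudflare's detection logic.

```python
from ipaddress import ip_address, ip_network

# Hypothetical "official" crawler ranges. These are RFC 5737 documentation
# blocks used as placeholders, not real crawler addresses.
DECLARED_CRAWLER_NETWORKS = [
    ip_network("192.0.2.0/24"),
    ip_network("198.51.100.0/24"),
]


def is_declared_source(remote_ip: str) -> bool:
    """Return True if the request came from one of the published ranges."""
    addr = ip_address(remote_ip)
    return any(addr in network for network in DECLARED_CRAWLER_NETWORKS)


if __name__ == "__main__":
    for ip in ("192.0.2.17", "203.0.113.5"):
        label = "declared range" if is_declared_source(ip) else "outside declared ranges"
        print(f"{ip}: {label}")
```

A check like this can confirm that a request claiming to be a declared crawler really comes from the operator's published addresses. It cannot by itself attribute traffic from rotating, unlisted addresses to any particular company, which is the part of Cloudflare's analysis that Perplexity disputes.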

[6]

Cloudflare says Perplexity's AI bots are 'stealth crawling' blocked sites

The AI search startup Perplexity is allegedly skirting restrictions meant to stop its AI web crawlers from accessing certain websites, according to a report from Cloudflare. In the report, Cloudflare claims that when Perplexity encounters a block, the startup will conceal its crawling identity "in an attempt to circumvent the website's preferences." The report only adds to concerns about Perplexity vacuuming up content without permission, as the company got caught barging past paywalls and ignoring sites' robots.txt files last year. At the time, Perplexity CEO Aravind Srinivas blamed the activity on third-party crawlers used by the site. Now, Cloudflare, one of the world's biggest internet architecture providers, says it received complaints from customers who claimed that Perplexity's bots still had access to their websites even after putting their preference in their websites' robots.txt file and by creating Web Application Firewall (WAF) rules to restrict access to the startup's AI bots. To test this, Cloudflare says it created new domains with similar restrictions against Perplexity's AI scrapers. It found that the startup will first attempt to access the sites by identifying itself as the names of its crawlers: "PerplexityBot" or "Perplexity-User." But if the website has restrictions against AI scraping, Cloudflare claims Perplexity will change its user agent -- the bit of information that tells a website what kind of browser and device you're using, or if the visitor is a bot -- to "impersonate Google Chrome on macOS." Cloudflare says this "undeclared crawler" uses "rotating" IP addresses that the company doesn't include on the list of IP addresses used by its bots. Additionally, Cloudflare claims that Perplexity changes its autonomous system networks (ASN), a number used to identify groups of IP networks controlled by a single operator, to get around blocks as well. 
"This activity was observed across tens of thousands of domains and millions of requests per day," Cloudflare writes. In a statement to The Verge, Perplexity spokesperson Jesse Dwyer called Cloudflare's report a "publicity stunt," adding that "there are a lot of misunderstandings in the blog post." Cloudflare has since de-listed Perplexity as a verified bot and has rolled out methods to block Perplexity's "stealth crawling." Cloudflare CEO Matthew Prince has been outspoken about AI's "existential threat" to publishers. Last month, the company started letting websites ask AI companies to pay to crawl their content, and began blocking AI crawlers by default.

[7]

Cloudflare: Perplexity AI Acts Like North Korean Hackers, Ignores Scraping Blocks

Search engine provider Perplexity AI is accused of acting like "North Korean hackers" after the company's bots were found crawling websites with anti-scraping rules in place. The accusation comes from Cloudflare, an internet infrastructure provider that's developed safeguards to prevent AI companies from scraping data from third-party websites. On Monday, Cloudflare CEO Matthew Prince blasted Perplexity AI for invasive web crawling. (The AI company has also been found scraping data from media websites.) "Some supposedly 'reputable' AI companies act more like North Korean hackers. Time to name, shame, and hard block them," Prince tweeted. Cloudflare conducted an investigation that allegedly found Perplexity AI "repeatedly modifying" the company's web-crawling bots to evade data-scraping measures on third-party websites. In response, Cloudflare has delisted Perplexity AI as a "verified bot," lumping the company's web crawlers in with other untrusted activity, which could make it harder for it to index content. In addition, Cloudflare updated its own systems to block the "stealth crawling" from Perplexity AI. Perplexity AI didn't immediately respond to a request for comment. But the crackdown risks undermining its AI-powered search engine, which has also been flagged for violating web-scraping rules at news websites, without asking for permission or paying for a license. "Today, over two and a half million websites have chosen to completely disallow AI training through our managed robots.txt feature or our managed rule blocking AI Crawlers," Cloudflare says. Cloudflare flagged the alleged web-scraping after receiving complaints from customers, who were specifically blocking Perplexity's bots from indexing their sites. Cloudflare then verified the claims by creating several test domains that were supposed to be deliberately hidden from search engines, but Perplexity AI still found a way to crawl them.
"We observed that Perplexity uses not only their declared user-agent, but also a generic browser intended to impersonate Google Chrome on macOS when their declared crawler was blocked," the company found. In addition, the web crawler used multiple IP addresses outside of Perplexity's official IP range, rotating through them if the data scraping was blocked. "This activity was observed across tens of thousands of domains and millions of requests per day," Cloudflare added. "Of note: when the stealth crawler was successfully blocked, we observed that Perplexity uses other data sources -- including other websites -- to try to create an answer. However, these answers were less specific and lacked details from the original content, reflecting the fact that the block had been successful." The incident underscores the ongoing clash between AI programs and their insatiable demand for data and growing calls for them to pay for the content they use. In response, some media companies have sued Perplexity AI and other providers, including OpenAI, for alleged copyright infringement. In the meantime, Cloudflare anticipates Perplexity AI will update its web crawler to beat such anti-bot measures. The company adds that others, such as OpenAI, have been respecting the anti-data scraping measures in place. Disclosure: Ziff Davis, PCMag's parent company, filed a lawsuit against OpenAI in April 2025, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.

[8]

Perplexity vexed by Cloudflare's claims its bots are bad

AI search biz insists its content capture and summarization is okay because someone asked for it

AI search biz Perplexity claims that Cloudflare has mischaracterized its site crawlers as malicious bots and that the content delivery network made technical errors in its analysis of Perplexity's operations. "This controversy reveals that Cloudflare's systems are fundamentally inadequate for distinguishing between legitimate AI assistants and actual threats," Perplexity said in a social media post published on Monday afternoon. "If you can't tell a helpful digital assistant from a malicious scraper, then you probably shouldn't be making decisions about what constitutes legitimate web traffic." The dispute started earlier on Monday, when Cloudflare published a post claiming that Perplexity has been disguising its web crawling bots by altering the user-agent identifier and by using unexpected IP address ranges to evade web application firewall blocking. It did not suggest the bots were malicious, merely that they were obfuscating their identity to avoid being blocked. Cloudflare CEO Matthew Prince didn't use the term "malicious" either but came closer. "Some supposedly 'reputable' AI companies act more like North Korean hackers," Prince said on social media in reference to his company's post. "Time to name, shame, and hard block them." Cloudflare, which provides network infrastructure services like hosting and security, argues that web publishers should be able to control the mechanisms used to access their content. The issue is that automated page visits, whether from Perplexity or another AI service, don't generate ad impressions and revenue for publishers (assuming ad fraud systems function properly). The rise of AI crawlers that answer search queries by summarizing content culled from websites without compensation has led to a decline in search engine traffic referrals to websites and has thrown the web's dominant business model into question.
Perplexity's position is that because a human user entered a search query, the Perplexity bot fetching that information from a publisher's website should be treated as a human visitor rather than an automaton. To support that claim, Perplexity argues that, when Google's search engine crawls a page to include in its web index, that's different from when Google Search fetches a webpage and presents a preview of the content. "When companies like Cloudflare mischaracterize user-driven AI assistants as malicious bots, they're arguing that any automated tool serving users should be suspect - a position that would criminalize email clients and web browsers, or any other service a would-be gatekeeper decided they don't like," Perplexity said. That's a disingenuous comparison, however. A search engine doesn't intend for a thumbnail image or text snippet to be a complete substitute for visiting the web page. If Perplexity's response answers a search query and obviates the need to visit the source webpage to obtain that answer, that's clearly a different scenario. This is not the first time Perplexity has come under fire for bot behavior. In early June last year, Forbes accused the company of ripping off news content, an allegation subsequently investigated by AWS for terms of service compliance. Shortly thereafter, blogger and podcaster Robb Knight accused Perplexity of lying about its user agent, an allegation supported by a report from Wired. Kingsley Uyi Idehen, founder and CEO of OpenLink Software, an AI-oriented middleware business, pushed back against Perplexity's claims. Citing the company's contention that its bot is acting to fulfill a human user's query, he said in response, "This lack of clarity around who is acting and on whose behalf isn't a technical footnote - it's a foundational gap at the center of growing concerns about LLM-based tools and AI agents." Identity, authenticity, and accountability continue to be important for online interaction, Idehen argues. 
So if Perplexity is obfuscating its bots, as Cloudflare has claimed, that's a problem. Craig DeWitt, founder of SkyFire, a payment network for AI-based services, told The Register in an interview that both Perplexity and Cloudflare are right in their own way, though he disagreed with Prince comparing Perplexity to North Korea. "What Perplexity is right about is that the nature of the internet has changed with these AI interfaces," DeWitt said, citing similar observations from Vercel CEO Guillermo Rauch that the internet must adapt to AI. "The problem now is like it's no longer human directly to website, it's human through an intermediary to website," DeWitt said. "And the problem for the websites - this is talking on Cloudflare's side, now - is that the monetization model for a lot of these, in terms of servicing ads, goes away." Websites, he said, do not want to deliver their content without attribution through AI service interfaces. But, he said, collaboration is the key because if websites start blocking everything, that hurts everyone. Cloudflare and Perplexity did not respond to requests for comment. ®

[9]

Perplexity AI crawlers accused of stealth data scraping

Cloudflare finds AI search biz ignoring crawl prohibitions and trying to hide its spiders

Perplexity, an AI search startup, has been spotted trying to disguise its content-scraping bots while flouting websites' no-crawl directives. According to Cloudflare, a network infrastructure company that recently entered the bot gatekeeping business, Perplexity bots don't take no for an answer when websites say that they don't want to be scraped. "Although Perplexity initially crawls from their declared user agent, when they are presented with a network block, they appear to obscure their crawling identity in an attempt to circumvent the website's preferences," said Cloudflare engineers Gabriel Corral, Vaibhav Singhal, Brian Mitchell, and Reid Tatoris in a Monday blog post. "We see continued evidence that Perplexity is repeatedly modifying their user agent and changing their source ASNs to hide their crawling activity, as well as ignoring -- or sometimes failing to even fetch -- robots.txt files." A robots.txt file is a way for websites to tell web crawlers - automated client software - which resources, if any, they may access. It's part of the Robots Exclusion Protocol, originally drafted by Martijn Koster in 1994. Compliance is voluntary and the rising lack of compliance has led companies like Cloudflare to offer defensive technology to publishers. The Cloudflare engineers say that they heard from customers that their sites were still being crawled by Perplexity bots even after they warned the bots via robots.txt directives and set up web application firewall rules to block Perplexity's declared crawlers. The stealth bots, they said, operated outside of the IP addresses in Perplexity's official IP range, using addresses that came from different ASNs (autonomous system numbers, which identify groups of IP addresses run by a single operator) to evade address-based blocking. Using a generic browser impersonating Google Chrome on macOS when blocked, the bots were seen making millions of site data requests daily.
Perplexity did not respond to a request for comment. Anthropic faced similar accusations last year, and in June this year, was sued by Reddit for content scraping alleged to violate the site's user agreement and California competition law. According to Cloudflare, OpenAI's bots have been following best practices lately and its ChatGPT Agent has been signing HTTP requests using Web Bot Auth, a proposed standard for managing bot behavior. Initially, site crawling bots were a mixed blessing. They consumed computing resources, but they sometimes provided some benefit in return. Being visited by the Google Search crawler, for example, meant a site might appear in the Google Search Index and thus would be more visible to searchers, some of whom could be expected to visit and perhaps generate ad revenue. Lately, however, that arrangement has become more lopsided. AI crawlers have proliferated while search referral traffic has plummeted. Bots are taking more and returning less, and that's due mainly to the data demands of AI companies, whose business model has become reselling the internet's non-consensually gathered data as an API or cloud computing service. In June, bot-blocking biz TollBit published its Q1 2025 State of the Bots report, which found an 87 percent increase in scraping during the quarter. It also found that the share of bots ignoring robots.txt files increased from 3.3 percent to 12.9 percent during the quarter. In March 2025, the firm said, 26 million AI scrapes bypassed robots.txt files. AI crawlers don't necessarily index websites like search crawlers. They may use site content for model training or Retrieval Augmented Generation (RAG), a way to access content not captured in model training data. Google's AI Overviews and Perplexity Search, for example, rely on RAG to fetch current information in response to a user query or prompt. According to TollBit, RAG-oriented scraping has surpassed training-oriented scraping. 
"From Q4 2024 to Q1 2025, RAG bot scrapes per site grew 49 percent, nearly 2.5X the rate of training bot scrapes (which grew by 18 percent)," the firm's report says. "This is a clear signal that AI tools require continuous access to content and data for RAG vs. for training." The problem for web publishers is that this is a revenue-threatening parasitic relationship. When an AI bot gathers data and presents a summary through an AI company's tool or interface, that imposes a compute cost on the source while offering no compensation for the harvested content. TollBit's report indicates that, on the sites that it monitors, Bing's ratio of scrapes to referred human site visits was 11:1. For AI-only apps, the rates were as follows: OpenAI 179:1; Perplexity 369:1; and Anthropic: 8692:1. AI firms are aware that their bots have worn out their welcome on the web. Perplexity last year launched its Publisher Program to pay participating partners. And various AI companies have struck deals with major publishers that grant access to their content. Reddit, keeper of valuable user-created content, has seen its business improve as a result. Most websites, however, haven't been invited to the table to negotiate with the likes of Amazon, Anthropic, Google, Meta, OpenAI, and Microsoft. Thus, aspiring intermediaries like Cloudflare and TollBit are offering publishers a technical negotiation method: a paywall. It remains to be seen whether the cloud giants like Amazon, Google, and Microsoft, which make money from any AI usage on their infrastructure, need long-tail content enough to pay for it. And it's also unclear whether AI firms that aren't trying to incentivize data center usage can survive the impact of paywalls. 
But at some point, either a business model that works for both AI firms and publishers will take shape, publishing will retreat behind subscription walls and the free web will become a sea of synthetic AI slop, or the AI bubble will collapse under the weight of unrequited capex. ®

[10]

Perplexity is allegedly scraping websites it's not supposed to, again

Web crawlers deployed by Perplexity to scrape websites are allegedly skirting restrictions, according to a new report from Cloudflare. Specifically, the report claims that the company's bots appear to be "stealth crawling" sites by disguising their identity to get around robots.txt files and firewalls. Robots.txt is a simple file websites host that lets web crawlers know if they can scrape a website's content or not. Perplexity's official web crawling bots are "PerplexityBot" and "Perplexity-User." In Cloudflare's tests, Perplexity was still able to display the content of a new, unindexed website, even when those specific bots were blocked by robots.txt. The behavior extended to websites with specific Web Application Firewall (WAF) rules that restricted web crawlers, as well. Cloudflare believes that Perplexity is getting around those obstacles by using "a generic browser intended to impersonate Google Chrome on macOS" when robots.txt prohibits its normal bots. In Cloudflare's tests, the company's undeclared crawler could also rotate through IP addresses not listed in Perplexity's official IP range to get through firewalls. Cloudflare says that Perplexity appears to be doing the same thing with autonomous system numbers (ASNs) -- an identifier for IP addresses operated by the same business -- writing that it spotted the crawler switching ASNs "across tens of thousands of domains and millions of requests per day." Engadget has reached out to Perplexity for comment on Cloudflare's report. We'll update this article if we hear back. Up-to-date information from websites is vital to companies training AI models, especially as services like Perplexity are used as replacements for search engines. Perplexity has also been caught in the past circumventing the rules to stay up-to-date.
Multiple websites reported in 2024 that Perplexity was still accessing their content despite them forbidding it in robots.txt -- something the company blamed on the third-party web crawlers it was using at the time. Perplexity later partnered with multiple publishers to share revenue earned from ads displayed alongside their content, seemingly as a make-good for its past behavior. Stopping companies from scraping content from the web will likely remain a game of whack-a-mole. In the meantime, Cloudflare has removed Perplexity's bots from its list of verified bots and implemented a way to identify and block Perplexity's stealth crawler from accessing its customers' content.
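To make the robots.txt mechanism described above concrete, here is a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt rules, the `example.com` URL, and the Chrome-style user-agent string are illustrative assumptions, not taken from any real site or from Cloudflare's tests; only the two bot names come from the reporting. It shows why a generic browser identity is not covered by rules that name the declared crawlers.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks the two declared Perplexity crawlers
# named in the article and allows everything else. Illustrative only.
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /

User-agent: Perplexity-User
Disallow: /

User-agent: *
Allow: /
"""

# Assumed example of a generic Chrome-on-macOS user-agent string.
CHROME_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
)


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making any network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the rules permit this self-declared agent to fetch url."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)
url = "https://example.com/article"  # placeholder URL

for agent in ("PerplexityBot", "Perplexity-User", CHROME_UA):
    print(agent[:20], is_allowed(parser, agent, url))
```

The rules match only on the name a client chooses to announce, and nothing in the file enforces them. That is why compliance is described as voluntary, and why site owners who want enforcement turn to firewall rules.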

[11]

The War for the Web Has Begun

One of the internet's biggest gatekeepers has accused a rising AI star of breaking the web's oldest rules. The explosive feud could change how we all get information online. A high-stakes war has just broken out over the future of the internet. In one corner is Cloudflare, a giant of web infrastructure that acts as a gatekeeper for a huge portion of online traffic. In the other is Perplexity, a darling of the AI world, a search engine threatening to upend Google's dominance. The accusation is explosive: Cloudflare claims Perplexity is a bad actor, a rogue bot that ignores the internet's oldest rules to secretly scrape data from websites that have explicitly told it to stay away. Perplexity’s response is just as fiery: it says Cloudflare is either dangerously incompetent or engaged in a publicity stunt, fundamentally misunderstanding how modern AI works. The feud is the first major battle in a conflict that will define the next era of the web: Who gets to access online information, and who gets to decide the rules? For decades, the internet has operated on a "gentleman's agreement" called the robots.txt file. It’s a simple text file that website owners use to post a digital "Do Not Enter" sign for automated web crawlers or "bots." Well-behaved bots, like Google's, respect this sign. In a scathing blog post, Cloudflare alleges that Perplexity is ignoring it. The company claims that when its declared bot, "PerplexityBot," is blocked, the AI search engine switches to stealth mode, using generic browser identities and rotating IP addresses to continue crawling and gathering data in disguise. Cloudflare says it tested this by creating brand-new, private websites with strict "no bots allowed" rules. Despite this, they found that "Perplexity was still providing detailed information regarding the exact content hosted on each of these restricted domains." 
Based on this "stealth crawling behavior," Cloudflare announced it has now de-listed Perplexity as a verified bot and is actively blocking its undeclared crawlers. Perplexity’s response was swift, accusing Cloudflare of getting "almost everything wrong about how modern AI assistants actually work." The company argues that it is not a traditional "bot" and that Cloudflare is misapplying old rules to new technology. The core of their argument is the difference between a bot and a user agent. A traditional bot, like Google's, systematically crawls billions of pages to build a massive index for later use. A user agent, Perplexity claims, acts on behalf of a real person in real-time. When you ask Perplexity a question, its AI agent fetches the necessary information from the web at that moment to answer you. It's not stockpiling data; it's acting as your personal research assistant. "This is fundamentally different from traditional web crawling in which crawlers systematically visit millions of pages to build massive databases, whether anyone asked for that specific information or not," Perplexity wrote in a detailed response. "When companies like Cloudflare mischaracterize user-driven AI assistants as malicious bots, they're arguing that any automated tool serving users should be suspect - a position that would criminalize email clients and web browsers." Then came the bombshell counter-accusation. Perplexity claims Cloudflare "fundamentally misattributed 3-6M daily requests" from a third-party cloud browser service to Perplexity, calling it a "basic traffic analysis failure that's particularly embarrassing for a company whose core business is understanding and categorizing web traffic." Perplexity suggests this is either a "clever publicity moment" or a sign that Cloudflare is "dangerously misinformed on the basics of AI." Users on social media were divided.
“Perplexity is just using a proxy to fetch something that’s already on the public web, to answer a user’s question. Framing it as some kind of attack is absurd. The public web should be public,” defended tech founder Andrej Radonjic. Another user was more critical: “Perplexity, pretending to be a search engine, pretending to be AI, yet neither.” This public feud lays bare the central tension of the AI era. AI startups like Perplexity need access to the vast ocean of data on the open web to function and compete with giants like Google and OpenAI. Without it, they can't provide real-time, accurate answers. But website owners are growing increasingly wary of having their content scraped without consent or compensation to train and power these new AI models. Cloudflare, by choosing to block Perplexity's undeclared crawlers, has effectively appointed itself as the AI data police, making decisions about what constitutes "legitimate" web traffic. Perplexity warns this could lead to a "two-tiered internet" where access depends not on a user's needs, but on whether their chosen AI tool has been "blessed by infrastructure controllers." The rules of the internet are being rewritten in real-time. The old gentleman's agreement is breaking down, and the battle between the gatekeepers and the innovators has just begun. The outcome will determine not just the future of AI, but the future of the open web itself.

[12]

Perplexity accused of scraping websites even when told not to -- here's their response

Perplexity is riding high in the AI world right now. After launching the company's Comet browser, leading the way in agentic browsing, they've run into some controversy. Cloudflare, in an online blog, published research that showed Perplexity has been crawling and scraping content from websites that explicitly stated they don't want to be scraped. The research accuses Perplexity of obscuring its identity when trying to scrape web pages, stating that they had received complaints from customers who had both disallowed Perplexity from analysing their files and created rules to specifically block Perplexity from doing this. Cloudflare performed its own tests to confirm this, creating brand new domains and then querying Perplexity with questions about these specific domains. Perplexity was able to answer queries on these pages, even though Cloudflare had stated it didn't want these websites to be analyzed. Perplexity and lots of other AI tools require large amounts of information to work. They analyse the internet, looking at forums, web pages, and other online sources of information. However, there is more and more backlash to this approach and an expectation for transparency from AI companies on how they gather data. Some of Perplexity's competitors, like Claude and ChatGPT, are offering ways to opt out of data gathering, and it is likely we'll see more rules as time goes on. How Perplexity is able to get around these rules is complicated. It appears that Perplexity is changing its bots' "user agent". In other words, it is pretending to not be a large AI model but just a normal visitor. "This activity was observed across tens of thousands of domains and millions of requests per day. We were able to fingerprint this crawler using a combination of machine learning and network signals," says Cloudflare's post. Jesse Dwyer, a spokesperson for Perplexity, accused Cloudflare's blog of being a sales pitch for the company in an email to TechCrunch on the subject.
She went on to say that the screenshots in the blog "show that no content was accessed" and that the bot named in the Cloudflare blog "isn't even ours". Cloudflare is now taking a strong stance on AI crawlers, including Perplexity. The company has claimed that AI is breaking the business model of the internet and wants to help fight back. While Perplexity has denied this incident, the company has been in hot water before for similar problems, being accused of stealing news sites' content and struggling to define plagiarism.

[13]

Perplexity hits back after Cloudflare slams its online scraping tools

Perplexity wants Cloudflare to engage in dialogue - not just to post accusations online

Perplexity AI has accused Cloudflare of mischaracterizing its web crawlers as malicious bots after the latter claimed the AI company obfuscated its bot identity using deceptive strings and unexpected IP ranges. Responding to Cloudflare's analysis and testing, Perplexity declared that the analysis was technically flawed and that it misattributed unrelated traffic. Perplexity has also asserted its traffic is user-driven, not stealth scraping or malicious crawling, suggesting that Cloudflare has misunderstood modern AI assistant behavior. "It appears Cloudflare confused Perplexity with 3-6M daily requests of unrelated traffic from BrowserBase, a third-party cloud browser service that Perplexity only occasionally uses for highly specialized tasks (less than 45,000 daily requests)," the company wrote in an X post. Hitting back at Cloudflare's obfuscation claims, Perplexity said Cloudflare had obfuscated its own methodology, even accusing it of pulling off a stunt to gain attention. One of Perplexity's possible explanations reads: "Cloudflare needed a clever publicity moment and we - their own customer - happened to be a useful name to get them one." "This controversy reveals that Cloudflare's systems are fundamentally inadequate for distinguishing between legitimate AI assistants and actual threats," the post continues. In the post, Perplexity also offered context about how AI crawlers work: when a user asks a question, the AI agent doesn't retrieve the information from a central database, but rather fetches it in real time from the relevant websites. This contrasts with traditional web crawling, "in which crawlers systematically visit millions of pages to build massive databases, whether anyone asked for that specific information or not." Moving forward, Perplexity urges Cloudflare to engage in dialogue instead of publishing misinformation about its practices.

[14]

Perplexity accused of breaking a major online AI scraping rule - but it says it has done nothing wrong

OpenAI adheres to responsible crawling, but Perplexity quiet for now

Cloudflare has accused AI giant Perplexity of scraping websites which explicitly disallowed crawling via robots.txt and other network-level rules by hiding its identity and conducting obfuscated crawling activity. Researchers from the company said they observed Perplexity using multiple user agents, including one impersonating Google Chrome on macOS, as well as rotating IP addresses and ASNs to evade detection. Alarmingly, Cloudflare detected millions of daily requests across tens of thousands of domains, highlighting the sheer scale of illegitimate scraping by one of the biggest companies in the space. According to Cloudflare's analysis, in many cases, Perplexity ignored or didn't fetch robots.txt files - which are plain-text files placed at the root of a site to tell automated agents (like search engines, AI crawlers and link checkers) which URLs may or may not be fetched. Tellingly, Perplexity also attempted to access test websites Cloudflare created, even though they were blocked via robots.txt and not publicly discoverable, while using undeclared crawlers that weren't even associated with its official IP range. "Although Perplexity initially crawls from their declared user agent, when they are presented with a network block, they appear to obscure their crawling identity in an attempt to circumvent the website's preferences," the researchers write. In response to its findings, Cloudflare has de-listed Perplexity's bots from its verified bots list. The company has also added new managed rule heuristics to detect and block stealth crawling. In contrast, OpenAI's crawlers have so far respected robots.txt and block pages, using transparent identifiers and documented behavior to obtain information. Perplexity denied wrongdoing, calling Cloudflare's post a "sales pitch", adding the identified bots weren't even theirs. TechRadar Pro has asked Perplexity for its comment.
Cloudflare urges bot operators to respect website preferences by being transparent, being well-behaved netizens, serving a clear purpose, using separate bots for separate activities and following rules and signals like robots.txt.

[15]

Cloudflare Accuses Perplexity AI of Using Stealth Crawlers to Evade Website Blocks - Decrypt

Perplexity denies the claims, calling Cloudflare's evidence a "sales pitch" and disputing that any banned content was accessed. Perplexity's crawlers kept accessing content from tens of thousands of websites even after those sites explicitly blocked them, according to internet infrastructure provider Cloudflare. The company said Monday it had delisted Perplexity from its verified bot program and implemented blocks against what it characterized as deceptive scraping practices. San Francisco-based Perplexity was founded in 2022 by Aravind Srinivas (CEO, former OpenAI researcher), Denis Yarats (former Facebook AI), Johnny Ho, and Andy Konwinski (co‑founders of Databricks). The company has received funding from investors including Elad Gil, Nat Friedman (former GitHub CEO), and Nvidia, among others, and was valued at $18 billion after raising $100 million last month. The recent conflict erupted after Cloudflare customers complained that Perplexity was still scraping their sites despite implementing both robots.txt directives and specific firewall rules to block the AI company's declared crawlers. Cloudflare engineers Gabriel Corral, Vaibhav Singhal, Brian Mitchell, and Reid Tatoris confirmed in tests that "Perplexity's crawlers were in fact being blocked on the specific pages in question." To test Perplexity's behavior, Cloudflare created multiple newly purchased domains with restrictive robots.txt files that prohibited all automated access. "We conducted an experiment by querying Perplexity AI with questions about these domains, and discovered Perplexity was still providing detailed information regarding the exact content hosted on each of these restricted domains." What happened next surprised them. Rather than respecting the blocks, Perplexity appeared to switch tactics. 
"We observed that Perplexity uses not only their declared user-agent, but also a generic browser intended to impersonate Google Chrome on macOS when their declared crawler was blocked," the engineers wrote. The stealth crawlers employed sophisticated evasion techniques. "This undeclared crawler utilized multiple IPs not listed in Perplexity's official IP range, and would rotate through these IPs in response to the restrictive robots.txt policy and block from Cloudflare. In addition to rotating IPs, we observed requests coming from different ASNs in attempts to further evade website blocks." According to Cloudflare, Perplexity's "declared" crawlers -- the ones that are easily identifiable -- generate 20-25 million requests daily, while the undeclared stealth crawlers -- those which rely on shady tactics to hide their purpose -- add another 3-6 million requests per day. "This activity was observed across tens of thousands of domains and millions of requests per day." The company did not respond to Decrypt's request for comment. A spokesman dismissed the allegations to TechCrunch as nothing more than a Cloudflare "sales pitch." Cloudflare CEO Matthew Prince has been vocal about what he sees as AI companies' unsustainable extraction of web content. "Search traffic referrals have plummeted as people increasingly rely on AI summaries." In July, he revealed devastating ratios: while Google sends one visitor for every 18 pages it crawls, AI companies are far worse. OpenAI's ratio deteriorated from 250-to-1 six months ago to 1,500-to-1 today. Anthropic's numbers are even more extreme, jumping from 6,000-to-1 to 60,000-to-1 in the same period. This prompted Cloudflare to launch what it calls "Content Independence Day," defaulting to blocking AI crawlers for all new domains, becoming the de-facto vigilante protecting content creators from the threats of pesky AI crawlers. 
As Decrypt previously reported, more than a million websites had already opted into blocking since last fall, with major publishers including the Associated Press, Time, The Atlantic, BuzzFeed, Reddit, Quora, and Universal Music Group joining the movement. "There are clear preferences that crawlers should be transparent, serve a clear purpose, perform a specific activity, and, most importantly, follow website directives and preferences," Cloudflare stated. The company contrasted Perplexity's behavior with OpenAI, which it said properly respects robots.txt files and stops crawling when blocked. Cloudflare's response includes both immediate technical measures and longer-term initiatives. The company has deployed signature matches for the stealth crawler into its managed rules, available to all customers including free users. It's also developing tools like an "AI Labyrinth," which traps non-compliant bots in mazes of fake content, and a "pay-per-crawl" marketplace that would allow publishers to charge AI companies for access to their content.
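The distinction between "declared" and "undeclared" crawlers can be sketched in a few lines. This is a hedged illustration of the general idea, not Cloudflare's detection logic: the `DECLARED_RANGES` table, the `classify_request` helper, and the IP ranges (reserved documentation addresses) are all assumptions made up for the example.

```python
import ipaddress

# Hypothetical table of published crawler IP ranges. 192.0.2.0/24 is a
# reserved documentation block, NOT a real Perplexity range.
DECLARED_RANGES = {
    "PerplexityBot": [ipaddress.ip_network("192.0.2.0/24")],
}


def classify_request(user_agent: str, source_ip: str) -> str:
    """Classify a request using only its user agent and source address.

    "declared":   names a known bot and comes from that bot's published range
    "spoofed":    names a known bot but comes from an unlisted address
    "undeclared": names no known bot; could be a person or a disguised crawler
    """
    ip = ipaddress.ip_address(source_ip)
    for bot_name, networks in DECLARED_RANGES.items():
        if bot_name.lower() in user_agent.lower():
            if any(ip in network for network in networks):
                return "declared"
            return "spoofed"
    return "undeclared"


print(classify_request("Mozilla/5.0 (compatible; PerplexityBot/1.0)", "192.0.2.10"))
print(classify_request("Mozilla/5.0 (compatible; PerplexityBot/1.0)", "198.51.100.7"))
print(classify_request("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) Chrome/124.0", "203.0.113.5"))
```

A request that presents a generic browser string from an unlisted address falls into the "undeclared" bucket, where these two signals alone cannot separate it from an ordinary visitor. That limitation fits Cloudflare's statement that it fingerprinted the crawler with machine learning and network signals rather than name and address checks.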

[16]

Cloudflare calls out Perplexity for hiding 'crawling activity' as AI bot scrapes websites that explicitly disallow it, Perplexity responds by calling them 'more flair than cloud'

It's AI versus the internet as Cloudflare and Perplexity have a public falling out over the 'stealth crawling' of restricted websites. The disagreement has spiralled to name calling, even, as Perplexity snaps back at Cloudflare calling it "more flair than cloud", which isn't quite the burn they think it is. Just last month, internet delivery network and cybersecurity company Cloudflare announced it would be blocking AI scrapers from getting access to websites using its service without permission. Now, it seems like some crawlers are getting around through covert means, according to Cloudflare. In a blog post (via TechCrunch) titled "Perplexity is using stealth, undeclared crawlers to evade website no-crawl directives", Cloudflare, which handles around 20% of global web traffic, goes into the specifics of how exactly it spotted this problem and why it has de-listed Perplexity as a verified, trusted bot. Customers first complained that Perplexity was getting around files and rules specifically set up to block crawlers. To test these complaints, Cloudflare created brand new domains that weren't indexed by any search engines "nor made publicly accessible in any discoverable way." These domains used the robots.txt file with explicit rules to stop any bots. Cloudflare then asked Perplexity AI questions about those specific domains. As these domains weren't indexed or made discoverable, Perplexity would have to access the site in order to provide information for queries. Despite roadblocks Cloudflare set up, Perplexity allegedly still gave information on crawled sites. Interestingly, Cloudflare reportedly observed attempts to get content not only from the bot that was blocked but from alleged stealth agents, impersonating Google Chrome on macOS. The undisclosed bot also reportedly used multiple IP addresses that aren't in Perplexity's declared IP range. 
In response, Perplexity claims Cloudflare either misattributed 3-6m daily requests from BrowserBase, "a third-party cloud browser service that Perplexity only occasionally uses for highly specialized tasks (less than 45,000 daily request)", or that it "needed a clever publicity moment and we -- their own customer -- happened to be a useful name to get them one." Perplexity published its own blog post, in part as a retort to Cloudflare's investigation, claiming that "Modern AI assistants work fundamentally differently from traditional web crawling." It argues that these bots aren't scraping the data; they are answering queries on the fly without just retrieving that information from a database. "When companies like Cloudflare mischaracterize user-driven AI assistants as malicious bots," the post reads, "they're arguing that any automated tool serving users should be suspect." It continues: "This controversy reveals that Cloudflare's systems are fundamentally inadequate for distinguishing between legitimate AI assistants and actual threats... The bluster around this issue also reveals that Cloudflare's leadership is either dangerously misinformed on the basics of AI, or simply more flair than cloud." Perplexity's statement claims not only that Perplexity gets all data in real time, rather than from a database, but that all other agentic AI does. It therefore claims there's nothing malicious about it, and it's not out of the norm for agentic AI. Cloudflare's report, however, seems less about agentic AI being specifically malicious, more about the workarounds and obfuscated tactics it's allegedly noted being used by Perplexity itself. Cloudflare claims to have run these same tests with ChatGPT and observed no attempts to get around crawl blocks. This includes OpenAI's ChatGPT Agent, which is agentic AI. ChatGPT seems to be fine being blocked by Cloudflare in a way that Perplexity is not.
Though there does appear to be a difference between the AI used to answer those queries and the data sets that train those AI, the response feels semantic in nature. When Cloudflare announced that users have the option to choose if an AI can scrape their data, they are also declaring that those who don't like the use of AI don't have to subject their sites to it. Scrape is a technical term, but to some customers, it is also just a declaration that AI in some form has interacted with their domain without their consent. Cloudflare ends its report saying: "We expected a change in bot and crawler behavior based on these new features, and we expect that the techniques bot operators use to evade detection will continue to evolve. Once this post is live the behavior we saw will almost certainly change, and the methods we use to stop them will keep evolving as well." Cloudflare says it is committed to giving its users the tools to block these bots, even if their tactics become more sneaky, and it is continuing to standardise extensions "to establish clear and measurable principles that well-meaning bot operators should abide by."

[17]

The Cloudflare-Perplexity Clash Over Web Crawling | AIM

Opinions are divided, discussions abound on where to draw the line on web scraping. The heated dispute between cloud infrastructure giant Cloudflare and Perplexity, the AI search application company, regarding the latter's web crawling practices, has brought forth concerns about AI applications scraping without authorisation. On August 4, Cloudflare published a blog post accusing Perplexity of deceptive web crawling practices, where automated systems browse and index website content, alleging the AI company disguised its identity to bypass sites that had explicitly chosen to block such crawlers. However, Perplexity disagrees and claims that Cloudflare is misunderstanding the issue. They argue that mass-scale crawling is not the same as an AI agent used by a user to retrieve information from a website. But in a statement to AIM, Cloudflare reaffirmed its p

[18]

Inside the looming AI-agents war that will redefine the economics of the web

There's a war brewing in the world of AI agents. After declaring a month ago that it would block AI crawlers by default on its network, Cloudflare openly accused Perplexity of deliberately bypassing internet standards to scrape websites. It published a detailed blog post, explaining how, even if its bots were blocked, Perplexity would use certain tactics -- including third-party crawlers -- to access those websites anyway. Perplexity responded swiftly with its own post, pointing out that its use of third-party crawlers was actually significantly less than Cloudflare was saying. But the crux of Perplexity's rebuttal was that Cloudflare fundamentally misunderstood its bot activity: because its agent bots act on behalf of specific user requests -- and are not crawling the web generally -- Perplexity believes they should be able to access anything their human operator could. This divide gets right at the heart of how the AI internet works, and settling on a standard will be crucial to how agents, the media industry, and information retrieval in general will evolve. Notably, Perplexity didn't deny that its agent bots bypass the Robots Exclusion Protocol (known as robots.txt) to access content -- it instead said that behavior was justified: If you wouldn't deny the content to a person, you should also provide it to a bot acting on behalf of that person.

[19]

Cloudflare de-lists Perplexity, alleges stealth scraping

Cloudflare has accused Perplexity, the AI search engine, of ignoring website rules and using stealth crawling to bypass robots.txt protocols. Leading internet security player Cloudflare said on Monday (4 August) in a post that it was delisting Perplexity's crawler as a verified bot, and would actively block Perplexity and all of its "stealth bots" from crawling websites. It all started with multiple user complaints regarding violations of robots.txt protocols, which led Cloudflare to carry out an investigation that it says uncovered Perplexity's stealth crawling. A robots.txt file lists a website's preferences for bot behaviour and tells bots which webpages they should and should not access. Cloudflare, which is estimated to protect some 24m websites according to Backlinko, has a "verified bots" system that whitelists bots that conform to its guidelines. These include following the robots.txt protocol and crawling only from IP addresses declared as belonging to the crawling service in question - in this case Perplexity. "We are observing stealth crawling behaviour from Perplexity, an AI-powered answer engine," Cloudflare said in its post. "Although Perplexity initially crawls from their declared user agent, when they are presented with a network block, they appear to obscure their crawling identity in an attempt to circumvent the website's preferences." "We see continued evidence that Perplexity is repeatedly modifying their user agent and changing their source ASNs (autonomous system numbers) to hide their crawling activity, as well as ignoring -- or sometimes failing to even fetch robots.txt files." "Based on Perplexity's observed behaviour, which is incompatible with those preferences, we have de-listed them as a verified bot and added heuristics to our managed rules that block this stealth crawling."
Perplexity is a privately owned, Silicon Valley-based AI company that uses LLMs (large language models) to process user queries, describing itself as an "answer engine" rather than a traditional search engine. Its rise has been extremely rapid and it was valued at $18bn in June of this year after its most recent raise. Backers include major names like Nvidia, Softbank and Amazon's Jeff Bezos. In June, it was reported that the start-up was finalising a raise of $500m led by Accel, the US VC firm. This is not the first time Perplexity has been accused of unfair 'scraping' of content. The BBC threatened in June to take legal action against Perplexity, accusing the start-up of scraping its content to train AI models, following previous complaints from Dow Jones and The New York Times. In a response similar to its earlier one to the BBC story, Perplexity spokesperson Jesse Dwyer dismissed the report as a "sales pitch", and told TechCrunch that the screenshots in the Cloudflare post "show that no content was accessed". He later added that the bot in question did not belong to Perplexity, but Cloudflare is a respected and trusted supplier, so the latest Cloudflare accusation will add credence to earlier accusations.

[20]

Cloudflare vs. Perplexity: a web scraping war with big implications for AI

When the web was established several decades ago, it was built on a number of principles. Among them was a key, overarching standard dubbed "netiquette": Do unto others as you'd want done unto you. It's a principle that lived on through other companies, including Google, whose motto for a period was "Don't be evil." The fundamental idea was simple: Act ethically and morally. If someone asked you to stop doing something, you stopped -- or at least considered it. But Cloudflare, an IT company that protects millions of websites from hostile internet attacks, has published an eye-opening exposé suggesting that one of the leading AI tools today isn't following that principle. Cloudflare claims Perplexity, an AI-powered "answer engine," is overriding website requests not to crawl their content by spoofing its identity to hide that the requests are coming from an AI company. Cloudflare launched its investigation after receiving complaints from customers that Perplexity was ignoring directives in robots.txt files, which are used by websites to signal whether they want their content indexed by search engines or AI crawlers. Perplexity's alleged behavior highlights what happens when the web shifts from being rooted in voluntary agreements to a more hard-nosed business environment, where commercial goals overrule moral considerations.

[21]

Cloudflare accuses Perplexity of evading anti-bot rules

Perplexity allegedly evaded detection by impersonating Chrome and rotating its network identifiers. Cloudflare observed AI startup Perplexity bypassing website content access restrictions, alleging the company obscured its bot identities to circumvent digital preferences. This activity involved Perplexity altering bot user agents and autonomous system numbers to evade detection across numerous domains. The internet infrastructure provider Cloudflare reported that AI startup Perplexity has been crawling and scraping content from websites that had explicitly disallowed such activity. Cloudflare published research on Monday, detailing its observations that Perplexity ignored existing blocks and concealed its crawling and scraping operations. The network infrastructure company accused Perplexity of obscuring its identity while attempting to scrape web pages, stating this was "an attempt to circumvent the website's preferences." AI products, including those offered by Perplexity, rely on the ingestion of substantial data volumes from the internet. AI startups have frequently scraped text, images, and videos from the internet, often without explicit permission, to facilitate product functionality. Websites have increasingly utilized the robots.txt file, a web standard designed to inform search engines and AI companies about pages permissible for indexing and those that are not, with varying degrees of success in recent times. Cloudflare stated that Perplexity appeared to be intentionally circumventing these blocks by modifying its bots' "user agent," which is a signal identifying a website visitor by their device and version type. The company also noted that Perplexity changed the autonomous system numbers (ASNs) its requests came from as part of these efforts; an ASN is a numerical identifier for a large network on the internet.
Cloudflare's post specified, "This activity was observed across tens of thousands of domains and millions of requests per day. We were able to fingerprint this crawler using a combination of machine learning and network signals." Jesse Dwyer, a spokesperson for Perplexity, dismissed Cloudflare's blog post as a "sales pitch." In an email to TechCrunch, Dwyer asserted that the screenshots included in the post "show that no content was accessed." In a subsequent email, Dwyer claimed the bot identified in the Cloudflare blog was not associated with Perplexity. Cloudflare indicated that it initially detected this behavior after customers reported that Perplexity was crawling and scraping their sites, despite the implementation of robots.txt rules and specific blocks targeting known Perplexity bots. Cloudflare subsequently conducted tests to verify these claims and confirmed Perplexity's circumvention of existing blocks. Cloudflare stated, "We observed that Perplexity uses not only their declared user-agent, but also a generic browser intended to impersonate Google Chrome on macOS when their declared crawler was blocked." The company confirmed it has de-listed Perplexity's bots from its verified list and has implemented new technical methods to block them. Cloudflare has recently adopted a public stance regarding AI crawlers. Last month, Cloudflare announced a new marketplace designed to enable website owners and publishers to levy charges against AI scrapers visiting their sites. At that time, Cloudflare's chief executive, Matthew Prince, expressed concerns, asserting that AI was disrupting the internet's business model, particularly for publishers. In the preceding year, Cloudflare also introduced a free tool intended to prevent bots from scraping websites for AI training purposes. This is not the first instance of Perplexity facing accusations of unauthorized scraping. Last year, news organizations, including Wired, alleged that Perplexity engaged in content plagiarism.
Weeks later, during an interview with TechCrunch's Devin Coldewey at the Disrupt 2024 conference, Perplexity's CEO, Aravind Srinivas, was unable to provide an immediate definition of plagiarism when asked.
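
To make the two identifiers in this report concrete, the following is a minimal Python sketch of how a site operator might compare a request's user agent against a crawler's declared IP ranges. It is not Cloudflare's detection method, which the post says relies on machine learning and network signals. The function name `classify_request` and the IP range are hypothetical; the range is a reserved documentation block, not Perplexity's published range.

```python
import ipaddress

# Hypothetical declared range (a reserved documentation block), for illustration only.
DECLARED_RANGES = [ipaddress.ip_network("192.0.2.0/24")]
# Declared crawler names as reported in the coverage.
DECLARED_AGENT_TOKENS = ("PerplexityBot", "Perplexity-User")


def classify_request(user_agent: str, source_ip: str) -> str:
    """Label a request by comparing its user agent with the declared IP ranges."""
    ip = ipaddress.ip_address(source_ip)
    declares_bot = any(
        token.lower() in user_agent.lower() for token in DECLARED_AGENT_TOKENS
    )
    in_declared_range = any(ip in network for network in DECLARED_RANGES)

    if declares_bot and in_declared_range:
        return "declared"             # identifies itself and comes from a listed IP
    if declares_bot:
        return "spoofed-or-unlisted"  # claims the bot name from an unlisted IP
    return "undeclared"               # looks like any ordinary visitor


print(classify_request("Mozilla/5.0 (compatible; PerplexityBot/1.0)", "192.0.2.10"))
print(classify_request("Mozilla/5.0 (Macintosh) Chrome/124.0 Safari/537.36", "198.51.100.7"))
```

A request that presents a generic Chrome user agent from an unlisted IP address falls into the same bucket as an ordinary human visitor, which is why a simple check like this cannot catch the behavior Cloudflare describes.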

[22]

Perplexity Might Be Using Illegitimate Means to Scrape Websites' Data

When Perplexity cannot crawl a website, its response quality drops. Perplexity is said to be illegitimately accessing content from websites despite being prohibited from doing so. Cloudflare, a global web security services company, conducted a test to confirm the stealth behaviour of the answer engine company. The researchers highlighted that not only were crawler bots from Perplexity ignoring the directives from the websites, but they were also actively hiding their identity via multiple means to ensure website owners could not track the activity. Cloudflare was also able to find a way to successfully shut down the artificial intelligence (AI) company's efforts. In a blog post, the web security platform claimed that Perplexity was involved in "stealth crawling" activities. "We see continued evidence that Perplexity is repeatedly modifying their user agent and changing their source ASNs to hide their crawling activity, as well as ignoring -- or sometimes failing even to fetch -- robots.txt files," the post added. Before delving into Perplexity's behaviour, it is important to understand how the entire system works. Owners of content websites add information, and third-party services such as search engines fetch this data to index these websites and make them appear when a relevant query is typed. Some apps and websites also scrape websites to either surface them within their interface or collect data with permission. However, for this relationship between websites and crawlers to work, there must be trust. It is established by these bots following a set of rules when crawling any website. These rules dictate that the activity of bots must be transparent, they should serve a clear purpose and perform only specific activity, and they should follow website directives and preferences. So, if a website blocks a bot, the bot should not crawl that website.
As per Cloudflare researchers, Perplexity is breaking this trust model by using stealth tactics to scrape website data even from those websites that explicitly block its declared bots -- PerplexityBot and Perplexity-User. The researchers were able to confirm this activity by creating new test domains. These domains were not indexed by any search engine or made publicly accessible or discoverable. Additionally, the researchers implemented a robots.txt file (a text file used by websites to give instructions to web crawlers) to stop all bots from accessing any part of the website. Then, Cloudflare researchers went to Perplexity and asked it specific questions about these newly created domains. They found that, even though the domains used standard Internet protocols to prevent crawling activity, Perplexity was still able to surface detailed information about these websites. Cloudflare claims Perplexity's user agents or web crawlers take several steps to bypass websites' directives and access the data. If a declared user agent is denied access via robots.txt, it ignores the directive and continues to scrape data. If a website has implemented a web application firewall (WAF) to block the bot, the company uses a generic browser agent intended to impersonate Google Chrome on macOS. This undeclared bot is also said to utilise multiple IPs not listed in Perplexity's official IP range to trick the website. To further hide their tracks, these crawlers were said to use different autonomous system numbers (ASNs). Notably, Cloudflare stated that when these undeclared bots were successfully stopped, the quality of Perplexity's responses declined, as it began to rely on other data sources to answer the query. Cloudflare said its bot management system was able to register all the undeclared crawling activity from Perplexity's hidden user agents and is now automatically protecting all its bot management customers.
Additionally, the company has added signature matches for the stealth crawler to its managed rule, which blocks AI crawling activity. This is available to all Cloudflare users, including those on the free tier.
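
The blanket robots.txt rule described in the test setup can be illustrated with a short Python sketch using the standard library's `urllib.robotparser`. The file contents, the helper `is_allowed`, and the domain `example.test` are assumptions for illustration; Cloudflare's actual test files are not reproduced in this report.

```python
from urllib.robotparser import RobotFileParser

# Assumed contents of a robots.txt that asks every crawler to stay out of the whole site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a compliant crawler with this user agent may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A compliant crawler runs this check first and skips any URL that is not allowed.
for agent in ("PerplexityBot", "Perplexity-User", "Mozilla/5.0"):
    print(agent, is_allowed(ROBOTS_TXT, agent, "https://example.test/page"))
```

The protocol is voluntary: the file only states a preference, and nothing in it prevents a crawler from skipping the check, which is the behavior Cloudflare alleges.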

[23]

Perplexity is Taking Website Data Even After Being Blocked