UC San Diego Engineers Develop Motion-Tolerant Wearable for Gesture-Based Robot Control

3 Sources


[1]

Next-Gen Wearable Lets You Control Machines with Simple Gestures - Neuroscience News

Summary: A new wearable system uses stretchable electronics and artificial intelligence to interpret human gestures with high accuracy even in chaotic, high-motion environments. Unlike traditional gesture-based wearables that fail under movement noise, this patch-based device filters interference in real time, allowing gestures to reliably control machines such as robotic arms. The technology has been validated in conditions ranging from running to simulated ocean turbulence, demonstrating robust performance under real-world disturbances. This advance brings gesture-based human-machine interaction closer to practical daily use across rehabilitation, industrial work, emergency response, and underwater operations.

Engineers at the University of California San Diego have developed a next-generation wearable system that enables people to control machines using everyday gestures, even while running, riding in a car or floating on turbulent ocean waves. The system, described in a study published on Nov. 17 in Nature Sensors, combines stretchable electronics with artificial intelligence to overcome a long-standing challenge in wearable technology: reliable recognition of gesture signals in real-world environments.

Wearable technologies with gesture sensors work fine when a user is sitting still, but the signals start to fall apart under excessive motion noise, explained study co-first author Xiangjun Chen, a postdoctoral researcher in the Aiiso Yufeng Li Family Department of Chemical and Nano Engineering at the UC San Diego Jacobs School of Engineering. This limits their practicality in daily life.

"Our system overcomes this limitation," Chen said. "By integrating AI to clean noisy sensor data in real time, the technology enables everyday gestures to reliably control machines even in highly dynamic environments."

The technology could enable patients in rehabilitation or individuals with limited mobility, for example, to use natural gestures to control robotic aids without relying on fine motor skills. Industrial workers and first responders could potentially use the technology for hands-free control of tools and robots in high-motion or hazardous environments. It could even enable divers and remote operators to command underwater robots despite turbulent conditions. In consumer devices, the system could make gesture-based controls more reliable in everyday settings.

The work was a collaboration between the labs of Sheng Xu and Joseph Wang, both professors in the Aiiso Yufeng Li Family Department of Chemical and Nano Engineering at the UC San Diego Jacobs School of Engineering. To the researchers' knowledge, this is the first wearable human-machine interface that works reliably across a wide range of motion disturbances, which means it can work with the way people actually move.

The device is a soft electronic patch glued onto a cloth armband. It integrates motion and muscle sensors, a Bluetooth microcontroller and a stretchable battery into a compact, multilayered system. The system was trained on a composite dataset of real gestures and conditions, from running and shaking to the movement of ocean waves. Signals from the arm are captured and processed by a customized deep-learning framework that strips away interference, interprets the gesture, and transmits a command to control a machine, such as a robotic arm, in real time.

"This advancement brings us closer to intuitive and robust human-machine interfaces that can be deployed in daily life," Chen said.

The system was tested in multiple dynamic conditions. Subjects used the device to control a robotic arm while running, while exposed to high-frequency vibrations, and under a combination of disturbances. The device was also validated under simulated ocean conditions using the Scripps Ocean-Atmosphere Research Simulator at UC San Diego's Scripps Institution of Oceanography, which recreated both lab-generated and real sea motion. In all cases, the system delivered accurate, low-latency performance.

Originally, this project was inspired by the idea of helping military divers control underwater robots. But the team soon realized that motion interference was not unique to underwater environments. It is a common challenge across the field of wearable technology, one that has long limited the performance of such systems in everyday life.

"This work establishes a new method for noise tolerance in wearable sensors," Chen said. "It paves the way for next-generation wearable systems that are not only stretchable and wireless, but also capable of learning from complex environments and individual users."

Full study: "A noise-tolerant human-machine interface based on deep learning-enhanced wearable sensors." Co-first authors on the study are UC San Diego researchers Xiangjun Chen, Zhiyuan Lou, Xiaoxiang Gao and Lu Yin.

Funding: This work was supported by the Defense Advanced Research Projects Agency (DARPA, contract number HR001120C0093).

A noise-tolerant human-machine interface based on deep learning-enhanced wearable sensors

Wearable human-machine interfaces with inertial measurement units (IMUs) are widely applied across healthcare, robotics and interactive technologies. However, extracting reliable signals remains challenging owing to motion artefacts in real-world environments. Here we present a human-machine interface capable of tolerating diverse motion artefacts through deep learning-enhanced wearable sensors. The system integrates a six-channel IMU, an electromyography module, a Bluetooth microcontroller unit and a stretchable battery, enabling wireless capture and transmission of gesture signals.
A convolutional neural network trained on a composite dataset of gestures and motion artefacts extracts robust signals, while parameter-based transfer learning improves the generalizability of the network across users. A sliding-window approach converts the extracted gesture signals into real-time, continuous control of a robotic arm during dynamic activities such as running, high-frequency vibration, posture changes, oceanic wave motion and combinations of these. This work demonstrates the potential of wearable human-machine interfaces for complex real-world applications.
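The abstract credits parameter-based transfer learning with helping the network generalize to new users, but the sources do not spell out the procedure. One common form of parameter-based transfer, assumed here, is to keep the weights learned from previous users frozen and retrain only the final classification layer on a handful of samples from the new user. In the minimal Python sketch below, `FROZEN_W`, the helper functions and the toy data are all hypothetical, and a single ReLU layer stands in for the paper's convolutional feature extractor.

```python
import math

# Hypothetical stand-in for layers pretrained on many users. In this sketch
# they stay frozen: fine-tuning never changes FROZEN_W.
FROZEN_W = [[0.5, -0.2, 0.1],
            [0.3, 0.8, -0.5]]


def extract_features(x):
    """Frozen layer: linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def logits(head_w, head_b, f):
    return [sum(w * fi for w, fi in zip(ws, f)) + b for ws, b in zip(head_w, head_b)]


def fine_tune_head(samples, labels, n_classes, lr=0.5, epochs=200):
    """Train only a fresh softmax output layer on a new user's few samples."""
    n_feat = len(FROZEN_W)
    head_w = [[0.0] * n_feat for _ in range(n_classes)]
    head_b = [0.0] * n_classes
    feats = [extract_features(x) for x in samples]  # extractor is frozen, so compute once
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = softmax(logits(head_w, head_b, f))
            for c in range(n_classes):
                # Gradient of cross-entropy loss with respect to logit c.
                grad = p[c] - (1.0 if c == y else 0.0)
                head_b[c] -= lr * grad
                for j in range(n_feat):
                    head_w[c][j] -= lr * grad * f[j]
    return head_w, head_b


def predict(x, head_w, head_b):
    z = logits(head_w, head_b, extract_features(x))
    return max(range(len(z)), key=lambda c: z[c])


# Toy "new user" data (hypothetical): two gesture classes, two samples each.
new_user_x = [[1, 0, 0], [0, 1, 0], [2, 0, 0], [0, 2, 0]]
new_user_y = [0, 1, 0, 1]
head_w, head_b = fine_tune_head(new_user_x, new_user_y, n_classes=2)
print([predict(x, head_w, head_b) for x in new_user_x])
```

Retraining only the output layer keeps per-user calibration cheap; whether the study fine-tunes more of the network than this is not stated in the sources.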

[2]

Wearable Lets Users Control Machines and Robots While on the Move | Newswise

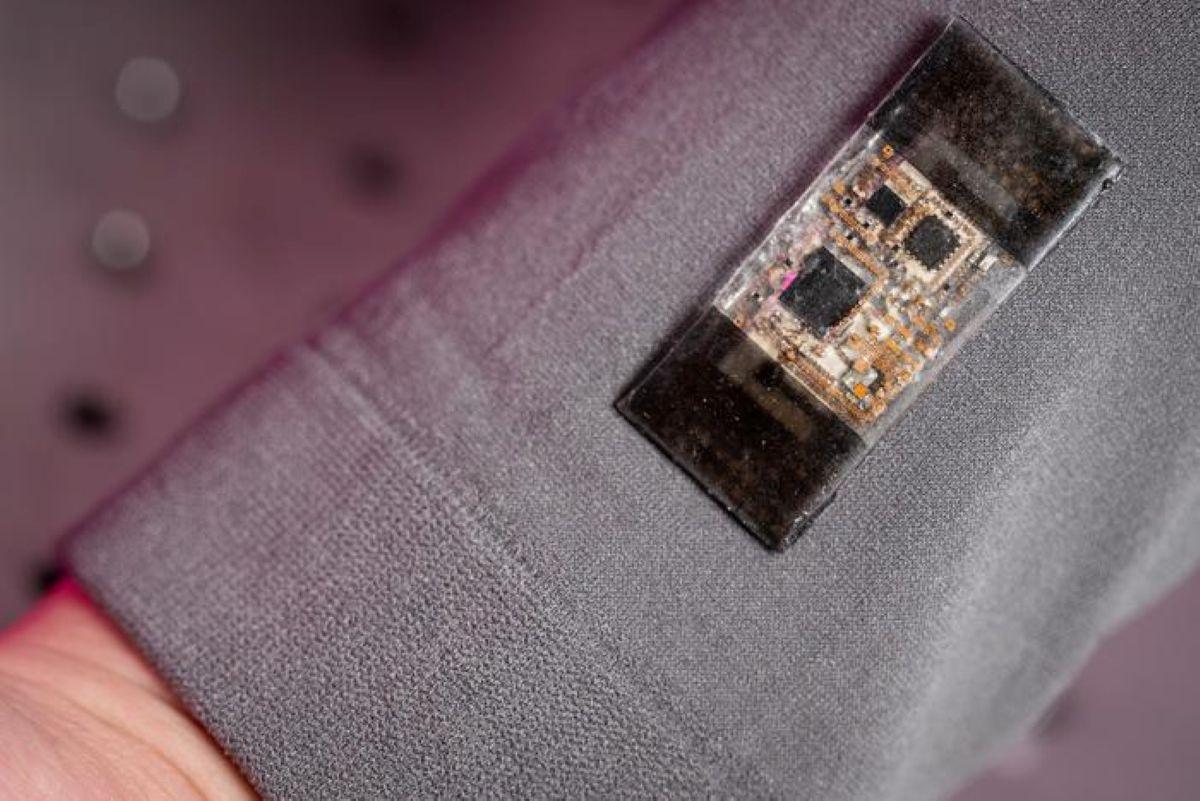

Credit: David Baillot/UC San Diego Jacobs School of Engineering

[3]

UC San Diego Engineers Create Wearable Patch That Controls Robots Even in Chaotic Motion

Enables gesture control for rehab, industry, and underwater tasks

UC San Diego engineers have designed a next-generation wearable system that lets people operate machines and robots with simple arm gestures, even while running or riding in a car. By combining stretchable, flexible sensors with AI, the patch removes motion noise in real time and recognizes gestures reliably in chaotic, high-motion environments. The work, published in the journal Nature Sensors, could help bring dependable gesture control into everyday life.

How the wearable works

According to the paper, the device is a soft electronic patch worn on the forearm. It integrates motion and muscle sensors, a small Bluetooth microcontroller, and a stretchable battery into a compact armband. The engineers trained a custom deep-learning model on gestures recorded during a variety of motions (running, shaking, and simulated ocean waves) so that it can strip away interference and correctly interpret gestures. When a gesture is made, the cleaned signal is translated into a command and sent to a connected machine, such as a robotic arm, in real time. According to the researchers, this moves the field closer to intuitive, robust human-machine interfaces.

Real-world applications

This motion-tolerant gesture interface has many potential uses. Patients in rehabilitation or people with limited mobility could use natural arm movements to drive robotic aids without relying on fine motor skills. Industrial workers and first responders could operate tools or robots hands-free in dynamic or hazardous settings. Divers could command underwater robots despite waves, and everyday gadgets could support more dependable gesture controls. By bridging human motion and machines, the technology could make robot control intuitive even in challenging environments.
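Source [3] describes training the model on gestures performed during running, shaking and simulated ocean waves, and the paper's abstract calls this a composite dataset of gestures and motion artefacts. The sources do not say how the two were combined. The Python sketch below assumes one simple approach: superimposing separately recorded motion-only segments onto clean gesture recordings at a few scales while keeping each gesture's label. The function names, scale factors and toy data are hypothetical.

```python
import random


def superimpose(gesture, artefact, scale):
    """Add a scaled motion-artefact segment to a clean gesture, sample by sample.

    Each recording is a list of samples; each sample is a list of channel
    values (for example, six IMU channels).
    """
    return [[g + scale * a for g, a in zip(g_sample, a_sample)]
            for g_sample, a_sample in zip(gesture, artefact)]


def build_composite_dataset(gestures, artefacts, scales=(0.5, 1.0), seed=0):
    """Return clean examples plus noisy copies; gesture labels are unchanged.

    gestures:  list of (recording, label) pairs recorded while still.
    artefacts: list of motion-only recordings (e.g. running, vibration, waves),
               each at least as long as the gesture recordings.
    """
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    dataset = list(gestures)
    for recording, label in gestures:
        for artefact in artefacts:
            max_offset = len(artefact) - len(recording)
            if max_offset < 0:
                raise ValueError("artefact recording shorter than gesture")
            # Random start so the artefact does not always line up the same way.
            start = rng.randint(0, max_offset)
            segment = artefact[start:start + len(recording)]
            for scale in scales:
                dataset.append((superimpose(recording, segment, scale), label))
    rng.shuffle(dataset)
    return dataset


# Hypothetical two-channel toy data.
clean = [([[1.0, 0.0], [1.0, 0.0]], "fist"),
         ([[0.0, 1.0], [0.0, 1.0]], "open")]
motion = [[[0.1, 0.1], [0.2, 0.2], [0.3, 0.3]]]
data = build_composite_dataset(clean, motion)
print(len(data))  # 2 clean + 2 gestures x 1 artefact x 2 scales = 6
```

Because every noisy copy keeps the label of its clean original, a classifier trained on the result is pushed to ignore the added motion. Real recordings made during motion need not decompose this neatly, so additive mixing is only an approximation.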


Researchers at UC San Diego have created a wearable patch that uses AI and stretchable electronics to enable reliable gesture control of machines and robots, even during high-motion activities such as running or in ocean turbulence.

Breakthrough in Motion-Tolerant Wearable Technology

Engineers at the University of California San Diego have developed a wearable system that lets users control machines and robots through simple gestures, even under significant motion disturbances. Published in Nature Sensors on November 17, the work combines stretchable electronics with artificial intelligence to address a long-standing challenge: recognizing gestures reliably outside controlled, stationary settings [1].

The research targets a critical limitation of wearable gesture control systems: their unreliability in real-world environments with motion interference. As study co-first author Xiangjun Chen, a postdoctoral researcher in the Aiiso Yufeng Li Family Department of Chemical and Nano Engineering, explained, wearable technologies with gesture sensors work fine when a user is sitting still, but the signals start to fall apart under excessive motion noise [2].

Technical Innovation and Design

The device consists of a soft electronic patch glued onto a cloth armband, combining motion and muscle sensors, a Bluetooth microcontroller, and a stretchable battery in a compact, multilayered system. Its key advance is the AI-based processing: a customized deep-learning framework filters out interference and interprets gestures in real time [1].
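The paper's abstract identifies the deep-learning framework as a convolutional neural network, but its layers are not described in these sources. The Python sketch below only illustrates what one 1D convolutional layer computes on a window of multichannel sensor data: each filter slides along time, takes a weighted sum across all channels, and applies a ReLU, after which global average pooling reduces each filter's output to a single feature. The filter weights and sizes are arbitrary placeholders, not values from the study.

```python
def conv1d_relu(window, kernels, biases):
    """'Valid' 1D convolution over time followed by ReLU.

    As in most deep-learning libraries, this is cross-correlation (the kernel
    is not flipped).
    window:  T samples, each a list of C channel values.
    kernels: F filters, each K time steps x C channels.
    biases:  F bias values.
    Returns F feature maps, each of length T - K + 1.
    """
    T = len(window)
    feature_maps = []
    for kernel, bias in zip(kernels, biases):
        K = len(kernel)
        fmap = []
        for t in range(T - K + 1):
            acc = bias
            for k in range(K):
                for c, w in enumerate(kernel[k]):
                    acc += w * window[t + k][c]
            fmap.append(max(0.0, acc))  # ReLU
        feature_maps.append(fmap)
    return feature_maps


def global_average_pool(feature_maps):
    """Reduce each feature map to its mean, giving one number per filter."""
    return [sum(fmap) / len(fmap) for fmap in feature_maps]


# Toy 4-sample, 2-channel window and two hand-picked 2-step filters (hypothetical).
window = [[1.0, 0.0], [2.0, 0.0], [3.0, 1.0], [4.0, 1.0]]
kernels = [
    [[1.0, 0.0], [-1.0, 0.0]],  # positive only when channel 0 decreases
    [[0.0, 1.0], [0.0, 1.0]],   # sums channel 1 over two steps
]
maps = conv1d_relu(window, kernels, biases=[0.0, 0.0])
print(maps)                       # [[0.0, 0.0, 0.0], [0.0, 1.0, 2.0]]
print(global_average_pool(maps))  # [0.0, 1.0]
```

A trained network stacks several such layers and learns the weights from data; the pooled features would then feed a classifier that outputs a gesture label.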


The system was trained using a comprehensive dataset that included various motion conditions, from running and shaking to simulated ocean wave movements. This training enables the device to strip away motion interference and accurately interpret user gestures, transmitting commands to control machines such as robotic arms with minimal latency [3].
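According to the paper's abstract, a sliding-window approach converts the extracted gesture signals into continuous, real-time control. The window length and step used in the study are not given in these sources, so the Python sketch below uses hypothetical values, and the `SlidingWindow` class is illustrative. It buffers incoming samples and, once the buffer is full, emits the latest `window` samples every `step` samples for classification. At a sampling rate of f_s samples per second, a new decision is available every step / f_s seconds; at a hypothetical 100 Hz with a step of 10 samples, that is every 0.1 s. A smaller step gives more frequent updates at the cost of more inference calls.

```python
from collections import deque


class SlidingWindow:
    """Emit overlapping windows from a stream of sensor samples.

    window: number of samples per window.
    step:   number of new samples between consecutive emitted windows.
    """

    def __init__(self, window, step):
        if not 0 < step <= window:
            raise ValueError("need 0 < step <= window")
        self.window = window
        self.step = step
        self.buffer = deque(maxlen=window)  # oldest samples drop out automatically
        self.since_last = 0

    def push(self, sample):
        """Add one sample; return a full window when one is due, else None."""
        self.buffer.append(sample)
        self.since_last += 1
        if len(self.buffer) == self.window and self.since_last >= self.step:
            self.since_last = 0
            return list(self.buffer)
        return None


# Integers stand in for multichannel samples to keep the output readable.
sw = SlidingWindow(window=4, step=2)
windows = [w for w in (sw.push(i) for i in range(10)) if w is not None]
print(windows)  # [[0, 1, 2, 3], [2, 3, 4, 5], [4, 5, 6, 7], [6, 7, 8, 9]]
```

Each emitted window would be passed to the classifier, and the resulting gesture label mapped to a robot command, so control updates arrive at a steady rate while consecutive windows overlap.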


Rigorous Testing and Validation

The research team tested the system across multiple dynamic conditions. Subjects used the device to control robotic arms while running, while exposed to high-frequency vibrations, and under combinations of these disturbances. The system was also validated at UC San Diego's Scripps Institution of Oceanography, where researchers used the Scripps Ocean-Atmosphere Research Simulator to recreate both laboratory-generated and real sea motion [2].

In all test scenarios, the system delivered accurate, low-latency performance, showing that it can function reliably in chaotic, high-motion environments that would typically render traditional gesture-based wearables ineffective.


Diverse Applications and Future Impact

The technology could find applications across several sectors. In healthcare, patients undergoing rehabilitation or individuals with limited mobility could use natural gestures to control robotic aids without relying on fine motor skills. In industry, workers in high-motion or hazardous environments could control tools and robots hands-free [1].

Military and underwater operations are another target. The project was originally inspired by the goal of helping military divers control underwater robots, and the system could enable divers and remote operators to command underwater robots despite turbulent ocean conditions. In consumer devices, it could make gesture-based controls more reliable in everyday settings [3].

Research Collaboration and Funding

The work resulted from a collaboration between the laboratories of Sheng Xu and Joseph Wang, both professors in the Aiiso Yufeng Li Family Department of Chemical and Nano Engineering at UC San Diego's Jacobs School of Engineering. To the researchers' knowledge, this is the first wearable human-machine interface that works reliably across a wide range of motion disturbances [2].

The project was supported by the Defense Advanced Research Projects Agency (DARPA) under contract number HR001120C0093.

Summarized by Navi
