Samsung gains ground in HBM4 race as Nvidia production ignites AI memory battle with SK Hynix, Micron

8 Sources

[1]

Samsung Electronics highlights progress in HBM4 chip supply

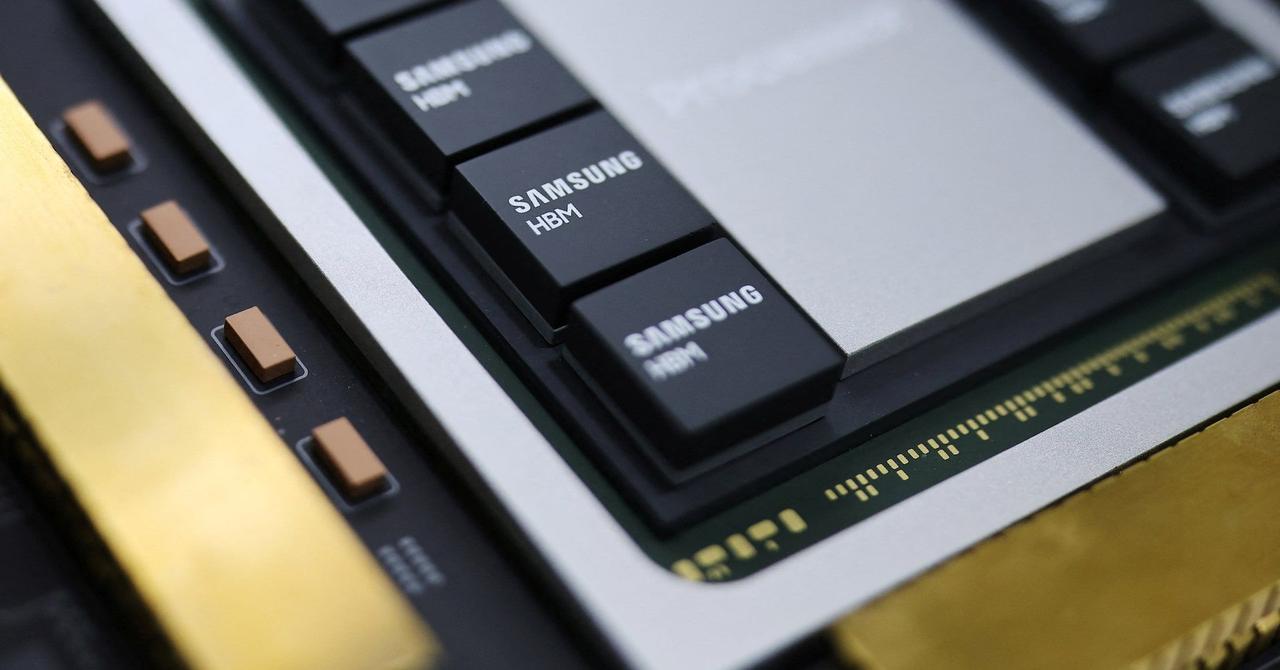

SEOUL, Jan 2 (Reuters) - Samsung Electronics (005930.KS) highlighted on Friday progress in the company's next-generation high-bandwidth memory (HBM) chips, or HBM4, saying customers have praised its competitiveness. Samsung Electronics' co-CEO Jun Young-hyun, who leads the chip division, said in a New Year address that HBM4 had drawn strong customer praise for its differentiated competitiveness, with some customers saying "Samsung is back." In October, Samsung said it was in "close discussion" to supply HBM4 to Nvidia (NVDA.O), as the South Korean chipmaker scrambles to catch up with rivals such as SK Hynix (000660.KS) in the AI chip race. Reporting by Heekyong Yang; Editing by Jacqueline Wong

[2]

Samsung pushes HBM4 as it battles SK Hynix for AI memory dominance

In a nutshell: Samsung Electronics entered 2026 with renewed momentum in the memory market, signaling growing customer confidence in its next-generation high-bandwidth memory, known as HBM4. In a New Year's address reviewed by Reuters, the company's chip chief and co-CEO, Jun Young-hyun, said customers have praised the performance of the new chips, adding that "Samsung is back." The comments come as Samsung works to regain its competitive edge in the artificial intelligence hardware market, a segment recently dominated by rival SK Hynix. In October, Samsung confirmed it was in "close discussions" with Nvidia to supply HBM4 components - a significant development as the US AI leader expands procurement of high-performance memory for training and inference workloads. HBM4 chips are expected to deliver faster data transfer rates and improved power efficiency. Jun said the positive reception reflects Samsung's improving market position but acknowledged that more work remains to strengthen the product's competitiveness. He also described the company's foundry business as being "primed for a great leap forward" following new production contracts with global customers. One of those deals was a $16.5 billion agreement signed in July with Tesla. Across South Korea's chip industry, however, 2026 is shaping up to be a year of heightened competition. SK Hynix CEO Kwak Noh-Jung told employees that strong demand for AI chips arrived faster than anticipated last year, boosting the company's sales, but warned that this year's environment would be "tougher than last year." He said AI growth is now a base assumption rather than a pleasant surprise, adding that continued "bolder investment and effort" will be required to prepare for the industry's next phase.
Data from Counterpoint Research shows that in the third quarter of 2025, SK Hynix held a commanding 53 percent share of the HBM market, followed by Samsung at 35 percent and Micron at 11 percent. In a separate address, Samsung co-CEO TM Roh, who leads the company's device experience division encompassing smartphones, TVs, and appliances, cautioned that the broader operating environment is growing more uncertain. He cited rising component prices and global tariff barriers as emerging risks that could challenge profitability across divisions. Even as the AI hardware race accelerates, both Samsung and SK Hynix face a year that will test whether their heavy investments in high-performance memory and advanced fabrication can secure long-term leadership in the global chip landscape.

[3]

Nvidia's Vera Rubin enters full production, igniting Micron's HBM4 capacity bet for 2026

When Nvidia CEO Jensen Huang confirmed at CES 2026 that its next-generation AI processor, Vera Rubin, had entered full production, the message to the memory industry was immediate. The move effectively ignited a new competitive cycle in sixth-generation high-bandwidth memory (HBM4), as suppliers race to lock in design wins for Nvidia's post-Blackwell platforms.

Micron targets 30% HBM4 capacity share

Among the suppliers, Micron Technology is emerging as the most aggressive mover. South Korean industry sources cited by ETNews say the US memory maker plans to lift HBM4 capacity to 15,000 wafers per month in 2026. Based on Korean securities estimates that Micron's total HBM output is about 55,000 wafers per month, HBM4 would represent roughly 30% of overall capacity, signaling a decisive shift toward next-generation products. Industry insiders say Micron has already begun equipment investments, positioning itself to respond quickly as early HBM4 demand materializes. The timing aligns with Nvidia's confirmation that Vera Rubin has moved beyond sampling and validation into full-scale production.

Samsung and SK Hynix join the race

Micron will not be alone. Samsung and SK Hynix are also preparing HBM4 for Vera Rubin, with all three suppliers' products currently under evaluation by Nvidia as supply schedules and deployment timelines are coordinated. Market expectations point to February 2026 as the start of large-scale HBM4 co-supply for Nvidia platforms. Historically, Micron has trailed its South Korean rivals in HBM capacity. The 2026 expansion is widely seen as an attempt at a strategic reversal, pairing scale with its long-standing strength in low-power memory design.

Ramp-up begins in second quarter 2026

That direction had already surfaced in Micron's guidance. During its December 17, 2025, earnings call, CEO Sanjay Mehrotra said the company would begin ramping HBM4 output from the second quarter of 2026, adding that yield improvement is progressing faster than HBM3E. With several new fabs already incorporated into its roadmap, Micron expects momentum to accelerate toward the end of 2026. Micron's confidence has been building since late last year. As disclosed during its fiscal first-quarter earnings briefing and reported by ZDNet Korea, the company has expanded its HBM customer base to three major clients while preparing for full-scale HBM4 production in 2026 after skipping HBM3. Executives have also cited strong customer feedback on its 12-high HBM3E, supplied to Nvidia's Blackwell accelerators, highlighting lower power consumption as a key differentiator as AI systems scale.

Early HBM4 supply already contracted

Crucially, Micron has indicated that near-term HBM supply is already fully contracted. According to disclosures cited by The Elec, the company has finalized price and volume agreements for upcoming HBM shipments, including early HBM4, and says its HBM4 exceeds 11 gigabits per second (Gbps), outperforming baseline JEDEC specifications and Nvidia's operating targets while stabilizing yields faster than the previous generation. Taken together, Nvidia's move to full production on Vera Rubin and Micron's capacity-first HBM4 push mark a clear inflection point for the AI memory market. Whether Micron's mix of low-power design, early customer lock-in, and aggressive scale-up can truly challenge Samsung and SK Hynix will become clearer as HBM4 volumes ramp through 2026. What is already clear is that in the HBM4 era, capacity is no longer a background variable. It is a frontline competitive weapon.

[4]

SK hynix showcases next-gen 48GB HBM4 at 11.7Gbps, SOCAMM2, LPDDR6 for AI platforms

TL;DR: SK hynix unveiled advanced AI-focused memory solutions at CES 2026, including the ultra-fast 48GB 16-Hi HBM4 with 2TB/sec bandwidth, new low-power SOCAMM2 modules for AI servers, and power-efficient LPDDR6 optimized for on-device AI, highlighting its leadership in next-generation AI memory technology. SK hynix showcased its next-gen memory solutions for AI at CES 2026, showing off its new 48GB HBM4, LPDDR6, SOCAMM2, and more for AI platforms of the future. SK hynix showed off its next-gen 16-Hi HBM4 with 48GB, a newer HBM4 that will succeed the upcoming 12-Hi HBM4 with 36GB arriving this year. The faster 16-Hi HBM4 48GB modules are bloody fast, with 2TB/sec of memory bandwidth per stack, and are destined for NVIDIA's next-gen Vera Rubin AI platform. The company had its new 16-Hi HBM4 48GB running at the industry's fastest speed of 11.7Gbps; the part is still under development at SK hynix and will be released in the nearish future. SK hynix also has its new SOCAMM2 memory modules at CES 2026, a low-power memory module specialized for AI servers, which the company is using to demonstrate the competitiveness of its diverse product portfolio "in response to the rapidly growing demand for AI servers". Another new product showcased at CES 2026 is SK hynix's new LPDDR6 memory, optimized for on-device AI, offering huge data processing speed increases, as well as power efficiency gains compared to the previous-gen LPDDR5 standard.

[5]

Samsung Electronics says customers praised competitiveness of HBM4 chip

Samsung says customers are impressed by its new HBM4 memory chips, helping restore confidence in its AI credentials and lifting its share price. The company is also gaining momentum in contract chipmaking, backed by big global deals. However, executives warn that 2026 will bring higher costs and trade risks. Samsung Electronics customers have praised the differentiated competitiveness of its next-generation high-bandwidth memory (HBM) chips, or HBM4, saying "Samsung is back", co-CEO and chip chief Jun Young-hyun said in a New Year address. Shares of Samsung Electronics rose as much as 3.8% in morning trade, outpacing the benchmark KOSPI's 1.1% rise. In October, Samsung said it was in "close discussion" to supply its HBM4 to U.S. artificial intelligence leader Nvidia, as the South Korean chipmaker scrambles to catch rivals including compatriot SK Hynix in AI chips. "On HBM4 in particular, customers have even stated that 'Samsung is back'," Jun said in remarks reviewed by Reuters, adding that the company still had work to do to further improve competitiveness. Turning to its foundry business, which manufactures chips designed by customers, Jun said recent supply deals with major global customers had left the foundry business "primed for a great leap forward." In July, Samsung Electronics signed a $16.5 billion deal with Tesla. In a separate address, Samsung Electronics' co-CEO TM Roh, who also heads the company's device experience division overseeing its mobile phone, TV and home appliance businesses, warned that 2026 was expected to bring greater uncertainty and risks, citing rising component prices and global tariff barriers. "To position ourselves to maintain a competitive advantage in any situation, we will reinforce our core competitiveness through proactive supply chain diversification and optimisation of global operations to address issues like component sourcing and pricing, and global tariff risks," Roh said.

[6]

"Samsung is Back in the HBM Race", Says Co-CEO, as the Korean Giant Is Poised to Reclaim Its Lost Dominance with HBM4

Samsung's HBM business is reportedly looking significantly stronger compared to where it was a few quarters ago, as the Korean giant's HBM4 is slated to be the most potent solution available. Samsung has been experiencing uncertainty with its DRAM business over the years, and despite being the dominant supplier among all other competitors for decades, the company saw a slowdown once HBM demand started to rise. In particular, with HBM3, Samsung initially failed to secure certification from key clients like AMD and NVIDIA due to issues with DRAM yield rates and thermal concerns. However, following a significant internal shift in strategy, the Korean giant is now back on track. In its New Year's address, Samsung's co-CEO and chip chief, Jun Young-hyun, stated that the company has seen optimism from external clients regarding HBM4, implying that the firm is looking to capitalize on the demand from the AI industry. Samsung's lead in HBM4 is primarily due to the company having initiated 1c DRAM development ahead of its competitors, and more importantly, it has worked with partners like NVIDIA to secure its position in the supply chain.

"On HBM4 in particular, customers have even stated that 'Samsung is back'," Jun said in remarks reviewed by Reuters, adding that the company still had work to do to further improve competitiveness. - Reuters

Samsung is known to have HBM4 modules with the industry's fastest pin speeds rated at 11 Gbps, and this is one of the primary reasons why customers like NVIDIA are interested in the solution. Given the company's extensive DRAM supply lines and an internal strategy to ensure competitive HBM4 pricing, Samsung has managed to gain a competitive edge among its rivals. According to Bernstein's HBM modeling (shared by Jukan on X), Samsung's market share is expected to surpass SK hynix's by 2027, after losing the lead in the last quarter. Given the enormity of DRAM demand, all memory suppliers are poised to benefit from the order flow coming from AI customers, which is why companies like Micron, SK hynix, and Samsung are optimistic about their future revenues.

[7]

SK hynix unveils 16-high 48GB HBM4 - The Korea Times

An image of SK hynix's booth at CES 2026, scheduled for Tuesday through Friday (local time) in Las Vegas / Courtesy of SK hynix

SK hynix will unveil its next-generation artificial intelligence (AI) memory solutions at CES 2026 Tuesday, showcasing its latest high-bandwidth memory (HBM) product, a 16-high 48-gigabyte HBM4, for the first time in the world. The latest memory chip is a successor to the 12-high 36-gigabyte HBM4 that delivers a data rate of 11.7 gigabits per second. The chip development is on track to meet customer timelines. Sixteen-high HBM4 is widely viewed as a major technical achievement, as stacking 16 layers dramatically increases manufacturing difficulty compared with 12-high products. That is because 16-high chips require thinner spacing between DRAM dies, tighter control of wafer warpage and much more precise alignment and interconnect bonding. They also require more complex thermal management. At its CES 2026 booth, the company also displayed a graphics processing unit (GPU) module equipped with 12-high 36-gigabyte HBM3E for Nvidia's latest AI server units to demonstrate how the memory operates within a real AI environment. The 36-gigabyte 12-high HBM3E is SK hynix's flagship product currently leading the global market. "Under the theme 'Innovative AI, Sustainable Tomorrow,' the company plans to showcase a broad range of AI-optimized memory technologies," the company said. "We will closely engage with global customers to create new value together in the AI era." Along with HBMs, the company presented the small outline compression attached memory module 2 (SOCAMM2), a low-power memory module optimized for AI servers, along with a lineup of general-purpose memory products for AI workloads. The lineup includes low-power double data rate 6 (LPDDR6), which significantly improves data processing speed and power efficiency for on-device AI applications.
SK hynix also introduced a 2-terabit quadruple-level cell-based 321-layer NAND product optimized for ultrahigh‑capacity solid‑state drives, a segment seeing surging demand amid the expansion of AI data centers. The product offers one of the highest storage densities currently available and delivers substantially improved performance and power efficiency over prior generations, making it ideal for power-sensitive AI data center environments, SK hynix said.

[8]

Samsung Electronics highlights progress in HBM4 chip supply

SEOUL, Jan 2 (Reuters) - Samsung Electronics highlighted on Friday progress in the company's next-generation high-bandwidth memory (HBM) chips, or HBM4, saying customers have praised its competitiveness. Samsung Electronics' co-CEO Jun Young-hyun, who leads the chip division, said in a New Year address that HBM4 had drawn strong customer praise for its differentiated competitiveness, with some customers saying "Samsung is back." In October, Samsung said it was in "close discussion" to supply HBM4 to Nvidia, as the South Korean chipmaker scrambles to catch up with rivals such as SK Hynix in the AI chip race. (Reporting by Heekyong Yang; Editing by Jacqueline Wong)

Samsung Electronics says customers praised its HBM4 chips, declaring 'Samsung is back' as the chipmaker fights to reclaim AI memory leadership. Meanwhile, Nvidia's Vera Rubin platform enters full production, triggering an aggressive capacity race among memory suppliers targeting next-generation high-bandwidth memory dominance.

Samsung Electronics Gains Customer Praise for HBM4 Competitiveness

Samsung Electronics has signaled a comeback in the AI memory market after customers praised the differentiated competitiveness of its next-generation high-bandwidth memory chips, known as HBM4. In a New Year address, co-CEO and chip chief Jun Young-hyun revealed that some customers have stated "Samsung is back," marking a critical confidence boost for the South Korean chipmaker [1]. The company's shares rose as much as 3.8% in morning trade following the announcement, outpacing the benchmark KOSPI's 1.1% rise [5]. Samsung confirmed in October that it was in close discussion to supply HBM4 to Nvidia, the U.S. artificial intelligence leader, as it scrambles to catch rivals including compatriot SK Hynix in the AI chip race [1].

Source: Reuters

Nvidia's Vera Rubin Production Ignites AI Memory Battle

When Nvidia CEO Jensen Huang confirmed at CES 2026 that its next-generation AI processor, Vera Rubin, had entered full production, the message to the memory industry was immediate [3]. The move effectively ignited a new competitive cycle in sixth-generation high-bandwidth memory, as memory suppliers race to lock in design wins for Nvidia's post-Blackwell platforms. Samsung, SK Hynix, and Micron are all preparing HBM4 for Vera Rubin, with all three suppliers' products currently under evaluation by Nvidia as supply schedules and deployment timelines are coordinated [3]. Market expectations point to February 2026 as the start of large-scale HBM4 co-supply for Nvidia platforms, marking a critical inflection point for AI memory dominance.

Micron Targets 30% HBM4 Capacity Share in Aggressive Push

Micron Technology is emerging as the most aggressive mover in the HBM4 race, with South Korean industry sources indicating the U.S. memory maker plans to lift HBM4 capacity to 15,000 wafers per month in 2026 [3]. Based on Korean securities estimates that Micron's total HBM output is about 55,000 wafers per month, HBM4 would represent roughly 30% of overall capacity, signaling a decisive shift toward next-generation products. During its December 17, 2025, earnings call, Micron CEO Sanjay Mehrotra said the company would begin ramping HBM4 output from the second quarter of 2026, adding that yield improvement is progressing faster than HBM3E [3]. Crucially, Micron has indicated that near-term HBM supply is already fully contracted, with the company finalizing price and volume agreements for upcoming HBM shipments, including early HBM4. Micron's HBM4 exceeds 11 gigabits per second (Gbps), outperforming baseline JEDEC specifications and Nvidia's operating targets while stabilizing yields faster than the previous generation [3].

Source: DIGITIMES
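The capacity-share figure in this section is simple arithmetic on the two wafer estimates cited. A minimal Python sketch, using only the 15,000 and 55,000 wafers-per-month figures reported in the text (the function and variable names are illustrative, not from any source), suggests the ratio works out to about 27%, which the reports round to roughly 30%:

```python
def capacity_share(part_wafers: int, total_wafers: int) -> float:
    """Return part_wafers as a fraction of total_wafers."""
    if total_wafers <= 0:
        raise ValueError("total_wafers must be positive")
    return part_wafers / total_wafers


# Figures as reported in the text (wafers per month, 2026 estimates).
HBM4_WAFERS_PER_MONTH = 15_000
TOTAL_HBM_WAFERS_PER_MONTH = 55_000

share = capacity_share(HBM4_WAFERS_PER_MONTH, TOTAL_HBM_WAFERS_PER_MONTH)

if __name__ == "__main__":
    print(f"HBM4 share of Micron HBM capacity: {share:.1%}")
```

Both inputs are third-party estimates, so the result is best read as an order-of-magnitude check rather than a precise share.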

SK Hynix Showcases 48GB HBM4 with Industry-Leading Bandwidth

SK Hynix, which currently dominates the AI memory market, unveiled advanced AI-focused memory solutions at CES 2026, including the ultra-fast 48GB 16-Hi HBM4 with 2TB/sec bandwidth [4]. The company demonstrated its 16-Hi HBM4 48GB modules running at the industry's fastest speed of 11.7Gbps, destined for Nvidia's Vera Rubin AI platform. Data from Counterpoint Research shows that in the third quarter of 2025, SK Hynix held a commanding 53 percent share of the HBM market, followed by Samsung at 35 percent and Micron at 11 percent [2]. However, SK Hynix CEO Kwak Noh-Jung warned employees that 2026's environment would be "tougher than last year," noting that AI growth is now a base assumption rather than a pleasant surprise, and continued "bolder investment and effort" will be required [2]. SK Hynix also showcased new SOCAMM2 memory modules specialized for AI servers and LPDDR6 memory optimized for on-device AI, offering huge data processing speed increases and power efficiency gains [4].

Source: Korea Times
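Per-stack bandwidth follows from the per-pin data rate and the width of the memory interface. The sketch below assumes a 2,048-bit interface per stack, the width defined in JEDEC's HBM4 standard; that width is not stated in the sources summarized here, so treat it as an assumption. It also assumes stack capacity is split evenly across the DRAM dies. Function and parameter names are illustrative.

```python
def stack_bandwidth_gb_per_s(pin_rate_gbps: float, interface_bits: int = 2048) -> float:
    """Peak per-stack bandwidth in GB/s.

    pin_rate_gbps: per-pin data rate in gigabits per second.
    interface_bits: number of data pins per stack (assumed 2048 for HBM4).
    """
    return pin_rate_gbps * interface_bits / 8  # 8 bits per byte


def capacity_per_die_gb(stack_capacity_gb: float, dies: int) -> float:
    """Capacity of one DRAM die, assuming an even split across the stack."""
    return stack_capacity_gb / dies


if __name__ == "__main__":
    # 8 Gbps is used here as a baseline rate; 11 and 11.7 Gbps are the
    # pin speeds quoted in the text.
    for rate in (8.0, 11.0, 11.7):
        tb_per_s = stack_bandwidth_gb_per_s(rate) / 1000
        print(f"{rate} Gbps per pin -> {tb_per_s:.2f} TB/s per stack")

    for capacity_gb, dies in ((36, 12), (48, 16)):
        print(f"{dies}-high {capacity_gb}GB -> {capacity_per_die_gb(capacity_gb, dies)} GB per die")
```

Under these assumptions, 8 Gbps per pin gives about 2 TB/s per stack, in line with the 2TB/sec figure quoted in this section, while 11.7 Gbps would work out to roughly 3 TB/s. Both the 12-high 36GB and 16-high 48GB configurations imply 3 GB per DRAM die.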

Foundry Business and Supply Chain Challenges Ahead

Beyond memory, Samsung's foundry business is also gaining momentum. Jun Young-hyun said recent supply deals with major global customers had left the foundry business "primed for a great leap forward" [5]. In July, Samsung Electronics signed a $16.5 billion deal with Tesla [2]. However, Samsung co-CEO TM Roh, who heads the company's device experience division overseeing mobile phone, TV, and home appliance businesses, warned that 2026 was expected to bring greater uncertainty and risks, citing rising component prices and global tariff barriers [5]. Roh emphasized the need for proactive supply chain diversification and optimization of global operations to address issues like component sourcing and pricing.

What This Means for the AI Chip Market

The HBM4 race matters because high-bandwidth memory has become a critical bottleneck in AI system performance. As training and inference workloads scale, faster data transfer rates and improved power efficiency are essential for next-generation AI platforms. For Samsung, regaining customer confidence after lagging behind SK Hynix represents a strategic imperative: losing ground in AI memory could have long-term implications for the chipmaker's position in the broader semiconductor industry. For Micron, the aggressive capacity expansion represents a strategic reversal, pairing scale with its long-standing strength in low-power memory design [3]. Whether Micron's mix of low-power design, early customer lock-in, and aggressive scale-up can truly challenge Samsung and SK Hynix will become clearer as HBM4 volumes ramp through 2026. What is already clear is that in the HBM4 era, capacity is no longer a background variable; it is a frontline competitive weapon [3].
