SK hynix Showcases Advanced AI Memory Solutions at CES 2025, Including 16-layer HBM3E and 122TB SSD

6 Sources

[1]

SK hynix to showcase 16-layer HBM3E, 122TB enterprise SSD, LPCAMM2, and more at CES

Apart from its latest HBM technology, the company will also unveil new solutions for enterprises and AI-driven components. Leading South Korean memory manufacturer SK hynix announced that it will showcase a suite of advanced memory solutions tailored for artificial intelligence (AI) applications at this year's Consumer Electronics Show (CES) in Las Vegas. Building upon its 12-layer High Bandwidth Memory (HBM) technology, the company will display samples of its latest 16-layer HBM3E products, officially announced in November 2024. The product uses an advanced MR-MUF process to enhance thermal performance and mitigate chip warping. With a capacity of 48GB per stack (3GB per individual die), the increased density will allow AI accelerators to use up to 384GB of HBM3E memory in an 8-stack configuration. The 16-layer HBM3E is designed to boost AI learning performance by up to 18% and inference performance by up to 32% compared to the 12-layer version. Nvidia's next-gen Rubin chips are slated for mass production later next year and will be based on HBM4, so HBM3E could be short-lived. That shouldn't be a concern, though, as reports indicate that SK hynix reached the tape-out phase for HBM4 in October 2024. Addressing the escalating demand for high-capacity storage in AI data centers, SK hynix will also introduce new SSD solutions for enterprise users, including the 122TB 'D5-P5336' enterprise SSD, developed by its subsidiary Solidigm. This model is said to offer the highest capacity currently available in its category. The memory and storage manufacturer will also talk about Compute Express Link (CXL) and Processing-In-Memory (PIM) technologies, which are said to be pivotal to the next generation of data center infrastructure.
Modularized solutions like the CMM-Ax and AiMX will be featured, with the CMM-Ax being hailed as a groundbreaking solution that combines the scalability of CXL with computational capabilities, boosting performance and energy efficiency for next-generation server platforms. With on-device AI becoming a popular trend, SK hynix also plans to showcase 'LPCAMM2' and 'ZUFS 4.0,' designed to enhance data processing speed and power efficiency in edge devices such as PCs and smartphones. These innovations aim to facilitate the integration of AI capabilities directly into consumer electronics, broadening the scope of AI applications. The company announced last year that it was also working on a range of other products, including PCIe 6.0 SSDs, high-capacity QLC (Quad Level Cell) eSSDs made specifically for AI servers, and UFS 5.0 for mobile devices. SK hynix is also working on an LPCAMM2 module and soldered LPDDR5/6 memory using its 1cnm-node to power laptops and handheld consoles.

[2]

SK hynix preparing full stack of AI memory provider vision at CES 2025, leads with HBM3E, HBM4

TL;DR: SK hynix will showcase its AI memory technologies at CES 2025, featuring solutions for on-device AI and next-generation AI memories. The company aims to highlight its technological competitiveness as a Full Stack AI Memory Provider. It will present products like HBM, eSSD, and innovations in data processing and power efficiency for edge devices. SK hynix has teased that it will be showcasing its AI memory technologies at CES 2025, with a number of C-level executives, including the CEO, in attendance. SK hynix Chief Marketing Officer, Justin Kim, said: "We will broadly introduce solutions optimized for on-device AI and next-generation AI memories, as well as representative AI memory products such as HBM and eSSD at this CES. Through this, we will publicize our technological competitiveness to prepare for the future as a Full Stack AI Memory Provider". Ahn Hyun, Chief Development Officer at SK hynix, said: "As SK hynix succeeded in developing QLC (Quadruple Level Cell)-based 61 TB products in December, we expect to maximize synergy based on a balanced portfolio between the two companies in the high-capacity eSSD market". The company will also showcase on-device AI products such as LPCAMM2 and ZUFS 4.0, which improve data processing speed and power efficiency to implement AI in edge devices like PCs and smartphones. The company will also present CXL and PIM (Processing in Memory) technologies, along with modularized versions, CMM (CXL Memory Module)-Ax and AiMX, designed to be core infrastructures for next-generation data centers.

[3]

SK hynix to showcase 16-layer HBM3e chip at CES 2025

An artist's impression of SK hynix's exhibition at the Consumer Electronics Show 2025 / Courtesy of SK hynix

By Nam Hyun-woo

SK hynix will showcase samples of its most advanced high-bandwidth memory (HBM) chips for artificial intelligence (AI) processors at the upcoming Consumer Electronics Show (CES) 2025, with the chipmaker's top executives set to promote the company's capability as "a full-stack AI memory provider" to visitors. According to SK hynix on Friday, CEO Kwak Noh-jung, Chief Marketing Officer Kim Ju-seon and a number of other C-level executives will attend CES 2025, slated for Jan. 7 to Jan. 10 in Las Vegas. During the event, the company will exhibit samples of its 16-layer HBM3e product, which offers the highest capacity (48 gigabytes) and the highest stack configuration, with 16 layers. In November, SK hynix unveiled the chip as a world first and expressed confidence that its yield would match that of the 12-layer HBM3e, the most advanced model currently in mass production. The 16-layer HBM3e is manufactured through an advanced mass reflow-molded underfill process, enabling the 16-layer stack while effectively controlling chip warpage and maximizing thermal performance. The company said the process will allow it to achieve a 20-layer stack without hybrid bonding, a next-generation technology for bonding stacked chips. Alongside HBM3e, attention is on whether the company will offer a glimpse of its next-generation HBM4.
In a press release, Kwak said "AI-driven transformation is expected to accelerate further this year" and the company plans to "begin mass production of sixth-generation HBM (HBM4) in the second half of this year, leading the customized HBM market by meeting diverse customer demands." HBM4 has twice as many data transfer channels as HBM3e, enabling faster data transfer speeds and higher memory capacity. It will also be customized to meet specific workloads depending on clients' demands. SK hynix has been working on HBM4's development with the goal of finishing its design last year. As Kwak noted, the development is progressing in line with the company's plans, and CES 2025 could be a stage to drop hints to secure customers in the early stage.

SK hynix's 12-layer HBM3e / Courtesy of SK hynix

Along with HBMs, the company will also showcase high-capacity, high-performance enterprise SSDs (eSSDs), which are in high demand for AI data centers. Among the products on display will be the 122 terabyte D5-P5336, developed by SK hynix's subsidiary Solidigm in November last year. This SSD offers the highest storage capacity currently available, along with superior power and space efficiency. For on-device AI services available for smartphones or laptops, SK hynix will display LPCAMM2 and ZUFS 4.0, which excel in power and space efficiency compared to existing models. Also on display will be a memory module based on Compute Express Link technology, which is a unified interface technology that connects multiple devices such as central processing units and graphics processing units and offers greater scalability. "During the event, we plan to showcase a wide range of next-generation AI memory solutions, including flagship AI memory products such as HBM and eSSD, as well as solutions optimized for on-device AI," Kim said. "Through this, we aim to widely promote our technological competitiveness as a full-stack AI memory provider."

[4]

SK hynix will reportedly show off 16-Hi HBM3E memory chips for new AI GPUs at CES 2025

TL;DR: SK hynix will showcase its new 16-Hi HBM3E memory chips at CES 2025, featuring up to 48GB capacity. These chips use an advanced manufacturing process to enhance performance and control warpage. SK hynix aims to lead the AI memory market with innovations like HBM4, addressing diverse customer needs and future technological advancements. SK hynix plans to show off prototypes of its new AI memory chips at CES 2025 this week, including 16-Hi HBM3E memory that stacks 16 DRAM dies on top of one another for up to 48GB of capacity per stack, the highest available. The new 16-Hi HBM3E memory chips started making waves in November 2024 when SK hynix said they were in development, with confidence that the yields would be as good as those of 12-layer HBM3E, the most advanced model in mass production. SK hynix's new 16-Hi HBM3E memory chips will be manufactured using an advanced mass reflow-molded underfill process, enabling the 16-layer stack while effectively controlling chip warpage and maximizing thermal performance. SK hynix has also said that it can reach 20-layer stacks with the process without hybrid bonding, a next-generation technology for bonding stacked chips. SK hynix Chief Marketing Officer, Justin Kim, said: "We will broadly introduce solutions optimized for on-device AI and next-generation AI memories, as well as representative AI memory products such as HBM and eSSD at this CES. Through this, we will publicize our technological competitiveness to prepare for the future as a Full Stack AI Memory Provider".

[5]

SK hynix seeks to redefine AI memory technology, solidify HBM leadership at CES 2025

SK Group's exhibition booth at CES 2025 at the Las Vegas Convention Center / Courtesy of SK Group

With the recent boom in artificial intelligence, Korean chip giant SK hynix Inc. has emerged as one of the most influential players in the global semiconductor industry, especially in the high bandwidth memory (HBM) chip sector. Since developing the first HBM chips in 2013, known for their vertical interconnection of multiple DRAM chips, SK hynix has far outpaced its rivals, including Samsung Electronics Co., in producing fifth-generation HBM3E products. SK hynix's confidence as a market leader in the HBM sector is on full display at CES 2025, one of the world's largest tech shows, which will kick off Tuesday in Las Vegas. At its joint exhibition booth with other SK Group affiliates, including SK Telecom Co., under the theme "Innovative AI, Sustainable Tomorrow," SK hynix highlights its lineup of AI memory chips, such as HBM and enterprise solid-state drives (eSSDs). Notable products include the D5-P5336, a 122-terabyte drive developed by its U.S. subsidiary Solidigm in November 2024, which is the world's highest-capacity eSSD, along with LPCAMM2 and ZUFS 4.0 on-device AI memory chips. SK hynix also showcases technologies such as Compute Express Link (CXL), a next-generation interface, and processing-in-memory (PIM), an advanced AI accelerator. The centerpiece of the exhibit is a sample of its 16-layer HBM3E chip, the most advanced HBM technology to date, officially introduced in November 2024. This marks the first time the fingernail-sized chip has been displayed to the public outside Korea. This comprehensive lineup underscores SK hynix's ambition to position itself as a "full-stack AI memory provider," offering a broad array of AI-centric memory products and technologies.
At the same time, SK hynix's memory chips are essential for AI data centers, which require advanced computational power, high-speed storage and low-latency networking to train and operate AI models efficiently. "Our capabilities in AI data centers are a solid foundation for further development of SK hynix and the Korean memory industry as a whole," Park Myoung-soo, vice president of SK hynix for U.S. and European sales, told reporters during a press event at the Las Vegas Convention Center on Monday. "We are confident that SK hynix will continue to contribute to the Korean semiconductor business and global AI development in the coming years." (Yonhap)

[6]

SK hynix to Unveil 'Full Stack AI Memory Provider' Vision at CES 2025 By Investing.com

A large number of C-level executives, including CEO Kwak Noh-jung, CMO (Chief Marketing Officer) Justin Kim and Chief Development Officer (CDO) Ahn Hyun, will attend the event. "We will broadly introduce solutions optimized for on-device AI and next-generation AI memories, as well as representative AI memory products such as HBM and eSSD at this CES," said Justin Kim. "Through this, we will publicize our technological competitiveness to prepare for the future as a 'Full Stack AI Memory Provider'."

Full Stack AI Memory Provider: Refers to an all-round AI memory provider, which provides comprehensive AI-related memory products and technologies.

SK hynix will also run a joint exhibition booth with SK Telecom (NYSE:SKM), SKC and SK Enmove, under the theme "Innovative AI, Sustainable Tomorrow." The booth will showcase how SK Group's AI infrastructure and services are transforming the world, represented in waves of light. SK hynix, which was the first in the world to produce fifth-generation 12-layer HBM products and supply them to customers, will showcase samples of HBM3E 16-layer products, whose development was officially announced in November last year. This product uses the advanced MR-MUF process to achieve the industry's highest 16-layer configuration while controlling chip warpage and maximizing heat dissipation performance. In addition, the company will display high-capacity, high-performance enterprise SSD products, including the 'D5-P5336' 122TB model developed by its subsidiary Solidigm in November last year. This product, which offers the largest capacity currently available along with high power and space efficiency, has been attracting considerable interest from AI data center customers. "As SK hynix succeeded in developing QLC (Quadruple Level Cell)-based 61TB products in December, we expect to maximize synergy based on a balanced portfolio between the two companies in the high-capacity eSSD market," said Ahn Hyun, CDO at SK hynix.

QLC: NAND flash is divided into SLC (Single Level Cell), MLC (Multi Level Cell), TLC (Triple Level Cell), QLC (Quadruple Level Cell), and PLC (Penta Level Cell) depending on how much information is stored in one cell. As the amount of information stored per cell increases, more data can be stored in the same area.

The company will also showcase on-device AI products such as 'LPCAMM2' and 'ZUFS 4.0,' which improve data processing speed and power efficiency to implement AI in edge devices like PCs and smartphones. The company will also present CXL and PIM (Processing in Memory) technologies, along with modularized versions, CMM (CXL Memory Module)-Ax and AiMX, designed to be core infrastructures for next-generation data centers. In particular, CMM-Ax is a groundbreaking product that adds computational functionality to CXL's advantage of expanding high-capacity memory, contributing to improved performance and energy efficiency in next-generation server platforms.

Low Power Compression Attached Memory Module 2 (LPCAMM2): LPDDR5X-based module solution that provides power efficiency and high performance as well as space savings. One LPCAMM2 can replace two existing DDR5 SODIMMs in terms of performance.

Zoned Universal Flash Storage (ZUFS): A NAND flash product that improves the efficiency of data management. The product optimizes data transfer between an operating system and storage devices by storing data with similar characteristics in the same zone of the UFS, a flash memory product for various electronic devices such as digital cameras and mobile phones.

Accelerator-in-Memory based Accelerator (AiMX): SK hynix's accelerator card product that specializes in large language models using GDDR6-AiM chips.

Platform: Refers to a computing system that integrates both hardware and software technologies. It includes all key components necessary for computing, such as the CPU and memory.

"The changes in the world triggered by AI are expected to accelerate further this year, and SK hynix will produce sixth-generation HBM (HBM4) in the second half of this year to lead the customized HBM market to meet the diverse needs of customers," said Kwak Noh-jung, CEO at SK hynix. "We will continue to do our best to present new possibilities in the AI era through technological innovation and provide irreplaceable value to our customers."

About SK hynix Inc.

SK hynix Inc., headquartered in Korea, is the world's top-tier semiconductor supplier offering Dynamic Random Access Memory chips ("DRAM"), flash memory chips ("NAND flash"), and CMOS Image Sensors ("CIS") for a wide range of distinguished customers globally. The Company's shares are traded on the Korea Exchange, and the Global Depository shares are listed on the Luxembourg Stock Exchange. Further information about SK hynix is available at www.skhynix.com, news.skhynix.com.

SK hynix is set to display its latest AI-focused memory technologies at CES 2025, featuring 16-layer HBM3E chips, high-capacity SSDs, and innovative solutions for on-device AI and data centers.

SK hynix Unveils Cutting-Edge AI Memory Solutions at CES 2025

SK hynix, a leading South Korean memory manufacturer, is set to showcase its latest artificial intelligence (AI) memory solutions at the Consumer Electronics Show (CES) 2025 in Las Vegas. The company aims to highlight its technological prowess as a "Full Stack AI Memory Provider" with a range of products designed to meet the growing demands of AI applications [1][3].

16-layer HBM3E: Pushing the Boundaries of High Bandwidth Memory

At the forefront of SK hynix's exhibition is the 16-layer High Bandwidth Memory 3E (HBM3E) chip, first announced in November 2024. This advanced memory solution offers:

- 48GB capacity per stack (3GB per individual die)

- Up to 384GB in an 8-stack configuration

- Up to 18% boost in AI learning and up to 32% improvement in inference performance compared to the 12-layer version

- Advanced mass reflow-molded underfill (MR-MUF) process for enhanced thermal performance and reduced chip warping [1][3][4]

The 16-layer HBM3E represents a significant leap in memory technology, potentially enabling more powerful AI accelerators and data center applications.
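The capacity figures quoted above follow from simple multiplication. As a minimal sketch in Python, assuming only the numbers reported in the coverage (3GB per die, 16 dies per stack, 8 stacks per accelerator), the arithmetic can be checked as follows. The function and constant names are illustrative and do not come from any SK hynix material.

```python
# Figures quoted in the coverage; the names are hypothetical, for illustration only.
GB_PER_DIE = 3               # capacity of one DRAM die in the 16-layer HBM3E stack
DIES_PER_STACK = 16          # "16-layer" / "16-Hi" configuration
STACKS_PER_ACCELERATOR = 8   # the 8-stack configuration cited in the coverage


def stack_capacity_gb(dies: int, gb_per_die: int = GB_PER_DIE) -> int:
    """Capacity of a single HBM stack: number of dies times capacity per die."""
    return dies * gb_per_die


def accelerator_capacity_gb(dies: int, stacks: int, gb_per_die: int = GB_PER_DIE) -> int:
    """Total HBM capacity of an accelerator carrying several identical stacks."""
    return stacks * stack_capacity_gb(dies, gb_per_die)


if __name__ == "__main__":
    per_stack = stack_capacity_gb(DIES_PER_STACK)
    total = accelerator_capacity_gb(DIES_PER_STACK, STACKS_PER_ACCELERATOR)
    print(f"Per stack: {per_stack}GB, per accelerator: {total}GB")
```

With the quoted inputs this reproduces the 48GB per-stack and 384GB per-accelerator figures. Other stack heights or stack counts can be passed in, but any such combination would be hypothetical rather than a product described in the sources.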

High-Capacity Enterprise SSDs for AI Data Centers

Addressing the increasing storage demands of AI data centers, SK hynix will introduce new enterprise SSD solutions, including:

- The 122TB 'D5-P5336' enterprise SSD, developed by subsidiary Solidigm

- Claimed to be the highest capacity currently available in its category

- Superior power and space efficiency [1][3][5]

These high-capacity SSDs are poised to set new standards in data storage solutions for AI-driven infrastructures.

Innovations for On-Device AI and Edge Computing

SK hynix is also focusing on solutions for on-device AI in edge devices such as PCs and smartphones:

- LPCAMM2 and ZUFS 4.0: Designed to enhance data processing speed and power efficiency

- LPCAMM2 module and soldered LPDDR5/6 memory using 1cnm-node technology for laptops and handheld consoles [1][3][5]

These innovations aim to facilitate the integration of AI capabilities directly into consumer electronics, broadening the scope of AI applications in everyday devices.

Next-Generation Data Center Technologies

SK hynix will showcase advanced technologies for future data center infrastructures:

- Compute Express Link (CXL) and Processing-In-Memory (PIM) technologies

- Modularized solutions like CMM-Ax and AiMX

- CMM-Ax, a CXL memory module that combines CXL's scalability with computational capabilities [1][3]

These technologies are expected to boost performance and energy efficiency in next-generation server platforms.

Future Developments and Market Position

SK hynix is not resting on its laurels, with plans for future advancements including:

- Mass production of sixth-generation HBM (HBM4) in the second half of 2025

- Development of PCIe 6.0 SSDs and high-capacity QLC eSSDs for AI servers

- UFS 5.0 for mobile devices [1][2][3]

With these developments, SK hynix aims to solidify its position as a leader in the AI memory market, addressing diverse customer needs and staying ahead of technological advancements.

As AI continues to drive transformation across industries, SK hynix's comprehensive lineup of memory solutions showcased at CES 2025 demonstrates the company's commitment to innovation and its pivotal role in shaping the future of AI technology [5].

Summarized by Navi

[1]

[2]

[3]

Related Stories

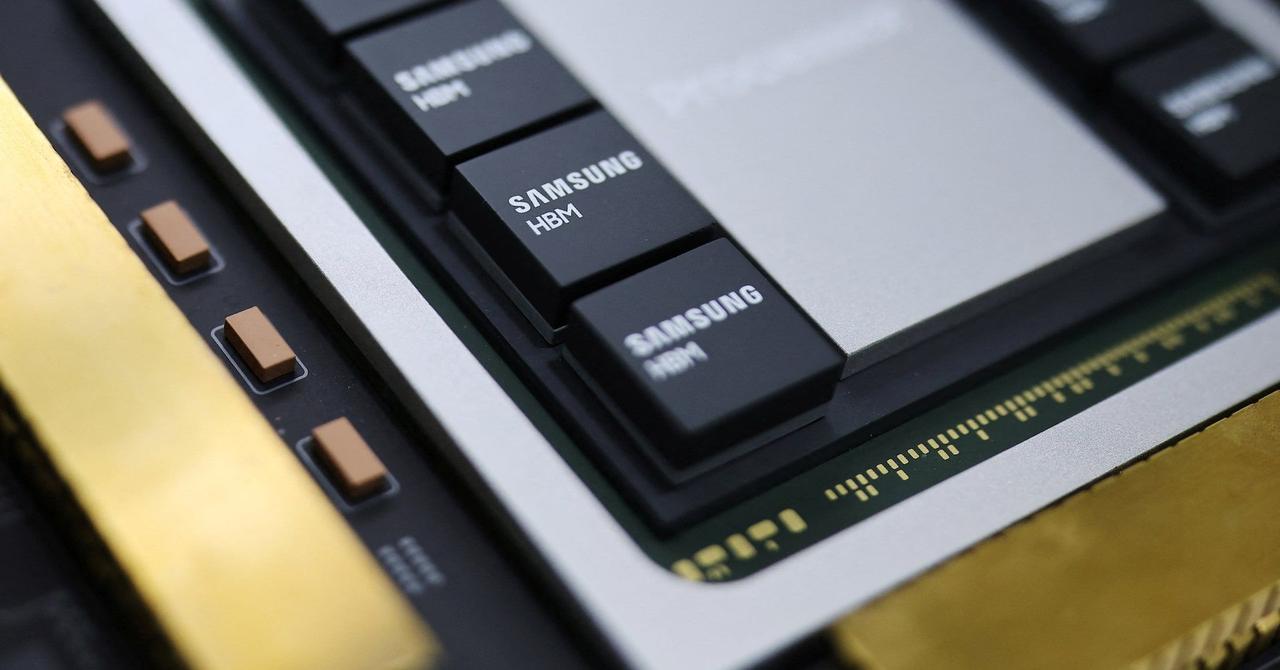

Samsung gains ground in HBM4 race as Nvidia production ignites AI memory battle with SK Hynix, Micron

02 Jan 2026•Technology

SK hynix Leads the Charge in Next-Gen AI Memory with World's First 12-Layer HBM4 Samples

19 Mar 2025•Technology

SK Hynix Accelerates HBM4 Development to Meet Nvidia's Demand, Unveils 16-Layer HBM3E

04 Nov 2024•Technology

Recent Highlights

1

Pentagon threatens Anthropic with Defense Production Act over AI military use restrictions

Policy and Regulation

2

Google Gemini 3.1 Pro doubles reasoning score, beats rivals in key AI benchmarks

Technology

3

Anthropic accuses Chinese AI labs of stealing Claude through 24,000 fake accounts

Policy and Regulation