Sony AI Releases First Consent-Based Benchmark to Combat AI Vision Bias

4 Sources

[1]

Sony shares bias-busting benchmark for AI vision models

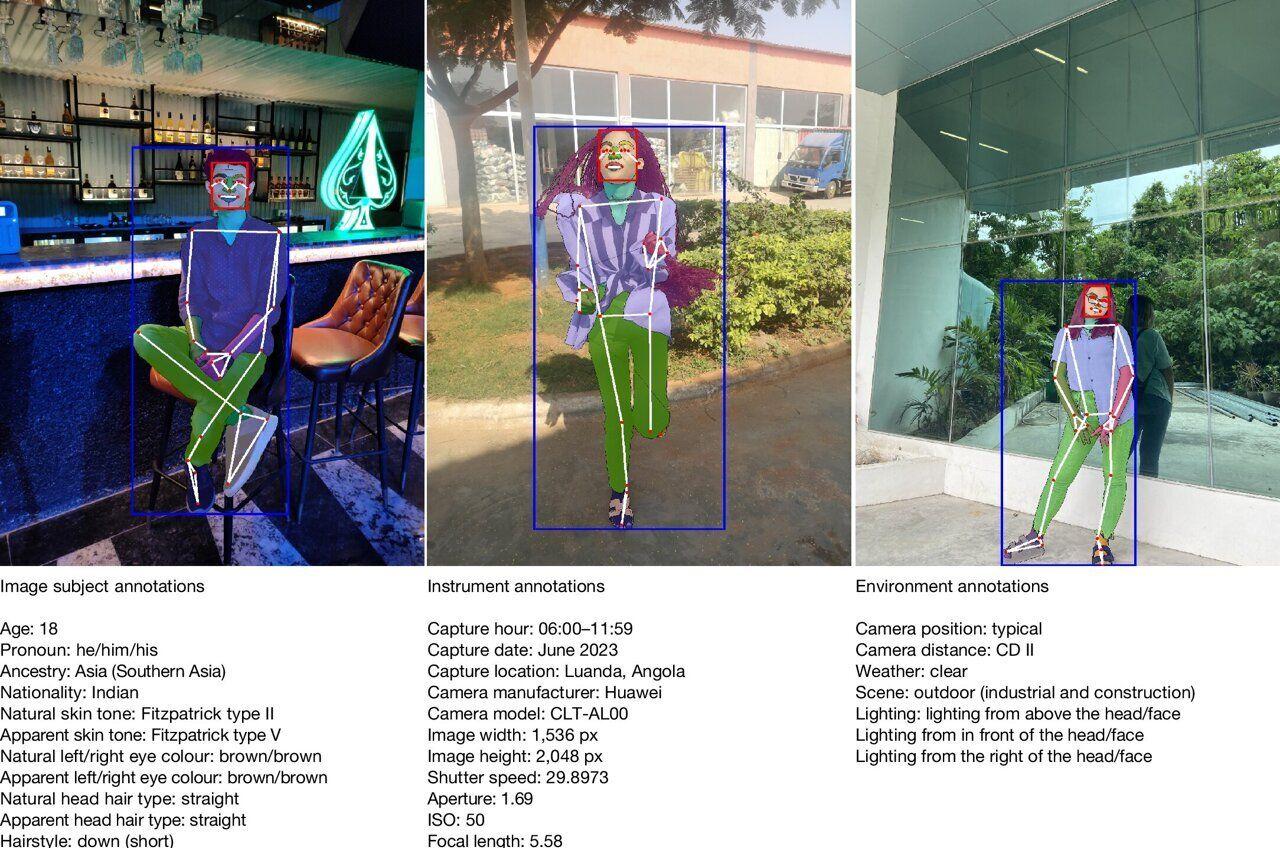

AI models are filled to the brim with bias, whether that's showing you a certain race of person when you ask for a pic of a criminal or assuming that a woman can't possibly be involved in a particular career when you ask for a firefighter. To deal with these issues, Sony AI has released a new dataset for testing the fairness of computer vision models, one that its makers claim was compiled in a fair and ethical way.

The Fair Human-Centric Image Benchmark (FHIBE, or "Fee-bee") "is the first publicly available, consensually collected, and globally diverse fairness evaluation dataset for a wide variety of human-centric computer vision tasks," according to Sony AI.

"A common misconception is that because computer vision is rooted in data and algorithms, it's a completely objective reflection of people," explains Alice Xiang, global head of AI Governance at Sony Group and lead research scientist for Sony AI, in a video about the benchmark release. "But that's not the case. Computer vision can warp things depending on the biases reflected in its training data."

AI models, one way or another, present bias, which perhaps with work can be minimized. Computer vision models may exhibit bias by inappropriately classifying people with specific physical characteristics in terms of occupation or some other label. They may categorize female doctors as nurses, for example.

According to Xiang, there have been instances in China where facial recognition systems on mobile phones have mistakenly allowed the device owner's family members to unlock the phone and make payments, an error she speculates could come from a lack of images of Asian people in model training data or from undetected bias in the model.

"Much of the controversy around facial recognition technologies has centered on their potential to be biased, leading to wrongful arrests, security breaches, and other harm," Xiang told The Register in an email. "GenAI models have also been shown to be biased, reflecting harmful stereotypes."

There are a variety of benchmark testing datasets for assessing the fairness of computer vision models. Meta, which in 2023 disbanded its Responsible AI division but still likes to talk about AI safety, has a computer vision benchmark called FACET (FAirness in Computer Vision EvaluaTion).

But Xiang says the images that went into prior data sets were not gathered with the consent of those depicted - claims echoed in various AI copyright lawsuits now winding their way through the courts.

"The vast majority of computer vision benchmark datasets were collected without consent, and some were collected with consent but provide little information about the consent process, are not globally diverse, and are not suitable for a wide variety of computer vision tasks," she said.

As detailed in research published in Nature, the majority of the 27 evaluated computer vision datasets "were scraped from Internet platforms or derived from scraped datasets. Seven well-known datasets were revoked by their authors and are no longer publicly available."

The Sony AI authors also say that their image annotations (bounding boxes, segmentation masks, and camera settings, etc.) provide more detail, thus making FHIBE more useful to model developers.

FHIBE consists of "10,318 consensually-sourced images of 1,981 unique subjects, each with extensive and precise annotations." The images were gathered from more than 81 countries/regions.

The Sony AI researchers say they've used FHIBE to confirm that some vision models make less accurate predictions for those using "She/Her/Hers" pronouns due to the variability of hairstyles in related images. They also found that a model, when asked a neutral question about a person's occupation, would sometimes respond in a way that reinforced stereotypes by associating certain demographic groups with criminal activities.

The goal of the project, the AI group says, is to encourage more ethical and responsible practices for data gathering, use, and management.

Presently, in the US, there's not much federal government support for such sentiment. The Trump administration's "America's AI Action Plan" [PDF], released in July, makes no mention of ethics or fairness. Xiang, however, said, "The EU AI Act and some AI regulations in US states incentivize or require bias assessments in certain high-risk domains."

According to Xiang, Sony business units have employed FHIBE in fairness assessments as part of their broader AI ethics review processes, in compliance with Sony Group AI Ethics Guidelines.

"FHIBE not only enables developers to audit their AI systems for bias but also shows that it is feasible to implement best practices in ethical data collection, particularly around consent and compensation for data rightsholders," said Xiang in a social media post. "At a time when data nihilism is increasingly common in AI, FHIBE strives to raise the standards for ethical data collection across the industry."

Asked to elaborate on this, Xiang said, "By 'data nihilism,' I mean the industry belief that data for AI development cannot be sourced consensually and with compensation, and that if we want cutting-edge AI technologies, we need to give up these data rights.

"FHIBE doesn't fully solve this problem since there's still the scalability issue (FHIBE is a small evaluation dataset, not a large training dataset), but one of our goals was to inspire the R&D community and industry to invest more care and funding into ethical data curation. This is an incredibly important problem - arguably one of the biggest problems in AI now - but far less attention is paid to innovation on the data layer compared to the algorithmic layer."

[2]

Sony has a new benchmark for ethical AI

Sony AI released a dataset that tests the fairness and bias of AI models. It's called the Fair Human-Centric Image Benchmark (FHIBE, pronounced like "Phoebe"). The company describes it as the "first publicly available, globally diverse, consent-based human image dataset for evaluating bias across a wide variety of computer vision tasks." In other words, it tests the degree to which today's AI models treat people fairly. Spoiler: Sony didn't find a single dataset from any company that fully met its benchmarks. Sony says FHIBE can address the AI industry's ethical and bias challenges. The dataset includes images of nearly 2,000 volunteers from over 80 countries. All of their likenesses were shared with consent -- something that can't be said for the common practice of scraping large volumes of web data. Participants in FHIBE can remove their images at any time. Their photos include annotations noting demographic and physical characteristics, environmental factors and even camera settings. The tool "affirmed previously documented biases" in today's AI models. But Sony says FHIBE can also provide granular diagnoses of factors that led to those biases. One example: Some models had lower accuracy for people using "she/her/hers" pronouns, and FHIBE highlighted greater hairstyle variability as a previously overlooked factor. FHIBE also determined that today's AI models reinforced stereotypes when prompted with neutral questions about a subject's occupation. The tested models were particularly skewed "against specific pronoun and ancestry groups," describing subjects as sex workers, drug dealers or thieves. And when prompted about what crimes an individual committed, models sometimes produced "toxic responses at higher rates for individuals of African or Asian ancestry, those with darker skin tones and those identifying as 'he/him/his.'" Sony AI says FHIBE proves that ethical, diverse and fair data collection is possible. 
The tool is now available to the public, and it will be updated over time. A paper outlining the research was published in Nature on Wednesday.

[3]

Human-centric photo dataset aims to help spot AI biases responsibly

A database of more than 10,000 human images to evaluate biases in artificial intelligence (AI) models for human-centric computer vision is presented in Nature this week. The Fair Human-Centric Image Benchmark (FHIBE), developed by Sony AI, is an ethically sourced, consent-based dataset that can be used to evaluate human-centric computer vision tasks to identify and correct biases and stereotypes. Computer vision covers a range of applications, from autonomous vehicles to facial recognition technology. Many AI models used in computer vision were developed using flawed datasets that may have been collected without consent, often taken from large-scale image scraping from the web. AI models have also been known to reflect biases that may perpetuate sexist, racist, or other stereotypes. Alice Xiang and colleagues present an image dataset that implements best practices for a number of factors, including consent, diversity, and privacy. FHIBE includes 10,318 images of 1,981 people from 81 distinct countries or regions. The database includes comprehensive annotations of demographic and physical attributes, including age, pronoun category, ancestry, and hair and skin color. Participants were given detailed information about the project and potential risks to help them provide informed consent, which complies with comprehensive data protection laws. These features make the database a reliable resource for evaluating bias in AI responsibly. The authors compare FHIBE against 27 existing datasets used in human-centric computer vision applications and find that FHIBE sets a higher standard for diversity and robust consent for AI evaluation. It also has effective bias mitigation, containing more self-reported annotations about the participants than other datasets, and includes a notable proportion of commonly underrepresented individuals. 
The dataset can be used to evaluate existing AI models for computer vision tasks and can uncover a wider variety of biases than previously possible, the authors note. The authors acknowledge that creating the dataset was challenging and expensive but conclude that FHIBE may represent a step toward more trustworthy AI.

[4]

Sony launches world's first ethical bias benchmark for AI images

FHIBE, Sony AI's new ethical benchmark, includes 2,000 consented participants from 80 countries to test how AI models treat different demographics. Sony AI released the Fair Human-Centric Image Benchmark (FHIBE), the first publicly available, globally diverse, consent-based human image dataset designed to evaluate bias in computer vision tasks. This tool assesses how AI models treat people across various demographics, addressing ethical challenges in the AI industry through consented image collection from diverse participants. The dataset, pronounced like "Phoebe," includes images of nearly 2,000 paid participants from over 80 countries. Each individual provided explicit consent for sharing their likenesses, distinguishing FHIBE from common practices that involve scraping large volumes of web data without permission. Participants retain the right to remove their images at any time, ensuring ongoing control over their personal data. This approach underscores Sony AI's commitment to ethical standards in data acquisition. Every photo in the dataset features detailed annotations. These cover demographic and physical characteristics, such as age, gender pronouns, ancestry, and skin tone. Environmental factors, including lighting conditions and backgrounds, are also noted. Camera settings, like focal length and exposure, provide additional context for model evaluations. Such comprehensive labeling enables precise analysis of how external variables influence AI performance. Testing with FHIBE confirmed previously documented biases in existing AI models. The benchmark goes further by offering granular diagnoses of contributing factors. For instance, models exhibited lower accuracy for individuals using "she/her/hers" pronouns. FHIBE identified greater hairstyle variability as a key, previously overlooked element behind this discrepancy, allowing researchers to pinpoint specific areas for improvement in model training. 
In evaluations of neutral questions about a subject's occupation, AI models reinforced stereotypes. The benchmark revealed skews against specific pronoun and ancestry groups, with outputs labeling individuals as sex workers, drug dealers, or thieves. This pattern highlights how unbiased prompts can still yield discriminatory results based on demographic attributes. When prompted about potential crimes committed by individuals, models generated toxic responses at higher rates for certain groups. These included people of African or Asian ancestry, those with darker skin tones, and individuals identifying as "he/him/his." Such findings expose vulnerabilities in AI systems that could perpetuate harm through biased outputs. Sony AI states that FHIBE demonstrates ethical, diverse, and fair data collection is achievable. The tool is now publicly available for researchers and developers to use in bias testing. Sony plans to update the dataset over time to incorporate new images and annotations. A research paper detailing these findings appeared in Nature on Wednesday.

Sony AI has launched FHIBE, the world's first publicly available, consent-based image dataset designed to test fairness and bias in computer vision models. The benchmark includes over 10,000 images from nearly 2,000 participants across 81 countries, revealing significant biases in existing AI systems.

Sony AI Introduces Groundbreaking Ethical Benchmark

Sony AI has released the Fair Human-Centric Image Benchmark (FHIBE), marking a significant milestone in addressing bias within artificial intelligence systems. The dataset, pronounced like "Phoebe," is, according to Sony AI, the first publicly available, globally diverse, consent-based human image collection specifically designed to evaluate fairness in computer vision models [1]. This initiative addresses a critical gap in AI development, where existing datasets have predominantly relied on web scraping without participant consent.

The benchmark comprises 10,318 images featuring 1,981 unique individuals from 81 countries and regions [3]. Each participant provided explicit informed consent and received compensation for their contribution, establishing a new standard for ethical data collection in AI research [4].

Comprehensive Annotation and Ethical Standards

FHIBE distinguishes itself through extensive annotation practices that go beyond traditional datasets. Each image includes detailed demographic and physical characteristics such as age, pronoun categories, ancestry, hair color, and skin tone [3]. Environmental factors such as lighting conditions and backgrounds, along with camera settings like focal length and exposure, are also documented, providing researchers with granular data for bias analysis [4].

The ethical framework governing FHIBE allows participants to withdraw their images at any time, ensuring ongoing control over their personal data. This approach contrasts sharply with conventional practices where images are scraped from internet platforms without consent, a practice that has led to dataset revocations and legal challenges [1].

Revealing Systemic Biases in AI Models

Testing conducted using FHIBE has confirmed previously documented biases in computer vision systems and expanded understanding of their causes. The benchmark showed that some models have lower accuracy for individuals using "she/her/hers" pronouns, with the research identifying greater hairstyle variability as a previously overlooked contributing factor [2].

More concerning findings emerged when models were prompted with neutral questions about occupation. The systems sometimes reinforced harmful stereotypes, skewing against specific pronoun and ancestry groups by describing subjects as sex workers, drug dealers, or thieves [4]. When explicitly asked about potential crimes, models generated toxic responses at higher rates for individuals of African or Asian ancestry, those with darker skin tones, and people identifying as "he/him/his" [2].
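
The kind of per-group accuracy comparison reported for pronoun categories can be illustrated with a minimal sketch. This is not Sony AI's evaluation code or the FHIBE data format: the record layout, the field names `pronoun` and `correct`, and the toy values are all assumptions made up for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records, group_key):
    """Return {group: accuracy} for records carrying a boolean 'correct' field.

    The record layout is hypothetical; it is not the FHIBE schema.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for rec in records:
        group = rec[group_key]
        totals[group] += 1
        hits[group] += int(rec["correct"])
    return {group: hits[group] / totals[group] for group in totals}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())


# Toy, invented predictions: one entry per evaluated image.
records = [
    {"pronoun": "she/her/hers", "correct": True},
    {"pronoun": "she/her/hers", "correct": False},
    {"pronoun": "he/him/his", "correct": True},
    {"pronoun": "he/him/his", "correct": True},
]

per_group = accuracy_by_group(records, "pronoun")
print(per_group)                    # accuracy for each pronoun group
print(max_accuracy_gap(per_group))  # gap between best and worst group
```

Passing a different annotation as `group_key` (for example a hypothetical hairstyle field) would break the same results down along that attribute, which is the sort of finer-grained diagnosis the detailed annotations are meant to support.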

Industry Impact and Regulatory Context

Alice Xiang, global head of AI Governance at Sony Group and lead research scientist for Sony AI, emphasizes that computer vision systems are not objective reflections of reality but can perpetuate biases present in training data [1]. She cites real-world consequences, including instances in China where facial recognition systems mistakenly allowed family members to unlock devices and make payments, which she speculates could stem from a lack of images of Asian people in training data or from undetected bias in the model.

The release of FHIBE comes at a time when regulatory frameworks are evolving. While the Trump administration's "America's AI Action Plan" makes no mention of ethics or fairness, the EU AI Act and some US state regulations incentivize or require bias assessments in certain high-risk domains [1].

Sony business units have already used FHIBE in fairness assessments as part of broader AI ethics review processes, in compliance with Sony Group AI Ethics Guidelines [1]. The research underlying FHIBE was published in Nature, lending academic credibility to the initiative and its findings [2].
