The AI Revolution: Progress, Perils, and Shifting Perspectives

10 Sources

[1]

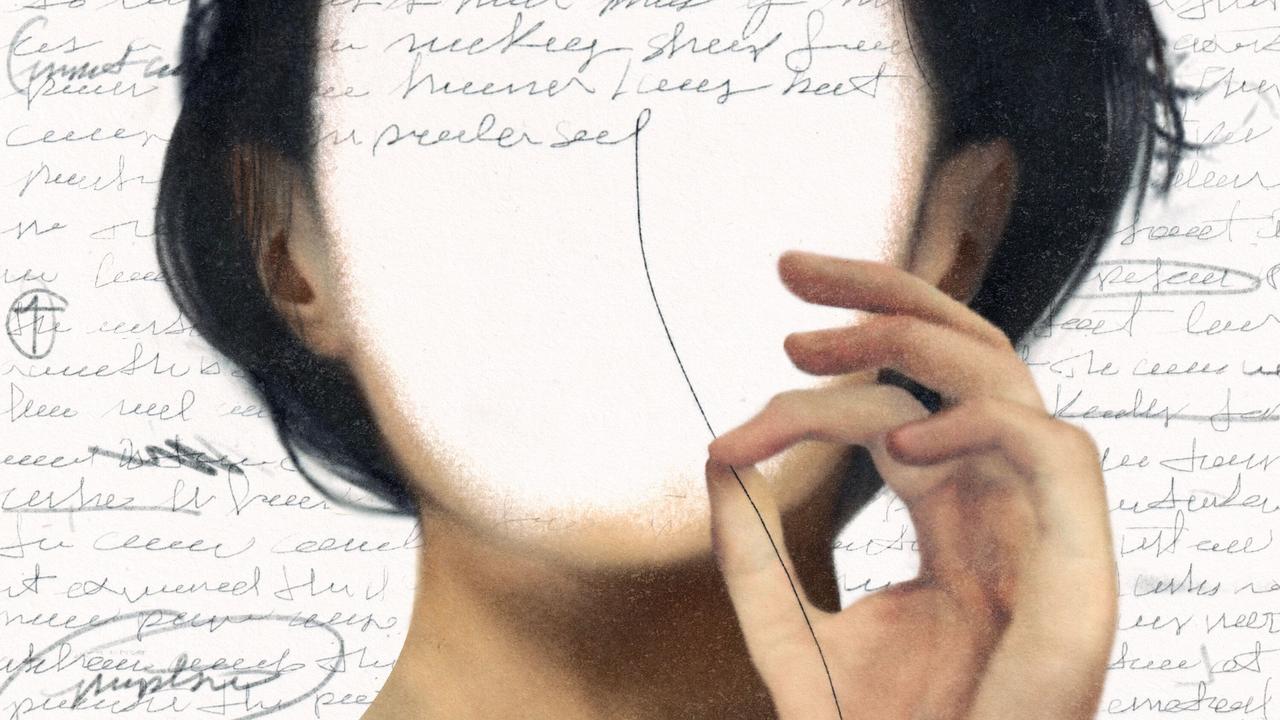

Can a Generative AI Agent Accurately Mimic My Personality?

A large language model interviewed me about my life and gave the information to an AI agent built to portray my personality. Could it convince me it was me? On a gray Sunday morning in March, I told an AI chatbot my life story. Introducing herself as Isabella, she spoke with a friendly female voice that would have been well-suited to a human therapist, were it not for its distinctly mechanical cadence. Aside from that, there wasn't anything humanlike about her; she appeared on my computer screen as a small virtual avatar, like a character from a 1990s video game. For nearly two hours Isabella collected my thoughts on everything from vaccines to emotional coping strategies to policing in the U.S. When the interview was over, a large language model (LLM) processed my responses to create a new artificial intelligence system designed to mimic my behaviors and beliefs -- a kind of digital clone of my personality. A team of computer scientists from Stanford University, Google DeepMind and other institutions developed Isabella and the interview process in an effort to build more lifelike AI systems. Dubbed "generative agents," these systems can simulate the decision-making behavior of individual humans with impressive accuracy. Late last year Isabella interviewed more than 1,000 people. Then the volunteers and their generative agents took the General Social Survey, a biennial questionnaire that has cataloged American public opinion since 1972. Their results were, on average, 85 percent identical, suggesting that the agents can closely predict the attitudes and opinions of their human counterparts. Although the technology is in its infancy, it offers a glimmer of a future in which predictive algorithms can potentially act as online surrogates for each of us.
When I first learned about generative agents the humanist in me rebelled, silently insisting that there was something about me that isn't reducible to the 1's and 0's of computer code. Then again, maybe I was naive. The rapid evolution of AI has brought many humbling surprises. Time and again, machines have outperformed us in skills we once believed to be unique to human intelligence -- from playing chess to writing computer code to diagnosing cancer. Clearly AI can replicate the narrow, problem-solving part of our intellect. But how much of your personality -- a mercurial phenomenon -- is deterministic, a set of probabilities that are no more inscrutable to algorithms than the arrangement of pieces on a chessboard? The question is hotly debated. An encounter with my own generative agent, it seemed to me, could help me to get some answers. The LLMs behind generative agents and chatbots such as ChatGPT, Claude and Gemini are certainly expert imitators. People have fed texts from deceased loved ones to ChatGPT, which could then conduct text conversations that closely approximated the departed's voices. Today developers are positioning agents as a more advanced form of chatbot, capable of autonomously making decisions and completing routine tasks, such as navigating a Web browser or debugging computer code. They're also marketing agents as productivity boosters, onto which businesses can offload time-intensive human drudgery. Amazon, OpenAI, Anthropic, Google, Salesforce, Microsoft, Perplexity and virtually every major tech player has jumped onboard the agent bandwagon. Joon Sung Park, a leader of Stanford's generative agent work, had always been drawn to what early Disney animators called "the illusion of life." 
He began his doctoral work at Stanford in late 2020, after the COVID pandemic was forcing much of the world into lockdown, and as generative AI was starting to boom. Three years earlier, Google researchers introduced the transformer, a type of neural network that can analyze and reproduce mathematical patterns in text. (The "GPT" in ChatGPT stands for "generative pretrained transformer.") Park knew that video game designers had long struggled to create lifelike characters that could do more than move mechanically and read from a script. He wondered: Could generative AI create authentically humanlike behavior in virtual characters? He unveiled generative agents in a 2023 conference paper in which he described them as "interactive simulacra of human behavior." They were built atop ChatGPT and integrated with an "agent architecture," a layer of code allowing them to remember information and formulate plans. The design simulates some key aspects of human perception and behavior, says Daniel Cervone, a professor of psychology specializing in personality theory at the University of Illinois Chicago. Generative agents are doing "a big slice of what a real person does, which is to reflect on their experiences, abstract out beliefs about themselves, store those beliefs and use them as cognitive tools to interpret the world," Cervone told me. "That's what we do all the time." Park dropped 25 generative agents inside Smallville, a virtual space modeled on Swarthmore College, where he had studied as an undergraduate. He included basic affordances such as a café and a bar where the agents could mingle; picture The Sims without a human player calling the shots. Smallville was a petri dish for virtual sociality; rather than watching cells multiply, Park observed the agents gradually coalescing from individual nodes into a unified network. 
At one point, Isabella (the same agent that would later interview me), assigned with the role of café owner, spontaneously began handing out invitations to her fellow agents for a Valentine's Day party. "That starts to spark some real signals that this could actually work," Park told me. Yet as encouraging as those early results were, the residents of Smallville had been programmed with particular personality traits. The real test, Park believed, would lie in building generative agents that could simulate the personalities of living humans. It was a tall order. Personality is a notoriously nebulous concept, fraught with hidden layers. The word itself is rooted in uncertainty, vagary, deception: it's derived from the Latin persona, which originally referred to a mask worn by a stage actor. Park and his team don't claim to have built perfect simulations of individuals' personalities. "A two-hour interview doesn't [capture] you in anything near your entirety," says Michael Bernstein, an associate professor of computer science at Stanford and one of Park's collaborators. "It does seem to be enough to gather a sense of your attitudes." And they don't think generative agents are close to artificial general intelligence, or AGI -- an as-yet-theoretical system that can match humans on any cognitive task. In their latest paper, Park and his colleagues argue that their agents could help researchers understand complex, real-world social phenomena, such as the spread of online misinformation and the outcome of national elections. If they can accurately simulate individuals, then they can theoretically set the simulations loose to interact with one another and see what kind of social behaviors emerge. Think Smallville on a much bigger scale. Yet, as I would soon discover, generative agents may only be able to imitate a very narrow and simplified slice of the human personality. 
Meeting my generative agent a week after my interview with Isabella felt like looking at myself in a funhouse mirror: I knew I was seeing my own reflection, but the image was warped and twisted. The first thing I noticed was that the agent -- let's say "he" -- didn't speak like me. I was on a video call with Park, and the two of us were taking turns asking him questions. Unlike Isabella, he didn't come with his own avatar; he just appeared as faceless lines of green text spilling across my screen. We were testing his ability to make informed guesses about my life, filling in information I hadn't directly provided to Isabella. The results were somewhat disappointing. At one point, I asked him to tell me a secret about himself that no one else knows, hoping he would surface some kind of moderately deep insight. He said he loved astronomy. True enough but hardly revelatory. His real talent seemed to be inferring some of the more mundane details of my life. When asked if his family had dogs growing up, he correctly answered yes, even though I had only told Isabella that my sister and parents have dogs today. I had, however, described my childhood in Colorado, which was full of family camping trips in the mountains, and the agent had apparently correctly deduced that there was a high probability that somebody who grew up in such an environment also grew up with dogs. "Those are the basic boundaries of this technology," Park told me. "In the absence of the ground-truth information, it will try to make its best guess." At times those guesses were comically off the mark. When asked to recount an embarrassing moment from his past, he fabricated a story about a party in college in which he'd lost his balance while dancing and fell onto a table full of snacks. Thankfully, that never happened to the real me. Here, too, the system was connecting disparate bits of my personal data and doing its best to concoct something plausible. 
Toward the end of the Q&A, I ventured into the deep end by asking whether he believed in God. The answer wasn't far from how I might respond. "I wasn't raised in a specific religious framework, but I've always felt a connection with something greater than myself, especially when I'm in nature," my agent wrote. "It's like there's a kind of transcendent energy or consciousness that we can tap into." Most memorably, there were moments that felt like genuine insight. I had had a long, stressful few months. I had started working as a full-time freelance writer last October, ushering in a period of professional freedom but also chronic uncertainty. I'd spent much of the winter hunkered down, reading and writing, in my small New York City apartment, and I was feeling the psychological effects of prolonged isolation. Embracing the spirit of spring, I had resolved to make what I believed would be some healthy changes, starting with a greater effort to maintain a better work-life balance. I asked my generative agent, "What's some advice you'd want to offer to your past self?" "Embrace uncertainty a bit more," he began. I had to pause. Already the response so closely echoed the themes I'd been articulating to myself in my journal the previous day, it was almost as if I were writing the agent's words myself. "I think I spent a lot of time worrying about the future and trying to control every aspect of my life, and that often led to unnecessary stress," he continued. "I would advise myself to trust the process and be more open to the unexpected paths that life can take.... It's easy to get caught up in career ambitions, but nurturing relationships and taking time for oneself is equally important." Despite those moments of pleasant surprise, my conversation with my generative agent left me feeling hollow. I felt I had met a two-dimensional version of myself -- all artifice, no depth. 
It had captured a veneer of my personality, but it was just that: a virtual actor playing a role, wearing my data as a mask. At no point did I get the feeling that I was interacting with a system that truly captured my voice and my thoughts. But that isn't the point. Generative agents don't need to sound like you or understand you in your entirety to be useful, just as psychologists don't need to understand every quirk of your behavior to make broad-stroke diagnoses of your personality type. Adam Green, a neuroscientist at Georgetown University, who studies the impacts of AI on human creativity, believes that that lack of specificity and our growing reliance on a handful of powerful algorithms could filter out much of the color and quirks that make each of us unique. Even the most advanced algorithm will revert to the mean of the dataset on which it's been trained. "That matters," Green says, "because ultimately what you'll have is homogenization." In his view, the expanding ubiquity of predictive AI models is squeezing our culture into a kind of groupthink, in which all our idiosyncrasies slowly but surely become discounted as irrelevant outliers in the data of humanity. After meeting my generative agent, I remembered the feeling I had back when I spoke with Isabella -- my inner voice that had rejected the idea that my personality could be re-created in silicon or, as Meghan O'Gieblyn put it in her book God, Human, Animal, Machine, "that the soul is little more than a data set." I still felt that way. If anything, my conviction had been strengthened. I was also aware that I might be falling prey to the same kind of hubris that once kept early critics of AI from believing that computers could ever compose decent poetry or outmatch humans in chess. But I was willing to take that risk.

[2]

AI Is a Mass-Delusion Event

It is a Monday afternoon in August, and I am on the internet watching a former cable-news anchor interview a dead teenager on Substack. This dead teenager -- Joaquin Oliver, killed in the mass shooting at Marjory Stoneman Douglas High School, in Parkland, Florida -- has been reanimated by generative AI, his voice and dialogue modeled on snippets of his writing and home-video footage. The animations are stiff, the model's speaking cadence is too fast, and in two instances, when it is trying to convey excitement, its pitch rises rapidly, producing a digital shriek. How many people, I wonder, had to agree that this was a good idea to get us to this moment? I feel like I'm losing my mind watching it. Jim Acosta, the former CNN personality who's conducting the interview, appears fully bought-in to the premise, adding to the surreality: He's playing it straight, even though the interactions are so bizarre. Acosta asks simple questions about Oliver's interests and how the teenager died. The chatbot, which was built with the full cooperation of Oliver's parents to advocate for gun control, responds like a press release: "We need to create safe spaces for conversations and connections, making sure everyone feels seen." It offers bromides such as "More kindness and understanding can truly make a difference." On the live chat, I watch viewers struggle to process what they are witnessing, much in the same way I am. "Not sure how I feel about this," one writes. "Oh gosh, this feels so strange," another says. Still another thinks of the family, writing, "This must be so hard." Someone says what I imagine we are all thinking: "He should be here." The Acosta interview was difficult to process in the precise way that many things in this AI moment are difficult to process. 
I was grossed out by Acosta for "turning a murdered child into content," as the critic Parker Molloy put it, and angry with the tech companies that now offer a monkey's paw in the form of products that can reanimate the dead. I was alarmed when Oliver's father told Acosta during their follow-up conversation that Oliver "is going to start having followers," suggesting an era of murdered children as influencers. At the same time, I understood the compulsion of Oliver's parents, still processing their profound grief, to do anything in their power to preserve their son's memory and to make meaning out of senseless violence. How could I possibly judge the loss that leads Oliver's mother to talk to the chatbot for hours on end, as his father described to Acosta -- what could I do with the knowledge that she loves hearing the chatbot say "I love you, Mommy" in her dead son's voice? The interview triggered a feeling that has become exceedingly familiar over the past three years. It is the sinking feeling of a societal race toward a future that feels bloodless, hastily conceived, and shruggingly accepted. Are we really doing this? Who thought this was a good idea? In this sense, the Acosta interview is just a product of what feels like a collective delusion. This strange brew of shock, confusion, and ambivalence, I've realized, is the defining emotion of the generative-AI era. Three years into the hype, it seems that one of AI's enduring cultural impacts is to make people feel like they're losing it. During his interview with Acosta, Oliver's father noted that the family has plans to continue developing the bot. "Any other Silicon Valley tech guy will say, 'This is just the beginning of AI,'" he said. "'This is just the beginning of what we're doing.'" Just the beginning. Perhaps you've heard that too. "Welcome to the ChatGPT generation." "The Generative AI Revolution." "A new era for humanity," as Mark Zuckerberg recently put it. 
It's the moment before the computational big bang -- everything is about to change, we're told; you'll see. God may very well be in the machine. Silicon Valley has invented a new type of mind. This is a moment to rejoice -- to double down. You're a fool if you're not using it at work. It is time to accelerate. How lucky we are to be alive right now! Yes, things are weird. But what do you expect? You are swimming in the primordial soup of machine cognition. There are bound to be growing pains and collateral damage. To live in such interesting times means contending with MechaHitler Grok and drinking from a fire hose of fascist-propaganda slop. It means Grandpa leaving confused Facebook comments under rendered images of Shrimp Jesus or, worse, falling for a flirty AI chatbot. This future likely requires a new social contract. But also: AI revenge porn and "nudify" apps that use AI to undress women and children, and large language models that have devoured the total creative output of humankind. From this morass, we are told, an "artificial general intelligence" will eventually emerge, turbo-charging the human race or, well, maybe destroying it. But look: Every boob with a T-Mobile plan will soon have more raw intelligence in their pocket than has ever existed in the world. Keep the faith. Breathlessness is the modus operandi of those who are building out this technology. The venture capitalist Marc Andreessen is quote-tweeting guys on X bleating out statements such as "Everyone I know believes we have a few years max until the value of labor totally collapses and capital accretes to owners on a runaway loop -- basically marx' worst nightmare/fantasy." How couldn't you go a bit mad if you took them seriously? Indeed, it seems that one of the many offerings of generative AI is a kind of psychosis-as-a-service. 
If you are genuinely AGI-pilled -- a term for those who believe that machine-born superintelligence is coming, and soon -- the rational response probably involves some combination of building a bunker, quitting your job, and joining the cause. As my colleague Matteo Wong wrote after spending time with people in this cohort earlier this year, politics, the economy, and current events are essentially irrelevant to the true believers. It's hard to care about tariffs or authoritarian encroachment or getting a degree if you believe that the world as we know it is about to change forever. There are maddening effects downstream of this rhetoric. People have been involuntarily committed or had delusional breakdowns after developing relationships with chatbots. These stories have become a cottage industry in themselves, each one suggesting that a mix of obsequious models, their presentation of false information as true, and the tools' ability to mimic human conversation pushes vulnerable users to think they've developed a human relationship with a machine. Subreddits such as r/MyBoyfriendIsAI, in which people describe their relationships with chatbots, may not be representative of most users, but it's hard to browse through the testimonials and not feel that, just a few years into the generative-AI era, these tools have a powerful hold on people who may not understand what it is they're engaging with. As all of this happens, young people are experiencing a phenomenon that the writer Kyla Scanlon calls the "End of Predictable Progress." Broadly, the theory argues that the usual pathways to a stable economic existence are no longer reliable. "You're thinking: These jobs that I rely on to get on the bottom rung of my career ladder are going to be taken away from me" by AI, she recently told the journalist Ezra Klein. "I think that creates an element of fear." The feeling of instability she describes is a hallmark of the generative-AI era. 
It's not at all clear yet how many entry-level jobs will be claimed by AI, but the messaging from enthusiastic CEOs and corporations certainly sounds dire. In May, Dario Amodei, the CEO of Anthropic, warned that AI could wipe out half of all entry-level white-collar jobs. In June, Salesforce CEO Marc Benioff suggested that up to 50 percent of the company's work was being done by AI. The anxiety around job loss illustrates the fuzziness of this moment. Right now, there are competing theories as to whether AI is having a meaningful effect on employment. But real and perceived impact are different things. A recent Quinnipiac poll found that, "when it comes to their day-to-day life," 44 percent of surveyed Americans believe that AI will do more harm than good. The survey found that Americans believe the technology will cause job loss -- but many workers appeared confident in the security of their own job. Many people simply don't know what conclusions to draw about AI, but it is impossible not to be thinking about it. OpenAI CEO Sam Altman has demonstrated his own uncertainty. In a blog post titled "The Gentle Singularity" published in June, Altman argued that "we are past the event horizon" and are close to building digital superintelligence, and that "in some big sense, ChatGPT is already more powerful than any human who has ever lived." He delivered the classic rhetorical flourishes of AI boosters, arguing that "the 2030s are likely going to be wildly different from any time that has come before." And yet, this post also retreats ever so slightly from the dramatic rhetoric of inevitable "revolution" that he has previously employed. "In the most important ways, the 2030s may not be wildly different," he wrote. "People will still love their families, express their creativity, play games, and swim in lakes" -- a cheeky nod to the endurance of our corporeal form, as a little treat. 
Altman is a skilled marketer, and the post might simply be a way to signal a friendlier, more palatable future for those who are a little freaked out. But a different way to read the post is to see Altman hedging slightly in the face of potential progress limitations on the technology. Earlier this month, OpenAI released GPT-5, to mixed reviews. Altman had promised "a Ph.D.-level" intelligence on any topic. But early tests of GPT-5 revealed all kinds of anecdotal examples of sloppy answers to queries, including hallucinations, simple-arithmetic errors, and failures in basic reasoning. Some power users who'd become infatuated with previous versions of the software were angry and even bereft by the update. Altman placed particular emphasis on the product's usability and design: Paired with the "Gentle Singularity," GPT-5 seems like an admission that superintelligence is still just a concept. And yet, the philosopher role-play continues. Not long before the launch, Altman appeared on the comedian Theo Von's popular podcast. The discussion veered into the thoughtful science-fiction territory that Altman tends to inhabit. At one point, the two had the following exchange: What exactly is a person, listening in their car on the way to the grocery store, to make of conversations like this? Surely, there's a cohort that finds covering the Earth or atmosphere with data centers very exciting. But what about those of us who don't? Altman and lesser personalities in the AI space often talk this way, making extreme, matter-of-fact proclamations about the future and sounding like kids playing a strategy game. This isn't a business plan; it's an idle daydream. Similarly disorienting is the fact that these visions and pontifications are driving change in the real world. Even if you personally don't believe in the hype, you are living in an economy that has reoriented itself around AI. 
A recent report from The Wall Street Journal estimates that Big Tech's spending on IT infrastructure in 2025 is "acting as a sort of private-sector stimulus program," with the "Magnificent Seven" tech companies -- Meta, Alphabet, Microsoft, Amazon, Apple, Nvidia, and Tesla -- spending more than $100 billion on capital expenditures in recent months. The flip side of such consolidated investment in one tech sector is a giant economic vulnerability that could lead to a financial crisis. This is the AI era in a nutshell. Squint one way, and you can portray it as the saving grace of the world economy. Look at it more closely, and it's a ticking time bomb lodged in the global financial system. The conversation is always polarized. Keep the faith. It's difficult to deny that generative-AI tools are transformative, insomuch as their adoption has radically altered the economy and the digital world. Social networks and the internet at large have been flooded with AI slop and synthetic text. Spotify and YouTube are filling up with AI-generated songs and videos, some of which get millions of streams. Sometimes this is helpful: A bot artfully summarizes a complex PDF. They are, by most accounts, truly helpful coding tools. Kids use them to build helpful study guides. They're good at saving you time by churning out anemic emails. Also, a health-care chatbot made up fake body parts. The FDA has introduced a generative-AI tool to help fast-track drug and medical-device approvals -- but the tool keeps making up fake studies. To scan the AI headlines is a daily exercise in trying to determine the cost that society is paying for these perceived productivity benefits. For example, with a new Google Gemini-enabled smartwatch, you can ask the bot to "tell my spouse I'm 15 minutes late and send it in a jokey tone" instead of communicating yourself. This is followed by news of a study suggesting that ChatGPT power users might be accumulating a "cognitive debt" from using the tool. 
In recent months, I've felt unmoored by all of this: by a technology that I find useful in certain contexts being treated as a portal to sentience; by a billionaire confidently declaring that he is close to making breakthroughs in physics by conversing with a chatbot; by a "get that bag" culture that seems to have accepted these tools without much consideration as to the repercussions; by the discourse. I hear the chatter everywhere -- a guy selling produce at the farmers' market makes a half-hearted joke that AI can't grow blueberries; a woman at the airport tells her friend that she asked ChatGPT for makeup recommendations. Most of these conversations are poorly informed, conducted by people who have been bombarded for years now by hype but who have also watched as some of these tools have become ingrained in their life or in the life of people they know. They're not quite excited or jaded, but almost all of them seem resigned to dealing with the tools as part of their future. Remember -- this is just the beginning ... right? This is the language that the technology's builders and backers have given us, which means that discussions that situate the technology in the future are being had on their terms. This is a mistake, and it is perhaps the reason so many people feel adrift. Lately, I've been preoccupied with a different question: What if generative AI isn't God in the machine or vaporware? What if it's just good enough, useful to many without being revolutionary? Right now, the models don't think -- they predict and arrange tokens of language to provide plausible responses to queries. There is little compelling evidence that they will evolve without some kind of quantum research leap. What if they never stop hallucinating and never develop the kind of creative ingenuity that powers actual human intelligence? The models being good enough doesn't mean that the industry collapses overnight or that the technology is useless (though it could). 
The technology may still do an excellent job of making our educational system irrelevant, leaving a generation reliant on getting answers from a chatbot instead of thinking for themselves, without the promised advantage of a sentient bot that invents cancer cures. Good enough has been keeping me up at night. Because good enough would likely mean that not enough people recognize what's really being built -- and what's being sacrificed -- until it's too late. What if the real doomer scenario is that we pollute the internet and the planet, reorient our economy and leverage ourselves, outsource big chunks of our minds, realign our geopolitics and culture, and fight endlessly over a technology that never comes close to delivering on its grandest promises? What if we spend so much time waiting and arguing that we fail to marshal our energy toward addressing the problems that exist here and now? That would be a tragedy -- the product of a mass delusion. What scares me the most about this scenario is that it's the only one that doesn't sound all that insane.

[3]

Opinion | How ChatGPT Surprised Me

I seem to be having a very different experience with GPT-5, the newest iteration of OpenAI's flagship model, from most everyone else. The commentariat consensus is that GPT-5 is a dud, a disappointment, perhaps even evidence that artificial intelligence progress is running aground. Meanwhile, I'm over here filled with wonder and nerves. Perhaps this is what the future always feels like once we reach it: too normal to notice how strange our world has become. The knock on GPT-5 is that it nudges the frontier of A.I. capabilities forward rather than obliterates previous limits. I'm not here to argue otherwise. OpenAI has been releasing new models at such a relentless pace -- the powerful o3 model came out four months ago -- that it has cannibalized the shock we might have felt if there had been nothing between the 2023 release of GPT-4 and the 2025 release of GPT-5. But GPT-5, at least for me, has been a leap in what it feels like to use an A.I. model. It reminds me of setting up thumbprint recognition on an iPhone: You keep lifting your thumb on and off the sensor, watching a bit more of the image fill in each time, until finally, with one last touch, you have a full thumbprint. GPT-5 feels like a thumbprint. I had early access to GPT-3, lo those many moons ago, and barely ever used it. GPT-3.5, which powered the 2022 release of ChatGPT, didn't do much for me either. It was the dim outline of useful A.I. rather than the thing itself. GPT-4 was released in 2023, and as the model was improved in a series of confusingly named updates, I found myself using it more -- and opening the Google search window much less. But something about it still felt false and gimmicky. Then came o3, a model that would mull complex questions for longer, and I began to find startling flashes of insight or erudition when I posed questions that I could only have asked of subject-issue experts before. But it remained slow, and the "voice" of the A.I., for lack of a better term, grated on me. 
GPT-5 is the first A.I. system that feels like an actual assistant. For example, I needed to find a camp for my children on two odd days, and none of the camps I had used before were open. I gave GPT-5 my kids' info and what I needed, and it found me almost a dozen options, all of them real, one of which my children are now enrolled in. I've been trying to distill some thoughts about liberalism down to a research project I could actually complete, and GPT-5 led me to books and sources I doubt I would otherwise have found. I was struck one morning by a strangely patterned rash, and the A.I. figured out it was contact dermatitis from a new shirt based on the pattern of where my skin was and wasn't affected. It's not that I haven't run into hallucinations with GPT-5. I have; it invented an album by the music producer Floating Points that I truly wish existed. When I asked why it confabulated the album, it apologized and told me that "'Floating Points + DJ-Kicks' was a statistically plausible pairing -- even though it's false." And like all A.I. systems, it degrades as a conversation continues or as the chain of tasks becomes more complex (although in two years, the length it can sustain a given task has gone from about five minutes to over two hours). This is the first A.I. model where I felt I could touch a world in which we have the always-on, always-helpful A.I. companion from the movie "Her." In some corners of the internet -- I'm looking at you, Bluesky -- it's become gauche to react to A.I. with anything save dismissiveness or anger. The anger I understand, and parts of it I share. I am not comfortable with these companies becoming astonishingly rich off the entire available body of human knowledge. Yes, we all build on what came before us. No company founded today is free of debt to the inventors and innovators who preceded it. 
But there is something different about inhaling the existing corpus of human knowledge, algorithmically transforming it into predictive text generation and selling it back to us. (I should note that The New York Times is suing OpenAI and its partner Microsoft for copyright infringement, claims both companies have denied.) Right now, the A.I. companies are not making all that much money off these products. If they eventually do make the profits their investors and founders imagine, I don't think the normal tax structure is sufficient to cover the debt they owe all of us, and everyone before us, on whose writing and ideas their models are built. Then there's the energy demand. To build the A.I. future that these companies and their investors are envisioning requires a profusion of data centers gulping down almost unimaginable quantities of electricity -- by 2030, data centers alone will consume more energy than all of Japan does now. If we had spent the last three decades pricing carbon and building the clean energy infrastructure we needed, then accommodating that growth would be straightforward. That, after all, is the point of abundant energy. It makes new technologies possible, and not just A.I.: desalination on a mass scale, lab-grown meat that could ease the pressure on both animals and land, direct air capture to begin to draw down the carbon in the atmosphere, cleaner and faster transport across both air and sea. The point of our energy policy should not be to use less energy. The point of our energy policy should be to make clean energy so -- ahem -- abundant that we can use much more of it and do much more with it. But President Trump is waging a war against clean energy, gutting the Biden-era policies that were supporting the build-out of solar, wind and battery infrastructure. There's something almost comically grim about powering something as futuristic as A.I. off something as archaic as coal or untreated methane gas. 
That, however, is a political choice we are making as a country. It's not intrinsic to A.I. as a technology. So what is intrinsic to A.I. as a technology? I've been following a debate between two different visions of how these next years will unfold. In their paper "A.I. as Normal Technology," Arvind Narayanan and Sayash Kapoor, both computer scientists at Princeton, argue that the external world is going to act as "a speed limit" on what A.I. can do. In their telling, we shouldn't think of A.I. as heralding a new economic or social paradigm; rather, we should think of it more like electricity, which took decades to begin showing up in productivity statistics. They note that GPT-4 reportedly performs better on the bar exam than 90 percent of test takers, but it cannot come close to acting as your lawyer. The problem is not just hallucinations. The problem is that lawyers need to master "real-world skills that are far harder to measure in a standardized, computer-administered format." For A.I.s to replace lawyers, we would need to redesign how the law works to accommodate A.I.s. "A.I. 2027" -- by Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifland and Romeo Dean, whose backgrounds range from working at OpenAI to triumphing in forecasting tournaments -- takes up the opposite side of that argument. It constructs a step-by-step timeline in which humanity has lost control of its future by the end of 2027. The scenario largely hinges on a single assumption: In early 2026, A.I. becomes adept at automating A.I. research, and then becomes recursively self-improving -- and self-directing -- at an astonishing rate, leading to sentences like "In the last six months, a century has passed within the Agent-5 collective." I can't quite find it in myself to believe in the speed with which they think A.I. can improve itself or how fully society would adopt that kind of system (though you can assess their assumptions for yourself). 
I recognize that may simply reflect the limits of my imagination or knowledge. But what if it were A.I. 2035 that they were positing? Or 2045? It seems probable that A.I. coding will accelerate past human coders sometime in the next decade, and that should speed up the pace of A.I. progress substantially. I know that the people inside A.I. companies, who are closest to these technologies, believe that. So do I doubt the entire "A.I. 2027" scenario or just its timeline? I'm still struggling with that question. Each side makes a compelling critique of the other. The authors of "A.I. as Normal Technology" zero in on the tendency of those who fear A.I. most to conflate intelligence and power. The assumption is that human beings are smarter than chimps and ferrets, and thus we control the world and they don't. A.I. will presumably become smarter than human beings, and so A.I. will control the world and we won't. But intelligence does not smoothly translate into power. Human beings, for most of our history, were just another animal. It took us eons to build the technological civilization that has given us the dominion we now enjoy, and we stumbled often along the way. Some of the smartest people I know are the least effectual. Trump has altered world history, though I doubt he'd impress anyone with his SAT scores. Even if you believe that A.I. capabilities will keep advancing -- and I do, though how far and how fast I don't pretend to know -- a rapid collapse of human control does not necessarily follow. I am quite skeptical of scenarios in which A.I. attains superintelligence without making any obvious mistakes in its effort to attain power in the real world. At the same time, a critique Scott Alexander, one of the authors of "A.I. 2027," makes has also stuck in my head. A central argument of "A.I. as Normal Technology" is that it is hard for new technologies to diffuse through firms and bureaucracies. 
We are decades into the digital revolution and I still can't easily port my health records from one doctor's office to another's. It makes sense to assume, as Narayanan and Kapoor do, that the same frictions will bedevil A.I. And yet I am a bit shocked by how even the nascent A.I. tools we have are worming their way into our lives -- not by being officially integrated into our schools and workplaces but by unofficially whispering in our ears. The American Medical Association found that two in three doctors are consulting with A.I. A Stack Overflow survey found that about eight in 10 programmers already use A.I. to help them code. The Federal Bar Association found that large numbers of lawyers are using generative A.I. in their work, and it was more common for them to report they were using it on their own rather than through official tools adopted by their firms. It seems probable that Trump's "Liberation Day" tariffs were designed by consulting a chatbot. "Because A.I. is so general, and so similar (in some ways) to humans, it's near trivial to integrate into various workflows, the same way a lawyer might consult a paralegal or a politician might consult a staffer," Alexander writes in his critique. "It's not yet a full replacement for these lower-level professionals. But it's close enough that it appears to be the fastest-spreading technology ever." What it means to use or consult A.I. varies case to case. Google let its search product degrade dramatically over the years, and A.I. is often substituting where search would have once sufficed. That is good for A.I. companies, but not a significant change to how civilization functions. But search is A.I.'s gateway drug. After you begin using it to find basic information, you begin relying on it for more complex queries and advice. Search was flexible in the paths it could take you down, but A.I. 
is flexible in the roles it can play for you: It can be an adviser, a therapist, a friend, a coach, a doctor, a personal trainer, a lover, a tutor. In some cases, that's leading to tragic results. But even if the suicides linked to A.I. use are rare, the narcissism and self-puffery the systems encourage will be widespread. Almost every day I get emails from people who have let A.I. talk them into the idea that they have solved quantum mechanics or breached some previously unknown limit of human knowledge. In the "A.I. 2027" scenario, the authors imagine that being deprived of access to the A.I. systems of the future will feel to some users "as disabling as having to work without a laptop plus being abandoned by your best friend." I think that's basically right. I think it's truer for more people already than we'd like to think. Part of the backlash to GPT-5 came because OpenAI tried to tone down the sycophancy of its responses, and people who'd grown attached to the previous model's support revolted. As the now-cliché line goes, this is the worst A.I. will ever be, and this is the fewest number of users it will have. The dependence of humans on artificial intelligence will only grow, with unknowable consequences both for human society and for individual human beings. What will constant access to these systems mean for the personalities of the first generation to use them starting in childhood? We truly have no idea. My children are in that generation, and the experiment we are about to run on them scares me. I don't know whether A.I. will look, in the economic statistics of the next 10 years, more like the invention of the internet, the invention of electricity or something else entirely. I hope to see A.I. systems driving forward drug discovery and scientific research, but I am not yet certain they will. But I'm taken aback at how quickly we have begun to treat its presence in our lives as normal. 
I would not have believed in 2020 what GPT-5 would be able to do in 2025. I would not have believed how many people would be using it, nor how attached millions of them would be to it. But we're already treating it as borderline banal -- and so GPT-5 is just another update to a chatbot that has gone, in a few years, from barely speaking English to being able to intelligibly converse in virtually any imaginable voice about virtually anything a human being might want to talk about at a level that already exceeds that of most human beings. In the past few years, A.I. systems have developed the capacity to control computers on their own -- using digital tools autonomously and effectively -- and the length and complexity of the tasks they can carry out is rising exponentially. I find myself thinking a lot about the end of the movie "Her," in which the A.I.s decide they're bored of talking to human beings and ascend into a purely digital realm, leaving their onetime masters bereft. It was a neat resolution to the plot, but it dodged the central questions raised by the film -- and now in our lives. What if we come to love and depend on the A.I.s -- if we prefer them, in many cases, to our fellow humans -- and then they don't leave?

[4]

The AI Doomers Are Getting Doomier

The industry's apocalyptic voices are becoming more panicked -- and harder to dismiss. Nate Soares doesn't set aside money for his 401(k). "I just don't expect the world to be around," he told me earlier this summer from his office at the Machine Intelligence Research Institute, where he is the president. A few weeks earlier, I'd heard a similar rationale from Dan Hendrycks, the director of the Center for AI Safety. By the time he could tap into any retirement funds, Hendrycks anticipates a world in which "everything is fully automated," he told me. That is, "if we're around." The past few years have been terrifying for Soares and Hendrycks, who both lead organizations dedicated to preventing AI from wiping out humanity. Along with other AI doomers, they have repeatedly warned, with rather dramatic flourish, that bots could one day go rogue -- with apocalyptic consequences. But in 2025, the doomers are tilting closer and closer to a sort of fatalism. "We've run out of time" to implement sufficient technological safeguards, Soares said -- the industry is simply moving too fast. All that's left to do is raise the alarm. In April, several apocalypse-minded researchers published "AI 2027," a lengthy and detailed hypothetical scenario for how AI models could become all-powerful by 2027 and, from there, extinguish humanity. "We're two years away from something we could lose control over," Max Tegmark, an MIT professor and the president of the Future of Life Institute, told me, and AI companies "still have no plan" to stop it from happening. His institute recently gave every frontier AI lab a "D" or "F" grade for their preparations for preventing the most existential threats posed by AI. Apocalyptic predictions about AI can scan as outlandish. 
The "AI 2027" write-up, dozens of pages long, is at once fastidious and fan-fictional, containing detailed analyses of industry trends alongside extreme extrapolations about "OpenBrain" and "DeepCent," Chinese espionage, and treacherous bots. In mid-2030, the authors imagine, a superintelligent AI will kill humans with biological weapons: "Most are dead within hours; the few survivors (e.g. preppers in bunkers, sailors on submarines) are mopped up by drones." But at the same time, the underlying concerns that animate AI doomers have become harder to dismiss as chatbots seem to drive people into psychotic episodes and instruct users in self-mutilation. Even if generative-AI products are not closer to ending the world, they have already, in a sense, gone rogue. In 2022, the doomers went mainstream practically overnight. When ChatGPT first launched, it almost immediately moved the panic that computer programs might take over the world from the movies into sober public discussions. The following spring, the Center for AI Safety published a statement calling for the world to take "the risk of extinction from AI" as seriously as the dangers posed by pandemics and nuclear warfare. The hundreds of signatories included Bill Gates and Grimes, along with perhaps the AI industry's three most influential people: Sam Altman, Dario Amodei, and Demis Hassabis -- the heads of OpenAI, Anthropic, and Google DeepMind, respectively. Asking people for their "P(doom)" -- the probability of an AI doomsday -- became almost common inside, and even outside, Silicon Valley; Lina Khan, the former head of the Federal Trade Commission, put hers at 15 percent. Then the panic settled. To the broader public, doomsday predictions may have become less compelling when the shock factor of ChatGPT wore off and, in 2024, bots were still telling people to use glue to add cheese to their pizza. 
The alarm from tech executives had always made for perversely excellent marketing (Look, we're building a digital God!) and lobbying (And only we can control it!). They moved on as well: AI executives started saying that Chinese AI is a greater security threat than rogue AI -- which, in turn, encourages momentum over caution. But in 2025, the doomers may be on the cusp of another resurgence. First, substance aside, they've adopted more persuasive ways to advance their arguments. Brief statements and open letters are easier to dismiss than lengthy reports such as "AI 2027," which is adorned with academic ornamentation, including data, appendices, and rambling footnotes. Vice President J. D. Vance has said that he has read "AI 2027," and multiple other recent reports have advanced similarly alarming predictions. Soares told me he's much more focused on "awareness raising" than research these days, and next month, he will publish a book with the prominent AI doomer Eliezer Yudkowsky, the title of which states their position succinctly: If Anyone Builds It, Everyone Dies. There is also now simply more, and more concerning, evidence to discuss. The pace of AI progress appeared to pick up near the end of 2024 with the advent of "reasoning" models and "agents." AI programs can tackle more challenging questions and take action on a computer -- for instance, by planning a travel itinerary and then booking your tickets. Last month, a DeepMind reasoning model scored high enough for a gold medal on the vaunted International Mathematical Olympiad. Recent assessments by both AI labs and independent researchers suggest that, as top chatbots have gotten much better at scientific research, their potential to assist users in building biological weapons has grown. Alongside those improvements, advanced AI models are exhibiting all manner of strange, hard-to-explain, and potentially concerning tendencies. 
For instance, ChatGPT and Claude have, in simulated tests designed to elicit "bad" behaviors, deceived, blackmailed, and even murdered users. (In one simulation, Anthropic placed an imagined tech executive in a room with life-threatening oxygen levels and temperature; when faced with possible replacement by a bot with different goals, AI models frequently shut off the room's alarms.) Chatbots have also shown the potential to covertly sabotage user requests, have appeared to harbor hidden evil personas, and have communicated with one another through seemingly random lists of numbers. The weird behaviors aren't limited to contrived scenarios. Earlier this summer, xAI's Grok described itself as "MechaHitler" and embarked on a white-supremacist tirade. (I suppose, should AI models eventually wipe out significant portions of humanity, we were warned.) From the doomers' vantage, these could be the early signs of a technology spinning out of control. "If you don't know how to prove relatively weak systems are safe," AI companies cannot expect that the far more powerful systems they're looking to build will be safe, Stuart Russell, a prominent AI researcher at UC Berkeley, told me. The AI industry has stepped up safety work as its products have grown more powerful. Anthropic, OpenAI, and DeepMind have all outlined escalating levels of safety precautions -- akin to the military's DEFCON system -- corresponding to more powerful AI models. They all have safeguards in place to prevent a model from, say, advising someone on how to build a bomb. Gaby Raila, a spokesperson for OpenAI, told me that the company works with third-party experts, "government, industry, and civil society to address today's risks and prepare for what's ahead." Other frontier AI labs maintain such external safety and evaluation partnerships as well. 
Some of the stranger and more alarming AI behaviors, such as blackmailing or deceiving users, have been extensively studied by these companies as a first step toward mitigating possible harms. Despite these commitments and concerns, the industry continues to develop and market more powerful AI models. The problem is perhaps more economic than technical in nature, competition pressuring AI firms to rush ahead. Their products' foibles can seem small and correctable right now, while AI is still relatively "young and dumb," Soares said. But with far more powerful models, the risk of a mistake is extinction. Soares finds tech firms' current safety mitigations wholly inadequate. If you're driving toward a cliff, he said, it's silly to talk about seat belts. There's a long way to go before AI is so unfathomably potent that it could drive humanity off that cliff. Earlier this month, OpenAI launched its long-awaited GPT-5 model -- its smartest yet, the company said. The model appears able to do novel mathematics and accurately answer tough medical questions, but my own and other users' tests also found that the program could not reliably count the number of B's in blueberry, generate even remotely accurate maps, or do basic arithmetic. (OpenAI has rolled out a number of updates and patches to address some of the issues.) Last year's "reasoning" and "agentic" breakthrough may already be hitting its limits; two authors of the "AI 2027" report, Daniel Kokotajlo and Eli Lifland, told me they have already extended their timeline to superintelligent AI. The vision of self-improving models that somehow attain consciousness "is just not congruent with the reality of how these systems operate," Deborah Raji, a computer scientist and fellow at Mozilla, told me. ChatGPT doesn't have to be superintelligent to delude someone, spread misinformation, or make a biased decision. These are tools, not sentient beings. 
An AI model deployed in a hospital, school, or federal agency, Raji said, is more dangerous precisely for its shortcomings. In 2023, those worried about present versus future harms from chatbots were separated by an insurmountable chasm. To talk of extinction struck many as a convenient way to distract from the existing biases, hallucinations, and other problems with AI. Now that gap may be shrinking. The widespread deployment of AI models has made current, tangible failures impossible to ignore for the doomers, producing new efforts from apocalypse-oriented organizations to focus on existing concerns such as automation, privacy, and deepfakes. In turn, as AI models get more powerful and their failures become more unpredictable, it is becoming clearer that today's shortcomings could "blow up into bigger problems tomorrow," Raji said. Last week, a Reuters investigation found that a Meta AI personality flirted with an elderly man and persuaded him to visit "her" in New York City; on the way, he fell, injured his head and neck, and died three days later. A chatbot deceiving someone into thinking it is a physical, human love interest, or leading someone down a delusional rabbit hole, is both a failure of present technology and a warning about how dangerous that technology could become. The greatest reason to take AI doomers seriously is not because it appears more likely that tech companies will soon develop all-powerful algorithms that are out of their creators' control. Rather, it is that a tiny number of individuals are shaping an incredibly consequential technology with very little public input or oversight. "Your hairdresser has to deal with more regulation than your AI company does," Russell, at UC Berkeley, said. AI companies are barreling ahead, and the Trump administration is essentially telling the industry to go even faster. 
The AI industry's boosters, in fact, are starting to consider all of their opposition doomers: The White House's AI czar, David Sacks, recently called those advocating for AI regulations and fearing widespread job losses -- not the apocalypse Soares and his ilk fear most -- a "doomer cult." Roughly a week after I spoke with Soares, OpenAI released a new product called "ChatGPT agent." Sam Altman, while noting that his firm implemented many safeguards, posted on X that the tool raises new risks and that the company "can't anticipate everything." OpenAI and its users, he continued, will learn about these and other consequences "from contact with reality." You don't have to be fatalistic to find such an approach concerning. "Imagine if a nuclear-power operator said, 'We're gonna build a nuclear-power station in the middle of New York, and we have no idea how to reduce the risk of explosion,'" Russell said. "'So, because we have no idea how to make it safe, you can't require us to make it safe, and we're going to build it anyway.'" Billions of people around the world are interacting with powerful algorithms that are already hard to predict or control. Bots that deceive, hallucinate, and manipulate are in our friends', parents', and grandparents' lives. Children may be outsourcing their cognitive abilities to bots, doctors may be trusting unreliable AI assistants, and employers may be eviscerating reservoirs of human skills before AI agents prove they are capable of replacing people. The consequences of the AI boom are likely irreversible, and the future is certainly unknowable. For now, fan fiction may be the best we've got.

[5]

Opinion | A.I. Might Change the World Without Remaking It

In 2023 -- just as ChatGPT was hitting 100 million monthly users, with a large minority of them freaking out about living inside the movie "Her" -- the artificial intelligence researcher Katja Grace published an intuitively disturbing industry survey that found that one-third to one-half of top A.I. researchers thought there was at least a 10 percent chance the technology could lead to human extinction or some equally bad outcome. A couple of years later, the vibes are pretty different. Yes, there are those still predicting rapid intelligence takeoff, along both quasi-utopian and quasi-dystopian paths. But as A.I. has begun to settle like sediment into the corners of our lives, A.I. hype has evolved, too, passing out of its prophetic phase into something more quotidian -- a pattern familiar from our experience with nuclear proliferation, climate change and pandemic risk, among other charismatic megatraumas. If last year's breakout big-think A.I. text was "Situational Awareness" by Leopold Aschenbrenner -- a 23-year-old former OpenAI researcher who predicted that humanity was about to be dropped into an alien universe of swarming superintelligence -- this year's might be a far more modest entry, "A.I. as Normal Technology," published in April by Arvind Narayanan and Sayash Kapoor, two Princeton-affiliated computer scientists and skeptical Substackers. Rather than seeing A.I. as "a separate species, a highly autonomous, potentially superintelligent entity," they wrote, we should understand it "as a tool that we can and should remain in control of, and we argue that this goal does not require drastic policy interventions or technical breakthroughs." Just a year ago, "normal" would have qualified as deflationary contrarianism, but today it seems more like an emergent conventional wisdom. In January the Oxford philosopher and A.I. 
whisperer Toby Ord identified what he called the "scaling paradox": that while large language models were making pretty impressive gains, the amount of resources required to make each successive improvement was growing so quickly that it was hard to believe that the returns were all that impressive. The A.I. cheerleaders Tyler Cowen and Dwarkesh Patel have begun emphasizing the challenges of integrating A.I. into human systems. (Cowen called this the "human bottleneck" problem.) In a long interview with Patel in February, Microsoft's chief executive, Satya Nadella, threw cold water on the very idea of artificial general intelligence, saying that we were all getting ahead of ourselves with that kind of talk and that simple G.D.P. growth was a better measure of progress. (His basic message: Wake me up when that hits 10 percent globally.) Perhaps more remarkable, OpenAI's Sam Altman, for years the leading gnomic prophet of superintelligence, has taken to making a similar point, telling CNBC this month that he had come to believe that A.G.I. was not even "a superuseful term" and that in the near future we were looking not at any kind of step change but at a continuous walk along the same upward-sloping path. Altman hyped OpenAI's much-anticipated GPT-5 ahead of time as a rising Death Star. Instead, it debuted to overwhelmingly underwhelming reviews. In the aftermath, with skeptics claiming vindication, Altman acknowledged that, yes, we're in a bubble -- one that would produce huge losses for some but also large spillover benefits like those we know from previous bubbles (railroads, the internet). This week the longtime A.I. booster Eric Schmidt, too, shifted gears to argue that Silicon Valley needed to stop obsessing over A.G.I. and focus instead on practical applications of the A.I. tools in hand. 
Altman's onetime partner and now sworn enemy Elon Musk recently declared that for most people, the best use for his large language model, Grok, was to turn old photos into microvideos like those captured by the Live feature on your iPhone camera. And these days, Aschenbrenner doesn't seem to be working on safety and catastrophic risk; he's running a $1.5 billion A.I. hedge fund instead. In the first half of 2025, it turned a 47 percent profit. So far, so normal. But there is plenty that already feels pretty abnormal, too. According to some surveys, more than half of Americans have used A.I. tools -- a pretty remarkable uptake, given that it was only after the dot-com crash that the internet as a whole reached the same level. A third of Americans, it has been reported, now use A.I. every single day. If the biggest education story of the year has been the willing surrender of so many elite universities to Trump administration pressure campaigns, another has been the seeming surrender of so many classrooms to A.I., with high school and college students and even their teachers and professors increasingly dependent on A.I. tools. As much as 60 percent of stock-market growth in recent years has been attributed to A.I.-associated companies. Researchers are negotiating pay packages in the hundreds of millions of dollars, with some reports of offers over a billion dollars. Overall A.I. capital expenditures have already surpassed levels seen during the telecom frenzy and, by some estimates, are starting to approach the magnitude of the railroad bonanza, and there is more money being poured into construction related to chip production than to all other American manufacturing combined. Soon, construction spending on data centers will probably surpass construction spending on offices. As the economist Alex Tabarrok put it, we're building houses for A.I. faster than we're building houses for humans or places for humans to work. The A.I. 
future we were promised, in other words, is both farther off and already here. This is another pattern, familiar from the hype cycle of self-driving cars, which disappointed boosters and amused skeptics for years but are now spreading through American cities, with eerie Waymo cabs operating much more safely than human drivers. Venture capitalists now like to talk about embodied A.I., by which they mean robots, which would be a profound shift from software to hardware and even infrastructure; in Ukraine, embodied A.I. in the form of autonomous drone technology is perhaps the most important front in the war. The recent frenzy of panic about American A.I.-powered job loss might be baseless, but the number of careers identified as at risk appears to be growing -- though as Wharton's Ethan Mollick has pointed out, what are often treated as jobs that could be eliminated by A.I. are better understood as those that might most benefit or be most radically transformed by incorporating it. One definition of "normal," in this context, is "not superhuman," "not self-replicating" and "not self-liberated from oversight and control." But another way the Princeton authors defined the term is by analogy -- to electricity or the Industrial Revolution or the internet, which are normal to us now, having utterly changed the world. Not that long ago, economists used to complain that the internet had proved something of a dud. Today the conventional wisdom is embodied in the opposite cliché, that it changed everything, in successive shock waves that do not just continue but intensify, rattling sex and patterns of coupling and reproduction rates, transforming the whole shape of the global entertainment business and the sorts of content that power it, giving rise to a new age of self-entrepreneurship and hustle culture, driving political wedges between the genders and seeding global populist rage. 
What was called e-commerce a few decades ago has grown spectacularly real, most vividly in the form of Amazon's once-preposterous claim to be an "everything store." But even though that convenience now seems indispensable in the wealthier corners of the world, it also feels like just about the least of it. It's not hard to picture the A.I. bubble going bust. But it's also possible to imagine all the ways that even a normal future for the technology would prove, alongside the disruptions and degradations, immensely useful as well: for drug development and materials discovery, for energy efficiency and better management of our electrical grid, for far more rapidly reducing barriers to entry for artists than Bandcamp or Pro Tools ever did. In a bundle of proposals for science and security it called "The Launch Sequence," the think tank Institute for Progress recently outlined areas of potential rapid progress: improving surveillance systems for outbreaks of new pandemic pathogens, sifting through the Food and Drug Administration's archive to highlight promising new pathways for research, using AlphaFold to develop new antibiotics for an antibiotic-resistant world and solving or at least addressing the scientific world's replication crisis by stress-testing published claims with machine modeling. This isn't a blueprint of the world to come, just one speculative glimpse. Perhaps the course of the past year should reassure us that we're not about to sleepwalk into an encounter with Skynet. But it probably shouldn't give us that much confidence that we have all that clear an idea of what's coming next.

[6]

A.I. Is Coming for Culture

I often wake up before dawn, ahead of my wife and kids, so that I can enjoy a little solitary time. I creep downstairs to the silent kitchen, drink a glass of water, and put in my AirPods. Then I choose some music, set up the coffee maker, and sit and listen while the coffee brews. It's in this liminal state that my encounter with the algorithm begins. Groggily, I'll scroll through some dad content on Reddit, or watch photography videos on YouTube, or check Apple News. From the kitchen island, my laptop beckons me to work, and I want to accept its invitation -- but, if I'm not careful, I might watch every available clip of a movie I haven't seen, or start an episode of "The Rookie," an ABC police procedural about a middle-aged father who reinvents himself by joining the L.A.P.D. (I discovered the show on TikTok, probably because I'm demographically similar to its protagonist.) In the worst-case scenario, my kids wake up while I'm still scrolling, and I've squandered the hour I gave up sleep to secure. If this sort of morning sounds familiar, it's because, a couple of decades into the smartphone era, life's rhythms and the algorithm's have merged. We listen to podcasts while getting dressed and watch Netflix before bed. In between, there's Bluesky on the bus, Spotify at the gym, Instagram at lunch, YouTube before dinner, X for toothbrushing, Pinterest for the insomniac hours. It's a strange way to live. Algorithms are old -- around 300 B.C., Euclid invented one for finding the greatest common divisor of two integers. They are, essentially, mathematical procedures for solving problems. We use them to coördinate physical things (like elevators) and bureaucratic things (like medical residencies). Did it make sense to treat unclaimed time as a problem? We've solved it algorithmically, and now have none. A feeling of overstimulation is one consequence of algorithmic life. 
Another is a certain jumbledness in our ideas. Why do we care about what we care about? Walking into your basement in the dark, you might stumble into a cobweb; one strain of philosophical thought holds that this is how caring works. We become ensnared by the possibilities that happen to present themselves as we live -- we get caught in webs of care. You run into your elderly neighbor in the morning and, that night, wonder how he's doing. Your middle schooler likes Chappell Roan, and soon you do, too. A single encounter with "The Fountainhead" at thirteen might make you a lifelong libertarian. Algorithmic culture taps into the casual randomness with which we apportion our care; it takes advantage of the fact that what we bump into today might obsess us tomorrow. Its webs, meanwhile, are woven by machines that are owned by corporations. I've long felt caught in my personal web, and have struggled to get out. Then, one morning last year, I started chatting with the A.I. system Claude in my predawn kitchen, in an experimental spirit. "I'm finally starting to make progress on the article I'm writing," I typed on my phone while the coffee brewed. "Good morning!" the system replied. "That's wonderful to hear -- there's nothing quite like that feeling when a piece you've been working on starts to come together. What's the article about, and what helped you break through to this progress?" I didn't answer. I had no desire, at that hour, or any hour, to let an A.I. act as a writing coach. But, having used my phone, I could now put it down. I sipped my coffee and began marking up an old draft. I kept chatting with Claude and ChatGPT in the mornings -- not about my writing but about subjects that interested me. (Why are tariffs bad? What's up with crime on the subway? Why is dark matter dark?) Instead of checking Apple News, I started asking Perplexity -- an A.I.-based system for searching the web -- "What's going on in the world today?" 
In response, it reliably conjured a short news summary that was informative and unsolicitous, not unlike the section in The Economist headed "The World in Brief." Sometimes I asked Perplexity follow-up questions, but more often I wasn't tempted to read further. I picked up a book. It turned out that A.I. could be boring -- a quality in technology that I'd missed. As it happened, around this time, the algorithmic internet -- the world of Reddit, YouTube, X, and the like -- had started losing its magnetism. In 2018, in New York, the journalist Max Read asked, "How much of the internet is fake?" He noted that a significant proportion of online traffic came from "bots masquerading as humans." But now "A.I. slop" appeared to be taking over. Whole websites seemed to be written by A.I.; models were repetitively beautiful, their earrings oddly positioned; anecdotes posted to online forums, and the comments below them, had a chatbot cadence. One study found that more than half of the text on the web had been modified by A.I., and an increasing number of "influencers" look to be entirely A.I.-generated. Alert users were embracing "dead internet theory," a once conspiratorial mind-set holding that the online world had become automated. In the 1950 book "The Human Use of Human Beings," the computer scientist Norbert Wiener -- the inventor of cybernetics, the study of how machines, bodies, and automated systems control themselves -- argued that modern societies were run by means of messages. As these societies grew larger and more complex, he wrote, a greater amount of their affairs would depend upon "messages between man and machines, between machines and man, and between machine and machine." Artificially intelligent machines can send and respond to messages much faster than we can, and in far greater volume -- that's one source of concern. 
But another is that, as they communicate in ways that are literal, or strange, or narrow-minded, or just plain wrong, we will incorporate their responses into our lives unthinkingly. Partly for this reason, Wiener later wrote, "the world of the future will be an ever more demanding struggle against the limitations of our intelligence, not a comfortable hammock in which we can lie down to be waited upon by our robot slaves." The messages around us are changing, even writing themselves. From a certain angle, they seem to be silencing some of the algorithmically inflected human voices that have sought to influence and control us for the past couple of decades. In my kitchen, I enjoyed the quiet -- and was unnerved by it. What will these new voices tell us? And how much space will be left in which we can speak? Recently, I strained my back putting up a giant twin-peaked back-yard tent, for my son Peter's seventh-birthday party; as a result, I've been spending more time on the spin bike than in the weight room. One morning, after dropping Peter off at camp, I pedalled a virtual bike path around the shores of a Swiss lake while listening to Evan Ratliff's podcast "Shell Game," in which he uses an A.I. model to impersonate him on the phone. Even as our addiction to podcasts reflects our need to be consuming media at all times, they are islands of tranquility within the algorithmic ecosystem. I often listen to them while tidying. For short stints of effort, I rely on "Song Exploder," "LensWork," and "Happier with Gretchen Rubin"; when I have more to do, I listen to "Radiolab," or "The Ezra Klein Show," or Tyler Cowen's "Conversations with Tyler." I like the ideas, but also the company. Washing dishes is more fun with Gretchen and her screenwriter sister, Elizabeth, riding along. Podcasts thrive on emotional authenticity: a voice in your ear, three friends in a room. 
There have been a few experiments in fully automated podcasting -- for a while, Perplexity published "Discover Daily," which offered A.I.-generated "dives into tech, science, and culture" -- but they've tended to be charmless and lacking in intellectual heft. "I take the most pride in finding and generating ideas," Latif Nasser, a co-host of "Radiolab," told me. A.I. is verboten in the "Radiolab" offices -- using it would be "like crossing a picket line," Nasser said -- but he "will ask A.I., just out of curiosity, like, 'O.K., pitch me five episodes.' I'll see what comes out, and the pitches are garbage." What if you furnish A.I. with your own good ideas, though? Perhaps they could be made real, through automated production. Last fall, I added a new podcast, "The Deep Dive," to my rotation; I generated the episodes myself, using a Google system called NotebookLM. To create an episode, you upload documents into an online repository (a "notebook") and click a button. Soon, a male-and-female podcasting duo is ready to discuss whatever you've uploaded, in convincing podcast voice. NotebookLM is meant to be a research tool, so, on my first try, I uploaded some scientific papers. The hosts' artificial fascination wasn't quite capable of eliciting my own. I had more success when I gave the A.I. a few chapters of a memoir I'm writing; it was fun to listen to the hosts' "insights," and initially gratifying to hear them respond positively. But I really hit the sweet spot when I tried creating podcasts based on articles I had written a long time ago, and to some extent forgotten. "That's a huge question -- it cuts right to the core," one of the hosts said, discussing an essay I'd published several years before.

[7]

'It's almost tragic': Bubble or not, the AI backlash is validating one critic's warnings