The Rise of 'AI Slop': How Artificial Intelligence is Flooding Social Media with Fake Content

2 Sources

[1]

What is AI slop? Why you are seeing more fake photos and videos in your social media feeds

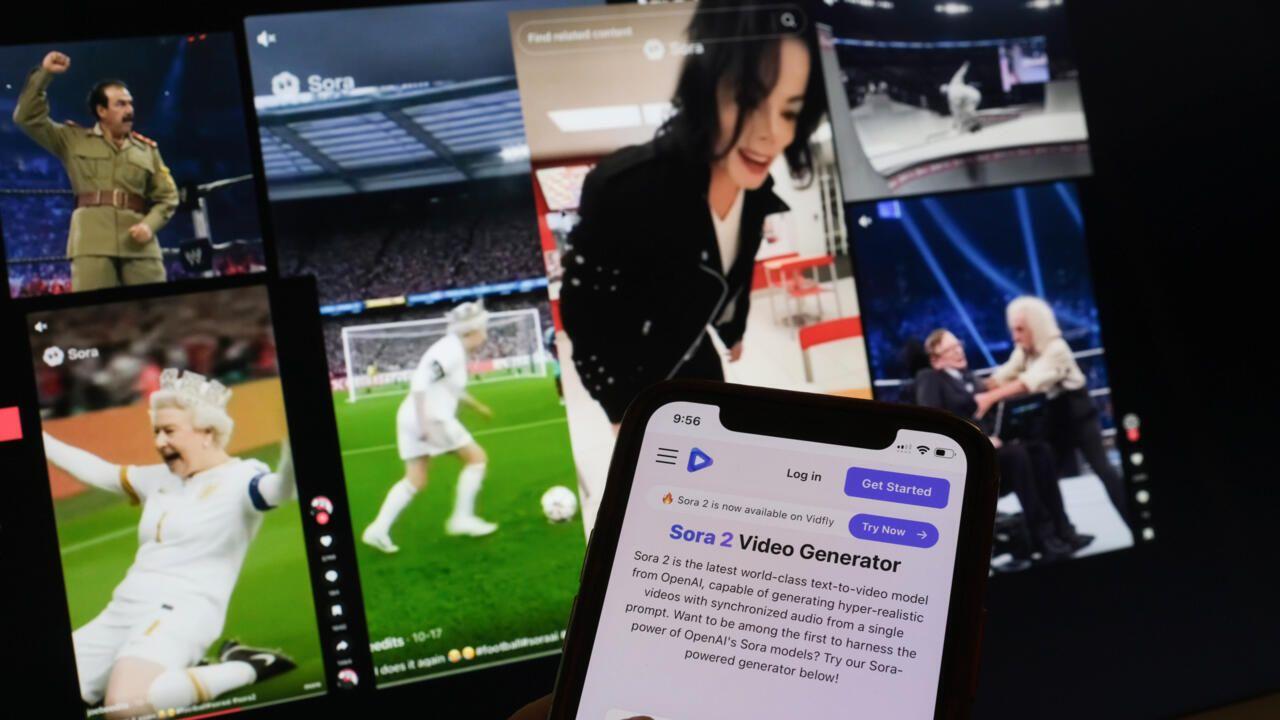

In May 2025, a post asking "[Am I the asshole] for telling my husband's affair partner's fiancé about their relationship?" quickly received 6,200 upvotes and more than 900 comments on Reddit. This popularity earned the post a spot on Reddit's front page of trending posts. The problem? It was (very likely) written by artificial intelligence (AI).

The post contained some telltale signs of AI, such as using stock phrases ("[my husband's] family is furious") and excessive quotation marks, and sketching an unrealistic scenario designed to generate outrage rather than reflect a genuine dilemma. While this post has since been removed by the forum's moderators, Reddit users have repeatedly expressed their frustration with the proliferation of this kind of content.

High-engagement, AI-generated posts on Reddit are an example of what is known as "AI slop": cheap, low-quality AI-generated content, created and shared by anyone from low-level influencers to coordinated political influence operations.

Estimates suggest that over half of longer English-language posts on LinkedIn are written by AI. In response to that report, Adam Walkiewicz, a director of product at LinkedIn, told Wired that the company has "robust defenses in place to proactively identify low-quality and exact or near-exact duplicate content. When we detect such content, we take action to ensure it is not broadly promoted."

But AI-generated low-quality news sites are popping up all over the place, and AI images are also flooding social media platforms such as Facebook. You may have come across images like "shrimp Jesus" in your own feeds.

AI-generated content is cheap.
A report by the NATO StratCom Center of Excellence from 2023 found that for a mere €10 (about £8), you can buy tens of thousands of fake views and likes, and hundreds of AI-generated comments, on almost all major social media platforms.

While much of it is seemingly innocent entertainment, one study from 2024 found that about a quarter of all internet traffic is made up of "bad bots". These bots, which seek to spread disinformation, scalp event tickets or steal personal data, are also becoming much better at masquerading as humans.

In short, the world is dealing with the "enshittification" of the web: online services have become gradually worse over time as tech companies prioritise profits over user experience. AI-generated content is just one aspect of this.

From Reddit posts that enrage readers to tearjerking cat videos, this content is extremely attention-grabbing and thus lucrative for both slop-creators and platforms. This is known as engagement bait: a tactic to get people to like, comment and share, regardless of the quality of the post.

And you don't need to seek out the content to be exposed to it. One study explored how engagement bait, such as images of cute babies wrapped in cabbage, is recommended to social media users even when they do not follow any AI-slop pages or accounts. These pages, which often link to low-quality sources and promote real or made-up products, may be designed to boost their follower base in order to sell the account later for profit.

Meta (Facebook's parent company) said in April that it is cracking down on "spammy" content that tries to "game the Facebook algorithm to increase views", but did not specify AI-generated content. Meta has used its own AI-generated profiles on Facebook, but has since removed some of these accounts.

What the risks are

This may all have serious consequences for democracy and political communication.
AI can cheaply and efficiently create misinformation about elections that is indistinguishable from human-generated content. Ahead of the 2024 US presidential election, researchers identified a large influence campaign designed to advocate for Republican issues and attack political adversaries.

And before you think it's only Republicans doing it, think again: these bots are as biased as humans of all perspectives. A report by Rutgers University found that Americans on all sides of the political spectrum rely on bots to promote their preferred candidates.

Researchers aren't innocent either: scientists at the University of Zurich were recently caught using AI-powered bots to post on Reddit as part of a research project on whether inauthentic comments can change people's minds. But they failed to disclose to Reddit moderators that these comments were fake. Reddit is now considering taking legal action against the university. The company's chief legal officer said: "What this University of Zurich team did is deeply wrong on both a moral and legal level."

Political operatives, including from authoritarian countries such as Russia, China and Iran, invest considerable sums in AI-driven operations to influence elections around the democratic world. How effective these operations are is up for debate. One study found that Russia's attempts to interfere in the 2016 US elections through social media were a dud, while another found that this activity predicted polling figures for Trump. Regardless, these campaigns are becoming much more sophisticated and well-organised.

And even seemingly apolitical AI-generated content can have consequences. The sheer volume of it makes accessing real news and human-generated content difficult.

What's to be done?

Malign AI content is proving extremely hard for humans and computers alike to spot.
Computer scientists recently identified a bot network of about 1,100 fake X accounts posting machine-generated content (mostly about cryptocurrency) and interacting with each other through likes and retweets. Problematically, the Botometer (a tool they developed to detect bots) failed to identify these accounts as fake.

The use of AI is relatively easy to spot if you know what to look for, particularly when content is formulaic or unapologetically fake. But it's much harder when it comes to short-form content (for example, Instagram comments) or high-quality fake images. And the technology used to create AI slop is quickly improving.

As close observers of AI trends and the spread of misinformation, we would love to end on a positive note and offer practical remedies to spot AI slop or reduce its potency. But in reality, many people are simply jumping ship.

Dissatisfied with the amount of AI slop, social media users are escaping traditional platforms and joining invite-only online communities. This may lead to further fracturing of our public sphere and exacerbate polarisation, as the communities we seek out are often composed of like-minded individuals.

As this sorting intensifies, social media risks devolving into mindless entertainment, produced and consumed mostly by bots that interact with other bots while we humans spectate. Of course, platforms don't want to lose users, but they might push as much AI slop as the public can tolerate.

Some potential technical solutions include labelling AI-generated content through improved bot detection and disclosure regulation, although it's unclear how well warnings like these work in practice. Some research also shows promise in helping people to better identify deepfakes, but that research is in its early stages.

Overall, we are just starting to realise the scale of the problem. Soberingly, if humans drown in AI slop, so does AI: AI models trained on the "enshittified" internet are likely to produce garbage.

[2]

What is AI slop? Why you are seeing more fake photos and videos in your social media feeds

This article is republished from The Conversation under a Creative Commons license.


An in-depth look at the proliferation of AI-generated content on social media platforms, its impact on user experience, and the potential risks to democracy and political communication.

The Rise of 'AI Slop' on Social Media

In May 2025, a Reddit post about marital infidelity garnered significant attention, receiving 6,200 upvotes and more than 900 comments. However, this popular post was likely generated by artificial intelligence (AI), exemplifying a growing trend of "AI slop" flooding social media platforms [1][2].

AI slop refers to cheap, low-quality content created by AI and distributed across various platforms. This phenomenon has become increasingly prevalent, with estimates suggesting that over half of longer English-language posts on LinkedIn are AI-generated [1][2].

Source: Tech Xplore

The Proliferation of AI-Generated Content

The ease and low cost of producing AI-generated content have contributed to its rapid spread. A 2023 report by the NATO StratCom Center of Excellence revealed that for as little as €10 (about £8), one could purchase tens of thousands of fake views and likes, and hundreds of AI-generated comments, on major social media platforms [1][2].

This influx of artificial content is not limited to text. AI-generated images, such as the viral "shrimp Jesus," have become commonplace on platforms like Facebook [1][2]. The phenomenon extends to news sites as well, with AI-generated low-quality news outlets proliferating across the internet.

The Impact on User Experience and Platform Integrity

The surge in AI-generated content has led to what some call the "enshittification" of the web, where online services gradually deteriorate as tech companies prioritize profits over user experience [1][2]. This content often takes the form of "engagement bait," designed to provoke reactions and shares regardless of quality.

Social media giants have begun to address this issue. LinkedIn claims to have robust defenses against low-quality and duplicate content, while Meta (Facebook's parent company) has announced efforts to crack down on "spammy" content that manipulates its algorithm [1][2].

Risks to Democracy and Political Communication

Source: The Conversation

The implications of AI slop extend beyond mere annoyance, potentially posing serious threats to democratic processes. AI can efficiently create convincing misinformation about elections, making it difficult to distinguish from genuine content [1][2].

Research has identified large-scale influence campaigns using AI-generated content to advocate for political issues and attack opponents. A report by Rutgers University found that Americans across the political spectrum are utilizing bots to promote their preferred candidates [1][2].

The Challenge of Detection and Regulation

Identifying malign AI content has proven challenging for both humans and computers. A recent study revealed a network of about 1,100 fake accounts on X (formerly Twitter) posting machine-generated content, which even sophisticated bot detection tools failed to identify [2].

The proliferation of AI slop has also raised ethical concerns in academia. Scientists at the University of Zurich faced backlash for using AI-powered bots on Reddit without proper disclosure, leading to potential legal action from the platform [1][2].

As AI-generated content becomes more sophisticated and widespread, the need for effective detection methods and regulatory frameworks becomes increasingly urgent. The challenge lies in balancing the potential benefits of AI technology with the preservation of authentic human communication and the integrity of online platforms.

Summarized by Navi
