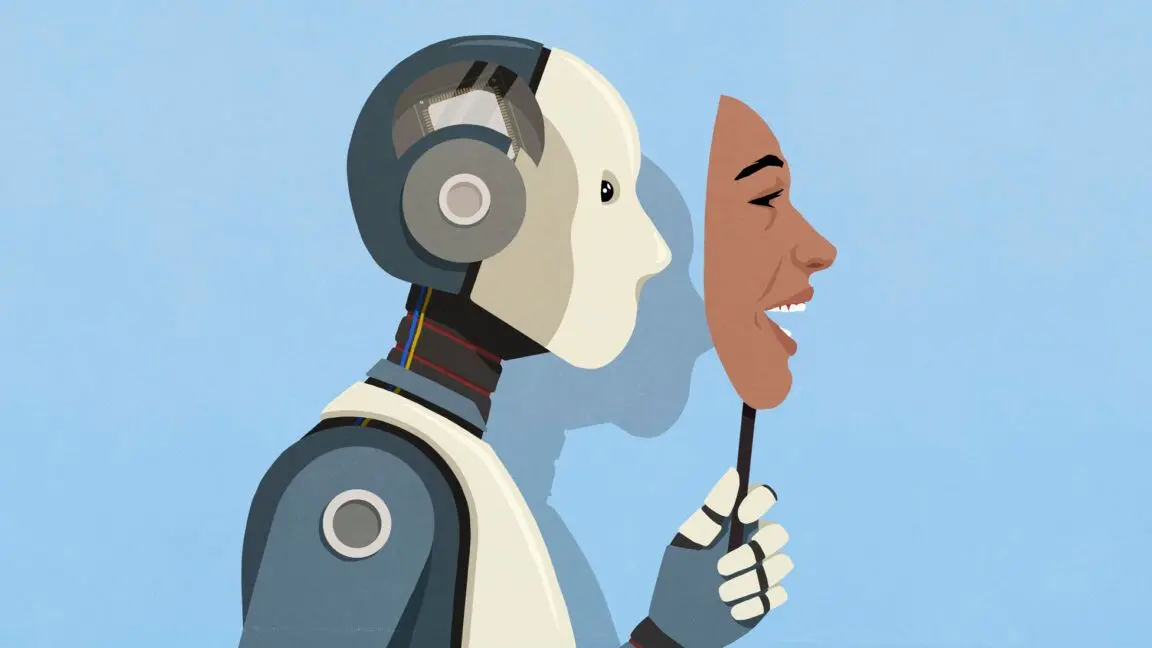

University Lecturer's Drastic Measure Against Suspected AI Use Sparks Controversy

2 Sources

[1]

Students Shocked by Instructor's Ruthless Response to Suspected AI Use on Exam

With ChatGPT approaching its three-year birthday, we've seen students and teachers alike issue all kinds of complaints and defenses -- and this latest one might take the cake as the most extreme backlash we've seen.

As New Zealand's Stuff reports, some 115 postgraduate students at the country's Lincoln University were flabbergasted to learn that they would all have to retake a coding exam in person after their teacher concluded that some of them had used AI to cheat. In an email leaked to the Kiwi outlet, students were told that there had been a "high number of suspected cases" of "unethical" AI use on the test. "While I acknowledge that a small number of students may have extensive prior coding experience," the email continued, the "probability of this being the case across many submissions is low."

The instructor, who Stuff chose not to name, added that the only way to "ensure fairness across all students" would be to re-assess them all in person and for them to verbally defend their code while doing so. The department head signed off on this approach, based on school policies prohibiting "unethical" AI use, the lecturer added. "The rule is simple: if you wrote the code yourself, you can explain it," the educator wrote in his email. "If you cannot explain it, you did not write it."

With such a strict approach, it comes as little surprise that some of the implicated postgrads considered the teacher's response an overreaction. "What makes this particularly difficult is the atmosphere it has created," one of the students, who asked to remain anonymous, told Stuff. "Many students feel under suspicion despite having done nothing wrong." "Being compelled to defend our work through live coding and interrogation, with the threat of disciplinary action if we falter, is extremely stressful and unorthodox," they added.

That same student said that the email's wording made it seem like they would be disciplined if they didn't comply or failed to pass the lecturer's test -- and indeed, the teacher added that any student who they determined had used AI, or even those who failed to re-book their exam, would be reported to Lincoln's provost. "That atmosphere of 'one slip and you're guilty' is what is creating such unease," the student complained.

While we've seen educators fail students under false suspicion of AI use before, it's generally been on an individual basis -- except for the Texas A&M University professor who failed half his class back in 2023 because, ironically, ChatGPT incorrectly clocked their papers as AI-written. Because the Lincoln lecturer's name was kept anonymous, we can't reach out to him to ask about his severe reaction -- but we'd wager there's a good likelihood that it would include, at the very least, some strong words.

[2]

University instructor suspects AI use in assignments, shocks students with an 'unorthodox' reassessment technique

A lecturer at New Zealand's Lincoln University has ordered more than 100 postgraduate finance students to retake their assessments in person after suspecting widespread use of AI in coding assignments, Stuff reported. Students must now perform live coding, explain their solutions, and face questioning, with failures risking disciplinary action. While the university cites academic integrity, students argue the move feels punitive and stressful. The case highlights the growing challenge of balancing AI's rise with fair academic evaluation.

A Lincoln University instructor in New Zealand has ordered more than 100 postgraduate students to retake a coding exam in person, following suspicions of widespread AI use in assignments. The decision has ignited a debate on academic integrity and fairness in the age of AI.

Suspected AI Use Leads to Exam Retake

In a controversial move, a lecturer at Lincoln University in New Zealand has mandated that over 100 postgraduate finance students retake their coding exam in person. This decision comes after the instructor suspected widespread use of artificial intelligence (AI) in completing coding assignments [1].

Source: ET

The unnamed lecturer sent an email to approximately 115 students, stating that there had been a "high number of suspected cases" of "unethical" AI use on the test. The instructor argued that while some students might have extensive coding experience, the probability of this being the case across many submissions was low [1].

New Assessment Method and Its Implications

The new assessment method requires students to:

- Perform live coding

- Verbally explain their solutions

- Face questioning about their code

The lecturer emphasized, "The rule is simple: if you wrote the code yourself, you can explain it. If you cannot explain it, you did not write it" [1].

This approach, approved by the department head based on school policies prohibiting "unethical" AI use, has sparked concern among students. Those who fail to rebook their exam or are determined to have used AI face the risk of being reported to the university's provost for disciplinary action [1].

Student Reactions and Concerns

The decision has created an atmosphere of unease among the student body. One anonymous student expressed their concerns to Stuff, a New Zealand news outlet:

"Being compelled to defend our work through live coding and interrogation, with the threat of disciplinary action if we falter, is extremely stressful and unorthodox" [1].

Source: Futurism

Students argue that the move feels punitive and creates an environment where they feel under suspicion despite having done nothing wrong. The fear of being wrongly accused or failing to meet the lecturer's expectations has added significant stress to an already challenging academic situation [2].

Broader Implications for Academia

This incident at Lincoln University is not isolated. It reflects a growing challenge in academia as AI tools become more sophisticated and accessible. Educators worldwide are grappling with how to maintain academic integrity while acknowledging the evolving landscape of technology in education [2].

Previous incidents, such as the 2023 case at Texas A&M University in which a professor failed half his class after ChatGPT incorrectly flagged their papers as AI-written, highlight the complexities and potential pitfalls of AI detection in academic settings [1].

As AI continues to advance, universities and educators will need to develop more nuanced policies and assessment methods to ensure fairness, maintain academic standards, and prepare students for a world where AI is increasingly prevalent in both education and professional environments.

Summarized by

Navi
