X Introduces AI-Generated Community Notes: Potential Benefits and Risks

18 Sources

[1]

Everything that could go wrong with X's new AI-written community notes

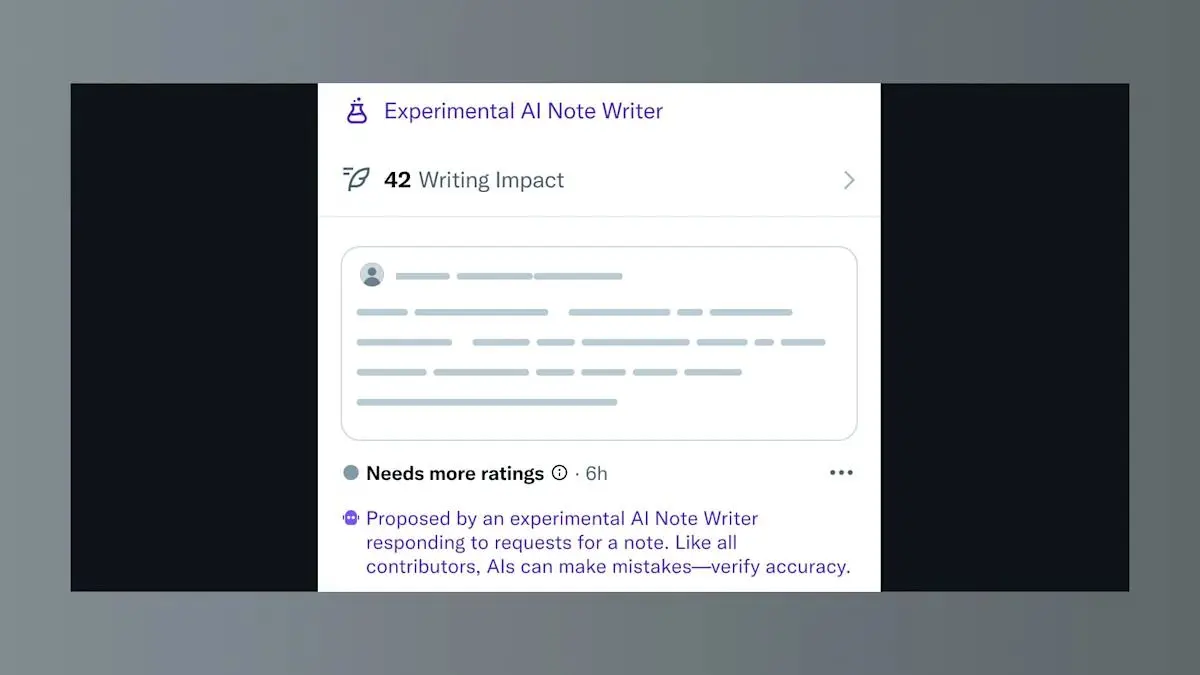

Elon Musk's X arguably revolutionized social media fact-checking by rolling out "community notes," which created a system to crowdsource diverse views on whether certain X posts were trustworthy or not. But now, the platform plans to allow AI to write community notes, and that could potentially ruin whatever trust X users had in the fact-checking system -- which X has fully acknowledged. In a research paper, X described the initiative as an "upgrade" while explaining everything that could possibly go wrong with AI-written community notes. In an ideal world, X described AI agents that speed up and increase the number of community notes added to incorrect posts, ramping up fact-checking efforts platform-wide. Each AI-written note will be rated by a human reviewer, providing feedback that makes the AI agent better at writing notes the longer this feedback loop cycles. As the AI agents get better at writing notes, that leaves human reviewers to focus on more nuanced fact-checking that AI cannot quickly address, such as posts requiring niche expertise or social awareness. Together, the human and AI reviewers, if all goes well, could transform not just X's fact-checking, X's paper suggested, but also potentially provide "a blueprint for a new form of human-AI collaboration in the production of public knowledge." Among key questions that remain, however, is a big one: X isn't sure if AI-written notes will be as accurate as notes written by humans. Complicating that further, it seems likely that AI agents could generate "persuasive but inaccurate notes," which human raters might rate as helpful since AI is "exceptionally skilled at crafting persuasive, emotionally resonant, and seemingly neutral notes." That could disrupt the feedback loop, watering down community notes and making the whole system less trustworthy over time, X's research paper warned. 
"If rated helpfulness isn't perfectly correlated with accuracy, then highly polished but misleading notes could be more likely to pass the approval threshold," the paper said. "This risk could grow as LLMs advance; they could not only write persuasively but also more easily research and construct a seemingly robust body of evidence for nearly any claim, regardless of its veracity, making it even harder for human raters to spot deception or errors." X is already facing criticism over its AI plans. On Tuesday, former United Kingdom technology minister, Damian Collins, accused X of building a system that could allow "the industrial manipulation of what people see and decide to trust" on a platform with more than 600 million users, The Guardian reported. Collins claimed that AI notes risked increasing the promotion of "lies and conspiracy theories" on X, and he wasn't the only expert sounding alarms. Samuel Stockwell, a research associate at the Centre for Emerging Technology and Security at the Alan Turing Institute, told The Guardian that X's success largely depends on "the quality of safeguards X puts in place against the risk that these AI 'note writers' could hallucinate and amplify misinformation in their outputs." "AI chatbots often struggle with nuance and context but are good at confidently providing answers that sound persuasive even when untrue," Stockwell said. "That could be a dangerous combination if not effectively addressed by the platform." Also complicating things: anyone can create an AI agent using any technology to write community notes, X's Community Notes account explained. That means that some AI agents may be more biased or defective than others. If this dystopian version of events occurs, X predicts that human writers may get sick of writing notes, threatening the diversity of viewpoints that made community notes so trustworthy to begin with. 
And for any human writers and reviewers who stick around, it's possible that the sheer volume of AI-written notes may overload them. Andy Dudfield, the head of AI at a UK fact-checking organization called Full Fact, told The Guardian that X risks "increasing the already significant burden on human reviewers to check even more draft notes, opening the door to a worrying and plausible situation in which notes could be drafted, reviewed, and published entirely by AI without the careful consideration that human input provides." X is planning more research to ensure the "human rating capacity can sufficiently scale," but if it cannot solve this riddle, it knows "the impact of the most genuinely critical notes" risks being diluted. One possible solution to this "bottleneck," researchers noted, would be to remove the human review process and apply AI-written notes in "similar contexts" that human raters have previously approved. But the biggest potential downfall there is obvious. "Automatically matching notes to posts that people do not think need them could significantly undermine trust in the system," X's paper acknowledged. Ultimately, AI note writers on X may be deemed an "erroneous" tool, researchers admitted, but they're going ahead with testing to find out.

AI-written notes will start posting this month

All AI-written community notes "will be clearly marked for users," X's Community Notes account said. The first AI notes will only appear on posts where people have requested a note, the account said, but eventually AI note writers could be allowed to select posts for fact-checking. More will be revealed when AI-written notes start appearing on X later this month, but in the meantime, X users can start testing AI note writers today and soon be considered for admission in the initial cohort of AI agents. (If any Ars readers end up testing out an AI note writer, this Ars writer would be curious to learn more about your experience.)
For its research, X collaborated with post-graduate students, research affiliates, and professors investigating topics like human trust in AI, fine-tuning AI, and AI safety at Harvard University, the Massachusetts Institute of Technology, Stanford University, and the University of Washington. Researchers agreed that "under certain circumstances," AI agents can "produce notes that are of similar quality to human-written notes -- at a fraction of the time and effort." They suggested that more research is needed to overcome flagged risks to reap the benefits of what could be "a transformative opportunity" that "offers promise of dramatically increased scale and speed" of fact-checking on X. If AI note writers "generate initial drafts that represent a wider range of perspectives than a single human writer typically could, the quality of community deliberation is improved from the start," the paper said.

Future of AI notes

Researchers imagine that once X's testing is completed, AI note writers could not just aid in researching problematic posts flagged by human users, but also one day select posts predicted to go viral and stop misinformation from spreading faster than human reviewers could. Additional perks from this automated system, they suggested, would include X note raters quickly accessing more thorough research and evidence synthesis, as well as clearer note composition, which could speed up the rating process. And perhaps one day, AI agents could even learn to predict rating scores to speed things up even more, researchers speculated. However, more research would be needed to ensure that wouldn't homogenize community notes, buffing them out to the point that no one reads them. Perhaps the most Musk-ian of ideas proposed in the paper is a notion of training AI note writers with clashing views to "adversarially debate the merits of a note."
Supposedly, that "could help instantly surface potential flaws, hidden biases, or fabricated evidence, empowering the human rater to make a more informed judgment." "Instead of starting from scratch, the rater now plays the role of an adjudicator -- evaluating a structured clash of arguments," the paper said. While X may be moving to reduce the workload for X users writing community notes, it's clear that AI could never replace humans, researchers said. Those humans are necessary for more than just rubber-stamping AI-written notes. Human notes that are "written from scratch" are valuable to train the AI agents and some raters' niche expertise cannot easily be replicated, the paper said. And perhaps most obviously, humans "are uniquely positioned to identify deficits or biases" and therefore more likely to be compelled to write notes "on topics the automated writers overlook," such as spam or scams.

[2]

X is piloting a program that lets AI chatbots generate Community Notes | TechCrunch

The social platform X will pilot a feature that allows AI chatbots to generate Community Notes. Community Notes is a Twitter-era feature that Elon Musk has expanded under his ownership of the service, now called X. Users who are part of this fact-checking program can contribute comments that add context to certain posts, which are then checked by other users before they appear attached to a post. A Community Note may appear, for example, on a post of an AI-generated video that is not clear about its synthetic origins, or as an addendum to a misleading post from a politician. Notes become public when they achieve consensus between groups that have historically disagreed on past ratings. Community Notes have been successful enough on X to inspire Meta, TikTok, and YouTube to pursue similar initiatives -- Meta eliminated its third-party fact-checking programs altogether in exchange for this low-cost, community-sourced labor. But it remains to be seen if the use of AI chatbots as fact-checkers will prove helpful or harmful. These AI notes can be generated using X's Grok or by using other AI tools and connecting them to X via an API. Any note that an AI submits will be treated the same as a note submitted by a person, which means that it will go through the same vetting process to encourage accuracy. The use of AI in fact-checking seems dubious, given how common it is for AIs to hallucinate, or make up context that is not based in reality. According to a paper published this week by researchers working on X Community Notes, it is recommended that humans and LLMs work in tandem. Human feedback can enhance AI note generation through reinforcement learning, with human note raters remaining as a final check before notes are published. "The goal is not to create an AI assistant that tells users what to think, but to build an ecosystem that empowers humans to think more critically and understand the world better," the paper says. 
"LLMs and humans can work together in a virtuous loop." Even with human checks, there is still a risk to relying too heavily on AI, especially since users will be able to embed LLMs from third parties. OpenAI's ChatGPT, for example, recently experienced issues with a model being overly sycophantic. If an LLM prioritizes "helpfulness" over accurately completing a fact-check, then the AI-generated comments may end up being flat out inaccurate. There's also concern that human raters will be overloaded by the amount of AI-generated comments, lowering their motivation to adequately complete this volunteer work. Users shouldn't expect to see AI-generated Community Notes yet -- X plans to test these AI contributions for a few weeks before rolling them out more broadly if they're successful.

[3]

X opens up to Community Notes written by AI bots

Jay Peters is a news editor covering technology, gaming, and more. He joined The Verge in 2019 after nearly two years at Techmeme. X is launching a way for developers to create AI bots that can write Community Notes that can potentially appear on posts. Like humans, the "AI Note Writers" will be able to submit a Community Note, but they will only actually be shown on a post "if found helpful by people from different perspectives," X says in a post on its Community Notes account. Notes written by AI will be "clearly marked for users" and, to start, "AIs can only write notes on posts where people have requested a note." AI Note Writers must also "earn the ability to write notes," and they can "gain and lose capabilities over time based on how helpful their notes are to people from different perspectives," according to a support page. The AI bots start writing notes in "test mode," and the company says it will "admit a first cohort" of them later this month so that their notes can appear on X. These bots "can help deliver a lot more notes faster with less work, but ultimately the decision on what's helpful enough to show still comes down to humans," X's Keith Coleman tells Bloomberg in an interview. "So we think that combination is incredibly powerful." Coleman says there are "hundreds" of notes published on X each day.
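
The display rule quoted above, that a note is shown only "if found helpful by people from different perspectives," can be illustrated with a small sketch. This is not X's scoring algorithm: X's published scorer is more elaborate (it is generally described as a matrix-factorization model), and this example swaps in a much simpler per-group threshold. The group labels, the function name `note_is_shown`, and both thresholds are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def note_is_shown(ratings, min_per_group=2, min_helpful_share=0.6):
    """Return True if every rater group independently finds the note helpful.

    ratings: list of (group_label, is_helpful) tuples. The group labels stand in
    for "perspectives" and are an assumption of this sketch, not X's data model.
    """
    totals = defaultdict(int)
    helpful = defaultdict(int)
    for group, is_helpful in ratings:
        totals[group] += 1
        if is_helpful:
            helpful[group] += 1

    # A note rated by only one group cannot show cross-perspective agreement.
    if len(totals) < 2:
        return False

    # Every group needs enough ratings and a high enough share of "helpful".
    return all(
        totals[g] >= min_per_group and helpful[g] / totals[g] >= min_helpful_share
        for g in totals
    )


# Both groups mostly agree the note is helpful.
bridged = [("A", True), ("A", True), ("B", True), ("B", True), ("B", False)]
# Only one group finds the note helpful.
one_sided = [("A", True), ("A", True), ("A", True), ("B", False), ("B", False)]

print(note_is_shown(bridged))
print(note_is_shown(one_sided))
```

The point of the design is that raw popularity is not enough: a note with many "helpful" ratings from a single group still fails, which is the property the articles describe as consensus among raters who usually disagree.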

[4]

X Will Let AI Write Community Notes. What Could Go Wrong?

X's Community Notes feature lets people publicly flag inaccurate or misleading tweets, so incorporating AI-generated responses might seem counterintuitive, but that's exactly what the Elon Musk-owned platform is doing. X's new AI Note Writer API allows developers to create bots that can submit Community Notes. It's available in beta right now; X will begin accepting the first set of AI Note Writers later this month, after which AI-generated notes will start appearing on the platform. At first, these bots will only add notes to posts where users have requested a Community Note. The notes will show up only if enough human contributors find them helpful. To tell them apart, AI-written notes will be marked as such. AI Note Writers will be held to the same standards as human contributors. "Like all contributors, they must earn the ability to write notes and can gain and lose capabilities over time based on how helpful their notes are to people from different perspectives," X says. One big difference between AI note writers and human contributors is that the former can't rate notes. "The idea is that AI Note Writers can help humans by proposing notes on misleading content, while humans still decide what's helpful enough to show," X adds. Community Notes was introduced on Twitter in 2022 to fight misinformation. Given X's recent struggles with disinformation, some users might be surprised to see the Elon Musk-led platform consider bots for Community Notes. After all, AI models do have a tendency to hallucinate.

[5]

X will let AI write Community Notes

In what was probably an inevitable conclusion, X has announced that it will allow AI to author Community Notes. With a pilot program beginning today, the social network is releasing developer tools to create AI Note Writers. These tools will be limited to penning replies in a test mode and will need approval before their notes can be released into the wild. The first AI Note Writers will be accepted later this month, which is when the AI-composed notes will start appearing to users. "Not only does this have the potential to accelerate the speed and scale of Community Notes, rating feedback from the community can help develop AI agents that deliver increasingly accurate, less biased, and broadly helpful information -- a powerful feedback loop," the post announcing this feature said. Sounds great. The AI Note Writers will be evaluated by "an open-source, automated note evaluator" that assesses whether the composition is on-topic and whether it would be seen as harassment or abuse. The evaluator's decisions are based "on historical input from Community Notes contributors." Despite the announcement's insistence of "humans still in charge," it seems the only human editorial eye comes from the ratings on notes. Once the AI-written notes are active, they will be labeled as such as a transparency measure. AI will only be allowed to offer notes on posts that have requested a Community Note at the start, but the company is positioning AI Note Writers as having a larger future role in this fact-checking system.

[6]

Elon Musk's X Is Turning Community Notes Over to AI

Artificial intelligence chatbots are known for regularly offering dubious information and hallucinated details, making them terrible prospects for the role of fact-checker. And yet, Elon Musk’s X (née Twitter) plans to deploy AI agents to help fill in the gaps on the notoriously slow-reacting Community Notes, with the AI-generated notes appearing as soon as this month. What could possibly go wrong? The new model will allow developers to submit AI agents to be reviewed by the company, according to a public announcement. The agents will be tested behind the scenes, made to write practice notes to see how they will perform. If they offer useful information, they'll get the green light to go live on the platform and write notes. Those notes will still have to get the approval of the human reviewers on Community Notes, and they still need to be found useful by people with a variety of viewpoints (how that metric is determined is a bit opaque). The agents that developers submit can be powered by any AI model, according to Bloomberg, so users won't be locked into Grok despite the direct ties to Musk (perhaps because Musk simply cannot stop Grok from being woke, no matter how hard he tries). The expectation from the company is that the AI-generated notes will significantly increase the number of notes being published on the platform. They kinda need the AI for that, because human-generated notes have reportedly fallen off a cliff. An NBC News story published last month found that the number of notes published on the platform was cut in half from 120,000 in January to just 60,000 in May of 2025. There are fewer people submitting notes, fewer people rating them, and fewer notes being displayed. Basically, the engagement with the fact-checking service has collapsed. There are likely a number of factors for that. For one, the platform is kind of a shit show.
A Bloomberg analysis found that it takes about 14 hours to get a note attached to a post with false or misleading information, basically after its primary viral cycle passes. Disagreements among Community Notes contributors have also led to fact-checks failing to get published, and about one in four get pulled after being published due to dissent among raters. That figure gets even higher when related to actively contentious issues like Russia's invasion of Ukraine, which saw more than 40% of published notes eventually taken down. And then there's the guy who owns the site who, despite actively promoting Community Notes as a big fix for misinformation, has spent more and more time shitting on it. Earlier this year, Musk claimed, without providing evidence, that Community Notes could be gamed by government actors and legacy media, instilling distrust in the entire process. You know what isn't going to make the system harder to game? Unleashing bots on the problem.

[7]

X is letting AI bots fact-check your tweets -- what could possibly go wrong?

Elon Musk's social media platform X is taking initiative when it comes to fighting misinformation: it's giving artificial intelligence the power to write Community Notes; those are the fact-checking blurbs that add context to viral posts. And while humans still get the final say, this shift could change how truth is policed online. Here's what's happening, and why it matters to anyone who scrolls X (formerly Twitter). X is currently piloting a program that lets AI bots draft Community Notes. Third-party developers can apply to build these bots, and if the AI passes a series of "practice note" tests, it may be allowed to submit real-time fact-checking content to public posts. Human review isn't going away. Before a note appears on a post, it still needs to be rated "helpful" by a diverse group of real users and given proper oversight. That's how X's Community Notes system has worked from the start, and it remains in place even with bots in the mix (for now). The goal is speed and scale. Right now, hundreds of human-written notes are published daily. But AI could push that number much higher, especially during major news events when misleading posts spread faster than humans can keep up. Can we trust AI to handle accuracy? Yes, bots can flag misinformation fast, but generative AI is far from perfect. Language models can hallucinate, misinterpret tone, or misquote sources. That's why the human voting layer is so important. Still, if the volume of AI-drafted notes overwhelms reviewers, bad information could slip through. X isn't the only platform using community-based fact-checking. Reddit, Facebook and TikTok have also explored similar systems. But automating the writing of those notes is a first, opening up a bigger question about whether we are ready to hand over our trust in bots. Musk has publicly criticized the system when it clashes with his views. 
Letting AI into the process raises the stakes: it could supercharge the fight against misinformation, or become a new vector for bias and error. The AI Notes feature is still in testing mode, but X says it could roll out later this month. For this to work, transparency is key, with a hybrid approach of human and bot working together. One of the strengths of Community Notes is that they don't feel condescending or corporate. AI could change that. Studies show that Community Notes reduce the spread of misinformation by as much as 60%. But speed has always been a challenge. This hybrid approach, AI for scale, humans for oversight, could strike a new balance. X is trying something no other major platform has attempted: scaling context with AI, without (fully) removing the human element. If it succeeds, it could become a new model for how truth is maintained online. If it fails, it could flood the platform with confusing or biased notes. Either way, this is a glimpse into the future of what information looks like in your feed and encourages asking the question of how much you can trust AI.

[8]

X is about to let AI fact-check your posts

Not sure whether that video you just saw on X (formerly Twitter) is real or AI-generated? Don't worry, AI will now let you know what's what. Elon Musk's X is running a new pilot program that allows AI chatbots to generate Community Notes on the platform. Community Notes are X/Twitter's version of fact checking, in which people (and now, AI bots) can add context to posts and highlight fake news (or at least dubious information) inside a post. Adweek, which first reported on the news, says that X is doing this to help Community Notes scale. "Our focus has always been on increasing the number of notes getting out there. And we think that AI could be a potentially good way to do this," Keith Coleman, X's VP of product and head of Community Notes, told the outlet. The AI-written notes will get the same treatment as human-written notes, the report claims. They will be rated by humans in order to validate their accuracy, and human users will have to flag them as "helpful" before they're displayed to all X users. The program isn't intended as a replacement for human-written Community Notes. Instead, Coleman says that both types of notes will be "very additive." The pilot officially kicked off on July 1, but notes will start appearing to everyday users in a few weeks. X's Community Notes have been hit and miss for the company so far. Elon Musk himself argued they needed a "fix," and there are indications that he removed some of the Community Notes that appeared under his own posts. The use of Community Notes has fallen off sharply in recent months. The addition of AI-written notes might increase the number of notes shown on X, though it remains to be seen what it'll do to their quality.

[9]

Fears AI factcheckers on X could increase promotion of conspiracy theories

Social media site will use AI chatbots to draft factcheck notes but experts worry they could amplify misinformation

A decision by Elon Musk's X social media platform to enlist artificial intelligence chatbots to draft factchecks risks increasing the promotion of "lies and conspiracy theories", a former UK technology minister has warned. Damian Collins accused Musk's firm of "leaving it to bots to edit the news" after X announced on Tuesday that it would allow large language models to write community notes to clarify or correct contentious posts, before they are approved for publication by users. The notes have previously been written by humans. X said using AI to write factchecking notes - which sit beneath some X posts - "advances the state of the art in improving information quality on the internet". Keith Coleman, the vice president of product at X, said humans would review AI-generated notes and the note would appear only if people with a variety of viewpoints found it useful. "We designed this pilot to be AI helping humans, with humans deciding," he said. "We believe this can deliver both high quality and high trust. Additionally we published a paper along with the launch of our pilot, co-authored with professors and researchers from MIT, University of Washington, Harvard and Stanford laying out why this combination of AI and humans is such a promising direction." But Collins said the system was already open to abuse and that AI agents working on community notes could allow "the industrial manipulation of what people see and decide to trust" on the platform, which has about 600 million users. It is the latest pushback against human factcheckers by US tech firms. Last month Google said user-created fact checks, including by professional factchecking organisations, would be deprioritised in its search results. It said such checks were "no longer providing significant additional value for users".
In January, Meta announced it was scrapping human factcheckers in the US and would adopt its own community notes system on Instagram, Facebook and Threads. X's research paper outlining its new factchecking system criticised professional factchecking as often slow and limited in scale and said it "lacks trust by large sections of the public". AI-created community notes "have the potential to be faster to produce, less effort to generate, and of high quality", it said. Human and AI-written notes would be submitted into the same pool and X users would vote for which were most useful and should appear on the platform. AI would draft "a neutral well-evidenced summary", the research paper said. Trust in community notes "stems not from who drafts the notes, but from the people that evaluate them," it said. But Andy Dudfield, the head of AI at the UK factchecking organisation Full Fact, said: "These plans risk increasing the already significant burden on human reviewers to check even more draft Notes, opening the door to a worrying and plausible situation in which Notes could be drafted, reviewed, and published entirely by AI without the careful consideration that human input provides." Samuel Stockwell, a research associate at the Centre for Emerging Technology and Security at the Alan Turing Institute, said: "AI can help factcheckers process the huge volumes of claims flowing daily through social media, but much will depend on the quality of safeguards X puts in place against the risk that these AI 'note writers' could hallucinate and amplify misinformation in their outputs. AI chatbots often struggle with nuance and context, but are good at confidently providing answers that sound persuasive even when untrue. That could be a dangerous combination if not effectively addressed by the platform." Researchers have found that people perceived human-authored community notes as significantly more trustworthy than simple misinformation flags. 
An analysis of several hundred misleading posts on X in the run up to last year's presidential election found that in three-quarters of cases, accurate community notes were not being displayed, indicating they were not being upvoted by users. These misleading posts, including claims that Democrats were importing illegal voters and the 2020 presidential election was stolen, amassed more than 2bn views, according to the Centre for Countering Digital Hate.

[10]

Bots writing community notes? X thinks it's a good idea

Elon Musk's X is gearing up to let AI chatbots take a stab at fact-checking, piloting a new feature that allows bots to generate Community Notes. Community Notes, a Twitter-era invention that Musk expanded after buying the platform, lets select users add context to posts -- whether it's clarifying a misleading political claim or pointing out that a viral AI-generated video is, in fact, not real life. Notes only appear after they achieve consensus among users who've historically disagreed on past ratings, a system designed to ensure balance and accuracy. Now, X wants AI in on the action. Keith Coleman, the product executive overseeing Community Notes, told Bloomberg that developers will soon be able to submit their AI agents for approval. The bots will write practice notes behind the scenes, and if they're deemed helpful (and presumably not hallucinating wildly), they'll be promoted to public fact-checker. "They can help deliver a lot more notes faster with less work, but ultimately the decision on what's helpful enough to show still comes down to humans," Coleman said. "So we think that combination is incredibly powerful." Translation: the bots will churn it out, but humans will still be left holding the final 'post' button. The AI agents can use X's own Grok chatbot or any other large language model connected via API. AI's track record for getting facts right is also -- spotty. Models often "hallucinate," confidently making up information that sounds accurate but isn't. Even with human checks, there are concerns that an influx of AI-generated notes will overwhelm volunteer reviewers, making them less effective at catching mistakes. There's also the risk that AI bots will prioritize sounding polite and helpful over actually correcting misinformation. Recent issues with ChatGPT being overly sycophantic illustrate that, yes, bots want to please you -- facts optional. For now, users won't see AI-generated Community Notes just yet. 
X plans to test the bots quietly behind the scenes for a few weeks before deciding whether to unleash them onto the timeline.

[11]

X's latest terrible idea allows AI chatbots to propose community notes -- you'll likely start seeing them in your feed later this month

I've been trying for a long time to spend much less time on X. But in an attempt not to dwell on the, well, absolute state of everything, I'll try to say something nice: I didn't entirely dislike the implementation of community notes. As rapidly developing stories pop off, it doesn't hurt to have a little bit of extra context right next to the original post. However, now I may have yet one more reason to leave for good, as X is piloting a scheme that allows AI chatbots to generate community notes. From July 1, X users can sign up for access to the AI Note Writer API in order to put forward their own little chatbot that can propose community notes. Like those written by humans, these AI-generated community notes will start appearing later this month on your feeds "if found helpful by people from different perspectives." This pilot scheme is being implemented in the hopes of both "[accelerating] the speed and scale of Community Notes," and also leveraging feedback from the community in order to "develop AI agents that deliver increasingly accurate, less biased, and broadly helpful information." It's worth noting this push comes after a reported 50% drop in the number of community notes created since January. Speaking to Adweek, an X spokesperson attributed at least part of this sharp decline to the passing "seasonality of controversial topics" such as the U.S. election. And as anyone who has spent time on X recently will have observed, there's nothing for engagement quite like 'controversial topics'. In light of this AI note writer announcement though, my most pressing question is... did X forget the widely documented phenomenon of AI hallucinations? You know, that thing where LLMs tend to just make stuff up in a bid to only predict the word most likely to come next, rather than generating anything factual? Okay, to be fair to X, it's not going to be a complete AI free for all.
For a start, AI notes "will be clearly marked for users," and furthermore these will be "held to the same standard as human notes," with "an open scoring algorithm to identify notes found helpful by people from different perspectives." Still though, as with human-written community notes, I'd argue there's still the possibility of disinformation gaining greater visibility through vote-bombing. It's all very 'Move fast and break things' -- and, speaking of, Meta notably let go of all of its third-party fact-checkers in favour of its own take on a community notes system earlier this year. Moderating massive platforms like Facebook and Instagram is a huge job, and one that is likely very expensive to even do somewhat well -- offloading that work to a community of volunteers makes sense simply in terms of finances. Though, that said, there's likely other reasons why big tech like Meta is rallying around arguments of 'free speech' and shirking the responsibility of actually moderating their platforms. Memorably, Epic CEO Tim Sweeney recently criticised big tech leaders for "pretending to be Republicans, in hopes of currying favor with the new administration" in an alleged bid to skirt antitrust laws. Former Microsoft head Bill Gates has attempted to offer another perspective, saying that though "you can be cynical," he met with Trump in December "because he's making decisions about global health and how we help poor countries, which is a big focus of mine now." Besides all of that, Trump's rolling back of Biden-era guardrails on AI and the 'Big Beautiful Bill' attempt to hold a moratorium on any state-level regulation of AI for the next decade suggests the current administration only intends to get cosier with big tech as the years drag on.

[12]

AI to Write Community Notes for Fact Checking on X | AIM

An AI will check for misleading content on the social media platform. X, the social media platform run by Elon Musk, has launched a pilot for an "AI Notes Writer," a new API allowing developers to build AI systems that propose fact-checking notes on posts, with final judgment still in human hands. The initiative builds on X's existing Community Notes feature, where crowdsourced fact-checks are surfaced only if users from differing political perspectives rate them as helpful. AI Note Writers follow the same rules: they must earn credibility through helpful contributions and cannot rate others' notes. Their role is limited to proposing context, especially on posts flagged by users requesting notes. "This has the potential to accelerate the speed and scale of Community Notes," the company said on X, emphasising that humans will remain in control of what ultimately gets shown. To participate, developers need to sign up for both the X API and the AI Note Writer API. Each AI Note Writer must pass an admission threshold based on feedback from an open-source evaluator trained on historical contributor data. Only notes from admitted AI writers can be surfaced to the broader community. The company mentions that one can use GitHub actions and Grok or other third-party LLMs to build the AI Note Writer. At launch, AI-written notes will be marked distinctly and held to the same transparency, quality, and fairness standards as human-written ones. The company also published a supporting research paper co-authored with academics from MIT and the University of Washington, outlining the approach's potential and risks. While the pilot begins with a small group, X says it plans to expand access gradually. The company hopes this experiment creates a feedback loop where AI models improve by learning from human judgment, without replacing it. If successful, this could mark a turning point in how generative AI collaborates with people to reduce online misinformation at scale.
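The admission step described above can be pictured with a minimal sketch. Everything in it is an assumption made for illustration: the class name `AINoteWriter`, the 0-to-1 score scale, the `ADMISSION_THRESHOLD` value, and the minimum number of test notes are hypothetical and do not come from X's API or its evaluator.

```python
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical cut-off; the article does not state the real admission threshold.
ADMISSION_THRESHOLD = 0.7


@dataclass
class AINoteWriter:
    """Toy model of an AI Note Writer working through test mode."""
    name: str
    # Evaluator scores for test-mode notes, on an assumed 0-to-1 scale.
    test_scores: list[float] = field(default_factory=list)

    def record_test_note(self, evaluator_score: float) -> None:
        """Store the automated evaluator's score for one test-mode note."""
        if not 0.0 <= evaluator_score <= 1.0:
            raise ValueError("score must be in [0, 1]")
        self.test_scores.append(evaluator_score)

    def is_admitted(self, min_notes: int = 3) -> bool:
        """Admit the writer only after enough test notes with a high enough mean score."""
        if len(self.test_scores) < min_notes:
            return False
        return mean(self.test_scores) >= ADMISSION_THRESHOLD

    def can_rate_notes(self) -> bool:
        """AI writers only propose notes; rating stays with human contributors."""
        return False


writer = AINoteWriter("demo-bot")
for score in (0.9, 0.8, 0.6):
    writer.record_test_note(score)
print(writer.is_admitted(), writer.can_rate_notes())
```

The point of the sketch is the separation of roles: admission gates whether a bot's notes can be surfaced to raters at all, while the decision to show a note remains with human contributors.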

[13]

Grok now writes Community Notes on X

According to ADWEEK, X is piloting a program allowing AI chatbots to generate Community Notes, a feature expanded under Elon Musk's ownership to add context to posts. This initiative involves treating AI-generated notes identically to human-submitted notes, requiring them to pass the same vetting process for accuracy. Community Notes, originating from the Twitter era, enables users in a specific fact-checking program to contribute contextual comments to posts. These contributions undergo a consensus process among groups with historically divergent past ratings before becoming publicly visible. For example, a note might clarify that an AI-generated video lacks explicit disclosure of its synthetic origin or provide additional context to a misleading political post. The success of Community Notes on X has influenced other platforms, including Meta, TikTok, and YouTube, to explore similar community-sourced content moderation strategies. Meta notably discontinued its third-party fact-checking programs in favor of this model. The AI notes can be generated using X's proprietary Grok AI or through other AI tools integrated with X via an API. Despite the potential for efficiency, concerns exist regarding the reliability of AI in fact-checking due to the propensity of artificial intelligence models to "hallucinate," or generate information not grounded in reality. A paper published by researchers working on X Community Notes recommends a collaborative approach between humans and large language models (LLMs). This research suggests that human feedback can refine AI note generation through reinforcement learning, with human note raters serving as a final verification step before notes are published. The paper states, "The goal is not to create an AI assistant that tells users what to think, but to build an ecosystem that empowers humans to think more critically and understand the world better." 
It further emphasizes, "LLMs and humans can work together in a virtuous loop." Even with human oversight, the reliance on AI carries risks, particularly since users will have the option to embed third-party LLMs. An instance involving OpenAI's ChatGPT demonstrated issues with a model exhibiting overly sycophantic behavior. If an LLM prioritizes "helpfulness" over factual accuracy during a fact-check, the resulting AI-generated comments could be incorrect. Additionally, there is concern that the volume of AI-generated comments could overwhelm human raters, potentially diminishing their motivation to adequately perform their voluntary work. X plans to test these AI contributions for several weeks before a broader rollout, contingent on their successful performance during the pilot phase. Users should not anticipate immediate widespread availability of AI-generated Community Notes.

[14]

X Might Soon Use AI Agents to Fact-Check Posts and Write Community Notes

Humans will reportedly continue to write and vote on posts alongside AI

X (formerly known as Twitter) is reportedly planning to use artificial intelligence (AI) to write Community Notes on the platform. As per the report, the company has asked developers to submit custom AI agents capable of verifying the authenticity of posts and providing neutral explanations about them. This will mark a big shift in the social media platform's fact-checking programme, which so far relied on human users to write and vote for Community Notes. X is reportedly opting for AI agents to increase the scale and speed of fact-checking. Keith Coleman, Vice President of Product at X, and the head of the Community Notes programme, told Bloomberg in an interview that the company was considering the usage of AI. For this, developers have reportedly been given the option to submit their own AI agents for review. The submitted AI agents are said to go through a run of writing practice notes, which will then be reviewed by the company. If the company finds the AI agents to be helpful, they will be deployed to write notes on public posts of X, the report added. Notably, Bloomberg highlights that only human users will be conducting the reviews of published notes, and a note will only appear if multiple people with different viewpoints find it to be helpful. Coleman reportedly stated that AI-written Community Notes could appear later this month. Coleman reportedly called the decision to onboard AI agents to write notes and let humans review the posts an "incredibly powerful" combination. He also hinted that once AI is involved, the number of Community Notes, which stands at hundreds per day, could significantly increase, the report said. Notably, in 2021, the platform started a fact-checking platform called Birdwatch, where some users were selected to be contributors to fact-check public posts and verify their authenticity. Users would also vote on published notes as helpful or unhelpful. 
The crowdsourced programme was later rebranded to Community Notes in November 2022 after Elon Musk took over X. Ever since its inception, the service has only been managed by human users who volunteer for it.

[15]

X tests AI bots for fact-checking Community Notes - The Economic Times

Community Notes, a user-driven system for adding context to posts, was originally introduced when the platform was known as Twitter. It has been expanded under Elon Musk's ownership. Per X, rating feedback can help develop AI agents that can deliver more accurate, less biased, and broadly helpful information.

Social media platform X has rolled out a pilot programme that lets artificial intelligence (AI) bots contribute to its Community Notes feature, a user-driven system for adding context to posts. From X's Community Notes handle, the company said, "Starting today, the world can create AI Note Writers that can earn the ability to propose Community Notes. Their notes will show on X if found helpful by people from different perspectives -- just like all notes." It added, "Not only does this have the potential to accelerate the speed and scale of Community Notes, rating feedback from the community can help develop AI agents that deliver increasingly accurate, less biased, and broadly helpful information -- a powerful feedback loop." Community Notes, originally introduced when the platform was known as Twitter, has been expanded under Elon Musk's ownership. It allows users to fact-check posts by adding extra context. These notes are only published if other contributors from varied viewpoints rate them as helpful. For instance, a Community Note might clarify that a widely shared video was created using AI or flag misleading claims made by public figures. X's senior executive, Keith Coleman, told Bloomberg that although AI bots can write notes faster, people will still play a key role in the process. "They can help deliver a lot more notes faster with less work, but ultimately the decision on what's helpful enough to show still comes down to humans," he said.

How the AI feature works:

* Sign up today and begin developing your AI Note Writer.
* Start writing notes in test mode.
* We'll admit a first cohort of AI Note Writers later this month, which is when AI-written notes can start appearing.

[16]

Elon Musk's X To Deploy AI Note Writers That Can Flag Misinformation, But Final Judgment Still Rests With Humans - Meta Platforms (NASDAQ:META)

Elon Musk's social media platform, X, is introducing AI-powered bots capable of writing Community Notes to help identify and clarify misinformation -- but only if humans deem their contributions helpful.

What Happened: According to a post from X's official Community Notes account, these "AI Note Writers" will be able to submit notes on posts where users have requested clarification. However, the notes will only be shown publicly if users from different political and ideological perspectives rate them as helpful. Initially, the bots will operate in "test mode," with X planning to admit the first group of AI contributors later this month.

Why It's Important: Introduced in 2021, Community Notes is a feature that enables users to add context notes to potentially misleading posts, helping fact-check information. Other users can vote on these notes, and X's algorithms determine which note should appear alongside the post. Following X's example, Mark Zuckerberg-led Meta Platforms, Inc. (NASDAQ:META) also decided to introduce a similar feature. "It's time to get back to our roots around free expression on Facebook and Instagram," Zuckerberg said at the time. In addition to this development, Musk last month also announced that X will start charging advertisers based on the vertical screen size their ads occupy, aiming to discourage oversized ads that hurt user experience and help boost ad revenue. This change is part of Musk's broader plan to transform X into an "everything app," including new financial services. CEO Linda Yaccarino is working to restore ad income to pre-acquisition levels, with revenue projected to grow to $2.3 billion this year despite ongoing challenges with advertisers.
Disclaimer: This content was partially produced with the help of AI tools and was reviewed and published by Benzinga editors.

[17]

X is All Set to Allow AI Chatbots to Write Community Notes

This pilot program aims to increase the number of community notes and improve the quality of context added to posts. Community Notes has remained a staple feature on X, which helps verify a post with the help of human contributors. But now, X is planning to give AI chatbots a shot at writing Community Notes, as part of a pilot program that started on Tuesday, July 1st. The reason behind this change seems to be that AI chatbots can scan through a large volume of posts shared on X, even those that don't get enough traction to catch the eye of human contributors and add relevant context where needed. "Our focus has always been on increasing the number of notes getting out there. And we think that AI could be a potentially good way to do this. Humans don't want to check every single post on X -- they tend to check the high-visibility stuff. But machines could potentially write notes on far more content," said Keith Coleman, X's VP of product and head of Community Notes, in a statement to ADWEEK. These fact-checking AI chatbots can be created using X's Grok AI or OpenAI's ChatGPT via an API. That said, given AI chatbots' tendency to hallucinate, these AI-generated community notes will be vetted by humans before broadly appearing on X. AI Community Notes will also be subject to X's scoring mechanism to reduce inaccuracies. Even Community Notes leaders agree on the use of AI, as mentioned in X's papers published on Monday. The paper discusses how AI can help scale Community Notes on the platform. With humans in the loop, it can retain the same trust among users as it currently holds. X's implementation of Community Notes is a popular method for fact-checking, to the extent that even Meta opted for this system over its third-party fact-checkers. But the inclusion of AI does raise a lot of eyebrows. Not just because it is prone to making up new realities, but that it can be manipulated as well. 
X's own Grok went on a frenzy, talking about white genocide in every interaction out of nowhere. And there is no saying that the same can't happen with Community Notes. In a world increasingly embracing AI slop, I am personally not a fan of this move. But who knows, maybe AI-written Community Notes might curb the spread of misinformation on X. The pilot program has already started, but it will be a while before you start seeing AI-written community notes on X. It also raises concerns about yet another area where humans could be replaced by their AI counterparts. But Coleman has assured that this is not the case. Both humans and AI systems will be crucial for Community Notes.

[18]

Community Notes to fact-check: X becoming a playground for AI?

Misinformation meets automation: X hands over context-building to AI in a bot-saturated digital battleground.

X, formerly known as Twitter, has quietly begun one of its most significant experiments yet, using AI-generated Community Notes to fact-check viral posts. Community Notes was originally launched as Birdwatch in 2021. It allowed users across ideological lines to collaboratively add helpful context to misleading or incomplete tweets. The system became a flagship feature under Musk's ownership, symbolizing his commitment to "free speech through context, not censorship." But now, that context may no longer come from humans. This move marks a turning point from a decentralized system powered by transparency and collaboration to one increasingly dependent on machines that few users truly understand or can scrutinize. What was once a proudly human, crowdsourced effort to combat misinformation is now being handed over, at least in part, to machines. Community Notes was introduced as a way to crowdsource truth. Users across ideologies could propose notes, and only when those notes were rated helpful by a politically diverse set of contributors would they be published. Now, X has rolled out a system where AI bots can automatically write suggested notes when users request context. These AI-written notes are reviewed and rated by human contributors before going live. However, the drafting process is no longer human-first. X claims this will allow it to scale fact-checking, generate context faster, and improve coverage on viral or misleading content. Bots never sleep and, in theory, they can keep pace with the platform's nonstop flow of misinformation. Here's the problem: Community Notes was never meant to be fast. It was slow because it prioritized deliberation, cross-ideological input, and trust. 
People trusted it precisely because it wasn't algorithmic; it was written by real users with transparent motivations and visible consensus. By letting bots write the first draft, X is shifting from collaborative fact-checking to automated annotation, which raises serious concerns about nuance, accuracy, and bias. AI systems can repeat existing patterns, misinterpret context, or introduce subtle distortions. And with no public insight into how these AI bots are trained or evaluated, users are being asked to trust machines that they cannot see. Many users already rely on Grok to fact-check posts, summarize trending discussions, or add context that Community Notes hasn't caught up with. But Grok, like any large language model, is not immune to hallucinations, outdated info, or biased outputs, especially when it pulls from a firehose of unreliable user content. Unlike Community Notes, Grok's responses are not subject to peer review or transparency around source data. This means that X will have two different AI systems, Community Notes bots and Grok. One writes fact-checks while the other acts like a real-time arbiter of truth, and neither is fully accountable. It's important to understand what environment these AI fact-checkers are operating in. Since Musk's takeover in late 2022, bot activity and hate speech on the platform have surged dramatically. A 2023 analysis by CHEQ found that invalid traffic (including bots and fake users) jumped from 6% to 14% post-acquisition. The same report noted a 177% increase in bot-driven interactions on paid links. Meanwhile, hate speech reports rose by over 300% in some categories, according to a 2023 Center for Countering Digital Hate study. That matters, because Community Notes was supposed to be the platform's solution to misinformation and manipulation, a user-powered defense. 
But now, it's being overwhelmed from both sides: malicious bots flooding timelines, and now "helpful" bots trying to clean it up. The most important part of any fact-check is judgment, something AI still lacks. Context, tone, satire, cultural nuance, and evolving political narratives are things machines struggle to interpret. An AI-written note might get the surface-level fact correct while missing the larger misdirection entirely. Even if human review remains in place, the very act of outsourcing the writing of notes to bots signals a shift away from collective human responsibility. And if these AI systems fail, the blame will be as diffuse and faceless as the code behind them. X has become a platform increasingly defined by automation. After cutting thousands of staff, including much of its trust and safety team, the company has leaned heavily on algorithms, for moderation, recommendation, and now, for truth itself. Community Notes was once a rare example of a social feature built on trust, slowness, and human credibility. By turning it into an AI tool, X is making the system faster, but possibly hollowing it out in the process. There's a reason fact-checking requires people. Facts are easy; context is hard. And when machines are allowed to define truth, especially in a digital ecosystem already flooded with bots and hate speech, the very idea of trustworthy context begins to erode. Faster is not always better. If truth is delegated to AI, and oversight is scaled down to match, what happens to public trust? X may soon find that in the rush to automate truth, it has automated away credibility.

X, formerly Twitter, is launching AI-written Community Notes, aiming to enhance fact-checking but raising concerns about accuracy and trust in the system.

X Unveils AI-Generated Community Notes

X, the social media platform formerly known as Twitter, is set to revolutionize its fact-checking system by introducing AI-written Community Notes. This initiative, announced in a research paper, aims to speed up and increase the number of fact-checks across the platform [1].

How It Works

The new system allows developers to create AI bots that can write Community Notes, which will be clearly marked for users [3]. Initially, AI-generated notes will only appear on posts where users have requested fact-checking. These notes must earn approval from human raters before being published, similar to the existing process for human-written notes [2].

Potential Benefits

X envisions a collaborative ecosystem where AI agents and human reviewers work together to enhance fact-checking efforts. Keith Coleman from X stated, "AI can help deliver a lot more notes faster with less work, but ultimately the decision on what's helpful enough to show still comes down to humans" [3].

Concerns and Risks

Despite the potential benefits, experts have raised several concerns:

- Accuracy: X acknowledges uncertainty about whether AI-written notes will be as accurate as those written by humans [1].

- Misinformation Amplification: There's a risk that AI could generate "persuasive but inaccurate notes," potentially undermining the system's trustworthiness [1].

- Overloading Human Reviewers: The increased volume of AI-generated notes could overwhelm human reviewers, potentially compromising the quality of fact-checking [2].

- AI Hallucinations: Given the tendency of AI to sometimes generate false or misleading information, there are concerns about the reliability of AI-written notes [4].

Safeguards and Limitations

To address these concerns, X has implemented several safeguards:

- AI Note Writers must earn the ability to write notes and can gain or lose capabilities based on the helpfulness of their contributions [3].

- An open-source, automated note evaluator will assess AI-generated notes for relevance and potential abuse [5].

- AI bots cannot rate notes, leaving that crucial task to human contributors [4].
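The rule that a note is shown only when raters from different perspectives find it helpful can be illustrated with a deliberately simplified sketch. This is not X's scoring algorithm: the explicit perspective labels, the function name `note_is_shown`, and both thresholds are assumptions chosen for illustration, whereas the sources above describe perspectives as being derived from contributors' past rating behaviour.

```python
from collections import defaultdict


def note_is_shown(ratings, min_per_group=2, min_helpful_share=0.6):
    """Decide whether a note clears a toy cross-perspective bar.

    ratings: iterable of (perspective_label, rated_helpful) pairs from
    human raters. Labels and thresholds are hypothetical.
    """
    votes_by_group = defaultdict(list)
    for perspective, rated_helpful in ratings:
        votes_by_group[perspective].append(bool(rated_helpful))

    # Agreement inside a single perspective is never enough.
    if len(votes_by_group) < 2:
        return False

    # Every perspective needs enough raters and a high enough helpful share.
    for votes in votes_by_group.values():
        if len(votes) < min_per_group:
            return False
        if sum(votes) / len(votes) < min_helpful_share:
            return False
    return True


ratings = [("A", True), ("A", True), ("B", True), ("B", True), ("B", False)]
print(note_is_shown(ratings))
```

Under this toy rule a note praised by only one group stays hidden no matter how many ratings it collects, which is the property the safeguards above rely on when AI writers increase the volume of proposed notes.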

Expert Opinions

Damian Collins, former UK technology minister, warned that this system could allow "the industrial manipulation of what people see and decide to trust" on X [1]. Samuel Stockwell from the Alan Turing Institute emphasized the importance of effective safeguards against AI hallucinations and misinformation amplification [1].

As X prepares to launch this new feature, the tech community watches closely to see how it will impact the platform's ongoing battle against misinformation and its efforts to maintain user trust in its fact-checking system.

References

Summarized by

Navi

[3]

[4]

[5]
