YouTube's AI Lip-Sync Technology: Revolutionizing Auto-Dubbing for Global Content

4 Sources

[1]

YouTube Tests AI Lip-Syncing Tech to Better Match Its Auto-Dubbing Feature

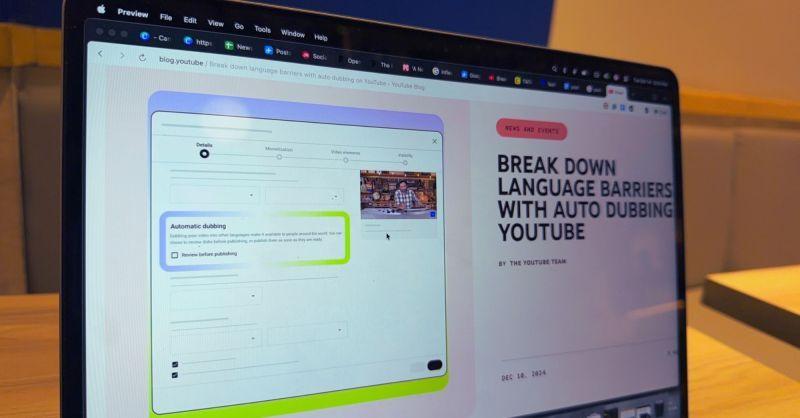

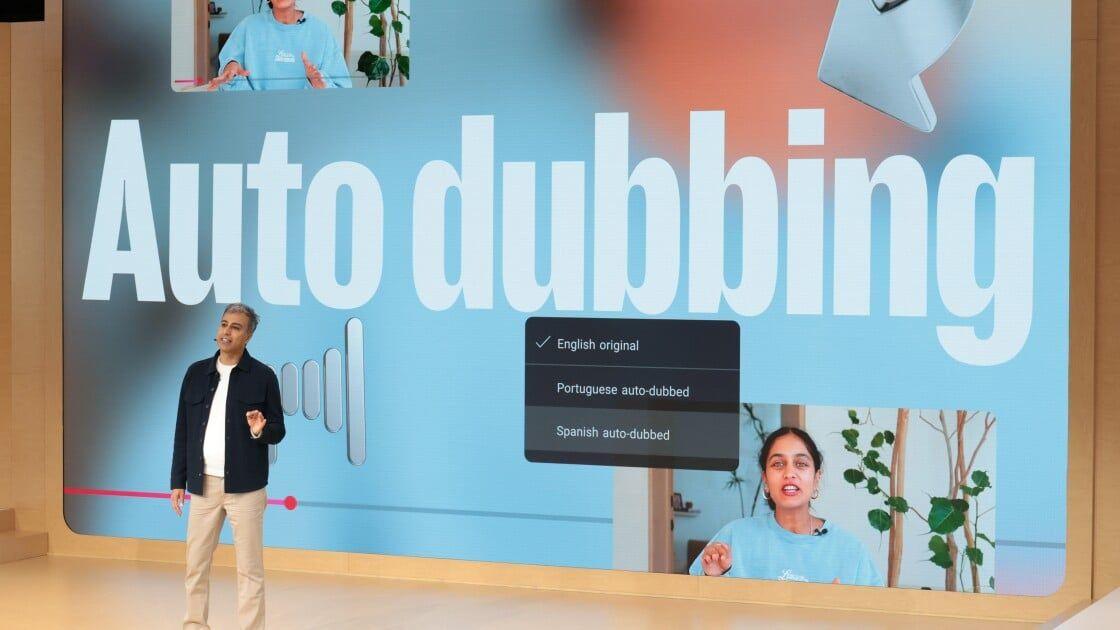

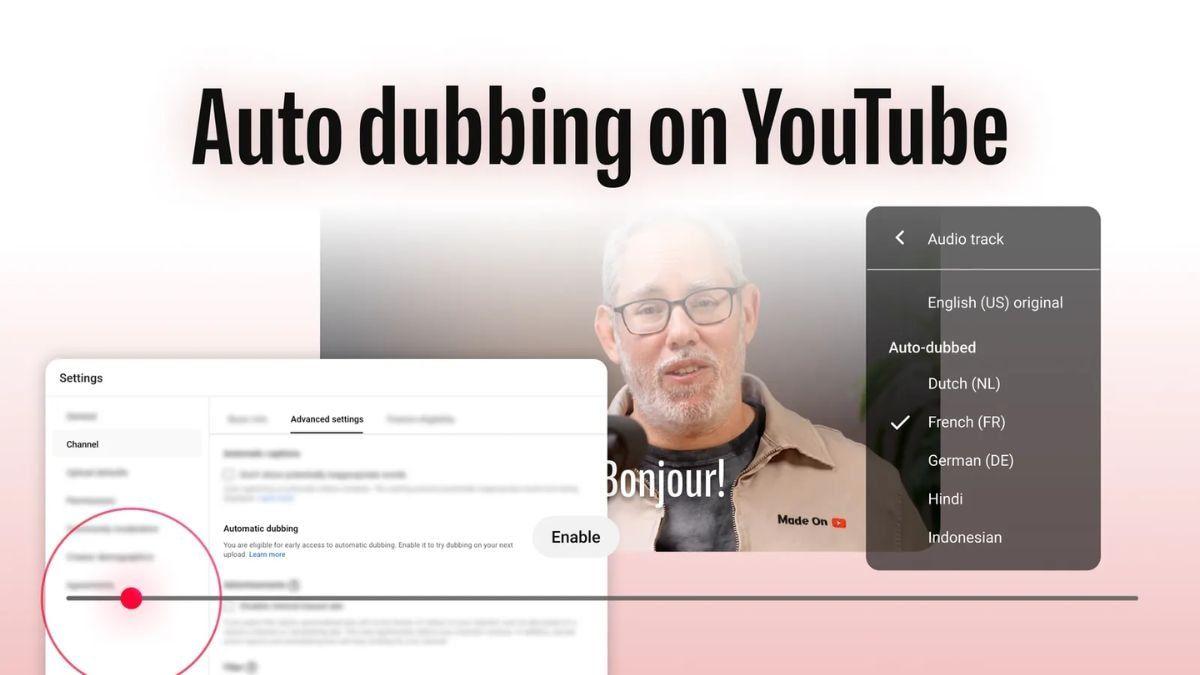

With over a decade of experience reporting on consumer technology, James covers mobile phones, apps, operating systems, wearables, AI, and more.

Your next favorite YouTuber may not even speak the same language as you. Alongside YouTube's recent auto-dubbing feature, which uses AI to translate audio, the company is also experimenting with lip-sync tech to better match face movements to the language being spoken. YouTube's Product Lead for Autodubbing, Buddhika Kottahachchi, tells Digital Trends that YouTube needed to figure out how to "modify the pixels on the screen to match the translated speech." That involved developing tools to better understand facial movements, lip shapes, teeth, posture, and more.

In its testing phase, YouTube says the feature works best for Full HD videos; it's not as good in 4K. That may change by the time this appears on public YouTube videos. YouTube teased the feature at a September event but hasn't announced a formal launch date. An early version of the tool supports translations in English, French, German, Spanish, and Portuguese. Eventually, the service wants to expand lip syncing across all languages covered by YouTube's auto-dubbing feature. That includes translation from English to Bengali, Dutch, Hebrew, Hindi, Indonesian, Italian, Japanese, Korean, Malayalam, Polish, Punjabi, Romanian, Russian, Tamil, Telugu, Turkish, Ukrainian, and Vietnamese.

The feature is currently in an early testing phase, with access limited to a select few creators on the platform. "We are not ready to make any broad statements about how broadly we will make it available, but we do want to make it available to more creators and understand the compute constraints and the quality," Kottahachchi says. His comments suggest that it may be a paid feature that YouTube creators can activate to better access new markets.

Kottahachchi also says YouTube has a plan for AI disclosures. The disclosure will read "both the audio and video in this video have been synthetically created or altered" to help you identify when lip-syncing is active. The disclosures will appear in the description box, but it's unclear whether anything will appear directly on-screen. AI auto-dubbing launched on YouTube last December, and over 60 million videos had used the feature by the end of August 2025.

[2]

After auto-dubbing, YouTube is toying with automatic lip-syncs

Whether you like it or not, Google isn't backing down on its attempts to increase engagement by automatically translating audio into your native tongue. Now, following continuous and unwavering stabs at simply translating audio with autodubbing, YouTube may take it up a notch with realistic-looking dubs that feature accurate lip sync, in an attempt to make videos more appealing with AI. YouTube's product lead for Auto-dubbing, Buddhika Kottahachchi, recently detailed the technology behind lip-synced autodubs in a conversation with Digital Trends. Kottahachchi told the publication that the system makes intricate pixel-level changes to the speaker's mouth, ensuring the dub looks realistic. It is powered by a custom AI built to understand the nuances of facial structure, including 3D perception of the lips, teeth, and even different facial expressions. Looking at Google's success with its Veo 3 text-to-video model, we believe it should be able to accomplish the feat with convincing levels of realism.

[3]

Exclusive: YouTube reveals how it can make you speak languages you don't know

YouTube wants your lips to play nice with AI-translated audio. It's more technical to pull off than it looks.

It would be an understatement to say that the video content industry is currently at an inflection point. On one hand, AI is supercharging the creative potential of content creators; on the other, the problem of AI slop and misinformation lingers. The sheer potential of AI, however, can't be ignored. The folks over at YouTube are putting it to good use with a focus on accessibility and realism. So, what's next? Making the lips move naturally to the tune of any language, even if the speaker in the video doesn't speak it. Building on the auto-dubbing feature that was launched last year, the team has now come up with a new AI-powered lip sync feature.

Machine-translated audio has improved dramatically over the past few quarters, and it now sounds almost natural. Audio overviews in Google's NotebookLM are a great example. But in videos, the effect falls flat because the speaker's lip movements simply don't match the translated version of the script. It's pretty jarring and off-putting. The AI-powered lip sync feature aims to overcome that audio-visual dissonance. And from the samples that I've seen so far, the results feel uncannily natural. I sat down with Buddhika Kottahachchi, YouTube's Product Lead for Autodubbing, to understand how lip sync was developed, its impact, and the road ahead.

Digging into the technical side

In less than a year since its launch, YouTube's auto-dubbing feature has been used to dub over 60 million videos across 20 languages.
But preserving a natural tone with all the nuances of conversational speech, and then matching it with realistic lip movements, is a whole new challenge. At a surface level, Kottahachchi tells me that the lip sync system "modifies the pixels on the screen to match the translated speech." It's a custom tech stack, the Google executive tells me, adding that the team needed to develop a 3D understanding of the world, lip shapes, teeth, posture, and face. For now, the tech is suited to full-HD (1080p) video but is not yet tuned for 4K. "But generally, it should work with the video resolutions that you upload," he points out.

As far as language support goes, YouTube's AI-powered lip sync feature supports English, Spanish, German, Portuguese, and French. That's a pretty restricted pool, but Kottahachchi tells me that the team is scaling up and lip sync will eventually support the same set of languages as the auto-dubbing feature (which currently stands at over 20 languages). For comparison, Meta's AI-fueled lip sync feature for Facebook and Instagram supports only English, Spanish, Hindi, and Portuguese.

Now, AI-powered lip syncing is not an entirely alien concept. Adobe already offers auto lip sync functionality. Then there are third-party options such as HeyGen, which claim to do it for free. But with YouTube, we are talking about a built-in system at massive scale, on a platform where 20 million videos are uploaded every day.

The AI Babel fish for your face

So, what's next in terms of availability? "We are not ready to make any broad statements about how broadly we will make it available, but we do want to make it available to more creators and understand the compute constraints and the quality," Kottahachchi tells me. And that brings us to the crucial cost question. When I enquired about it, the YouTube executive told me that they can't make predictions about the fee involved, if any.
That also explains why the feature is still part of a pilot project among a small pool of trusted testers to understand the market and compute costs. To recall, this is a complex, vision-based implementation of AI. So, just like AI-generated videos, where you can create a few clips for free but need to pay up for higher resolution or more attempts, YouTube will have to factor in the compute costs and decide on the rollout. But from a creator's perspective, if I were chasing a wider reach, I'd likely pay a subscription fee.

The AI dilemma

Ever since AI visuals started flooding the internet, the debate around authenticity and fair disclosure has heated up. "What is even real?" Social media users have been asking that question with more fervor ever since the uncannily realistic videos generated by OpenAI's Sora app started popping up. These videos have a visible watermark, but there are already free and paid tools out there that will remove the Sora label from AI-generated clips, or the label of any other AI content generator, for that matter.

Google, one of the biggest developers and adopters of AI, knows that all too well. The company was one of the early leaders in the AI fingerprinting race with its SynthID system, and also launched a SynthID Detector tool earlier this year to help users check the origins of multimedia content. YouTube will take an even more cautious approach with videos that rely on Google's AI-powered lip sync feature. "We will have a proper disclosure saying that both the audio and video in this video have been synthetically created or altered," Kottahachchi tells me. "The video content, itself, gets fingerprinted, as well." The text disclosures will appear in the description box underneath the title of YouTube videos, just as they do for videos that have used the auto dub system.

But how are other platforms going to treat AI-dubbed, lip-synced YouTube videos if a creator posts them on Instagram or TikTok? Will their algorithms warm up to them?
TikTok recently announced that it would label videos that were "made or edited" using AI tools, and would also fingerprint them so that users can check their origins using C2PA's Verify tool. Meta has a similar system in place. So, what's the fate of AI-edited videos that are cross-posted to other social video platforms? Will they be algorithmically downranked, or blocked from appearing in certain feeds? The situation is a bit tricky and unpredictable. "It's something we are monitoring carefully, but it's a little early because platforms have made statements, but we haven't seen how they are effectively implemented," he tells me. "Generally, we are translating translations, but not new content."

I also brought up the issue of bad actors using creators' videos without their consent, translating the audio, and pushing them from a different channel or platform. Auto-dubbing and AI lip syncing technically make that unscrupulous act easier to execute, but it likely won't devolve into total chaos. "If your likeness is being used somewhere else on the platform, you can tell us about it and ask us to take it down," Kottahachchi told me.

It will be interesting to see how auto-dubbing, expressive audio, and lip-synced videos make the YouTube experience more diverse. On the surface, it seems like a win. I can't wait to see myself speak in Spanish, though I abandoned my Duolingo streak years ago.

[4]

Auto-dubbing gets weirdly real with YouTube's new lip-sync AI

The feature uses 3D modeling to map lip, teeth, and facial geometry for realistic speech animation.

YouTube is developing an artificial intelligence feature to generate lip-syncing for its auto-dubbed videos. The technology aims to enhance realism by modifying a speaker's mouth movements to align with translated audio tracks, with the goal of increasing viewer engagement.

According to Digital Trends, the system's technical foundation, as detailed by YouTube's product lead for Auto-dubbing, Buddhika Kottahachchi, relies on a custom-built AI. Kottahachchi explained that the technology makes intricate, pixel-level changes to a speaker's on-screen mouth to synchronize it with the dubbed audio. The AI model incorporates a three-dimensional perception of facial structures, allowing it to analyze the geometry of the lips and teeth. It is also designed to interpret and replicate the facial expressions that accompany speech. This 3D modeling approach allows the system to more accurately simulate the physical movements required for speaking a different language.

In its initial phase, the lip-sync feature will have specific technical and linguistic limitations. The AI processing is currently restricted to videos with 1080p resolution and cannot be applied to 4K content. Language support at launch will be confined to English, French, German, Portuguese, and Spanish. Following this introductory period, YouTube plans to expand support to more than 20 languages, bringing the lip-sync feature into alignment with the full range of languages currently offered by YouTube's auto-dubbing service.

YouTube has not announced a firm release date for the feature. The company is expected to first introduce the technology through a pilot program with a small group of creators, a strategy that mirrors the rollout of the auto-dubbing function. That auto-dubbing service was expanded to a wider audience just last month, indicating the lip-sync addition may undergo a prolonged testing period. Creators will be provided with controls to manage its use, including the reported option to disable the feature for their entire channel or for individual videos, giving them the final say over their content's presentation.

The feature may come at an additional cost, though a specific price has not been finalized. It is undetermined whether the creator or the consumer will bear the fee, but reports suggest it will likely be the consumer.

To address potential misuse, YouTube plans to implement safeguards. These include a descriptive disclosure to inform viewers of the AI alteration and an invisible, persistent fingerprint embedded in the video. This digital watermark is described as being similar in function to SynthID, a tool used to identify AI-generated content, providing a mechanism for tracking and authentication.

YouTube is not the only platform developing this technology. Meta has a comparable initiative for Instagram, where it launched a pilot program last year to dub and lip-sync Reels. While details on the program's success are limited, it was recently expanded to support four languages: English, Hindi, Portuguese, and Spanish.

YouTube is testing an AI-powered lip-syncing feature to enhance its auto-dubbing capabilities, aiming to make translated videos appear more natural and engaging. This technology could help break down language barriers in video content consumption.

YouTube's Innovative AI Lip-Sync Technology

YouTube is pushing the boundaries of content accessibility with its latest AI-powered feature: lip-syncing technology for auto-dubbed videos. This groundbreaking development aims to enhance the viewing experience by making translated content appear more natural and engaging [1].

Source: Digital Trends

The Technology Behind the Scenes

Buddhika Kottahachchi, YouTube's Product Lead for Autodubbing, explains that the system employs sophisticated AI to modify on-screen pixels, ensuring that lip movements match the translated speech [2]. The technology utilizes a custom-built AI model that incorporates:

- 3D perception of facial structures

- Analysis of lip shapes and teeth geometry

- Interpretation of various facial expressions

This intricate approach allows for a more accurate simulation of speech movements in different languages [3].

Source: PC Magazine

Current Capabilities and Future Expansion

In its testing phase, the lip-sync feature works best with Full HD (1080p) videos and currently supports five languages: English, French, German, Spanish, and Portuguese [1]. YouTube plans to expand this to cover all 20+ languages supported by its auto-dubbing feature, which has already been used on over 60 million videos since its launch in December 2024 [3].

Implications and Potential Impact

This technology has the potential to revolutionize global content consumption by breaking down language barriers. It could enable creators to reach wider audiences and viewers to enjoy content in their native languages without the jarring disconnect between audio and visual cues [4].

Ethical Considerations and Transparency

YouTube is addressing potential ethical concerns by implementing safeguards:

- Descriptive disclosures informing viewers of AI alterations

- An invisible, persistent digital watermark for tracking and authentication

These measures aim to maintain transparency and prevent misuse of the technology [4].

Launch Timeline and Availability

While no formal launch date has been announced, YouTube is currently testing the feature with a select group of creators. The company is assessing compute constraints and quality before making decisions about broader availability [3].

Cost Considerations

The potential costs associated with this feature remain undetermined. YouTube is evaluating the compute expenses involved in this complex AI implementation, which may influence whether it becomes a paid feature for creators or viewers [3].

As AI continues to reshape the digital landscape, YouTube's lip-sync technology represents a significant step towards more immersive and globally accessible video content. Its success could pave the way for similar innovations across other platforms and industries.

Summarized by Navi
