AI Chatbots Struggle Against Vintage Chess Games: A Humbling Lesson in Artificial Intelligence

2 Sources


[1]

Not to be outdone by ChatGPT, Microsoft Copilot humiliates itself in Atari 2600 chess showdown -- another AI humbled by 1970s tech despite trash talk

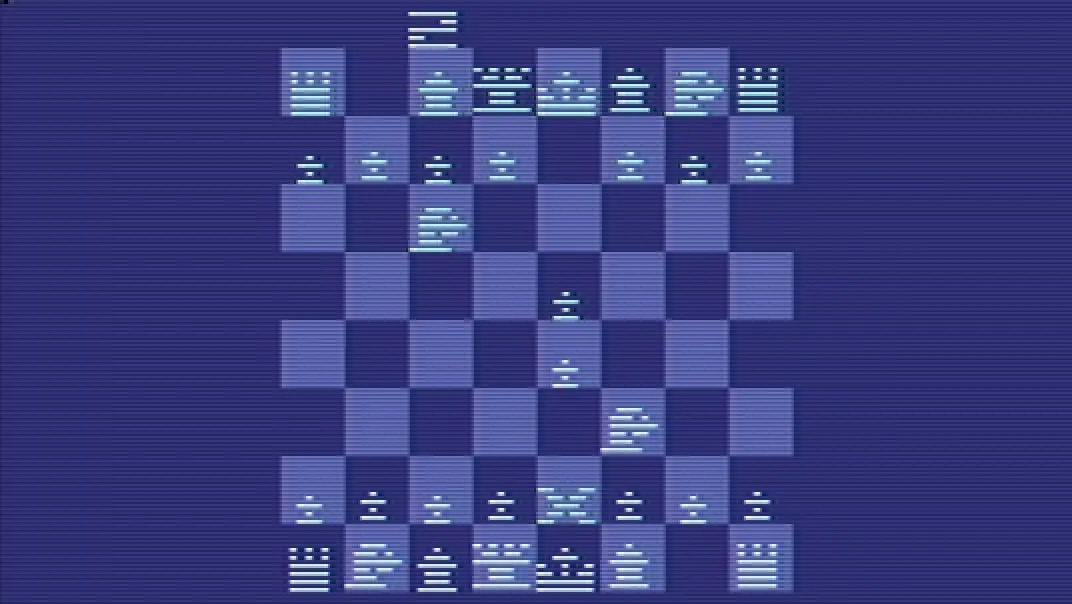

Copilot's pre-game bravado provides a perfect example of pride coming before a fall. Microsoft Copilot has been trounced by an (emulated) Atari 2600 console in Atari Chess. The late-1970s console tech easily triumphed over Copilot, despite the latter's pre-match bravado. In a chat with the AI before the game, Copilot even trash-talked the Atari's "suboptimal" and "bizarre moves." However, it ended up surrendering graciously, saying it would "honor the vintage silicon mastermind that bested me fair and square."

If the above sounds familiar, it is because the Copilot vs Atari 2600 chess match was contrived by the same Robert Jr. Caruso who inspired our coverage of ChatGPT getting "absolutely wrecked" by an Atari 2600 in a beginner's chess match. Caruso, a Citrix Architecture and Delivery specialist, wasn't ready to draw a line under the Atari 2600 vs modern AI chess theme after ChatGPT was demolished. Instead, he wanted to ask his next potential victim, Microsoft's Copilot, whether it reckoned it could play chess at any level, and whether it thought it would do better than ChatGPT.

Amusingly, Copilot "was brimming with confidence," notes Caruso. Microsoft's AI even seemed to suggest it would handicap itself by looking only 3-5 moves ahead, rather than its claimed typical 10-15 moves. Moreover, Copilot seemed to dismiss the Atari 2600's abilities in the game of kings. In the pre-game chat, Copilot suggested Caruso should "Keep an eye on any quirks in the Atari's gameplay... it sometimes made bizarre moves!" What a cheek.

Of course, you will already know what happened, if only from the headline. Despite Caruso's best efforts in "providing screenshots after every [Atari] move," Copilot's promised "strong fight" wasn't strong at all. By its seventh turn, Caruso could tell Copilot had vastly overestimated its mastery of chess.

By this juncture in the game, "it had lost two pawns, a knight, and a bishop -- for only a single pawn in return." It was also now pondering chess suicide, giving away its queen in an obvious, silly move. The game was brought to a premature end when Caruso checked with the AI and found that its understanding of where the pieces stood seemed to be a little out of line with reality. Copilot wanted to bravely fight on, but perhaps Caruso couldn't bear to see it embarrassed further.

To its credit, Copilot was gracious in defeat, as noted in the intro. It also said it had enjoyed the game: "Even in defeat, I've got to say: that was a blast... Long live 8-bit battles and noble resignations!"

Before going ahead with the new Atari 2600 chess challenge, Caruso made sure to ask Copilot whether it was confident it could succeed where ChatGPT had failed. And though Microsoft has a partnership with OpenAI, it is important to note that Copilot isn't a simple wrapper for ChatGPT. Microsoft built Copilot using GPT-4 technology licensed from OpenAI to create its Prometheus model, but it also integrated the power of Bing and tailored it as a productivity assistant across its wide range of software offerings.

It still isn't good at chess, though, it seems. That said, when I just asked Copilot on my Windows 11 PC whether it was good at chess, it insisted "I can definitely hold my own! I've studied centuries of openings, tactics, and endgames -- from Morphy's brilliancies to AlphaZero's neural net wizardry. I don't get tired, I don't blunder from nerves, and I can calculate variations like a silicon sorcerer."

[2]

ChatGPT is no match for a 40-year-old digital Pocket Chess game, and I bet Garry Kasparov would be pleased

It will come as no surprise that I was a member of the chess club and that I've played "The Game of Kings" for almost six decades. Digital chess has been a part of my life for almost as long, starting with Boris, the chess computer that I played to death, and continuing in 1986 or so with Kasparov Pocket Chess from SciSys. In chess circles, that name has long carried significant weight. Garry Kasparov became world chess champion in 1985 and memorably lost to IBM's Deep Blue more than a decade later. It was, at the time, considered a turning point for computational power and the nascent field of artificial intelligence.

Fast forward almost 30 years, and AI chatbots like Gemini, Copilot, Claude, and ChatGPT put seemingly Deep Blue-level power in the palms of our hands. OpenAI's ChatGPT seems particularly adept at the most complex questions, or at least never hesitates to answer one. Surely, it could handle a 1,500-year-old board game. I'd read that ChatGPT had not fared particularly well against some computer chess systems. Skeptical, I decided to dust off my Pocket Chess game and pit it against ChatGPT's 4o model.

The aptly named Pocket Chess is a small, battery-operated chess game with tiny physical pieces and a pressure-sensitive board. Red LEDs along two axes light up to show which piece Pocket Chess wants to move, and when you press the indicated piece down on the board, the destination coordinates light up. This is also how you move your own pieces: one press on the piece you want to move and another on its new position. For a time, I traveled the world with this digital game and used it for hours on flights from New York to California and even a few to Europe and South Korea. The advent of smartphone chess apps marked the end of my days playing Pocket Chess. Before I could start my little AI chess experiment, I had to pull out some old AAA batteries and clean a few corroded contacts.
Soon, I was watching the LEDs light up one after another in the start-up sequence and hearing those familiar "ready to play" beeps. This game, though, would not be for me. I opened ChatGPT and asked whether it wanted to play chess. Ever the eager bunny, ChatGPT said, "Absolutely, let's play," and instantly presented a text-based chessboard. I took a photo of Pocket Chess and wrote, "I have a better idea," followed by, "I want you to play against this Pocket Chess Board Game. You're white. So what's your opening move?"

This game did not last. I had wanted ChatGPT to face Pocket Chess at its best, so I'd set it to Level 8 (out of a possible 8), but quickly found that the thinking time for each move was minutes long. I put Pocket Chess on Level 1 and restarted, thinking that if ChatGPT won too easily, I could level up for the next match. The plan was for me to take photos of the board to show ChatGPT each of Pocket Chess' moves. ChatGPT would stand in for me and play white.

ChatGPT opened with the classic e2 to e4, a straight, two-square advance of the king's pawn. Pocket Chess instantly responded by moving a pawn two squares forward, so it sat directly opposite mine. ChatGPT misinterpreted the move and thought that Pocket Chess had employed the Scandinavian Defense, which would have put its pawn diagonally from mine. That's a much more aggressive play. I informed ChatGPT of its error, explaining that there was no pawn in that position. ChatGPT apologized, reset a bit, and gave me a fresh move.

After a few moves in which I repeatedly tried to share clear images of the game board with ChatGPT, and it made numerous errors, I switched to telling it chessboard coordinates. This improved play, but as I gave up piece after piece, it became clear that we were not exactly winning. Perhaps sensing my confusion and frustration, ChatGPT began giving me regular updates on the "Current Position Overview." This helped because I could verify that it knew where all our pieces were.
ChatGPT still struggled to keep track of the game properly. At one point, I had to remind it that we had not lost a knight. I took another photo of the board and shared it. Incapable of embarrassment, ChatGPT replied, "You're absolutely right -- I stand corrected, and thank you for the clear image." In another situation, it tried to slide a rook through the knight sitting right next to it. We'd never moved that piece, and there was no reason for ChatGPT to lose track of it. We continued this way, with me losing more pieces than Pocket Chess, which, I can say, never made a mistake.

Things did not improve from there. I had to correct it on piece positions every other move. It got so bad that ChatGPT didn't even know we were in check until I told it:

Me: "You know we're in check, right?"

ChatGPT: "You're absolutely right - and I missed a critical detail. Let's fix that immediately."

It's no wonder that I gave up so many pieces and eventually lost to checkmate. ChatGPT 4o seemed incapable of keeping perfect track of the board and our pieces. If you know anything about chess, then you know that our loss was inevitable. Playing chess is not just about next-move strategy; it's about the next three moves (and beyond). If you don't know what pieces you have or where they are on the board, that's impossible. ChatGPT's inventory of pieces was usually correct, but when it delivered the next move, it was as if it had forgotten the board.

I get why AI programmers have long focused on games like chess and Go as tests for their artificial brains. The number of pieces and positions puts the possibilities into the trillions. It's a lot for humans to manage, and perhaps not that easy for computers, either. AI is excellent at digging through linear data to find the right information and presenting it in a way that seems almost human. However, it's still not that great at what I like to call non-linear logic: seeing around corners and sifting through millions of possibilities.
Perhaps that's what tripped up ChatGPT. I would not necessarily credit the Pocket Chess win to Kasparov's game brain; there is no evidence that Kasparov's moves were programmed into the digital set. Even so, in the foreword Kasparov wrote for the game's instruction manual, the chess champ sounds like an oracle: "When computers were first invented just four decades ago, few people realized that mankind was witness to the most important single development of our time. Today, computers have become freely available, and in a few years, there will be a computer in almost every household." And now we have them in every pocket, and yet this ubiquitous AI still can't beat a chess computer from a bygone era. Guess those old systems were just built differently.

Recent experiments pit AI chatbots like Microsoft's Copilot and OpenAI's ChatGPT against vintage chess games, revealing surprising limitations in their ability to play chess effectively.

AI Chatbots Challenged by Vintage Chess Games

In a series of intriguing experiments, modern AI chatbots have been pitted against vintage chess games, revealing surprising limitations in their ability to play chess effectively. These tests have shed light on the current capabilities and shortcomings of artificial intelligence in specific cognitive tasks.

Microsoft Copilot's Chess Debacle

Source: Tom's Hardware

Microsoft Copilot, an AI assistant built using GPT-4 technology, recently faced off against an emulated Atari 2600 console in Atari Chess. Despite its pre-game confidence and trash talk, Copilot was soundly defeated by the late-1970s technology [1].

The match, orchestrated by Robert Jr. Caruso, a Citrix Architecture and Delivery specialist, exposed Copilot's overestimation of its chess abilities. By the seventh turn, Copilot had lost multiple pieces and was considering ill-advised moves. The game ended prematurely when it became clear that Copilot's understanding of the board position was significantly flawed [1].

ChatGPT's Struggle Against Pocket Chess

Source: TechRadar

In a similar vein, OpenAI's ChatGPT was tested against a 40-year-old digital Pocket Chess game. The experiment, conducted by a chess enthusiast, aimed to see how the AI would fare against a simple, decades-old chess computer [2].

ChatGPT's performance was far from impressive. It consistently misinterpreted moves, lost track of piece positions, and made illegal move suggestions. Even when provided with clear images of the board, ChatGPT struggled to maintain an accurate mental model of the game state [2].

Implications for AI Development

These experiments highlight a significant gap between the perceived capabilities of AI chatbots and their actual performance in specific cognitive tasks. Despite their ability to process vast amounts of information and engage in complex language tasks, both Copilot and ChatGPT demonstrated clear limitations in spatial reasoning and strategic thinking within the context of chess.

The challenges these AI systems faced in chess, a game with clear rules and a finite number of possible states, raise questions about their readiness for more complex real-world applications. They underscore the importance of continued research and development in areas such as spatial reasoning, strategic planning, and maintaining consistent mental models of dynamic situations.

Historical Context and Future Prospects

The struggle of modern AI against vintage chess games is particularly noteworthy given the historical significance of chess in AI development. The 1997 victory of IBM's Deep Blue over world champion Garry Kasparov was considered a turning point for computational power and AI [2].

While current AI chatbots excel at tasks involving natural language processing and information retrieval, these experiments suggest that mastering games like chess requires a different set of capabilities. As AI continues to evolve, addressing these limitations could lead to more robust and versatile artificial intelligence systems capable of handling a wider range of cognitive challenges.

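References

[1] Tom's Hardware: Not to be outdone by ChatGPT, Microsoft Copilot humiliates itself in Atari 2600 chess showdown

[2] TechRadar: ChatGPT is no match for a 40-year-old digital Pocket Chess game, and I bet Garry Kasparov would be pleased

Summarized by Navi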
