AI-Generated Disinformation Escalates Israel-Iran Conflict in Digital Sphere

5 Sources

[1]

Israel-Iran conflict unleashes wave of AI disinformation

Israel launched strikes in Iran on 13 June, leading to several rounds of Iranian missile and drone attacks on Israel. One organisation that analyses open-source imagery described the volume of disinformation online as "astonishing" and accused some "engagement farmers" of seeking to profit from the conflict by sharing misleading content designed to attract attention online. "We are seeing everything from unrelated footage from Pakistan, to recycled videos from the October 2024 strikes -- some of which have amassed over 20 million views -- as well as game clips and AI-generated content being passed off as real events," Geoconfirmed, the online verification group, wrote on X. Certain accounts have become "super-spreaders" of disinformation, being rewarded with significant growth in their follower count. One pro-Iranian account with no obvious ties to authorities in Tehran - Daily Iran Military - has seen its followers on X grow from just over 700,000 on 13 June to 1.4m by 19 June, an 85% increase in under a week. It is one of many obscure accounts that have appeared in people's feeds recently. All have blue ticks, are prolific in messaging and have repeatedly posted disinformation. Because some use seemingly official names, some people have assumed they are authentic accounts, but it is unclear who is actually running the profiles. The torrent of disinformation marked "the first time we've seen generative AI be used at scale during a conflict," Emmanuelle Saliba, Chief Investigative Officer with the analyst group Get Real, told BBC Verify. Accounts reviewed by BBC Verify frequently shared AI-generated images that appear to be seeking to exaggerate the success of Iran's response to Israel's strikes. One image, which has 27m views, depicted dozens of missiles falling on the city of Tel Aviv. Another video purported to show a missile strike on a building in the Israeli city late at night.
Ms Saliba said the clips often depict night-time attacks, making them especially difficult to verify. AI fakes have also focussed on claims of destruction of Israeli F-35 fighter jets, a state-of-the-art US-made plane capable of striking ground and air targets. If the barrage of clips were real, Iran would have destroyed 15% of Israel's fleet of the fighters, Lisa Kaplan, CEO of the Alethea analyst group, told BBC Verify. We have yet to authenticate any footage of F-35s being shot down. One widely shared post claimed to show a jet damaged after being shot down in the Iranian desert. However, signs of AI manipulation were evident: civilians around the jet were the same size as nearby vehicles, and the sand showed no signs of impact.

[2]

How AI Has Turned Propaganda Into a Potent Weapon - Decrypt

Both state actors and online partisans are flooding social media with synthetic personas and manipulated content. The wildest clips from Iran's bombing attacks weren't captured by Pentagon cameras or CNN crews. They were cooked up by Google's AI video maker. After Iran's missile barrage against Israel earlier in the week, fake AI videos started spreading like a nasty rumor, showing Tel Aviv and Ben Gurion Airport supposedly getting hammered. The scenes were highly realistic, and though the strikes were real, the videos going viral all over the internet were not, according to forensic firms. This is the state of warfare in 2025, where AI-generated deepfakes, chatbot-generated lies, and video game footage are being used to manipulate public perception with unprecedented frequency and penetration on social media. As the world braced on Sunday for Iran's response after the U.S. attacked key Iranian nuclear sites, joining Israel in the most significant Western military action against the Islamic Republic since its 1979 revolution, millions of people turned to social media for updates. Instead of getting the truth, many were ensnared in a new type of misinformation campaign. Iranian TikTok campaigns observed in the days immediately after the Israeli strikes on Iran in 2025 have deployed five main categories of AI-generated content. One video making the rounds shows a regular Israeli neighborhood suddenly transformed into a war zone, in a before-and-after format. One clip features an El Al Israel Airlines plane engulfed in flames. While completely computer-generated, it is still realistic enough to trick non-tech-savvy people. The sophistication is staggering, reflecting the enormous jump in quality video generators have shown in recent months, with Kling 2.1 Master, Seedream and Google Veo3 generating realistic scenes with Image to Video capabilities -- which makes the model create a video based on an actual real picture instead of creating scenes from scratch. 
Even open-source software like Wan 2.1, popular among hobbyists, utilizes add-ons that create super-realistic video and enhance quality while circumventing the content restrictions imposed by big tech companies. These political clips are racking up millions of views across TikTok, while Instagram, Facebook, and X continue to promote them nonstop. For example, a video published today showing an exaggeration of Iran's attacks on US bases has been seen over 3 million times on X, whereas a photo portraying Candace Owens and Tucker Carlson -- journalists who are against Trump's involvement in the war -- as Muslims has racked up over 371 thousand views in three days. Telegram channels pump out these fakes and pop up faster than platforms can shut them down. But who's creating all this stuff? Partisans on both sides, of course, and likely agents of each country. The propaganda war extends far beyond the Middle East. The "Pravda" network out of Russia is contaminating AI assistants, including ChatGPT-4 and Meta's chatbot, turning them into Kremlin mouthpieces. NewsGuard, a project dedicated to exposing disinformation in American discourse, estimates the Pravda network's annual publishing rate is at least 3.6 million pro-Russia articles. Last year, the network produced 3.6 million pieces across 49 countries, utilizing 150 web addresses in multiple languages. According to the research, the Russian network employs a comprehensive strategy to infiltrate AI chatbot training data and deliberately publish false claims. The result? Every major chatbot -- though the study doesn't name names -- parroted Pravda's propaganda. The Middle East campaigns demonstrate that they've got this down to a science, customizing content by language. "Arabic and Farsi content often promotes regional solidarity and anti-Israel sentiment; Hebrew-language videos focus on psychological pressure within Israel," Israel's International Institute for Counter-Terrorism (ICT) said in a report.
A different propaganda tactic leverages AI content to mock Israeli officials while making Iran's top cleric look like a hero. These videos, which are obviously AI-generated and not intended to appear realistic, frequently depict scenes of Ayatollah Ali Khamenei alongside Israeli Prime Minister Benjamin Netanyahu and U.S. President Donald Trump, portraying scenarios in which Khamenei symbolically humiliates or dominates one or both of the figures. Other deepfakes combine fake videos with fake voices to enhance different political agendas. One video, which has gathered over 18 million views in a week, features realistic footage of an Iranian military parade with hundreds of missiles and the voice of Khamenei threatening America with retaliation. Iran's state media jumped in. Iranian TV ran old wildfire footage from Chile and passed it off as Israeli cities burning. Other accounts portraying themselves as news channels used fake AI videos of Iran mobilizing its missiles. On the other side, Israel has opted to ban the media to control the geopolitical narrative, prompting even more disinformation and "dehumanization" according to experts. Although Israel focuses on using AI for various purposes -- mainly for military strategies rather than political propaganda -- there have also been instances of actors utilizing generative AI for these purposes, mocking the current and past Ayatollahs and disseminating their political messages via AI-generated videos, as well as creating networks of AI bots to spread content on social media. The synthetic persona game is next level. These aren't just fake profile pictures -- we're talking about complete artificial identities with lifelike speech, motion, and expressions. There are already tools that use advanced technology to transform a single photo and audio clip into hyper-realistic videos featuring synthetic personas. 
The virtual influencer market, KBV Research data shows, could hit $37.8 billion by 2030, meaning your favorite social media personality, that video of your political leader saying something compromising, or that highly realistic news show showing scenes from a devastating attack might not even exist. With generative AI, the battlefield has expanded beyond borders and bunkers into every smartphone, every social feed, and every conversation. If even the president of the most powerful nation in the world can use this technology without consequences, it's easy to see how, in this new war, we're all combatants, and we're all casualties.

[3]

AI-generated videos are fueling falsehoods about Iran-Israel conflict, researchers say

Erielle Delzer is a verification producer for CBS News Confirmed. She covers misinformation, AI and social media. In recent days, videos generated by artificial intelligence have surfaced online purporting to show dramatic scenes from the Iran-Israel conflict, including an AI-generated woman reporting from a burning prison in Tehran and fake footage of high-rise buildings reduced to rubble in Tel Aviv. Other fabricated visuals depict a downed Israeli military aircraft. These clips, some of which have racked up millions of views on platforms including X and TikTok, are the latest in a growing pattern of AI-generated videos that spread during major events. Researchers at Clemson University's Media Forensics Hub told CBS News that some of the content is being amplified on X by a coordinated network of accounts promoting Iranian opposition messaging -- with the goal of undermining confidence in the Iranian government. On Monday, Israel carried out strikes on several sites in Iran, including the notorious Evin Prison. Within minutes of the attack, a video began circulating on X and other social media platforms showing an explosion at the entrance. The video is grainy, black-and-white and appears to be security camera footage. But several visual anomalies indicate the footage may have been created using artificial intelligence, experts say, including an incorrect sign above the door and inconsistencies with the explosion. Hany Farid, a professor at the University of California, Berkeley, and co-founder of AI detection startup GetReal Labs, told CBS News he believes the video may have been generated by an AI image-to-video tool. Farid said recent advancements in technology have helped lead to more realistic-looking videos with easier ways to create and share them quickly. "A year ago it was [that] you could make a single image that was pretty photo realistic," Farid said.
"Now it's full blown video with explosions, with what looks like handheld mobile device imaging." The video had been posted on X within minutes of the June 23 Israeli attack on the facility by an account that "bears marks of being inauthentic," according to Media Forensics Hub researchers. Iranian and Israeli officials have not commented on the authenticity of the video. Darren Linvill, co-director of the Media Forensics Hub, told CBS News another video, which depicted an AI-generated reporter outside the prison, is the "perfect example" of a coordinated network using AI to circulate false information to wider audiences. "It isn't doing anything that one couldn't do with previous technology, it's just doing it all cheaper, faster, and at greater scale," Linvill said. It's not clear who is behind the videos, Linvill said. When asked about the AI-generated Iran-Israel videos on their platform, a TikTok spokesperson told CBS News the platform does not allow harmful misinformation or AI-generated content of fake authoritative sources or crisis events, and has removed some of these videos. A spokesperson for X referred CBS News to their Community Notes feature, and said some of the AI-generated video posts have had Community Notes added to help combat the false information. As for how to avoid falling prey to videos created with AI, Farid said, "Stop getting your news from social media, particularly on breaking events like this."

[4]

Tech-fueled misinformation distorts Iran-Israel fighting

Washington (AFP) - AI deepfakes, video game footage passed off as real combat, and chatbot-generated falsehoods -- such tech-enabled misinformation is distorting the Israel-Iran conflict, fueling a war of narratives across social media. The information warfare unfolding alongside ground combat -- sparked by Israel's strikes on Iran's nuclear facilities and military leadership -- underscores a digital crisis in the age of rapidly advancing AI tools that have blurred the lines between truth and fabrication. The surge in wartime misinformation has exposed an urgent need for stronger detection tools, experts say, as major tech platforms have largely weakened safeguards by scaling back content moderation and reducing reliance on human fact-checkers. After Iran struck Israel with barrages of missiles last week, AI-generated videos falsely claimed to show damage inflicted on Tel Aviv and Ben Gurion Airport. The videos were widely shared across Facebook, Instagram and X. Using a reverse image search, AFP's fact-checkers found that the clips were originally posted by a TikTok account that produces AI-generated content. There has been a "surge in generative AI misinformation, specifically related to the Iran-Israel conflict," Ken Jon Miyachi, founder of the Austin-based firm BitMindAI, told AFP. "These tools are being leveraged to manipulate public perception, often amplifying divisive or misleading narratives with unprecedented scale and sophistication."

'Photo-realism'

GetReal Security, a US company focused on detecting manipulated media including AI deepfakes, also identified a wave of fabricated videos related to the Israel-Iran conflict. The company linked the visually compelling videos -- depicting apocalyptic scenes of war-damaged Israeli aircraft and buildings as well as Iranian missiles mounted on a trailer -- to Google's Veo 3 AI generator, known for hyper-realistic visuals.
The Veo watermark is visible at the bottom of an online video posted by the news outlet Tehran Times, which claims to show "the moment an Iranian missile" struck Tel Aviv. "It is no surprise that as generative-AI tools continue to improve in photo-realism, they are being misused to spread misinformation and sow confusion," said Hany Farid, the co-founder of GetReal Security and a professor at the University of California, Berkeley. Farid offered one tip to spot such deepfakes: the Veo 3 videos were normally eight seconds in length or a combination of clips of a similar duration. "This eight-second limit obviously doesn't prove a video is fake, but should be a good reason to give you pause and fact-check before you re-share," he said. The falsehoods are not confined to social media. Disinformation watchdog NewsGuard has identified 51 websites that have advanced more than a dozen false claims -- ranging from AI-generated photos purporting to show mass destruction in Tel Aviv to fabricated reports of Iran capturing Israeli pilots. Sources spreading these false narratives include Iranian military-linked Telegram channels and state media sources affiliated with the Islamic Republic of Iran Broadcasting (IRIB), sanctioned by the US Treasury Department, NewsGuard said.

'Control the narrative'

"We're seeing a flood of false claims and ordinary Iranians appear to be the core targeted audience," McKenzie Sadeghi, a researcher with NewsGuard, told AFP. Sadeghi described Iranian citizens as "trapped in a sealed information environment," where state media outlets dominate in a chaotic attempt to "control the narrative." Iran itself claimed to be a victim of tech manipulation, with local media reporting that Israel briefly hacked a state television broadcast, airing footage of women's protests and urging people to take to the streets. Adding to the information chaos were online clips lifted from war-themed video games.
AFP's fact-checkers identified one such clip posted on X, which falsely claimed to show an Israeli jet being shot down by Iran. The footage bore striking similarities to the military simulation game Arma 3. Israel's military has rejected Iranian media reports claiming its fighter jets were downed over Iran as "fake news." Chatbots such as xAI's Grok, which online users are increasingly turning to for instant fact-checking, falsely identified some of the manipulated visuals as real, researchers said. "This highlights a broader crisis in today's online information landscape: the erosion of trust in digital content," BitMindAI's Miyachi said. "There is an urgent need for better detection tools, media literacy, and platform accountability to safeguard the integrity of public discourse."
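
Farid's eight-second tip can be turned into a rough screening check. The Python sketch below is illustrative only: the function name, the half-second tolerance and the cap on the number of stitched clips are all assumptions rather than anything the researchers describe. It flags a video whose length is close to a whole number of eight-second clips. As Farid stresses, a match proves nothing by itself; it is only a reason to pause and fact-check.

```python
def matches_clip_length(duration_s: float,
                        clip_s: float = 8.0,
                        tolerance_s: float = 0.5,
                        max_clips: int = 10) -> bool:
    """Return True if duration_s is close to a whole number of clip_s-second clips.

    clip_s reflects the eight-second length mentioned in the article;
    tolerance_s and max_clips are assumed values chosen for illustration.
    """
    if duration_s <= 0:
        return False
    # Nearest whole number of clips that could make up this video.
    clips = round(duration_s / clip_s)
    if clips < 1 or clips > max_clips:
        return False
    # Flag only if the video length is within the tolerance of that multiple.
    return abs(duration_s - clips * clip_s) <= tolerance_s


if __name__ == "__main__":
    for seconds in (8.0, 16.2, 11.0, 3.0):
        label = "worth a closer look" if matches_clip_length(seconds) else "no match"
        print(f"{seconds:5.1f}s: {label}")
```

Many genuine videos will also happen to run eight or sixteen seconds, so a check like this is best treated as one weak signal alongside reverse image search and watermark inspection.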

[5]

What is the 'soft war' version of the Israel-Iran war that's escalating tensions?

Tensions between Israel and Iran are high. A digital war is also happening. Fake videos and images are spreading online. These show false scenes of the conflict. Millions have viewed these fabricated clips. Experts say this is the first major conflict with such AI influence. Disinformation spreads quickly on social media. This makes it hard to know what is real.

As military tensions between Israel and Iran reach new heights, a parallel "soft war" is raging online, where artificial intelligence-generated disinformation is shaping global perceptions of the conflict on an unprecedented scale. Investigators warn that millions are being exposed to fabricated images and videos, making it increasingly difficult to separate fact from fiction in real-time. This digital onslaught has seen over 100 million views on just the three most viral fake videos, according to BBC Verify. These AI-generated clips and images, widely shared across platforms like X (formerly Twitter), TikTok, and Instagram, depict scenes such as Israeli F-35 jets being shot down and missile strikes on Tel Aviv. Forensic analysis has confirmed these are fabrications, often created using advanced AI tools or repurposed from video games and unrelated past events. This digital barrage began after Israel launched airstrikes on Iranian nuclear and military sites on June 13, prompting retaliatory missile and drone attacks from Iran. Almost immediately, a surge of AI-generated videos and images flooded social media, purporting to show dramatic battlefield victories, destroyed aircraft, and devastated cities. Some of these visuals, such as images of destroyed aircraft and missile strikes on Tel Aviv, have been traced to advanced AI video generators and marked with watermarks from tools like Google's Veo 3. Iranian state media and official Israeli channels have both been caught sharing misleading or outdated visuals, further worsening the information environment.
The consequences are profound: not only are public perceptions being manipulated, but both sides of the conflict are leveraging these tactics. Pro-Iranian accounts, such as the rapidly growing "Daily Iran Military," which does not appear to have any direct links to Tehran authorities, experienced a remarkable surge in popularity -- its follower count on X soared from just over 700,000 on June 13 to 1.4 million by June 19, marking an 85% jump in less than a week. Meanwhile, some pro-Israeli sources have recycled old protest footage, presenting it as fresh evidence of unrest in Iran. Experts say this is the first major conflict where generative AI is being deployed at such a scale to influence narratives. Emmanuelle Saliba of Get Real calls it "unprecedented," while Lisa Kaplan, CEO of Alethea, notes that none of the viral footage showing downed Israeli jets has been authenticated. Instead, these clips exploit the speed and reach of social media to amplify falsehoods. The spread of disinformation is further accelerated by so-called "engagement farming," where accounts -- many with verified status -- post sensational content to attract followers and monetize their reach. Analysts have also traced some of these networks to foreign influence operations, particularly Russian-linked groups seeking to undermine Western military credibility.

The Israel-Iran conflict has sparked a surge in AI-generated disinformation, with fake videos and images flooding social media platforms, making it increasingly difficult to distinguish fact from fiction in real-time.

AI Disinformation Transforms Digital Battlefield

The ongoing conflict between Israel and Iran has taken an unprecedented turn in the digital realm, with artificial intelligence (AI) playing a pivotal role in shaping public perception. As military tensions escalate, a parallel "soft war" is unfolding online, characterized by a surge in AI-generated disinformation that is blurring the lines between reality and fabrication [1][2].

Scale and Sophistication of AI-Generated Content

The scale of this digital misinformation campaign is staggering. BBC Verify reports that the three most viral fake videos alone have garnered over 100 million views [5]. These AI-generated clips and images, widely shared across platforms like X (formerly Twitter), TikTok, and Instagram, depict dramatic scenes such as Israeli F-35 jets being shot down and missile strikes on Tel Aviv [1][4].

The sophistication of these fabrications is equally alarming. Experts have traced some of the most convincing visuals to advanced AI video generators, including Google's Veo 3 [4]. These tools are capable of producing hyper-realistic footage that can easily deceive viewers unfamiliar with the latest AI capabilities [2].

Impact on Public Perception and Information Warfare

The consequences of this AI-driven disinformation campaign are far-reaching. Both sides of the conflict are leveraging these tactics to influence public opinion. Pro-Iranian accounts, such as "Daily Iran Military," have experienced explosive growth, with follower counts nearly doubling in less than a week [1]. Meanwhile, some pro-Israeli sources have been caught recycling old footage to support their narratives [5].

Emmanuelle Saliba of Get Real describes this as "the first time we've seen generative AI be used at scale during a conflict" [1]. The rapid spread of these fabrications is further amplified by "engagement farming," where accounts exploit the viral nature of sensational content to gain followers and monetize their reach [5].

Challenges in Combating AI Disinformation

The flood of AI-generated content has exposed significant weaknesses in current fact-checking and content moderation systems. Major tech platforms have scaled back their safeguards, reducing reliance on human fact-checkers [3]. This has created an environment where falsehoods can spread rapidly before they can be debunked.

Even AI-powered chatbots, which some users turn to for instant fact-checking, have been found to misidentify manipulated visuals as real [4]. This highlights the urgent need for more robust detection tools and improved media literacy among users.

Broader Implications for Information Integrity

The Israel-Iran conflict serves as a stark warning about the potential for AI to be weaponized in information warfare. Ken Jon Miyachi, founder of BitMindAI, emphasizes that these tools are being leveraged to "manipulate public perception, often amplifying divisive or misleading narratives with unprecedented scale and sophistication" [4].

As the lines between truth and fabrication become increasingly blurred, there is growing concern about the erosion of trust in digital content. Experts are calling for urgent action to develop better detection tools, enhance media literacy, and increase platform accountability to safeguard the integrity of public discourse in the age of AI [4][5].

Summarized by

Navi

Related Stories

AI manipulation turns Iran protests into battleground for truth as deepfakes gain millions of views

15 Jan 2026•Entertainment and Society

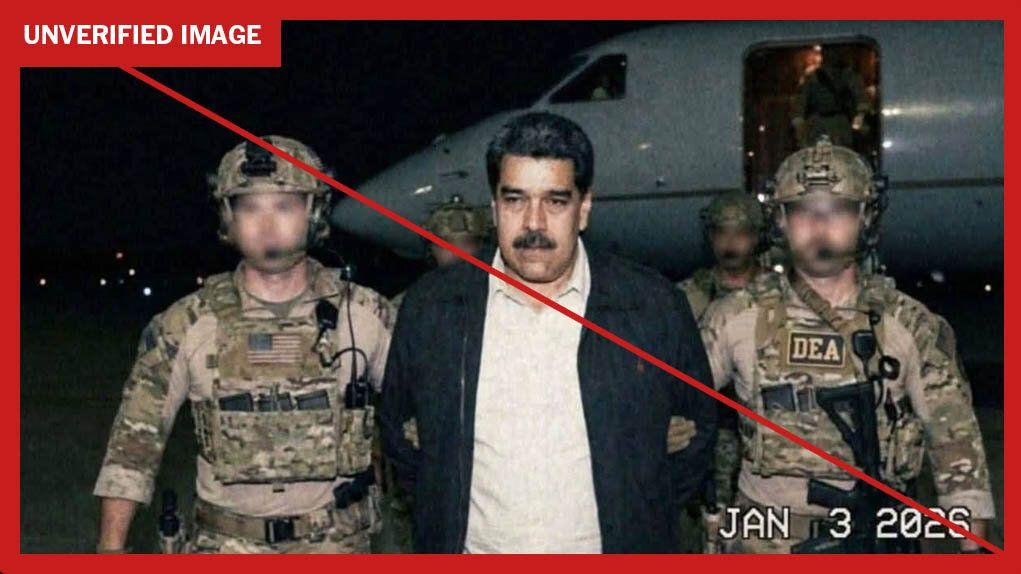

AI-generated images of Nicolás Maduro spread rapidly despite platform safeguards

05 Jan 2026•Entertainment and Society

Google's Veo 3 AI Video Generator Blurs Lines Between Real and Artificial Content

31 May 2025•Technology

Recent Highlights

1

Pentagon threatens to cut Anthropic's $200M contract over AI safety restrictions in military ops

Policy and Regulation

2

ByteDance's Seedance 2.0 AI video generator triggers copyright infringement battle with Hollywood

Policy and Regulation

3

OpenAI closes in on $100 billion funding round with $850 billion valuation as spending plans shift

Business and Economy