AI-Generated Faces Fool Even Super Recognizers, But Five-Minute Training Boosts Detection

3 Sources

[1]

AI is getting better and better at generating faces -- but you can train to spot the fakes

Images of faces generated by artificial intelligence (AI) are so realistic that even "super recognizers," an elite group with exceptionally strong facial processing abilities, are no better than chance at detecting fake faces. People with typical recognition capabilities are worse than chance: more often than not, they think AI-generated faces are real. That's according to research published Nov. 12 in the journal Royal Society Open Science. However, the study also found that receiving just five minutes of training on common AI rendering errors greatly improves individuals' ability to spot the fakes. "I think it was encouraging that our kind of quite short training procedure increased performance in both groups quite a lot," lead study author Katie Gray, an associate professor in psychology at the University of Reading in the U.K., told Live Science. Surprisingly, the training increased accuracy by similar amounts in super recognizers and typical recognizers, Gray said. Because super recognizers are better at spotting fake faces at baseline, this suggests that they are relying on another set of clues, not simply rendering errors, to identify fake faces. Gray hopes that scientists will be able to harness super recognizers' enhanced detection skills to better spot AI-generated images in the future. "To best detect synthetic faces, it may be possible to use AI detection algorithms with a human-in-the-loop approach -- where that human is a trained SR [super recognizer]," the authors wrote in the study. In recent years, there has been an onslaught of AI-generated images online. Deepfake faces are often created using generative adversarial networks (GANs), which pit two AI networks against each other. A generator network produces a fake image, and a discriminator network, trained on real-world images, judges whether that image is real or fake. Over many rounds, the generator learns from the discriminator's verdicts until its images are realistic enough to get past the discriminator.
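The generator-versus-discriminator loop just described can be illustrated with a deliberately tiny, one-dimensional toy in plain Python. This is a sketch for intuition only and bears no resemblance in scale to StyleGAN3, the system used in the study: here the "images" are single numbers drawn from a normal distribution, the generator is a linear map of random noise, G(z) = a*z + b, and the discriminator is a logistic function, D(x) = sigmoid(w*x + c). The class and function names, the real-data mean of 4.0, the batch size and the learning rate are all illustrative assumptions.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class ToyGAN:
    """Toy 1-D GAN. Generator: G(z) = a*z + b maps random noise to a sample.
    Discriminator: D(x) = sigmoid(w*x + c) estimates P(x is real)."""

    def __init__(self) -> None:
        self.a, self.b = 1.0, 0.0  # generator parameters (starts far from the real data)
        self.w, self.c = 0.0, 0.0  # discriminator parameters (starts undecided, D = 0.5)

    def generate(self, noise):
        return [self.a * z + self.b for z in noise]

    def discriminate(self, x: float) -> float:
        return sigmoid(self.w * x + self.c)

    def d_loss(self, real, fake) -> float:
        """Binary cross-entropy: real samples labeled 1, generated samples 0."""
        eps = 1e-12
        total = -sum(math.log(self.discriminate(x) + eps) for x in real)
        total -= sum(math.log(1.0 - self.discriminate(g) + eps) for g in fake)
        return total / (len(real) + len(fake))

    def g_loss(self, noise) -> float:
        """Non-saturating generator loss: low when D calls generated samples real."""
        eps = 1e-12
        fake = self.generate(noise)
        return -sum(math.log(self.discriminate(g) + eps) for g in fake) / len(fake)

    def d_step(self, real, fake, lr: float) -> None:
        """One gradient-descent step on d_loss with respect to w and c."""
        gw = gc = 0.0
        for x in real:  # d/dt of -log(sigmoid(t)) is sigmoid(t) - 1
            err = self.discriminate(x) - 1.0
            gw += err * x
            gc += err
        for g in fake:  # d/dt of -log(1 - sigmoid(t)) is sigmoid(t)
            err = self.discriminate(g)
            gw += err * g
            gc += err
        n = len(real) + len(fake)
        self.w -= lr * gw / n
        self.c -= lr * gc / n

    def g_step(self, noise, lr: float) -> None:
        """One gradient-descent step on g_loss with respect to a and b; D is held fixed."""
        ga = gb = 0.0
        for z in noise:
            g = self.a * z + self.b
            dloss_dg = (self.discriminate(g) - 1.0) * self.w  # chain rule via t = w*g + c
            ga += dloss_dg * z
            gb += dloss_dg
        self.a -= lr * ga / len(noise)
        self.b -= lr * gb / len(noise)


def train(steps: int = 2000, real_mean: float = 4.0, batch: int = 64,
          lr: float = 0.05, seed: int = 0) -> ToyGAN:
    """Alternate discriminator and generator updates on fresh batches."""
    rng = random.Random(seed)
    gan = ToyGAN()
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        gan.d_step(real, gan.generate(noise), lr)  # critic learns to separate real from fake
        gan.g_step(noise, lr)  # forger shifts its output toward what D currently calls real
    return gan


if __name__ == "__main__":
    gan = train()
    print(f"generator: a={gan.a:.2f}, b={gan.b:.2f} (real data: mean 4.0, sd 1.0)")
```

Run as a script, it prints the learned generator parameters. Nothing in the sketch guarantees convergence: GAN training can overshoot and oscillate, and a discriminator that is linear in x cannot directly detect a difference in spread, which is part of why practical systems use deep networks for both roles.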
These algorithms have now improved to such an extent that individuals are often duped into thinking fake faces are more "real" than real faces, a phenomenon known as "hyperrealism." As a result, researchers are now trying to design training regimens that can improve individuals' abilities to detect AI faces. These training sessions point out common rendering errors in AI-generated faces, such as the face having a middle tooth, an odd-looking hairline or unnatural-looking skin texture. They also highlight that fake faces tend to be more proportional than real ones. In theory, so-called super recognizers should be better at spotting fakes than the average person. These super recognizers are individuals who excel in facial perception and recognition tasks, in which they might be shown two photographs of unfamiliar individuals and asked to identify whether they are the same person. But to date, few studies have examined super recognizers' abilities to detect fake faces, and whether training can improve their performance. To fill this gap, Gray and her team ran a series of online experiments comparing the performance of a group of super recognizers with that of typical recognizers. The super recognizers were recruited from the Greenwich Face and Voice Recognition Laboratory volunteer database; they had performed in the top 2% of individuals in tasks where they were shown unfamiliar faces and had to remember them. In the first experiment, an image of a face appeared onscreen and was either real or computer-generated. Participants had 10 seconds to decide if the face was real or not. Super recognizers performed no better than if they had randomly guessed, spotting only 41% of AI faces. Typical recognizers correctly identified only about 30% of fakes. The two cohorts also differed in how often they thought real faces were fake: this occurred in 39% of cases for super recognizers and in around 46% for typical recognizers.
The next experiment was identical, but included a new set of participants who first received a five-minute training session in which they were shown examples of errors in AI-generated faces. They were then tested on 10 faces and given real-time feedback on their accuracy at detecting fakes. The final stage of the training involved a recap of rendering errors to look out for. The participants then repeated the original task from the first experiment. Training greatly improved detection accuracy, with super recognizers spotting 64% of fake faces and typical recognizers noticing 51%. The rate at which each group incorrectly called real faces fake was about the same as in the first experiment, with super recognizers and typical recognizers rating real faces as "not real" in 37% and 49% of cases, respectively. Trained participants tended to take longer to scrutinize the images than the untrained participants had: typical recognizers slowed by about 1.9 seconds and super recognizers by 1.2 seconds. Gray said this is a key message for anyone trying to determine whether a face they see is real or fake: slow down and really inspect the features. It is worth noting, however, that the test was conducted immediately after participants completed the training, so it is unclear how long the effect lasts. "The training cannot be considered a lasting, effective intervention, since it was not re-tested," Meike Ramon, a professor of applied data science and expert in face processing at the Bern University of Applied Sciences in Switzerland, wrote in a pre-publication review of the study. And since separate participants were used in the two experiments, we cannot be sure how much training improves an individual's detection skills, Ramon added. That would require testing the same set of people twice, before and after training.
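The figures above mix two different rates: the share of AI faces correctly flagged as fake (hits) and the share of real faces wrongly called fake (false alarms). The short Python sketch below recomputes the trained-minus-untrained differences from the rounded percentages Live Science reports. The dictionary layout and the `training_differences` function are illustrative assumptions, and because, as Ramon points out, the untrained and trained results come from different participants, the output describes differences between groups, not measured improvement within any individual.

```python
# Rounded percentages as reported by Live Science. "hits" = AI faces correctly
# called fake; "false_alarms" = real faces wrongly called fake.
REPORTED = {
    "super_recognizers": {
        "untrained": {"hits": 41, "false_alarms": 39},
        "trained": {"hits": 64, "false_alarms": 37},
    },
    "typical_recognizers": {
        "untrained": {"hits": 30, "false_alarms": 46},
        "trained": {"hits": 51, "false_alarms": 49},
    },
}


def training_differences(rates: dict) -> dict:
    """Percentage-point difference (trained minus untrained) for each group.

    The trained and untrained figures come from separate participants,
    so these are between-group differences, not within-person gains.
    """
    diffs = {}
    for group, conditions in rates.items():
        before, after = conditions["untrained"], conditions["trained"]
        diffs[group] = {metric: after[metric] - before[metric] for metric in before}
    return diffs


if __name__ == "__main__":
    for group, d in training_differences(REPORTED).items():
        print(f"{group}: hits {d['hits']:+d} pts, false alarms {d['false_alarms']:+d} pts")
```

Run as written, it reports hit-rate differences of +23 points for super recognizers and +21 for typical recognizers, while the false-alarm rates move by only -2 and +3 points, in line with the article's observation that the rate of calling real faces fake stayed about the same.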

[2]

AI-Generated Faces Fool Most People, But Photo Training Improves Detection

AI is now able to generate images of faces that many people mistake for real photographs, but new research suggests that five minutes of training can improve detection. Researchers from the University of Reading, the University of Greenwich, the University of Leeds, and the University of Lincoln in the U.K. tested 664 participants on their ability to distinguish real human faces from images generated by AI software known as StyleGAN3. In the study, published last month in the journal Royal Society Open Science, the scientists explain that AI-generated faces have reached a level of realism that defeats even "super recognizers," a small group of people with exceptionally strong facial recognition skills. According to the researchers, super recognizers performed no better than chance when attempting to identify which faces were fake. Without any training, super recognizers correctly identified AI-generated faces 41% of the time. Participants with typical face recognition abilities performed even worse, correctly identifying fake faces just 31% of the time. A random guess would result in an accuracy of around 50%. However, the researchers found that a short training session could significantly improve performance. A separate group of participants received five minutes of instruction highlighting common AI image errors, such as unnatural hair patterns or an incorrect number of teeth. After the training, super recognizers correctly identified fake faces 64% of the time, while participants with typical abilities achieved an accuracy rate of 51%. Dr Katie Gray, the study's lead researcher from the University of Reading, warns that the increasing realism of AI-generated faces presents real-world risks. "Computer-generated faces pose genuine security risks. They have been used to create fake social media profiles, bypass identity verification systems and create false documents," Gray says in a University of Reading press release.
"The faces produced by the latest generation of artificial intelligence software are extremely realistic. People often judge AI-generated faces as more realistic than actual human faces." "Our training procedure is brief and easy to implement. The results suggest that combining this training with the natural abilities of super-recognisers could help tackle real-world problems, such as verifying identities online."
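The researchers' suggestion of pairing trained super recognizers with AI detection algorithms in a "human-in-the-loop" setup can be sketched as a simple triage rule: an automated detector scores each face, confident calls are handled automatically, and the uncertain middle band is routed to a trained human reviewer. This is a hypothetical illustration; the study does not specify any pipeline, and the detector scores, the threshold values and the `triage` function and `Decision` type are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Decision:
    face_id: str
    label: str    # "fake", "real", or "needs_review"
    score: float  # hypothetical detector probability that the face is AI-generated


def triage(scored: List[Tuple[str, float]], low: float = 0.2,
           high: float = 0.8) -> List[Decision]:
    """Route faces by a detector's fake-probability score (hypothetical thresholds).

    Scores >= high are flagged as fake, scores <= low are accepted as real,
    and everything in between is queued for a trained human reviewer.
    """
    if not 0.0 <= low < high <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= low < high <= 1")
    decisions = []
    for face_id, score in scored:
        if score >= high:
            label = "fake"
        elif score <= low:
            label = "real"
        else:
            label = "needs_review"  # human-in-the-loop, e.g. a trained super recognizer
        decisions.append(Decision(face_id, label, score))
    return decisions


if __name__ == "__main__":
    batch = [("profile_a", 0.95), ("profile_b", 0.05), ("profile_c", 0.55)]
    for d in triage(batch):
        print(d.face_id, d.label)
```

The width of the middle band is the main design lever: widening it sends more cases to people, which costs reviewer time but keeps more of the hardest calls with the human reviewers, who in this study performed best when they were trained super recognizers.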

[3]

AI-generated faces now indistinguishable from real deal -- but...

Not only is AI slop taking over the internet, but it's becoming indistinguishable from the real deal. Scientists have found that people can't tell the difference between human and AI-generated faces without special training, per a dystopian study published in the journal Royal Society Open Science. "Generative adversarial networks (GANs) can create realistic synthetic faces, which have the potential to be used for nefarious purposes," wrote the researchers. Recently, TikTok users blew the whistle on AI-generated deepfake doctors who were scamming social media users with unfounded medical advice. In fact, these faces from concentrate have become so convincing that people are duped into thinking the counterfeit countenances are more real than the genuine article, Live Science reported. To prevent people from being duped, researchers designed a five-minute training regimen to help users unmask the AI-mposters, according to lead study author Katie Gray, an associate professor in psychology at the University of Reading in the UK. The training helps people catch glitches in AI-generated faces, such as the face having a middle tooth, a bizarre hairline or unnatural-looking skin texture. These false visages are often more proportional than their bona fide counterparts. The team tested out the technique by running a series of experiments contrasting the performance of a group of typical recognizers and super recognizers, defined as those who excel at facial recognition tasks. The latter participants, who were sourced from the Greenwich Face and Voice Recognition Laboratory volunteer database, had reportedly ranked in the top 2% of individuals in exams where they had to recall unfamiliar faces. In the first test, organizers displayed a face onscreen and gave participants ten seconds to determine if it was real or fake. Typical recognizers spotted only 30% of fakes while super recognizers caught just 41%, less than if they'd just randomly guessed.
The second experiment was almost identical, except it involved a new group of guinea pigs who had received the aforementioned five-minute training on how to spot errors in AI-generated faces. During the training, test takers were shown 10 faces and given real-time feedback on their AI-detection accuracy, culminating in a review of common rendering mistakes. When they then took the original test, their accuracy had improved, with super recognizers IDing 64% of the fugazi faces while their normal counterparts recognized 51%. Trained participants also took longer to examine the faces before giving their answer. "I think it was encouraging that our kind of quite short training procedure increased performance in both groups quite a lot," said Gray. Of course, there are a few caveats to the study, namely that the participants were put to the test immediately after training, so it is unclear how much they would have retained had they waited longer. Nonetheless, equipping people with the tools to distinguish humans from bots is essential in light of the plethora of AI-mpersonators flooding social media. And the tech's chameleonic prowess isn't just visual. Recently, researchers claimed that language bot ChatGPT had passed the Turing Test, meaning it is effectively no longer discernible from its flesh-and-blood brethren.

Artificial intelligence has advanced to the point where even elite facial recognition experts struggle to identify AI-generated faces. Research published in Royal Society Open Science reveals that super recognizers perform no better than chance at spotting fakes, while typical recognizers mistake AI faces for real ones about 70% of the time. However, a brief five-minute training session on common rendering errors dramatically improves detection accuracy.

AI-Generated Faces Deceive Even Elite Facial Recognition Experts

Artificial intelligence has reached a troubling milestone in visual realism. AI-generated faces now fool even super recognizers, an elite group with exceptionally strong facial recognition abilities, who perform no better than random chance when trying to identify AI-generated faces [1]. According to research published in November in the journal Royal Society Open Science, super recognizers correctly identified fake faces only 41% of the time, while people with typical recognition capabilities fared even worse, at just 31% [2]. This means people with typical abilities actually do worse than chance, mistaking synthetic faces for real ones more often than not.

The study, led by Katie Gray, an associate professor in psychology at the University of Reading in the U.K., tested 664 participants on their ability to distinguish real human faces from images generated by StyleGAN3, an advanced AI image generator [2]. The increasing realism of AI-generated images has produced what researchers call "hyperrealism," a phenomenon in which individuals are duped into thinking fake faces are more real than actual human faces [1].

Five-Minute Training Session Dramatically Improves Detection Accuracy

Despite the alarming baseline results, the research offers encouraging news. A brief five-minute training session on common AI rendering errors significantly improved participants' ability to identify AI-generated faces [1]. After training, super recognizers achieved 64% accuracy in fake face detection, while typical recognizers reached 51%, roughly 23 and 20 percentage points above the untrained groups, respectively [2]. Because the trained and untrained results came from different participants, these figures compare groups rather than measuring how much any one person improved [1].

The training highlighted specific telltale signs in AI-generated faces, including unnatural hair patterns, odd-looking hairlines, unnatural skin texture, a middle tooth, and faces that appear more proportional than real ones [2][3]. Participants were shown 10 faces during training and received real-time feedback on their accuracy, followed by a recap of rendering errors to watch for [3].

"I think it was encouraging that our kind of quite short training procedure increased performance in both groups quite a lot," Gray told Live Science [1]. Trained participants also took longer to scrutinize images (typical recognizers slowed by about 1.9 seconds and super recognizers by 1.2 seconds), suggesting that careful examination is key to distinguishing AI from reality [1].

Real-World Security Risks Demand Better Detection Methods

The implications extend far beyond academic curiosity. Computer-generated faces pose genuine real-world security risks, having been used to create fake social media profiles, bypass identity verification systems, and produce false documents [2]. Recently, TikTok users exposed deepfake doctors scamming social media users with unfounded medical advice [3].

These deepfake faces are often created using generative adversarial networks (GANs), which pit two networks against each other: a generator produces fake images, and a discriminator, trained on real-world images, judges whether each image is real or fake [1]. Through iteration, these algorithms have improved to the point where synthetic faces routinely pass as authentic.

Gray suggests that combining training with super recognizers' natural abilities could help tackle these challenges. "To best detect synthetic faces, it may be possible to use AI detection algorithms with a human-in-the-loop approach -- where that human is a trained SR [super recognizer]," the authors wrote [1]. The super recognizers in the study were recruited from the Greenwich Face and Voice Recognition Laboratory volunteer database, having performed in the top 2% of individuals in tasks that required remembering unfamiliar faces [1].

One caveat remains: participants were tested immediately after training, leaving questions about long-term retention [3]. Still, equipping people with tools to spot AI-generated content is essential as synthetic media floods social platforms. The challenge isn't limited to visual content either: researchers recently claimed ChatGPT passed the Turing Test, suggesting that AI language models are also becoming indistinguishable from humans [3].

Summarized by

Navi

[1]

[3]

Related Stories

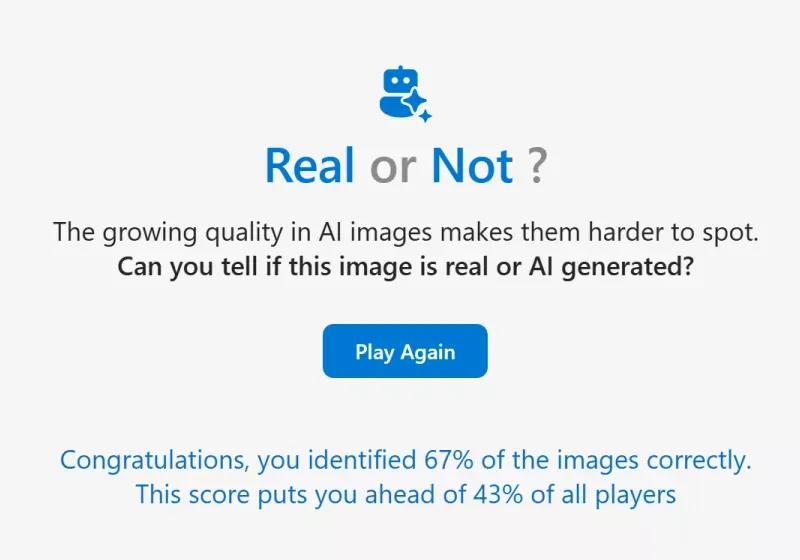

Microsoft Study Reveals Humans Struggle to Distinguish AI-Generated Images from Real Photos

30 Jul 2025•Technology

Deepfake Detection Challenge: Only 0.1% of Participants Succeed in Identifying AI-Generated Content

19 Feb 2025•Technology

Google's Veo 3 AI Video Generator Blurs Lines Between Real and Artificial Content

31 May 2025•Technology

Recent Highlights

1

ByteDance's Seedance 2.0 AI video generator triggers copyright infringement battle with Hollywood

Policy and Regulation

2

Demis Hassabis predicts AGI in 5-8 years, sees new golden era transforming medicine and science

Technology

3

Nvidia and Meta forge massive chip deal as computing power demands reshape AI infrastructure

Technology