AI Industry Faces $800 Billion Revenue Shortfall by 2030, Bain Report Warns

5 Sources

[1]

AI buildouts need $2 trillion in annual revenue to sustain growth, but massive cash shortfall looms -- even generous forecasts highlight $800 billion black hole, says report

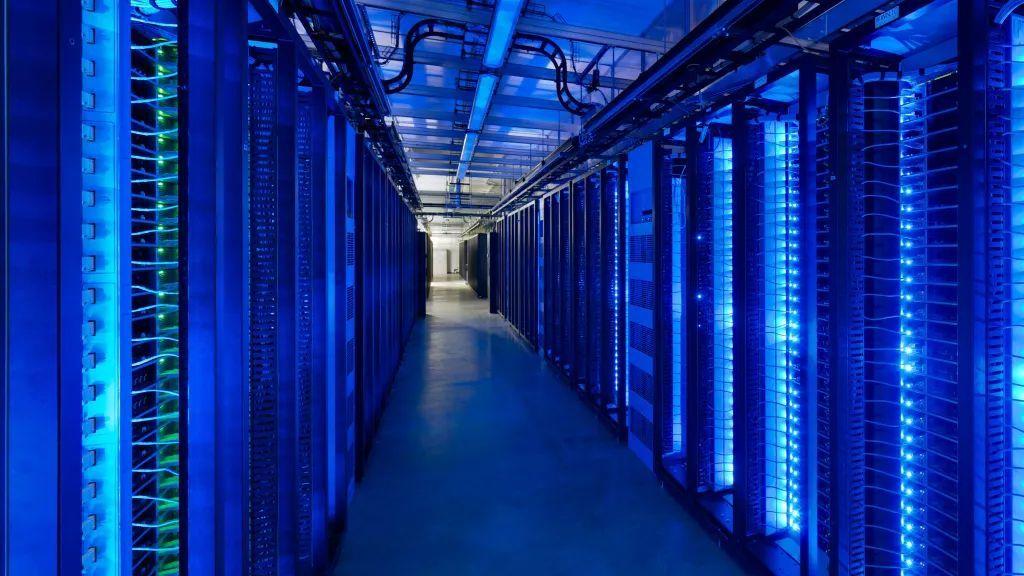

A new Bain report says the AI buildout will need $2 trillion in annual revenue just to sustain its growth, and the shortfall could keep GPUs scarce and energy grids strained through 2030.

AI's insatiable power appetite is both expensive and unsustainable. That's the main takeaway from a new report by Bain & Company, which puts a staggering number on what it will cost to keep feeding AI's compute appetite -- more than $500 billion per year in global data-center investment by 2030, with $2 trillion in annual revenue required to make that capex viable. Even under generous assumptions, Bain estimates the AI industry will come up $800 billion short.

It's a sobering reality check for the narrative currently surrounding AI, one that cuts through the trillion-parameter hype cycles and lands squarely in the physics and economics of infrastructure. If Bain is right, the industry is hurtling toward a wall where power constraints, limited GPU availability, and capital bottlenecks converge.

The crux of Bain's argument is that compute demand is scaling faster than the tools that supply it. While Moore's Law has slowed to a crawl, AI workloads haven't. Bain estimates that inference and training requirements have grown at more than twice the rate of transistor density, forcing data center operators to brute-force scale rather than rely on per-chip efficiency gains. The result is a global AI compute footprint that could hit 200 GW by 2030, with half of it in the U.S. alone.

That kind of headache is going to require massive, borderline inconceivable upgrades to local grids, years-long lead times on electrical gear, and thousands of tons of high-end cooling. Worse, many of the core enabling technologies, like HBM and CoWoS, are already supply-constrained. Nvidia's own commentary this year, echoed in Bain's report, suggests that demand is outstripping the industry's ability to deliver on every axis except pricing.

If capital dries up or plateaus, hyperscalers will double down on systems that offer the best return per watt and per square foot. That elevates full-rack GPU platforms like Nvidia's GB200 NVL72 or AMD's Instinct MI300X pods, where thermal density and interconnect efficiency dominate the BOM. It also deprioritizes lower-volume configs, especially those based on mainstream workstation parts and, by extension, cuts down the supply of chips that could've made their way into high-end desktops.

There are also implications on the PC side. If training remains cost-bound and data-center inference runs into power ceilings, more of the workload shifts to the edge. That plays directly into the hands of laptop and desktop OEMs now shipping NPUs in the 40 to 60 TOPS range, and Bain's framing helps explain why: Inference at the edge isn't just faster, it's also cheaper and less capital-intensive.

Meanwhile, the race continues. Microsoft recently bumped its Wisconsin AI data-center spend to more than $7 billion. Amazon, Meta, and Google are each committing billions more, as is xAI, but most of that funding is already spoken for in terms of GPU allocation and model development. As Bain points out, even those aggressive numbers may not be enough to bridge the cost-to-revenue delta.

If anything, this report reinforces the tension at the heart of the current AI cycle. On one side, you have infrastructure that takes years to build, staff, and power. On the other, you have models that double in size and cost every six months, giving credence to the fears of an AI bubble that, if it continues to grow, will mean high-end silicon and the memory and cooling that come with it could stay both scarce and expensive well into the next decade.

[2]

AI hype train may jump the tracks over $2T bill, warns Bain

The AI craze is fueling massive growth in infrastructure, but the industry will need to hit $2 trillion in revenue by 2030 to keep funding this habit. Consultants at Bain & Company think it is going to come up short.

A slew of AI-focused investments have been announced recently, with OpenAI planning five new massive server farms across the US and Microsoft claiming it is building the "world's largest datacenter" in Wisconsin, for example. Management consultant biz Bain calculates the industry is heading toward creating an extra 100 gigawatts of capacity in the US by 2030 to meet demand, but it also estimates spending of $500 billion per annum on building datacenters will be needed to get there.

In its Technology Report 2025, Bain & Co asks how the industry plans to fund this infrastructure. Looking at AI's topline, it says that a sustainable level of capex to revenue suggests the sector as a whole will have to be making $2 trillion in annual sales to be able to "profitably" afford this. The problem is Bain estimates that even if companies shift all of their on-premises IT budget to the cloud, and reinvest any projected savings from AI productivity gains into capital spending on new datacenters, the total amount would still come out $800 billion short.

However, all of this assumes the AI bubble continues unabated, whereas there are warning signs that companies are beginning to question the value. A report out last month found that US firms had invested between $35 billion and $40 billion in generative AI projects, but 95 percent of organizations had seen zero return from these efforts.

Some experts also suspect the tech industry is unlikely to inject quite the level of investment indicated by Bain's figures.

"Is $500 billion per year purely for AI infrastructure a reasonable number? For 2025, I'd say a categorical no. That is far too aggressive a number for this year. It is possible the numbers will reach something like that in the fullness of time - and for fullness of time read probably 2-3 years out," said John Dinsdale, chief analyst at Synergy Research.

Recent figures from Synergy show that total hyperscale operator capex hit $127 billion in the second quarter of 2025, up 72 percent in a year, with investments in AI infrastructure as the main driver. But Dinsdale warns that it is difficult to draw a hard demarcation line between what is AI and what is non-AI datacenter investment, and a lot of funding will continue to be spent on non-AI infrastructure.

"There is a lot of hyperbole and silly numbers bouncing around the industry and media at the moment. You have to filter those numbers to get back to some form of reality," he told The Register.

Sid Nag, president and chief research officer at Tekonyx, estimates global capex on AI datacenter infrastructure in the range of $300 billion on an annualized basis, but the figure could fall if demand tails off.

"Keep in mind these numbers may go higher if demand meets or exceeds supply. In my opinion, I do not see the demand meeting supply, especially in light of the recent MIT study that indicated that 95 percent of AI projects fail. If that is the case, we will witness a drop in capex spend on an annualized basis in the years out to 2030," Nag said.

Bain's report also claims that it will be difficult to build datacenters fast enough to meet demand due to constraints in four areas: energy supply, construction services, compute enablers (GPUs), and limits on the supply of ancillary equipment such as electrical switchgear and cooling systems.

"Of these, increasing the supply of electricity may be the most challenging as bringing new power generation, transmission, and distribution online in a highly regulated industry can take four years or longer," the report authors state.

Bain previously noted this last year, warning that US utility companies need to revamp the way they operate to meet the rapidly changing demand for power. Meanwhile, an analysis by London Economics International (LEI) found in July that many estimates of future datacenter growth are unrealistic, since they imply an increase in hardware that would be beyond the capacity of global chipmakers to provide.

Advances in technology could provide an answer, according to Bain. Without such innovations or breakthroughs, general progress could slow, and the field could be left to only those players in markets with adequate public funding - presumably from governments.

However, the latter ignores the fact that some of the big players in the market are already spending more on new infrastructure than the GDP of some countries - for now, at least. The annual datacenter capex of cloud giant Amazon exceeds $100 billion, for example, making it roughly comparable to the entire GDP of Costa Rica, and greater than that of Luxembourg or Lithuania.

Regardless, the AI hype train rolls on for now. OpenAI chief Sam Altman said this week: "If AI stays on the trajectory that we think it will, then amazing things will be possible. Maybe with 10 gigawatts of compute, AI can figure out how to cure cancer. Or with 10 gigawatts of compute, AI can figure out how to provide customized tutoring to every student on earth." OpenAI aims to "create a factory that can produce a gigawatt of new AI infrastructure every week," he added.

[3]

An $800 Billion Revenue Shortfall Threatens AI Future, Bain Says

Artificial intelligence companies like OpenAI have been quick to unveil plans for spending hundreds of billions of dollars on data centers, but they have been slower to show how they will pull in revenue to cover all those expenses. Now, the consulting firm Bain & Co. is estimating the shortfall could be far larger than previously understood. By 2030, AI companies will need $2 trillion in combined annual revenue to fund the computing power needed to meet projected demand, Bain said in its annual Global Technology Report released Tuesday. Yet their revenue is likely to fall $800 billion short of that mark as efforts to monetize services like ChatGPT trail the spending requirements for data centers and related infrastructure, Bain predicted.

[4]

$2 trillion in new revenue needed to meet AI demand globally by 2030: Report - The Economic Times

At least $2 trillion in annual revenue is needed to fund the computing power needed to meet the anticipated AI demand globally by 2030, a new report showed on Tuesday. However, even with AI-related savings, the world is still $800 billion short of keeping pace with demand, according to new research by Bain & Company.

The report shows that by 2030, global incremental AI compute requirements could reach 200 gigawatts, with the US accounting for half of the power. Even if companies in the US shifted all of their on-premise IT budgets to cloud and reinvested the savings from applying AI in sales, marketing, customer support, and R&D into capital spending on new data centres, the amount would still fall short of the revenue needed to fund the full investment, as AI's compute demand grows at more than twice the rate of Moore's Law, Bain noted.

"By 2030, technology executives will be faced with the challenge of deploying about $500 billion in capital expenditures and finding about $2 trillion in new revenue to profitably meet demand. Meanwhile, because AI compute demand is outpacing semiconductor efficiency, the trends call for dramatic increases in power supply on grids that have not added capacity for decades," explained David Crawford, chairman of Bain's Global Technology Practice.

"Add the arms race dynamic between nations and leading providers, and the potential for overbuild and underbuild has never been more challenging to navigate. Working through the potential for innovation, infrastructure, supply shortages, and algorithmic gains is critical to navigate the next few years," Crawford added.

While computational demand increases, leading companies have moved from piloting AI capabilities to profiting from AI as organisations scale the technology across core workflows, delivering 10% to 25% earnings before interest, taxes, depreciation, and amortisation (EBITDA) gains over the last two years. Yet, most companies today remain stuck in AI experimentation mode and are satisfied with modest productivity gains, the report concludes.

Tariffs, export controls, and the push by governments worldwide for sovereign AI are accelerating the fragmentation of global technology supply chains, Bain found. Cutting-edge domains such as AI are no longer just catalysts for economic growth but are conduits for countries' political power and national security.

"Sovereign AI capabilities are increasingly seen as a strategic advantage on par with economic and military strength," said Anne Hoecker, head of Bain's Global Technology practice.

[5]

AI Companies Face $800 Billion Funding Shortfall, Says Bain Report | PYMNTS.com

By 2030, global incremental AI compute requirements could reach 200 gigawatts, with the United States making up half of the power, the report said. Even if U.S. companies moved all of their on-premise IT budgets to the cloud and reinvested the savings from applying AI to various aspects of their business on new data centers, it would still not be enough, with AI's compute demand increasing at more than double the rate of Moore's Law.

"If the current scaling laws hold, AI will increasingly strain supply chains globally," David Crawford, chairman of Bain's Global Technology Practice, said in a Tuesday news release. "By 2030, technology executives will be faced with the challenge of deploying about $500 billion in capital expenditures and finding about $2 trillion in new revenue to profitably meet demand."

Meanwhile, because AI compute demand is moving faster than semiconductor efficiency can keep up with, the trends require "dramatic" upticks in power supply on grids that have not added capacity for decades, Crawford added in the release.

"Add the arms race dynamic between nations and leading providers, and the potential for overbuild and underbuild has never been more challenging to navigate," he said in the release. "Working through the potential for innovation, infrastructure, supply shortages and algorithmic gains is critical to navigate the next few years."

Meanwhile, PYMNTS this month explored the importance of inference, the stage in which an AI model is actually used to provide predictions, responses or insights. As far as demand goes, the shift of generative AI from research to mainstream use has created billions of inference events each day. As of July of this year, OpenAI said it was handling 2.5 billion prompts each day, including 330 million from users in the U.S. Brookfield projections indicate that three-quarters of all AI compute demand will come from inference by 2030.

"Unlike training, inference is the production phase," PYMNTS wrote Monday (Sept. 22). "Latency, cost, scale, energy use and deployment location all determine whether an AI service works or fails."

A new Bain & Company report projects that the AI industry will fall $800 billion short of the annual revenue it needs by 2030. The gap could hinder AI growth and infrastructure development, raising concerns about the sustainability of the current AI boom.

AI Industry Faces Massive Funding Gap

A new report from Bain & Company projects a potential $800 billion revenue shortfall that could threaten the future of artificial intelligence development. The report highlights the enormous cost of AI infrastructure and the difficulty of generating enough revenue to sustain the industry's rapid growth [1][3].

Source: ET

Staggering Infrastructure Costs

By 2030, the global AI industry is projected to need $2 trillion in annual revenue to fund the necessary computing power and infrastructure. That is the revenue Bain says it would take to make an estimated $500 billion per year in global data center investment viable. Even under generous assumptions, Bain estimates that the industry will fall short by $800 billion, raising concerns about the sustainability of current AI growth rates [1][4].

Source: Tom's Hardware
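The arithmetic behind these headline figures can be checked with a few lines of Python. This is only a sketch built from the three numbers quoted above; the function name `funding_gap_summary` and the derived ratios are illustrative assumptions, not figures or methods stated in the Bain report.

```python
# Headline figures as quoted from the Bain report (USD per year, by 2030).
REQUIRED_REVENUE = 2_000_000_000_000  # annual revenue needed to sustain the buildout
ANNUAL_CAPEX = 500_000_000_000        # projected annual data center investment
SHORTFALL = 800_000_000_000           # gap Bain expects even under generous assumptions


def funding_gap_summary(required: int, capex: int, shortfall: int) -> dict:
    """Derive quantities implied by the three headline figures.

    Hypothetical helper for illustration; the derived values are simple
    arithmetic, not numbers published in the report.
    """
    covered = required - shortfall  # revenue implied to be attainable
    return {
        "covered_revenue": covered,
        "share_covered": covered / required,
        "share_missing": shortfall / required,
        "revenue_per_capex_dollar": required / capex,
    }


summary = funding_gap_summary(REQUIRED_REVENUE, ANNUAL_CAPEX, SHORTFALL)
for name, value in summary.items():
    print(f"{name}: {value:,.2f}")
```

Read this way, the quoted figures imply roughly $1.2 trillion of attainable revenue, a gap equal to 40 percent of the requirement, and about four dollars of revenue needed for every dollar of annual capex.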

Compute Demand Outpacing Supply

The report reveals that AI compute demand is growing at more than twice the rate of Moore's Law, forcing data center operators to scale through brute force rather than relying on per-chip efficiency gains. By 2030, global AI compute requirements could reach 200 gigawatts, with the United States accounting for half of this demand [2][5].

Infrastructure and Energy Challenges

The massive growth in AI compute demand is straining various aspects of infrastructure:

- Energy Supply: Increasing the electricity supply for AI data centers may be the most challenging constraint, with new power generation, transmission, and distribution taking four years or longer to bring online [2].
- Construction Services: Building data centers fast enough to meet demand is proving difficult.
- GPU Availability: High-end silicon, including GPUs and enabling technologies such as HBM memory and CoWoS packaging, is already supply-constrained [1].
- Ancillary Equipment: There are limits on the supply of essential components such as electrical switchgear and cooling systems [2].

Source: PYMNTS

Industry Response and Implications

Major tech companies are making significant investments in AI infrastructure. Microsoft, for example, has increased its Wisconsin AI data center spend to over $7 billion. Amazon, Meta, and Google are also committing billions to AI development [1]. However, these investments may not be sufficient to bridge the cost-to-revenue gap. If capital becomes scarce, hyperscalers may prioritize systems offering the best return per watt and per square foot, which could reduce the supply of chips for high-end desktops and keep high-end silicon expensive [1].

The Future of AI Development

The Bain report raises critical questions about the sustainability of current AI growth rates and the industry's ability to meet projected demand. Without significant technological breakthroughs or increased funding, the AI boom may face substantial hurdles in the coming years [2][3]. As the industry grapples with these challenges, it remains to be seen how companies will adapt their strategies to ensure the continued growth and development of artificial intelligence technologies in the face of these daunting financial and infrastructure constraints.

Summarized by Navi
