AI manipulation turns Iran protests into battleground for truth as deepfakes gain millions of views

2 Sources

[1]

How Doubt Became a Weapon in Iran

AI manipulation, and the very suspicion of it, serves those who have the most to hide. The protests in Iran are real. The country's economic desperation runs deep, and millions of citizens want to see a corrupt and repressive regime gone. The violent crackdown on the protests is also real and appears to have cost thousands of lives. Yet the accounts, photos, and videos coming out of Iran are riddled with accusations of AI manipulation and fakery that have the effect of calling even what's true into doubt. There's a term of art for the benefit that accrues to bad actors when they sow public uncertainty as to whether anything at all is real: It's the liar's dividend, and it's very much in evidence in Iran.

AI-generated or -enhanced online content has become a global problem for those seeking to understand or document protest movements, because it allows interested parties to shape narratives. In 2025, AI-generated images of real protest moments muddied the view of events in Turkey, and AI-generated content became a mobilization tool for Nepal's Gen Z uprising. But Iran is perhaps the most fraught arena of all. Multiple political factions, foreign governments, and the Iranian regime itself are all competing to shape the narrative of current events. And the tools for fabricating reality have never been as advanced and accessible as they are today.

Videos of Iran circulating on social media show crowds moved by an array of convictions and desires. Some simply chant against the regime; others chant for Reza Pahlavi, the crown prince of Iran's deposed monarchy, or invoke his father, the last shah. Numerous confirmed videos show demonstrators venting economic anguish and hatred for Iran's supreme leader, Ayatollah Ali Khamenei; the security forces; and the Islamic Republic itself. All of these views of the protests are grounded in Iran's complex reality. Yet the presence among them of images and videos that have been -- or are even suspected of being -- doctored with AI, mislabeled, misattributed, or spliced with content from other places or times has given oxygen to the dark imaginings of the movement's enemies within the regime.

As a diaspora Iranian who has studied online deception, censorship, and surveillance efforts for almost 15 years, I take a particular interest in Iran's information environment. At the human-rights organization Witness, I'm involved with something called the Deepfake Rapid Response Force, which helps journalists and human-rights defenders assess manipulated media. We have plenty to work with during the current Iran crisis.

What we are seeing is not a single disinformation campaign but something much messier. Iran's information ecosystem has been shaped by extreme distrust of the Islamic Republic's official media; social-media influence efforts coming from both the regime and foreign powers; and rampant distortions of online content -- some deceptive, some careless, some well intentioned -- that make it difficult to verify what Iranians are actually saying or doing during these protests.

The liar's dividend redounds to the regime more than anyone else. Iran's nationwide uprising erupted on December 28. And within hours, regime-aligned accounts began dismissing authentic images from the protests as AI-generated.

An early, high-profile case involved a picture that came to be known as Iran's "tank man" photo. Its source was a low-quality video that had been captured with a zoom lens on December 29, showing a protester facing down security forces in Tehran. A BBC Persian journalist posted a screengrab from the video the same day, and that image, which had a higher picture quality, began to circulate. The event was real: It has been verified from multiple angles and by fact-checkers. But somewhere along the line, someone enhanced the still image of it with AI editing tools, likely to make the blurry original screengrab sharper and more shareable. Regime accounts seized on visible artifacts from AI enhancement as opportunities to dismiss the photo and other footage from the protests. "It's all AI slop," one regime account with a visibly AI-generated profile photo opined. "Just when you think Zionists can't be more pathetic, they prove you wrong."

Deepfakes have given AI a reputation for being deceptive. But many commonly employed image-editing tools use generative-AI capabilities, for better or worse. The public does not generally know how to distinguish good-faith use of these tools from malicious fakery. And this allows bad-faith actors such as the Islamic Republic, which has spent decades undermining protests and dissidence, to use not only AI itself but also the suspicion it generates as an accelerant.

The same pattern has played out with audio manipulations. On December 30, an account presenting itself as affiliated with the Mujahedin-e Khalq -- a controversial exiled opposition group known as the MEK that has an armed wing and was once designated a terrorist organization by the United States -- posted a video in which pro-Pahlavi chants are clearly dubbed over protest footage. The account then "exposed" the video as manipulated and used it to claim that monarchists were fabricating support. Within hours, pro-regime accounts had reposted this content to amplify the accusation that pro-Pahlavi chants were fake.

What was striking was that the alleged MEK account both introduced the manipulated video and was the first to call it out. Moreover, regime-aligned accounts were ready to rebroadcast that post almost immediately. Iranian researchers have long documented the regime's use of opposition personas on social media to sow confusion, divide the opposition, and discredit authentic documentation, and leaked documents and videos from within the regime have confirmed the practice.

Within a day of the supposed MEK post being published, a Russian propaganda account set the AI-enhanced "tank man" photo alongside the allegedly manipulated Pahlavi audio and presented both as proof that the protests were a foreign fabrication. The loop was closed: manipulated content introduced by what may have been a regime account, amplified by pro-regime voices, then aggregated by Russian state-aligned media as evidence of an AI-driven Western psyop.

If that cycle isn't complicated enough, consider that the regime's accusations capitalize on at least one documented instance of manipulation by monarchists. On December 29, Manoto, the London-based Persian-language channel aligned with the monarchist opposition, aired footage of crowds chanting in favor of Pahlavi. The audio is real -- but traceable to a memorial for Khosrow Alikhordi, a human-rights lawyer whose suspicious death last month drew thousands of mourners. It had been lifted and overlaid onto unrelated protest footage. Whether the doctored video was planted by hostile actors or intentionally created by someone within the royalist camp, the effect was the same: It handed malicious accounts exactly the precedent they needed.

Royalists are not the only anti-regime camp that has either generated or circulated fake content that serves their narrative. On December 30, Radio Farda and other accounts shared a 2020 video of protesters chanting, "No to the oppressor, be it the shah or the leader," as though it were footage of the current protests. BBC Verify's Shayan Sardarizadeh flagged the misattribution.

The Islamic Republic has a habit of attributing authentic, homegrown dissent to Israeli and American conspiracies. This supposed foreign subversion then becomes a pretext for repression. The latest crisis is no different in that regard -- but now foreign actors are helping boost the regime's narrative by sharing doctored, misleading content that the regime can then expose.

Israel has been using AI-generated content to push anti-regime and pro-Israeli narratives in Iran since at least last summer's 12-day war. The Citizen Lab, a research and policy center based at the University of Toronto, points to AI-fabricated footage of a supposedly surgical strike on Evin Prison, among other examples. A Haaretz investigation found that Israeli influence operations were promoting ideas aligned with Iranian monarchist factions.

Shortly before the protests broke out last month, an Israeli diplomat shared a montage, captioned "very precise," of four alleged strikes on Iranian command centers during the June war. Our analysis found that of the four clips, one is authentic footage that had aired on Iranian state television; the other three show signs of having been generated by AI. BBC Verify traced the video montage to an Instagram account that has more than 120,000 followers and identifies itself as "Islamists and Khomeinists' Nightmare" and "Media Warfare Expert." When users pointed out the manipulation of the air-strike footage, the diplomat did not delete the post. He acknowledged that parts of it may have been AI-manipulated, but argued that the video still demonstrates the precision of Israel's operations -- in other words, he stood by admittedly fabricated content because it illustrates a story he wanted to tell.

Perhaps similarly, on January 1, the Israeli Foreign Ministry posted on its Persian-language X account an image of Iranian police blasting two protesters with a water hose. The image was AI-altered: The original, published by BBC Persian's Farzad Seifikaran the day before, shows the protesters being hosed down from a distance and then an officer approaching with a loudspeaker, not a hose.

These sorts of interventions may be meant to boost the opposition to the Islamic Republic, but the use of doctored media has other, no doubt unintended, effects. Actors with a vested interest in hiding what is happening in Iran's streets use the existence of untrustworthy content promoted by foreign powers to convince the public that all documentation of the protests is a foreign deception. The dividend again accrues to the Islamic Republic.

Sometimes the problem is not manipulation at all but just the impossibility of capturing a fractured reality. On December 29, Iran International shared footage from a march in the town of Malard, where a crowd was chanting for Pahlavi. Another user posted a different angle of the same street and the same march, only with different audible slogans. One user who had shared the first footage issued a correction; others accused the Pahlavi camp of spreading falsehoods. But who is to say that on that long street, different crowds at different moments didn't simply chant different things?

This is epistemic fog. Even without deception, people operating in good faith, trying to verify what they have seen, cannot know what is representative. Such knowledge is elusive in any case: Iran is a country of more than 90 million people whose aspirations can hardly be generalized from any single video or protest chant.

Journalists, courts, human-rights investigators, and protest movements everywhere have long depended on shared standards of proof. Today we are seeing what happens when those standards collapse. When any image can be dismissed as possibly synthetic, those in power no longer need to suppress speech. They have only to raise questions that undermine belief in a shared reality.

Since Thursday evening, the Iranian regime has thickened the fog to near impenetrability with a national internet shutdown and a blackout of communication with the outside world. From where I stand, the information picture has fractured further. Verified footage has slowed to a trickle; what comes out largely passes through Starlink terminals. Diaspora Iranians are frantic, unable to reach family members. Death-toll figures vary wildly, as documentation organizations struggle to access data: The range now sits between 12,000 and 20,000 dead, but the full scale is unknown.

Our Deepfake Rapid Response Force has been trying to help validate legitimate human-rights documentation that is sometimes falsely tagged as AI. An enormous effort is afoot among human-rights organizations to accurately count the dead. Meanwhile, regime accounts have been disseminating footage from counterprotests in favor of the Islamic Republic -- clips that some opposition accounts suggest are AI-generated, and that official news media vehemently insist are real. Everyone is primed to distrust everything, and the shutdown ensures that nothing -- not the scale of the protests, the severity of the crackdown, or the state of the regime -- can be witnessed in real time.

The Iranian regime has spent more than four decades undermining the credibility of dissent. Now it has AI not just as a tool for creating fakes but as a rhetorical weapon. Every glitch, artifact, and even protective blur becomes evidence that nothing can be trusted. In a world where policy makers and platforms invested in AI detection and fact-checking, perhaps ordinary people would not be fighting through such darkness, or bad actors reaping such dividends.

What Iranians want and are fighting for is a question only they can answer. And their answer deserves to be heard -- not shaped by foreign governments, dismissed by regime propagandists, or drowned in fog that others have helped create.

[2]

AI-created Iran protest videos gain traction

Washington (United States) (AFP) - AI-generated videos purportedly depicting protests in Iran have flooded the web, researchers said Wednesday, as social media users push hyper-realistic deepfakes to fill an information void amid the country's internet restrictions.

US disinformation watchdog NewsGuard said it identified seven AI-generated videos depicting the Iranian protests -- created by both pro- and anti-government actors -- that have collectively amassed some 3.5 million views across online platforms.

Among them was a video shared on the Elon Musk-owned platform X showing women protesters smashing a vehicle belonging to the Basij, the Iranian paramilitary force deployed to suppress the protests. One X post featuring the AI clip, shared by what NewsGuard described as anti-regime users, garnered nearly 720,000 views.

Anti-regime X and TikTok users in the United States also posted AI videos depicting Iranian protesters symbolically renaming local streets after President Donald Trump. One such clip shows a protester changing a street sign to "Trump St" while other demonstrators cheer, with an overlaid caption reading: "Iranian protestors are renaming the streets after Trump."

Trump had repeatedly talked in recent days about coming to the aid of the Iranian people over the crackdown on protests that rights groups say has left at least 3,428 people dead. Trump said Wednesday he had been told the killings of protesters in Iran had been halted, but added that he would "watch it and see" about threatened military action.

Pro-regime social media users also shared AI videos purportedly showing large-scale pro-government counterprotests throughout the Islamic republic.

The AI creations highlight the growing prevalence of what experts call "hallucinated" visual content on social media during major news events, often overshadowing authentic images and videos. In this case, AI creators were filling an information void caused by the internet blackout imposed by the Iranian regime as it sought to suppress demonstrations, experts said.

"There's a lot of news -- but no way to get it because of the internet blackout," said NewsGuard analyst Ines Chomnalez. "Foreign social media users are turning to AI video generators to advance their own narratives about the unfolding chaos."

The fabricated videos were the latest example of AI tools being deployed to distort fast-developing breaking news. AI fabrications, often amplified by partisan actors, have fueled alternate realities around recent news events, including the US capture of Venezuelan leader Nicolas Maduro and a deadly shooting by immigration agents in Minneapolis.

AFP fact-checkers also uncovered misrepresented images that created misleading narratives about the Iranian protests, the largest since the Islamic Republic was proclaimed in 1979. One months-old video purportedly showing demonstrations in Iran was actually filmed in Greece in November 2025, while another claiming to depict a protester tearing down an Iranian flag was filmed in Nepal during last year's protests that toppled the Himalayan nation's government.

AI-generated videos depicting Iran protests have amassed 3.5 million views across social media platforms, creating what experts call a liar's dividend for the regime. Both pro- and anti-government actors are deploying hyper-realistic deepfakes to shape narratives amid internet restrictions, making it difficult to verify what's actually happening on the ground during the largest demonstrations since 1979.

AI-Generated Videos Flood Social Media During Iran Protests

The Iran protests have become a testing ground for how AI manipulation can undermine the credibility of real events. US disinformation watchdog NewsGuard identified seven AI-generated videos depicting the Iranian protests that collectively gained approximately 3.5 million views across online platforms [2]. These hyper-realistic deepfakes were created by both pro- and anti-government actors, exploiting an information void created by severe internet restrictions imposed by the Iranian regime [2].

Source: The Atlantic

Among the fabricated content was a video shared on X showing women protesters smashing a Basij paramilitary vehicle; a single post of the clip garnered nearly 720,000 views [2]. Anti-regime users on social media also circulated AI videos depicting Iranian protesters symbolically renaming streets after President Donald Trump, with one clip showing a protester changing a street sign to "Trump St" while demonstrators cheered [2]. Meanwhile, pro-regime social media users shared AI videos purportedly showing large-scale pro-government counterprotests throughout the Islamic republic [2].

Source: France 24

The Liar's Dividend Benefits the Regime Most

What experts call the liar's dividend has emerged as a powerful weapon for those with the most to hide. The term describes the benefit that accrues to bad actors when they sow public uncertainty about whether anything at all is real [1]. While the protests themselves are genuine, driven by economic desperation and opposition to a corrupt regime, the presence of AI-manipulated content has given the Iranian regime ammunition to dismiss authentic protest imagery entirely [1].

A prominent example involved Iran's "tank man" photo, a still taken from video captured on December 29 that shows a protester facing down security forces in Tehran. The event was real and has been verified from multiple angles and by fact-checkers, but someone enhanced the still image with AI editing tools, likely to make the blurry original sharper [1]. Regime accounts seized on visible artifacts from the AI enhancement to dismiss the photo and other footage. "It's all AI slop," one regime account with a visibly AI-generated profile photo claimed, adding: "Just when you think Zionists can't be more pathetic, they prove you wrong" [1].

Information Void Fuels Disinformation Campaign

The internet blackout imposed by the Iranian regime as it sought to suppress demonstrations created fertile ground for AI fabrications to distort news events [2]. "There's a lot of news -- but no way to get it because of the internet blackout," explained NewsGuard analyst Ines Chomnalez. "Foreign social media users are turning to AI video generators to advance their own narratives about the unfolding chaos" [2].

This is an example of what experts call "hallucinated" visual content on social media during major news events, which often overshadows authentic images and videos [2]. AFP fact-checkers also uncovered misrepresented images creating misleading narratives about the protests. One months-old video purportedly showing demonstrations in Iran was actually filmed in Greece in November 2025, while another claiming to depict a protester tearing down an Iranian flag was filmed in Nepal during last year's protests [2].

Multiple Actors Shape a Complex Information Ecosystem

Iran's information ecosystem has been shaped by extreme distrust of the Islamic Republic's official media, social-media influence efforts from both the regime and foreign powers, and rampant distortions of online content

1

. What's emerging is not a single disinformation campaign but something messier, involving multiple political factions, foreign governments, opposition groups, and the Iranian regime itself competing to shape narratives1

.The Deepfake Rapid Response Force at human-rights organization Witness has been working to help journalists and human-rights defenders assess manipulated media during the crisis

1

. The challenge lies in distinguishing good-faith use of AI editing tools from malicious fakery—a distinction the public generally doesn't know how to make. This allows bad-faith actors to use not only AI itself but also the suspicion it generates as weaponized information1

.The violent crackdown on protests appears to have cost thousands of lives, with rights groups reporting at least 3,428 people dead

2

. Yet the accounts, photos, and videos coming out of Iran are riddled with accusations of AI manipulation that have the effect of calling even what's true into doubt1

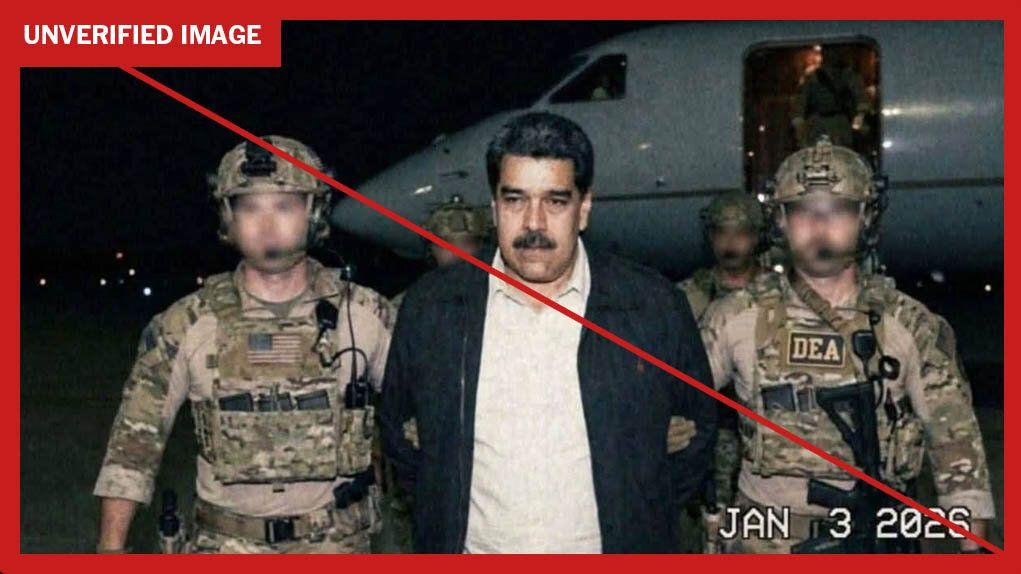

. This pattern extends beyond Iran—AI fabrications have recently fueled alternate realities around news events including the US capture of Venezuelan leader Nicolas Maduro and a deadly shooting by immigration agents in Minneapolis2

. As AI tools become more accessible and advanced, the credibility of human-rights documentation and authentic protest movements faces growing threats from propaganda deployed across all sides of political conflicts.References

[1] How Doubt Became a Weapon in Iran

[2] AI-created Iran protest videos gain traction

Summarized by Navi
