AI-Powered Navigation Breakthrough: Robots Learn to Stay on Track Without Maps

2 Sources

[1]

Smarter navigation: AI helps robots stay on track without a map

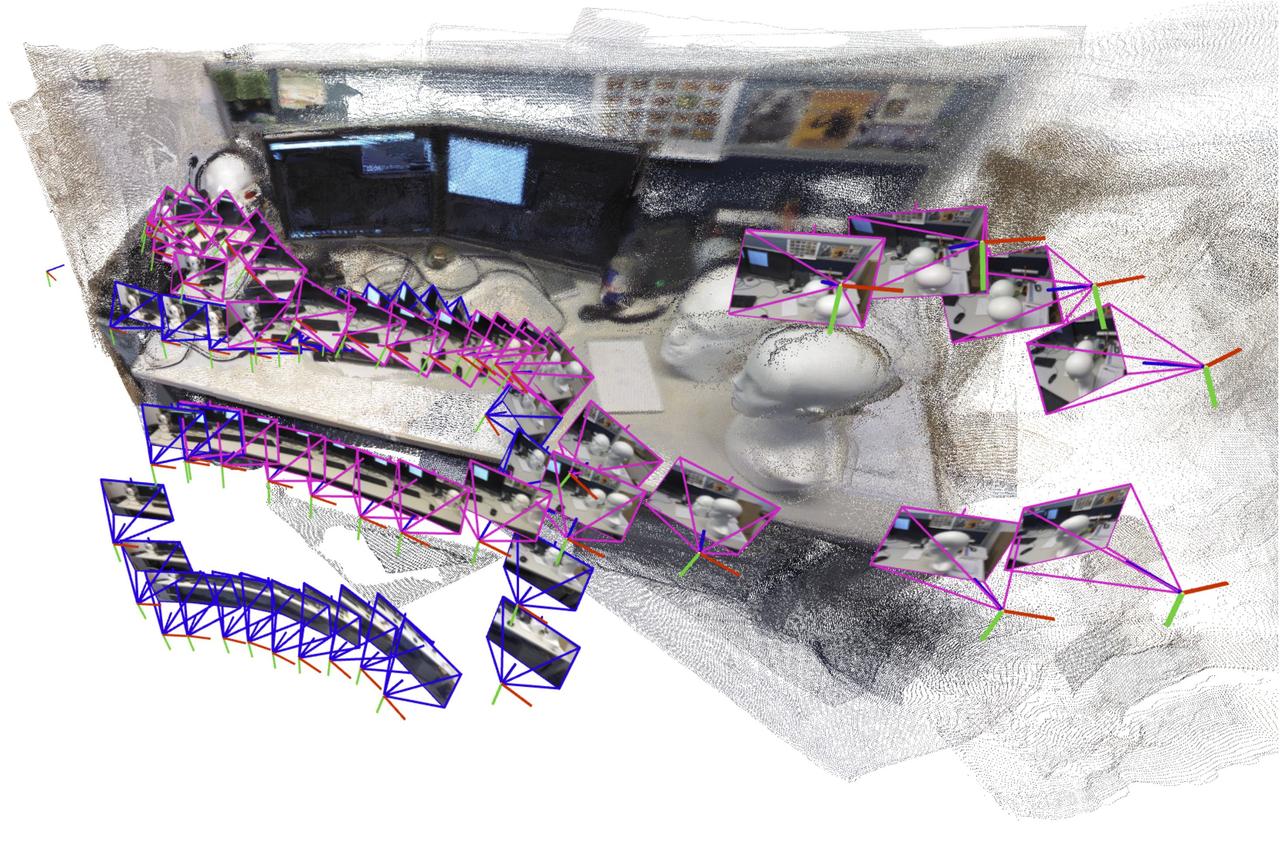

Navigating without a map is a difficult task for robots, especially when they can't reliably determine where they are. A new AI-powered solution helps robots overcome this challenge by training them to make movement decisions that also protect their ability to localize. Instead of blindly heading toward a target, the robot evaluates the visual richness of its surroundings and favors routes where it's less likely to get "lost." By combining deep reinforcement learning (DRL) with real-time feedback on pose estimation, this approach enables robots to avoid both collisions and localization failures. The result: more intelligent navigation, better performance in tricky environments, and a major step toward autonomous systems that truly understand their limitations.

Traditional robot navigation methods either require detailed maps or assume accurate localization is always available -- assumptions that break down in indoor or unfamiliar environments. Visual simultaneous localization and mapping (SLAM) systems, often used as a fallback, can easily fail in scenes lacking distinct textures or during abrupt movements, leading to severe navigational errors. Many previous AI models focused only on finding collision-free paths, ignoring whether the robot could stay properly localized along the way. Even those that consider localization often rely on rigid penalty thresholds, unable to adapt to changing conditions. Due to these challenges, a more flexible and awareness-driven navigation strategy is urgently needed for robots to perform reliably across diverse, real-world scenarios.

A research team from Cardiff University and Hohai University has developed a new deep reinforcement learning (DRL) model that helps robots plan smarter, safer paths in complex indoor environments. Their study, published in IET Cyber-Systems and Robotics in July 2025, introduces a localization-aware navigation policy trained with RGB-D camera input and real-time feedback from ORB-SLAM2. Rather than relying on pre-set thresholds or fixed maps, the robot learns to adapt dynamically to visual conditions in its environment -- boosting its ability to maintain localization and significantly improving navigation success rates in simulated tests.

The core innovation lies in integrating localization quality into every navigation decision. The robot is trained using a compact state representation that reflects the spatial distribution of visual map points, partitioned into 24 angular regions around its body. This design helps the robot identify which directions are visually "safer" to travel through -- meaning areas more likely to provide reliable localization data. In addition, the researchers introduced a new reward function based on Relative Pose Error (RPE), offering instant feedback on whether a particular movement action improves or worsens the robot's understanding of its position. Unlike previous models that used static thresholds, this system employs a dynamic threshold that adjusts in real time, depending on environmental conditions.

To evaluate the approach, the team conducted extensive training using the iGibson simulation environment and tested their model against four baseline methods. In challenging indoor scenarios, the new model outperformed others by a wide margin, achieving a 49% success rate compared to just 33% for conventional SLAM-based navigation. It also showed significantly lower localization error and better adaptability in new environments. Notably, the model consistently chose longer but safer routes -- demonstrating the value of prioritizing localization robustness over shortest-path efficiency.

"Our aim wasn't just to teach the robot to move -- it was to teach it to think about how well it knows where it is," said Dr. Ze Ji, the study's senior author. "Navigation isn't only about avoiding walls; it's about maintaining confidence in your position every step of the way. By integrating perception and planning, our model enables smarter, safer movement in uncertain spaces. This could pave the way for more autonomous robots that can handle the complexities of the real world without constant human oversight."

The implications of this work extend across indoor robotics -- from service robots in hospitals and homes to warehouse automation systems. In environments where GPS doesn't work and visual conditions vary, being able to assess and respond to localization reliability is crucial. This method equips robots with the awareness to adjust their strategies based on how well they can see and understand their surroundings.

Looking forward, the team plans to test their model on real robots and in dynamic scenes with pedestrians. With further development, the approach could become a key building block for trustworthy, mapless navigation in real-world human environments.

[2]

Smarter Navigation: AI Helps Robots Stay on Track Without a Map | Newswise

Newswise -- Traditional robot navigation methods either require detailed maps or assume accurate localization is always available -- assumptions that break down in indoor or unfamiliar environments. Visual simultaneous localization and mapping (SLAM) systems, often used as a fallback, can easily fail in scenes lacking distinct textures or during abrupt movements, leading to severe navigational errors. Many previous AI models focused only on finding collision-free paths, ignoring whether the robot could stay properly localized along the way. Even those that consider localization often rely on rigid penalty thresholds, unable to adapt to changing conditions. Due to these challenges, a more flexible and awareness-driven navigation strategy is urgently needed for robots to perform reliably across diverse, real-world scenarios.

A research team from Cardiff University and Hohai University has developed a new deep reinforcement learning (DRL) model that helps robots plan smarter, safer paths in complex indoor environments. Their study (DOI: 10.1049/csy2.70018), published in IET Cyber-Systems and Robotics in July 2025, introduces a localization-aware navigation policy trained with RGB-D camera input and real-time feedback from ORB-SLAM2. Rather than relying on pre-set thresholds or fixed maps, the robot learns to adapt dynamically to visual conditions in its environment -- boosting its ability to maintain localization and significantly improving navigation success rates in simulated tests.

The core innovation lies in integrating localization quality into every navigation decision. The robot is trained using a compact state representation that reflects the spatial distribution of visual map points, partitioned into 24 angular regions around its body. This design helps the robot identify which directions are visually "safer" to travel through -- meaning areas more likely to provide reliable localization data. In addition, the researchers introduced a new reward function based on Relative Pose Error (RPE), offering instant feedback on whether a particular movement action improves or worsens the robot's understanding of its position. Unlike previous models that used static thresholds, this system employs a dynamic threshold that adjusts in real time, depending on environmental conditions.

To evaluate the approach, the team conducted extensive training using the iGibson simulation environment and tested their model against four baseline methods. In challenging indoor scenarios, the new model outperformed others by a wide margin, achieving a 49% success rate compared to just 33% for conventional SLAM-based navigation. It also showed significantly lower localization error and better adaptability in new environments. Notably, the model consistently chose longer but safer routes -- demonstrating the value of prioritizing localization robustness over shortest-path efficiency.

"Our aim wasn't just to teach the robot to move -- it was to teach it to think about how well it knows where it is," said Dr. Ze Ji, the study's senior author. "Navigation isn't only about avoiding walls; it's about maintaining confidence in your position every step of the way. By integrating perception and planning, our model enables smarter, safer movement in uncertain spaces. This could pave the way for more autonomous robots that can handle the complexities of the real world without constant human oversight."

The implications of this work extend across indoor robotics -- from service robots in hospitals and homes to warehouse automation systems. In environments where GPS doesn't work and visual conditions vary, being able to assess and respond to localization reliability is crucial. This method equips robots with the awareness to adjust their strategies based on how well they can see and understand their surroundings.

Looking forward, the team plans to test their model on real robots and in dynamic scenes with pedestrians. With further development, the approach could become a key building block for trustworthy, mapless navigation in real-world human environments.

Yan Gao thanks the Chinese Scholarship Council (CSC) for providing the living stipend for his Ph.D. programme (No. 202008230171).

About IET Cyber-Systems and Robotics

IET Cyber-Systems and Robotics is a fully open access journal co-published by the Institution of Engineering and Technology (IET) and Zhejiang University Press. We publish novel research and survey articles in the broad areas of cyber-systems and robotics, with an emphasis on artificial intelligent systems enabled by advanced electronics and modern information technologies.

Researchers from Cardiff University and Hohai University have developed a new AI model that enables robots to navigate complex indoor environments without relying on pre-existing maps, significantly improving their ability to stay localized and avoid getting lost.

Revolutionizing Robot Navigation with AI

Researchers from Cardiff University and Hohai University have developed an AI-powered navigation system that lets robots move through complex indoor environments without relying on pre-existing maps. The approach, detailed in a study published in IET Cyber-Systems and Robotics in July 2025, trains the robot to weigh how well it can localize when deciding where to move [1].

The Challenge of Mapless Navigation

Traditional robot navigation methods often struggle in indoor or unfamiliar environments where GPS is unavailable and visual conditions are challenging. Visual simultaneous localization and mapping (SLAM) systems, commonly used as a fallback, can fail in scenes lacking distinct textures or during sudden movements, leading to severe navigational errors [2].

A New Approach: Localization-Aware Navigation

The research team's solution employs a deep reinforcement learning (DRL) model that integrates localization quality into every navigation decision. Key features of this approach include:

- A compact state representation reflecting the spatial distribution of visual map points, partitioned into 24 angular regions around the robot.

- A new reward function based on Relative Pose Error (RPE), providing instant feedback on the robot's positional understanding.

- A dynamic threshold that adjusts in real time based on environmental conditions, replacing the static thresholds used by earlier models.
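
To make the three features above more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the authors' implementation: the 24-sector count comes from the article, but the function and class names, the 2D simplification, the translation-only form of RPE, and the running-mean rule for the dynamic threshold are all hypothetical choices made for this example.

```python
import math
from collections import deque

NUM_SECTORS = 24  # the article's figure: 24 angular regions around the robot


def sector_counts(map_points, robot_xy, robot_yaw, num_sectors=NUM_SECTORS):
    """Count 2D map points in each angular sector, measured from the robot's heading."""
    counts = [0] * num_sectors
    width = 2.0 * math.pi / num_sectors
    for px, py in map_points:
        bearing = math.atan2(py - robot_xy[1], px - robot_xy[0]) - robot_yaw
        bearing %= 2.0 * math.pi  # wrap into [0, 2*pi)
        # Clamp guards against a float result that rounds up to exactly 2*pi.
        counts[min(int(bearing / width), num_sectors - 1)] += 1
    return counts


def translational_rpe(est_prev, est_curr, gt_prev, gt_curr):
    """Simplified RPE: gap between estimated and true displacement over one step.

    Assumes both trajectories are already in the same frame and ignores rotation.
    """
    dx = (est_curr[0] - est_prev[0]) - (gt_curr[0] - gt_prev[0])
    dy = (est_curr[1] - est_prev[1]) - (gt_curr[1] - gt_prev[1])
    return math.hypot(dx, dy)


class DynamicThresholdReward:
    """Hypothetical reward: penalize steps whose RPE exceeds a running-mean threshold."""

    def __init__(self, window=50, bonus=0.1, penalty=-1.0):
        self.history = deque(maxlen=window)  # recent RPE values
        self.bonus = bonus
        self.penalty = penalty

    def __call__(self, rpe):
        # The threshold tracks recent conditions; with no history, the first step passes.
        threshold = sum(self.history) / len(self.history) if self.history else rpe
        self.history.append(rpe)
        return self.bonus if rpe <= threshold else self.penalty
```

In this sketch, sectors with few map points would mark directions where visual localization is more likely to degrade, and the reward object would be called once per step with that step's RPE. A full RPE also compares relative rotation, and the study's actual threshold rule may differ from a running mean.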

Impressive Performance in Simulated Tests

The model was trained in the iGibson simulation environment and compared against four baseline methods:

- Achieved a 49% success rate in challenging indoor scenarios, compared to 33% for conventional SLAM-based navigation.

- Demonstrated lower localization error and better adaptability in new environments.

- Consistently chose longer but safer routes, prioritizing localization robustness over shortest-path efficiency.

Dr. Ze Ji, the study's senior author, emphasized the importance of this approach: "Our aim wasn't just to teach the robot to move -- it was to teach it to think about how well it knows where it is. Navigation isn't only about avoiding walls; it's about maintaining confidence in your position every step of the way" [1].

Wide-Ranging Implications

The implications of this research extend across various fields of indoor robotics:

- Service robots in hospitals and homes

- Warehouse automation systems

- Any environment where GPS is unavailable and visual conditions vary

This method equips robots with the awareness to adjust their strategies based on how well they can perceive and understand their surroundings, a crucial ability in real-world applications [2].

Future Directions

Looking ahead, the research team plans to:

- Test their model on real robots in physical environments.

- Explore navigation in dynamic scenes with pedestrians.

- Further develop the approach as a key building block for trustworthy, mapless navigation in real-world human environments.

This breakthrough in AI-powered navigation brings us one step closer to truly autonomous robots capable of handling the complexities of the real world without constant human oversight, potentially revolutionizing fields from healthcare to logistics.
