AI Tool Developed to Enhance Medical Study Reporting

2 Sources

[1]

AI helps scientists correct mistakes in medical studies

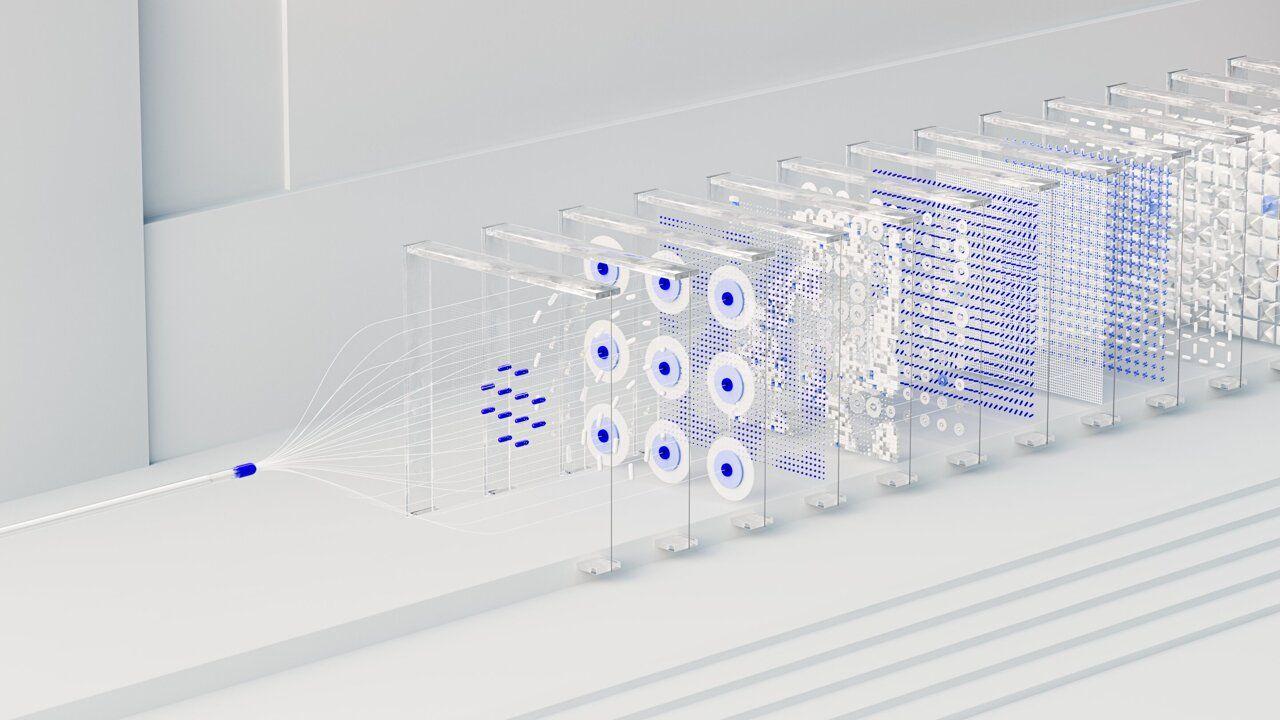

Randomized, controlled clinical trials are crucial for telling whether a new treatment is safe and effective. But often scientists don't fully report the details of their trials in a way that allows other researchers to gauge how well they designed and conducted those studies. A team from the University of Illinois Urbana-Champaign used PSC's Bridges-2 system to train artificial intelligence (AI) tools to spot when a given research report is missing steps. Their goal is to produce an open-source AI tool that authors and journals can use to catch these mistakes and better plan, conduct, and report the results of clinical trials.

When it comes to showing that new medical treatments are safe and effective, the best possible evidence comes from randomized, controlled trials. In their purest form, that first means assigning patients randomly either to a group that receives the experimental treatment or to a control group that doesn't. The idea is that, if you assign patients without such randomization, you might assign sicker patients to one group and the comparison won't be fair. Another important measure of quality is that the scientists conducting a trial lay out their goals and their definition of success ahead of time, rather than fishing for "good results" that they weren't looking for.

Sometimes scientists do the right thing but don't record it correctly in their written reports. Sometimes, incomplete information in reports is a red flag that something important was missed. In either case, the sheer volume of clinical trials reported every year is far more than humans can assess for missing steps.

"Clinical trials are considered the best type of evidence for clinical care. If a drug is going to be used for a disease ... it needs to be shown that it's safe and it's effective ... But there are a lot of problems with the publications of clinical trials. They often don't have enough details. They're not transparent about what exactly has been done and how, so we have trouble assessing how rigorous their evidence is," says Halil Kilicoglu.

Kilicoglu, an associate professor of information sciences at the University of Illinois Urbana-Champaign, wanted to know whether AIs could be trained to check scientific papers for the critical components of a proper randomized, controlled trial, and to flag those that fall short. His team's tool for the task was PSC's flagship Bridges-2 supercomputer. They obtained time on Bridges-2 through the NSF's ACCESS program, in which PSC is a leading member.

How PSC Helped

As a starting point, the scientists turned to the CONSORT 2010 Statement and the SPIRIT 2013 Statement. These reporting guidelines, put together by leading scientists in the field, lay out 83 recommended items necessary for a proper trial.

To test different ways of getting AIs to grade scientific papers for SPIRIT/CONSORT adherence, the Illinois team used a type of AI called natural language processing (NLP). They tested several AIs of this type. Bridges-2 fit the bill for the work partly because of its ability to handle the massive data involved in studying 200 articles describing clinical trials, which the team identified in the medical literature published between 2011 and 2022. The system also offers powerful graphics processing units (GPUs), which are used to train AI models based on a neural network architecture called the Transformer. This training enables the AIs to distinguish between good and poor reporting practices.

The Illinois team randomly selected a portion of the articles as training data. In the training data, the correct answers were labeled. This allowed the model to learn which patterns in the text were associated with correct responses. The model then adjusted its internal connections, strengthening those that led to correct predictions and weakening those that didn't. As the training progressed, the model's performance improved. Once further training no longer produced improvements, the researchers tested the AI on the remaining articles.

"We are developing deep learning models. And these require GPUs, graphical processing units. And you know, they are ... expensive to maintain ... When you sign up for Bridges you get ... the GPUs, and that's useful. But also, all the software that you need is generally installed. And mostly my students are doing this work, and ... it's easy to get [them] going on [Bridges-2]," says Kilicoglu.

Kilicoglu's group graded their AI results using a measurement called F₁. This is a kind of average between the AI's ability to identify missing checklist items in a given paper and its ability to avoid mistakenly flagging a paper that was following proper procedure. A perfect F₁ score is 1. The worst score is 0. Initial results of the refined AI were encouraging. The best of the NLP models had F₁ scores of 0.742 at the level of individual sentences and 0.865 at the level of entire articles. The scientists reported their results in the journal Scientific Data in February 2025.

Kilicoglu and his teammates are encouraged by their results but feel that they can be improved. One way they plan to do this is by including more data, increasing the number of papers that they use to train and test the AIs. They're also looking to use additional tools to refine the AI learning process, including distillation. In that process, a large AI developed on a supercomputer teaches a smaller AI, which can run on a personal computer, to identify SPIRIT/CONSORT adherence.

The latter step will be important for the team's eventual goal, which is to provide journals and scientists with these AI tools at no cost. Using such a tool, scientists can feed their draft papers into the AI and immediately learn when they've forgotten a step. Journals can use it as part of the article review process, sending pieces back to their authors for correction when a checklist item is missing. Ultimately, the Illinois scientists' goal is to help the medical research field improve its performance for the benefit of patients.
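The article describes F₁ only informally. Under the standard definition, F₁ is the harmonic mean of precision (how many flagged items were truly flagged correctly) and recall (how many of the true items were found). The sketch below shows that arithmetic; the function name and the counts are hypothetical and are not taken from the team's evaluation.

```python
def f1_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """Harmonic mean of precision and recall; 0.0 when nothing is correctly found."""
    if true_positives == 0:
        return 0.0
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / (true_positives + false_negatives)
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts, chosen only to illustrate the calculation.
score = f1_score(true_positives=80, false_positives=20, false_negatives=30)
print(round(score, 3))  # 0.762
```

Because the harmonic mean is pulled toward the lower of the two values, a model cannot reach a high F₁ by flagging everything or by flagging almost nothing.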

[2]

AI Helps Scientists Correct Mistakes in Medical Studies | Newswise

Newswise -- Randomized, controlled clinical trials are crucial for telling whether a new treatment is safe and effective. But often scientists don't fully report the details of their trials in a way that allows other researchers to gauge how well they designed and conducted those studies. A team from the University of Illinois Urbana-Champaign used PSC's NSF-funded Bridges-2 system to train artificial intelligence (AI) tools to spot when a given research report is missing steps. Their goal is to produce an open-source AI tool that authors and journals can use to catch these mistakes and better plan, conduct, and report the results of clinical trials.

When it comes to showing that new medical treatments are safe and effective, the best possible evidence comes from randomized, controlled trials. In their purest form, that first means assigning patients randomly either to a group that receives the experimental treatment or to a control group that doesn't. The idea is that, if you assign patients without such randomization, you might assign sicker patients to one group and the comparison won't be fair. Another important measure of quality is that the scientists conducting a trial lay out their goals and their definition of success ahead of time, rather than fishing for "good results" that they weren't looking for.

Sometimes scientists do the right thing but don't record it correctly in their written reports. Sometimes, incomplete information in reports is a red flag that something important was missed. In either case, the sheer volume of clinical trials reported every year is far more than humans can assess for missing steps.

Halil Kilicoglu, an associate professor of information sciences at the University of Illinois Urbana-Champaign, wanted to know whether AIs could be trained to check scientific papers for the critical components of a proper randomized, controlled trial, and to flag those that fall short. His team's tool for the task was PSC's flagship Bridges-2 supercomputer. They obtained time on Bridges-2 through the NSF's ACCESS program, in which PSC is a leading member.

As a starting point, the scientists turned to the CONSORT 2010 Statement and the SPIRIT 2013 Statement. These reporting guidelines, put together by leading scientists in the field, lay out 83 recommended items necessary for a proper trial.

To test different ways of getting AIs to grade scientific papers for SPIRIT/CONSORT adherence, the Illinois team used a type of AI called natural language processing (NLP). They tested several AIs of this type. Bridges-2 fit the bill for the work partly because of its ability to handle the massive data involved in studying 200 articles describing clinical trials, which the team identified in the medical literature published between 2011 and 2022. The system also offers powerful graphics processing units (GPUs), which are used to train AI models based on a neural network architecture called the Transformer. This training enables the AIs to distinguish between good and poor reporting practices.

The Illinois team randomly selected a portion of the articles as training data. In the training data, the correct answers were labeled. This allowed the model to learn which patterns in the text were associated with correct responses. The model then adjusted its internal connections, strengthening those that led to correct predictions and weakening those that didn't. As the training progressed, the model's performance improved. Once further training no longer produced improvements, the researchers tested the AI on the remaining articles.

Kilicoglu's group graded their AI results using a measurement called F₁. This is a kind of average between the AI's ability to identify missing checklist items in a given paper and its ability to avoid mistakenly flagging a paper that was following proper procedure. A perfect F₁ score is 1. The worst score is 0. Initial results of the refined AI were encouraging. The best of the NLP models had F₁ scores of 0.742 at the level of individual sentences and 0.865 at the level of entire articles. The scientists reported their results in the journal Scientific Data in February 2025.

Kilicoglu and his teammates are encouraged by their results but feel that they can be improved. One way they plan to do this is by including more data, increasing the number of papers that they use to train and test the AIs. They're also looking to use additional tools to refine the AI learning process, including distillation. In that process, a large AI developed on a supercomputer teaches a smaller AI, which can run on a personal computer, to identify SPIRIT/CONSORT adherence.

The latter step will be important for the team's eventual goal, which is to provide journals and scientists with these AI tools at no cost. Using such a tool, scientists can feed their draft papers into the AI and immediately learn when they've forgotten a step. Journals can use it as part of the article review process, sending pieces back to their authors for correction when a checklist item is missing. Ultimately, the Illinois scientists' goal is to help the medical research field improve its performance for the benefit of patients.
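The source does not say which form of distillation the team plans to use. One common formulation, assumed here purely for illustration, trains the small "student" model to match the large "teacher" model's temperature-softened output probabilities by minimizing a KL divergence. The function names, logits, and temperature below are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) computed on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical scores for one sentence over three made-up checklist labels.
teacher = [4.0, 1.0, 0.5]
student = [2.5, 1.5, 0.2]
print(distillation_loss(teacher, student) > 0)           # True: the student still differs
print(abs(distillation_loss(teacher, teacher)) < 1e-12)  # True: identical outputs give zero loss
```

In practice this term is usually combined with the ordinary loss on labeled data, so the student learns from both the teacher's outputs and the human annotations.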

Researchers from the University of Illinois Urbana-Champaign have created an AI tool to identify missing steps in medical research reports, aiming to improve the quality and transparency of clinical trial reporting.

The Challenge of Incomplete Clinical Trial Reporting

Randomized, controlled clinical trials are the gold standard for evaluating new medical treatments. However, scientists often fail to fully report crucial details of their studies, making it difficult for other researchers to assess the quality of the trials [1]. With the vast number of clinical trials published annually, manual assessment of these reports has become impractical.

AI to the Rescue: Developing an Automated Checker

Researchers from the University of Illinois Urbana-Champaign, led by Associate Professor Halil Kilicoglu, have developed an AI tool to address this issue [2]. The team used the Bridges-2 supercomputer to train artificial intelligence models capable of identifying missing steps in research reports.

Methodology and Training Process

The AI tool is based on natural language processing (NLP) and uses the CONSORT 2010 and SPIRIT 2013 statements as guidelines, which outline 83 recommended items for proper trial reporting [1]. The researchers used a dataset of 200 clinical trial articles published between 2011 and 2022 to train and test their AI models [2].
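The train-and-test procedure can be pictured as a random split made at the article level, so that no article contributes to both training and evaluation. The sketch below is a minimal illustration: the 80/20 ratio, the seed, and the function name are assumptions, since the sources say only that a randomly chosen portion of the 200 articles was used for training and the remainder for testing.

```python
import random


def split_articles(article_ids, train_fraction=0.8, seed=13):
    """Shuffle article IDs reproducibly and split them into train and test sets."""
    ids = list(article_ids)
    rng = random.Random(seed)  # fixed seed so the split can be reproduced
    rng.shuffle(ids)
    cut = round(len(ids) * train_fraction)
    return ids[:cut], ids[cut:]


# 200 placeholder IDs standing in for the annotated articles.
train_ids, test_ids = split_articles(range(200))
print(len(train_ids), len(test_ids))  # 160 40
```

Splitting by article rather than by sentence keeps sentences from the same paper out of both sets, which would otherwise make test scores look better than they are.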

Promising Results and Future Improvements

Initial results are encouraging, with the best NLP models achieving F₁ scores of 0.742 at the sentence level and 0.865 at the article level [1]. The team plans to improve the AI's performance by enlarging the training dataset and exploring techniques like distillation to create smaller, more accessible versions of the tool [2].

Potential Impact on Medical Research

The ultimate goal is to provide an open-source AI tool that authors and journals can use to catch reporting mistakes and improve the planning, conducting, and reporting of clinical trials [1]. This technology has the potential to significantly enhance the transparency and reliability of medical research, benefiting the scientific community and, ultimately, patient care.

Summarized by Navi
