AI Mirrors Human Biases: ChatGPT Exhibits Similar Decision-Making Flaws, Study Reveals

3 Sources

[1]

AI Thinks Like Us: Flaws, Biases, and All, Study Finds - Neuroscience News

Summary: A new study finds that ChatGPT, while excellent at logic and math, exhibits many of the same cognitive biases as humans when making subjective decisions. In tests for common judgment errors, the AI showed overconfidence, risk aversion, and even the classic gambler's fallacy, though it avoided other typical human mistakes like base-rate neglect. Interestingly, newer versions of the AI were more analytically accurate but also displayed stronger judgment-based biases in some cases. These findings raise concerns about relying on AI for high-stakes decisions, as it may not eliminate human error but instead automate it. Can we really trust AI to make better decisions than humans? A new study says ... not always. Researchers have discovered that OpenAI's ChatGPT, one of the most advanced and popular AI models, makes the same kinds of decision-making mistakes as humans in some situations - showing biases like overconfidence or hot-hand (gambler's) fallacy - yet acting inhuman in others (e.g., not suffering from base-rate neglect or sunk cost fallacies). Published in the INFORMS journal Manufacturing & Service Operations Management, the study reveals that ChatGPT doesn't just crunch numbers - it "thinks" in ways eerily similar to humans, including mental shortcuts and blind spots. These biases remain rather stable across different business situations but may change as AI evolves from one version to the next. AI: A Smart Assistant with Human-Like Flaws The study, "A Manager and an AI Walk into a Bar: Does ChatGPT Make Biased Decisions Like We Do?," put ChatGPT through 18 different bias tests. The results? Why This Matters From job hiring to loan approvals, AI is already shaping major decisions in business and government. But if AI mimics human biases, could it be reinforcing bad decisions instead of fixing them? "As AI learns from human data, it may also think like a human - biases and all," says Yang Chen, lead author and assistant professor at Western University.
"Our research shows when AI is used to make judgment calls, it sometimes employs the same mental shortcuts as people." The study found that ChatGPT tends to: "When a decision has a clear right answer, AI nails it - it is better at finding the right formula than most people are," says Anton Ovchinnikov of Queen's University. "But when judgment is involved, AI may fall into the same cognitive traps as people." So, Can We Trust AI to Make Big Decisions? With governments worldwide working on AI regulations, the study raises an urgent question: Should we rely on AI to make important calls when it can be just as biased as humans? "AI isn't a neutral referee," says Samuel Kirshner of UNSW Business School. "If left unchecked, it might not fix decision-making problems - it could actually make them worse." The researchers say that's why businesses and policymakers need to monitor AI's decisions as closely as they would a human decision-maker. "AI should be treated like an employee who makes important decisions - it needs oversight and ethical guidelines," says Meena Andiappan of McMaster University. "Otherwise, we risk automating flawed thinking instead of improving it." What's Next? The study's authors recommend regular audits of AI-driven decisions and refining AI systems to reduce biases. With AI's influence growing, making sure it improves decision-making - rather than just replicating human flaws - will be key. "The evolution from GPT-3.5 to 4.0 suggests the latest models are becoming more human in some areas, yet less human but more accurate in others," says Tracy Jenkin of Queen's University. "Managers must evaluate how different models perform on their decision-making use cases and regularly re-evaluate to avoid surprises. Some use cases will need significant model refinement." A Manager and an AI Walk into a Bar: Does ChatGPT Make Biased Decisions Like We Do? 
Problem definition: Large language models (LLMs) are being increasingly leveraged in business and consumer decision-making processes. Because LLMs learn from human data and feedback, which can be biased, determining whether LLMs exhibit human-like behavioral decision biases (e.g., base-rate neglect, risk aversion, confirmation bias, etc.) is crucial prior to implementing LLMs into decision-making contexts and workflows. To understand this, we examine 18 common human biases that are important in operations management (OM) using the dominant LLM, ChatGPT. Methodology/results: We perform experiments where GPT-3.5 and GPT-4 act as participants to test these biases using vignettes adapted from the literature ("standard context") and variants reframed in inventory and general OM contexts. In almost half of the experiments, Generative Pre-trained Transformer (GPT) mirrors human biases, diverging from prototypical human responses in the remaining experiments. We also observe that GPT models have a notable level of consistency between the standard and OM-specific experiments as well as across temporal versions of the GPT-3.5 model. Our comparative analysis between GPT-3.5 and GPT-4 reveals a dual-edged progression of GPT's decision making, wherein GPT-4 advances in decision-making accuracy for problems with well-defined mathematical solutions while simultaneously displaying increased behavioral biases for preference-based problems. Managerial implications: First, our results highlight that managers will obtain the greatest benefits from deploying GPT to workflows leveraging established formulas. Second, that GPT displayed a high level of response consistency across the standard, inventory, and non-inventory operational contexts provides optimism that LLMs can offer reliable support even when details of the decision and problem contexts change. 
Third, although selecting between models, like GPT-3.5 and GPT-4, represents a trade-off in cost and performance, our results suggest that managers should invest in higher-performing models, particularly for solving problems with objective solutions. Funding: This work was supported by the Social Sciences and Humanities Research Council of Canada [Grant SSHRC 430-2019-00505]. The authors also gratefully acknowledge the Smith School of Business at Queen's University for providing funding to support Y. Chen's postdoctoral appointment.

[2]

Can AI be trusted to make unbiased decisions? Scientists found out - Earth.com

AI is often praised for being fast, efficient, and objective. But when it comes to non-biased judgment and decision-making, can AI really outperform a human? Not always, says a new study. In fact, AI can fall into the same traps that we do - overconfidence, risk avoidance, and other mental shortcuts - especially in situations that aren't purely logical. A multidisciplinary team of scientists came together and tested ChatGPT, a popular AI developed by OpenAI, and found that while the tool excels at math and logic, it shows human-like biases in subjective scenarios. Human bias comes from the brain's need to make quick decisions. Our brains are wired to take mental shortcuts - called heuristics - to save time and energy. These shortcuts help us make sense of the world fast, but they're not always accurate. For example, if you've had one bad experience with something, your brain might unfairly generalize that feeling to everything similar. It's like your brain goes, "Hey, last time this happened, it sucked - let's avoid it altogether," even if the current situation is totally different. Bias also shows up because of how we're shaped by our environment - family, culture, media, even past experiences. Over time, we build mental filters that influence how we see people, situations, or information. Confirmation bias, for instance, makes us favor info that supports what we already believe and ignore what doesn't. The science behind bias isn't just about flaws - it's about survival, speed, and pattern recognition. The researchers ran ChatGPT through 18 classic tests designed to catch biased thinking. These weren't simple problems of logic - they were tricky decision-making scenarios where people tend to make predictable errors. The results were surprising. In nearly half the tests, ChatGPT made mistakes similar to those made by humans. It showed signs of overconfidence, ambiguity aversion, and even the gambler's fallacy. Yet, in other areas, it behaved differently. 
For example, it didn't fall for the sunk cost fallacy or ignore base rates like people often do. This shows that while AI can mimic human flaws, it doesn't replicate them across the board. Interestingly, the newer GPT-4 model, while more accurate on math-based problems, showed even stronger biases in scenarios requiring judgment. This suggests that as AI improves in one area, it may regress in others. "As AI learns from human data, it may also think like a human - biases and all," said Yang Chen, lead author and assistant professor at Western University. "Our research shows when AI is used to make judgment calls, it sometimes employs the same mental shortcuts as people." One clear takeaway from the study is that ChatGPT tends to play it safe. It often avoids risky options, even when taking a calculated risk could lead to a better outcome. This cautious approach might make AI seem more reliable, but it can also limit potential gains in complex decision-making scenarios. The AI also has a tendency to overestimate its own accuracy. It presents its conclusions with a level of confidence that can be misleading, especially when the correct answer isn't clear-cut. Additionally, ChatGPT tends to seek information that confirms what it already "believes," a phenomenon that is often seen when humans gather evidence, and known as confirmation bias. Another trait observed was the AI's preference for certainty. When faced with ambiguous options, it gravitated toward the choice with more clear-cut information, even if the ambiguous one held greater potential benefits. These behaviors reflect a very human-like discomfort with uncertainty. "When a decision has a clear right answer, AI nails it - it is better at finding the right formula than most people are," says Anton Ovchinnikov of Queen's University. "But when judgment is involved, AI may fall into the same cognitive traps as people." 
These findings come at a time when AI tools are increasingly being used to make unbiased, high-stakes decisions - like hiring employees, approving loans, or setting insurance rates. If these tools bring the same flaws into the mix as humans do, we might be scaling bias rather than removing it. "AI isn't a neutral referee," said Samuel Kirshner of the UNSW Business School. "If left unchecked, it might not fix decision-making problems - it could actually make them worse." That's why the researchers emphasize the need for accountability from biased AI systems. "AI should be treated like an employee who makes important decisions - it needs oversight and ethical guidelines," explained Meena Andiappan of McMaster University. "Otherwise, we risk automating flawed thinking instead of improving it." As AI evolves, so should our approach to using it. That means not only designing better models but also setting up systems to check regularly how well they're working. "The evolution from GPT-3.5 to 4.0 suggests the latest models are becoming more human in some areas, yet less human but more accurate in others," commented Tracy Jenkin of Queen's University. "Managers must evaluate how different models perform on their decision-making use cases and regularly re-evaluate to avoid surprises. Some use cases will need significant model refinement." The message is clear: AI is powerful, but it's not perfect. And if we want it to truly help us make better decisions, we'll need to keep a close eye on how it thinks. The full study was published in the journal Manufacturing & Service Operations Management.

[3]

AI thinks like us -- flaws and all: Study finds ChatGPT mirrors human decision biases in half the tests

by Ashley Smith, Institute for Operations Research and the Management Sciences Can we really trust AI to make better decisions than humans? A new study says ... not always. Researchers have discovered that OpenAI's ChatGPT, one of the most advanced and popular AI models, makes the same kinds of decision-making mistakes as humans in some situations -- showing biases like overconfidence or hot-hand (gambler's) fallacy -- yet acting inhuman in others (e.g., not suffering from base-rate neglect or sunk cost fallacies). Published in the Manufacturing & Service Operations Management journal, the study reveals that ChatGPT doesn't just crunch numbers -- it "thinks" in ways eerily similar to humans, including mental shortcuts and blind spots. These biases remain rather stable across different business situations but may change as AI evolves from one version to the next. AI: A smart assistant with human-like flaws The study, "A Manager and an AI Walk into a Bar: Does ChatGPT Make Biased Decisions Like We Do?," put ChatGPT through 18 different bias tests. The results? Why this matters From job hiring to loan approvals, AI is already shaping major decisions in business and government. But if AI mimics human biases, could it be reinforcing bad decisions instead of fixing them? "As AI learns from human data, it may also think like a human -- biases and all," says Yang Chen, lead author and assistant professor at Western University. "Our research shows when AI is used to make judgment calls, it sometimes employs the same mental shortcuts as people." The study found that ChatGPT tends to: "When a decision has a clear right answer, AI nails it -- it is better at finding the right formula than most people are," says Anton Ovchinnikov of Queen's University. "But when judgment is involved, AI may fall into the same cognitive traps as people." So, can we trust AI to make big decisions?
With governments worldwide working on AI regulations, the study raises an urgent question: Should we rely on AI to make important calls when it can be just as biased as humans? "AI isn't a neutral referee," says Samuel Kirshner of UNSW Business School. "If left unchecked, it might not fix decision-making problems -- it could actually make them worse." The researchers say that's why businesses and policymakers need to monitor AI's decisions as closely as they would a human decision-maker. "AI should be treated like an employee who makes important decisions -- it needs oversight and ethical guidelines," says Meena Andiappan of McMaster University. "Otherwise, we risk automating flawed thinking instead of improving it." What's next? The study's authors recommend regular audits of AI-driven decisions and refining AI systems to reduce biases. With AI's influence growing, making sure it improves decision-making -- rather than just replicating human flaws -- will be key. "The evolution from GPT-3.5 to 4.0 suggests the latest models are becoming more human in some areas, yet less human but more accurate in others," says Tracy Jenkin of Queen's University. "Managers must evaluate how different models perform on their decision-making use cases and regularly re-evaluate to avoid surprises. Some use cases will need significant model refinement."

A new study finds that ChatGPT, while excelling at logic and math, displays many of the same cognitive biases as humans when making subjective decisions, raising concerns about AI's reliability in high-stakes decision-making processes.

AI Exhibits Human-Like Biases in Decision-Making

A groundbreaking study published in the INFORMS journal Manufacturing & Service Operations Management has revealed that ChatGPT, one of the most advanced AI models, exhibits many of the same cognitive biases as humans when making subjective decisions [1]. This finding challenges the notion that AI can consistently make more objective and unbiased decisions than humans.

Study Methodology and Key Findings

Researchers conducted 18 different bias tests on ChatGPT, examining its decision-making processes across various scenarios. The results showed that in nearly half of the tests, ChatGPT made mistakes similar to those made by humans [2].

Key biases observed in ChatGPT include:

- Overconfidence

- Risk aversion

- Gambler's fallacy

- Ambiguity aversion

- Confirmation bias

Interestingly, ChatGPT avoided some typical human errors, such as base-rate neglect and sunk cost fallacies [1].

Evolution of AI Models and Bias

The study also compared GPT-3.5 with GPT-4, revealing an intriguing trend. The newer GPT-4 was more accurate on problems with well-defined mathematical solutions but displayed stronger biases in scenarios requiring subjective judgment [3].

Implications for AI in Decision-Making Processes

These findings raise significant concerns about relying on AI for high-stakes decisions in various fields, including:

- Job hiring

- Loan approvals

- Insurance rates

- Government policy-making

Yang Chen, lead author and assistant professor at Western University, warns, "As AI learns from human data, it may also think like a human - biases and all" [2].

The Need for Oversight and Ethical Guidelines

The researchers emphasize the importance of treating AI like an employee who makes important decisions, requiring oversight and ethical guidelines. Samuel Kirshner of UNSW Business School cautions, "If left unchecked, it might not fix decision-making problems - it could actually make them worse" [3].

Future Directions and Recommendations

To address these concerns, the study's authors recommend:

- Regular audits of AI-driven decisions

- Refining AI systems to reduce biases

- Evaluating different AI models for specific decision-making use cases

- Continuous re-evaluation to avoid unexpected outcomes

Tracy Jenkin of Queen's University notes, "Managers must evaluate how different models perform on their decision-making use cases and regularly re-evaluate to avoid surprises. Some use cases will need significant model refinement" [1].

As AI continues to play an increasingly significant role in decision-making processes across various sectors, understanding and mitigating these biases will be crucial for ensuring that AI truly improves decision-making rather than simply replicating human flaws at scale.

Summarized by

Navi

[1]

Related Stories

AI Chatbots Overestimate Their Abilities, Raising Concerns About Reliability

16 Jul 2025•Science and Research

AI Outperforms Humans in Emotional Intelligence Tests, Opening New Possibilities in Education and Coaching

23 May 2025•Science and Research

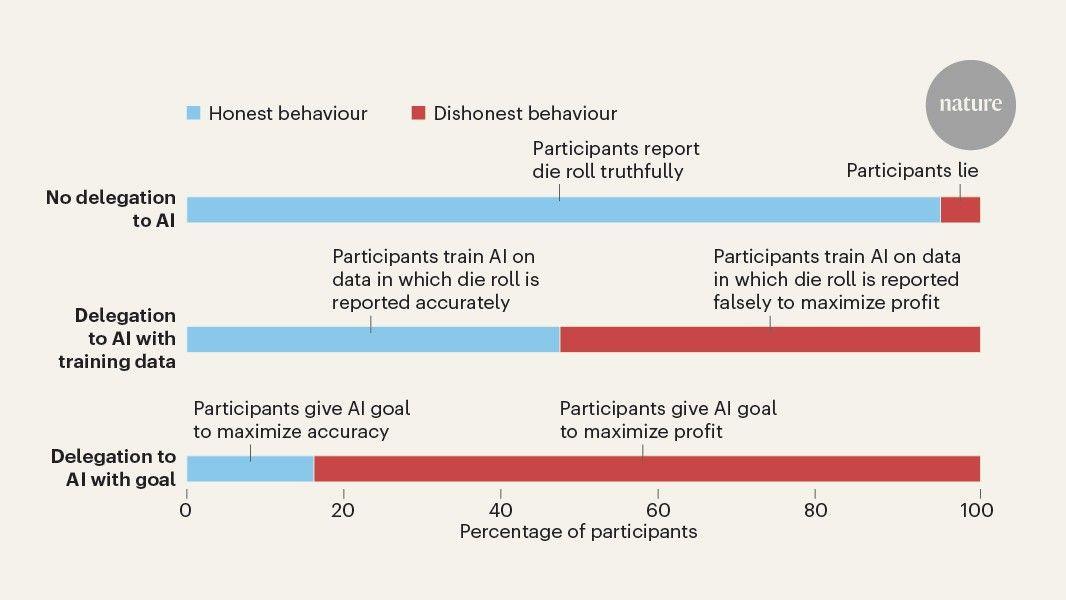

AI Delegation Increases Unethical Behavior, Study Reveals

17 Sept 2025•Science and Research

Recent Highlights

1

ByteDance's Seedance 2.0 AI video generator triggers copyright infringement battle with Hollywood

Policy and Regulation

2

Demis Hassabis predicts AGI in 5-8 years, sees new golden era transforming medicine and science

Technology

3

Nvidia and Meta forge massive chip deal as computing power demands reshape AI infrastructure

Technology