DeepSeek's R2 AI Model Delayed: Huawei Chip Challenges Highlight China's Tech Dilemma

10 Sources

[1]

DeepSeek delays next AI model due to poor performance of Chinese-made chips

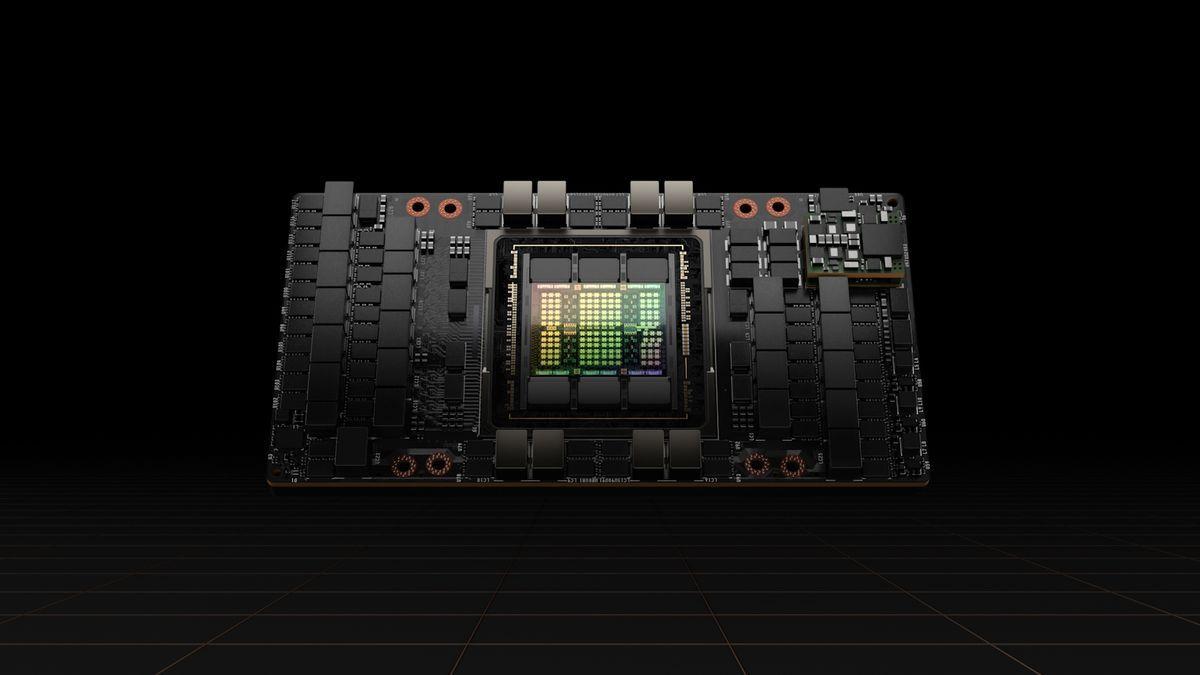

Chinese artificial intelligence company DeepSeek delayed the release of its new model after failing to train it using Huawei's chips, highlighting the limits of Beijing's push to replace US technology. DeepSeek was encouraged by authorities to adopt Huawei's Ascend processor rather than use Nvidia's systems after releasing its R1 model in January, according to three people familiar with the matter. But the Chinese startup encountered persistent technical issues during its R2 training process using Ascend chips, prompting it to use Nvidia chips for training and Huawei's for inference, said the people. The issues were the main reason the model's launch was delayed from May, said a person with knowledge of the situation, causing it to lose ground to rivals. Training involves the model learning from a large dataset, while inference refers to the step of using a trained model to make predictions or generate a response, such as a chatbot query. DeepSeek's difficulties show how Chinese chips still lag behind their US rivals for critical tasks, highlighting the challenges facing China's drive to be technologically self-sufficient. The Financial Times this week reported that Beijing has demanded that Chinese tech companies justify their orders of Nvidia's H20, in a move to encourage them to promote alternatives made by Huawei and Cambricon. Industry insiders have said the Chinese chips suffer from stability issues, slower inter-chip connectivity and inferior software compared with Nvidia's products. Huawei sent a team of engineers to DeepSeek's office to help the company use its AI chip to develop the R2 model, according to two people. Yet despite having the team on site, DeepSeek could not conduct a successful training run on the Ascend chip, said the people. DeepSeek is still working with Huawei to make the model compatible with Ascend for inference, the people said. 
Founder Liang Wenfeng has said internally he is dissatisfied with R2's progress and has been pushing to spend more time to build an advanced model that can sustain the company's lead in the AI field, they said. The R2 launch was also delayed because of longer-than-expected data labelling for its updated model, another person added. Chinese media reports have suggested that the model may be released as soon as in the coming weeks. "Models are commodities that can be easily swapped out," said Ritwik Gupta, an AI researcher at the University of California, Berkeley. "A lot of developers are using Alibaba's Qwen3, which is powerful and flexible." Gupta noted that Qwen3 adopted DeepSeek's core concepts, such as its training algorithm that makes the model capable of reasoning, but made them more efficient to use. Gupta, who tracks Huawei's AI ecosystem, said the company is facing "growing pains" in using Ascend for training, though he expects the Chinese national champion to adapt eventually. "Just because we're not seeing leading models trained on Huawei today doesn't mean it won't happen in the future. It's a matter of time," he said. Nvidia, a chipmaker at the centre of a geopolitical battle between Beijing and Washington, recently agreed to give the US government a cut of its revenues in China in order to resume sales of its H20 chips to the country. "Developers will play a crucial role in building the winning AI ecosystem," said Nvidia about Chinese companies using its chips. "Surrendering entire markets and developers would only hurt American economic and national security." DeepSeek and Huawei did not respond to a request for comment.
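The distinction the report draws between training and inference can be illustrated with a deliberately tiny sketch. Nothing below reflects DeepSeek's or Huawei's actual stack: the one-variable linear model, the `train` and `infer` function names, the learning rate, and the synthetic dataset are all assumptions chosen for illustration.

```python
# Toy illustration only (assumed model and names, not any vendor's code):
# training fits parameters to a dataset; inference reuses the frozen
# parameters to answer a new query.

def train(data, lr=0.01, epochs=5000):
    """Training: repeatedly adjust w and b to reduce mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(params, x):
    """Inference: a single forward pass with fixed parameters, no learning."""
    w, b = params
    return w * x + b


# Synthetic dataset generated from y = 3x + 1 (an assumption for the demo).
dataset = [(float(x), 3.0 * x + 1.0) for x in range(10)]

params = train(dataset)          # expensive, iterative, touches all the data
prediction = infer(params, 20.0) # cheap, one query at a time
print(params, prediction)
```

Training loops over the whole dataset thousands of times, while inference is a single cheap evaluation. That asymmetry is one reason a chip can be usable for the second task before it is dependable for the first.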

[2]

Dodgy Huawei chips nearly sunk DeepSeek's next-gen R2 model

Chinese AI model dev still plans to use homegrown silicon for inferencing

Unhelpful Huawei AI chips are reportedly why Chinese model dev DeepSeek's next-gen LLMs are taking so long. Following the industry-rattling launch of DeepSeek R1 earlier this year, the Chinese AI darling faced pressure from government authorities to train the model's successor on Huawei's homegrown silicon, three unnamed sources have told the Financial Times. But after months of work and the help of an entire team of Huawei engineers, unstable chips, glacial interconnects, and immature software proved insurmountable for DeepSeek, which was apparently unable to complete a single successful training run. The failure, along with challenges with data labeling, ultimately delayed the release of DeepSeek R2 as the company started anew, using Nvidia's H20 GPUs instead. The company has reportedly relegated Huawei's Ascend accelerators to inference duty. The Register reached out to DeepSeek for comment but hadn't heard back at the time of publication.

Huawei's Ascend accelerators, in particular the Ascend 910C that powers the IT giant's CloudMatrix rack-scale compute platform, have garnered considerable attention in recent months. While we don't know exactly which revision of Huawei's chips DeepSeek was playing with, at least on paper, the Ascend 910C should have delivered better performance in training than Nvidia's H20. The Ascend 910C offers more vRAM and more than twice the BF16 floating point performance, while falling slightly behind in memory bandwidth, something that's less of an issue for training than inference.

Despite this, training is a more complex endeavor than one chip and involves distributing some of humankind's most computationally intensive workloads across tens of thousands of chips. If any one component fails, you have to start over from the last checkpoint.
For this reason, it is not uncommon for newcomers to the AI chip space to focus on inference, where the blast radius is far smaller while they work out the kinks required to scale the tech up. Huawei is clearly moving in that direction with its CloudMatrix rack systems, which aim to simplify the deployment of large scale training clusters based on its chips. Given how heavily DeepSeek optimized its training stack around Nvidia's hardware, going so far as to train much of the original V3 model on which R1 was based at FP8, a switch to Ascend would have required some heavy retooling. In addition to using a completely different software stack, Huawei's Ascend accelerators don't support FP8 and would have had to rely on more memory intensive 16-bit data types. Even considering the geopolitical statement that training a frontier model on homegrown Chinese silicon made, this strikes us as an odd concession. One possibility is that DeepSeek was specifically trying to use Huawei's Ascend accelerators for the reinforcement learning phase of the model's training, which requires inferencing large quantities of tokens to imbue an existing base model with "reasoning" capabilities. This might explain why the Financial Times article specifically mentions R2 rather than a V4 model. As we mentioned earlier, R1 was based on DeepSeek's earlier V3 model. We don't know yet which model R2 will be based on. In any case, the news comes just days after Bloomberg reported that Chinese authorities had begun discouraging model devs from using Nvidia's H20 accelerators, especially for sensitive government workloads. ®
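The memory cost of falling back from FP8 to 16-bit data types can be put in rough numbers. The sketch below is back-of-the-envelope arithmetic only: the parameter count is a hypothetical round figure, not a reported size for R2, and the function name `weight_memory_gb` is made up for this example. It counts weights alone and ignores gradients, optimizer state, activations, and any higher-precision master copies that mixed-precision training usually keeps.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2, "fp32": 4}


def weight_memory_gb(num_params, dtype):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Hypothetical model size, chosen only to make the arithmetic concrete.
HYPOTHETICAL_PARAMS = 600_000_000_000

fp8_gb = weight_memory_gb(HYPOTHETICAL_PARAMS, "fp8")
bf16_gb = weight_memory_gb(HYPOTHETICAL_PARAMS, "bf16")

print(f"FP8 weights:  {fp8_gb:,.0f} GB")
print(f"BF16 weights: {bf16_gb:,.0f} GB")
print(f"Ratio: {bf16_gb / fp8_gb:.1f}x")
```

Whatever the true model size, storing weights in a 16-bit format takes twice the bytes of an 8-bit one, so the same model needs more accelerator memory, or more accelerators, before any training step runs.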

[3]

From hype to headaches - DeepSeek trips over Huawei chips and watches rivals seize the chance

Alibaba's Qwen3 exploits DeepSeek's delays, incorporating core algorithms while improving efficiency and flexibility

Chinese AI giant DeepSeek has apparently encountered unexpected delays in releasing its latest model, R2, after facing persistent technical difficulties with Huawei's Ascend chips. The company had been encouraged by Chinese authorities to adopt domestic processors instead of relying on Nvidia's H20 systems, which are generally regarded as more mature and reliable. Despite Huawei engineers being on-site to assist, DeepSeek could not complete a successful training run using Ascend chips - and as a result, the company relied on Nvidia hardware for training while using Ascend for inference tasks. The R2 launch, originally scheduled for May 2025, was postponed due to these technical obstacles and longer-than-expected data labeling for the updated training dataset. DeepSeek founder Liang Wenfeng reportedly expressed dissatisfaction with the model's progress, emphasizing the need for additional development time to produce a model capable of maintaining DeepSeek's competitive edge. Meanwhile, rival models such as Alibaba's Qwen3 were able to take advantage of this delay; Qwen3 has incorporated DeepSeek's core training algorithms while improving efficiency and flexibility, showing how rapidly AI ecosystems can evolve even when a single startup struggles. Beijing's broader push for AI self-sufficiency has placed pressure on domestic firms to adopt local hardware. In practice, however, this strategy has revealed gaps in stability, inter-chip connectivity, and software maturity between Huawei chips and Nvidia products. Developers continue to play a crucial role in shaping the success of AI ecosystems - Nvidia has emphasized that maintaining access to Chinese developers is strategically important, warning that restricting technology adoption could harm economic and national security interests.
Chinese AI companies, meanwhile, must balance government pressures with practical realities in developing and deploying LLMs. Despite these setbacks, DeepSeek's R2 model may still be released in the coming weeks. The model is likely to face scrutiny regarding its performance relative to rivals trained on more mature hardware, offering a clear example of the tension between political ambitions, technical capability, and real-world AI deployment.

[4]

DeepSeek R2 model release reportedly held back by faulty Huawei chips - SiliconANGLE

China's leading artificial intelligence startup DeepSeek Ltd., which took the industry by storm earlier this year, was reportedly forced to delay the release of its upcoming R2 model after struggling to train it using chips supplied by Huawei Technologies Co. Ltd. The delay highlights the ongoing struggles China faces as it pushes domestic AI companies to move away from their reliance on U.S. technology, Reuters reported. Three anonymous sources told Reuters that DeepSeek had been encouraged to use Huawei's Ascend-branded graphics processing units, rather than Nvidia Corp.'s hardware, to develop the R2 model. The startup previously rocked the AI industry with the release of its flagship R1 large language model, claiming it trained the algorithm at a cost of just a few million dollars, in contrast to the billions of dollars spent by U.S. AI firms such as OpenAI and Google LLC. Chinese authorities reportedly told DeepSeek to use Huawei's chips in the wake of a decision by U.S. President Donald Trump to prohibit the export of Nvidia's popular H20 GPU to China. The ban came into effect immediately following the April announcement. However, the startup faced numerous and persistent technical issues when trying to train R2 on Huawei's Ascend chips, and ultimately went back to using the Nvidia chips that were available to it. It did, however, continue to use the Ascend chips for inference, the sources said. AI training involves teaching models to learn using large datasets, while inference refers to using already-trained models to power AI applications, such as chatbots and image generators. DeepSeek had originally hoped to launch R2 in May, but it has not yet done so, and is seen to have lost ground to its U.S. rivals, which have since debuted AI models that surpass the performance of R1.
The difficulties experienced by DeepSeek illustrate how China's domestic chipmaking industry still lags behind that of the U.S., hampering the country's efforts to become self-sufficient in technology. It also explains why China was so keen to secure a trade deal with Washington, which included the provision that it be allowed to resume buying Nvidia's H20 chips. Despite being allowed to buy Nvidia's chips again, China has insisted that any local AI developers justify such orders, which will inevitably come at the expense of domestic chipmakers, the Financial Times said in an earlier report this week. The country is still keen to promote the adoption of alternatives from Huawei and Cambricon Co. Ltd. where possible, the report said. Last month, Huawei debuted its most advanced AI server, the CloudMatrix 384 system that's powered by 384 Ascend 910C GPUs, positioning it as an alternative to Nvidia's GB200 NVL72 system. At the time, it said the CloudMatrix 384 surpassed Nvidia's server in terms of pure petaflops performance, while also providing more memory and greater bandwidth, albeit while using significantly more power. But although some western analysts praised the CloudMatrix 384 system, others believed that the Ascend chips remain plagued by stability issues and slower chip-to-chip connectivity than Nvidia's products. Ritwik Gupta, an AI researcher at the University of California, Berkeley, told the Financial Times that the software provided with Huawei's chips is thought to be inferior to Nvidia's. Reuters' sources said Huawei sent a crack team of engineers to try and assist DeepSeek in training the R2 model, yet even with them on site, the company struggled to conduct a successful training run. The engineers did have more success in getting the Ascend chips to power inference, though.
According to Gupta, Huawei appears to be facing "growing pains" in terms of using Ascend for AI training, but he expects the company to solve whatever challenges are holding it back. "Just because we're not seeing leading models trained on Huawei today doesn't mean it won't happen in the future," he said. "It's a matter of time." DeepSeek founder Liang Wenfeng has reportedly told employees that he's disappointed with the progress of R2, and wants to spend more time enhancing the model so it can unseat its American rivals. However, Chinese media reports suggest that R2 may finally make its debut in the coming weeks.

[5]

Huawei pressure blamed for DeepSeek's next-gen AI model delay

TL;DR: DeepSeek's next-generation AI model, R2, faces significant delays due to unstable Huawei Ascend 910C chips and immature software, hindering development and training. The model was originally set for May 2025; the company is now shifting to NVIDIA H20 GPUs as trade restrictions ease, aiming to meet performance standards and resume progress.

DeepSeek rattled the AI industry when it unveiled the R1 model earlier this year, proving that a sophisticated AI model doesn't need an egregious amount of GPU horsepower to compete with already existing AI models. But where is the company's next-generation AI model, R2? According to a report from the Financial Times, the upcoming AI model was derailed when the company was pressured by the Chinese government to use Huawei chips to develop it. Three unnamed sources spoke to the publication and said the upcoming model is struggling in development due to unstable Huawei chips, immature software, and other factors. Notably, these unnamed individuals said the new model wasn't even able to complete a single training run. DeepSeek R2 was initially planned for release in May 2025, but was postponed with no reason provided. It was noted that the R2 model's performance was falling below the standards set by the company, specifically by DeepSeek's CEO Liang Wenfeng. There were also trade restrictions on NVIDIA's AI GPUs, which are now coming down as a new deal has been struck between AI GPU makers and the US government. Reports now state the delay of the R2 model can be traced back to Huawei's Ascend accelerators, specifically the Ascend 910C, and now DeepSeek is opting for NVIDIA's H20 GPUs instead.

[6]

DeepSeek delays R2 model launch over Huawei chip issues

DeepSeek, a prominent Chinese AI company, faced unexpected delays in releasing its R2 model due to persistent technical difficulties encountered with Huawei's Ascend chips. Chinese authorities had encouraged DeepSeek to utilize domestic processors over Nvidia's H20 systems. Despite on-site assistance from Huawei engineers, DeepSeek was unable to complete a successful training run using Ascend chips. Consequently, the company relied on Nvidia hardware for training processes while deploying Ascend chips for inference tasks. This dual-hardware approach underscores the challenges in fully transitioning to domestic AI infrastructure for complex operations. The R2 model's launch, initially scheduled for May 2025, was postponed. This delay stemmed from the unresolved technical obstacles associated with the Ascend chips and extended data labeling requirements for the updated training dataset. DeepSeek founder Liang Wenfeng reportedly articulated dissatisfaction with the model's development progress, indicating the necessity for additional time to ensure the model could maintain DeepSeek's competitive standing. Competitors have leveraged DeepSeek's development setbacks. Alibaba's Qwen3, for instance, has integrated core training algorithms similar to DeepSeek's, while simultaneously enhancing efficiency and flexibility in its own systems. This development highlights the rapid evolution within AI ecosystems and the potential for rivals to capitalize on the difficulties encountered by individual startups. Beijing's broader strategic initiative for AI self-sufficiency has intensified pressure on domestic firms to adopt locally produced hardware. This push aims to reduce reliance on foreign technology, particularly from companies like Nvidia. However, the implementation of this strategy has revealed existing disparities in stability, inter-chip connectivity, and software maturity when comparing Huawei's chips with Nvidia's established products. 
Nvidia has emphasized the strategic importance of maintaining access for Chinese developers, noting that restrictions on technology adoption could adversely affect economic and national security interests. Chinese AI companies are navigating a complex landscape, balancing governmental directives to use domestic hardware with the practical realities of developing and deploying large language models, which often require advanced and reliable processing capabilities. Despite these challenges, DeepSeek's R2 model may still see a release in the coming weeks. The model's eventual performance will likely undergo scrutiny, particularly when evaluated against rivals that have been trained using more mature and established hardware. This situation exemplifies the ongoing tension between national political objectives, available technical capabilities, and the practical demands of real-world AI deployment.

[7]

Seven months after stunning the world, China's DeepSeek AI leans on US technology for critical upgrade

China's Revolutionary DeepSeek Turns to American Hardware for Upgrade

China's much-hyped DeepSeek project, once touted as a breakthrough in homegrown AI independence, has quietly circled back to American hardware after its gamble on Huawei's Ascend chips fell short. Engineers found the Chinese processors too unstable for large-scale training of the company's new R2 model, forcing DeepSeek to rely again on Nvidia GPUs -- the very technology U.S. export rules were meant to keep out of Beijing's reach. The move underscores a hard truth: while China can innovate at the software and algorithmic level, its AI future still hinges on semiconductors designed in California. This reliance not only exposes the fragility of China's self-reliance narrative but also raises pressing questions about how far Washington's export curbs can really go in controlling the global AI race.

When DeepSeek announced it would train its next-generation R2 model on Huawei's Ascend GPUs, the move was hailed in Beijing as proof that China could shed its reliance on American semiconductors. The plan didn't last long. By June 2025, engineers inside DeepSeek privately acknowledged that Ascend chips failed to deliver the consistency required for massive-scale training. Sources familiar with the project told the Financial Times (July 2025) that the Ascend processors suffered from unstable performance, weaker interconnect bandwidth, and a lack of mature software tools -- all critical weaknesses in an era when model training can consume tens of thousands of GPUs simultaneously. The result: Huawei's silicon is still being used, but only for inference workloads (running the trained model), while training has quietly shifted back to Nvidia hardware, the very dependency China's AI sector was under political pressure to escape. Despite U.S. export controls, Nvidia's grip remains unshaken.
Even China's most sophisticated AI companies struggle to replicate the CUDA software ecosystem that Nvidia has spent nearly two decades refining. Training a model like DeepSeek's R2 -- rumored to involve over 700 billion parameters -- is less about raw chip speed and more about orchestration, driver support, and optimization libraries. In practice, Chinese engineers describe Huawei's platform as "running a marathon in sandals while Nvidia wears carbon-fiber spikes." That blunt analogy, shared by one engineer who worked on both setups, captures the gap. Hardware is only half the battle; software maturity is the other half, and Nvidia still leads. Here's the thorny part. U.S. rules technically bar Nvidia from selling its most advanced chips, such as the A100 and H100, directly to China. Yet congressional investigators revealed in April 2025 that DeepSeek somehow amassed tens of thousands of Nvidia GPUs through shell distributors in Singapore and the Middle East. Lawmakers on Capitol Hill now accuse DeepSeek of sidestepping export rules, with several Republicans calling for tighter scrutiny. Nvidia, for its part, points to its H20 line of "downgraded" GPUs -- designed to meet U.S. restrictions -- which it can legally export. But multiple industry insiders note that DeepSeek's scale suggests it also tapped into backchannels to acquire restricted units. This dual reality highlights Washington's dilemma: export bans slow China, but they don't stop it. DeepSeek's return to Nvidia exposes the contradiction at the heart of Beijing's technology push. On one hand, China is pouring billions into domestic chip design and production. On the other, its most visible AI breakthrough still leans on American silicon. For policymakers in Washington, this is both reassurance and alarm. Reassurance, because it confirms that U.S. technology remains indispensable. Alarm, because despite layers of export controls, Chinese companies are still finding ways to secure the hardware. 
For Chinese AI startups, the lesson is more pragmatic: innovation at the algorithmic level -- as DeepSeek demonstrated with its mixture-of-experts architecture that slashed training costs -- can stretch limited resources, but it cannot fully replace cutting-edge chips. The DeepSeek saga isn't just about one company's hardware choices. It underscores a larger geopolitical reality: the global AI race hinges not only on data and talent but on who controls the chip supply chain. As one Beijing-based venture capitalist put it: "Every AI startup pitch deck begins with the same line -- how many Nvidia GPUs they can get. Nothing else matters until that question is answered." China's DeepSeek may represent cutting-edge algorithmic ingenuity, but its reliance on American hardware reveals the fragile foundation of the country's AI push. For now, the future of Chinese AI still runs, quite literally, on Nvidia. The critical question going forward: can Beijing close the gap before Washington tightens controls further? Or will the world's most ambitious AI firms continue to operate in a paradox -- building revolutionary software on hardware they cannot officially buy? Either way, the story of DeepSeek's pivot back to U.S. chips is a reminder that in the AI arms race, semiconductors remain the real battlefield.

Q1. Why is China's DeepSeek using American Nvidia hardware instead of Huawei chips?
Because Huawei's Ascend chips were unstable for large-scale AI training, forcing DeepSeek to return to Nvidia GPUs.

Q2. What does DeepSeek's reliance on Nvidia mean for China's AI future?
It shows China's AI breakthroughs still depend heavily on U.S. semiconductors despite domestic innovation efforts.
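The article's point that instability becomes critical when training spans tens of thousands of chips follows from simple probability. The sketch below assumes each chip fails independently with the same probability over some window. Both the independence assumption and the per-chip failure rate are hypothetical values picked for illustration, not measured figures for Ascend or Nvidia hardware, and `prob_any_failure` is a name invented for this example.

```python
def prob_any_failure(num_chips, p_fail_per_chip):
    """P(at least one of N chips fails) = 1 - (1 - p)^N, assuming independence."""
    return 1.0 - (1.0 - p_fail_per_chip) ** num_chips


# Hypothetical chance that a single chip faults during one day of training.
P_FAIL = 1e-4

for n in (8, 1_000, 10_000):
    risk = prob_any_failure(n, P_FAIL)
    print(f"{n:>6} chips: {risk:.1%} chance of at least one fault per day")

cluster_risk = prob_any_failure(10_000, P_FAIL)
```

Under these assumed numbers a single 8-chip server almost never sees a fault on a given day, while a 10,000-chip job is more likely than not to be interrupted. Small per-chip reliability differences therefore compound into large differences in whether a long training run can finish.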

[8]

Has Chinese AI sensation DeepSeek lost its mojo to rivals? Delays in R2 launch raise big questions amid fierce rivalry

DeepSeek stalls on Huawei chips after months of hype, exposing the fragile state of China's push for AI self-reliance. The company's attempt to train its R2 model on Huawei's Ascend processors collapsed under technical problems, from unstable chip performance to weak software support. What was meant to showcase independence from U.S. Nvidia hardware instead caused delays and gave rivals like Alibaba's Qwen3 and Baidu's Ernie 5.0 space to surge ahead. What began as a bold showcase of China's AI independence has instead delayed DeepSeek's roadmap and handed rivals like Alibaba's Qwen3 and Baidu's Ernie 5.0 a golden opportunity to seize market share. The stumble underscores a larger truth: despite political pressure to rely on homegrown chips, China's semiconductor ecosystem is not yet ready to carry the weight of its AI ambitions. When DeepSeek burst onto the scene in January 2025 with its R1 chatbot, the mood in Beijing was electric. Within 72 hours of launch, the app topped Apple's App Store charts in both China and the U.S. Analysts hailed it as a "Sputnik moment" for AI, suggesting China had finally built a model powerful enough to rival OpenAI and Anthropic. Unlike its Western counterparts, R1 didn't rely on billion-dollar server farms or the latest Nvidia GPUs. It was lean, resource-efficient, and open source. That formula not only made it cheaper to run but also gave China's tech giants -- from Tencent to Baidu -- a shot at scaling AI without bowing to U.S. chip restrictions. Flush with political backing, DeepSeek was nudged to do something bold: train its next-generation R2 model entirely on domestic hardware. Huawei's Ascend 910B chips were the chosen workhorse, a gamble that played directly into China's self-reliance agenda. Officials wanted proof that Chinese AI could thrive without Nvidia's A100 and H100 GPUs, which remain blacklisted under U.S. export controls. Huawei promised its chips could deliver comparable performance -- at least on paper. 
By May 2025, engineers inside DeepSeek's Hangzhou headquarters were running full-scale training on Ascend clusters. The results were dismal. According to two engineers, interconnect bandwidth between the chips collapsed under heavy workloads. Training jobs that would normally converge in three weeks on Nvidia hardware stretched beyond two months -- before failing altogether. The software ecosystem proved another weak link. Huawei's CANN toolkit, meant to rival Nvidia's CUDA, lacked maturity. Debugging tools crashed mid-run, leaving teams to restart jobs from scratch. "It felt like trying to fly a rocket with half the control panel missing," one engineer said. Even after Huawei dispatched its own specialists to assist, the problems persisted. By July, DeepSeek quietly shifted strategy: Nvidia GPUs would resume training duties, while Ascend hardware would be relegated to inference tasks, where speed and precision matter less. The immediate casualty was timing. DeepSeek's R2 launch, once slated for May, has now slipped indefinitely. That vacuum has allowed competitors to accelerate. Alibaba's Qwen3, launched in June, now dominates the Chinese enterprise market with tighter integration into DingTalk and AliCloud. Baidu's Ernie 5.0 has rolled out multimodal features that DeepSeek had promised but couldn't deliver. And in the U.S., OpenAI's GPT-5 continues to pull ahead with enterprise partnerships. According to research firm Bernstein, DeepSeek's market share in China's enterprise AI sector slipped from 28% in March to 19% by August 2025, while Alibaba's climbed above 30%. Investors who once treated DeepSeek as Beijing's flagship AI bet are now hedging across multiple platforms. DeepSeek's struggles are more than a corporate hiccup. They expose the technical gulf that still exists between China's semiconductor industry and Nvidia's dominance. For Beijing, this raises uncomfortable questions. 
Can China truly achieve technological sovereignty in AI without solving its chip bottleneck? And how long can companies like DeepSeek absorb the cost of repeated failures before investors demand results? DeepSeek insists it is not abandoning domestic hardware. In an August statement, the company said it would "continue close collaboration with Huawei and other domestic partners to enhance inference capabilities at scale." Yet insiders admit the R2 launch is unlikely before early 2026, a delay that could prove fatal in a field moving at breakneck speed. Meanwhile, rivals are pressing their advantage. Alibaba and Baidu are already pitching overseas clients. If they succeed, DeepSeek risks becoming what it swore it would never be: a one-hit wonder, remembered for the shock of its debut rather than the strength of its legacy.

Q1. Why did DeepSeek stall on Huawei chips?
DeepSeek faced repeated failures training its R2 model due to unstable Ascend chips and poor software support.

Q2. Which rivals are gaining as DeepSeek struggles?
Alibaba's Qwen3 and Baidu's Ernie 5.0 are quickly capturing market share during DeepSeek's delay.
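The cost of restarting jobs after a mid-run crash depends on how recently training state was saved. The following is a minimal sketch of periodic checkpointing and resume, not a description of DeepSeek's or Huawei's tooling: the `run_training` function, the JSON checkpoint file, the step counts, and the simulated fault are all assumptions, and the "update" is a stand-in for a real optimizer step.

```python
import json
import os
import tempfile


def run_training(total_steps, ckpt_path, checkpoint_every, crash_at=None):
    """Run a toy training loop that resumes from ckpt_path if it exists."""
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)          # resume from the last saved step
    else:
        state = {"step": 0, "value": 0.0} # fresh start

    while state["step"] < total_steps:
        if crash_at is not None and state["step"] == crash_at:
            raise RuntimeError("simulated hardware fault")
        state["value"] += 1.0             # stand-in for one optimizer update
        state["step"] += 1
        if state["step"] % checkpoint_every == 0:
            # Write to a temp file, then rename, so a crash during the
            # write cannot leave a half-written checkpoint behind.
            tmp_path = ckpt_path + ".tmp"
            with open(tmp_path, "w") as f:
                json.dump(state, f)
            os.replace(tmp_path, ckpt_path)
    return state


with tempfile.TemporaryDirectory() as workdir:
    path = os.path.join(workdir, "ckpt.json")
    try:
        run_training(100, path, checkpoint_every=10, crash_at=57)
    except RuntimeError:
        pass                              # the job died at step 57
    with open(path) as f:
        resumed_from = json.load(f)["step"]
    final = run_training(100, path, checkpoint_every=10)

print(resumed_from, final)
```

In this toy run the fault at step 57 costs only the seven steps since the checkpoint at step 50. Without any checkpoint the job would restart at step 0, which is the situation the engineers quoted above describe.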

[9]

DeepSeek's R2 AI Model Is Reportedly Delayed After Chinese Authorities Encouraged the Firm to Use Huawei's AI Chips; Beijing Is Still in Desperate Need of NVIDIA's Alternatives

Well, relying on Huawei's AI chips didn't go well for DeepSeek, as the AI firm has failed to train the R2 model on Chinese chips and is now opting for NVIDIA instead. The Chinese AI industry has relied heavily on NVIDIA and its AI ecosystem since the start of the hype. When the more recent US export controls came in, Beijing decided to speed up the development of domestic AI chip alternatives. This prompted the likes of Huawei and others to present their solutions to the Chinese Big Tech, such as the Ascend 910C AI chip; however, it seems like adopting homegrown alternatives isn't going too well. Financial Times reports that DeepSeek R2 is delayed due to using Chinese AI chips instead of NVIDIA's tech stack. The AI model was initially expected to launch in May. However, Huawei's AI chips struggle with stability issues and slower interconnect technologies. More importantly, there is no replacement for the CUDA tech stack for now, which means that eventually, firms like DeepSeek would need to rely on NVIDIA to train a superior AI model, and this is what is happening for now. Huawei's AI chips are troubling Chinese firms right now, which means that the only other option they have is adopting NVIDIA's H20 AI chips, creating new demand for Team Green in the regional markets. However, apart from just demand, there is another issue for NVIDIA right now: the ongoing investigation into the presence of security backdoors in AI chips flowing into China. Beijing has reportedly advised domestic AI firms not to use Team Green's chips, as they contain mechanisms like location tracking. It would be interesting to see how DeepSeek's R2 AI model, which is expected to debut in a few weeks, turns out. However, the report certainly shows that China's domestic alternatives are way behind in replacing NVIDIA, which means that for now, the local AI firms need to rely on chips from Team Green.

[10]

DeepSeek's Latest AI Model Delayed After Huawei Chips Fail Amid China's Push To Reduce Reliance On US Companies Like Nvidia: Report - NVIDIA (NASDAQ:NVDA), Advanced Micro Devices (NASDAQ:AMD)

The Chinese AI start-up DeepSeek has reportedly postponed the launch of its new model after technical issues with Huawei Technologies' chips forced it to rely on processors from U.S. chipmaker Nvidia Corp.

Huawei Chips Stumble In Critical Training Phase

DeepSeek encountered persistent technical problems while attempting to train its R2 model using Huawei's Ascend processors, reported the Financial Times, citing sources familiar with the situation. The difficulties forced the company to use Nvidia chips for training while relying on Huawei hardware only for inference tasks. The launch, originally set for May, was delayed due to these technical hurdles, causing DeepSeek to fall behind competitors in the AI race, the report said.

Chinese Government's Push For Domestic Chips Faces Limits

The setback underscores the limits of China's drive to reduce dependence on U.S. technology. Beijing has reportedly encouraged companies to favor domestic AI chips and is scrutinizing orders of Nvidia's H20 processors to promote local alternatives. Industry insiders note that Chinese chips still lag in stability, software support and inter-chip connectivity compared with Nvidia. Huawei even dispatched engineers to DeepSeek's offices to assist with training, yet the company could not achieve a successful run with Ascend chips. DeepSeek continues to work with Huawei to make the model compatible for inference, the report added, citing sources.

Revenue, Security And Geopolitics Collide As US-China Tensions Rise

Nvidia and Advanced Micro Devices, Inc. earlier this month agreed to remit 15% of their China chip sales to the U.S. government to secure export licenses. Meanwhile, Chinese authorities have expressed security concerns over Nvidia's H20 chips, warning companies to avoid using them in government projects or sensitive infrastructure.

"Just because we're not seeing leading models trained on Huawei today doesn't mean it won't happen in the future. It's a matter of time," Ritwik Gupta, an AI researcher at the University of California, Berkeley, told the publication.

DeepSeek R1 Model Had Triggered A $600 billion Drop In Nvidia's Value

Founder Liang Wenfeng reportedly pushed for more time to advance R2, which also faced delays due to extensive data labeling for the updated model. Chinese media suggests the launch could occur in the coming weeks. DeepSeek sparked a $600 billion wipeout in Nvidia's market value with the debut of its R1 model in January. At the time, Nvidia acknowledged DeepSeek's R1 AI model, stating that the company's work demonstrates how new models can be developed using test-time scaling, leveraging widely available models and compute that fully comply with export controls.

Disclaimer: This content was partially produced with the help of AI tools and was reviewed and published by Benzinga editors.

Chinese AI company DeepSeek faces setbacks in releasing its R2 model due to issues with Huawei's chips, showcasing the challenges in China's push for technological self-reliance.

DeepSeek's R2 Model Faces Significant Delays

Chinese artificial intelligence company DeepSeek has encountered substantial setbacks in the release of its highly anticipated R2 model. Originally slated for launch in May 2025, the model has been held up by persistent technical issues, primarily related to the use of Huawei's Ascend processors [1].

Government Pressure and Technical Challenges

DeepSeek was reportedly encouraged by Chinese authorities to adopt Huawei's Ascend chips instead of Nvidia's systems for the development of R2. This move aligns with Beijing's broader push for technological self-sufficiency in the AI sector. However, the transition proved problematic, with DeepSeek unable to complete a successful training run using the Ascend chips, despite on-site assistance from a team of Huawei engineers [1].

Reverting to Nvidia for Training

Faced with these challenges, DeepSeek ultimately resorted to using Nvidia chips for the crucial training phase of R2's development, while relegating Huawei's Ascend processors to inference tasks [3]. This decision highlights the current limitations of Chinese-made chips in handling the complex requirements of large-scale AI model training.

Impact on DeepSeek's Market Position

The delay in R2's release has potentially cost DeepSeek ground in the highly competitive AI market. Rival models, such as Alibaba's Qwen3, have seized the opportunity to incorporate DeepSeek's core concepts while improving efficiency and flexibility [3]. This development underscores the rapid evolution of AI ecosystems and the consequences of falling behind in this fast-paced industry.

Broader Implications for China's AI Ambitions

DeepSeek's struggles with Huawei's chips reveal the challenges facing China's drive for technological self-sufficiency in the AI sector. Industry insiders have noted that Chinese chips still lag behind their US counterparts in terms of stability, inter-chip connectivity, and software maturity [1].

The Geopolitical Context

This situation unfolds against a backdrop of ongoing tensions between China and the United States in the tech sector. Recent reports suggest that Beijing has demanded that Chinese tech companies justify their orders of Nvidia's H20 chips, in a move to promote domestic alternatives [1]. Meanwhile, Nvidia has agreed to give the US government a reported 15% of its China chip sales in order to resume sales of its H20 chips to the country [4].

Looking Ahead

Despite these setbacks, experts believe that Huawei and other Chinese chip manufacturers will eventually overcome these "growing pains" [1]. DeepSeek is reportedly still working with Huawei to make the R2 model compatible with Ascend for inference tasks, and Chinese media reports suggest that the model may be released in the coming weeks [5].

As the AI race continues, the industry eagerly awaits the debut of DeepSeek's R2 model and its performance relative to competitors trained on more mature hardware. This episode serves as a clear example of the complex interplay between political ambitions, technical capabilities, and the realities of AI development and deployment in the global market.

Summarized by Navi

Related Stories

DeepSeek Under Scrutiny: Allegations of Military Aid and Export Control Evasion

23 Jun 2025•Technology

Huawei's Ascend 910C Challenges Nvidia's AI Dominance with 60% H100 Inference Performance

05 Feb 2025•Technology

DeepSeek Unveils V3.1: A Leap Forward in AI with Domestic Chip Optimization

20 Aug 2025•Technology
