SpaceX pushes AI data centers into orbit as Musk predicts space will beat Earth in 36 months

6 Sources

[1]

Why the economics of orbital AI are so brutal | TechCrunch

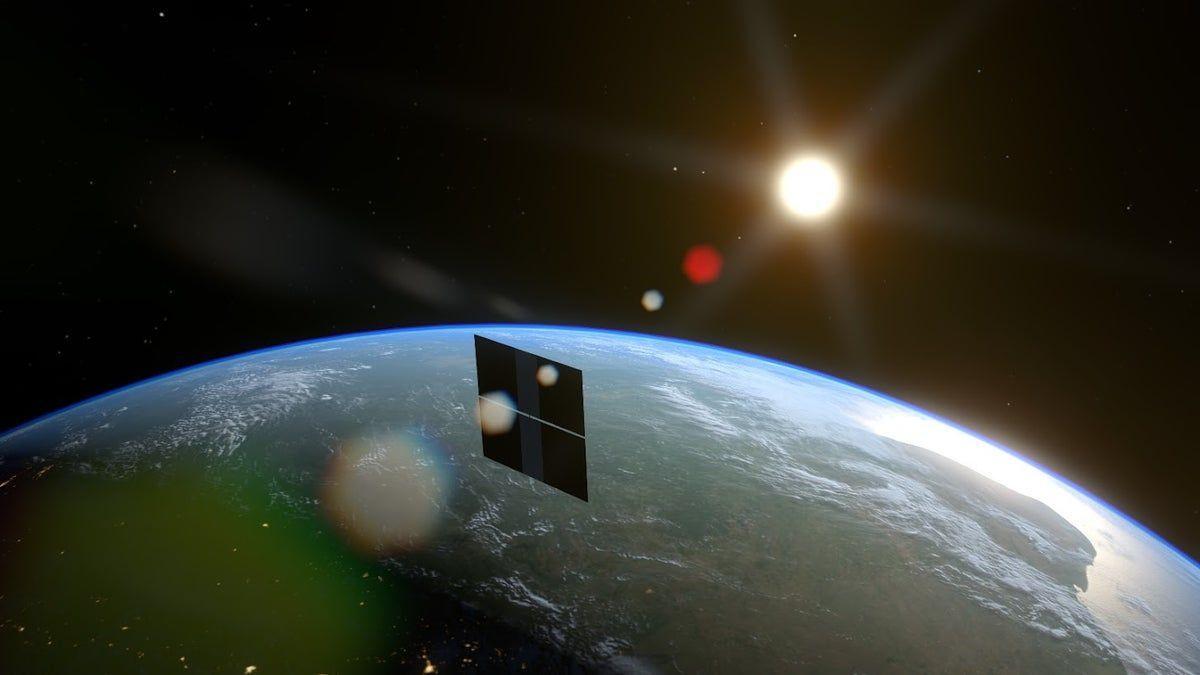

In a sense, this whole thing was inevitable. Elon Musk and his coterie have been talking about AI in space for years -- mainly in the context of Iain Banks' science fiction series about a far-future universe where sentient spaceships roam and control the galaxy. Now, Musk sees an opportunity to realize a version of this vision. His company SpaceX has requested regulatory permission to build solar-powered orbital data centers, distributed across as many as a million satellites, that could shift as much as 100 GW of compute power off the planet. He has reportedly suggested some of his AI satellites will be built on the Moon. "By far the cheapest place to put AI will be space in 36 months or less," Musk said last week on a podcast hosted by Stripe cofounder John Collison. He's not alone. xAI's head of compute has reportedly bet his counterpart at Anthropic that 1% of global compute will be in orbit by 2028. Google (which has a significant ownership stake in SpaceX) has announced a space AI effort called Project Suncatcher, which will launch prototype vehicles in 2027. Starcloud, a start-up that has raised $34 million backed by Google and Andreessen Horowitz, filed its own plans for an 80,000 satellite constellation last week. Even Jeff Bezos has said this is the future. But behind the hype, what will it actually take to get data centers into space? In a first analysis, today's terrestrial data centers remain cheaper than those in orbit. Andrew McCalip, a space engineer, has built a helpful calculator comparing the two models. His baseline results show that a 1 GW orbital data center might cost $42.4B -- almost three times its ground-bound equivalent, thanks to the up-front costs of building the satellites and launching them to orbit. Changing that equation, experts say, will require technology development across several fields, massive capital expenditure, and a lot of work on the supply chain for space-grade components.
It also depends on costs on the ground rising as resources and supply chains are strained by growing demand. The key driver for any space business model is how much it costs to get anything up there. Musk's SpaceX is already pushing down on the cost of getting to orbit, but analysts looking at what it will take to make orbital data centers a reality need even lower prices to close their business case. In other words, while AI data centers may seem to be a story about a new business line ahead of the SpaceX IPO, the plan depends on completing the company's longest-running unfinished project -- Starship. Consider that the reusable Falcon 9 delivers, today, a cost to orbit of roughly $3,600/kg. Making space data centers doable, per Project Suncatcher's white paper, will require prices closer to $200/kg, an 18-fold improvement which it expects to be available in the 2030s. At that price, however, the energy delivered by a Starlink satellite today would be cost competitive with a terrestrial datacenter. The expectation is that SpaceX's next-generation Starship rocket will deliver those improvements -- no other vehicle in development promises equivalent savings. However, that vehicle has yet to become operational or even reach orbit; a third iteration of Starship is expected to make its maiden launch sometime in the months ahead. Even if Starship is completely successful, however, assumptions that it will immediately deliver lower prices to customers may not pass the smell test. Economists at the consultancy Rational Futures make a compelling case that, as with the Falcon 9, SpaceX will not want to charge much less than its best competitor -- otherwise the company is leaving money on the table. If Blue Origin's New Glenn rocket, for example, retails at $70 million, SpaceX won't take on Starship missions for external customers at much less than that, which would leave it above the numbers publicly assumed by space data center builders. 
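
The launch-price gap described above is simple arithmetic, and a short sketch makes it concrete. The two prices come from the article; the 1,000 kg satellite mass is a hypothetical figure chosen only for illustration, and the function names are invented here.

```python
# Launch prices quoted in the article, in USD per kilogram to orbit.
FALCON9_COST_PER_KG = 3_600  # reusable Falcon 9, today
TARGET_COST_PER_KG = 200     # threshold cited from the Project Suncatcher white paper


def improvement_factor(current: float, target: float) -> float:
    """Return how many times cheaper the target price is than the current one."""
    return current / target


def launch_bill(mass_kg: float, cost_per_kg: float) -> float:
    """Return the launch cost in USD for a payload of the given mass."""
    return mass_kg * cost_per_kg


factor = improvement_factor(FALCON9_COST_PER_KG, TARGET_COST_PER_KG)

# Hypothetical satellite mass (an assumption, not a figure from the article).
EXAMPLE_MASS_KG = 1_000
bill_today = launch_bill(EXAMPLE_MASS_KG, FALCON9_COST_PER_KG)
bill_target = launch_bill(EXAMPLE_MASS_KG, TARGET_COST_PER_KG)

print(f"Improvement needed: {factor:.0f}x")
print(f"{EXAMPLE_MASS_KG:,} kg today: ${bill_today:,.0f}; at target: ${bill_target:,.0f}")
```

The sketch only restates the 18-fold gap; it says nothing about what SpaceX would actually charge external customers, which is the point the Rational Futures economists raise.
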
"There are not enough rockets to launch a million satellites yet, so we're pretty far from that," Matt Gorman, the CEO of Amazon Web Services, said at a recent event. "If you think about the cost of getting a payload in space today, it's massive. It is just not economical." Still, if launch is the bane of all space businesses, the second challenge is production cost. "We always take for granted, at this point, that Starship's cost is going to be hundreds of dollars per kilo," McCalip told TechCrunch. "People are not taking into account the satellites are almost $1,000 a kilo right now." Satellite manufacturing costs are the largest chunk of that price tag, but if high-powered satellites can be made at about half the cost of current Starlink satellites, the numbers start to make sense. SpaceX has made great advances in satellite economics while building Starlink, its record-setting communications network, and the company hopes to achieve more through scale. Part of the reasoning behind a million satellites is undoubtedly the cost savings that come from mass production. Still, the satellites that will be used for these missions must be large enough to satisfy the complex requirements for operating powerful GPUs, including large solar arrays, thermal management systems, and laser-based communications links to receive and deliver data. A 2025 white paper from Project Suncatcher offers one way to compare terrestrial and space data centers by the cost of power, the basic input needed to run chips. On the ground, data centers spend roughly $570-3,000 for a Kw of power over a year, depend on local power costs and the efficiency of their systems. SpaceX's Starlink satellites get their power from on-board solar panels instead, but the cost of acquiring, launching, and maintaining those spacecraft delivers energy at $14,700 per Kw over a year. Put simply, satellites and their components will have to get a lot cheaper before they're cost-competitive with metered power. 
Orbital data center proponents often say that thermal management is "free" in space, but that's an oversimplification. Without an atmosphere, it's actually more difficult to disperse heat. "You're relying on very large radiators to just be able to dissipate that heat into the blackness of space, and so that's a lot of surface area and mass that you have to manage," said Mike Safyan, an executive at Planet Labs, which is building prototype satellites for Google Suncatcher that are expected to launch in 2027. "It is recognized as one of the key challenges, especially long term." Besides the vacuum of space, AI satellites will need to deal with cosmic radiation as well. Cosmic rays degrade chips over time, and they can also cause "bit flip" errors that can corrupt data. Chips can be protected with shielding, use rad-hardened components, or work in series with redundant error checks, but all these options involve expensive trades for mass. Still, Google used a particle beam to test the effects of radiation on its Tensor Processing Units (chips designed explicitly for machine learning applications). SpaceX executives said on social media that the company has acquired a particle accelerator for just that purpose. Another challenge comes from the solar panels themselves. The logic of the project is energy arbitrage: Putting solar panels in space makes them anywhere from five to eight times more efficient than on Earth, and if they're in the right orbit, they can be in sight of the sun for 90% of the day or more, increasing their efficiency. Electricity is the main fuel for chips, so more energy = cheaper data centers. But even solar panels are more complicated in space. Space-rated solar panels made of rare earth elements are hardy, but too expensive. Solar panels made from silicon are cheap and increasingly prevalent in space -- Starlink and Amazon Kuiper use them -- but they degrade much faster due to space radiation. 
That will limit the lifetime of AI satellites to around five years, which means they will have to generate return on investment faster. Still, some analysts think that's not such a big deal, based on how quickly new generations of chips arrive on the scene. "After five or six years, the dollars per kilowatt hour doesn't produce a return, and that's because they're not state of the art," Philip Johnston, the CEO of Starcloud, told TechCrunch. Danny Field, an executive at Solestial, a start-up building space-rated silicon solar panels, says the industry sees orbital data centers as a key driver of growth. He's speaking with several companies about potential data center projects, and says "any player who is big enough to dream is at least thinking about it." As a long-time spacecraft design engineer, however, he doesn't discount the challenges in these models. "You can always extrapolate physics out to a bigger size," Field said. "I'm excited to see how some of these companies get to a point where the economics make sense and the business case closes." One outstanding question about these data centers: What will we do with them? Are they general purpose, or for inference, or for training? Based on existing use cases, they may not be entirely interchangeable with data centers on the ground. A key challenge for training new models is operating thousands of GPUs together en masse. Most model training is not distributed, but done in individual data centers. The hyperscalers are working to change this in order to increase the power of their models, but it still hasn't been achieved. Similarly, training in space will require coherence between GPUs on multiple satellites. The team at Google's Project Suncatcher notes that the company's terrestrial data centers connect their TPU networks with throughput in the hundreds of gigabits per second. The fastest off-the-shelf inter-satellite comms links today, which use lasers, can only get up to about 100 Gbps.
That led to an intriguing architecture for Suncatcher: It involves flying 81 satellites in formation so they are close enough to use the kind of transceivers relied on by terrestrial data centers. That, of course, presents its own challenges: The autonomy required to ensure each spacecraft remains in its correct station, even if maneuvers are required to avoid orbital debris or another spacecraft. Still, the Google study offers a caveat: The work of inference can tolerate the orbital radiation environment, but more research is needed to understand the potential impact of bit-flips and other errors on training workloads. Inference tasks don't have the same need for thousands of GPUs working in unison. The job can be done with dozens of GPUs, perhaps on a single satellite, an architecture that represents a kind of minimum viable product and the likely starting point for the orbital data center business. "Training is not the ideal thing to do in space," Johnston said. "I think almost all inference workloads will be done in space," imagining everything from customer service voice agents to ChatGPT queries being computed in orbit. He says his company's first AI satellite is already earning revenue performing inference in orbit. While details are scarce even in the company's FCC filing, SpaceX's orbital data center constellation seems to anticipate about 100 kW of compute power per ton, roughly twice the power of current Starlink satellites. The spacecraft will operate in connection with each other and using the Starlink network to share information; the filing claims that Starlink's laser links can achieve petabit-level throughput. For SpaceX, the company's recent acquisition of xAI (which is building its own terrestrial data centers) will let the company stake out positions in both terrestrial and orbital data centers, seeing which supply chain adapts faster. That's the benefit of having fungible Floating Point Operations Per Second - if you can make it work.
"A FLOP is a FLOP, it doesn't matter where it lives," McCalip said. "[SpaceX] can just scale until [it] hits permitting or capex bottlenecks on the ground, and then fall back to [their] space deployments."

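The figures scattered through this article can be combined into a rough mass and launch budget. The sketch below is a back-of-envelope estimate, not anything SpaceX has published: it assumes the filing's "ton" is a metric ton, that the full 100 GW is spread evenly over the full million satellites, and that the quoted per-kilogram launch prices apply to the whole constellation.

```python
KG_PER_TON = 1_000  # assumption: the filing's "ton" is a metric ton

# Figures quoted in the article.
TARGET_COMPUTE_GW = 100            # upper bound on compute SpaceX wants to move to orbit
POWER_DENSITY_KW_PER_TON = 100     # approximate compute power per ton of satellite
MAX_SATELLITES = 1_000_000         # upper bound on constellation size
LAUNCH_PRICES_PER_KG = {
    "Falcon 9 today": 3_600,
    "Suncatcher target": 200,
}


def constellation_mass_tons(compute_gw: float, kw_per_ton: float) -> float:
    """Return the satellite mass needed to host the given compute power."""
    total_kw = compute_gw * 1_000_000  # 1 GW = 1,000,000 kW
    return total_kw / kw_per_ton


mass_tons = constellation_mass_tons(TARGET_COMPUTE_GW, POWER_DENSITY_KW_PER_TON)
mass_per_satellite_tons = mass_tons / MAX_SATELLITES

# Launch-only cost (excludes building the satellites) at each quoted price.
launch_costs = {
    label: mass_tons * KG_PER_TON * price
    for label, price in LAUNCH_PRICES_PER_KG.items()
}

print(f"Total mass: {mass_tons:,.0f} tons ({mass_per_satellite_tons:.1f} t per satellite)")
for label, cost in launch_costs.items():
    print(f"Launch cost at '{label}' pricing: ${cost / 1e9:,.0f}B")
```

Under these assumptions the constellation works out to about a ton per satellite, and the launch bill alone differs by the same 18-fold factor as the per-kilogram prices. Manufacturing cost, which McCalip puts at almost $1,000 a kilo, would come on top.
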
[2]

Can Elon Musk really launch data centres into space?

Elon Musk is betting that AI's future lies not on Earth, but in orbit -- a claim he has put at the centre of his $1.25tn plan to merge SpaceX with his lossmaking start-up xAI. The tech billionaire, who aims to launch an initial public offering for the newly combined company this year, argues that vast fleets of satellites powered by solar energy and cooled by the vacuum of space will become the cheapest way to generate AI computing power. Musk believes this will happen within the next three years. Satellite executives, investors and researchers said that Musk's timeline for putting data centres in space is highly ambitious. But many agree that the underlying idea is increasingly plausible, as long as launch costs continue to fall and demand for AI compute keeps surging. "This is not just a bright shiny object that Elon has invented for his IPO," said Will Marshall, chief executive of Planet, a satellite company that is working with Google to pilot orbital data centres. "This is a project whose time has come." The idea of generating computing power in space dates back to a 1941 Isaac Asimov story, Reason, which depicts a network of orbital space stations generating solar energy that is beamed back to Earth. The 21st-century twist is to use that solar power to fuel AI chips, shuttling the data back to Earth using satellite networks such as SpaceX's Starlink. Huge fleets of thousands of satellites, networked together and held in a "sun synchronous" low Earth orbit, could respond to users' AI queries at a speed not too different to today's terrestrial chatbots, advocates say. "Mark my words: in 36 months, probably closer to 30 months, the most economically compelling place to put AI will be space," Musk told Stripe's Cheeky Pint podcast last week. While one former xAI employee said the idea "seems to have come out of nowhere and is totally unproven", Musk is not the only Big Tech executive pursuing the concept. 
Amazon founder Jeff Bezos, who is also co-CEO of a secretive new AI start-up, Project Prometheus, in October predicted the construction of "giant gigawatt data centres in space" in the coming years. Google plans a "learning mission" for what it has dubbed "Project Suncatcher" with Planet by early 2027, launching two prototype satellites containing its tensor processing unit AI chips into low Earth orbit. Two start-ups, Starcloud and Aetherflux, are planning to launch GPUs into space within the next 12 months. Aetherflux's founder Baiju Bhatt said the AI boom had created a "once-in-a-generation inflection point in energy usage" that has created a "huge economic incentive" to put AI in space. While many of today's AI data-centre projects are bogged down in planning or power-supply delays, launching chips into space can bring them online "at industrial manufacturing pace rather than real estate pace", with access to effectively limitless energy. "This is going to be one of the catalysts for massively industrialising access to space," said Bhatt, who previously co-founded trading app Robinhood. "We don't need to invent new material to do this." Steve Collar, a former chief executive of SES, one of the world's largest satellite operators, said: "Elon is always years ahead of the technology and the reality, but that doesn't mean that he's wrong. It can be both self-serving and right at the same time." "The good part is you have unlimited and efficient access to solar and . . . space is really cold," said Collar, who now chairs SWISSto12, a satellite communications company. "Those two things are massively helpful for a system that is enormously power hungry and enormously heat generative." Philip Johnston, co-founder of Starcloud, believes the technology "100 per cent is definitely going to be proven in two or three years, probably this year". He added: "It's a complete no-brainer: in the next 10 years all compute will be built in space." 
But the economics hinge on several unproven assumptions. This includes that the price of launching large constellations of satellites will fall dramatically. Secondly, that chips such as Nvidia's graphics processing units, the workhorses of terrestrial AI data centres today, can be shielded from radiation and cooled in the vacuum of space. Crucially, the concept assumes advances in AI will continue to demand ever-greater computing power. A Google research paper in November estimated that if launch prices fall to less than $200 per kilogramme -- from costs of at least $1,000/kg today -- then the cost of launching and operating a space-based data centre would be "roughly comparable" to an equivalent terrestrial data centre on a per-kilowatt/year basis. That threshold will not be reached until the "mid-2030s", the paper said. Some are betting that economic calculus will change with the advent of reusable rockets such as SpaceX's Starship or Blue Origin's New Glenn, which promise to bring down costs dramatically. SpaceX has already filed paperwork with the US Federal Communications Commission seeking permission to launch as many as 1mn satellites for AI. Many in the tech industry remain sceptical that these barriers can be overcome. "I don't know if you've seen a rack of servers recently but they're heavy," Matt Garman, chief executive of Amazon Web Services, said on stage at a Cisco conference last week in San Francisco. Marshall, Planet's CEO, said he had already been discussing the concept with Google for several years before Musk and Bezos started promoting the idea in late 2025. Given the complexity of engineering these systems, he believes that the economic case could stack up sooner than the technology is ready. "A lot of architectural decisions haven't been figured out," Marshall said. "But none of this is insurmountable . . . The race is on." Additional reporting by Cristina Criddle, Stephen Morris and Rafe Rosner-Uddin

[3]

What's the deal with space-based data centers for AI?

Starcloud has already begun running and training a large language model in space, so it can speak Shakespearean English

Terrestrial data centers are so 2025. We're taking our large-scale compute infrastructure into orbit, baby! Or at least, that's what Big Tech is yelling from the rooftops at the moment. It's quite a bonkers idea that's hoovering up money and mindspace, so let's unpack what it's all about - and whether it's even grounded in reality. Let's start with the basics. You might already know that a data center is essentially a large warehouse filled with thousands of servers that run 24/7. AI companies like Anthropic, OpenAI, and Google use data centers in two main ways: AI companies need data centers because they provide the coordinated power of thousands of machines working in tandem on these functions, plus the infrastructure to keep them running reliably around the clock. To that end, these facilities are always online with ultra-fast internet connections, and they have vast cooling systems to keep those servers running at peak performance levels. All this requires a lot of power, which puts a strain on the grid and squeezes local resources. So what's this noise about data centers in space? The idea's been bandied about for a while now as a vastly better alternative that can harness infinitely abundant solar energy and radiative cooling hundreds of miles above the ground in low Earth orbit. Powerful GPU-equipped servers would be contained in satellites, and they'd move through space together in constellations, beaming data back and forth as they travel around the Earth from pole to pole in a sun-synchronous orbit. The thinking behind space data centers is that they'll allow operators to scale up compute resources far more easily than on Earth. Up there, operators aren't constrained by the availability of power, real estate, or the fresh water supplies needed for cooling.
There are a number of firms getting in on the action, including big familiar names and plucky upstarts. You've got Google partnering with Earth monitoring company Planet on Project Suncatcher to launch a couple of prototype satellites by next year. Aetherflux, a startup that was initially all about beaming down solar power from space, now intends to make a data center node in orbit available for commercial use early next year. Nvidia-backed Starcloud, which is focused exclusively on space-based data centers, sent a GPU payload into space last November, and trained and ran a large language model on it. The latest to join the fold is SpaceX, which is set to merge with Elon Musk's AI company xAI in a purported US$1.25-trillion deal with a view to usher in the era of orbital data centers. According to Musk's calculations, it should be possible to increase the number of rocket launches and the data center satellites they can carry. "There is a path to launching 1 TW/year (1 terawatt of compute power per year) from Earth," he noted in a memo, adding that AI compute resources will be cheaper to generate in space than on the ground within three years from now. In an excellent article in The Verge from last December, Elissa Welle laid out the numerous challenges these orbital data centers will have to overcome in order to operate as advertised. For starters, they'd have to safely wade through the 6,600 tons of space debris floating around in orbit, as well as the 14,000-plus active satellites in orbit. Dodging these will require fuel. You've also got to dissipate heat from the space-based data centers, and have astronauts maintain them periodically. And that's to say nothing about how these satellites will affect the work of astronomers or potentially increase light pollution. 
Ultimately, there's a lot of experimentation and learning to be gleaned from these early efforts to build out compute resources in space before any company or national agency can realistically scale them up. And while it might eventually become possible to do so despite substantial difficulties, it's worth asking ourselves whether AI is actually on track to benefit humanity in all the ways we've been promised, and whether we need to continually build out infrastructure for it - whether on the ground or way up beyond the atmosphere.

[4]

Musk predicts more AI capacity will be in orbit than on earth in 5 years, with SpaceX becoming a 'hyper-hyper' scaler | Fortune

By the beginning of the next decade, AI will primarily become a space-based venture as the cost becomes much more advantageous to operate in orbit, according to SpaceX CEO Elon Musk. In a lengthy, wide-ranging interview with podcaster Dwarkesh Patel and Stripe cofounder and president John Collison on Thursday, the tech billionaire made some of his signature bold predictions about how the AI revolution will play out. Given the enormous energy needs of AI and limits on available land for placing massive arrays of solar panels -- not to mention all the red tape -- building new AI data centers will be much cheaper in orbit, where solar panels are five times more effective than on the ground. "In 36 months, but probably closer to 30 months, the most economically compelling place to put AI will be space," Musk said. "It will then get ridiculously better to be in space. The only place you can really scale is space. Once you start thinking in terms of what percentage of the sun's power you are harnessing, you realize you have to go to space. You can't scale very much on earth." The utility industry isn't able currently to build power plants as rapidly as needed for AI, he added. On top of that, limits on manufacturing gas turbines and wind turbines fast enough represent another bottleneck. Meanwhile, solar panels meant to be used in space are less costly than those designed for use on land because they don't need as much glass or hardening to withstand various weather events, Musk explained. In addition, the cooling needed for data centers is less of an issue in space. Considering the advantage space has over earth, he was asked where AI will be in five years. "If you say five years from now, I think probably AI in space will be launching every year the sum total of all AI on earth," Musk said. "Meaning, five years from now, my prediction is we will launch and be operating every year more AI in space than the cumulative total on earth." 
While he is infamous for setting incredibly ambitious targets on aggressive timelines, his next one was a whopper, even by his standards. Musk said getting all that AI and solar capacity in space will require about 10,000 launches a year -- or more than one launch every hour, around the clock. SpaceX is the most prolific rocket company and set a record last year with 165 orbital launches. SpaceX could pull off a 10,000-per-year launch cadence with 20-30 Starship rockets, he added, though the company will make more than that, enabling perhaps 20,000-30,000 launches a year. He pointed out the airline industry has much quicker throughput than that. The number of daily flights around the world tops 100,000. Patel then asked if SpaceX will become an AI hyperscaler. "Hyper-hyper," Musk replied. "If some of my predictions come true, SpaceX will launch more AI than the cumulative amount on Earth of everything else combined." It's already working toward that goal. SpaceX in November launched a test satellite with an AI server from start-up Starcloud. And last month, SpaceX asked the FCC for permission to launch up to 1 million solar‑powered satellites designed as data centers. Of course, there are other challenges associated with operating in space, such as protecting hardware from the sun's radiation and transmitting astronomical amounts of data from orbit to earth. SpaceX's Starship rocket is also still in development. But Deutsche Bank said in a note last month the challenges of putting data centers in space are more about engineering than physics. Today's AI hyperscalers see the potential as well and are also looking to go to space. For example, Google's Project Suncatcher looks to pair solar-powered satellites with AI computer chips, and a prototype could launch as soon as next year. OpenAI CEO Sam Altman also considered buying rocket company Stoke Space to put data centers in orbit, the Wall Street Journal reported in December.
For its part, SpaceX is an AI company now, after its merger with Musk's xAI. That's as SpaceX is expected to go public this year, raising tens of billions of dollars. During the podcast interview, Musk said more money is available in public markets than private markets, possibly even 100 times more. "I just repeatedly tackle the limiting factor," he added. "Whatever the limiting factor is on speed, I'm going to tackle that. If capital is the limiting factor, then I'll solve for capital. If it's not the limiting factor, I'll solve for something else."
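
Musk's launch-cadence figures quoted above are easier to judge when converted into per-day and per-vehicle terms. The sketch below does that conversion; it assumes launches are spread evenly over a 365-day year and shared equally across the fleet, neither of which is stated in the interview, and the function name is invented here.

```python
HOURS_PER_YEAR = 365 * 24

# Figures quoted in the article.
TARGET_LAUNCHES_PER_YEAR = 10_000
RECORD_LAUNCHES_LAST_YEAR = 165
FLEET_SIZES = (20, 30)  # Starship vehicles Musk says could sustain the cadence


def cadence(launches_per_year: int, fleet_size: int) -> dict:
    """Convert an annual launch target into per-day and per-vehicle figures.

    Assumes launches are evenly spaced and evenly shared across the fleet.
    """
    flights_per_vehicle = launches_per_year / fleet_size
    return {
        "launches_per_day": launches_per_year / 365,
        "minutes_between_launches": HOURS_PER_YEAR * 60 / launches_per_year,
        "flights_per_vehicle": flights_per_vehicle,
        "turnaround_hours": HOURS_PER_YEAR / flights_per_vehicle,
    }


scale_up = TARGET_LAUNCHES_PER_YEAR / RECORD_LAUNCHES_LAST_YEAR
results = {size: cadence(TARGET_LAUNCHES_PER_YEAR, size) for size in FLEET_SIZES}

print(f"Scale-up over last year's record: {scale_up:.0f}x")
for size, r in results.items():
    print(
        f"{size} vehicles: {r['launches_per_day']:.1f} launches/day, "
        f"one every {r['minutes_between_launches']:.0f} min, "
        f"{r['flights_per_vehicle']:.0f} flights per vehicle per year, "
        f"{r['turnaround_hours']:.1f} h between flights of the same vehicle"
    )
```

Under these assumptions each Starship would have to fly again roughly every day, which is closer to the airline comparison Musk draws than to any rocket operation to date.
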

[5]

Elon Musk says space will be the cheapest place for AI data centres in three years

Billionaire Elon Musk has predicted that within 36 months, or likely sooner, space will be the most economical location for artificial intelligence (AI) data centres, eclipsing Earth-based options due to vastly superior solar efficiency and the absence of a need for batteries. Talking on the Dwarkesh Podcast, co-hosted by Dwarkesh Patel and Stripe co-founder and president John Collison, Musk said Earth has some insurmountable power bottlenecks in scaling AI. "The availability of energy is the issue," Musk said, noting global electricity output outside China remains "pretty close flat" while chip production surges. Musk quipped that he meant to wear a t-shirt that said, "It's always sunny in space". "Because you don't have a day-night cycle, seasonality, clouds, or an atmosphere in space. The atmosphere alone results in about a 30% loss of energy [on Earth]," said Musk, adding, "It will simply not be physically possible to scale power production to the scale needed for AI on Earth." "Any given solar panel can do about five times more power in space than on the ground. You also avoid the cost of having batteries to carry you through the night. It's actually much cheaper to do in space," he said. The billionaire, who is very close to a trillionaire status, said this days after his aerospace giant SpaceX acquired his AI company xAI, to create a $1.25 trillion behemoth. The company said it is acquiring xAI to "form the most ambitious, vertically-integrated innovation engine on (and off) Earth, with AI, rockets, space-based internet, direct-to-mobile device communications and the world's foremost real-time information and free speech platform."
On the podcast, Musk laid out the many obstacles to scaling AI. For space to dominate economically, three conditions must come together, according to Musk:

* Earth's power hits a hard ceiling as AI demand explodes
* Chip fabs like Musk's planned "TeraFab" outpace energy scaling
* Starship achieves thousands of launches yearly

If this happens, Musk said his ecosystem wins. SpaceX alone can launch at that cadence, powering xAI with unlimited gigawatts annually while rivals scrap over turbines and grids. "The only place you can really scale is space," he stressed, eyeing hundreds of gigawatts launched yearly via Starship. Five years out, space AI could surpass all terrestrial capacity combined, lapping US power (500 GW average) repeatedly. When asked about the risk of technical failures in space-based data centres, Musk said, "At this point, we find our GPUs to be quite reliable. There's infant mortality, which you can obviously iron out on the ground. Once they start working and you're past the initial debug cycle, they're quite reliable."

[6]

Elon Musk Backs ARK Invest's Brett Winton, Who Said Orbital Data Centers Could Solve AI Scaling Challenges - Alphabet (NASDAQ:GOOGL)

On Tuesday, tech billionaire Elon Musk endorsed ARK Invest's chief futurist Brett Winton's prediction that putting data centers in orbit could become cheaper and more efficient as AI demand skyrockets.

AI's Growing Demands Push Innovation Beyond Earth

As artificial intelligence workloads surge, traditional land-based data centers face soaring costs for power and cooling. Winton explained on X that on Earth, the 100th gigawatt deployed will almost certainly be more costly, complex and time-intensive than the first, but in space, the opposite is true. "The 100th orbital GW could be 1/3rd as costly as the 1st," Winton wrote. Musk shared Winton's post, calling ARK's insights "great."

Elon Musk Bets On Space To Power AI With Solar Satellites

Musk has been betting on orbit as the future of AI, making it central to his $1.25 trillion plan to merge SpaceX with his struggling startup xAI. He plans to take the merged company public this year and predicts that fleets of solar-powered satellites, naturally cooled by space, could become the most cost-effective way to power AI within the next three years.

Space Data Centers Could Slash AI Costs With Free Solar Power, Cooling

Previously, veteran investor Gavin Baker echoed the logic, saying that orbital data centers could address AI's physical constraints. In space, solar energy is available 24 hours a day and is roughly 30% more intense without atmospheric interference, Baker said. "Cooling is free...you just point a radiator into the dark side of orbit," where temperatures could approach absolute zero, he added.

Speed And Efficiency Advantages in Orbit

Baker also highlighted latency benefits. Unlike fiber optic cables on Earth, lasers traveling through a vacuum can transmit data faster, offering potential speed advantages for AI networks.

Skeptics Cite Cost And Logistics

Getting heavy equipment into orbit is massively expensive, Amazon Web Services chief executive Matt Garman said, adding that there aren't "enough rockets to launch a million satellites yet."
Disclaimer: This content was partially produced with the help of AI tools and was reviewed and published by Benzinga editors.

Elon Musk claims space will become the cheapest location for AI data centers within three years, citing superior solar efficiency and unlimited scalability. SpaceX has filed for permission to launch up to 1 million satellites as orbital data centers, while Google, Starcloud, and other companies race to prove the concept. But experts warn the economics depend on dramatic reductions in launch costs and on unproven technology.

SpaceX Files for Million-Satellite Constellation as Orbital AI Race Accelerates

Elon Musk's prediction that space will become the most economical location for AI data centers within 36 months has shifted from science fiction to active development. SpaceX requested FCC permission in January to launch up to 1 million solar-powered satellites designed as orbital AI infrastructure, capable of shifting as much as 100 GW of compute power off the planet [1]. The billionaire told Stripe co-founder John Collison that "by far the cheapest place to put AI will be space in 36 months or less," arguing that solar panels in orbit deliver five times more power than terrestrial installations without atmospheric losses or day-night cycles [5]. This ambitious vision follows SpaceX's merger with xAI to create a $1.25 trillion entity focused on vertical integration of rockets, satellites, and AI computing capacity [4].

Economic Viability Hinges on Starship and Reduced Launch Costs

The economics of space-based data centers for AI remain brutally challenging despite the hype. Andrew McCalip, a space engineer, calculated that a 1 GW orbital data center might cost $42.4 billion, nearly three times its ground-bound equivalent, because of satellite manufacturing and launch expenses [1]. Google's Project Suncatcher white paper estimates that launch prices must fall below $200 per kilogram, from at least $1,000 per kilogram today, a threshold not expected until the mid-2030s [2]. SpaceX's reusable Falcon 9 currently launches payloads at roughly $3,600 per kilogram, so the company needs an 18-fold reduction to reach that $200 threshold and make orbital AI economically competitive [1]. The entire business case depends on Starship becoming operational and delivering dramatically lower launch costs, but the next-generation rocket has not yet reached orbit [1].

Tech Giants and Startups Race to Prove Orbital AI Concept

Google announced Project Suncatcher, planning to launch prototype satellites containing tensor processing unit AI chips into low Earth orbit by early 2027 in partnership with Planet [2]. Starcloud, backed by Google and Andreessen Horowitz with $34 million in funding, filed plans for an 80,000-satellite constellation and has already trained a large language model in space using a GPU payload launched in November [1][3]. Aetherflux, founded by Robinhood co-founder Baiju Bhatt, aims to make a data center node available for commercial use early next year, arguing that launching chips into space brings them online "at industrial manufacturing pace rather than real estate pace" [2]. Even Jeff Bezos predicted the construction of "giant gigawatt data centers in space" in coming years [2]. xAI's head of compute reportedly bet his Anthropic counterpart that 1% of global compute will be in orbit by 2028 [1].

Power Bottlenecks on Earth Drive Space Infrastructure Push

Elon Musk's prediction stems from what he describes as insurmountable power bottlenecks for scaling AI on Earth. Global electricity output outside China remains "pretty close flat" while chip production surges, creating fundamental constraints [5]. The utility industry cannot build power plants rapidly enough to meet AI demand, and limits on manufacturing gas turbines and wind turbines represent additional bottlenecks [4]. Musk claims that within five years, SpaceX will launch and operate more AI computing capacity annually than the cumulative total on Earth, which would require approximately 10,000 launches per year, a dramatic increase from SpaceX's record 165 orbital launches in 2025 [4]. Proponents also argue that solar panels designed for space can cost less than terrestrial versions because they don't require hardening against weather, and that cooling systems benefit from the vacuum environment and radiative cooling hundreds of miles above ground [4][3].

Radiation, Debris, and Scalability Challenges Remain Unresolved

Despite optimistic timelines, numerous technical hurdles threaten the viability of orbital AI infrastructure. GPUs and other hardware must be shielded from radiation in space, a challenge that has yet to be solved at scale [2]. The 6,600 tons of space debris and 14,000-plus active satellites in orbit create collision risks, and avoiding them requires fuel for maneuvering [3]. Satellite manufacturing costs currently run about $1,000 per kilogram, and achieving the necessary cost reductions depends on scaling production to unprecedented levels [1]. Amazon Web Services CEO Matt Garman noted that "there are not enough rockets to launch a million satellites yet, so we're pretty far from that," adding that current payload costs make space infrastructure "just not economical" [1]. Economists at consultancy Rational Futures argue that even if Starship succeeds, SpaceX may not charge customers much less than competitors like Blue Origin's New Glenn rocket, potentially leaving launch prices above the thresholds assumed by space data center builders [1]. Steve Collar, former CEO of satellite operator SES, acknowledged that "Elon is always years ahead of the technology and the reality, but that doesn't mean that he's wrong" [2]. The concept assumes AI will continue demanding ever-greater computing power, a trend that could shift with architectural breakthroughs or efficiency gains. For now, the race to orbit represents a high-stakes bet on solving interconnected challenges across launch cadence, satellite production, radiation hardening, and thermal management while terrestrial alternatives continue improving.
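To put the launch-cost and launch-cadence gaps quoted above side by side, here is a minimal arithmetic sketch. The input figures are the ones reported in this summary; the constant names, the helper function, and the assumption of steady year-over-year growth across Musk's five-year horizon are illustrative and come from no cited source.

```python
# Figures quoted in the summary above.
FALCON9_USD_PER_KG = 3_600          # approximate reusable Falcon 9 price
TARGET_USD_PER_KG = 200             # Project Suncatcher threshold
RECORD_LAUNCHES_2025 = 165          # SpaceX orbital launches in 2025
REQUIRED_LAUNCHES_PER_YEAR = 10_000

# Assumption for illustration: cadence grows at a constant rate for five years.
YEARS_TO_TARGET = 5


def fold_change(current: float, target: float) -> float:
    """Return how many times larger `current` is than `target`."""
    return current / target


launch_cost_gap = fold_change(FALCON9_USD_PER_KG, TARGET_USD_PER_KG)
cadence_gap = fold_change(REQUIRED_LAUNCHES_PER_YEAR, RECORD_LAUNCHES_2025)

# Constant yearly multiplier needed to close the cadence gap in the assumed window.
annual_cadence_growth = cadence_gap ** (1 / YEARS_TO_TARGET)

print(f"launch cost must fall {launch_cost_gap:.0f}-fold")
print(f"launch cadence must rise {cadence_gap:.1f}-fold")
print(f"implied cadence growth: {annual_cadence_growth:.2f}x per year")
```

On these inputs the cost gap is 18-fold and the cadence gap about 61-fold, so under the steady-growth assumption launch volume would need to more than double every year.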

Summarized by Navi
