The Power of Eye Contact: New Study Reveals Crucial Role in Human-Robot Interaction

3 Sources

[1]

It's not that you look -- it's when: The hidden power of eye contact

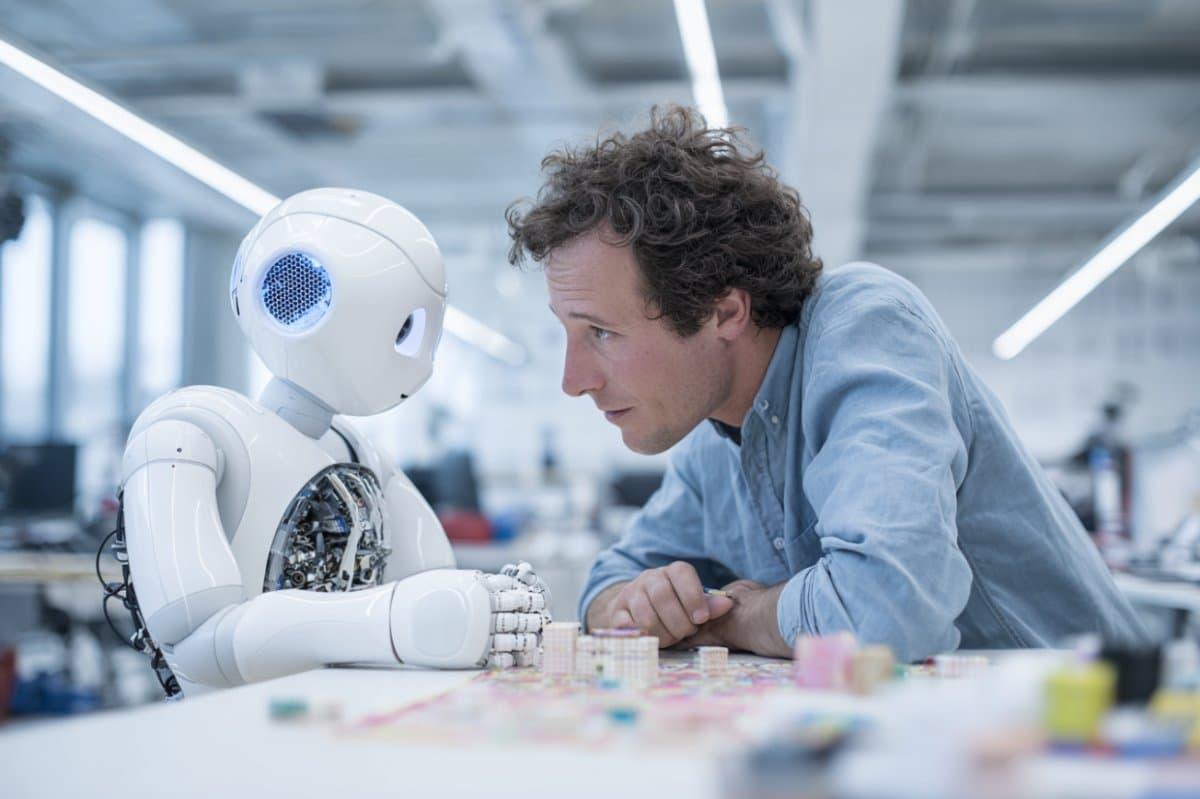

For the first time, a new study has revealed how and when we make eye contact -- not just the act itself -- plays a crucial role in how we understand and respond to others, including robots. Led by cognitive neuroscientist Dr Nathan Caruana, researchers from the HAVIC Lab at Flinders University asked 137 participants to complete a block-building task with a virtual partner.

They discovered that the most effective way to signal a request was through a specific gaze sequence: looking at an object, making eye contact, then looking back at the same object. This timing made people most likely to interpret the gaze as a call for help.

Dr Caruana says that identifying these key patterns in eye contact offers new insights into how we process social cues in face-to-face interactions, paving the way for smarter, more human-centered technology.

"We found that it's not just how often someone looks at you, or if they look at you last in a sequence of eye movements but the context of their eye movements that makes that behavior appear communicative and relevant," says Dr Caruana, from the College of Education, Psychology and Social Work.

"And what's fascinating is that people responded the same way whether the gaze behavior is observed from a human or a robot.

"Our findings have helped to decode one of our most instinctive behaviors and how it can be used to build better connections whether you're talking to a teammate, a robot, or someone who communicates differently.

"It aligns with our earlier work showing that the human brain is broadly tuned to see and respond to social information and that humans are primed to effectively communicate and understand robots and virtual agents if they display the non-verbal gestures we are used to navigating in our everyday interactions with other people."

The authors say the research can directly inform how we build social robots and virtual assistants that are becoming ever more ubiquitous in our schools, workplaces and homes, while also having broader implications beyond tech.

"Understanding how eye contact works could improve non-verbal communication training in high-pressure settings like sports, defense, and noisy workplaces," says Dr Caruana. "It could also support people who rely heavily on visual cues, such as those who are hearing-impaired or autistic."

The team is now expanding the research to explore other factors that shape how we interpret gaze, such as the duration of eye contact, repeated looks, and our beliefs about who or what we are interacting with (human, AI, or computer-controlled). The HAVIC Lab is currently conducting several applied studies exploring how humans perceive and interact with social robots in various settings, including education and manufacturing.

"These subtle signals are the building blocks of social connection," says Dr Caruana. "By understanding them better, we can create technologies and training that help people connect more clearly and confidently."

The HAVIC Lab is affiliated with the Flinders Institute for Mental Health and Wellbeing and a founding partner of the Flinders Autism Research Initiative.

Acknowledgements: Authors were supported by an Experimental Psychology Society small grant.

[2]

How Eye Contact Builds Connection - Neuroscience News

Summary: A new study reveals that the sequence of eye movements -- not just eye contact itself -- plays a key role in how we interpret social cues, even with robots. Researchers found that looking at an object, making eye contact, then looking back at the object was the most effective way to signal a request for help. This pattern prompted the same response whether participants interacted with a human or a robot, highlighting how tuned humans are to context in gaze behavior. These insights could improve social robots, virtual assistants, and communication training for people and professionals who rely heavily on non-verbal cues.

The temporal context of eye contact influences perceptions of communicative intent

This study examined the perceptual dynamics that influence the evaluation of eye contact as a communicative display. Participants (n = 137) completed a task where they decided if agents were inspecting or requesting one of three objects. Each agent shifted its gaze three times per trial, with the presence, frequency and sequence of eye contact displays manipulated across six conditions. We found significant differences between all gaze conditions. Participants were most likely, and fastest, to perceive a request when eye contact occurred between two averted gaze shifts towards the same object. Findings suggest that the relative temporal context of eye contact and averted gaze, rather than eye contact frequency or recency, shapes its communicative potency. Commensurate effects were observed when participants completed the task with agents that appeared as humans or a humanoid robot, indicating that gaze evaluations are broadly tuned across a range of social stimuli. Our findings advance the field of gaze perception research beyond paradigms that examine singular, salient and static gaze cues and inform how signals of communicative intent can be optimally engineered in the gaze behaviours of artificial agents (e.g. robots) to promote natural and intuitive social interactions.

[3]

The secret sequence: How eye contact timing influences our social understanding

The study, "The temporal context of eye contact influences perceptions of communicative intent," was published in Royal Society Open Science.

A groundbreaking study from Flinders University's HAVIC Lab uncovers the importance of eye contact timing in social interactions, with implications for human-robot communication and AI development.

Unveiling the Secret of Eye Contact

A groundbreaking study led by cognitive neuroscientist Dr. Nathan Caruana from the HAVIC Lab at Flinders University has shed new light on the intricate dynamics of eye contact in social interactions. The research, titled "The temporal context of eye contact influences perceptions of communicative intent," reveals that the timing and sequence of eye movements play a crucial role in how we interpret and respond to social cues, even when interacting with robots [1].

The Power of Gaze Sequence

The study, involving 137 participants, uncovered a specific gaze sequence that proved most effective in signaling a request for help:

- Looking at an object

- Making eye contact

- Looking back at the same object
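
The three-step sequence above can be expressed as a simple pattern check. The sketch below is only an illustration, not the researchers' stimulus or analysis code: the function name `signals_request`, the `EYE_CONTACT` label, and the object names are all hypothetical, and the study reports how likely people were to perceive a request, not a strict yes/no rule.

```python
from typing import Sequence

# Hypothetical label for a gaze shift directed at the observer.
EYE_CONTACT = "eye_contact"


def signals_request(gaze_shifts: Sequence[str]) -> bool:
    """Return True if three gaze shifts follow object -> eye contact -> same object.

    Each element is either EYE_CONTACT or the name of the object looked at.
    """
    if len(gaze_shifts) != 3:
        return False
    first, middle, last = gaze_shifts
    return (
        first != EYE_CONTACT      # starts with a look at an object
        and middle == EYE_CONTACT  # eye contact sits between the two object looks
        and last == first          # gaze returns to the same object
    )


if __name__ == "__main__":
    print(signals_request(["block_a", EYE_CONTACT, "block_a"]))  # True
    print(signals_request(["block_a", EYE_CONTACT, "block_b"]))  # False
    print(signals_request(["block_a", "block_a", EYE_CONTACT]))  # False
```

A designer of a robot or virtual agent might use a check like this to confirm that a scripted gaze animation follows the pattern before deploying it. The weighting of other sequences would need to come from the published data.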

This precise timing significantly increased the likelihood of the gaze being interpreted as a call for assistance. Dr. Caruana emphasizes, "We found that it's not just how often someone looks at you, or if they look at you last in a sequence of eye movements, but the context of their eye movements that makes that behavior appear communicative and relevant" [2].

Implications for Human-Robot Interaction

Notably, participants responded similarly whether the gaze behavior came from a human or a robot. This finding aligns with the team's earlier work showing that the human brain is broadly tuned to perceive and respond to social information, and that people are primed to communicate with robots and virtual agents that display familiar non-verbal gestures.

Applications Beyond Technology

While the research has immediate implications for the development of more intuitive social robots and virtual assistants, its potential applications extend far beyond the tech world:

- High-Pressure Settings: The findings could improve non-verbal communication training in sports, defense, and noisy workplaces.

- Accessibility: The research may support individuals who rely heavily on visual cues, such as those with hearing impairments or autism.

- Education and Manufacturing: The HAVIC Lab is currently exploring how humans perceive and interact with social robots in various settings, including education and manufacturing environments.

Future Research Directions

The team is now expanding their research to explore other factors that influence gaze interpretation, including:

- Duration of eye contact

- Repeated looks

- Beliefs about the interaction partner (human, AI, or computer-controlled)

Dr. Caruana concludes, "These subtle signals are the building blocks of social connection. By understanding them better, we can create technologies and training that help people connect more clearly and confidently" [1].

As we continue to unravel the complexities of non-verbal communication, this research paves the way for more natural and intuitive interactions between humans and artificial agents, potentially revolutionizing fields from robotics to social skills training.

Summarized by Navi
