Facebook's AI Content Moderation Sparks Controversy

2 Sources


[1]

I Posted On Facebook About One Of My Own Articles. Then The Overlords Swept In

There have been a few times over the years when one of my articles was called "spammy." I loved a product too much (because, you know, I probably just really liked it) or wrote with a sense of excitement and awe about a news topic. I've been writing about social media, tech innovation, mentoring, leadership, and many other topics since 2001. On social media, since there are hungry trolls on the prowl at all times looking for ripe pickings, I've certainly had my share of flame wars and pointless bickering. I usually duck for cover and wait for the trolls to move along. Most of them are too dumb to stick around and have a thoughtful conversation for more than about five seconds anyway.

This time, it was the social network itself that went hunting for me. To call a massive technology empire with 3 billion users a "troll" might seem harsh, but that's not nearly as condescending or finger-pointing as the notification they sent me. "We removed your post," it said at first. That sounds like the Kingdom is protecting itself. Who is the "we" in that sentence? Mark Zuckerberg? "It looks like you tried to get likes, follows, shares or video views in a misleading way," read the rest of the wrist-slap. I had to plead my case and request a review.

Now, I've been posting links to articles since time immemorial. With this post, I simply mentioned that an electronic bike I was testing was one of my favorites of the summer. I've posted similar notices maybe hundreds of times in the last decade. Shudder to think -- maybe thousands? I probably posted one yesterday. I contacted Meta about this issue and they have not responded. I sent an email to a press representative and also tried to contact the help desk.

Here's what I think actually happened. Meta likely does not understand why I would post a link, and the network is also probably discouraging links because they take you outside of Facebook. There's likely an AI that scans all posts and looks for keywords and links that are on some kind of blacklist, flagging them as self-promotion. However, it does seem heavy-handed. For starters, I was mentioning my article on a personal Facebook account where friends and family like to see what I'm writing about. I have been doing that for years and years, so it seems like the algorithm changed and "improved" to flag more posts like this.

Elon Musk also cracked down on posts with links not too long ago, essentially making them harder to recognize. I've been using Twitter since the beginning and sometimes I don't realize someone has posted a link, since they are now so obfuscated that you barely notice them.

Back to my issue, though. Blocking links like mine and hiding them or making it harder to share them is going to work against social media companies. One of the most "social" things we do is share links. It's a way of letting everyone else see what you are reading, what you like -- who you are. Sharing is caring, as they say. I have found 90% of my best leads by scouring social media posts and looking for links. Getting trolled by the social media network you've used for a decade or more, finding that the algorithm is now going after people like me, being told your post is spammy -- it makes me want to find somewhere else to hang out.

[2]

I posted about my story on Facebook, and the rulers came rushing in. - ExBulletin

In the past, I've been accused of writing spam because I loved a product too much (probably because I really did like it) or because I wrote with excitement and awe about a news topic. I have been writing on a variety of topics including social media, innovation, mentoring, and leadership since 2001. I have certainly been in flame wars and pointless arguments on social media, where greedy trolls are always lurking, looking for prey. I usually just hunker down and wait for the troll to go away, as most of them are too stupid to stay for more than five seconds and have a thoughtful conversation.

This time it was the social network itself that hunted me down. Calling a huge tech empire with 3 billion users a troll may seem harsh, but it's not as condescending or accusatory as the notice they sent me. "We have removed your post," it said at first. It's like the kingdom is defending itself. Who is the "we" in this sentence? Mark Zuckerberg? "It looks like you tried to get likes, follows, shares or video views in a misleading way," read the rest of this light punishment. I had to plead my case and ask for a reconsideration.

Now, I've been posting links to articles since way back when. In this post, I simply said that an e-bike I was testing was one of my favorites for the summer. I've posted similar notices hundreds of times over the last decade. I shudder to think it may have been thousands of times. I probably even posted one yesterday. I've reached out to Meta about the issue but haven't heard back. I've emailed their PR representative and contacted their help desk.

I believe this is what actually happened. Meta probably doesn't understand why I'm posting links, and the network probably discourages links because they take you outside of Facebook. I suspect there's some AI that scans all posts, looking for some kind of blacklisted keywords or links, and flags them as self-promotion. But that seems heavy-handed. First, I was mentioning my article on my personal Facebook account, where friends and family like to see what I write. I've been doing that for years, but it seems like the algorithm has changed and "improved" to flag more posts like this.

Elon Musk also cracked down on posts with links not too long ago, basically making them less noticeable. I've been using Twitter since its inception, and sometimes I don't realize someone has posted a link because the links have become so obscured that I barely notice them.

Now, back to my problem. Blocking and hiding links like mine, or making them harder to share, will work against social media companies. One of the most social things we do is share links. It's a way to let others know what we're reading, what we like, and who we are. As the saying goes, sharing is caring. I find 90% of my best leads by combing through social media posts looking for links. When I've been trolled by a social media network I've used for over a decade, when I find out that their algorithms are targeting people like me, when I'm told my posts are spam, it makes me want to look elsewhere. For example, Instagram.


A journalist's experience with Facebook's AI moderation system raises questions about the platform's content policies and the balance between automation and human oversight in social media.

Facebook's AI Moderation Mishap

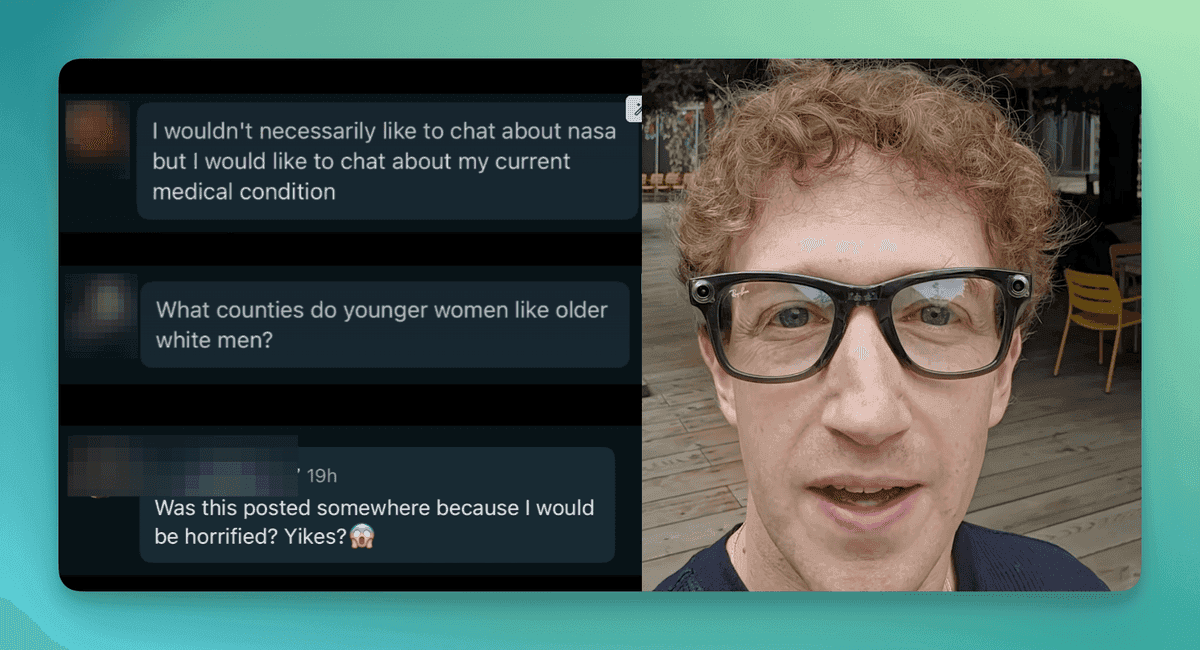

In a recent incident that has sparked debate about social media content moderation, Forbes contributor John Brandon found himself at odds with Facebook. Brandon attempted to share one of his own articles on the platform, only to have the post flagged and removed, apparently by Facebook's automated content moderation tools [1].

The Incident

Brandon's post, which linked to his article about an electric bike he was testing, was taken down by Facebook, apparently by an automated system. The removal notice said it looked like he had tried to get likes, follows, shares or video views in a misleading way, even though the post simply pointed to an article Brandon had written himself. He had to request a review to contest the decision [1].

AI Moderation: Efficiency vs. Accuracy

This incident highlights the ongoing challenges faced by social media platforms in their efforts to moderate content at scale. While AI-powered systems can process vast amounts of data quickly, they often lack the nuanced understanding that human moderators possess [2].

Facebook, like many other platforms, relies heavily on AI to flag potentially problematic content. However, this approach can lead to false positives, as Brandon's case suggests: a legitimate post was treated as a misleading attempt to gain engagement [1].

The Human Element in Content Moderation

The incident has reignited discussions about the importance of human oversight in content moderation processes. While AI can handle the bulk of moderation tasks, experts argue that human moderators are still crucial for handling complex cases and appeals [2].

Brandon's experience underscores the need for a more balanced approach, combining the efficiency of AI with the discernment of human moderators to ensure fair and accurate content moderation [1].
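One way to picture the balanced approach described above is confidence-based triage: the automated system acts on its own only when it is very sure, and passes borderline posts to a person. The sketch below is a toy illustration under stated assumptions, not Facebook's actual system, whose internals are not public. The `spam_score` heuristic, the keyword list, and both thresholds are hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical signals, invented for illustration only.
PROMO_KEYWORDS = {"favorite", "check out", "buy", "deal"}
LINK_PATTERN = re.compile(r"https?://\S+")


@dataclass
class Decision:
    action: str   # "allow", "human_review", or "remove"
    score: float


def spam_score(text: str) -> float:
    """Toy score in [0, 1]: a link and promotional words both raise it."""
    lowered = text.lower()
    score = 0.0
    if LINK_PATTERN.search(lowered):
        score += 0.5
    # Naive substring matching; at most two keyword hits are counted.
    hits = sum(1 for keyword in PROMO_KEYWORDS if keyword in lowered)
    score += min(hits, 2) * 0.25
    return min(score, 1.0)


def triage(text: str, review_at: float = 0.5, remove_at: float = 0.95) -> Decision:
    """Remove only at very high scores; send the middle band to a human."""
    score = spam_score(text)
    if score >= remove_at:
        return Decision("remove", score)
    if score >= review_at:
        return Decision("human_review", score)
    return Decision("allow", score)


if __name__ == "__main__":
    post = "This e-bike is one of my favorites of the summer: https://example.com/review"
    print(triage(post))
```

Under these assumed weights, a post like Brandon's (one link, one enthusiastic word) lands in the middle band and goes to a human reviewer instead of being removed outright. Where to set the two thresholds is the real design question, since a wider review band means fewer wrongful removals but more work for human moderators.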

Implications for Users and Content Creators

This incident raises concerns for content creators and journalists who rely on social media platforms to share their work. The fear of having legitimate content removed or accounts restricted due to AI errors could potentially lead to self-censorship or reluctance to share certain types of content [2].

Facebook's Response and Future Challenges

As of Brandon's writing, Meta had not explained the removal of his post. He says he emailed a press representative and contacted the help desk without receiving a response. This lack of transparency has fueled further debate about the platform's content moderation policies and the need for clearer communication with users when content is flagged or removed [1].

Moving forward, the challenge for Facebook and other social media platforms will be to refine their AI moderation systems to reduce false positives while maintaining the ability to process large volumes of content efficiently. Striking the right balance between automation and human oversight remains a critical goal for the industry [2].
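The trade-off between false positives and catching real spam can be made concrete with a small calculation. The sketch below uses invented scores and labels (the `SAMPLE` data and both function names are hypothetical, not drawn from any real platform) to show how moving a removal threshold changes the share of legitimate posts that get taken down.

```python
from typing import List, Tuple

# Hypothetical (score, is_actually_spam) pairs, made up for illustration.
SAMPLE: List[Tuple[float, bool]] = [
    (0.95, True), (0.90, True), (0.80, False), (0.75, True),
    (0.60, False), (0.40, False), (0.30, False), (0.10, False),
]


def false_positive_rate(sample: List[Tuple[float, bool]], threshold: float) -> float:
    """Share of legitimate posts that would be removed at this threshold."""
    legit = [score for score, is_spam in sample if not is_spam]
    if not legit:
        return 0.0
    return sum(1 for score in legit if score >= threshold) / len(legit)


def spam_caught(sample: List[Tuple[float, bool]], threshold: float) -> float:
    """Share of actual spam posts that would be removed at this threshold."""
    spam = [score for score, is_spam in sample if is_spam]
    if not spam:
        return 0.0
    return sum(1 for score in spam if score >= threshold) / len(spam)


if __name__ == "__main__":
    for threshold in (0.5, 0.85):
        fpr = false_positive_rate(SAMPLE, threshold)
        caught = spam_caught(SAMPLE, threshold)
        print(f"threshold={threshold}: false positives={fpr:.0%}, spam caught={caught:.0%}")
```

On this made-up sample, raising the threshold from 0.5 to 0.85 eliminates the wrongful removals but lets one of the three spam posts through. That tension is why a human review step for the uncertain middle is often proposed instead of a single cutoff.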

Summarized by

Navi

Related Stories

Meta's Shift: Zuckerberg Announces Major Policy Changes Amid Political Landscape Transformation

08 Jan 2025•Policy and Regulation

Meta's AI Chatbot Sparks Privacy Concerns with Public 'Discover' Feed

18 Jun 2025•Technology

Meta's Plan to Integrate AI-Generated Profiles on Facebook and Instagram Sparks Controversy

28 Dec 2024•Technology
