Google Cloud Run Integrates NVIDIA L4 GPUs for Serverless AI Inference

3 Sources

[1]

Google Leverages NVIDIA's L4 GPUs To Let You Run AI Inference Apps On The Cloud

Google has leveraged NVIDIA's L4 GPUs to offer users the ability to run AI inference applications such as GenAI on the cloud.

Press Release: Developers love Cloud Run for its simplicity, fast autoscaling, scale-to-zero capabilities, and pay-per-use pricing. Those same benefits come into play for real-time inference apps serving open generative AI models. That's why today, we're adding support for NVIDIA L4 GPUs to Cloud Run, in preview. This opens the door to many new use cases for Cloud Run developers.

As a fully managed platform, Cloud Run lets you run your code directly on top of Google's scalable infrastructure, combining the flexibility of containers with the simplicity of serverless to help boost your productivity. With Cloud Run, you can run frontend and backend services, batch jobs, deploy websites and applications, and handle queue processing workloads, all without having to manage the underlying infrastructure. At the same time, many workloads that perform AI inference, especially applications that demand real-time processing, require GPU acceleration to deliver responsive user experiences.

With support for NVIDIA GPUs, you can perform on-demand online AI inference using the LLMs of your choice in seconds. With 24GB of vRAM, you can expect fast token rates for models with up to 9 billion parameters, including Llama 3.1 (8B), Mistral (7B), and Gemma 2 (9B). When your app is not in use, the service automatically scales down to zero so that you are not charged for it.

Today, we support attaching one NVIDIA L4 GPU per Cloud Run instance, and you do not need to reserve your GPUs in advance. To start, Cloud Run GPUs are available today in us-central1 (Iowa), with availability in europe-west4 (Netherlands) and asia-southeast1 (Singapore) expected before the end of the year.
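The 24GB figure and the 9-billion-parameter guidance above can be sanity-checked with simple arithmetic. The sketch below is illustrative only: it counts model weights alone, and the `headroom_gb` allowance for KV cache and runtime overhead is a hypothetical value, not a number from Google or NVIDIA. The function names are made up for this example.

```python
# Rough vRAM estimate for model weights only.
# Assumption: KV cache, activations, and runtime overhead are lumped into
# a hypothetical fixed headroom rather than modeled precisely.
L4_VRAM_GB = 24  # vRAM per L4 GPU, as stated in the announcement

# Bytes per parameter for common weight formats.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_gb(params_billion: float, dtype: str) -> float:
    """Approximate weight size in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[dtype]


def fits_on_l4(params_billion: float, dtype: str, headroom_gb: float = 4.0) -> bool:
    """True if the weights plus the assumed headroom fit in 24 GB."""
    return weight_gb(params_billion, dtype) + headroom_gb <= L4_VRAM_GB


if __name__ == "__main__":
    for name, size in [("Mistral 7B", 7), ("Llama 3.1 8B", 8), ("Gemma 2 9B", 9)]:
        print(name, weight_gb(size, "fp16"), "GB in fp16, fits:", fits_on_l4(size, "fp16"))
```

Under these assumptions, a 9B model in 16-bit precision needs about 18 GB for weights, which leaves limited room on a 24 GB card, while a 13B model would need quantization to fit.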

[2]

Google Cloud Run speeds up on-demand AI inference with Nvidia's L4 GPUs - SiliconANGLE

Google Cloud is giving developers an easier way to get their artificial intelligence applications up and running in the cloud, with the addition of graphics processing unit support on the Google Cloud Run serverless platform. The company said in a blog post today that it's adding support for Nvidia's L4 graphics processing units on Google Cloud Run in preview in a limited number of regions, ahead of a wider rollout in future.

First unveiled in 2019, Google Cloud Run is a fully managed, serverless computing platform that makes it easy for developers to launch applications, websites and online workflows. With Cloud Run, developers simply upload their code as a stateless container into a serverless environment, so there's no need to worry about infrastructure management. It differs from other cloud computing platforms because everything is fully managed. Though some developers appreciate the cloud because it provides the ability to fine-tune the way their computing environments are configured, not everyone wants to bother with this.

Cloud Run does all of the heavy lifting for developers, so they don't have to ponder over their compute and storage requirements or worry about configurations and provisioning. It also eliminates the risk of overprovisioning and paying for more computing resources than what developers actually use, thanks to its pay-per-use pricing model, and it naturally requires fewer people to get a new application or website up and running.

In a blog post, Google Cloud Serverless Product Manager Sagar Randive said his team realized that Cloud Run's benefits make it an ideal option for running real-time AI inference applications that serve generative AI models. So that's why the company is introducing support for Nvidia's L4 GPUs.

With support for Nvidia's GPUs, Cloud Run users can perform on-demand online AI inference using any large language model they want, in a matter of seconds. "With 24GB of vRAM, you can expect fast token rates for models with up to 9 billion parameters, including Llama 3.1(8B), Mistral (7B), Gemma 2 (9B)," Randive said. "When your app is not in use, the service automatically scales down to zero so that you are not charged for it."

The company believes that GPU support makes Cloud Run a more viable option for various AI workloads, including inference tasks with lightweight LLMs such as Gemma 2B, Gemma 7B or Llama-3 8B. In turn, this paves the way for developers to build and launch customized chatbots or AI summarization models that can scale to handle spikes in traffic. Other use cases include serving customized and fine-tuned generative AI models, such as a scalable and cost-effective image generator that's tailored for a company's brand. In addition, the Cloud Run GPUs also support non-AI tasks such as on-demand image recognition, video transcoding, streaming and 3D rendering, Google said.

Nvidia's L4 GPUs are available in preview on Google Cloud Run now in the us-central1 (Iowa) region, and will launch in europe-west4 (Netherlands) and asia-southeast1 (Singapore) by the end of the year. The service supports a single L4 GPU per instance, and there's no need to reserve the GPU in advance, Google said.

A handful of customers have already been lucky enough to pilot the new offering, including the cosmetics and beauty products giant L'Oréal S.A., which is using GPUs on Cloud Run to power a number of its real-time inference applications. "The low cold-start latency is impressive, allowing our models to serve predictions almost instantly, which is critical for time-sensitive customer experiences," said Thomas Menard, head of AI at L'Oréal. "Cloud Run GPUs maintain consistently minimal serving latency under varying loads, ensuring our generative AI applications are always responsive and dependable."

[3]

Google Cloud Run embraces Nvidia GPUs for serverless AI inference

There are a number of different costs associated with running AI; one of the most fundamental is providing the GPU power needed for inference. To date, organizations that need to provide AI inference have had to run long-running cloud instances or provision hardware on-premises.

Today, Google Cloud is previewing a new approach, and it's one that could reshape the landscape of AI application deployment. The Google Cloud Run serverless offering is now integrating Nvidia L4 GPUs, effectively enabling organizations to run serverless inference.

The promise of serverless is that a service only runs when needed and users only pay for what is used. That's in contrast to a typical cloud instance, which runs for a set amount of time as a persistent service and is always available. A serverless service, in this case a GPU for inference, only fires up and is used when needed. The serverless inference can be deployed as an Nvidia NIM, as well as with other frameworks such as vLLM, PyTorch and Ollama. The addition of Nvidia L4 GPUs is currently in preview.

"As customers increasingly adopt AI, they are seeking to run AI workloads like inference on platforms they are familiar with and start up on," Sagar Randive, Product Manager, Google Cloud Serverless, told VentureBeat. "Cloud Run users prefer the efficiency and flexibility of the platform and have been asking for Google to add GPU support."

Bringing AI into the serverless world

Cloud Run, Google's fully managed serverless platform, has been a popular platform with developers thanks to its ability to simplify container deployment and management. However, the escalating demands of AI workloads, particularly those requiring real-time processing, have highlighted the need for more robust computational resources. The integration of GPU support opens up a wide array of use cases for Cloud Run developers.

Serverless performance can scale to meet AI inference needs

A common concern with serverless is about performance. After all, if a service is not always running, there is often a performance hit just to get the service running from a so-called cold start. Google Cloud is aiming to allay any such performance fears, citing some impressive metrics for the new GPU-enabled Cloud Run instances. According to Google, cold start times range from 11 to 35 seconds for various models, including Gemma 2B, Gemma 2 9B, Llama 2 7B/13B, and Llama 3.1 8B, showcasing the platform's responsiveness.

Each Cloud Run instance can be equipped with one Nvidia L4 GPU, with up to 24GB of vRAM, providing a solid level of resources for many common AI inference tasks. Google Cloud is also aiming to be model agnostic in terms of what can run, though it is hedging its bets somewhat. "We do not restrict any LLMs, users can run any models they want," Randive said. "However for best performance, it is recommended that they run models under 13B parameters."

Will running serverless AI inference be cheaper?

A key promise of serverless is better utilization of hardware, which is supposed to also translate to lower costs. Whether it is actually cheaper for an organization to provision AI inference as serverless or as a long-running server is a somewhat nuanced question. "This depends on the application and the traffic pattern expected," Randive said. "We will be updating our pricing calculator to reflect the new GPU prices with Cloud Run at which point customers will be able to compare their total cost of operations on various platforms."

Google Cloud has announced the integration of NVIDIA L4 GPUs with Cloud Run, enabling serverless AI inference for developers. This move aims to enhance AI application performance and efficiency in the cloud.

Google Cloud Run's AI Inference Upgrade

Google Cloud has taken a significant step forward in the realm of AI infrastructure by integrating NVIDIA L4 GPUs into its Cloud Run service. This strategic move is set to revolutionize the way developers deploy and scale AI inference workloads in a serverless environment

1

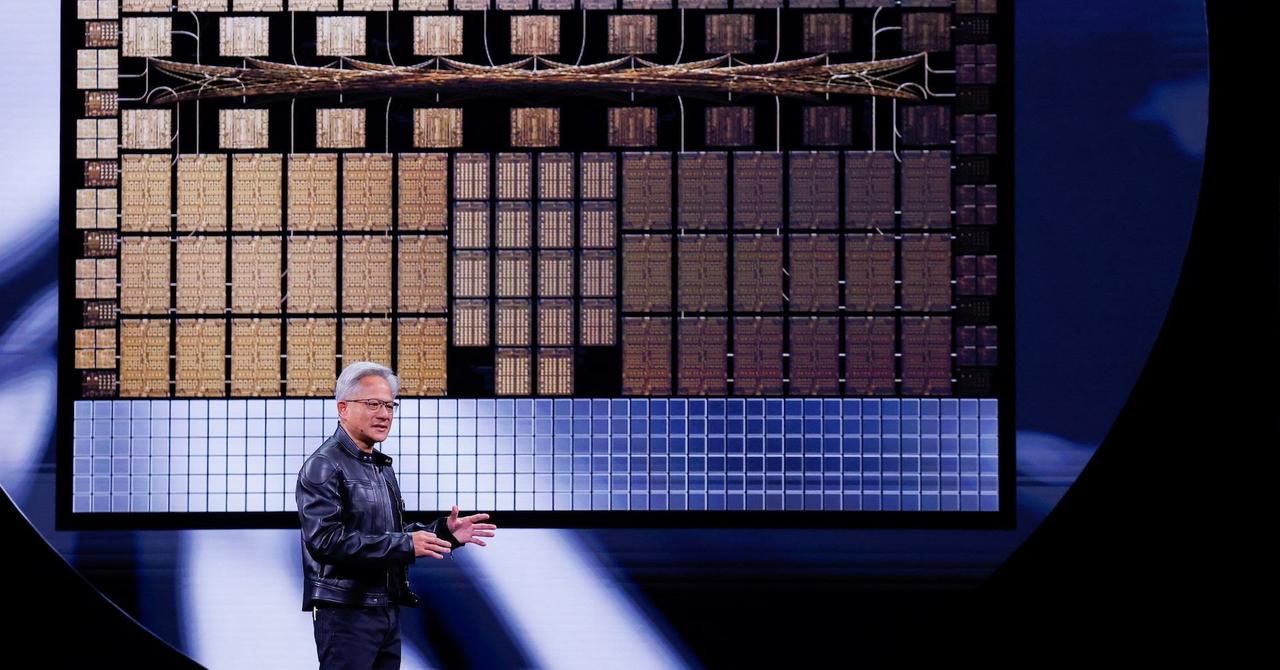

.The Power of NVIDIA L4 GPUs

The NVIDIA L4 GPU is specifically designed for AI inference and graphics workloads. It offers a balance of performance, efficiency, and cost-effectiveness, making it an ideal choice for cloud-based AI applications. By leveraging these GPUs, Google Cloud Run can now provide developers with the computational power needed to run complex AI models without the overhead of managing the underlying infrastructure [2].

Serverless AI Inference Benefits

The integration of GPUs into Cloud Run's serverless platform brings several advantages:

- Scalability: Developers can easily scale their AI inference workloads on-demand without worrying about provisioning or managing GPU resources.

- Cost-efficiency: The pay-per-use model of serverless computing, combined with the efficiency of L4 GPUs, can lead to significant cost savings for businesses.

- Simplified deployment: The serverless nature of Cloud Run eliminates the need for complex infrastructure management, allowing developers to focus on their AI applications [3].
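Whether pay-per-use actually saves money depends on how many hours the service is busy. A minimal sketch of that break-even arithmetic follows. All hourly rates here are hypothetical placeholders, not published Cloud Run or VM prices, and the function names are invented for illustration.

```python
# Break-even sketch: serverless (billed only while busy) versus an
# always-on GPU instance (billed for every hour of the month).
# Assumption: all rates are hypothetical, not real Google Cloud prices.
HOURS_PER_MONTH = 730  # average number of hours in a month


def serverless_monthly_cost(busy_hours: float, rate_per_busy_hour: float) -> float:
    """Cost when the service scales to zero and bills only busy hours."""
    return busy_hours * rate_per_busy_hour


def always_on_monthly_cost(rate_per_hour: float) -> float:
    """Cost of an instance that runs for the whole month."""
    return HOURS_PER_MONTH * rate_per_hour


def break_even_busy_hours(serverless_rate: float, always_on_rate: float) -> float:
    """Busy hours per month at which both options cost the same."""
    return always_on_monthly_cost(always_on_rate) / serverless_rate


if __name__ == "__main__":
    # Hypothetical rates: serverless is pricier per hour but idles for free.
    hours = break_even_busy_hours(serverless_rate=1.0, always_on_rate=0.5)
    print(f"Serverless is cheaper below {hours:.0f} busy hours per month")
```

With these placeholder rates, serverless wins for bursty traffic and loses once the GPU is busy for more than about half the month, which matches the point that the answer depends on the traffic pattern.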

Enhanced Performance for AI Applications

Google Cloud claims that the integration of L4 GPUs can deliver up to 3.5 times better performance for AI inference workloads compared to CPU-only deployments. This performance boost is crucial for applications that require real-time AI processing, such as natural language processing, computer vision, and recommendation systems [1].

Developer-Friendly Features

To support developers in leveraging this new capability, Google Cloud has introduced several features:

- GPU-aware autoscaling: Cloud Run can automatically scale the number of GPU-enabled containers based on demand.

- Simple GPU allocation: Each Cloud Run instance can attach one NVIDIA L4 GPU, and GPUs do not need to be reserved in advance.

- Seamless integration: Existing Cloud Run applications can easily be updated to use GPUs without significant code changes [2].
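The autoscaling behavior in the list above can be pictured with a simplified model. This is a sketch under stated assumptions, not Cloud Run's actual autoscaler: it scales purely on in-flight requests, and `concurrency_per_instance` and `max_instances` are hypothetical settings chosen for illustration.

```python
import math

# Simplified model of request-driven autoscaling with scale-to-zero.
# Assumption: this is NOT Cloud Run's real algorithm; it only illustrates
# how instance count could track demand.


def desired_instances(in_flight_requests: int,
                      concurrency_per_instance: int,
                      max_instances: int) -> int:
    """Instances needed for the current load, capped at max_instances."""
    if in_flight_requests <= 0:
        return 0  # scale to zero when idle
    needed = math.ceil(in_flight_requests / concurrency_per_instance)
    return min(needed, max_instances)


if __name__ == "__main__":
    for load in (0, 1, 9, 100):
        n = desired_instances(load, concurrency_per_instance=4, max_instances=10)
        print(f"{load} in-flight requests: {n} instance(s)")
```

Because the sources state that each instance carries a single L4 GPU, the instance count in this model is also the number of GPUs in use.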

Industry Impact and Future Prospects

This move by Google Cloud is expected to have a significant impact on the AI and cloud computing industries. By making GPU-powered AI inference more accessible and cost-effective, Google is lowering the barriers to entry for businesses looking to implement AI solutions. As the demand for AI-driven applications continues to grow, the ability to deploy these workloads in a serverless environment could become a key differentiator in the cloud market [3].

Summarized by Navi
